Inside the Mind of Alan Wake 2: A Series

Inside the Mind of Alan Wake 2 is a deep dive into the creative and technical processes that shaped the game’s audio experience. This blog series explores the meticulous decisions, challenges, and innovations behind Alan Wake 2’s soundscape. From crafting dynamic dialogue and immersive Echoes to the vital role of audio QA and the game’s unique profiling system, step inside the minds of the audio team who brought this world to life.

In the first installment, Senior Dialogue Designers Arthur Tisseront & Taneli Suoranta explored the intricacies of dialogue implementation in Alan Wake 2, breaking down the interplay between spoken and narrated dialogue. If you missed it, check it out here: Inside the Mind of Alan Wake 2: Distinguishing Dialogue.

In this second installment, Principal Audio Designer Tazio Schiesari takes us behind the scenes of designing the sound of Echo interactions in Wwise, sharing how granular synthesis helped shape an evolving, interactive soundscape.

Introduction: Designing Echoes in Wwise

Echoes are a gameplay mechanic introduced in Alan Wake 2 that are visions of an adjacent narrative involving Alex Casey, the protagonist of Wake's novels. They are often sources of inspiration for Wake to write his way out of the Dark Place, a nightmare dimension where he has been stuck for 13 years.

Breaking Down the Echoes

From a practical standpoint, Echoes can be separated into two main components:

- The Echo interaction, an entity placed in the levels and visually represented by a glimmering VFX that the player needs to interact with. The interaction is a puzzle mechanic where the player needs to find the right viewing angle to access the vision and further the story.

- The Echo vision itself, a live-action cinematic overlaid onto the game’s 3D environment.

In this article, I’ll be focusing on the Echo interaction. For simplicity, I’ll refer to Echo interactions simply as “Echoes” and to the overlaid cinematic as the “vision” when needed.

The Challenge: Incorporating Dialogue into Sound Design

When tasked with designing the sound for the Echoes, the initial direction from the game directors Kyle Rowley and Sam Lake was to create the sound of a voice trapped, unable to escape.

Incorporating dialogue into sound design in games can often be a double-edged sword. On the one hand, it offers a powerful storytelling tool. On the other, it introduces significant challenges, especially when considering localization. Translating spoken lines into multiple languages can multiply the workload, and this additional effort traditionally surfaces during the hectic final stages of production.

Building the Echoes’ Sound Identity

Initially, I experimented with unrecognizable, manipulated voice loops as a placeholder for the Echoes. However, this approach felt disconnected, because the contrast with the dialogue lines that played in the live-action footage during the visions was too jarring. I decided to set aside concerns of scope and explore the idea I truly wanted to execute.

To better match the Echoes’ sound design with the vision’s dialogue, I decided to use the first few words of the actual live-action footage. The idea was to work backwards from the vision and render these clean lines more fragmented and increasingly unintelligible when the player was far from solving the Echo mechanic. Conversely, as the player got closer to the solution, the words would become clearer and more coherent.

This blurred, looping voice served as a luring cue that helped the player find the location of the Echoes. It also blended naturally into the dreamlike and uncertain soundscape of the Dark Place, adding an ominous texture while maintaining thematic cohesion with the game’s audio direction.

Realizing the Design with Granular Synthesis

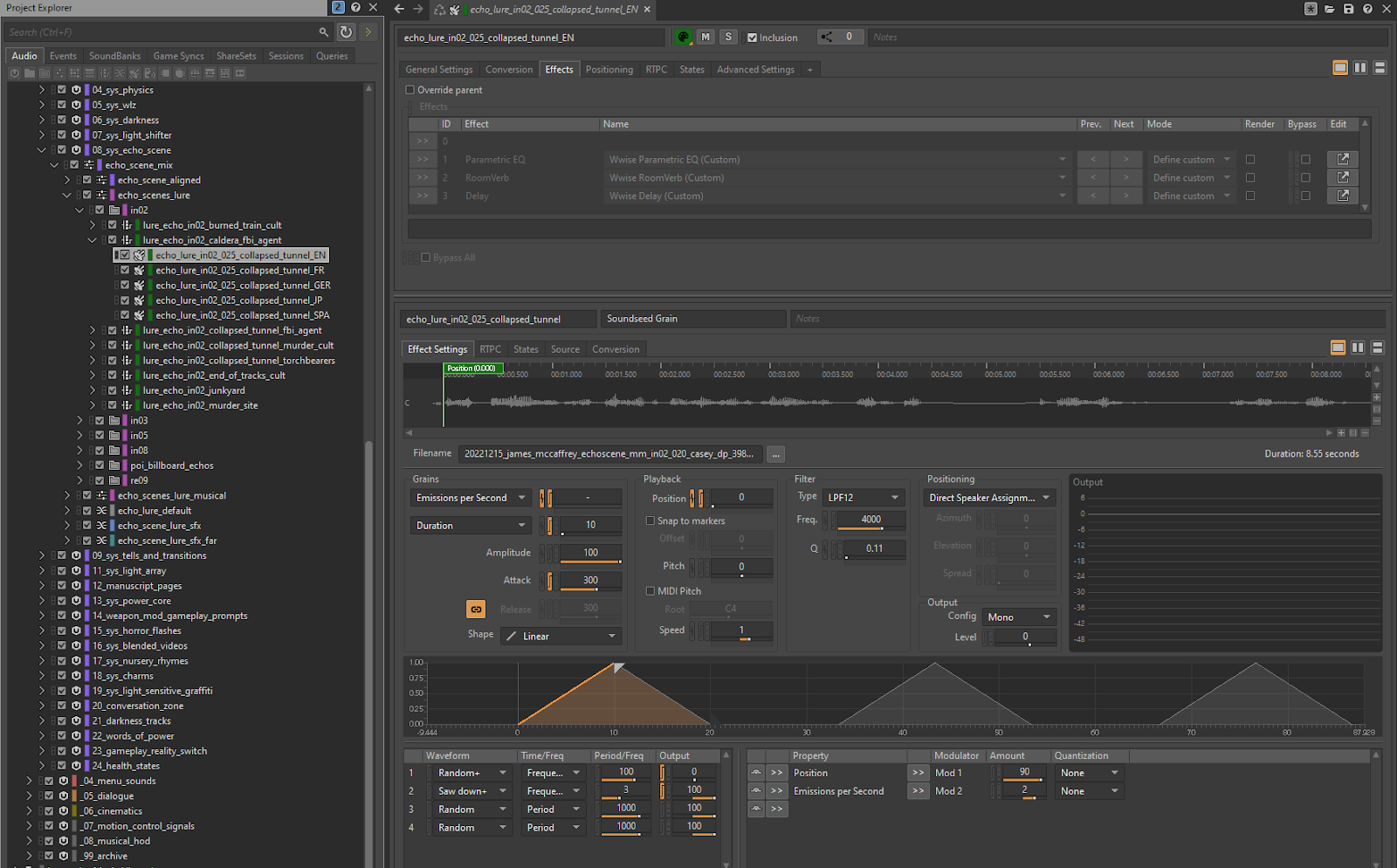

To realize this design inside the game, I reached for Wwise’s SoundSeed Grain.

With a solid understanding of granular synthesis, translating the concept into practice was quite straightforward.

After importing the dialogue audio file, the first goal was to match the original line's quality as closely as possible through granular playback. This would serve as the transition point when the player had nearly solved the Echo, blurring the difference between the two playback methods: granular for the Echo interaction and linear for the vision.

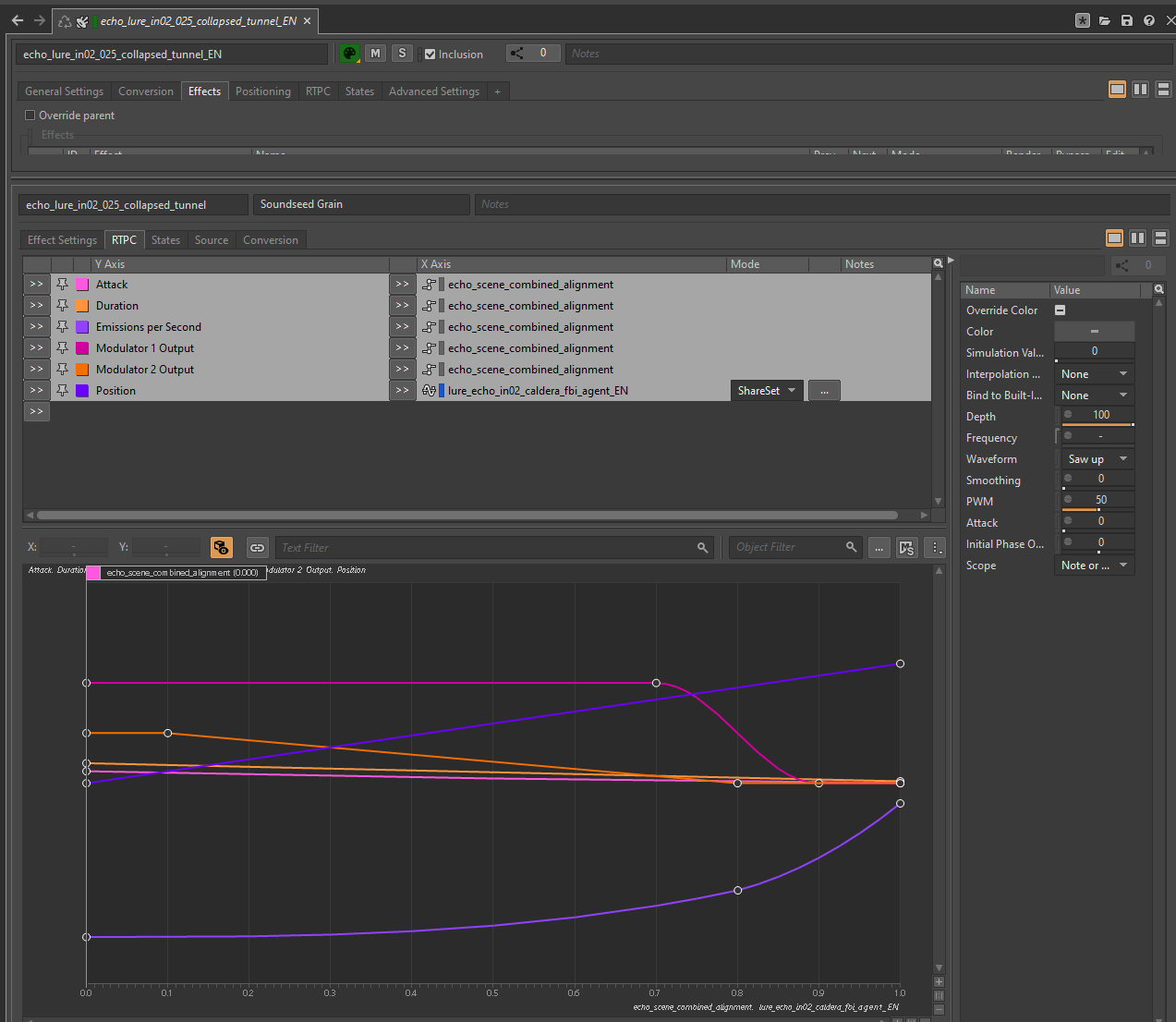

This was achieved by scrubbing the position with a ramp modulator and by finding the correct modulation speed of the ramp to match the original line delivery as well as other grain settings that best suited spoken words.
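To illustrate why the ramp speed matters, here is a minimal Python sketch. It is not Wwise code and does not reflect the project's actual settings; the function names (`ramp_position`, `grain_starts`) and the idea of a ramp sweeping a normalized position from 0 to 1 are assumptions made for illustration. When the ramp time equals the length of the line, each grain reads the file from where linear playback would be at that moment, so the delivery keeps its original pace.

```python
def ramp_position(t, ramp_time):
    """Normalized position (0..1) of a ramp that lasts ramp_time seconds."""
    return min(max(t / ramp_time, 0.0), 1.0)


def grain_starts(file_duration, ramp_time, grain_interval):
    """Return (emit_time, source_offset) pairs, both in seconds.

    A grain is emitted every grain_interval seconds until the ramp ends.
    Each grain reads the source file from the point the ramp indicates.
    """
    starts = []
    count = int(ramp_time / grain_interval)
    for i in range(count):
        t = i * grain_interval
        offset = ramp_position(t, ramp_time) * file_duration
        starts.append((t, offset))
    return starts


# Hypothetical 2-second line scrubbed by a 2-second ramp:
# every grain starts at the same offset as its emit time.
matched = grain_starts(file_duration=2.0, ramp_time=2.0, grain_interval=0.5)

# The same line scrubbed by a 4-second ramp plays at half speed.
stretched = grain_starts(file_duration=2.0, ramp_time=4.0, grain_interval=0.5)
```

In this sketch, a ramp that is too slow stretches the performance and one that is too fast rushes it, which is why each line needs its own modulation speed.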

From there, I tried to deconstruct the phrase using longer and more spread-out grains with extended attack times, changing the playback direction to nonlinear by randomizing the playback position so that random words or syllables were spoken out of order. This also gave the sound a more scattered rhythm and incoherence.
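The deconstruction step can be sketched in the same spirit. The Python below is an illustration only: the `scatter` parameter, the function names, and every numeric value are assumptions, not SoundSeed Grain properties or the settings used in the game. It blends the linear ramp position with a random one, and lengthens the grains and their attack as the scatter grows.

```python
import random


def scattered_position(ramp_pos, scatter, rng):
    """Blend a linear ramp position with a random one.

    ramp_pos: normalized position from the ramp (0..1).
    scatter:  0.0 keeps the line in order, 1.0 picks positions at random.
    rng:      a random.Random instance, passed in so results are repeatable.
    """
    random_pos = rng.random()
    return (1.0 - scatter) * ramp_pos + scatter * random_pos


def grain_envelope(scatter, min_len=0.08, max_len=0.4,
                   min_attack=0.005, max_attack=0.1):
    """Return (grain_length, attack_time) in seconds.

    Grains get longer and fade in more slowly as scatter increases.
    All default values are illustrative guesses.
    """
    length = min_len + scatter * (max_len - min_len)
    attack = min_attack + scatter * (max_attack - min_attack)
    return length, attack


rng = random.Random(0)
in_order = scattered_position(0.3, 0.0, rng)   # follows the ramp
shuffled = scattered_position(0.3, 1.0, rng)   # anywhere in the file
```

With `scatter` at 0 the words arrive in order, and as it approaches 1 syllables are pulled from anywhere in the file, which is what produces the out-of-order speech described above.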

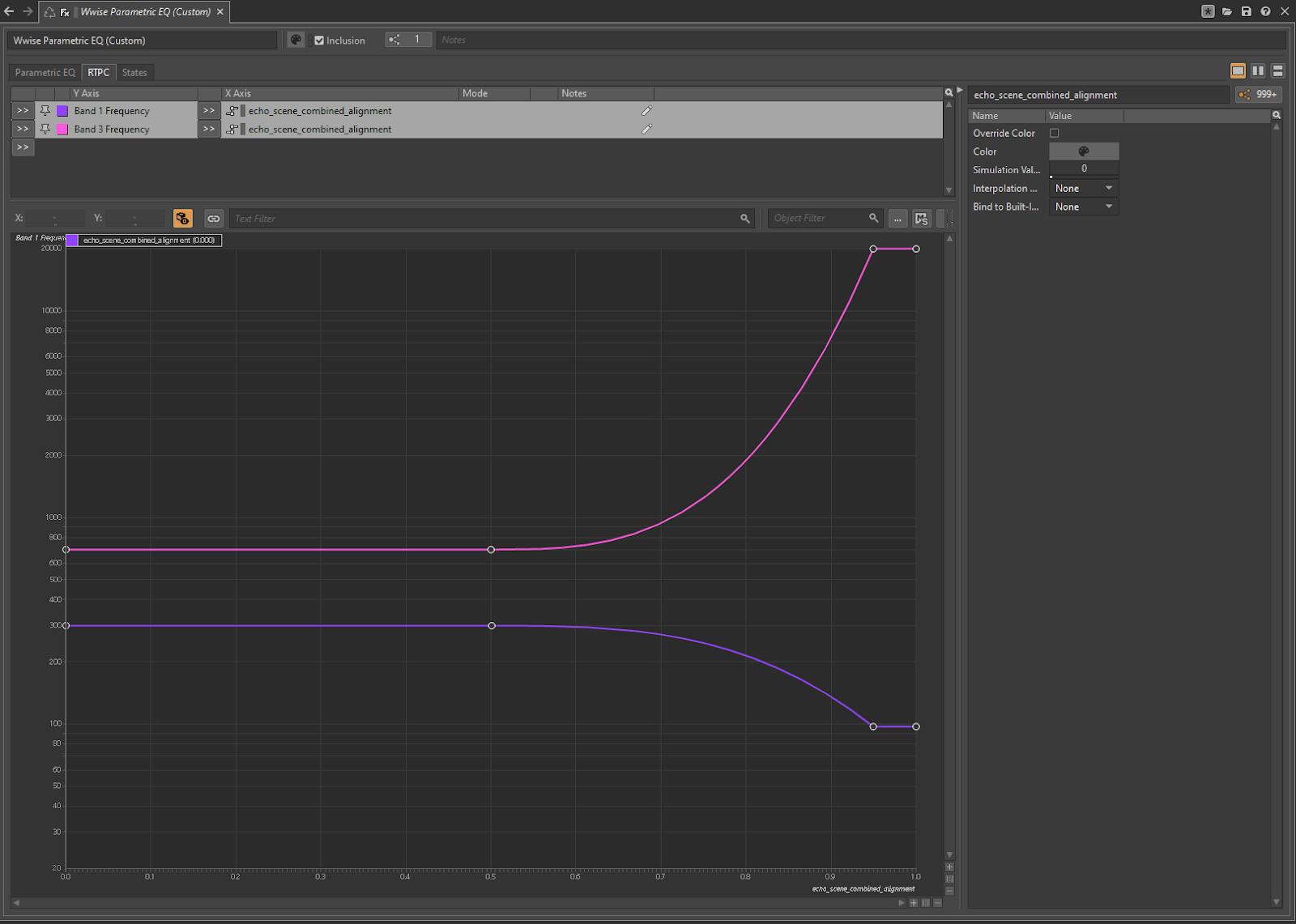

Finally, three real-time effects were applied on the top parent. Delay and Reverb pushed the dialogue further back in the mix and reduced clarity over distance, while a 3-Band EQ served as a combined high-pass and low-pass filter, narrowing the voice’s frequency range to a band around 500 Hz and creating the sensation of a voice trapped in a bubble. All three effects would gradually disappear the closer the player was to solving the Echo.
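As a rough illustration of how such effects could fade out with progress, the Python sketch below maps a 0 to 1 "solve progress" value to wet levels and filter cutoffs. Apart from the band sitting near 500 Hz when the player is far from the solution, every number, curve shape, and name here is an assumption; in Wwise this kind of mapping would typically be drawn as RTPC curves rather than written as code.

```python
import math


def lerp_log(a, b, x):
    """Interpolate between two frequencies on a logarithmic scale."""
    return math.exp(math.log(a) + x * (math.log(b) - math.log(a)))


def effect_settings(progress):
    """Map solve progress (0 = far from solved, 1 = solved) to effect values.

    The narrow band edges (400 Hz and 630 Hz), the open band edges
    (20 Hz and 20 kHz), and the linear wet curves are illustrative guesses.
    """
    p = min(max(progress, 0.0), 1.0)
    return {
        "delay_wet": 1.0 - p,
        "reverb_wet": 1.0 - p,
        "highpass_hz": lerp_log(400.0, 20.0, p),
        "lowpass_hz": lerp_log(630.0, 20000.0, p),
    }


far = effect_settings(0.0)     # trapped in a narrow band, fully wet
solved = effect_settings(1.0)  # full range, dry
```

Interpolating the cutoffs on a logarithmic scale is a design choice in this sketch: it makes the band open up evenly in terms of perceived pitch rather than in raw hertz.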

The result was an interactive and evolving soundscape that felt both atmospheric and purposeful.

Overcoming Localization Challenges

In the end, despite the expected increase in scope, localization amounted to just over one extra day of work. This was thanks to some good planning and a cheeky MAX patch I built to figure out the right modulator settings for scrubbing the position of each language-specific performance for each Echo (170 total unique modulators!).

In the following video, the graph at the bottom right of the screen represents the RTPC that combines the camera orientation and the player's distance from the Echo. Its value changes based on how close the Echo interaction is to being solved. Listen closely to the iconic voice of the late James McCaffrey changing dynamically along with the RTPC.
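How the two inputs were actually combined is not described here, so the following Python sketch is only one plausible approach. It assumes a 0 to 100 value, a linear distance falloff between hypothetical `near` and `far` distances, and a dot product that measures how directly the camera faces the Echo; all names and numbers are invented for illustration.

```python
import math


def echo_rtpc(camera_pos, camera_forward, echo_pos, near=2.0, far=12.0):
    """Return a value from 0 to 100 from camera orientation and distance.

    camera_forward must be a non-zero 3D vector.
    proximity is 1 within `near` units of the Echo and 0 beyond `far`.
    alignment is 1 when the camera faces the Echo and 0 at 90 degrees or more.
    """
    to_echo = tuple(e - c for e, c in zip(echo_pos, camera_pos))
    distance = math.sqrt(sum(c * c for c in to_echo))
    proximity = min(max((far - distance) / (far - near), 0.0), 1.0)

    if distance == 0.0:
        alignment = 1.0
    else:
        fwd_len = math.sqrt(sum(c * c for c in camera_forward))
        dot = sum((f / fwd_len) * (d / distance)
                  for f, d in zip(camera_forward, to_echo))
        alignment = max(0.0, dot)

    return 100.0 * proximity * alignment


# Halfway through the falloff range, looking straight at the Echo.
halfway = echo_rtpc((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 7.0))
# Same spot, looking the other way.
facing_away = echo_rtpc((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 0.0, 7.0))
```

Multiplying the two factors means the value only peaks when the player is both close and looking the right way; a single value like this could then drive the grain and effect parameters together.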

Credit also goes to Josh Adam Bell and Kit Challis (Senior Technical Audio Designers) for the critical help with the technical audio support needed to achieve this.

A final and very Remedy detail of the Echo visions that some players might have noticed is that the silhouettes of the characters are audio-driven, meaning that the VFX reacts to the audio data coming from the dialogue lines. But that’s for another time.
