Introduction

This is the first of a 3-part tech-blog series by Jater (Ruohao) Xu, sharing the work done for Reverse Collapse: Code Name Bakery. In this article, he dives into using Wwise to drive in-game cinematics, featuring the Wwise Time Stretch plug-in. Stay tuned for Parts 2 and 3 in the coming weeks!

Jater (Ruohao) Xu recently wrote another article on the Audiokinetic blog, titled "Reverse Collapse: Code Name Bakery | The Important Role of Wwise in Remote Collaboration", where he and Paul Ruskay shared their process for managing character & custom animation sound design, creating interactive music systems for the game, and more.

Using Wwise to Drive In-Game Cinematics, Featuring Wwise Time Stretch plug-in

Tech Blog Series | Part 1

Reverse Collapse can run into audio/video sync problems, for example when the player rapidly Alt-Tabs between program windows or when the frame rate stutters because of performance limits. To address these, we implemented audio/video sync code that uses the Wwise clock instead of relying solely on the in-game clock. We also went a step further and supported the slow-motion and fast-motion effects that highlight cinematic moments in the game, using the versatile Wwise Time Stretch plug-in. Both approaches have proven effective at mitigating these challenges. Note that while the term "cinematics" covers tools in many game engines, such as Unity Timeline and Unreal Sequencer, our examples use Unity, since Reverse Collapse is built with it.

The Basics

Starting with the fundamentals: the philosophy behind this approach is refreshingly simple, and it applies across game engines.

If the current audio playback time is behind the current video playback time, pause the video.

If the current audio playback time is ahead of the video playback time, advance the video to the audio playback time.

If both time values are equal, do nothing, since audio and video are already in sync.

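Since the game uses Unity, the following C# pseudo-code expresses these rules:

```csharp
// Called every frame while the cinematic plays.
// audioTime and videoTime are playback positions in milliseconds.
private void AudioVideoSyncLogic()
{
    if (audioTime < videoTime)
    {
        // Audio is behind: hold the video until the audio catches up.
        video.Pause();
    }
    else if (audioTime > videoTime)
    {
        // Audio is ahead: advance the video to the audio position.
        video.Play();
    }
    // Otherwise the two clocks already agree, so there is nothing to do.
}
```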

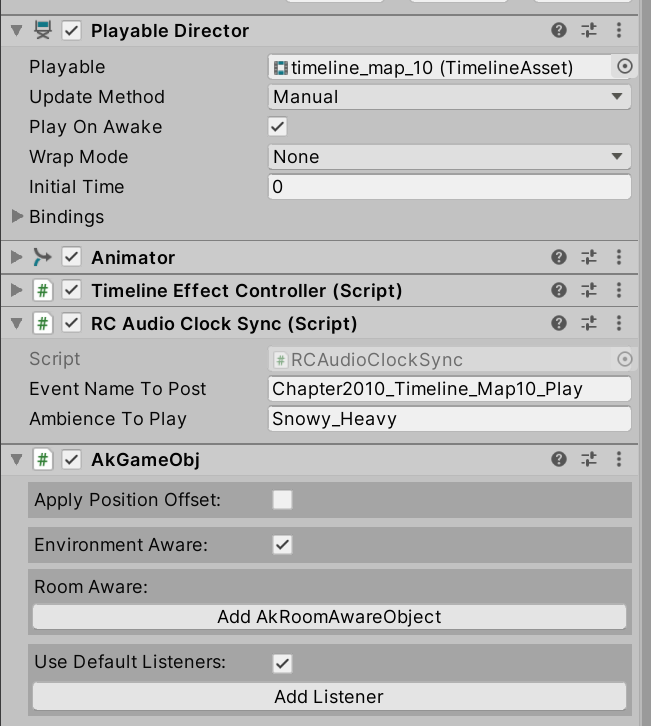

We can call this function from Unity's Update() so that it runs every frame while the cinematic plays. For this to take effect, remember to change the Timeline's update method (the Update Method setting on its Playable Director) from the default "Game Time" to "Manual".

The image above shows the setup of the RCAudioClockSync.cs script. The script also accepts the name of an event or ambience to play as a string input.
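The three rules above don't depend on any engine API, so they can also be written as a pure function that is easy to unit test outside Unity. The following is a minimal sketch, not the project's code: SyncAction and AudioVideoSyncRule are hypothetical names, and both times are assumed to be in milliseconds.

```csharp
// Hypothetical result of one sync step; not part of Unity or Wwise.
public enum SyncAction
{
    None,      // audio and video already agree
    Pause,     // audio is behind: hold the video
    AdvanceTo  // audio is ahead: move the video up to the audio position
}

public static class AudioVideoSyncRule
{
    // Both arguments are playback positions in milliseconds.
    public static SyncAction Decide(int audioTimeMs, int videoTimeMs)
    {
        if (audioTimeMs < videoTimeMs)
        {
            return SyncAction.Pause;
        }
        if (audioTimeMs > videoTimeMs)
        {
            return SyncAction.AdvanceTo;
        }
        return SyncAction.None;
    }
}
```

In the Unity snippet above, Pause corresponds to video.Pause() and AdvanceTo to video.Play().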

Problem-Solving

When integrating the code above into the script, several issues will arise that necessitate revisions and enhancements to ensure the cinematic remains playable throughout various interactive scenarios of the game. The most evident issues that need to be addressed include:

- Retrieving the current playback audio time: Ensuring the script can accurately track the audio's progress.

- Synchronizing video and audio when the cinematic is stopped: Guaranteeing the video stops correctly with the audio when the cinematics conclude.

- Implementing fail-safe methods: Providing safeguards in case the cinematic is not properly set up.

- QA functions for audio syncing: Developing additional functions that the testing team may find useful for verifying audio synchronization against the non-synced default setup.

By addressing these issues, we can enhance the script's robustness and reliability in handling cinematic sequences within the game.

Retrieving the current playback audio time

Wwise provides a very useful function for this: GetSourcePlayPosition() (documented in the Audiokinetic SDK reference under GetSourcePlayPosition).

GetSourcePlayPosition() returns the current playback position, in milliseconds, of the source associated with a given playing ID. Conveniently, the Wwise Unity Integration exposes a C# version of the function that is easier to call than the original C++ API:

```csharp
public static AKRESULT GetSourcePlayPosition(uint in_PlayingID, out int out_puPosition, bool in_bExtrapolate)
```

To use this method, we call it with a playing ID and store the returned AKRESULT. If the result is AK_Success, the current position has been written to the out parameter out_puPosition, and we return it; for any other result, we return -1 instead. The code below demonstrates this; note that retrieving the playingID variable is omitted from the example. Also note that Wwise only reports a source position for events posted with the AK_EnableGetSourcePlayPosition flag, so that flag must be set when the cinematic's event is posted.

```csharp
public int GetSourcePlaybackPositionInMilliseconds(uint playingID, bool extrapolated)
{
    int returnPos = 0;
    AKRESULT returnResult = AkSoundEngine.GetSourcePlayPosition(playingID, out returnPos, extrapolated);

    if (returnResult == AKRESULT.AK_Success)
    {
        return returnPos;
    }
    else
    {
        return -1;
    }
}
```

By calling GetSourcePlaybackPositionInMilliseconds() and assigning the return value to the audioTime variable, we can retrieve the current audio playback time in real time. Remember to handle the case where the function returns -1 by skipping the audio-video sync logic for that frame.
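If the video clock is kept in seconds, as Unity's PlayableDirector.time is, the -1 check and the millisecond-to-second conversion naturally live together. A minimal sketch, assuming the -1 failure convention from the function above; AudioClockReading and TryGetAudioSeconds are hypothetical names.

```csharp
public static class AudioClockReading
{
    // Converts a Wwise source position in milliseconds to seconds.
    // Returns false for the -1 failure value (or any negative value), which tells
    // the caller to skip the audio-video sync logic for this frame.
    public static bool TryGetAudioSeconds(int positionMs, out double seconds)
    {
        if (positionMs < 0)
        {
            seconds = 0.0;
            return false;
        }

        seconds = positionMs / 1000.0;
        return true;
    }
}
```

The Try-pattern keeps the "skip this frame" decision at the call site instead of letting -1 leak into time comparisons.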

Synchronizing video and audio when the cinematic is stopped

Recall the AudioVideoSyncLogic() function from earlier. Once the audio ends, the sync logic stops adjusting the video, so the video is left in whatever state it was last in. Ideally, the audio and video end simultaneously and both stop. Otherwise, if the video happens to be paused when the audio ends, it stays paused, potentially freezing the game. Conversely, if the audio file has a few seconds of silence at the end, the video keeps playing, potentially causing visual artifacts until the audio stops. To avoid introducing game-breaking bugs, our implementation needs to handle both cases.

Sometimes this issue can be resolved by destroying the cinematic game object itself, but that depends on many factors outside the audio department's control. A simpler, universal solution is to stop the video explicitly when the audio reaches the end. To do so, add the following pseudo-code to the bottom of the AudioVideoSyncLogic() function (retrieving the videoDuration variable is omitted from the example):

```csharp
if (audioTime > videoDuration)
{
    video.Stop();
}
```
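Checking the end condition first makes the ordering explicit: once the audio has run past the video's duration, stopping takes priority over any pause or play decision in the same frame. A minimal sketch of that ordering as a pure function; EndAwareSyncAction and EndAwareSyncRule are hypothetical names, and all times are assumed to be in milliseconds.

```csharp
public enum EndAwareSyncAction { None, Pause, Play, Stop }

public static class EndAwareSyncRule
{
    // All arguments are playback positions or durations in milliseconds.
    public static EndAwareSyncAction Decide(int audioTimeMs, int videoTimeMs, int videoDurationMs)
    {
        // The audio has run past the end of the video: stop explicitly so the
        // video is not left in whatever state it was last in.
        if (audioTimeMs > videoDurationMs)
        {
            return EndAwareSyncAction.Stop;
        }
        if (audioTimeMs < videoTimeMs)
        {
            return EndAwareSyncAction.Pause;
        }
        if (audioTimeMs > videoTimeMs)
        {
            return EndAwareSyncAction.Play;
        }
        return EndAwareSyncAction.None;
    }
}
```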

Implementing fail-safe methods

During development, we often encounter cinematics with placeholder audio or no audio at all. On the gameplay side, there could be rare cases where the methods above fail to run, freezing the video and leaving the gameplay stuck. Therefore, we can't rely solely on the previously discussed methods for stability. A fail-safe method is needed for when they fail, whether at editor time during cinematic development or at runtime while the player is playing the game. This differs from simply falling back to Unity's default update method, especially at runtime: players should not have control over which update method is used, and switching to the default update method midway through a cinematic could cause more knock-on effects than anticipated.

The function below shows a manual update method for the cinematics, which serves as the fallback (retrieving the videoTime variable is omitted from the example):

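```csharp
private void AudioVideoSyncFallbackLogic()
{
    if (videoTime < videoDuration)
    {
        // No usable audio clock: advance the video by the frame's delta time instead.
        // Time.deltaTime is in seconds; videoTime is in milliseconds, like audioTime.
        videoTime += Time.deltaTime * 1000f;
        video.Play();
    }
    else
    {
        video.Stop();
    }
}
```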
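Stripped of engine calls, the fallback is a clock that advances by the frame time until it reaches the video's duration, then stops. A minimal sketch, assuming times in milliseconds; FallbackVideoClock is a hypothetical name, and clamping the time to the duration is a choice made here so the video is never evaluated past its end, not something the function above does.

```csharp
using System;

public sealed class FallbackVideoClock
{
    public double VideoTimeMs { get; private set; }
    public double VideoDurationMs { get; }
    public bool Stopped { get; private set; }

    public FallbackVideoClock(double videoDurationMs)
    {
        VideoDurationMs = videoDurationMs;
    }

    // Advances by one frame; in Unity, deltaTimeSeconds would be Time.deltaTime.
    public void Step(double deltaTimeSeconds)
    {
        if (VideoTimeMs < VideoDurationMs)
        {
            // Still playing: advance by the frame time, clamped to the duration.
            VideoTimeMs = Math.Min(VideoTimeMs + deltaTimeSeconds * 1000.0, VideoDurationMs);
        }
        else
        {
            // Reached the end: stop instead of leaving the video frozen.
            Stopped = true;
        }
    }
}
```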

AudioVideoSyncLogic() runs only when both a valid playing ID and a valid audio time have been acquired; AudioVideoSyncFallbackLogic() handles all other situations.

To sum up, the update (or tick) method should contain the following code, shown here as pseudo-code:

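```csharp
private void LateUpdate()
{
    // Query the audio position only when the playing ID is valid (-1 marks an invalid ID here).
    // Assign to the audioTime field that AudioVideoSyncLogic() reads, not to a new local variable.
    audioTime = (playingID != -1)
        ? GetSourcePlaybackPositionInMilliseconds((uint)playingID, true)
        : -1;

    if (playingID != -1 && audioTime != -1)
    {
        AudioVideoSyncLogic();
    }
    else
    {
        AudioVideoSyncFallbackLogic();
    }
}
```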

Note that we use LateUpdate() here to ensure that all animations and other elements in the cinematic (e.g., Unity Timeline) are fully updated before synchronizing the audio and video. This approach helps achieve better results if anything isn’t updated properly on rare occasions. While the normal Update() function could be used, LateUpdate() is recommended for timelines with a significant amount of customized scripts and visual features. The implementation can vary from project to project, and you might benefit from adding custom input parameters to the functions to reference a Timeline playable or other assets.

QA functions for audio syncing

We added a toggle for our QA team to facilitate A/B comparison videos for any cinematic-related bugs. Before recording, the toggle bypasses both the audio-video sync and its fallback method, reverting to the default Game Time update method. This way, we can easily determine whether a bug is caused by the newly added audio-video sync feature or by the assets or scripts within the Timeline.
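Together with the LateUpdate() dispatch shown earlier, the QA toggle gives three possible update paths per frame. A minimal sketch of that selection as a pure function; CinematicUpdatePath, CinematicUpdateSelector, and the bypassSync flag are hypothetical names, and -1 is assumed to mark an invalid playing ID or audio time, as in the code above.

```csharp
public enum CinematicUpdatePath
{
    GameTime,  // QA bypass: Timeline uses Unity's default Game Time update
    AudioSync, // AudioVideoSyncLogic(): driven by the Wwise source position
    Fallback   // AudioVideoSyncFallbackLogic(): driven by the frame delta time
}

public static class CinematicUpdateSelector
{
    public static CinematicUpdatePath Select(bool bypassSync, int playingId, int audioTimeMs)
    {
        // The QA toggle wins over everything else, so A/B recordings use the default path.
        if (bypassSync)
        {
            return CinematicUpdatePath.GameTime;
        }
        if (playingId != -1 && audioTimeMs != -1)
        {
            return CinematicUpdatePath.AudioSync;
        }
        return CinematicUpdatePath.Fallback;
    }
}
```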

Slow Motion and Fast Motion using Wwise Time Stretch plug-in

Following all the steps outlined above should yield an audio-video sync system that works well for many games. However, each project may have special cases that call for additional custom features. In Reverse Collapse, for example, the sync system also needs to support slow-motion and fast-motion effects that emphasize exciting moments in the cinematics.

To achieve this alongside the working audio-video sync system, the new features need to be integrated into the existing infrastructure. This is where the Wwise Time Stretch plug-in (see "Time Stretch" in the Audiokinetic documentation) comes into play. The plug-in can change the playback speed of voices in Wwise without altering their pitch, which makes it ideal for our use case.

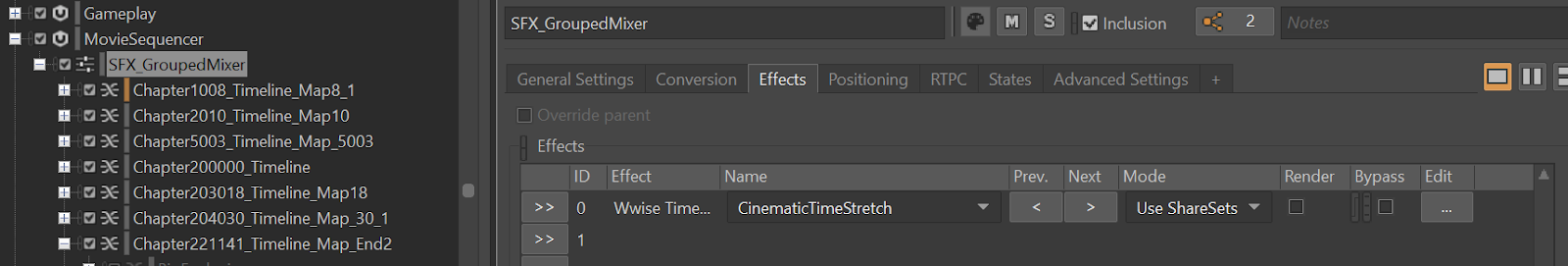

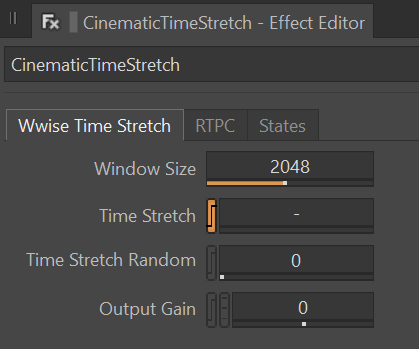

To set up Time Stretch, open the Effects tab of the relevant Actor-Mixer, container, or sound in the Project Explorer hierarchy. In this scenario, we configure it on the cinematic SFX Actor-Mixer. This Actor-Mixer holds the playback track for all cinematic SFX, so any changes made in Time Stretch apply to every cinematic SFX beneath it. This setup governs every cinematic timeline in the game, only one of which plays at a time. (This is the track used to drive Unity Timeline.)

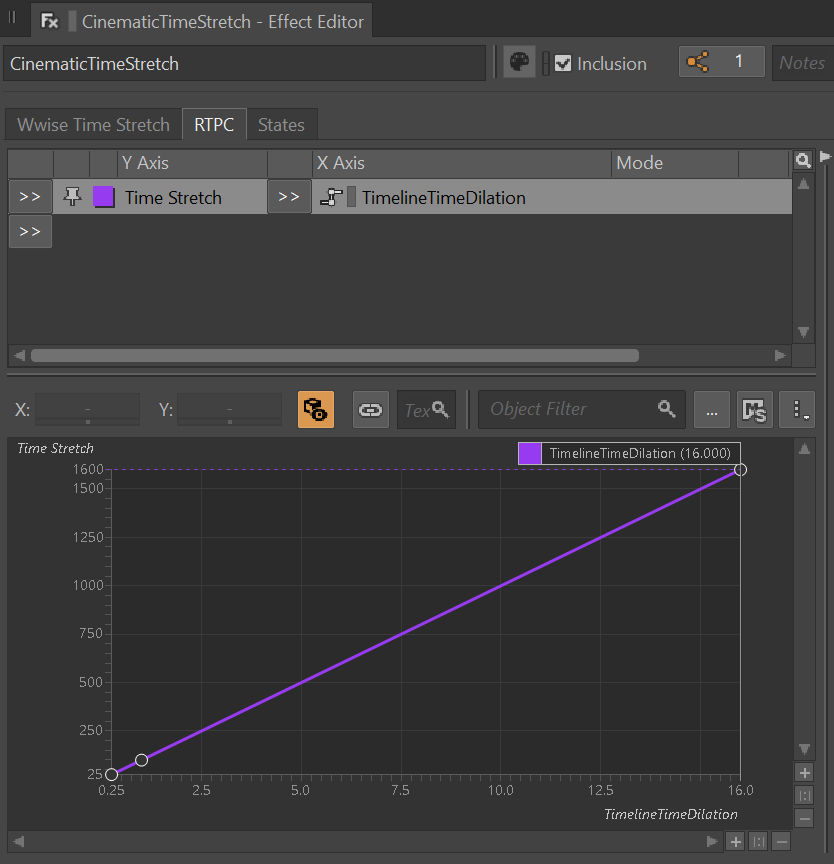

We leave most of the plug-in's properties at their default settings; the one we want to change is the Time Stretch property, which we drive with an RTPC set from code.

According to the official Wwise documentation, the Time Stretch property ranges from 25 to 1600, shown on the Y axis of the RTPC curve. This value is a percentage of the original sound's duration: 25% means four times faster playback, and 1600% means 16 times slower playback. To simplify calculations, we created an RTPC (Real-Time Parameter Control) ranging from 0.25 to 16 on the X axis. This value is the inverse of the actual playback-speed multiplier, so dividing 1 by it gives the usable multiplier. For instance, 1 divided by 1/4 equals 4 (four times faster playback), while 1 divided by 16 equals 0.0625 (16 times slower playback).

The only drawback of this setup is that the multiplier is limited to the 0.25 to 16 range: once we reach the upper or lower bound, we can't go any faster or slower. However, for our use case, and likely for many other games, this range is more than enough to cover every slow-motion and fast-motion situation. In Reverse Collapse, we only use multipliers from 0.25 to 4, based on requests from the game design and animation teams.
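The relationship between the RTPC value, the Time Stretch percentage, and the resulting playback speed fits in a few helper functions. This is a minimal sketch of the arithmetic described above, assuming a linear RTPC curve from (0.25, 25) to (16, 1600); TimeStretchMapping and its members are hypothetical names.

```csharp
public static class TimeStretchMapping
{
    public const float MinRtpc = 0.25f; // 25% of the original duration: 4x faster
    public const float MaxRtpc = 16f;   // 1600% of the original duration: 16x slower

    // Time Stretch property (percentage of the original duration) for an RTPC value.
    public static float RtpcToStretchPercent(float rtpc) => rtpc * 100f;

    // Playback-speed multiplier: the inverse of the RTPC value.
    public static float RtpcToSpeed(float rtpc) => 1f / rtpc;

    // Values outside the curve's X range cannot go any faster or slower.
    public static bool IsInRange(float rtpc) => rtpc >= MinRtpc && rtpc <= MaxRtpc;
}
```

A linear curve through those two points has a slope of exactly 100, which is why the percentage is simply the RTPC value times 100.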

In this example, we create a small wrapper function that reads the RTPC's global value through the out parameter of AkSoundEngine.GetRTPCValue(), so we can apply it on demand wherever we need this functionality.

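```csharp
public float GetGlobalRTPC(string rtpcName)
{
    // 1 = RTPCValue_Global: query the RTPC's global value,
    // not a game object's or a playing ID's value.
    int rtpcType = 1;
    float acquiredRtpcValue;

    AKRESULT result = AkSoundEngine.GetRTPCValue(rtpcName, null, 0, out acquiredRtpcValue, ref rtpcType);

    // Accept only successful queries within the 0.25 to 16 range; otherwise use the default of 1.0f.
    if (result == AKRESULT.AK_Success && acquiredRtpcValue >= 0.25f && acquiredRtpcValue <= 16.0f)
    {
        return acquiredRtpcValue;
    }
    else
    {
        return 1.0f;
    }
}
```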

Besides reading the RTPC's global value, the function above validates it: if an incorrect (out-of-range) value is detected, the value is ignored and the function returns 1.0f, the default.

Finally, we add this function to the code where the audio-video sync logic runs. Insert the following line between the logic that plays the cinematic and the logic that stops it after the video ends, assigning the calculated value to Unity's Time.timeScale:

```csharp
Time.timeScale = 1.0f / GetGlobalRTPC("TimelineTimeDilation");
```
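The validation in GetGlobalRTPC() and the division in the line above can be checked in isolation. A minimal engine-free sketch; TimelineTimeScale and FromRtpc are hypothetical names. NaN needs no special case, because both range comparisons are false for NaN, so it falls back to 1 just as it does in GetGlobalRTPC().

```csharp
public static class TimelineTimeScale
{
    // Valid range of the time-dilation RTPC, matching the Time Stretch setup.
    private const float MinRtpc = 0.25f;
    private const float MaxRtpc = 16f;

    // Returns the value to assign to Unity's Time.timeScale for an RTPC reading.
    // Out-of-range readings (including NaN and 0) fall back to 1, i.e. normal speed,
    // which also rules out a division by zero.
    public static float FromRtpc(float rtpcValue)
    {
        float validated = (rtpcValue >= MinRtpc && rtpcValue <= MaxRtpc) ? rtpcValue : 1f;
        return 1f / validated;
    }
}
```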

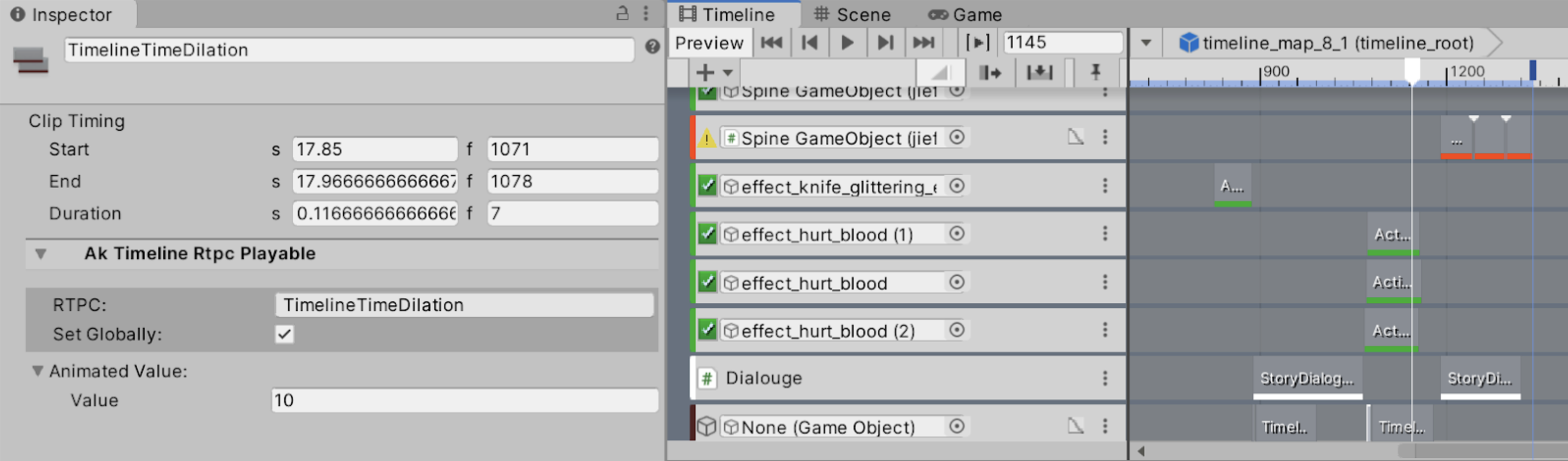

With all the steps above, we've implemented the use of Wwise to drive in-game cinematic timelines. Slow motion (up to 16x slower) and fast motion (up to 4x faster) are now also at our disposal for any duration and frame range, and we can freely set the RTPC in the Unity Timeline window.

The image above illustrates an example of 0.1x slow motion from frame 1071 to 1078. In our implementation, the multiplier must be reset to 1 manually by adding another RTPC key on the frame after the slow motion ends, in this case frame 1079.
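Because the slow-motion value stays in effect until another key sets it back, each RTPC key effectively holds its value until the next one. A minimal model of that behavior, assuming hold-until-next-key semantics and a default of 1; RtpcKey and RtpcTrack are hypothetical names, and the frame numbers mirror the example above (0.1x speed corresponds to an RTPC value of 10, since 1 / 10 = 0.1).

```csharp
using System.Collections.Generic;

public readonly struct RtpcKey
{
    public int Frame { get; }
    public float Value { get; }

    public RtpcKey(int frame, float value)
    {
        Frame = frame;
        Value = value;
    }
}

public sealed class RtpcTrack
{
    private readonly List<RtpcKey> keys;
    private readonly float defaultValue;

    // Keys are assumed to be sorted by frame.
    public RtpcTrack(IEnumerable<RtpcKey> sortedKeys, float defaultValue = 1f)
    {
        keys = new List<RtpcKey>(sortedKeys);
        this.defaultValue = defaultValue;
    }

    // Value in effect at a frame: the last key at or before it, else the default.
    public float ValueAt(int frame)
    {
        float value = defaultValue;
        foreach (RtpcKey key in keys)
        {
            if (key.Frame > frame)
            {
                break;
            }
            value = key.Value;
        }
        return value;
    }
}
```

Without the reset key, the slow-motion value would stay in effect for the rest of the cinematic, which is why the extra key on frame 1079 is needed.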

In the final result, both sounds and visuals slow down correctly, driven by Wwise, eliminating concerns about audio-visual sync. This approach also saves sound designers development time by reducing the need to create separate audio assets for slow-motion and fast-motion frames. Animators, in turn, no longer need to fine-tune slow-motion and fast-motion curves.

Here is a video clip that demonstrates the final result. It contains multiple intentional frame stutters to show the feature at work. The slow motion driven by Wwise Time Stretch can also be heard at 0:23 in the clip.

Disclaimer: The code snippets in this article are reconstructed, generic versions intended solely for illustration. The underlying logic has been verified to work correctly, but project-specific API calls and functions have been omitted from the examples due to potential copyright restrictions.
