Technical report on Wwise, the audio authoring middleware

(Translated from Japanese)

Audiokinetic, the developer of the audio authoring middleware Wwise, held a launch event for their Japanese company Audiokinetic K.K. at the Canadian Embassy in Akasaka, Tokyo. Mr. Naoto Tanaka, Music Director (BGM) and Mr. Kentaro Nakagoshi, Lead Sound Designer (SE), who were responsible for the audio of METAL GEAR RISING: REVENGEANCE, had the opportunity to present the significance of introducing Wwise into their production pipeline.

The presentation is geared towards audio professionals. We will share some of the material that was presented and some details provided by Mr. Ohmori, who was in charge of sound programming on this project. We hope this may help game developers who are considering implementing Wwise.

About the sound engineering:

Using Audiokinetic’s Wwise in METAL GEAR RISING: REVENGEANCE

About BGM:

Interactive music in METAL GEAR RISING: REVENGEANCE

BGM Example 1 video

BGM Example 2 video

Note that sound effects are turned off in the videos so you can hear the music better.

Mr. Ohmori received some questions after the presentation, so he would like to explain a little more on how long it took to integrate Wwise, as well as some points on how we worked with Wwise.

1. Integration period

・About 2 weeks.

・The number of Wwise APIs is quite small.

・If we didn’t know what to do, we just used the sample [settings] for the time being, and it would run.

He thinks it took about 2 weeks to build the basic sound system. It was built to have some level of compatibility with the existing sound system. There aren’t that many APIs. In other words, what programmers can do is quite limited, as he will explain later. There are parts that will ultimately have to be adjusted, like I/O and streaming, but at first, we just kept the sample [settings] as is.

2. Difficulties at initial integration

・There is little that we can program!

He explains that the biggest difficulty during the initial integration of Wwise was that they couldn’t do anything from the programming side. From the programming side, the only thing they could do was execute events, and at first, it felt like their hands were tied. (There are parameter controls and other functions in Wwise, but the main feature is events.)

There’s not even an API to change the volumes. So if the sound team asked for the BGM volume to be lowered during pause, all he could say was, “You have to make that event.” When we first started using Wwise, he remembers saying over and over again, “There’s nothing the programmer can do. You have to create the event yourself.” Once we got used to it, the sound team started setting the necessary processing within the event. After that, all that the programmer had to do was call up events, so work became very easy.
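The division of labor described above can be sketched in a few lines. This is a minimal illustration, not Wwise code: `Mixer`, `EventTable`, `set_bus_volume`, the event names `Pause_Game` and `Resume_Game`, and the -12 dB value are all hypothetical. The point it tries to show is that the volume change lives in the authored event, while game code only posts the event by name.

```python
# Hypothetical mock of an event-driven audio layer.
# None of these names are part of the Wwise SDK.
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Mixer:
    """Stand-in for the audio engine state: bus volumes in decibels."""
    bus_volume_db: Dict[str, float] = field(
        default_factory=lambda: {"BGM": 0.0, "SE": 0.0}
    )


# An action is any callable that changes the mixer; the sound team authors these.
Action = Callable[[Mixer], None]


def set_bus_volume(bus: str, db: float) -> Action:
    """Build an action that sets one bus to a fixed volume."""
    def apply(mixer: Mixer) -> None:
        mixer.bus_volume_db[bus] = db
    return apply


class EventTable:
    """Maps event names to lists of actions (authored data, not game code)."""

    def __init__(self) -> None:
        self._events: Dict[str, List[Action]] = {}

    def define(self, name: str, actions: List[Action]) -> None:
        self._events[name] = actions

    def post(self, name: str, mixer: Mixer) -> None:
        # The only call game code makes: look up the event and run its actions.
        for action in self._events[name]:
            action(mixer)


# Sound team side: decide what "pause" means for the mix.
events = EventTable()
events.define("Pause_Game", [set_bus_volume("BGM", -12.0)])
events.define("Resume_Game", [set_bus_volume("BGM", 0.0)])

# Programmer side: post events by name, with no volume logic in game code.
mixer = Mixer()
events.post("Pause_Game", mixer)
paused_bgm_db = mixer.bus_volume_db["BGM"]
events.post("Resume_Game", mixer)
resumed_bgm_db = mixer.bus_volume_db["BGM"]
print(paused_bgm_db, resumed_bgm_db)
```

With this split, changing how much the BGM ducks during pause means editing the event definition only; the game code that posts `Pause_Game` stays untouched.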

3. CPU and memory performance

・Of course, the richer we make it, the heavier it becomes.

・Quality and performance can be balanced by the sound team.

Many of you will be worried about performance, so he made some performance captures of an actual in-game scene. It’s quite a busy scene, and he hopes it will be a good reference for you.

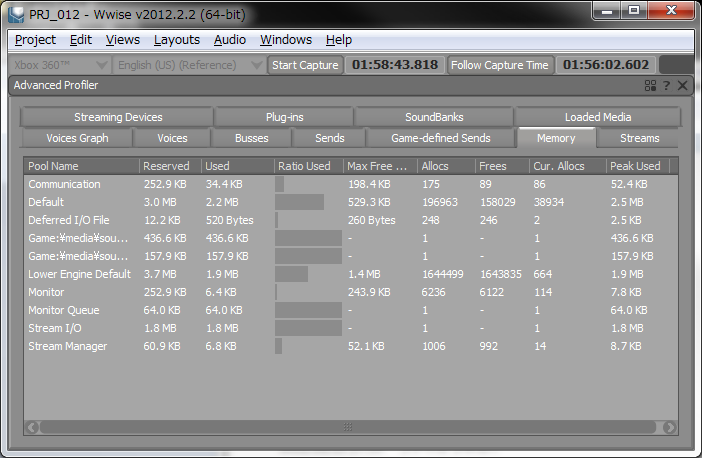

The capture is on an Xbox 360. First, the memory.

In all, it comes to about 10MB. However, wav data is basically not included in that figure. He checked the total wav data for this particular scene, and it was less than 12MB. So the total used for all audio is about 22MB.

We allocated more memory to the Wwise engine than the sample allocation. This is because there are always multiple streams running concurrently, and also to support areas where there are a great number of voices. In this scene, in order to reduce processing, some of the BGM is in memory. Despite that, he thinks the wav data is small. On PS3, the Vorbis format is used, so it’s even smaller.
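As a quick check of the memory figures for this scene, the arithmetic can be written out as below. The 10MB and 12MB values are the rounded numbers quoted in the text (the wav figure is an upper bound). The 512MB of total system RAM on the Xbox 360 is added here for context and is not taken from the capture.

```python
# Rounded figures quoted in the text for this scene (Xbox 360 capture).
engine_memory_mb = 10.0  # Wwise engine memory, wav data basically not included
wav_data_mb = 12.0       # upper bound: the scene used "less than 12MB" of wav data

total_audio_mb = engine_memory_mb + wav_data_mb

# Context added for illustration: the Xbox 360 has 512MB of unified system RAM.
system_ram_mb = 512.0
share_of_ram_pct = total_audio_mb / system_ram_mb * 100.0

print(f"total audio: about {total_audio_mb:.0f}MB")
print(f"share of system RAM: about {share_of_ram_pct:.1f}%")
```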

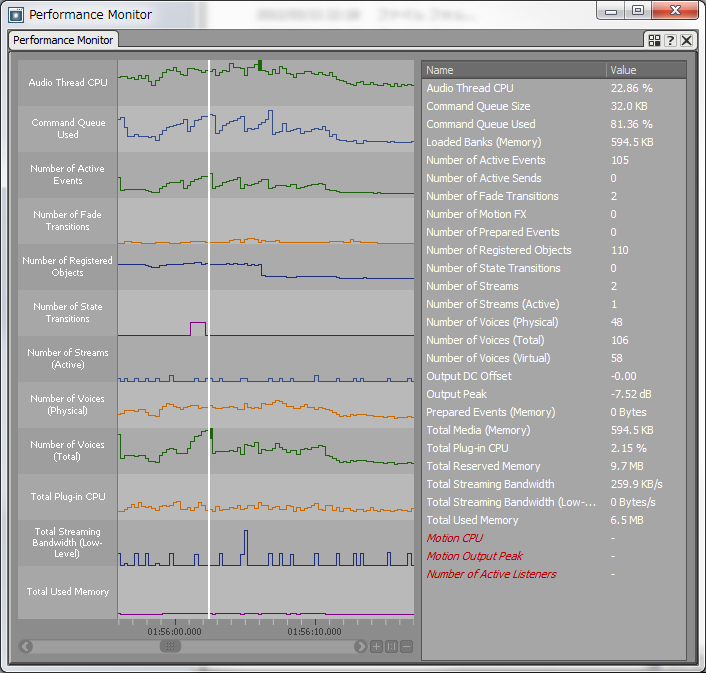

Next, various performance indicators.

The number of voices is 106, the number of voices whose signals were actually processed (Number of Voices (Physical)) is 48, and CPU is 22.86%. This is an Xbox 360 capture, and one hardware thread is used at about 23%. Relative to the system as a whole, the load is about 4-5%.
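The relationship between the per-thread figure and the whole-system figure can be estimated with a rough calculation. The sketch below assumes the Xbox 360 CPU's six hardware threads (three cores with two threads each) and weights them equally. That even split is a simplification, since two threads on one core share resources, so the result is only a ballpark.

```python
# Figures read from the capture described in the text.
cpu_one_thread_pct = 22.86  # CPU load on the one hardware thread used for audio
total_voices = 106
physical_voices = 48

# Assumption: 6 hardware threads (3 cores x 2 threads), all weighted equally.
hardware_threads = 6

share_of_whole_cpu_pct = cpu_one_thread_pct / hardware_threads
physical_fraction = physical_voices / total_voices

print(f"share of whole CPU: about {share_of_whole_cpu_pct:.2f}%")
print(f"voices actually processed: about {physical_fraction:.0%} of all voices")
```

Under these assumptions the estimate lands near the lower end of the 4-5% range quoted in the text, and fewer than half of the 106 voices are being processed at that moment.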

In the case of the PS3, a lot is done on the SPU, so the CPU load becomes far less. You can also see from this graph that the CPU peak and the peak in the number of voices are not aligned. When you look at where CPU peaks, it is at the same time as when the Number of Registered Objects is greatly reduced. This is because when there is a switch in the in-game situation, a large number of objects are released, and this process results in a momentary increase in CPU load.

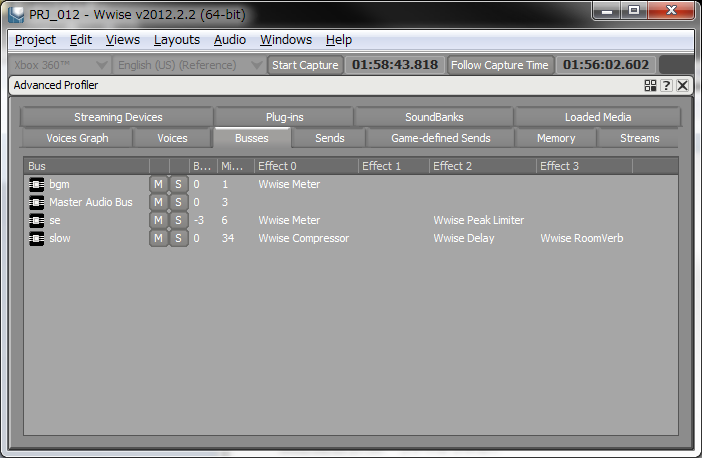

The last capture is on buses.

There are four active buses. The effects being used are: Meter, Peak Limiter, Compressor, Delay, and RoomVerb. In this particular scene, Delay and RoomVerb are bypassed, so the load from effect calculations (Total Plug-in CPU) is smaller. We reviewed these items to make the final decision on memory and CPU. Capture data can be saved, so you can go back later and check whichever part you want. We would keep the capture function on during test plays and then return to the sections we were concerned about, which was very useful for checking the various peaks. Various logs are saved as well, and they were effective for debugging.

When we first started using Wwise, sometimes he was not happy. There is little that the programmer can do, there is more work for the sound team, and the processing isn’t that light, either. Ultimately, though, using Wwise helped us very much. All that the programmer has to do is call up events, and on cost and performance issues, the sound team can make their own decisions, to their own satisfaction, within the predetermined budget. While there was a definite increase in workload for the sound team, they had more control, and less negotiating, and so Mr. Ohmori thinks that maybe it wasn’t too painful for them.

And that’s about it. If you have any other questions, he will answer them as much as he can. Please feel free to comment. He hopes that he can be of help in your evaluations, etc.

This report was originally published in Japanese, on PLATINUMGAMES
