A common question within the game audio community is: how can I explain the benefits of using middleware to my boss or client? From our side of the fence as audio professionals, it may sometimes seem like a no-brainer. To answer this question pragmatically, however, we need to look at it from the client's point of view. Why should they spend extra money on middleware when it may be possible to get the job done without it? How do the benefits stack up against the costs? Third-party engines like Unreal and Unity, with built-in audio capabilities, are becoming increasingly popular for their all-in-one solutions, especially amongst the many small indie teams working with tight budgets. In that landscape, how can we justify investing in a specialized sound engine?

From the perspective of the producer or studio director planning their project, the license fee is cash that they can't spend on something else. Consider a single-platform project with a production budget between $150K and $1.5M USD: the Level B license fee for that budget range is $6,000. In this example, using Wwise may therefore cost roughly the equivalent of one work month of a senior team member, or one and a half work months of a junior. Perhaps that cost means dropping a feature or producing fewer assets. The point is that it's a trade-off. To justify the cost, you need to demonstrate that the benefits on the other side of the scale outweigh it, and from a high-level perspective, this would ideally mean improving quality while reducing the time spent by both the sound designers and the programmers involved.

If Wwise costs the equivalent of one month of an audio programmer's time but saves the equivalent of two months of that programmer's time, the numbers speak for themselves. By spending part of the budget on Wwise, you have effectively given the producer more resources, not fewer, and improved the margin on their project.
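The trade-off above is simple arithmetic, and it can help to show it to a producer explicitly. The following is a minimal sketch: the $6,000 fee comes from the example above, while equating it to one month of an audio programmer's cost is the same illustrative assumption the scenario makes, not a real salary figure. The function names are hypothetical.

```python
def net_budget_effect(license_fee: float, monthly_cost: float, months_saved: float) -> float:
    """Budget freed (positive) or consumed (negative) by buying a middleware license.

    monthly_cost is the monthly cost of the person whose work the middleware saves.
    """
    return monthly_cost * months_saved - license_fee


def break_even_months(license_fee: float, monthly_cost: float) -> float:
    """Months of saved work needed before the license pays for itself."""
    return license_fee / monthly_cost


# Scenario from the text: the $6,000 fee equals about one month of an audio
# programmer's cost (an assumption), and Wwise saves two such months.
LICENSE_FEE = 6_000
AUDIO_PROGRAMMER_MONTHLY_COST = 6_000

print(break_even_months(LICENSE_FEE, AUDIO_PROGRAMMER_MONTHLY_COST))     # 1.0
print(net_budget_effect(LICENSE_FEE, AUDIO_PROGRAMMER_MONTHLY_COST, 2))  # 6000
```

Plugging in your own team's rates and a conservative estimate of months saved turns the pitch into a number the producer can check.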

In other words, whenever our pitch talks about a particular feature or benefit, we need to ask ourselves, and essentially answer for the client, "how does this feature save time and/or enhance quality?" The specific answers will of course vary from one project to another, depending on the requirements and the features already available in the engine of choice, but here's an overview of how some key Wwise features save time and improve quality.

Authoring Workflow

Most of the sound designer’s work in Wwise takes place in the authoring application. Creatively, the authoring workflow delivers a powerful set of features and gives the sound designer tools in a language they understand: volume expressed in decibels, a bus mixing hierarchy, envelopes, LFOs, and visual editing of parameter curves, for example. It’s the same language used in DAWs and samplers, which allows sound designers to work quickly and accurately. Just as importantly, separating audio functionality from game objects minimizes dependencies on access to files in the project: the vast majority of the work can be accomplished without modifying scene or map files, game objects, or blueprints, all of which other team members also need access to. This not only saves the sound designer time (which ultimately feeds into quality: less time wrangling blueprints and source control and waiting for access to files means more time spent making the game sound great!), but also saves other team members time.

Creative Features

For designing and implementing sound effects (we’ll come to VO and music later), the beating heart of Wwise is the Actor-Mixer Hierarchy and its container types: Random, Blend, Switch and Sequence. With these four container types, which can be nested and combined in any way imaginable, we can construct a huge variety of sound behaviors, from essentials such as picking a sound at random from a pool of variants to highly complex layering and dynamic sound structuring. On top of this, we can define Real-Time Parameter Controls (RTPCs), Switches and States, which let values received from the game drive a wide variety of sound properties and behaviors.
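To make the RTPC idea concrete, here is a minimal sketch of mapping a game parameter onto a sound property through a curve. It assumes straight-line segments between points (Wwise offers other curve shapes too), and the "speed" parameter, its range, and the dB values are invented for illustration; this is not the Wwise SDK.

```python
from bisect import bisect_right


def evaluate_curve(points: list, x: float) -> float:
    """Piecewise-linear curve; points is a sorted list of (game_value, property_value)."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]   # clamp below the first point
    if x >= xs[-1]:
        return points[-1][1]  # clamp above the last point
    i = bisect_right(xs, x)   # points[i - 1] <= x < points[i]
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


# Hypothetical mapping: a "speed" game parameter (0-100) drives volume in dB.
SPEED_TO_VOLUME_DB = [(0.0, -24.0), (50.0, -6.0), (100.0, 0.0)]

print(evaluate_curve(SPEED_TO_VOLUME_DB, 25.0))  # -15.0
```

In Wwise, the sound designer draws this curve in the authoring tool; the game only supplies the current parameter value.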

Consider footsteps as an example. If a character requires footstep sounds for 5 different surface materials and 3 different speeds, we can assemble a structure with, from the bottom up: Random Containers holding the samples for each permutation (e.g., Grass/Walk and Grass/Run); Blend or Switch Containers mapping each permutation to a “speed” parameter or Switch; and a Switch Container at the top level switching between materials. This can all be assembled in minutes, and all that’s required from code is to post a “play footstep” Event on each footstep and to set the “material” and “speed” values as they change.
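The footstep hierarchy described above can be modeled as nested containers. This is a hypothetical sketch of the structure, not how Wwise is implemented: it uses Switch Containers for speed (the text allows Blend or Switch), and the four material names beyond Grass, the speed names and the file names are invented.

```python
import random
from dataclasses import dataclass


@dataclass
class RandomContainer:
    samples: list

    def resolve(self, game_state: dict, rng: random.Random) -> str:
        # Pick one variant from the pool each time the Event fires.
        return rng.choice(self.samples)


@dataclass
class SwitchContainer:
    switch_group: str
    children: dict  # switch value -> child container

    def resolve(self, game_state: dict, rng: random.Random) -> str:
        # Delegate to the child selected by the game's current Switch value.
        return self.children[game_state[self.switch_group]].resolve(game_state, rng)


# Hypothetical content: 5 materials x 3 speeds, 4 variants per permutation.
MATERIALS = ["Grass", "Gravel", "Wood", "Metal", "Water"]
SPEEDS = ["Walk", "Jog", "Run"]


def build_footsteps(variants: int = 4) -> SwitchContainer:
    # Top: Switch on material; middle: Switch on speed; bottom: Random variants.
    return SwitchContainer("material", {
        material: SwitchContainer("speed", {
            speed: RandomContainer(
                [f"{material}_{speed}_{i:02d}.wav" for i in range(1, variants + 1)])
            for speed in SPEEDS})
        for material in MATERIALS})


footsteps = build_footsteps()
# Game code only keeps these values current and posts the Event on each step.
game_state = {"material": "Grass", "speed": "Run"}
print(footsteps.resolve(game_state, random.Random(0)))
```

Writing, testing and exposing even this much to a sound designer in a usable UI is the kind of work the next paragraphs argue Wwise saves.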

Here’s another example. A weapon sound may consist of an attack portion, a tail, and a “mechanism” layer that you only hear close up, each with variants. This can be assembled using a Blend Container combining 3 Random Containers, perhaps delaying the mechanism layer by a variable amount of time. Again, this takes minutes to assemble, and it can all be triggered by a single Event.
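As a rough sketch of what that single Event resolves to, the function below blends three random layers and delays the mechanism layer by a random amount. The pool contents, the 10 m cutoff and the 20 to 60 ms delay range are hypothetical, and the hard distance cutoff is a simplification standing in for the attenuation that would make the mechanism layer audible only close up.

```python
import random

# Hypothetical variant pools for one weapon.
ATTACK = ["rifle_attack_01.wav", "rifle_attack_02.wav", "rifle_attack_03.wav"]
TAIL = ["rifle_tail_01.wav", "rifle_tail_02.wav"]
MECHANISM = ["rifle_mech_01.wav", "rifle_mech_02.wav"]


def play_weapon_fire(distance_m: float, rng: random.Random,
                     mech_max_distance_m: float = 10.0,
                     mech_delay_range_s: tuple = (0.02, 0.06)) -> list:
    """One Event -> a blend of three Random layers, as (start_offset_s, sample) pairs."""
    layers = [(0.0, rng.choice(ATTACK)), (0.0, rng.choice(TAIL))]
    # Simplification: a hard distance cutoff stands in for the mechanism layer's attenuation.
    if distance_m <= mech_max_distance_m:
        delay_s = rng.uniform(*mech_delay_range_s)  # variable delay per shot
        layers.append((delay_s, rng.choice(MECHANISM)))
    return layers


rng = random.Random(42)
print(play_weapon_fire(3.0, rng))   # attack + tail + delayed mechanism
print(play_weapon_fire(50.0, rng))  # attack + tail only
```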

Implementing these behaviors from scratch would take time and testing, and would be unlikely to match the speed of working in the authoring UI. Trust me, I’ve done this using Unity and I know! You may approach the cost of a Level B license implementing equivalents of these basic container types alone, and in all likelihood you would still end up with a less streamlined workflow and more limited capabilities than you would have using Wwise.

Profiler

One of Wwise’s most powerful features is the Profiler. The Profiler connects the authoring tool to the game while it’s running, and monitors everything that is going on in the sound engine. Incoming Events are displayed in real time along with the associated Actions performed as a result, such as sounds starting and stopping, or changes to volumes and positional information. It can also display error messages, parameter values, and resource usage. It's a fantastic tool for debugging and optimization, and it saves huge amounts of time by allowing us to quickly identify if and why sounds are not behaving as intended.

None of the leading engines currently provides an out-of-the-box audio toolset with anything comparable, and it would be prohibitively expensive and difficult to independently implement what the Profiler does. The question here isn’t so much “What does it cost to implement?” as “What does it cost to do without it?” A bug that the sound designer could diagnose and fix rapidly on their own using the Profiler would, without it, often require programmer support and more extensive QA testing, as well as more of the sound designer’s time to fix. Given that we might encounter dozens of such bugs over the course of a typical project, it is reasonable to estimate that Wwise would save at least several days of work, if not weeks.

Real-Time Editing

When the Authoring Tool is connected to the game (using the same connection as the Profiler), we can edit in real time. This enables us to mix and fine-tune the game as we play it. Unlike live editing in some game engines, changes made in Wwise are retained at the end of the session. This is invaluable for level mixing, and it also means that we can fine-tune RTPC mappings, attenuation, effect sends, timing properties, blends and many other sound properties and behaviors in context. This significantly reduces the time a sound designer needs to spend on iterations and improves the accuracy with which we can mix and tweak, ultimately helping us drive up quality.

Dynamic Mixing

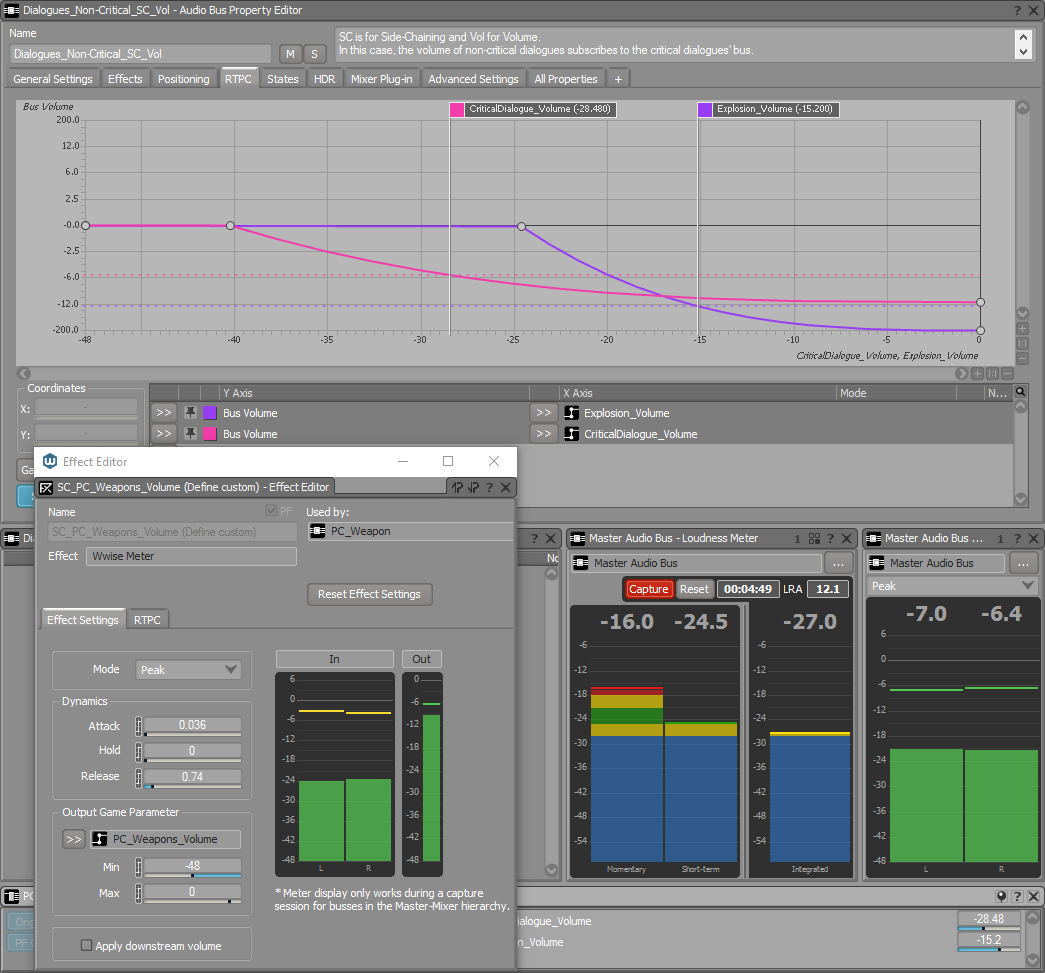

Wwise also provides a number of ways to control the mix dynamically in response to game Events and States, and to the content being played at any given time. High Dynamic Range (HDR) enables the mix to adapt to the loudness of the content being played, suppressing quieter sounds to make room for louder ones and increase their perceived loudness. States can modify bus volume, filtering or Effects. Ducking can also be set up with a few clicks.
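To give a feel for what HDR does, here is a deliberately simplified model; it is not Wwise's actual HDR algorithm, and the threshold, window size and sound levels are invented. The loudest active sound sets the top of a fixed-size loudness window, every sound is shifted down by however far that top sits above the threshold, and sounds that fall below the bottom of the window drop out of the mix.

```python
def hdr_mix(levels_db: dict, threshold_db: float = 0.0, window_db: float = 40.0) -> dict:
    """Simplified HDR window (not Wwise's actual algorithm).

    levels_db maps each active sound to its authored loudness in dB, which may be
    above 0. Returns each audible sound's output level in dB.
    """
    if not levels_db:
        return {}
    # The loudest sound pushes the top of the window up once it exceeds the threshold.
    top = max(threshold_db, max(levels_db.values()))
    bottom = top - window_db
    offset = top - threshold_db  # everything is shifted down by this much
    # Sounds that fall below the bottom of the window are dropped from the mix.
    return {name: level - offset for name, level in levels_db.items() if level >= bottom}


print(hdr_mix({"wind": -20.0, "birds": -30.0}))
# {'wind': -20.0, 'birds': -30.0}
print(hdr_mix({"wind": -20.0, "birds": -30.0, "gunfire": 10.0, "explosion": 25.0}))
# {'gunfire': -15.0, 'explosion': 0.0}
```

With only ambience playing, nothing changes; when the explosion arrives, the ambience makes room and the explosion plays at full scale. A production system would also need smoothing over time, which this sketch omits.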

While a basic ducking system based on whether a sound of a given type is playing may be simple to implement, metering-driven ducking (Side-Chaining) and flexible State mixing are not, and HDR in particular is highly complex. If features like these are required, the implementation time without Wwise may be measured in weeks, not days.
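For contrast, this is roughly the "simple" kind of ducking the paragraph refers to: attenuate a bus whenever a sound of a given category is playing. It is a hypothetical sketch with invented category names and dB amounts; it has no fades, no metering and no side-chaining, which is exactly where the real implementation cost begins.

```python
def duck_gains_db(active_categories: set, rules: list) -> dict:
    """Basic category ducking: (source, target_bus, attenuation_db) rules.

    When any sound of the source category is playing, the target bus is attenuated.
    If several rules hit the same bus, the deepest attenuation wins (no stacking).
    """
    gains = {}
    for source, target, amount_db in rules:
        if source in active_categories:
            gains[target] = min(gains.get(target, 0.0), amount_db)
    return gains


RULES = [("Dialogue", "Music", -9.0), ("Dialogue", "SFX", -4.0), ("Explosion", "Music", -12.0)]
print(duck_gains_db({"Dialogue"}, RULES))               # {'Music': -9.0, 'SFX': -4.0}
print(duck_gains_db({"Dialogue", "Explosion"}, RULES))  # {'Music': -12.0, 'SFX': -4.0}
```

Taking the deepest attenuation rather than summing is one design choice among several; summing would let overlapping rules stack.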

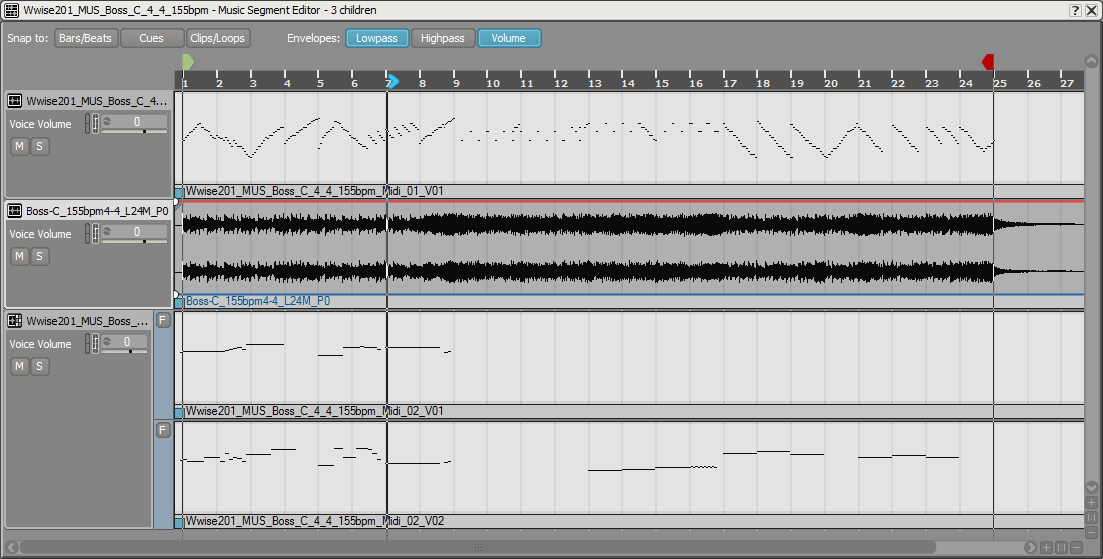

Interactive Music System

Most games need some form of interactive or dynamic music, and this is one of the least well-supported areas of audio within game engines. Wwise’s solution is flexible enough to support whatever approach you want to take, with sample-accurate synchronization and highly configurable transition behaviors. It is a State-driven system, which means all the game needs to do is start the music when the game or level starts and set States as required.
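The sketch below illustrates one small piece of this: a State change from the game that only takes effect on the next bar line, computed in samples. The class, the "Explore"/"Combat" States and the tempo figures are hypothetical, and it only handles fixed tempos whose bar length is a whole number of samples; real music systems handle far more (exit cues, transition segments, tempo changes).

```python
def samples_per_bar(bpm: float, beats_per_bar: int, sample_rate: int) -> int:
    # Rounded to whole samples; accumulates error if a bar isn't a whole number of samples.
    return round(beats_per_bar * 60.0 / bpm * sample_rate)


def next_bar_boundary(now: int, music_start: int, bpm: float,
                      beats_per_bar: int, sample_rate: int) -> int:
    """First bar line at or after sample `now`, in absolute samples."""
    bar = samples_per_bar(bpm, beats_per_bar, sample_rate)
    elapsed = now - music_start
    bars = (elapsed + bar - 1) // bar  # integer ceiling division, elapsed >= 0
    return music_start + bars * bar


class StateDrivenMusic:
    """Hypothetical player: the game only sets States; transitions wait for the next bar."""

    def __init__(self, bpm: float, beats_per_bar: int, sample_rate: int, music_start: int = 0):
        self.bpm, self.beats_per_bar = bpm, beats_per_bar
        self.sample_rate, self.music_start = sample_rate, music_start
        self.state = "Explore"
        self.pending = None  # (new_state, sample at which to switch)

    def set_state(self, new_state: str, now: int):
        switch_at = next_bar_boundary(now, self.music_start, self.bpm,
                                      self.beats_per_bar, self.sample_rate)
        self.pending = (new_state, switch_at)
        return self.pending

    def tick(self, now: int) -> str:
        # Called as playback advances; applies the pending transition exactly on the bar line.
        if self.pending and now >= self.pending[1]:
            self.state, self.pending = self.pending[0], None
        return self.state


music = StateDrivenMusic(bpm=120, beats_per_bar=4, sample_rate=48_000)
print(music.set_state("Combat", now=100_000))  # ('Combat', 192000)
```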

Implementing an interactive music player with even a fraction of the power, timing accuracy (vital for music synchronization) and ease of use is likely to take weeks of programmer time.

Localization Support

As with interactive music, VO localization is not well supported by default in most game engines. Wwise, on the other hand, makes it incredibly easy: all you have to do is drag in assets with matching names and set their language in the import dialog. From a coding standpoint, setting the language takes a single function call.
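The reason matching names matter is that game code always refers to a line by the same name, and only the current language decides which file plays. Here is a minimal sketch of that lookup; the class, method names and folder layout are hypothetical and are not the Wwise API.

```python
class LocalizedVoiceBank:
    """Hypothetical lookup: VO assets share one name across all languages."""

    def __init__(self, language: str):
        self.language = language
        self._paths = {}  # (language, line name) -> file path

    def import_asset(self, name: str, language: str, path: str) -> None:
        # Mirrors "drag in assets with matching names and set their language".
        self._paths[(language, name)] = path

    def set_language(self, language: str) -> None:
        # The one call game code makes when the player picks a language.
        self.language = language

    def resolve(self, name: str) -> str:
        # Game code always asks for the same name; the language picks the file.
        return self._paths[(self.language, name)]


bank = LocalizedVoiceBank("English")
bank.import_asset("VO_Guard_Alert_01", "English", "English/VO_Guard_Alert_01.wav")
bank.import_asset("VO_Guard_Alert_01", "French", "French/VO_Guard_Alert_01.wav")
bank.set_language("French")
print(bank.resolve("VO_Guard_Alert_01"))  # French/VO_Guard_Alert_01.wav
```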

Platform Support

Cross-platform development is one area where the commonly used engines are particularly strong, and this extends to audio as well. Wwise still provides a bit of an edge, however, with comprehensive control over compression and format conversion at micro and macro levels via ShareSets (see below), which is invaluable for making per-platform content optimization fast and effective.

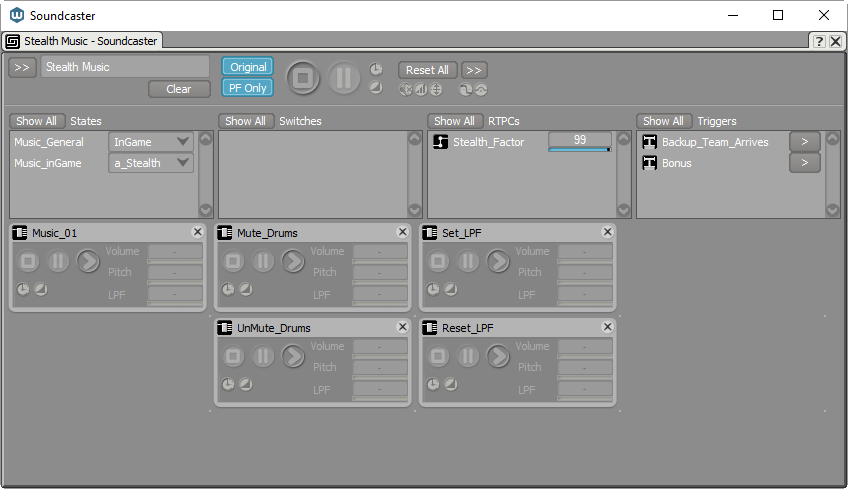

Soundcaster / Prototyping

A principle that has always been at the heart of Wwise's design is to empower sound designers by providing them with maximum control of sound behaviors, with minimum dependency on game code. When a weapon is fired, all the game does is post an Event to Wwise. What happens from there onwards, such as layering, randomizing and sequencing component sounds, and perhaps even affecting other sounds, is all in the hands of the sound designer.
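That division of labor can be sketched as a data-driven dispatch: the game knows only an Event name, while the list of actions behind it is data the sound designer owns. The Event and action names below are hypothetical, and appending to a log stands in for actually executing each action.

```python
# Hypothetical authored data, owned and edited by the sound designer.
EVENTS = {
    "Play_Weapon_Fire": [("play", "Weapon_Fire_Blend"), ("duck", "Music")],
}


def post_event(name: str, events: dict, log: list) -> None:
    """The only audio call game code makes: it knows the Event name and nothing else."""
    for action, target in events.get(name, []):
        log.append(f"{action}:{target}")  # stand-in for actually executing the action


log = []
post_event("Play_Weapon_Fire", EVENTS, log)
# The designer adds a shell-casing layer in data; the game code above is unchanged.
EVENTS["Play_Weapon_Fire"].append(("play", "Shell_Casing_Random"))
post_event("Play_Weapon_Fire", EVENTS, log)
print(log)
```

The second call produces the extra layer without any change to the calling code, which is the point of keeping behavior in data.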

The Soundcaster enables the sound designer to test these behaviors offline, simulating game inputs before they have been implemented, or in more controlled conditions than the game allows. Because audio behavior is decoupled from code, implementation designs can also be tested before any code is written. The result is that we can clearly establish what works in a rapid prototyping environment and eliminate slower, more costly code iterations.

ShareSets

ShareSets are probably among the most underappreciated of Wwise’s features. They are essentially reusable settings templates for Attenuations, Modulators, Effects and conversion settings. Hardly the sexiest-sounding feature, but here's the thing: they enable the sound designer to make sweeping macro-level changes and to fine-tune details, both in an extremely efficient way.

A great example would be platform optimization. Let's say our game is ready to ship but the PS4 SoundBanks exceed their memory budget. How are we going to shave off the 4 MB we have been asked to cut? Using ShareSets, we can adjust compression settings for the whole platform in one place. Or we can create a custom setting for an entire category of sounds to compress them more or less, downsample them, or even force them to mono, balancing quality against memory usage.
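A back-of-the-envelope model shows why a per-category override is such an effective lever. Everything here is an invented illustration: the content list, the 10:1 compression ratio (real codecs vary with content) and the `Conversion` class, which is only a stand-in for a conversion ShareSet. Downsampling the ambience to 24 kHz and forcing it to mono happens to recover about the 4 MB in question.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sound:
    name: str
    category: str
    seconds: float
    channels: int


@dataclass(frozen=True)
class Conversion:
    """Hypothetical stand-in for a conversion ShareSet."""
    sample_rate: int
    force_mono: bool
    compression_ratio: float  # PCM size / encoded size; assumed constant


def encoded_bytes(sound: Sound, conv: Conversion) -> float:
    channels = 1 if conv.force_mono else sound.channels
    pcm_bytes = sound.seconds * conv.sample_rate * channels * 2  # 16-bit PCM
    return pcm_bytes / conv.compression_ratio


def bank_bytes(sounds, default: Conversion, overrides: dict) -> float:
    # Each sound uses its category's override if one exists, else the platform default.
    return sum(encoded_bytes(s, overrides.get(s.category, default)) for s in sounds)


# Invented content: 5 one-minute stereo ambience loops and 300 two-second stereo SFX.
sounds = ([Sound(f"amb_{i}", "Ambience", 60.0, 2) for i in range(5)]
          + [Sound(f"sfx_{i}", "SFX", 2.0, 2) for i in range(300)])
platform_default = Conversion(sample_rate=48_000, force_mono=False, compression_ratio=10.0)
ambience_override = {"Ambience": Conversion(sample_rate=24_000, force_mono=True,
                                            compression_ratio=10.0)}

before = bank_bytes(sounds, platform_default, {})
after = bank_bytes(sounds, platform_default, ambience_override)
print(before - after)  # 4320000.0 bytes saved, about 4.3 MB
```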

In the same way, we can also apply attenuation settings across large or small categories of sounds to ensure that the changes we make in one place are applied everywhere we want them to be.
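The key property is that sounds reference a shared settings object instead of holding private copies, so one edit reaches every user. A minimal sketch, in which the `Attenuation` class, the linear fall-off and the distances are all hypothetical simplifications:

```python
from dataclasses import dataclass


@dataclass
class Attenuation:
    """Hypothetical stand-in for an Attenuation ShareSet."""
    max_distance_m: float

    def gain(self, distance_m: float) -> float:
        # Linear fall-off to silence at max_distance_m (a deliberate simplification).
        return max(0.0, 1.0 - distance_m / self.max_distance_m)


SMALL_PROPS = Attenuation(max_distance_m=20.0)
# Many sounds reference the same settings object rather than holding copies.
sound_attenuations = {"cup_drop": SMALL_PROPS, "chair_scrape": SMALL_PROPS, "book_fall": SMALL_PROPS}

print(sound_attenuations["cup_drop"].gain(10.0))   # 0.5
SMALL_PROPS.max_distance_m = 40.0                  # one edit in one place...
print(sound_attenuations["book_fall"].gain(10.0))  # ...0.75 for every sound using it
```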

The benefits here in terms of time, efficiency, and keeping a project manageable are huge. The main beneficiary is the sound designer. With Wwise, sound designers can channel all their time into perfecting audio quality rather than wading through settings, adjusting and testing, and adjusting again.

Conclusion

So, “What can Wwise do to benefit the project overall?” In summary, it saves significant amounts of sound designer time, establishes an efficient workflow, and frees time for value-added work such as creating content and making the project sound great. It also minimizes coding time by putting control and creative features in the hands of the sound designer, potentially freeing up weeks or even months of programming resources to be spent in the best way possible: ensuring that your game or project is the best it can be.

Comments

Tom Todia

October 18, 2016 at 08:45 pm

This is indeed a great mapping of "Why Wwise". Really well written and thought out blog post Ciaran, thanks for sharing it.