Or: How I learned to stop worrying and love the brief.

Photo: That’s me, at home, struggling to alternate between VR and coder-reality

Introduction

"OK, so what's this guy selling?", I hear you ask. That's a fair point (ha-ha). After all, building DSP plug-ins for various audio APIs has been an activity I've built my career around. However, it's precisely that experience that has taught me just how critical a tailor-made DSP is for any modern video game.

The common scenario

If you're a sound designer, you've probably thought at one time or another that you wanted something that didn't exist yet. Maybe a close fit did exist, but it was too expensive ($$$ or CPU-wise). Maybe it didn't already exist for a commercial reason: too esoteric. I'll disclose this much: It's not often that someone has a completely original plug-in idea. More likely, the explanation as to why something like what they've got in mind doesn't exist is rather mundane.

If you're an audio programmer, no doubt you've at least thought about writing a DSP plug-in as part of some kind of personal development, or you've worked on a few commercial 3rd party plug-ins that already had an established business case - even if it wasn't immediately obvious to you (I've certainly been there). However, surprisingly few audio programmers in the game industry have experience writing bespoke DSP plug-ins from scratch. It's seen as a "black art".

Often the pursuit of enlightenment is simply asking the right questions, so let's start by asking this: Why do commercial 3rd party DSP plug-ins exist at all?

- Most obviously because Audiokinetic supports it! Naturally, this facilitates their own development of built-in audio effects, but fortunately they've opened it up to the rest of us, and for that we should all be eternally grateful.

- A technology company has some proprietary audio DSP technology they want to license to game developers, and since Wwise is a popular middleware solution, the best way to package that technology is as a Wwise plug-in.

- A video game console platform vendor has some special audio hardware in their box, and the cleanest way to get game studios to access it is by authoring a Wwise plug-in.

- Any combination of these last two reasons.

Whiteboard: The initial phase of the development process

Note that rarely is a game developer in the loop about how any of these plug-ins are made. Audiokinetic's own effects suite notwithstanding, either your studio has made the decision to support a certain platform (and that platform vendor is supporting you in some way), or you're presented with a shelf of after-sales parts that might be of use.

Now, I mean this with absolutely no disrespect to those 3rd party vendors, but if we were talking about graphics and your studio was expected to only use built-in or 3rd party shaders, would that be acceptable? Probably not, because as we all witness from time to time, video games need to have their own visual aesthetic in order to differentiate them from the rest and, in practical terms, there are all kinds of performance reasons why bespoke shaders are written for a game.

As far as I'm concerned, game audio is no different.

So here's another question for you: Why DON'T in-house Wwise plug-ins get developed?

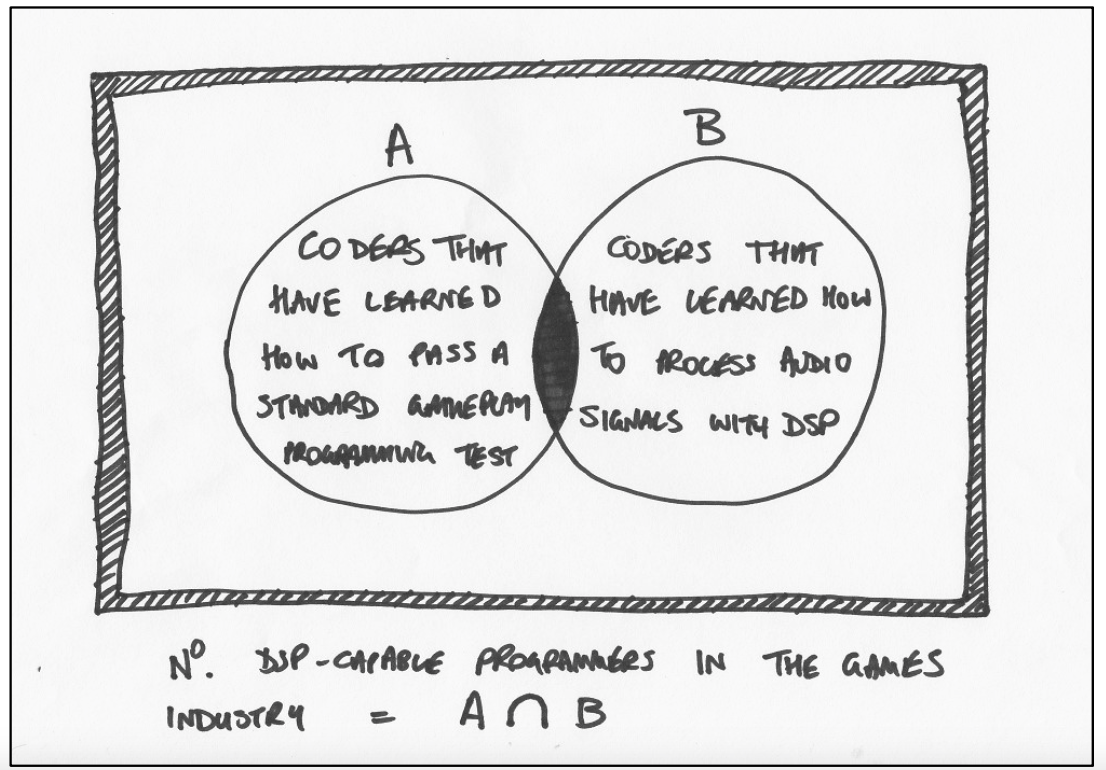

- Typically, studios don't have the expertise in-house. As regrettable as that is, game audio programmers with low-level DSP knowledge are "as rare as hen's teeth", or some other idiom I'd rather not repeat here.

- "There's no time, we're trying to make a game here!" Not everyone’s an audio expert like you are, and so the rest of the team might not initially understand what you’re trying to achieve. Budgeting for asset creation and sound design may well be a given, but DSP development time usually needs clear justification.

- "Why can't we just do it the way we've done it before?" Considering that this industry was born out of technological innovation, studios can be incredibly risk-averse. There's a comfort zone associated with game audio, and a lot of studios will languish in it - even to their peril.

Whiteboard: A later phase of the development process

I have some personal views on the last three points, which aren't going to be terribly popular with some people, but here we are. Let's get it over with...

There are in fact thousands of software engineers out there who are experienced low-level DSP coders. They work in other industries, such as in-car systems, cinema, and music production tools. The reason you don't see them in the game industry is that the recruitment methods a lot of studios use to assess a programmer's ability involve testing for game-play or game-play-related disciplines instead. Therefore, the only audio programmers that get hired in the games industry are usually the ones that have learned how to pass those standardized game-play code tests. A few of them have DSP skills as well.

Whiteboard: Any excuse to use a Venn diagram

When it comes to budgeting for audio code development, it really depends on the kind of game you're making and what tech you've already got. I'm not going to delineate what game types I think need more or less audio code development, but I'll leave you with this little nugget:

The more immersive the game audio is, the less sensitive the player is to graphics rendering issues. [1]

Thinking about making a VR game? Multiply that last statement by 8. [2]

The third point, about risk aversion, is rather contentious because the video game business is a high-risk endeavour, so it would make sense that if a studio has a proven way of working, they should stick to it. Here's the other side of that coin, however: resting on one's laurels is also a massive business risk. It takes just one studio to ship a game that raises the quality bar in some meaningful way, for its competitors to be put squarely on their back foot for their upcoming games. Particularly if you're doing a triple-A title, surely the whole point is to produce something that is state-of-the-art in every possible way? If you let some other studio take that next step ahead of you, your next game will likely be in trouble. What game developer would not prefer to out-class its competitors, whether it's on graphics, physics, AI, or anything else? If scientific experiments demonstrate that what a player hears during gameplay is critical to their experience, then there is lots to be gained by developing new audio tech too. Did I mention VR audio yet? It's a gold rush right now.

Where to begin

Aside from getting your plug-in idea green-lit, there are actually several types of Wwise plug-ins to consider before deciding how you're going to approach development.

The most widely used Wwise plug-in types

Effect plug-ins

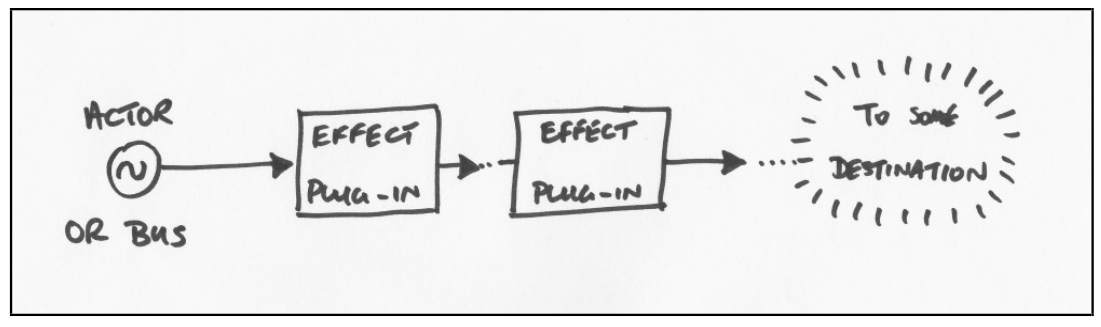

Effect plug-ins apply processing to elements in either the Actor-Mixer or Master-Mixer Hierarchy, and they achieve this by inserting themselves into an element’s signal chain. Any kind of dedicated Effect (such as filters, dynamics, saturation, time-based envelopes, echoes, and reverbs) intended to be used in isolation, or in a group of elements, should be implemented as one of these.
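To make "inserting into an element's signal chain" a little more concrete, here is a minimal sketch of an in-place effect, written in Python purely for illustration. It does not use the Wwise SDK: the class `OnePoleLowPass`, its `process` method and the 48 kHz sample rate are all my own assumptions. The shape to notice is that the effect is handed a buffer, overwrites it, and keeps its filter state alive between buffers.

```python
import math


class OnePoleLowPass:
    """Hypothetical in-place effect: a one-pole low-pass filter."""

    def __init__(self, cutoff_hz: float, sample_rate: float = 48000.0):
        # One-pole smoothing coefficient: a = exp(-2*pi*fc/fs)
        self.a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
        self.state = 0.0  # filter memory, carried from one buffer to the next

    def process(self, buffer: list) -> None:
        """Overwrite the buffer with the filtered signal (in place)."""
        for i, x in enumerate(buffer):
            self.state = (1.0 - self.a) * x + self.a * self.state
            buffer[i] = self.state


# Usage: feed a short DC step through the effect.
fx = OnePoleLowPass(cutoff_hz=1000.0)
block = [1.0] * 8
fx.process(block)  # block now ramps smoothly towards 1.0
```

A real effect would be written in C++ against the SDK's own interfaces, but the receive, modify, hand back rhythm is the part worth prototyping first.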

Whiteboard: How effect plug-ins can insert into the elements of the Actor-Mixer Hierarchy

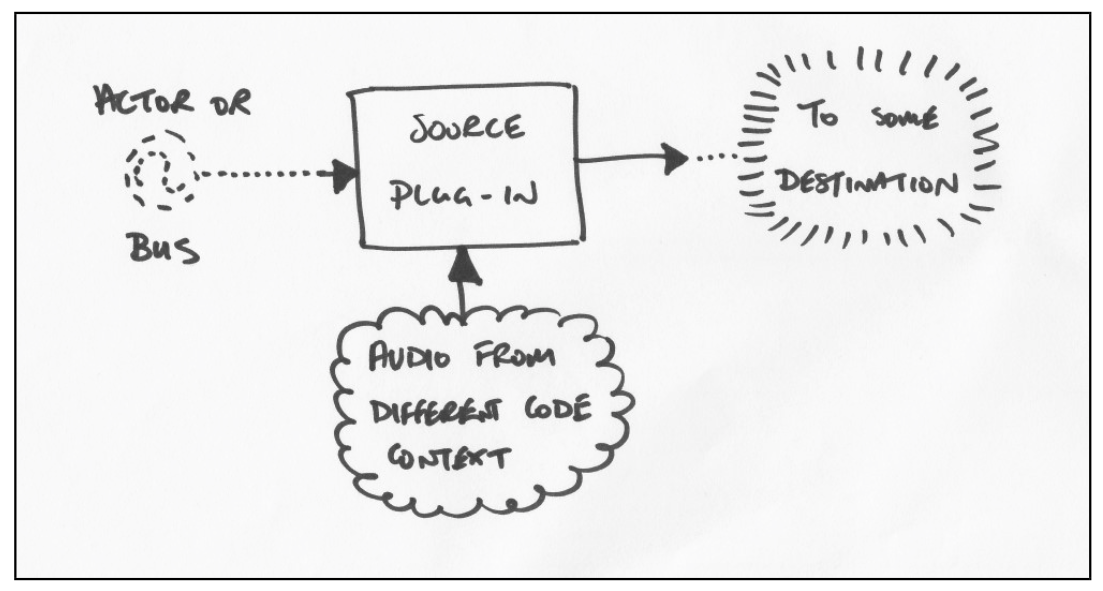

Source plug-ins

Like effect plug-ins, source plug-ins can be inserted into the effects page of any element. However, they are used to inject sound into the system, which is why they are commonly placed in an element in the Actor-Mixer Hierarchy. There’s no technical reason why you can’t place them in an element of the Master-Mixer Hierarchy; it just means they will be playing continuously (in some cases, that’s what you want). The obvious applications would be a signal generator or some kind of synthesizer; but, equally, you could also use a source plug-in to stream audio from a different context entirely. If you want to make a sound effect procedurally, then this should be your starting point.
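As a rough illustration of what "injecting sound" means, the sketch below is about the simplest source I can think of: a sine generator that fills buffers on request. Again, this is plain Python and not Wwise SDK code; `SineSource`, `render` and the default sample rate and gain are hypothetical names and values chosen for the example.

```python
import math


class SineSource:
    """Hypothetical source: produces audio instead of processing it."""

    def __init__(self, freq_hz: float, sample_rate: float = 48000.0,
                 gain: float = 0.5):
        self.phase = 0.0
        self.phase_inc = 2.0 * math.pi * freq_hz / sample_rate
        self.gain = gain

    def render(self, num_frames: int) -> list:
        """Return the next num_frames samples of the sine wave."""
        out = []
        for _ in range(num_frames):
            out.append(self.gain * math.sin(self.phase))
            self.phase += self.phase_inc
            if self.phase >= 2.0 * math.pi:  # keep the phase bounded
                self.phase -= 2.0 * math.pi
        return out


# Usage: two consecutive buffers continue the same waveform.
src = SineSource(freq_hz=1000.0)
first = src.render(48)
second = src.render(48)
```

Because the phase is stored on the object, consecutive buffers join up without clicks, which is the first thing to get right in any procedural source.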

Whiteboard: How to inject audio somewhere into the hierarchy

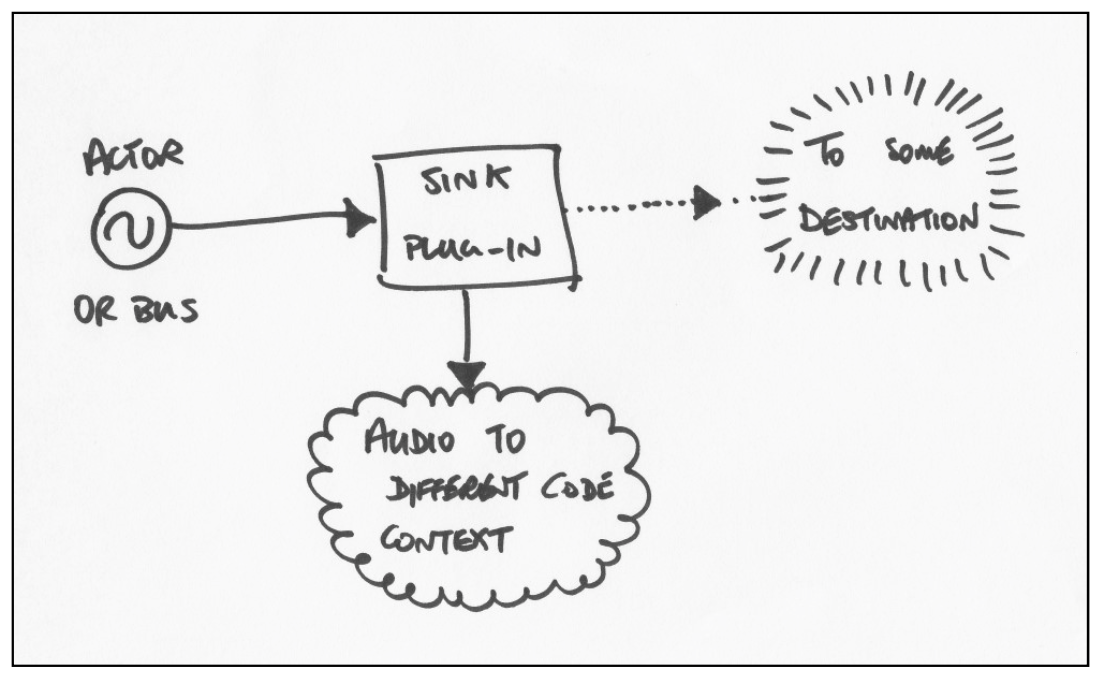

Audio Device (a.k.a. Sink) plug-ins

While outgoing sounds normally resolve to a system OS output, they don’t have to. Sink plug-ins allow you to tap off sound from an element and send it to a different context altogether. Need to make your game react to sound in some way? Then this is the plug-in type for you.
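Here is a hedged sketch of the "make your game react to sound" case. The sink below does nothing but measure the RMS level of whatever buffer it is given, so that game code could poll it. It is illustrative Python rather than the Wwise SDK; `LevelMeterSink`, `consume`, `is_loud` and the 0.25 threshold are all assumptions of mine.

```python
import math


class LevelMeterSink:
    """Hypothetical sink: taps audio and exposes its level to the game."""

    def __init__(self):
        self.rms = 0.0

    def consume(self, buffer: list) -> None:
        """Measure the RMS level of the latest buffer; the audio goes no further."""
        if not buffer:
            return
        self.rms = math.sqrt(sum(x * x for x in buffer) / len(buffer))

    def is_loud(self, threshold: float = 0.25) -> bool:
        """Something game code could poll, e.g. to alert a nearby enemy."""
        return self.rms >= threshold


# Usage: a square-ish burst at half scale reads as loud.
sink = LevelMeterSink()
sink.consume([0.5, -0.5, 0.5, -0.5])
loud = sink.is_loud()
```

In practice you would probably smooth the level over several buffers before letting gameplay depend on it.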

Whiteboard: How to extract audio from somewhere in the hierarchy

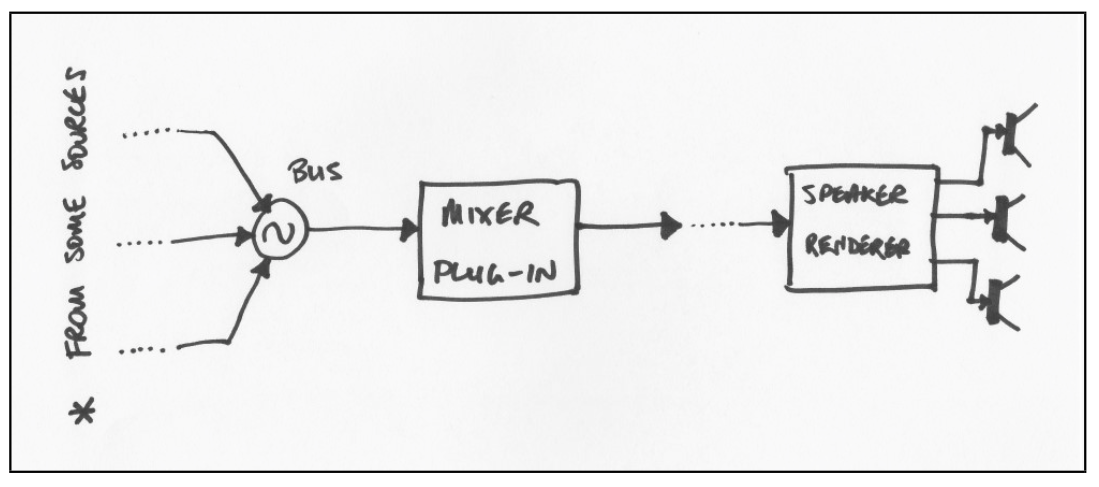

Mixer plug-ins

Unlike the other three, these mixer plug-ins can only be placed in the mixer slot of a master-mixer element. They are similar to effect plug-ins in that they insert themselves into the signal path of an element. The difference is that they take part in the rendering process that turns a sound object into discrete speaker channels. If you’re intending to implement your own 3D panner, or a processor that needs to be aware of other instances in other master-mixer elements, this is the form your plug-in should take.
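The step of turning sound objects into discrete speaker channels can be sketched with a toy stereo panner. This is a minimal Python illustration under my own assumptions, not the Wwise mixer plug-in interface: `pan_gains` and `mix_to_stereo` are hypothetical helpers, each sound object is just a mono buffer plus a pan position from -1 (left) to +1 (right), and the panning uses the common constant-power law.

```python
import math


def pan_gains(pan: float) -> tuple:
    """Constant-power gains (left, right) for pan in [-1.0, 1.0]."""
    angle = (pan + 1.0) * math.pi / 4.0  # maps to 0 .. pi/2
    return math.cos(angle), math.sin(angle)


def mix_to_stereo(objects: list, num_frames: int) -> tuple:
    """Sum (mono_samples, pan) sound objects into left/right channels."""
    left = [0.0] * num_frames
    right = [0.0] * num_frames
    for samples, pan in objects:
        gain_l, gain_r = pan_gains(pan)
        for i in range(num_frames):
            left[i] += gain_l * samples[i]
            right[i] += gain_r * samples[i]
    return left, right


# Usage: one object hard left, one in the centre.
objects = [([1.0] * 4, -1.0), ([0.5] * 4, 0.0)]
left, right = mix_to_stereo(objects, 4)
```

The key difference from an effect is visible even here: the function sees every object at once, which is what lets a mixer make decisions across instances.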

Whiteboard: How mixer plug-ins insert into elements of the Master-Mixer Hierarchy

So you’ve got a grip on the different ways you can customize the handling of audio in Wwise. Now, on to how you can convince the production team that you should make something new!

The trouble with describing those plug-in types in the way that I have done above is that they are fairly dry explanations which probably won’t garner much enthusiasm from your non-audio-savvy production team. I believe it’s much better to sell your DSP idea conceptually or by rapid prototyping.

By example

In Guitar Hero Live, the sound entering the microphone needs to be pitch tracked in order to display a graph and score the accuracy of the singer’s performance. Now, clearly this has been done before with the likes of the previous Guitar Hero, SingStar, Rock Band, or any other recent karaoke system. If you’re ever in the position to make another rhythm action game that supports vocals, the key is to reference the feature as a “minimum shippable requirement” or MSR. Production shouldn’t be surprised, and they won’t need to know the audio coder’s dastardly plan to use protomatter in the signal matrix. It may be the only way to solve certain problems.

Speaking of Sci-Fi, in Elite: Dangerous, when you use the in-game voice chat, something happens to your dialogue such that it sounds like Apollo mission chatter at the receiving end. This makes the atmosphere of talking to other human pilots very, very “astronaut-like”. So, the subjective description for that work could have been “make the voice-chat sound like the players are Apollo astronauts”. Whatever the combination of Wwise plug-ins required to make that happen is up to the audio designers and coders. Production just needs to know how many hours they need to budget to make it happen.

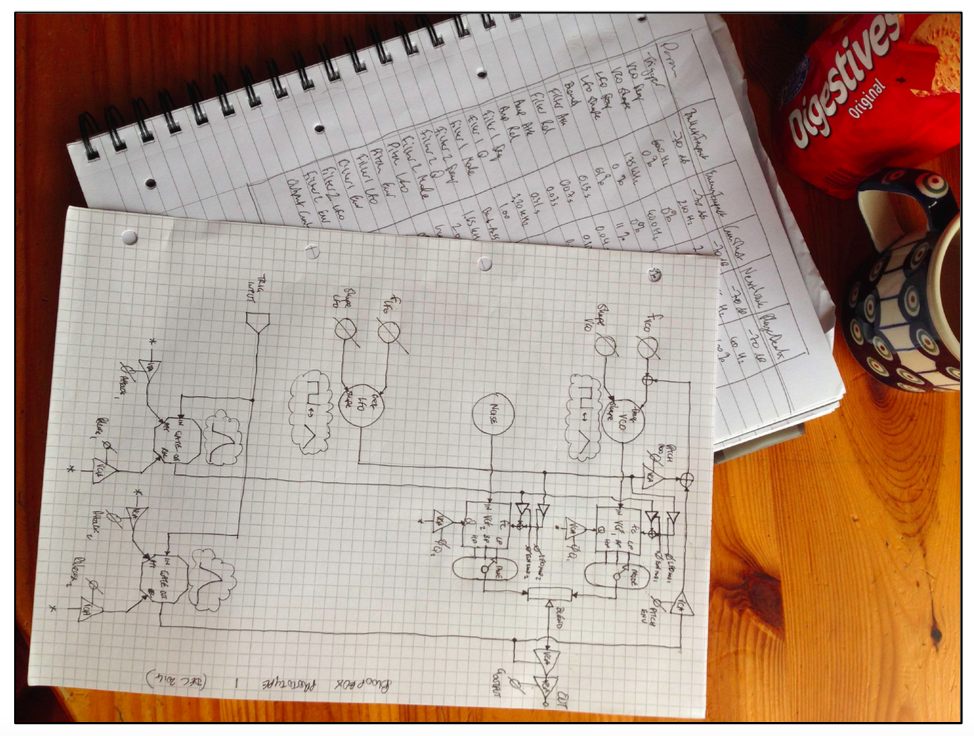

By rapid prototyping

There are a number of modular sound design tools out there that could, in principle, demonstrate your plug-in idea without getting bogged down in code straight away. It doesn’t even have to work in game. So long as your prototype can give a live, responsive demonstration, everyone will be on the same page. Have you ever played around with one of these?

- [Open Source] Pure Data. Also, check out Enzien Audio’s “Heavy” tool set: it compiles Pd patches and they’ve just added some Wwise support!

- [Cycling ‘74] Max

- [Native Instruments] Reaktor

- [Plogue] Bidule

Also, when you get to implement the real thing, your prototype will have given you a clear idea of what parameters you’ll need and what their ranges are. Seriously, get that all sorted out before you start using your plug-in in earnest. You’ll thank me.
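One lightweight way to "get that all sorted out" is to write the parameter list down as data before any DSP exists. The sketch below is only a suggestion of what that might look like: the `ParamSpec` type, the parameter names and every range and default in it are invented for the example and would come from your own prototype.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParamSpec:
    """Hypothetical description of one plug-in parameter."""
    name: str
    minimum: float
    maximum: float
    default: float
    unit: str = ""

    def clamp(self, value: float) -> float:
        """Force a requested value into the agreed range."""
        return max(self.minimum, min(self.maximum, value))


# Illustrative values only; the real ranges come out of the prototype.
PARAMS = {
    "cutoff_hz": ParamSpec("cutoff_hz", 20.0, 20000.0, 1000.0, "Hz"),
    "drive_db": ParamSpec("drive_db", 0.0, 24.0, 6.0, "dB"),
    "mix": ParamSpec("mix", 0.0, 1.0, 1.0),
}


def set_param(state: dict, name: str, value: float) -> None:
    """Store a parameter value, clamped to its declared range."""
    state[name] = PARAMS[name].clamp(value)


# Usage: start from defaults, then apply an out-of-range request.
state = {name: spec.default for name, spec in PARAMS.items()}
set_param(state, "mix", 1.5)  # stored as 1.0
```

Having the ranges in one table also gives sound designers something to review and argue about long before the plug-in is finished.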

Photo: Planning ahead doesn’t take a lot of effort, although a decent beverage biscuit is important

You've got your green light, now you just need a little help on the DSP side

It is pure arrogance for any programmer to think that they know everything there is to know about a given programming technique, and, in my mind, the chance to learn something new is usually half the point of being a programmer. While there are bits and pieces of audio code explained on the web, by far the most useful information that I know of exists in the following books.

CURTIS ROADS "THE COMPUTER MUSIC TUTORIAL"

This is more of an ideas book than anything, as there is very little maths and virtually no code examples to mention. However, despite being (in technical terms) an inch deep, the book's coverage is a mile wide, and therefore great for introducing digital audio concepts to the uninitiated. I'd recommend this to sound designers and programmers wanting to start learning about the high-level concepts.

UDO ZÖLZER "DAFX - DIGITAL AUDIO EFFECTS"

Literally the opposite of the last book, this one focuses on blueprints for building specific DSP blocks. If you need a modern day technical reference that uses citations, this is probably the best investment you'll make for a long time. While it tends to skip over some of the finer details of some of the DSP techniques it describes, implementation-wise, it'll get you 90% of the way there, 90% of the time.

HAL CHAMBERLIN "MUSICAL APPLICATIONS OF MICROPROCESSORS"

Probably the first publication of its kind: Hal Chamberlin was apparently building synthesizers with 1980s microcomputers. The fact that it's still relevant just goes to prove that maths is timeless. A long time out of print, it is available second-hand and occasionally at affordable prices. If you can grab it, do so, but the juicy parts are probably found in the next recommendation.

BEAT FREI "DIGITAL SOUND GENERATION" (1) & (2)

Much in the same vein as DAFX: lots of DSP blocks described in true technical fidelity. Also, it's free and it's amazing. Not just because it's free, but OMG it's free.

PRESS, FLANNERY, TEUKOLSKY, VETTERLING "NUMERICAL RECIPES - THE ART OF SCIENTIFIC COMPUTING"

This is one of those source books that a lot of science and engineering people acquire at some point while at university. Mine's the Pascal edition - apparently I'm older than I look. The most recent version purports to have been completely rewritten for C++, but that's not really the point. The point is, if you ever need to write something like a Fast Fourier Transform routine from scratch, this book should probably be your starting point.

Whiteboard: Many forms of Numerical Recipes have existed throughout the ages

And finally, some friendly advice...

Know what you know, know what you don't know

More often than not, complex signal processing techniques can be broken down into a network of simple signal processing stages. So, work out what they are first and work on parts where your experience is weakest before committing to the full-fat implementation. It's simple risk mitigation.
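As a small illustration of "a network of simple stages", the sketch below builds three trivial per-sample stages and wires them in series. Everything in it is an assumption for the sake of the example (the helper names, the 300 Hz and 3 kHz cutoffs, the drive amount); the result is a crude band-limited, overdriven "radio" flavour, not a recipe for any shipped effect.

```python
import math


def one_pole_lowpass(cutoff_hz: float, sample_rate: float = 48000.0):
    """Return a stateful per-sample low-pass stage."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    state = 0.0

    def stage(x: float) -> float:
        nonlocal state
        state = (1.0 - a) * x + a * state
        return state

    return stage


def one_pole_highpass(cutoff_hz: float, sample_rate: float = 48000.0):
    """High-pass built as 'input minus low-pass of input'."""
    lowpass = one_pole_lowpass(cutoff_hz, sample_rate)
    return lambda x: x - lowpass(x)


def soft_clip(drive: float):
    """Memoryless saturation stage."""
    return lambda x: math.tanh(drive * x)


def chain(*stages):
    """Run each sample through every stage, in order."""
    def process(buffer: list) -> list:
        out = []
        for x in buffer:
            for stage in stages:
                x = stage(x)
            out.append(x)
        return out
    return process


# Usage: high-pass, then low-pass, then saturation.
processor = chain(one_pole_highpass(300.0),
                  one_pole_lowpass(3000.0),
                  soft_clip(4.0))
output = processor([1.0] * 2000)  # a DC input decays towards silence
```

Each stage can be tested and tuned on its own, so the one you understand least can be tackled first without the rest of the chain in the way.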

There is absolutely no shame in changing your mind

Unless you are reproducing something you've made before, most sound processing ideas evolve as you work on them. Stay flexible and avoid producing too much implementation from the get-go. You're going to need to try bits out and see if it's close to what you imagined, or else you might not end up with the right result. Demonstrate, then iterate. I guarantee you that if you allow yourself the time to edge your way towards a high-level concept, the end result will be more satisfying.

Give your plug-in idea the love it deserves

As a game gets into the later stages of development, priorities can shift massively. You may be familiar with this scenario: The development effort goes from being proactive to reactive. This type of environment can eat into your plug-in’s development effort. And, if you let that happen for too long, your plug-in will never be all that it can be. It may never be. DSP is hard to get right and, therefore, sustaining focus is a necessity, not a luxury. Aim to get this work done early in the development cycle and it will get the love it deserves.

References

[1] http://captivatingsound.com/phd-thesis-captivating-sound-the-role-of-audio-for-immersion-in-games/

[2] Not 7, not 9, exactly 8. OK, I may have made that coefficient up, but the point still stands.

Comments

Toby Mason

January 10, 2017 at 06:27 pm

Thanks for these most timely reading resources! I really want to create some procedural plugins for WWise for my VR project, but I know that I don't know all the DSP. Excited to learn!

Robert Bantin

January 11, 2017 at 12:26 pm

You're welcome! Make sure you look at the example plug-in source code supplied with the Wwise SDK and (especially) have a working stub plug-in before getting all-crazy. ;-)

Jim Croft

January 11, 2017 at 07:34 pm

Good piece Rob !

Enrico Pietrocola

January 13, 2017 at 06:22 am

Thanks for the article and the referenced books! I'm writing a dissertation after doing an Audio Programming Traineeship, may I link and quote your article? I'll credit you of course (it will be used at Milan's Conservatory as a document for aspiring game audio programmers) Enrico ;)

Robert Bantin

January 13, 2017 at 07:08 am

Cheers Jim. I'm humbled by your admiration. :)

Robert Bantin

January 13, 2017 at 11:59 am

Sure, be my guest!

Edo Aneke

January 14, 2017 at 04:56 am

What a great article. Well done and I hope there are more in the pipeline ...

Monty Mudd

January 23, 2017 at 12:42 pm

Excellent article. The hardest part is convincing people of the importance of audio, because it can be difficult sometimes to put it into concrete terms. Thanks for giving game audio the love it deserves!