Over the past few years, I've been lucky enough to take part in several plays as sound designer and musician. By chance, or perhaps by preference, I often find myself playing the music directly on stage, alone as a one-woman band or with other performers.

Thanks to my training in classical music, I'm well equipped to compose in a linear fashion, as heard in opera or melodrama (for example, Igor Stravinsky's The Soldier's Tale). This method of composition, used for hundreds of years in the Western music tradition, certainly has its benefits. Scenes can be shaped with great precision, and the musical score and the text, whether sung or spoken, can be woven together into marvellous works. We spend time in rehearsal getting everything just right, then get on stage and play the composition to perfection, the same way every night.

This approach works very well in the context of a play that doesn't interact with the audience. In a work that includes improvised moments, on the other hand, a pre-composed soundtrack or one that follows a score is sometimes too rigid. That's where Wwise comes in.

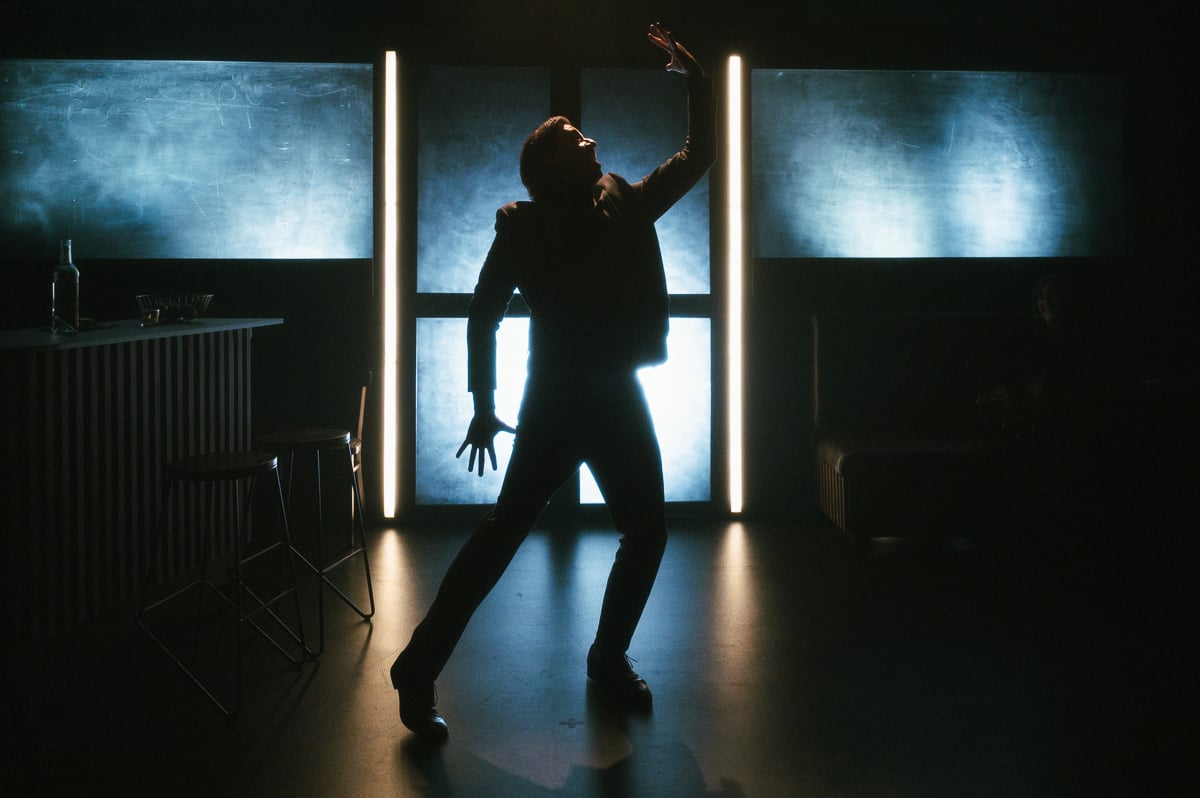

Interpreting music on stage can lead to beautiful theatrical moments (Merci d'être venus, Théâtre Périscope, 2023).

Today I'd like to tell you about my sound design for the play Merci d'être venus ("Thank you for coming") and what led me to choose Wwise as a tool for creating and performing on stage.

Why Use Wwise in Theater?

Merci d'être venus is a play by the company Le Complexe that makes extensive use of the codes of stand-up performance; the main character engages with the crowd several times during the show and can improvise at any given time. When we started designing the sound, we wanted the music to be able to adapt to the improvisational nature of the text and the antics of Gabriel Morin, the play's sole actor. We also knew that I would be accompanying Gabriel on stage, and that I would be performing all the sound cues from my seat within the set.

For me, performing arts such as theater have always had more in common with video games than with films: actors are a living and sometimes unpredictable material, like the player's character in a game - and the music has to react accordingly. Having worked with Wwise in the past, I tried it out in rehearsal and the team loved it.

As well as playing cues the traditional way, Wwise offers all the features we associate with video games. It's a very powerful tool for crafting complex soundscapes thanks to the Actor-Mixer Hierarchy; RTPCs have almost infinite applications (modifying a cue in real time with a low-pass filter, for example), not to mention the interactive music features.

For me, using Wwise in theater allows you to create a lively, unique and complex sound design that adapts organically to the acting of the performers on stage.

Wwise as a Stage Instrument

At the beginning of my adventure, I wondered whether the Soundcaster was going to be reliable enough to trigger cues throughout the show. The article Using Wwise for Theatre: Adaptive soundscape for Theatre Play Le Léthé by Pierre-Marie Blind reassured me and gave me the confidence to go ahead with the idea.

Since I was going to be on stage rather than in the control room, and the director didn't want a computer on stage, I figured I could assign the Soundcaster's Events to keys on a MIDI controller, linked to a computer backstage. I came across a fascinating video by Aaron Brown that convinced me of the infinite possibilities of using MIDI and Wwise.

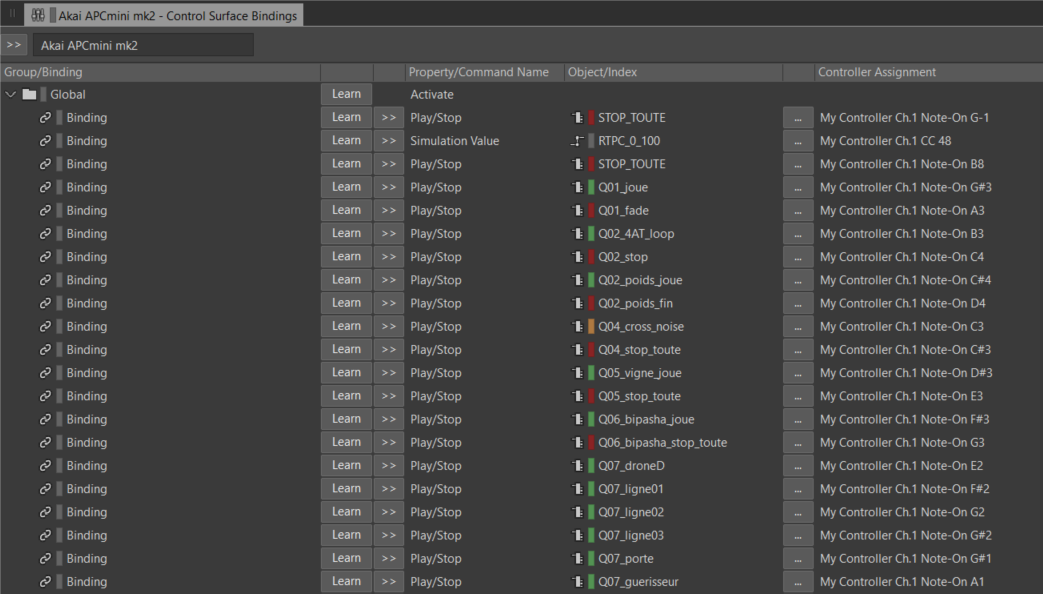

Once the MIDI controller is connected, it's easy to assign Events to specific keys in a Control Surface Session.

Since I wanted to have the entire show's Events on a single page and several knobs to change the value of the RTPCs, I chose the APC Mini mk2 as a controller.

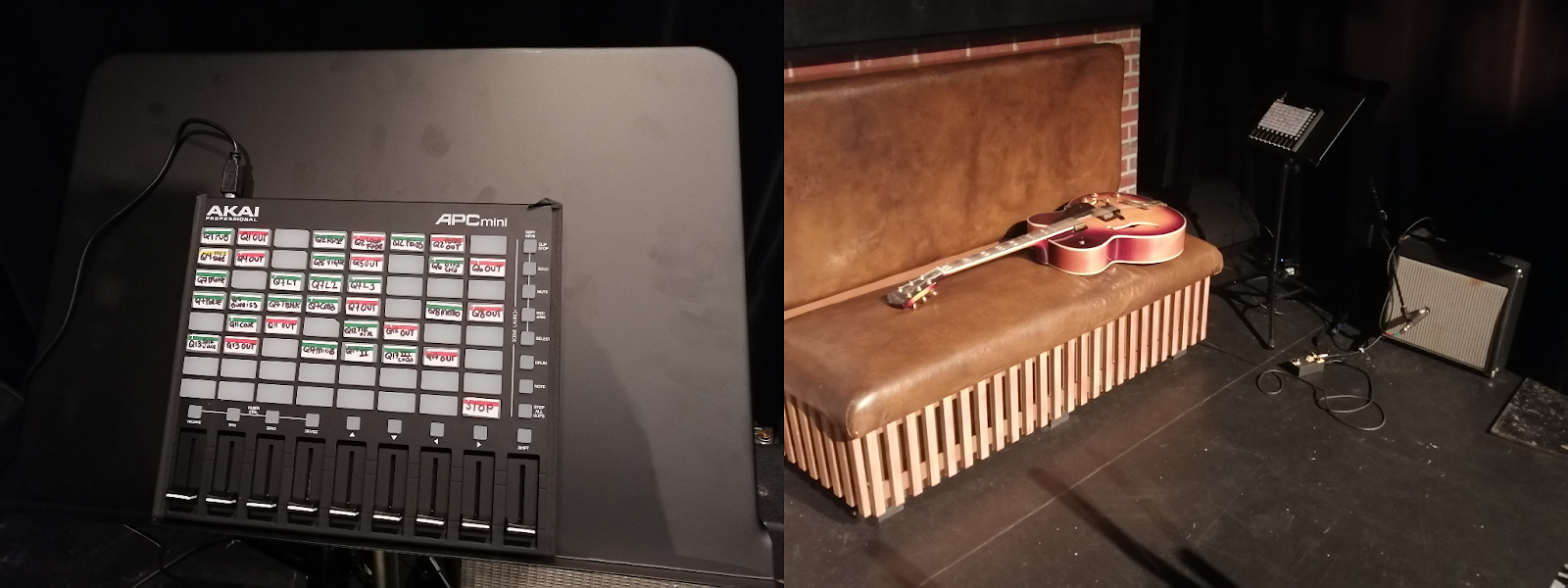

Left: my APC Mini mk2, annotated and ready to play cues. Right: my music corner in the background.
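To make the idea concrete, here is a minimal Python sketch of the mapping logic that a Control Surface Session takes care of for me. It is not how Wwise works internally, and it talks to neither Wwise nor a real controller: the note numbers, the controller number, the Event names, and the RTPC name and range are all invented for illustration. It only shows how a raw MIDI message can be read as either "trigger this Event" or "set this RTPC to this value".

```python
from typing import Optional, Tuple

# Hypothetical pad assignments: MIDI note number -> Event name (all invented).
NOTE_TO_EVENT = {
    0: "Play_Drone",
    1: "Play_Organ_Line_1",
    2: "Play_Organ_Line_2",
}

# Hypothetical continuous-control assignment:
# MIDI controller number -> (RTPC name, minimum value, maximum value).
CC_TO_RTPC = {
    48: ("LowPass_Amount", 0.0, 100.0),
}


def scale_cc(value: int, lo: float, hi: float) -> float:
    """Map a 7-bit MIDI value (0-127) linearly onto the range [lo, hi]."""
    return lo + (hi - lo) * (value / 127.0)


def interpret(message: bytes) -> Optional[Tuple[str, str, float]]:
    """Read a raw 3-byte MIDI message as an ("event" | "rtpc", name, value) action.

    Returns None for anything that is not mapped.
    """
    if len(message) != 3:
        return None
    status, data1, data2 = message
    kind = status & 0xF0  # upper nibble: message type; lower nibble: channel

    # Note On with a non-zero velocity triggers an Event.
    # (A Note On with velocity 0 is conventionally treated as a Note Off.)
    if kind == 0x90 and data2 > 0 and data1 in NOTE_TO_EVENT:
        return ("event", NOTE_TO_EVENT[data1], 1.0)

    # Control Change sets an RTPC, scaled to that parameter's range.
    if kind == 0xB0 and data1 in CC_TO_RTPC:
        name, lo, hi = CC_TO_RTPC[data1]
        return ("rtpc", name, scale_cc(data2, lo, hi))

    return None
```

Calling `interpret(bytes([0x90, 1, 127]))` gives `("event", "Play_Organ_Line_1", 1.0)`, while a Control Change on controller 48 gives an `"rtpc"` action whose value is scaled between 0 and 100.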

Actor-Mixer Hierarchy as a Musical Tool

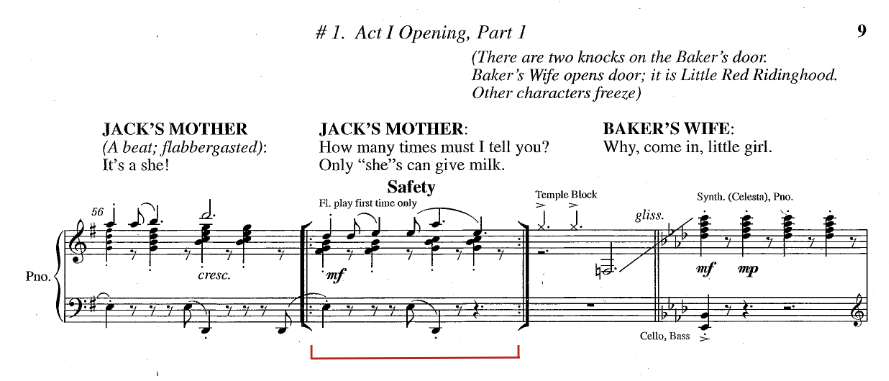

Naturally, composers have found ways to make written music more flexible over the years. In musicals, for example, safety bars (ex. 1) are a way for the orchestra to wait for characters to arrive at the right moment in the text before moving on to the rest of the score.

ex. 1 Into the Woods, Stephen Sondheim (1986)

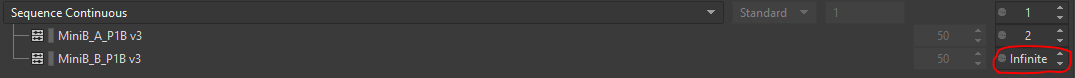

I thought this kind of method would work beautifully in Wwise with Music Switch Containers and the various States that can be created in the Game Syncs. All you need to do is split the music into several segments, assign them to States, and make sure that the last item in each Playlist is looped (ex. 2).

ex. 2

We can then move on to another State and carry on with the rest of the track, after taking great care to program adequate transitions. This is all pretty standard in the video game industry, but being able to apply this method to theater with pre-composed musical segments was new to me - and, let's face it, pretty exciting!
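As a rough illustration of this safety-bar logic, here is a small Python simulation. It is only a sketch: the State names and segment names are invented, and I assume the simplest possible transition rule, where a requested State change takes effect at the end of the current segment (Wwise lets you choose among several transition sync points). Each State has a playlist, the last item repeats until the State changes, and the music then continues with the next playlist.

```python
from typing import Dict, List, Optional

# Hypothetical States and segment names, for illustration only.
PLAYLISTS: Dict[str, List[str]] = {
    "Verse": ["verse_a", "verse_b", "verse_vamp"],
    "Chorus": ["chorus_a", "chorus_vamp"],
}


class VampPlayer:
    """Plays each State's playlist in order and loops its last segment."""

    def __init__(self, playlists: Dict[str, List[str]], initial_state: str) -> None:
        self.playlists = playlists
        self.state = initial_state
        self.pending: Optional[str] = None
        self.index = 0

    def set_state(self, state: str) -> None:
        """Request a State change; it is applied at the next segment boundary."""
        if state not in self.playlists:
            raise KeyError(f"unknown State: {state}")
        self.pending = state

    def next_segment(self) -> str:
        """Return the segment to play next."""
        if self.pending is not None:
            # Segment boundary reached: switch playlists and start from the top.
            self.state = self.pending
            self.pending = None
            self.index = 0
        playlist = self.playlists[self.state]
        segment = playlist[self.index]
        # Advance, but hold on the last item so that it loops (the safety bars).
        self.index = min(self.index + 1, len(playlist) - 1)
        return segment
```

With a player created as `VampPlayer(PLAYLISTS, "Verse")`, repeated calls to `next_segment()` keep returning `"verse_vamp"` once the verse has been played through, until `set_state("Chorus")` is called and the following call returns `"chorus_a"`.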

Merci d'être venus, Théâtre Périscope, 2023.

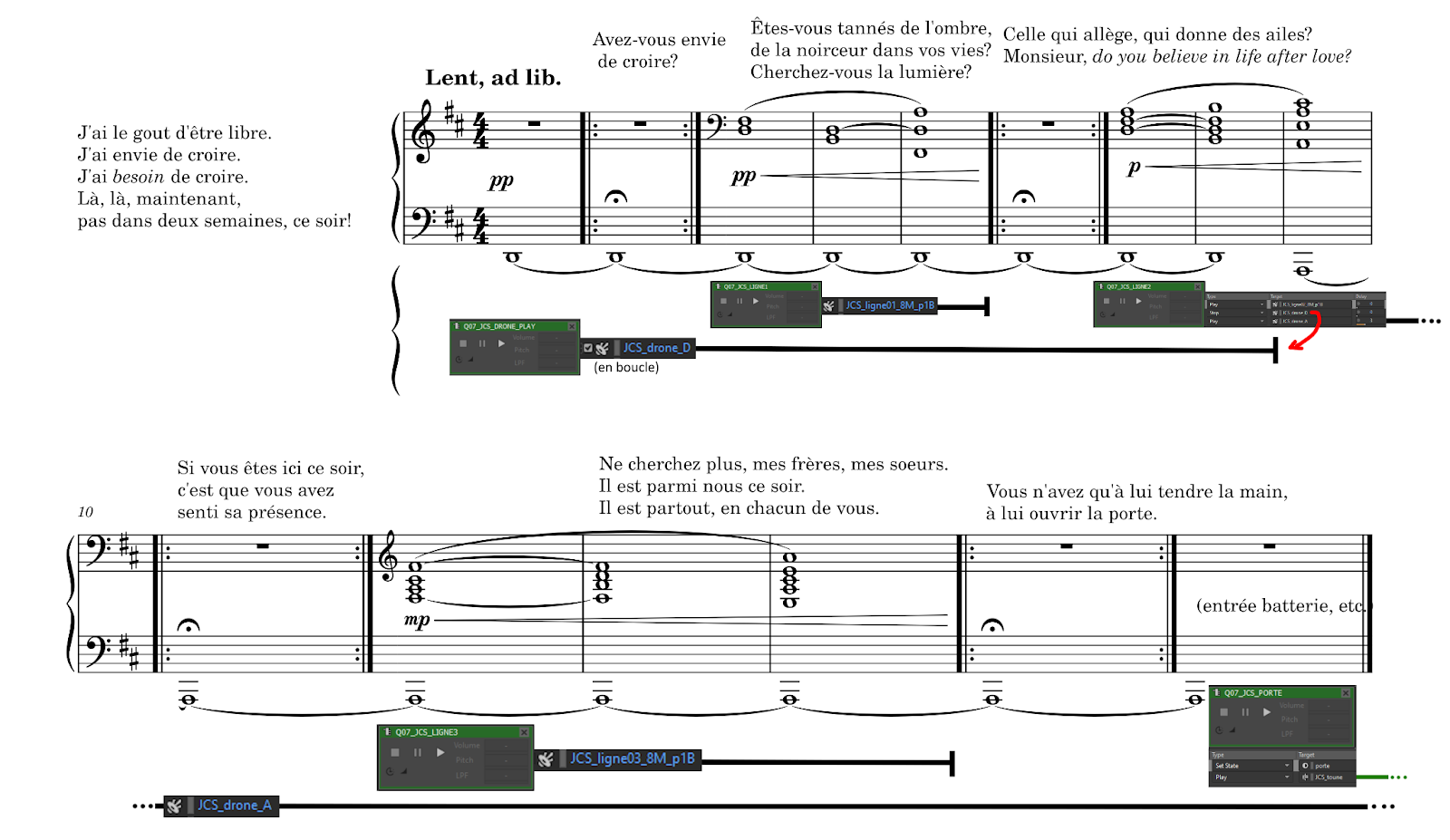

At one point in Merci d'être venus, the actor slowly shifts to a musical section and gets the audience involved. I used the States approach described above, and it worked like a charm. However, at the very beginning of this section, the lyrics are set to music without bar lines or a fixed tempo. I probably could have used the Interactive Music Hierarchy, but it was simpler for me to use the Actor-Mixer Hierarchy and handle the musical elements as sound design elements.

ex. 3 Relation between the score and the Wwise Events in Merci d'être venus.

As you can see above (ex. 3), I've assigned a looping drone to an Event. When the actor gets to a specific moment in the text, I trigger another Event to play the first organ line over the drone. The next Event plays the second organ line, but also changes the drone note from D to A. The third organ line overlays the drone without a hitch. These Events are at my fingertips on my MIDI controller, and I can trigger them whenever I like!
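The sequence above can be sketched in Python as a table of Events, each carrying a short list of actions. This is an illustrative model, not an export of my project: the Event and sound names are invented, and I assume here that the change of drone note is modelled as stopping one loop and starting another, which is only one of several ways to achieve it in Wwise.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical Events: each one is a list of (action, sound) pairs.
EVENTS: Dict[str, List[Tuple[str, str]]] = {
    "Play_Drone": [("play", "drone_D")],
    "Play_Organ_1": [("play", "organ_line_1")],
    # The second organ line also moves the drone from D to A.
    "Play_Organ_2": [
        ("play", "organ_line_2"),
        ("stop", "drone_D"),
        ("play", "drone_A"),
    ],
    "Play_Organ_3": [("play", "organ_line_3")],
}

# Sounds that loop until they are stopped; everything else is a one-shot.
LOOPING_SOUNDS: Set[str] = {"drone_D", "drone_A"}


def post_event(name: str, loops: Set[str]) -> List[str]:
    """Apply an Event's actions.

    Updates `loops` (the set of loops currently sounding) in place and
    returns the list of one-shot sounds started by this Event.
    """
    one_shots: List[str] = []
    for action, sound in EVENTS[name]:
        if action == "play":
            if sound in LOOPING_SOUNDS:
                loops.add(sound)
            else:
                one_shots.append(sound)
        elif action == "stop":
            loops.discard(sound)
    return one_shots
```

Because nothing here depends on a clock, the Events can be posted at any moment and in response to the actor, which is exactly what makes this approach comfortable for music without a fixed tempo.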

Now comes the moment in the play where I need to bring in drums and a tempo, so the next Event starts the Music Switch Container JCS_toune (pardon my vernacular naming) while the drone continues to play underneath. The rest of the musical section is set in the Interactive Music Hierarchy.

I very much appreciate the flexibility that the Actor-Mixer Hierarchy offers for musical cues that don't have a fixed tempo, and I use it a few times in Merci d'être venus.

Interactivity at the Heart of the Theatrical Performance

Because Merci d'être venus is a show that tackles some pretty heavy topics head-on, it strongly relies on the interaction with the audience. Intense moments are interrupted with stand-up-like moments, and when the actor decides to keep going with a joke because the audience reacts well, the music has to follow him too.

I could have simply accompanied the actor on guitar (earlier versions of the show were all acoustic guitar), but for this iteration we wanted to push the design further and have more musical moments, with more instrumentation. Since I was the only musician, Wwise gave me access to more complex instrumental textures without giving up the soundtrack's interactivity. Furthermore, triggering Events from a MIDI controller through Control Surface Sessions made performing the show surprisingly simple.

Wwise is already a powerful tool for video games, and I was pleasantly surprised by its capability in a theatrical context. I recommend it to any designer who wants to build an interactive design, whether from the control room or directly on stage.

Merci d'être venus will be presented across Québec in 2024 and 2025.
