When was the last time you truly noticed the music playing in a game during an epic battle? Game scores just don't command the same sort of attention as, say, a memorable film score from the likes of John Williams. Where Williams expertly accentuates a well-placed haymaker to the jaw of a fascist soldier, a game score typically ducks into the background, and the music is deemphasized. This is in large part because a film composer watches a near-final cut of the film to match music to action, whereas a game has to contend with unpredictable player agency. A player can choose to do none of the things the music would otherwise be trying to highlight. As game developers, how do we create scores for unpredictable player actions while also reaching the level of immersion cinema has enjoyed for over a century?

To get to a place where game scores match film scores, we have to understand the two methods of matching game music to action: reactive and proactive audio. Most game developers are familiar with the reactive form of game audio. This method uses Triggers, Switches, States, Events, and RTPCs to feed game state updates to the audio engine, which then adjusts the sound mix. It is put to great effect in almost all games.

Such approaches have enabled games like Resident Evil to ratchet up the intensity of the hallway music when a specific puzzle key is found. The developers of Killer Instinct on the Xbox One turned the combo system into a clever music sequencing system that weaves a character's leitmotif into the BGM when an Ultra Combo is properly executed. Nintendo has used dynamic MIDI compositions to create music that ebbs and flows with location or major character events. Super Mario Kart uses area triggers to shift the music's instrumentation based on where the player is in the level. Middle-earth: Shadow of War features a system that plays musical stingers in response to very specific actions, giving it more of a custom-scored feel. All of this has been made possible by the advanced systems that audio engines like Wwise offer.
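As a rough illustration of the reactive direction, the sketch below models a game pushing a single RTPC-like "threat" value into a toy mixer that maps it onto music-layer gains. `ToyMusicMixer`, the layer names, and the curve breakpoints are hypothetical stand-ins, not the Wwise API; in Wwise this mapping would be authored as an RTPC curve rather than written in game code. The point is the direction of travel: the game talks, the audio engine listens.

```python
from bisect import bisect_right


class ToyMusicMixer:
    """Hypothetical stand-in for an audio engine's RTPC handling (not the Wwise API)."""

    def __init__(self, curves):
        # curves: layer name -> sorted list of (parameter value, gain in dB) breakpoints
        self.curves = curves
        self.gains_db = {layer: pts[0][1] for layer, pts in curves.items()}

    @staticmethod
    def _interpolate(points, x):
        # Linear interpolation between breakpoints, clamped at both ends of the curve.
        xs = [p[0] for p in points]
        if x <= xs[0]:
            return points[0][1]
        if x >= xs[-1]:
            return points[-1][1]
        i = bisect_right(xs, x)
        (x0, y0), (x1, y1) = points[i - 1], points[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    def set_rtpc(self, value):
        # Reactive flow is one-way: game -> audio. Nothing is reported back to the game.
        for layer, points in self.curves.items():
            self.gains_db[layer] = self._interpolate(points, value)


mixer = ToyMusicMixer({
    "ambient_pads": [(0, 0.0), (100, -12.0)],
    "battle_drums": [(0, -96.0), (50, -6.0), (100, 0.0)],
})
mixer.set_rtpc(75)  # the game reports that the threat level rose to 75
print(mixer.gains_db)  # {'ambient_pads': -9.0, 'battle_drums': -3.0}
```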

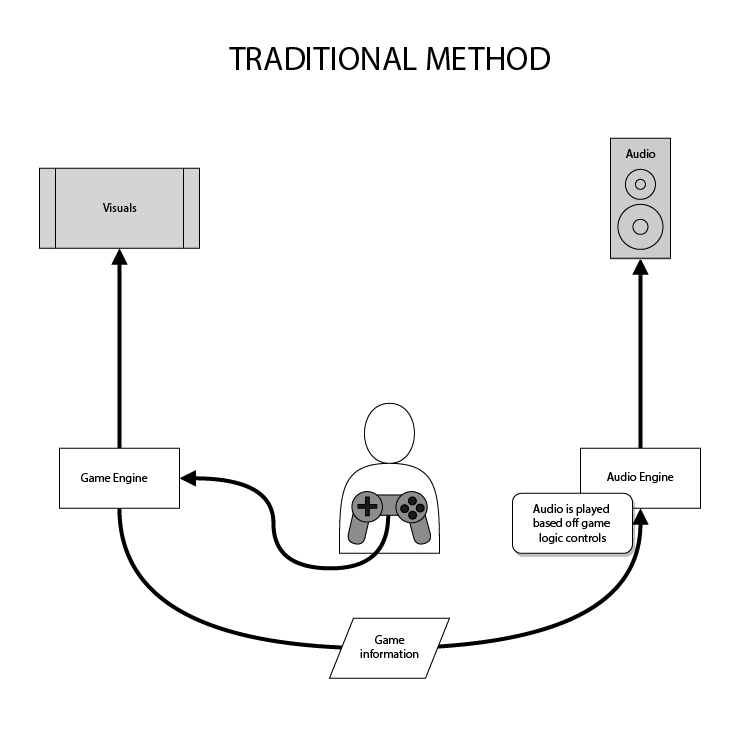

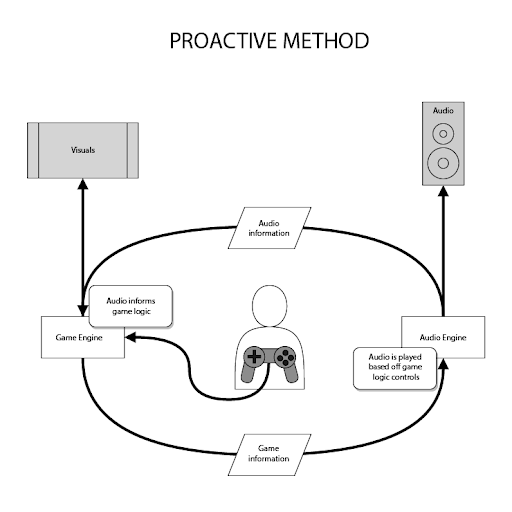

These methods have proven able to create impactful game moments, but how do we bring our audio interactions to that cinematic level, where the game music adaptively highlights player actions? Traditional interactive game audio is reactive: communication starts in the game engine and ends in the audio engine. The answer is to flip that approach on its head: instead of driving music from game action, drive game action from the music. That's proactive audio design.

Reactive vs. Proactive Game Engines

A proactive audio system, then, is one where the audio engine also sends information back to the game. Wwise supports proactive audio design through Marker, MIDI, and Music callbacks. With Marker callbacks, a cue can be embedded in the audio so that Wwise sends a notification back to the game when playback reaches it.
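The sketch below imitates the shape of that interaction in plain Python: the game registers a callback, and a toy playback clock fires it whenever playback crosses a cue embedded in the music. `ToyMusicTrack`, its cue list, and the callback signature are hypothetical. The real Wwise SDK exposes this through callback flags (such as `AK_Marker`) and a C++ callback function passed when posting an event, which this sketch does not attempt to reproduce.

```python
class ToyMusicTrack:
    """Hypothetical playback model: cues are (time_ms, label) pairs embedded in the music."""

    def __init__(self, cues):
        self.cues = sorted(cues)
        self.position_ms = 0
        self.callbacks = []

    def register_callback(self, fn):
        # The game subscribes; the audio side decides when to call back.
        self.callbacks.append(fn)

    def advance(self, dt_ms):
        # Move the playback head and notify listeners of every cue crossed this tick.
        start, end = self.position_ms, self.position_ms + dt_ms
        for time_ms, label in self.cues:
            if start < time_ms <= end:
                for fn in self.callbacks:
                    fn(time_ms, label)
        self.position_ms = end


received = []
track = ToyMusicTrack([(500, "verse_start"), (2000, "chorus_hit")])
track.register_callback(lambda t, label: received.append((t, label)))
for _ in range(25):  # simulate 25 frames of exactly 100 ms each
    track.advance(100)
print(received)  # [(500, 'verse_start'), (2000, 'chorus_hit')]
```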

Wwise’s system creates a great foundation for proactive audio design, but what if we were to turn that approach up to 11? What if we didn't just say “here is a point of interest in the music” but pushed it to the level of “here is an upcoming beat, with this much intensity, this many milliseconds in the future”? A game system armed with this information can make important yet simple logic decisions: a brawling system could delay a haymaker punch animation by 40ms so that it lands exactly on the beat, gaining a perceptual boost from synchronizing with the inherent musical impact. The game could simultaneously queue a sound effect or musical flourish to land on that future sync point, and use a standard reactive audio trigger to tell the audio system to layer in more intense music. That new music can then send new signals back to the game. This loop can be built up and made as complex as the designer wants. The result is a system that lets the music be composed more traditionally and a game that can adjust to work with the audio (and vice versa): a far more intelligent interactive audio architecture, in which the game systems and the audio engine coordinate with each other. What's more, this can be achieved with relatively minimal game logic overhead, and it can complement existing audio systems like Wwise.
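To make that richer signal concrete, here is a minimal sketch: a list of forecast beats, each with a time offset and an intensity, plus a helper that picks the first strong-enough beat a punch impact can be pushed onto. The `BeatForecast` type, the 0.0 to 1.0 intensity scale, and the thresholds are assumptions for illustration, not part of Wwise or any particular middleware.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BeatForecast:
    offset_ms: float  # how far in the future the beat lands
    intensity: float  # hypothetical 0.0-1.0 musical weight of the beat


def pick_sync_beat(forecasts, impact_ms, max_delay_ms, min_intensity) -> Optional[BeatForecast]:
    """Return the earliest beat the impact can be delayed onto, or None.

    Beats before the natural impact time are skipped (we only delay, never rush),
    and beats needing more than max_delay_ms of delay are rejected so that
    controls stay responsive.
    """
    for beat in sorted(forecasts, key=lambda b: b.offset_ms):
        delay = beat.offset_ms - impact_ms
        if 0 <= delay <= max_delay_ms and beat.intensity >= min_intensity:
            return beat
    return None


upcoming = [BeatForecast(120, 0.3), BeatForecast(340, 0.9), BeatForecast(560, 0.5)]
beat = pick_sync_beat(upcoming, impact_ms=300, max_delay_ms=60, min_intensity=0.7)
if beat is not None:
    delay_ms = beat.offset_ms - 300
    print(f"delay punch by {delay_ms} ms to land on a beat of intensity {beat.intensity}")
```

With these numbers the helper chooses the beat 340ms out, reproducing the 40ms delay from the paragraph above; if no beat qualifies, the game simply plays the punch immediately.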

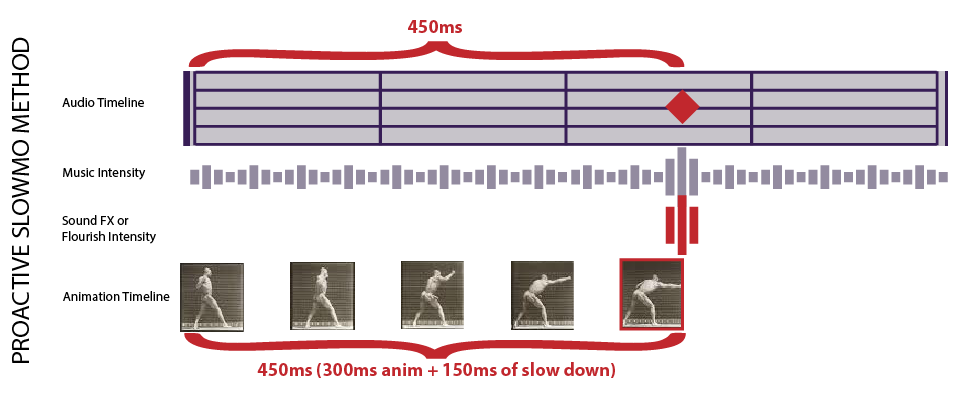

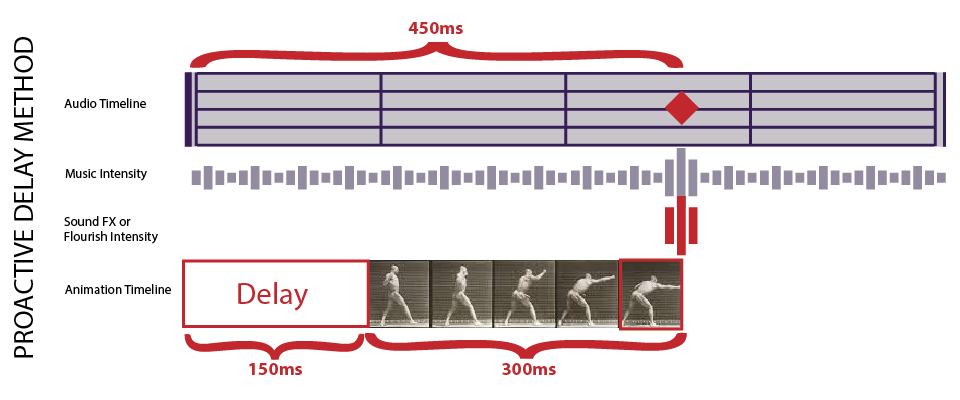

How about an example? Let’s look a bit deeper at how proactive audio might cinematically enhance a standard brawling system for just about any action game. A cinematic brawling system is one where the background music ebbs and flows with the balance of power between hero and enemies, and where swells of music coincide with powerful hits and devastating blows. The music reinforces the actions of the player while simultaneously providing the type of situational feedback that we've come to expect from game audio. Let's look at what it takes to achieve this effect. In the diagrams that follow, our goal is to align the impact of the punch animation with a high point of music intensity.


With the standard reactive audio approach, the game's animation system has no knowledge of what's happening in the audio, so the animation simply plays immediately. As a result, the music, the sound effects or musical flourishes, and the animation are not synchronized.

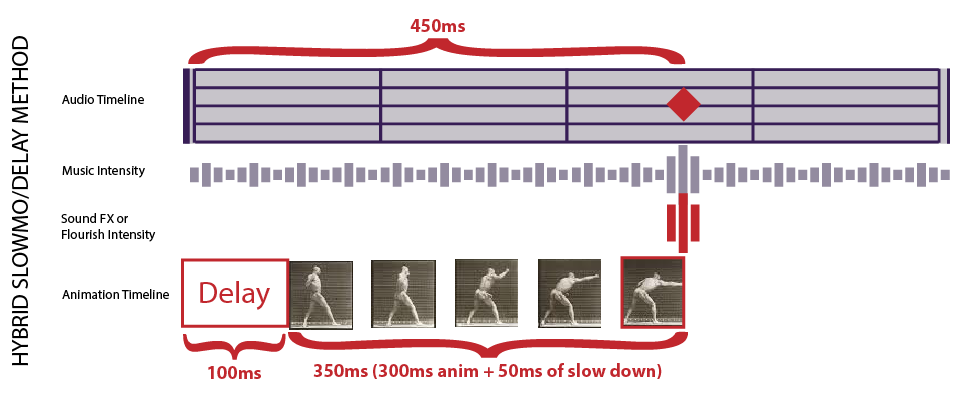

With the detailed timing information provided by a proactive audio system, the animation system can slow down an animation (or the entire simulation), along with its accompanying sound effect, so that a special moment aligns with a powerful moment in the music. In this case, an animation whose punch impact occurs 300ms in is "stretched" by 150ms to match a musical impact point 450ms away.
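The arithmetic behind that stretch is a single ratio. This sketch assumes the animation system accepts a uniform playback-rate multiplier (a hypothetical interface; engines differ in how they expose this) and that the accompanying sound effect is retimed by the same factor.

```python
def stretch_to_beat(impact_ms: float, beat_ms: float) -> dict:
    """Compute a uniform slowdown so an animation's impact frame lands on a beat.

    impact_ms: when the impact occurs at normal (1.0x) playback speed.
    beat_ms:   when the musical impact point occurs, measured from now.
    """
    if impact_ms <= 0 or beat_ms < impact_ms:
        raise ValueError("this sketch only slows animations down, never speeds them up")
    stretch_factor = beat_ms / impact_ms  # how much longer the lead-up to the impact becomes
    playback_rate = impact_ms / beat_ms   # multiplier handed to the (hypothetical) animation system
    return {
        "added_ms": beat_ms - impact_ms,
        "stretch_factor": stretch_factor,
        "playback_rate": playback_rate,
    }


plan = stretch_to_beat(impact_ms=300, beat_ms=450)
print(plan)  # {'added_ms': 150, 'stretch_factor': 1.5, 'playback_rate': 0.6666666666666666}
```

A 1.5x stretch (playing at two-thirds speed) is fairly aggressive; in practice a game would likely cap it, which is where delaying the start comes in.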

Alternatively, the animation system can delay the start of an animation such that the animation will reach its "crescendo" at the same time as the music.
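Delaying the start is even simpler: the wait is the gap between the musical impact point and the animation's own impact offset. The sketch uses the same 300ms and 450ms figures as above; the function name and the choice to return `None` when the beat arrives too early are assumptions for illustration.

```python
from typing import Optional


def start_delay_for_beat(impact_ms: float, beat_ms: float) -> Optional[float]:
    """How long to wait before starting the animation so its impact lands on the beat.

    Returns None if the beat arrives before the impact could (the animation
    cannot be started in the past); the game should then pick a later beat
    or fall back to playing immediately.
    """
    delay = beat_ms - impact_ms
    return delay if delay >= 0 else None


print(start_delay_for_beat(impact_ms=300, beat_ms=450))  # 150
print(start_delay_for_beat(impact_ms=300, beat_ms=250))  # None
```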

These solutions can be combined depending on the needs of the game to provide a flexible, responsive experience for the player.
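One way to combine them, sketched below under assumed limits, is to stretch the animation up to a cap that still looks natural and cover any remaining gap with a start delay. The 1.25x stretch cap and the 100ms delay cap are hypothetical tuning values, not recommendations from any engine or middleware.

```python
from typing import Optional, Tuple

MAX_STRETCH = 1.25    # hypothetical: beyond this the slowdown starts to look unnatural
MAX_DELAY_MS = 100.0  # hypothetical: beyond this the input starts to feel laggy


def plan_sync(impact_ms: float, beat_ms: float) -> Optional[Tuple[float, float]]:
    """Split the gap between impact and beat into (start_delay_ms, stretch_factor).

    Stretching is preferred because the animation still starts on the input frame;
    whatever the stretch cap cannot absorb becomes a start delay. Returns None when
    the beat is unreachable within both caps, so the caller should play unsynced.
    """
    if impact_ms <= 0 or beat_ms < impact_ms:
        return None
    # Latest impact time reachable by stretching alone, capped at the beat itself.
    stretched_impact = min(beat_ms, impact_ms * MAX_STRETCH)
    delay = beat_ms - stretched_impact
    if delay > MAX_DELAY_MS:
        return None
    return delay, stretched_impact / impact_ms


print(plan_sync(impact_ms=300, beat_ms=450))  # (75.0, 1.25)
print(plan_sync(impact_ms=300, beat_ms=340))  # stretch alone covers the gap, so the delay is 0
```

For the 450ms beat, stretching to the 1.25x cap moves the impact to 375ms, and the remaining 75ms becomes a start delay.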

The result, with no adverse effect on the player, is a system that naturally aligns action with music and determines ahead of time when to add a musical stinger that accentuates the player's actions.
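Because the sync point is known in advance, a stinger can be started early enough that its accent, rather than its first sample, lands on the beat. In this sketch each stinger is assumed to carry a known pre-roll (the time from its start to its accent). The `Stinger` type and the "longest pre-roll that still fits" rule are hypothetical choices, not how Wwise schedules its own interactive-music stingers.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Stinger:
    name: str
    pre_roll_ms: float  # time from the stinger's first sample to its accent


def schedule_stinger(stingers: List[Stinger], sync_ms: float) -> Optional[Tuple[Stinger, float]]:
    """Pick a stinger whose accent can still land exactly on sync_ms.

    Prefers the longest pre-roll that still fits (a bigger build-up) and
    returns (stinger, start_ms), where start_ms is measured from now.
    """
    fitting = [s for s in stingers if s.pre_roll_ms <= sync_ms]
    if not fitting:
        return None
    best = max(fitting, key=lambda s: s.pre_roll_ms)
    return best, sync_ms - best.pre_roll_ms


library = [Stinger("brass_hit", 20), Stinger("timpani_roll", 400), Stinger("string_swell", 900)]
choice = schedule_stinger(library, sync_ms=450)
if choice is not None:
    stinger, start_ms = choice
    print(f"start {stinger.name} in {start_ms} ms")  # start timpani_roll in 50 ms
```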

But why would you go through the trouble of flipping audio design on its head? Proactive audio enables game systems to coordinate with the traditional [reactive] audio system. Game audio doesn’t have to be relegated to the background when you can match unpredictable player behavior to the soundtrack. You can go as far as creating a dynamic music sequencer that is driven by, and synchronized with, player action. This new approach allows game developers to close the gap between game soundtracks and the kind of cinematic scoring you hear in huge blockbuster movies. There is no longer any reason games can't reach the same level of quality.

Music is hugely important to the game experience and to the industry's ability to tell compelling stories. It’s easy to let music slip down the priority list while rendering techniques are pushed to sell ever more realistic visuals. As we hit the limits of huge graphical leaps, however, deeper integration of music with other systems will become ever more important. Proactive audio systems, paired with an excellent audio engine like Wwise and clever game design, can get us there today: dynamic musical scoring can be a reality.

Happy designing!
