We’re excited to announce that Wwise 2017.2 is now live!

Below is a short list of what’s new in Wwise 2017.2.

Workflow and Feature Improvements

Improvements with States

Most RTPC properties now available to States

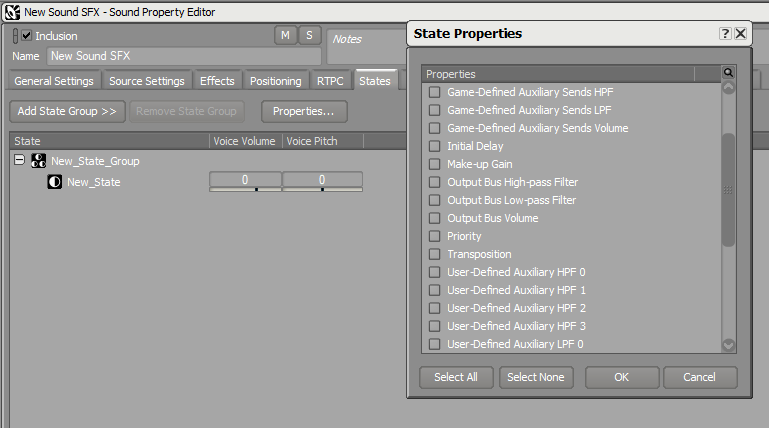

All audio properties that support multiple RTPC curves, and most properties of the Wwise plug-ins, can now be controlled by States. To add new State properties to an audio object or plug-in, open the State Properties view and select the properties you need (see the image above). This selection is made per object or plug-in, so you don’t waste space on every property your project could use.

A new State icon has been added next to the link/unlink and RTPC icons to help you identify which properties can be modified by States.

Mixing Desk Workflow Enhancements

It’s now possible to quickly change which State Group the Mixing Desk listens to for State changes, so that faders on motorized controllers move when States change. State Groups (and other main categories, such as Monitoring, Positioning, and Effects) can also be expanded and collapsed, which is convenient when Mixing Desk sessions contain multiple State Groups.

Wwise Spatial Audio Improvements

Spatial Audio: Sound Propagation, Rooms, and Portals

Development in Spatial Audio continued during 2017.2 with a focus on expanding the existing features by enhancing usability, runtime efficiency, and flexibility. Here’s an overview of the main elements:

Sound Propagation

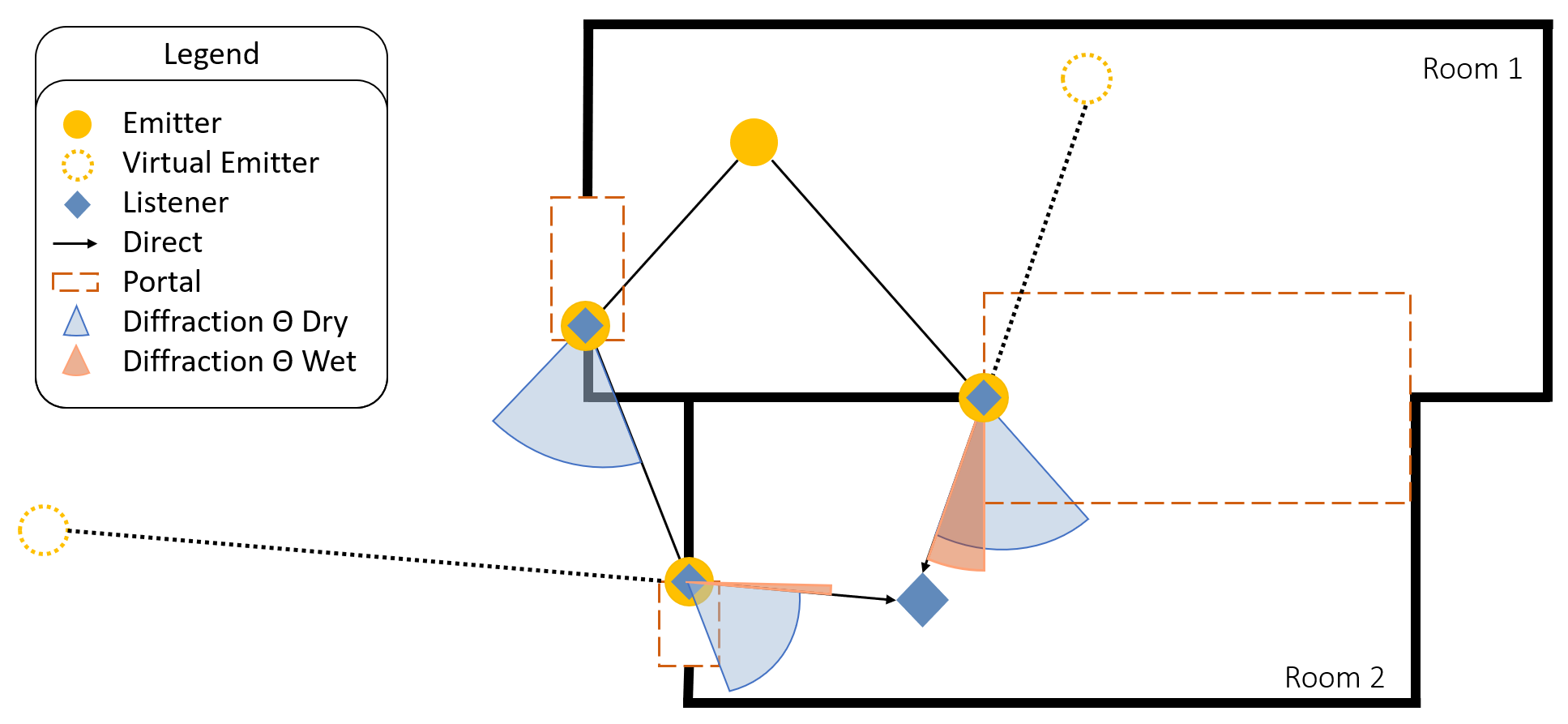

- The path of sound from an emitter to reach a listener can now traverse one or multiple Portals.

- Sounds are given a virtual position so that they appear to come from the Portal closest to the listener.
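As a rough illustration of the virtual-position rule, the sketch below picks, among the Portals that a propagation path traverses, the one nearest the listener. It is a simplified model, not the Wwise implementation: positions are plain (x, y, z) tuples, "closest" is approximated by straight-line distance, and the function name `virtual_position` is hypothetical.

```python
import math


def virtual_position(listener, portals_on_path):
    """Hypothetical model: return the Portal position closest to the listener.

    `listener` and each Portal are (x, y, z) tuples; the path must traverse
    at least one Portal for a virtual position to exist.
    """
    if not portals_on_path:
        raise ValueError("the path must traverse at least one Portal")
    # Straight-line distance stands in for "closest" in this sketch.
    return min(portals_on_path, key=lambda portal: math.dist(portal, listener))


# A path through two Portals: the sound is heard from the one at x = 4.
print(virtual_position((0.0, 0.0, 0.0), [(10.0, 0.0, 0.0), (4.0, 0.0, 0.0)]))  # (4.0, 0.0, 0.0)
```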

Smooth Transitions Between Rooms

- Portals are now volumes rather than point sources, which allows smoother and more accurate panning and spread when passing through them.

- Room reverbs now smoothly and automatically crossfade over distance.

- Spatialized Portal Game Objects can also send to the listener’s Room.

Diffraction Modeling

- Diffraction is represented as an angle ranging from 0° (no diffraction) to 180°. It can be driven using obstruction and/or the new Diffraction built-in parameter.

- The emitter’s dry path(s) and a Room’s wet path use different angles. The dry diffraction angle is the deviation from the straight-line path, and the wet diffraction angle is the angle from the normal of the Portal.
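The two angle definitions above can be written out with basic vector math. The sketch below is a hedged illustration under simple assumptions (a single Portal treated as a point on the path, positions and the Portal normal given as (x, y, z) tuples); the helper names are hypothetical and this is not the Spatial Audio implementation.

```python
import math


def _sub(a, b):
    """Component-wise a - b for 3D tuples."""
    return tuple(x - y for x, y in zip(a, b))


def _angle_deg(u, v):
    """Angle between two 3D vectors in degrees, in [0, 180]."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to guard against floating-point drift just outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def dry_diffraction_angle(emitter, portal, listener):
    """Deviation of the emitter -> Portal -> listener path from a straight line."""
    return _angle_deg(_sub(portal, emitter), _sub(listener, portal))


def wet_diffraction_angle(portal, portal_normal, listener):
    """Angle between the Portal normal and the Portal -> listener direction."""
    return _angle_deg(portal_normal, _sub(listener, portal))


origin = (0.0, 0.0, 0.0)
print(round(dry_diffraction_angle((-1.0, 0.0, 0.0), origin, (1.0, 0.0, 0.0)), 1))  # 0.0
print(round(dry_diffraction_angle((-1.0, 0.0, 0.0), origin, (0.0, 1.0, 0.0)), 1))  # 90.0
print(round(wet_diffraction_angle(origin, (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)), 1))   # 45.0
```

A straight-through path yields 0°, and the angle grows as the listener moves around the edge of the Portal, matching the 0° to 180° range described above.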

Basic Transmission Modeling

- When no path through Portals connects the emitter to the listener, sound travels through the walls instead (the ‘sound transmission’ path).

- Rooms are tagged with ‘wall occlusion’ values, which are used to set the Wwise occlusion value on the emitter.
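A minimal sketch of the transmission rule above, assuming occlusion values between 0.0 and 1.0 and assuming that, when the emitter and listener are in different Rooms, the larger of the two Rooms’ wall occlusion values is used. That combination rule and the function name are illustrative assumptions, not documented Wwise behavior.

```python
def transmission_occlusion(emitter_room, listener_room, wall_occlusion, portal_path_found):
    """Hypothetical model of the occlusion value set on an emitter.

    `wall_occlusion` maps a Room name to its 'wall occlusion' tag
    (0.0 to 1.0 in this sketch).
    """
    if emitter_room == listener_room or portal_path_found:
        # No walls in between, or the sound reaches the listener through Portals.
        return 0.0
    # Sound transmission through the walls. ASSUMPTION: the larger of the two
    # Rooms' wall occlusion values wins.
    return max(wall_occlusion[emitter_room], wall_occlusion[listener_room])


rooms = {"hall": 0.4, "cellar": 0.9}
print(transmission_occlusion("hall", "cellar", rooms, portal_path_found=False))  # 0.9
```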

Portal Obstruction

- The Spatial Audio API provides a means to set game-driven obstruction values on the Portal objects.

Profiler Improvements

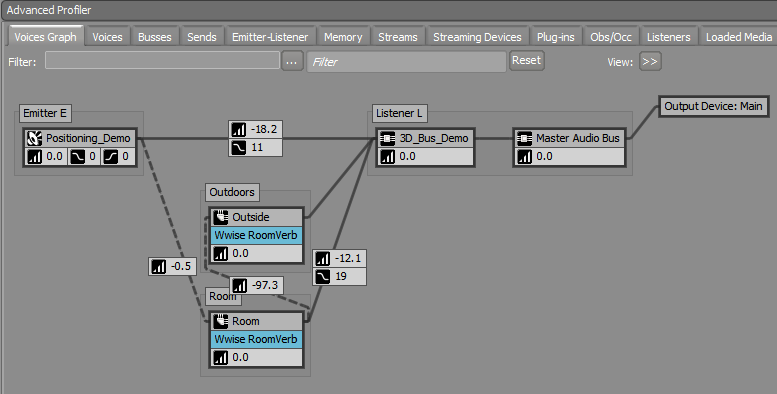

- LPF and HPF values now complement the volume values in the Voices Graph tab of the Advanced Profiler view.

Efficient Game Object Usage

- When the listener is outside a Room, multiple positions are used at the Room’s Portals. Because only one game object is now instantiated per Room, this is a significant performance improvement over 2017.1.

- When the listener is inside a Room, a single position (without orientation) that follows the listener is used.
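The two cases above can be modeled as a small function returning the positions assigned to a Room’s single game object. This is a hypothetical sketch of the idea only; the function name and the tuple-based positions are illustrative, and orientation is ignored as the text describes.

```python
def room_emitter_positions(listener_in_room, listener_pos, portal_positions):
    """Hypothetical model of where a Room's single game object is positioned.

    Inside the Room: one position that follows the listener (no orientation).
    Outside the Room: one position per Portal, all on the same game object.
    """
    if listener_in_room:
        return [listener_pos]
    return list(portal_positions)


portals = [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
print(room_emitter_positions(False, (2.0, 3.0, 0.0), portals))  # [(0.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
print(room_emitter_positions(True, (2.0, 3.0, 0.0), portals))   # [(2.0, 3.0, 0.0)]
```

In both cases the result describes one game object, which is where the saving over one game object per Portal comes from.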

Integrations in UE4 and Unity, as well as an SDK example exposed in the Integration Demo, are provided.

Filters in (and on) Busses

Built-in Low-pass and High-pass filters have been reworked in the sound engine's audio graph to better model filter values coming from different features such as Wwise user parameters, Attenuation, Occlusion and Obstruction. Previously, Wwise had a single filter on the voice output, and competing parameter values for this filter would have to be logically combined into a single value; only the minimum value was used. Wwise 2017.2 features individual filters on each unique output, including outputs from busses, so that values pertaining to different rays or output busses no longer have to be combined.

An example of how this is useful can be seen when using multiple listener scenarios. A game object that has multiple listeners will have different Attenuation curve evaluations for each listener, since they may be at different distances. The curve defines a Low-pass filter value; however, in previous versions of Wwise, only one Low-pass filter value could be used per voice. Now, upon mixing the single voice into each listener's output bus instance, the correct filter value (as determined by the curve evaluation) will be applied for each output bus.
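To make the multiple-listener example concrete, the sketch below evaluates a hypothetical linear Attenuation curve (LPF rising from 0 to 50 at 100 units; both numbers are invented for illustration) once per listener. The pre-2017.2 model collapses those values into a single voice filter using the minimum, as described above; the 2017.2 model keeps one value per output bus. Function names are hypothetical.

```python
def attenuation_lpf(distance, max_distance=100.0, max_lpf=50.0):
    """Hypothetical linear Attenuation curve: LPF grows with distance, then clamps."""
    t = min(max(distance / max_distance, 0.0), 1.0)
    return t * max_lpf


def lpf_per_output_bus(listener_distances):
    """2017.2 model: each listener's output bus gets its own curve evaluation."""
    return {name: attenuation_lpf(d) for name, d in listener_distances.items()}


def lpf_single_voice_filter(listener_distances):
    """Pre-2017.2 model: one filter on the voice; competing values collapse to the minimum."""
    return min(lpf_per_output_bus(listener_distances).values())


distances = {"player_1": 20.0, "player_2": 80.0}
print(lpf_per_output_bus(distances))       # each bus filtered according to its own distance
print(lpf_single_voice_filter(distances))  # both buses would share this one value
```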

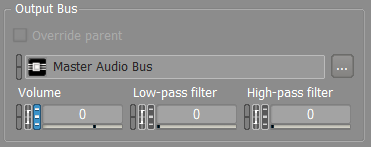

Busses have three new controls: Output Bus Volume, Output Bus LPF, and Output Bus HPF, allowing one to filter the output of mix busses. Also, low-pass and high-pass filtering of user-defined sends is now possible via RTPC.

New Built-In Parameters: Listener Cone & Diffraction

- Listener Cone represents the angle between the listener’s front vector (gaze) and the direction to the emitter. Mapped through an RTPC, it can be used, for example, to reduce focus on emitters outside the listener’s front cone or to simulate microphone polar patterns.

- Diffraction angle between emitter and listener operates in tandem with Portals. The diffraction built-in parameter ranges from 0° (no diffraction) to 180° and can be used to, for example, shape different Attenuation curves for Rooms' dry and wet paths.
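Both built-in parameters are angles that an RTPC curve maps to a property. The sketch below computes a listener cone angle from positions and evaluates it through a piecewise-linear curve; the same curve helper could take a diffraction angle instead. The curve points (full volume within 30°, −6 dB at 90°, −12 dB behind) and all function names are hypothetical, chosen only to illustrate the mapping.

```python
import math
from bisect import bisect_right


def listener_cone_angle(listener_pos, listener_front, emitter_pos):
    """Angle in degrees between the listener's front vector and the direction to the emitter."""
    to_emitter = tuple(e - l for e, l in zip(emitter_pos, listener_pos))
    dot = sum(f * d for f, d in zip(listener_front, to_emitter))
    norm = math.hypot(*listener_front) * math.hypot(*to_emitter)
    if norm == 0.0:
        return 0.0  # emitter exactly at the listener: treat it as straight ahead
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def eval_rtpc_curve(points, x):
    """Piecewise-linear curve through (x, y) points sorted by x, clamped at both ends."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Hypothetical curve: angle in degrees -> volume offset in dB.
CONE_VOLUME_DB = [(0.0, 0.0), (30.0, 0.0), (90.0, -6.0), (180.0, -12.0)]

angle = listener_cone_angle((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 3.0, 0.0))
print(round(angle, 1), round(eval_rtpc_curve(CONE_VOLUME_DB, angle), 1))  # 90.0 -6.0
```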

Ambisonics IR Now Packaged with Wwise Convolution Reverb

All ShareSets and stereo impulse responses included with the original Wwise Convolution Reverb now come with ambisonics equivalents, packaged with the plug-in. Projects that have already licensed the Convolution Reverb can access the ambisonics impulse responses from the "Import Factory Assets..." menu in Wwise (you may first need to download them from the Wwise Launcher).

Audio Output Management and Motion Refactor

Audio output management and motion have been refactored to offer greater flexibility, and they lay the foundation for future improvements to output management and support for haptic devices.

Audio Output Management

- The management of audio output is now mostly done in Wwise Authoring by assigning Audio Device ShareSets to master busses.

- It is now possible to create any number of master busses in the Master-Mixer Hierarchy and assign specific audio devices to them.

- Independent audio device ShareSets can be created to route specific audio content, like voice chat or user music, to specific audio devices such as game controllers or alternative physical outputs.

- On master busses, different output devices can be assigned for authoring and runtime, which, among other things, greatly simplifies auditioning during the development of complex sound installations.

Wwise Motion Refactor

The motion system used by Wwise Motion to support rumble on game controllers has been refactored. Instead of using a specific code path, it now uses the same feature set and API as the audio. This simplified model allows support for third-party haptic plug-ins for devices such as VR kits or mobile platforms.

Wwise Authoring API Improvements

Wwise Authoring API New Features

A number of features requested by early adopters of WAAPI have been added to 2017.2. Here are a few examples:

- To ease transfer across computers, it’s now possible to import audio files from base64-encoded data without first writing them to disk.

- Switch Container associations with audio objects can now be gathered or edited from WAAPI.

- WAAPI can be queried to list all available functions. For each function, its JSON schema is returned.

- WAAPI can bring Wwise to the foreground and expose its Process ID.

- The Wwise search can be used from the Command Line Interface.

- Applications using WAAPI can subscribe to transport activity notifications.
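The sketch below only illustrates the idea behind base64 import: audio bytes already in memory are encoded as text that can travel inside a JSON message, so nothing has to be written to disk on the sending machine. The message keys (`object_path`, `audio_base64`) are placeholders invented for illustration, not WAAPI’s documented argument names; consult the WAAPI reference for the actual import function and its schema.

```python
import base64
import json


def encode_audio(audio_bytes):
    """Encode in-memory audio bytes as ASCII base64 text."""
    return base64.b64encode(audio_bytes).decode("ascii")


def build_import_message(object_path, audio_bytes):
    """Build a JSON message carrying the audio inline.

    HYPOTHETICAL payload: the keys below are placeholders for illustration
    and are not WAAPI's documented argument names.
    """
    return json.dumps({"object_path": object_path, "audio_base64": encode_audio(audio_bytes)})


# Bytes that might come from a network socket or an in-memory render.
audio = b"RIFF\x24\x00\x00\x00WAVE"
message = build_import_message("MySound", audio)
# The receiving side can recover the exact bytes without any intermediate file.
assert base64.b64decode(json.loads(message)["audio_base64"]) == audio
```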

Game Engine Integrations - Unity

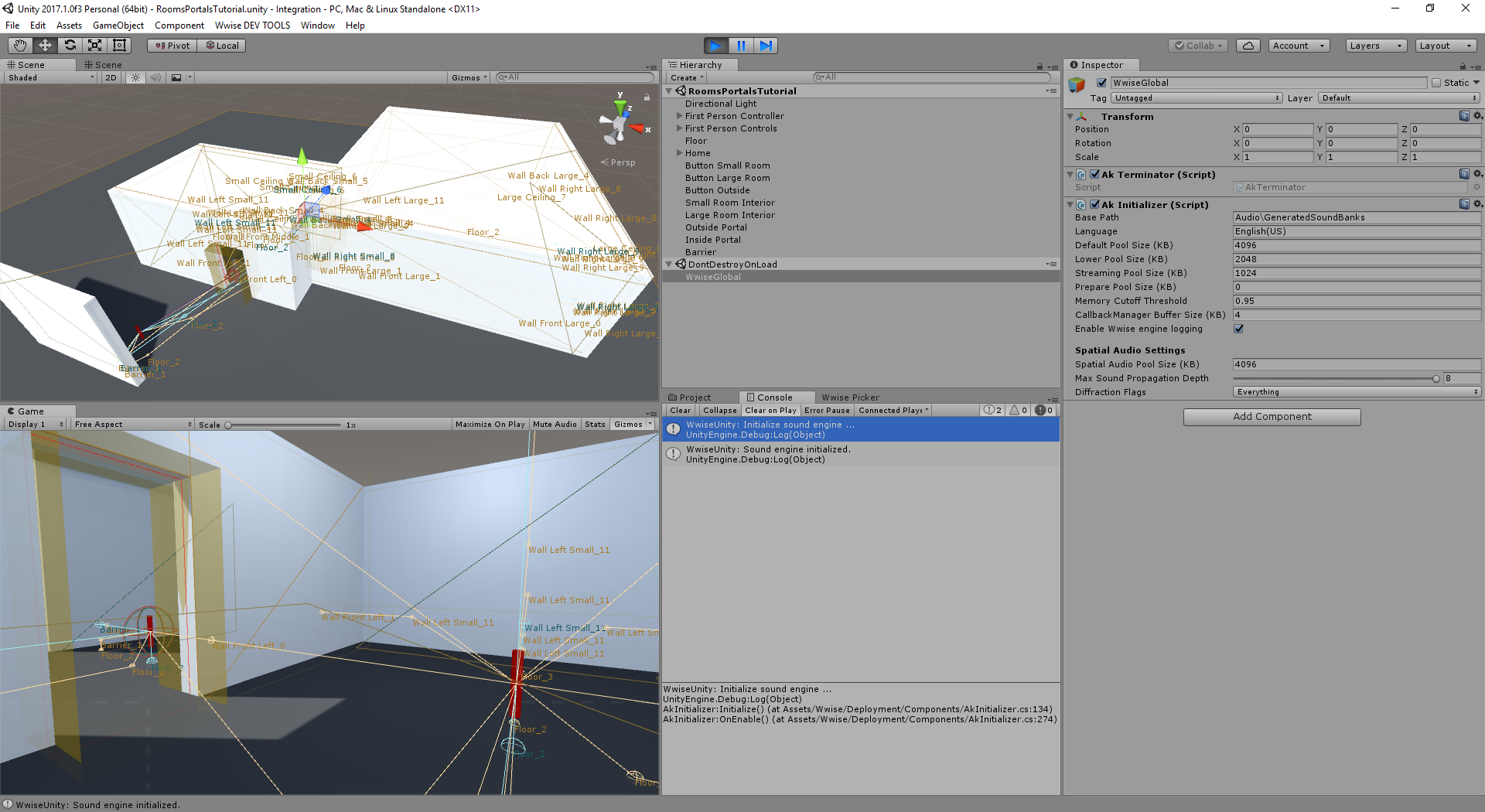

Wwise Spatial Audio

The Spatial Audio suite is now fully integrated into the Unity integration. There’s also a step-by-step tutorial to help you discover its functionality!

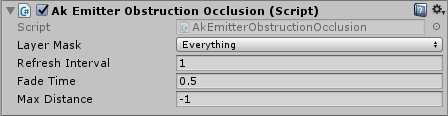

Simple Obstruction and Occlusion

The Ak Emitter Obstruction Occlusion component is applied to emitters and offers a basic ray-casting system to occlude or obstruct sounds. The presence or absence of the Ak Room component in the scene determines whether occlusion or obstruction is used:

- When an Ak Room component is added to a scene, the Ak Emitter Obstruction Occlusion component uses obstruction.

- When there is no Ak Room component present in the scene, the Ak Emitter Obstruction Occlusion component uses occlusion instead.

While this system may be too basic for some games, it should suit many projects that need a simple, straightforward way to manage occlusion and obstruction.
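The selection rule above can be modeled in a few lines. This sketch is written in Python (the Unity integration itself is C#) and makes several assumptions: walls are axis-aligned boxes, a single ray is cast from listener to emitter (a segment-versus-box test using the slab method), and a blocked ray maps to a value of 1.0. All names are hypothetical; this is not the source of the Ak Emitter Obstruction Occlusion component.

```python
def segment_hits_box(start, end, box_min, box_max):
    """Slab test: does the segment from start to end intersect the axis-aligned box?"""
    t_enter, t_exit = 0.0, 1.0
    for s, e, lo, hi in zip(start, end, box_min, box_max):
        d = e - s
        if d == 0.0:
            # Segment is parallel to this pair of planes: it must lie between them.
            if s < lo or s > hi:
                return False
            continue
        t0, t1 = (lo - s) / d, (hi - s) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter, t_exit = max(t_enter, t0), min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True


def obstruction_and_occlusion(listener, emitter, walls, scene_has_ak_room):
    """Return (obstruction, occlusion) for one emitter.

    `walls` is a list of (box_min, box_max) pairs. A blocked ray yields 1.0
    (an arbitrary choice for this sketch); the presence of an Ak Room in the
    scene decides which of the two parameters receives it.
    """
    blocked = any(segment_hits_box(listener, emitter, lo, hi) for lo, hi in walls)
    amount = 1.0 if blocked else 0.0
    if scene_has_ak_room:
        return amount, 0.0  # Ak Room present: use obstruction
    return 0.0, amount      # no Ak Room: use occlusion


wall = ((4.0, -1.0, -1.0), (5.0, 1.0, 1.0))
print(obstruction_and_occlusion((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), [wall], True))   # (1.0, 0.0)
print(obstruction_and_occlusion((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), [wall], False))  # (0.0, 1.0)
```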

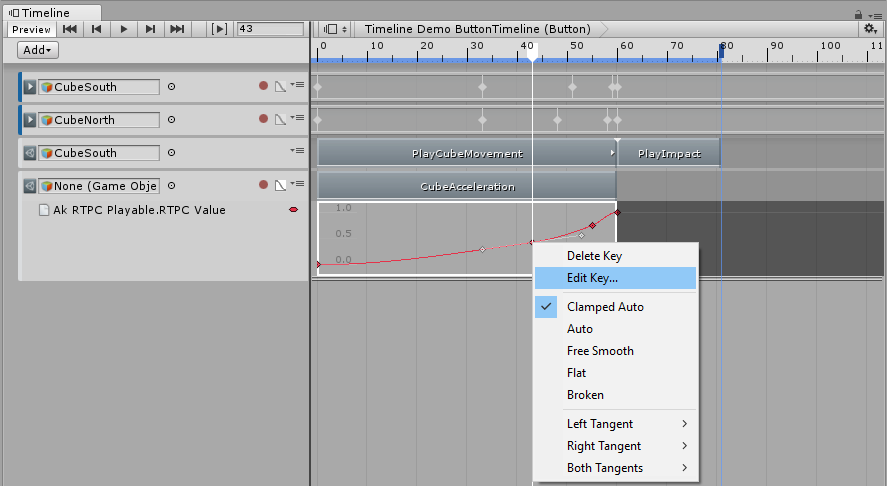

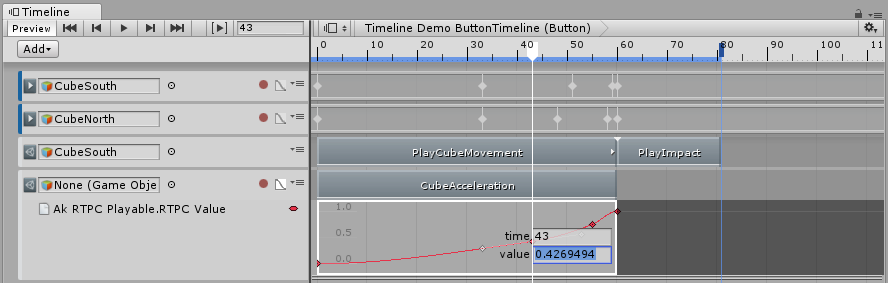

Timeline & Audio Scrubbing

It’s now possible to play back from anywhere in the timeline, giving more control when, for example, editing in-game cinematics. Audio scrubbing is also supported, which can be helpful when syncing audio to video.

Automatic SoundBank Management

SoundBanks no longer need to be copied manually to the StreamingAssets folder. A new pre-build step generates SoundBanks and copies them to the appropriate location for the Unity build pipeline.

New C# Scripts

MIDI Events can now be posted to Wwise via C# scripts. Further, the Wwise Audio Input source plug-in is now accessible via C# scripts.

Preview in Editor

It is now possible to preview sounds from the Inspector view without entering Play Mode.

Game Engine Integrations - Unreal

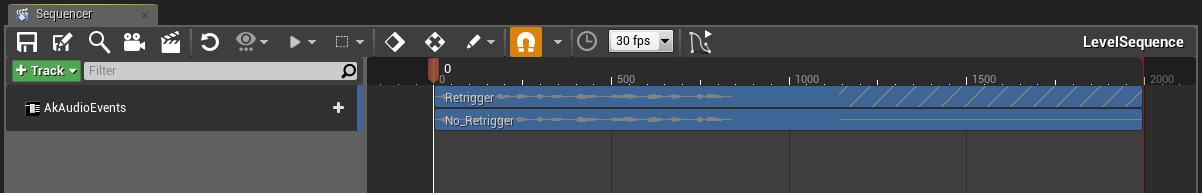

DAW-Like Workflow in Sequencer

The Unreal Sequencer has been significantly improved to support audio scrubbing, seeking inside tracks, and waveform display. These improvements should be particularly useful when editing in-game cinematics and linear or interactive VR experiences.

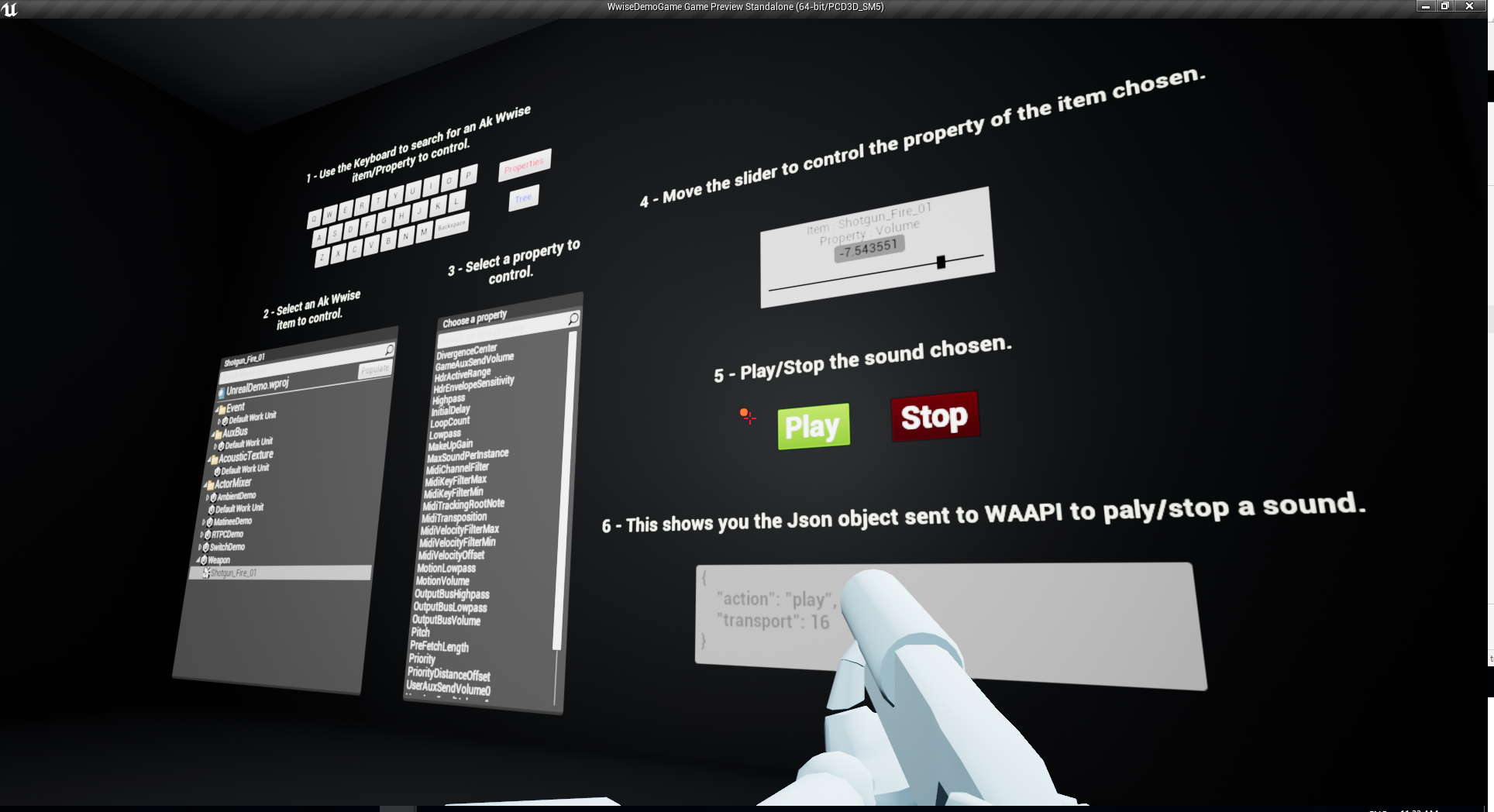

WAAPI Integration in UE4

- UMG Widget Library: Using WAAPI, you can control Wwise directly from Unreal. With this new widget library, you can build your own custom UI in Unreal to optimize your team's workflow.

- Blueprint: WAAPI is now accessible from Blueprint, allowing you to use built-in UMG widgets to control Wwise.

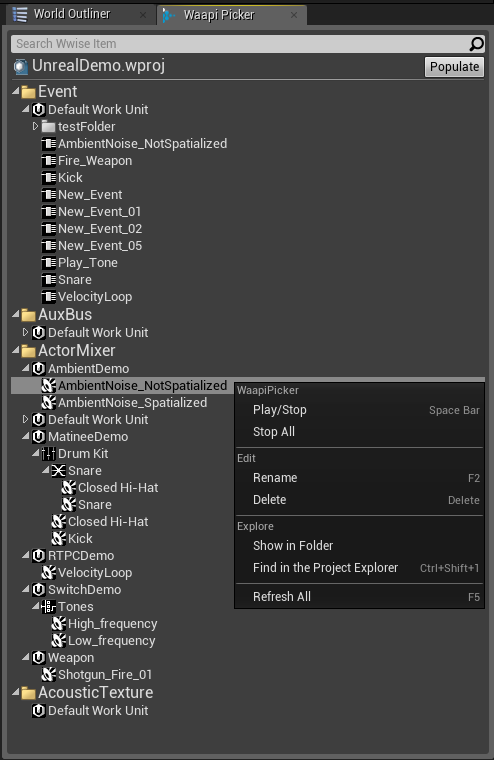

- Wwise Picker: A new WAAPI-enabled Wwise Picker has been added to the UE4 integration, which lets you perform a number of operations (such as selecting audio objects, modifying volume, and playing/stopping) directly in the Unreal Editor.

Improvements with Listeners

AkComponents can now support more than one listener. In addition, the Unreal Editor now has a listener that follows the focused viewport’s camera position when not in Play In Editor mode. It can be used, for example, to preview sounds and distance attenuation directly from the Animation Editor.
