Within the Architecture, Engineering and Construction (AEC) industry, presenting design ideas through 3D models is now a common part of the design process and a valuable tool for communicating ideas and collaborating.

3D models can also be used in acoustical simulation software to calculate how sound propagates in a space from a source to a receiver. This information is contained in the so-called Room Impulse Response (RIR), which can be used to simulate, through convolution, how a sound (a music track, for instance) is perceived by a listener in that room. This process is called “auralization”.
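As a minimal sketch of the convolution step described above, the following Python snippet convolves a dry signal with a toy impulse response. The sample rate, the reflection delays and gains, and the function name `auralize` are illustrative assumptions, not values or code from the demo; a real RIR would come from the acoustical simulation.

```python
import numpy as np

def auralize(dry: np.ndarray, rir: np.ndarray) -> np.ndarray:
    """Convolve a mono dry signal with a mono room impulse response."""
    return np.convolve(dry, rir, mode="full")

fs = 48_000                      # assumed sample rate in Hz

# Toy RIR (hypothetical values): direct sound plus two weaker reflections.
rir = np.zeros(fs // 10)         # 100 ms long
rir[0] = 1.0                     # direct sound
rir[5 * fs // 1000] = 0.5        # reflection arriving 5 ms later
rir[12 * fs // 1000] = 0.25      # reflection arriving 12 ms later

# Dry signal: a single click, so the output simply reproduces the RIR.
dry = np.zeros(fs // 100)
dry[0] = 1.0

wet = auralize(dry, rir)         # what the listener would hear in the room
```

Replacing the click with a music track would give the auralized version of that track for this one source-receiver pair.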

Auralizations have been part of the architectural and acoustical design process for a while now, but they are usually limited to static (no head tracking) binaural representations of the soundfield for a single source-receiver pair.

Now that immersive technologies are gaining ground in architecture and in commercial and residential cinema spaces, and VR/AR applications are finding their way into the design toolbox, simulating sound propagation in the virtual space is crucial to keeping the virtual reality experience intact. Sound and acoustics play a fundamental role in recreating realistic experiences in non-static immersive technologies, where audio has to adapt continuously to the listener’s head orientation.

Simulating the acoustics of a space can be a powerful tool to inform design decisions, such as the selection of materials and shapes. Sound interacts differently with each surface and informs our brain about our surroundings. Designers use sound absorbing, scattering, and reflecting materials to control objective or subjective parameters such as loudness, reverberation, speech intelligibility and the acoustical capacity of a space. The acoustical comfort of a space supports the function for which the room was designed, and it is important for the health of its users. When music is reproduced in a room, the audience needs a calibrated and clear response from the room, without detrimental reflections or excessive coloring of the sound. A delicate balance must be struck between sound and space.

At Keith Yates Design, we set up a demo of an acoustically untreated home theatre to explore new possibilities in virtual reality with Wwise and Unity, using their powerful, well-documented, and smooth integration, which allows for a dynamic, game-like experience of a space. The acoustical simulation of the room is performed with CATT, from which soundfield B-Format (i.e. Ambisonics) Room Impulse Responses are exported and subsequently imported into Wwise. The room is simulated without any of the acoustical treatment panels that control reverberation, sound decay and spatial envelopment, which are included in the final design.

Overview of the system

We envisioned a VR experience of a home theatre as a 3-DOF app. Giving the ability to walk through the room was not essential and would have increased the complexity of the demo itself. Generally, acoustical demos are aimed at comparing seats in a room and consistency of the soundfield in multiple positions.

Visual renderings for sightlines and auralizations are typically two separate outputs obtained from different software and are generally static with no possibility of listening or looking around. We wanted to cross that bridge and take this process one step further.

As the demo starts up, the scene is set inside the home theatre with, for the purpose of this demo, two screen speakers (left and right channel) that can be switched on from a UI menu (Figure 1).

Figure 1 – Screen speakers (left and right) highlighted in red. These are the AkAmbient (simple mode) sound sources.

The player/listener sits in the audience seats, can be “teleported” to other seats, and can experience the space by listening and looking around.

Playthrough and look-around of the demo. Both left and right screen speakers are playing the stereo tracks. Better experienced with headphones.

Figure 2 – Block diagram of the system.

The impulse response of each source-receiver pair is calculated beforehand and has to be fed into Wwise when preparing the scene. Thus, once the source and receiver positions were selected, we used CATT (ray-tracing software used professionally for acoustical simulations) to precompute accurate impulse responses of the room (Figures 3a and 3b), taking into account the 3D radiation pattern of the selected speakers and their specific aiming at the audience seats (Figure 4).

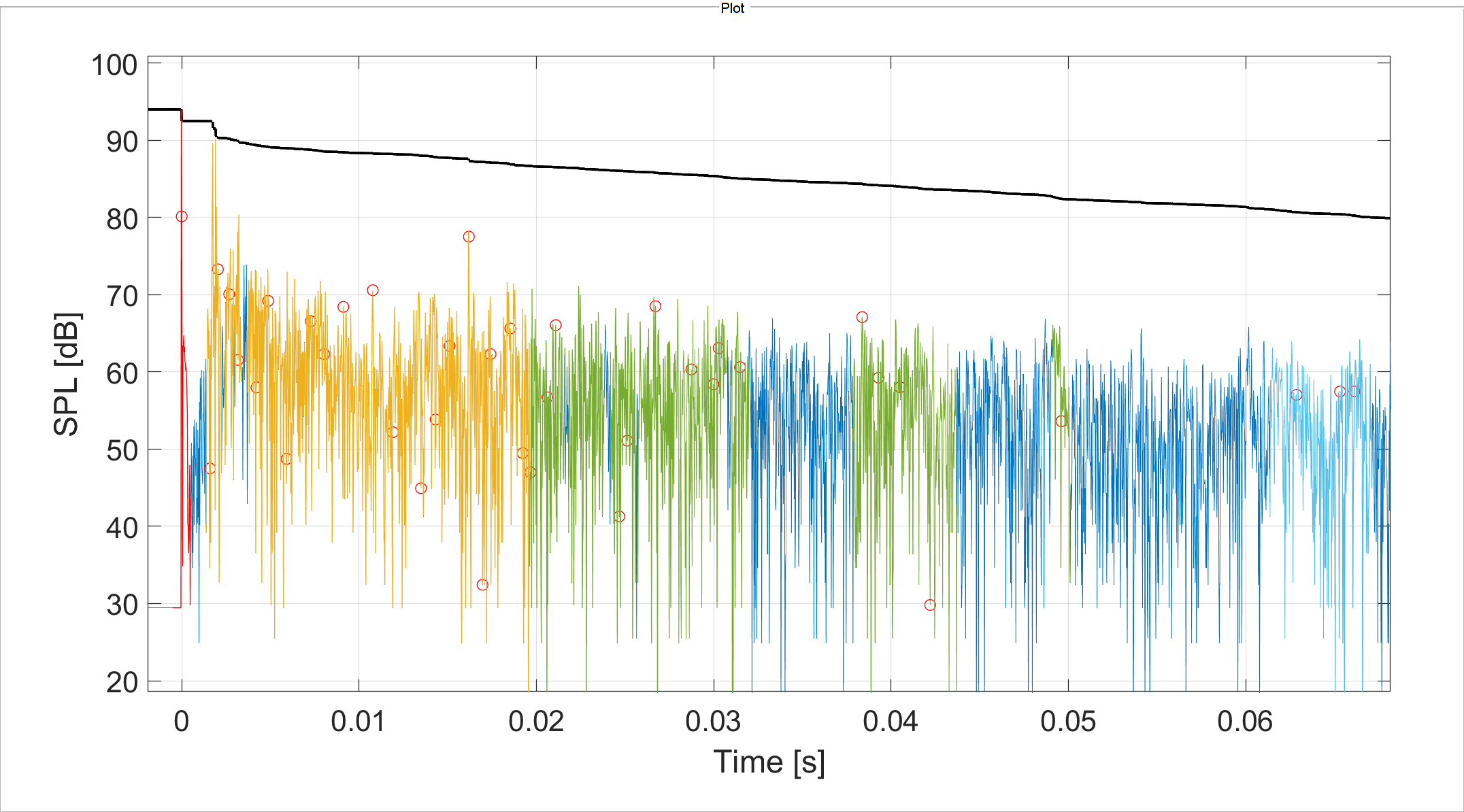

Figure 3a – Spatial analysis app for first-order B-Format room impulse responses, developed in Matlab by the author. The hedgehog plot shows the magnitude and direction of arrival of the sound intensity vectors, and their colour indicates the time of arrival. Sound reflections are identified and highlighted with the respective colour in the RIR waveform in Figure 3b.
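The kind of analysis shown in the hedgehog plot can be approximated with a few lines of Python. This is a sketch under stated assumptions, not the author's Matlab app: it assumes a first-order B-Format signal in which a plane wave arriving from unit direction u produces (x, y, z) proportional to w·u, with X pointing front, Y left and Z up. The function names and the toy signal are hypothetical.

```python
import numpy as np

def intensity_vectors(w, x, y, z):
    """Per-sample pseudo-intensity vectors, shape (n_samples, 3)."""
    return w[:, None] * np.stack([x, y, z], axis=1)

def direction_of_arrival(vec):
    """Convert a 3D vector to (azimuth, elevation) in degrees."""
    vx, vy, vz = vec
    azimuth = np.degrees(np.arctan2(vy, vx))
    elevation = np.degrees(np.arctan2(vz, np.hypot(vx, vy)))
    return azimuth, elevation

# Toy signal: a plane wave arriving from 90 degrees azimuth (the left).
az = np.radians(90.0)
w = np.array([0.0, 1.0, 0.5])    # pressure channel
x = w * np.cos(az)               # front-back channel
y = w * np.sin(az)               # left-right channel
z = np.zeros_like(w)             # up-down channel

iv = intensity_vectors(w, x, y, z)
magnitude = np.linalg.norm(iv, axis=1)   # length of each "hedgehog" spike
peak = int(np.argmax(magnitude))         # sample index of strongest arrival
doa_az, doa_el = direction_of_arrival(iv[peak])
```

In practice the vectors are usually averaged over short time windows before plotting, since single-sample estimates are noisy in the late part of the response.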

Figure 3b – First 70 ms of the W (pressure) channel of the room impulse response, with the right loudspeaker as source and the receiver at the middle seat of the middle row.

Figure 4 – Selected speaker model of the room and an example of the radiation pattern data of a flush mounted screen speaker.

Simulations of sound propagation can take hours in CATT, as it uses hundreds of thousands of “sound rays” interacting with purpose-made models and their surfaces. Each surface is assigned material properties (absorption and scattering) in octave frequency bands. The algorithm used by the software and its tools achieve a simulation accuracy that goes beyond the “simplified” methods recently introduced in some audio middleware for gaming. For this reason, we decided not to use Wwise Reflect or Steam Audio, as we cannot compromise on the acoustical accuracy resulting from a specific architectural design. Wwise Reflect could, however, be very useful for fast prototyping of spaces to analyze early sound reflections and the Speaker Boundary Interference Response (SBIR). We are currently experimenting with it to simulate how direct sound and early reflections interfere with each other at the listening position without having to create an acoustical model for CATT.
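To illustrate the interference between direct sound and a single early reflection mentioned above, here is a simplified worked example. It assumes one reflection with the same polarity as the direct sound (a rigid boundary), a speed of sound of 343 m/s, and a hypothetical path difference of 1 m; cancellations then occur where the path difference equals an odd number of half wavelengths. The function name is an illustrative choice.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C

def sbir_notch_frequencies(path_difference_m: float, count: int = 3) -> list:
    """Frequencies (Hz) where a single same-polarity reflection cancels
    the direct sound: f_n = (2n + 1) * c / (2 * path_difference)."""
    return [(2 * n + 1) * SPEED_OF_SOUND / (2.0 * path_difference_m)
            for n in range(count)]

# Hypothetical case: the reflected path is 1 m longer than the direct path.
notches = sbir_notch_frequencies(1.0)
print(notches)  # [171.5, 514.5, 857.5]
```

A real room adds many reflections with frequency-dependent absorption, which is why a full simulation or measurement is still needed for design decisions.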

Time trace simulation of sound propagation of the left and right speakers. The simulation is truncated at 100ms with “sound rays” shown up to the 5th order of reflection.

Once the room’s acoustical response was “baked” for several source-receiver pairs, we imported the results into Wwise and used the Convolution Reverb plugin and Wwise Recorder to obtain the convolved ambisonics tracks.

Convolution with Convolution Reverb could be done in real time, but this would put a burden on computational resources and memory pools. It may be worth using this approach if different RIR files have to be switched at run time using a script. However, we did not find this to be straightforward, and it requires knowledge of the Wwise APIs and of specific plugin mechanics.

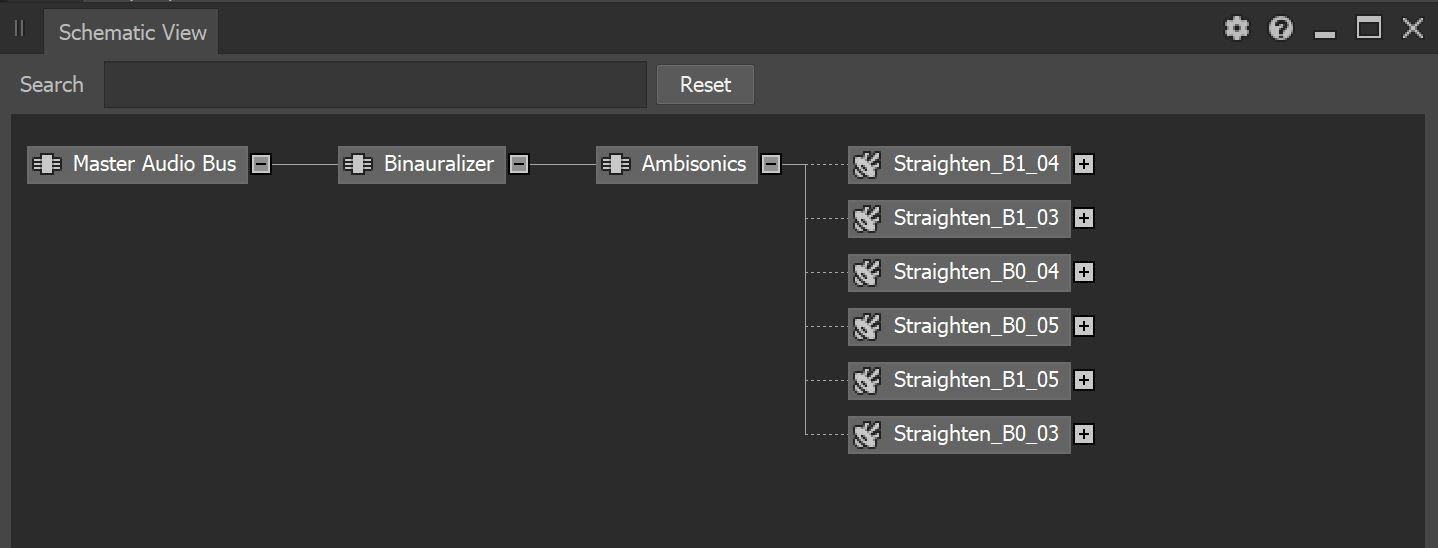

Figure 5 – Schematic View and an example of a Mixing Session in Wwise to visualize sound sfx, bus routing, active plugins attached to each bus and active states.

The convolved ambisonics tracks are assigned to an nth-order Ambisonics output bus in Wwise. This bus is a child of a “Binauralizer” bus, which takes the ambisonics track as input and outputs a binaural track to the Master Audio Bus. The Binauralizer bus uses the Auro-3D Headphone plugin to render the binaural output, but special care should be taken in setting up the plugin parameters if we do not want to alter the RIR. The speaker distance should be set to a value greater than the room dimensions, so that Auro Headphone’s algorithm does not generate additional early reflections, and the Reverb option should be disabled.

Figure 6 – Auro-3D Headphones settings used in the demo.

The positioning of the ambisonics tracks must be set to 3D spatialization with respect to the emitter’s 3D position to allow for soundfield rotation. No attenuation should be used, as that information is already contained in the simulated IRs, while the Spread curve should always be at 100% for a correct spatialization of the soundfield around the listener’s head. The Wwise 3D Meter is a useful tool to visualize the soundfield at the listener’s position in real time.
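Wwise handles the soundfield rotation internally, but the underlying idea can be sketched for the first-order case. The snippet below is an illustration only, not Wwise's implementation: it assumes a B-Format convention with X pointing front, Y left and Z up, and it handles head yaw only. When the head turns by a given yaw angle, the soundfield is rotated by the opposite angle so that sources stay fixed in the room. The function name is hypothetical.

```python
import numpy as np

def rotate_for_head_yaw(w, x, y, z, yaw_deg):
    """Counter-rotate a first-order B-Format signal for a head yaw.

    yaw_deg is positive when the listener turns to the left
    (counter-clockwise seen from above). W and Z are unaffected by yaw.
    """
    t = np.radians(yaw_deg)
    x_rot = x * np.cos(t) + y * np.sin(t)
    y_rot = -x * np.sin(t) + y * np.cos(t)
    return w, x_rot, y_rot, z

# Toy check: a source straight ahead (all directional energy in X).
w = np.array([1.0])
x = np.array([1.0])
y = np.array([0.0])
z = np.array([0.0])

# The listener turns 90 degrees to the left: the source should now be
# heard on the right, i.e. on the negative Y axis.
w_r, x_r, y_r, z_r = rotate_for_head_yaw(w, x, y, z, 90.0)
```

Pitch and roll require a full 3D rotation matrix, and higher orders need correspondingly larger rotation matrices per order.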

Split screen example of Wwise connected to Unity in Profiler layout. The 3D Meter selected as ambisonics bus meter shows the soundfield around the listener in real time. For this example, pink noise bursts are played by the left speaker only for ease of visualization.

Game states and Reverb Zones are used to manage the audio tracks that play at each source-receiver position. Audio tracks are attached to emitters using AkAmbient scripts, while each Reverb Zone is a Unity Collider with a custom script component attached that triggers the Wwise states on entry and exit. The Wwise states are linked to the corresponding convolved ambisonics soundtracks, which are pushed down the Wwise routing and played in the binaural master audio output.
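The zone-to-state logic can be sketched independently of Unity and Wwise. The snippet below is a plain Python illustration, not the demo's custom script: the class `SeatZone`, the function `active_state`, the state names and the box coordinates are all hypothetical. Each zone is an axis-aligned box, and the state of the zone containing the player selects which pre-convolved track should play.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SeatZone:
    state: str         # hypothetical state name selected inside this zone
    center: tuple      # (x, y, z) centre of the box, in metres
    half_size: tuple   # half extents of the box along each axis

    def contains(self, position) -> bool:
        """True if the position lies inside the axis-aligned box."""
        return all(abs(p - c) <= h
                   for p, c, h in zip(position, self.center, self.half_size))

def active_state(zones, position, default="Outside"):
    """Return the state of the first zone containing the position."""
    for zone in zones:
        if zone.contains(position):
            return zone.state
    return default

# Two hypothetical seat zones, one per simulated receiver position.
zones = [
    SeatZone("Seat_FrontRow", (0.0, 1.0, 2.0), (0.5, 1.0, 0.5)),
    SeatZone("Seat_MidRow", (0.0, 1.0, 4.0), (0.5, 1.0, 0.5)),
]

print(active_state(zones, (0.1, 1.2, 4.2)))  # Seat_MidRow
print(active_state(zones, (3.0, 1.0, 0.0)))  # Outside
```

In the engine, the collider's enter and exit callbacks play the role of `contains`, and setting the state is done through the Wwise integration rather than by returning a string.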

Figure 7 – The green box colliders in the room are positioned where the RIRs for each source-receiver pair were simulated. Each collider triggers an AkState when the player enters or exits the area.
