Please read Part 1 of this blog first.

Sound Effects and Dialogue

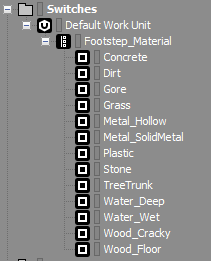

We wanted to enhance immersion with realistic sound effects and rich sound content. We designed a variety of footstep and collision materials and used Switches to select the material that matches each surface in the scene. We also used many Random Containers with multiple samples per sound. We optimized samples before importing them into Wwise so that fewer plug-ins were needed inside Wwise, which saved CPU.

Footstep Material Group
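In Wwise, this kind of setup is typically a Switch Container holding one Random Container per material. The Python sketch below only models that selection logic outside Wwise. The material and file names are hypothetical, and the avoid-repeat rule loosely mirrors the Random Container's option to avoid repeating recently played samples.

```python
import random
from typing import Optional

# Hypothetical material and sample names, for illustration only.
FOOTSTEPS = {
    "Concrete": ["fs_concrete_01", "fs_concrete_02", "fs_concrete_03"],
    "Wood": ["fs_wood_01", "fs_wood_02", "fs_wood_03"],
    "Water": ["fs_water_01", "fs_water_02"],
}


class FootstepPlayer:
    """Switch-like material selection plus a random sample choice per material."""

    def __init__(self, rng: Optional[random.Random] = None) -> None:
        self.rng = rng or random.Random()
        self.material = "Concrete"  # current "Switch" value
        self.last_played = {}       # material -> last sample played

    def set_material(self, material: str) -> None:
        if material not in FOOTSTEPS:
            raise KeyError(f"unknown material: {material}")
        self.material = material

    def next_sample(self) -> str:
        samples = FOOTSTEPS[self.material]
        last = self.last_played.get(self.material)
        # Avoid repeating the previous sample whenever another one is available.
        candidates = [s for s in samples if s != last] or samples
        choice = self.rng.choice(candidates)
        self.last_played[self.material] = choice
        return choice
```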

For dialogue, the built-in language system in Wwise made it easy to use and manage assets in different languages. In most cases, however, we still had to bring the localized voice files into a DAW for trimming or rendering. This kept the listening experience and playback durations consistent across languages.

AI System Chinese

AI System English

AI System Japanese

Whichever compression format we used in Wwise, the game could still reliably retrieve the Marker information embedded in the original files. This made it very convenient to trigger dialogue lines and saved us a lot of work.
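Markers in a WAV file are usually stored as cue points in its `cue ` chunk. To show where that information lives, here is a minimal Python reader that lists cue points and converts them to seconds, which could help when comparing marker timing across localized files. The sketch assumes uncompressed RIFF/WAVE input with the position stored in each cue record's sample-offset field. It does not show how Wwise or the game reads markers at runtime.

```python
import struct


def read_markers(data: bytes) -> list[tuple[int, float]]:
    """Return (cue_id, time_in_seconds) for each cue point in a RIFF/WAVE file."""
    if data[:4] != b"RIFF" or data[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    sample_rate = None
    cues = []
    pos = 12  # skip "RIFF", total size, "WAVE"
    while pos + 8 <= len(data):
        chunk_id = data[pos:pos + 4]
        (size,) = struct.unpack_from("<I", data, pos + 4)
        body = pos + 8
        if chunk_id == b"fmt ":
            # fmt layout starts with: format tag (H), channels (H), sample rate (I).
            _fmt, _channels, sample_rate = struct.unpack_from("<HHI", data, body)
        elif chunk_id == b"cue ":
            (count,) = struct.unpack_from("<I", data, body)
            for i in range(count):
                # 24-byte cue record: ID, position, chunk ID, chunk start,
                # block start, sample offset.
                cue_id, _p, _c, _cs, _bs, offset = struct.unpack_from(
                    "<II4sIII", data, body + 4 + 24 * i)
                cues.append((cue_id, offset))
        pos = body + size + (size & 1)  # chunks are padded to an even length
    if sample_rate is None:
        raise ValueError("missing fmt chunk")
    return [(cue_id, offset / sample_rate) for cue_id, offset in cues]
```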

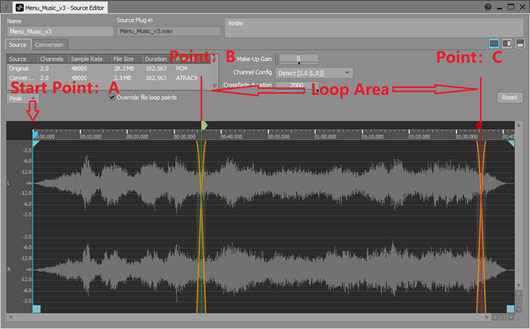

Dynamic Music

Since VR games are usually shorter than games on other platforms, Immortal Legacy doesn't contain a large amount of music: around 30 pieces in total, only half of which use the dynamic music system. Some cut-scene music and ambient music didn't require special processing, so I placed those pieces in the Actor-Mixer Hierarchy instead of the Interactive Music Hierarchy and treated them as SFX. In some cases, the Source Editor available in the Actor-Mixer Hierarchy makes certain things easier. Here is a simple example. Say we have a piece of music called Menu_Music that must start from Point A on every playback and then loop indefinitely between Points B and C. All we have to do is set up the loop points in the Actor-Mixer Hierarchy, as shown in the following screenshot. We can't align the loop to the music's tempo this way, but the structure is simple enough that an appropriate cross-fade duration, fine-tuned by ear, gets the loop right.

Music Loop in Source Editor
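To make the Menu_Music behavior concrete, the sketch below computes where playback sits in the source after a given elapsed time. The piece plays once from Point A to Point C and then repeats the B-to-C section. The timings are hypothetical, and the cross-fade at the loop point is ignored.

```python
def source_position(elapsed: float, a: float, b: float, c: float) -> float:
    """Position (s) in a source that plays A->C once, then loops B->C forever.

    The cross-fade at the loop point is ignored for simplicity.
    """
    if not a <= b < c:
        raise ValueError("expected A <= B < C")
    first_pass = c - a  # the first pass runs from A all the way to C
    if elapsed < first_pass:
        return a + elapsed
    loop_len = c - b
    return b + (elapsed - first_pass) % loop_len
```

For example, with A = 0 s, B = 4 s, and C = 20 s, 25 seconds into playback the source is at 9 s, in the first repeat of the B-C section.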

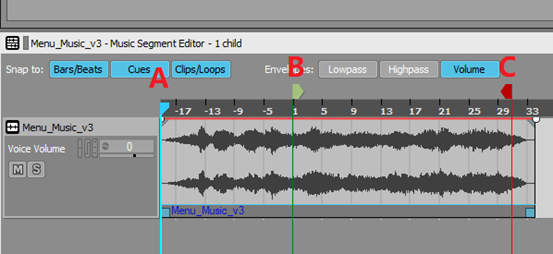

If the same piece were processed as dynamic music, however, we could only achieve the desired result with transition rules, short of reimporting it and copying the relevant parts into separate segments. Specifically:

First, we place the A-B section before measure 1 so that it serves as the pre-entry of the whole piece.

pre-entry

Then we set up transition rules so that the music loops indefinitely between Points B and C.

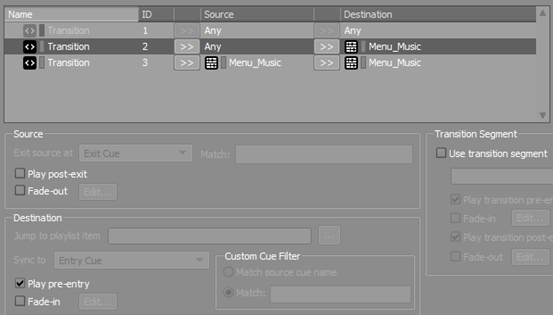

Transitions Rule 1

Transitions Rule 2

Note that the conditions of these two transitions, from Any to Menu_Music and from Menu_Music to Menu_Music, don't conflict with each other. Together they specify that:

1. Whenever the music switches to Menu_Music, playback starts from Point A.

2. When Menu_Music transitions to itself (for instance, when jumping from Point C back to Point B), the A-B section is not played.
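The reason the two rules don't conflict can be shown with a simplified lookup. A rule that names Menu_Music as its source is more specific than one that matches Any source, so it wins for self-transitions. The Python below is a hypothetical model of that idea, not Wwise's own rule-evaluation logic, and the `Transition` fields are illustrative.

```python
from dataclasses import dataclass

ANY = "Any"


@dataclass(frozen=True)
class Transition:
    play_pre_entry: bool    # play the A-B section before measure 1?
    destination_start: str  # where the destination starts (illustrative)


# Simplified model of the two rules described above.
RULES = {
    (ANY, "Menu_Music"): Transition(play_pre_entry=True, destination_start="Point A"),
    ("Menu_Music", "Menu_Music"): Transition(play_pre_entry=False, destination_start="Point B"),
}


def resolve(source: str, destination: str) -> Transition:
    """Prefer a rule naming the source explicitly; fall back to the Any rule."""
    for key in ((source, destination), (ANY, destination)):
        if key in RULES:
            return RULES[key]
    raise LookupError(f"no rule for {source} -> {destination}")
```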

Of course, the example above only shows that music which won't be processed as dynamic music doesn't need to go into the Interactive Music Hierarchy. For static music, the Actor-Mixer Hierarchy is sometimes the better choice.

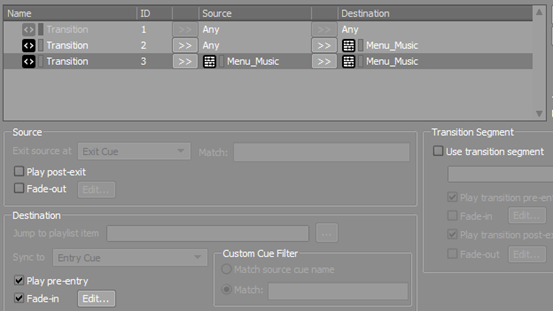

Given the largely linear game flow of Immortal Legacy, we didn't use many complex design techniques. The most common approach for the music was to create horizontal sections and vertical layers. Horizontally, we split the music into segments and then transition between and combine them, for example according to States. Vertically, we create layers based on content, such as instruments and voices, and then switch, overlap, and transition between layers using RTPCs. Take the Fight music in the game as an example. It was divided into three phases: PreFight (entering the fight area), Fight (engaging in combat), and End (the fight is over). The music in each phase consists of one or more Music Tracks. The Fight music also has two versions. Each time the game switches from the PreFight phase to the Fight phase, one version is selected at random according to a preset probability. When the game switches to the End phase, the outgoing tracks that match the version played during the Fight phase are called to end the music.

Dynamic Music Demonstration
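The three-phase Fight logic can be sketched as a small state machine. In this Python example, the version names, probabilities, and outro track names are hypothetical. The version is drawn once on entering Fight and then remembered, so the End phase can pick the matching outro.

```python
import random
from typing import Optional

# Hypothetical versions with their preset probabilities, and matching outros.
VERSIONS = {"Fight_A": 0.6, "Fight_B": 0.4}
OUTROS = {"Fight_A": "End_A", "Fight_B": "End_B"}


class FightMusic:
    """PreFight -> Fight -> End, remembering which Fight version was chosen."""

    def __init__(self, rng: Optional[random.Random] = None) -> None:
        self.rng = rng or random.Random()
        self.phase = "PreFight"
        self.version: Optional[str] = None

    def start_fight(self) -> str:
        if self.phase != "PreFight":
            raise RuntimeError("start_fight() expects the PreFight phase")
        names = list(VERSIONS)
        weights = [VERSIONS[n] for n in names]
        # Weighted random pick according to the preset probabilities.
        self.version = self.rng.choices(names, weights=weights)[0]
        self.phase = "Fight"
        return self.version

    def end_fight(self) -> str:
        if self.phase != "Fight" or self.version is None:
            raise RuntimeError("end_fight() expects the Fight phase")
        self.phase = "End"
        # Pick the outro that matches the version heard during Fight.
        return OUTROS[self.version]
```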

Although the logic of the music itself is not complicated, we had to fine-tune the Transition settings to avoid audible gaps when switching between phases.

Music Transitions Rules 1
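One common way to avoid gaps is to synchronize the exit to a musical boundary, such as the next bar, so that the outgoing and incoming material line up rhythmically. Wwise transitions offer sync points of this kind. The arithmetic behind a next-bar sync is sketched below, assuming a constant tempo and a fixed number of beats per bar (both hypothetical parameters here).

```python
import math


def next_bar_time(position: float, bpm: float, beats_per_bar: int,
                  entry_cue: float = 0.0) -> float:
    """Time (s) of the next bar line at or after `position`, at a constant tempo."""
    if bpm <= 0 or beats_per_bar <= 0:
        raise ValueError("tempo and beats per bar must be positive")
    bar = beats_per_bar * 60.0 / bpm           # bar length in seconds
    bars_elapsed = (position - entry_cue) / bar
    return entry_cue + math.ceil(bars_elapsed) * bar
```

At 120 BPM in 4/4, a bar lasts 2 seconds, so a transition requested at 3.1 s would take place at 4.0 s.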

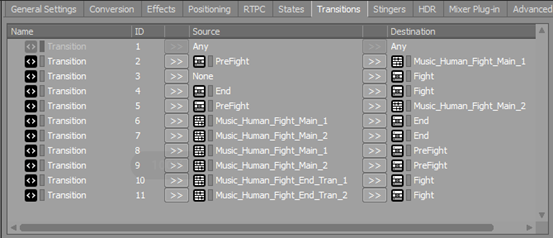

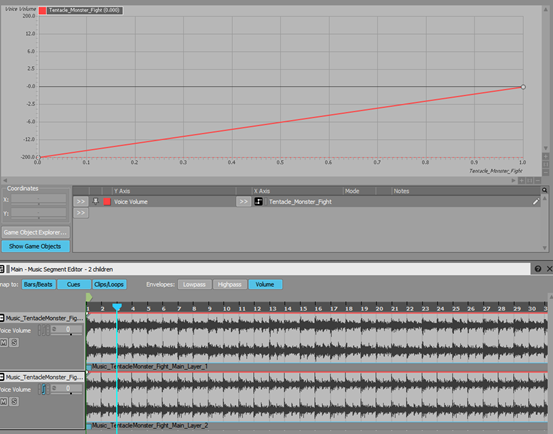

For another Fight music piece in the game, we used vertical layers to switch between two Music Tracks and set their relative volumes when certain conditions were met.
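In Wwise, this is done with RTPC curves on each track's volume, and the curve shapes are up to the designer. As one possible shape, the sketch below assumes an equal-power cross-fade driven by a single hypothetical RTPC ranging from 0 to 100. This keeps the combined power constant while the two layers trade places.

```python
import math


def layer_gains(rtpc: float, rtpc_min: float = 0.0, rtpc_max: float = 100.0):
    """Equal-power cross-fade between two layers driven by one RTPC value.

    Returns linear gains (layer_a, layer_b) with layer_a**2 + layer_b**2 == 1.
    """
    clamped = min(max(rtpc, rtpc_min), rtpc_max)
    t = (clamped - rtpc_min) / (rtpc_max - rtpc_min)  # normalized 0..1
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)


def to_db(gain: float) -> float:
    """Convert a linear gain to decibels."""
    return -math.inf if gain <= 0 else 20 * math.log10(gain)
```

At the midpoint, both layers sit at about -3 dB rather than the -6 dB of a linear cross-fade, which avoids a perceived dip in loudness.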

Close collaboration between the composers and the Wwise engineers was essential. It ensured that, already during composition, we had a thorough plan for how the music would be split and imported.

Mixing

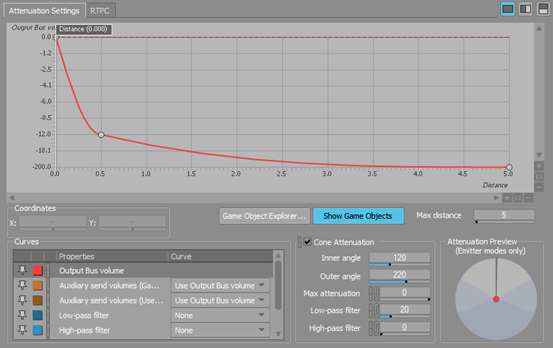

From my point of view, mixing is the most difficult and challenging part of game audio production. To achieve the desired results, we had to keep adjusting the project settings in Wwise based on feedback and our requirements. In addition to testing on a computer, we can use the SoundCaster to run stress tests directly and efficiently. SONY's SCE plug-ins implement downmixing through the encapsulated libAudio3d audio end-point on PSVR, so we also used Sulpha to check on PC that the output sounded correct. It was also important to use the Profiler and Voice Monitor to pinpoint problems so we could fix them quickly.

Besides common mixing methods such as ducking and side-chaining, we also used some special techniques for specific purposes in Immortal Legacy. For example, the game contains a couple of portable voice recorders that play NPCs' messages. Usually, when dialogue carrying diegetic information plays, other sounds are lowered through ducking or side-chaining so that players don't miss important information. However, we didn't want obviously artificial processing here, so we took advantage of the interactivity of VR. Since the listener is attached to the character model's head, we exaggerated the voice recorder's Attenuation so that its volume falls off within a single unit of distance.

Voice Recorder Attenuation

When players pick up the voice recorder and hold it close to their ears, they can hear its content clearly. When they move it away from their heads, the volume drops off quickly. This way, players are free to choose between listening to the recorder and listening to the other sounds in the game.

Voice Recorder
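To illustrate how steep that curve is, here is a linear-interpolation sketch of a distance-to-volume curve. The curve points are hypothetical, not the values used in the game, and Wwise also offers non-linear curve shapes between points.

```python
from bisect import bisect_right

# Hypothetical curve: (distance in game units, volume in dB).
RECORDER_CURVE = [(0.0, 0.0), (0.3, -6.0), (1.0, -40.0)]


def attenuation_db(distance: float, curve=RECORDER_CURVE) -> float:
    """Linearly interpolate volume (dB) along a distance curve, clamped at both ends."""
    xs = [d for d, _ in curve]
    if distance <= xs[0]:
        return curve[0][1]
    if distance >= xs[-1]:
        return curve[-1][1]
    i = bisect_right(xs, distance)  # first point beyond `distance`
    (d0, v0), (d1, v1) = curve[i - 1], curve[i]
    return v0 + (distance - d0) / (d1 - d0) * (v1 - v0)
```

With these points, holding the recorder 0.65 units away already costs about 23 dB.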

On busses, we applied HDR to some sound effects with caution and used the HDR parameters to control the overall dynamic range. Headphones are the recommended output device for most VR games, but that doesn't rule out other outputs such as TVs and home theater systems. In terms of dynamic range, we classified output devices into headphones, TV speakers, and home theater systems. Each output type was assigned its own value and bound to a Game Parameter in Wwise. The bus compressor and HDR parameters, and even the Make-up Gain of particular busses, were driven by RTPCs according to the player's choice, which let us manage the overall dynamic range.

TV speakers, however, usually have a limited dynamic range, and their quality varies by brand. Restricting the dynamic range of TV output while keeping most sounds clearly audible is therefore very difficult. On busses, we used the McDSP ML1 plug-in as the final-stage controller, which works better than the built-in Wwise Limiter. The McDSP ML1 plug-in for Wwise is a little different from the ML1 in ML4000: it gives better control over the clipping distortion of overloaded signals. In a way, it works not only as a bus limiter but also as a maximizer.

McDSP ML1
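The post doesn't list the actual values, so the numbers below are hypothetical. The sketch shows the idea: a per-device value (what the Game Parameter would carry) selects a set of dynamics settings. A standard hard-knee static compressor curve plus make-up gain then shows how a narrower range might be applied for TV speakers. It does not reproduce the Wwise Compressor's exact behavior.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DynamicsPreset:
    threshold_db: float
    ratio: float
    makeup_db: float


# Hypothetical Game Parameter values and dynamics settings per output device.
OUTPUT_DEVICE_PARAM = {"Headphones": 0, "TV": 1, "HomeTheater": 2}
PRESETS = {
    0: DynamicsPreset(threshold_db=-12.0, ratio=2.0, makeup_db=0.0),
    1: DynamicsPreset(threshold_db=-24.0, ratio=4.0, makeup_db=6.0),  # narrowest range
    2: DynamicsPreset(threshold_db=-6.0, ratio=1.5, makeup_db=0.0),   # widest range
}


def compress_db(level_db: float, p: DynamicsPreset) -> float:
    """Static hard-knee compressor curve followed by make-up gain."""
    if level_db > p.threshold_db:
        level_db = p.threshold_db + (level_db - p.threshold_db) / p.ratio
    return level_db + p.makeup_db
```

With these settings, an input spread of 24 dB (-30 to -6 dB) shrinks to 10.5 dB with the TV preset and to 21 dB with the headphone preset.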

Project Settings and Optimizations

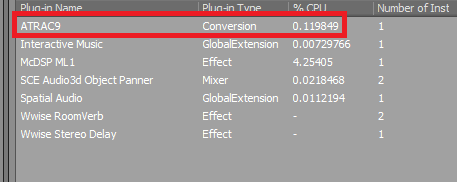

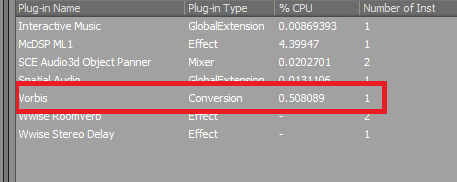

VR games are resource-intensive, and SONY has strict frame-rate requirements, so optimizing the game project as a whole is particularly important. In many cases, we had to make compromises on the audio side to leave more CPU for animation and other program features. In Immortal Legacy, we used the ATRAC9 encoding format. As SONY's own audio format, ATRAC9 has strong hardware support on PlayStation devices, which significantly reduced CPU and memory usage. Let's compare ATRAC9 at its highest quality setting with Vorbis at Q8.

CPU Usage Under ATRAC9

CPU Usage Under Vorbis Q8

As the screenshots show, ATRAC9 uses much less CPU than Vorbis, with no obvious difference in the listening experience.

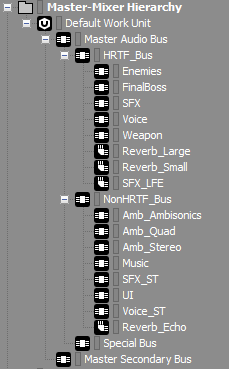

There are many factors to consider when building the bus hierarchy, such as whether it fits the project type and meets the functional requirements. It's also important to plan how bus plug-ins and ducking will be configured during later optimization. In fact, every project can have its own hierarchy design. For Immortal Legacy, we divided the busses into two types: HRTF and non-HRTF.

Bus Hierarchy

This means that all 3D point sources in the game are rendered through the ambisonics pipeline and then output through HRTF plug-ins. By contrast, some sounds keep their original channel settings and don't pass through HRTF plug-ins. These are 2D sources such as music and UI sounds, plus sounds that should play in their original channel configuration, such as Ambisonics or Quad ambiences. The reason is that HRTF plug-ins force sounds to be downmixed according to their 3D Position settings when converting them to binaural signals, so those sounds would no longer play in their original channel configuration. For example, after an HRTF plug-in downmixes a stereo sound, content that originally appeared only in the left channel also appears in the right channel. In general, we used the “Ambisonics 3rd order” channel configuration for all sub-busses of the 3D busses. For 2D busses, we chose Stereo, Quad, or Ambisonics according to the channel configuration of the sound effects.

As for HRTF plug-ins, Wwise supports a number of third-party options, such as Auro 3D, Steam Audio, RealSpace3D, Oculus Spatializer, and Microsoft Spatial Sound. Their core functions are broadly the same, but the results differ because each vendor uses different algorithms. Immortal Legacy targets PSVR, and SONY integrates its own spatial audio feature set, the PS4 libAudio3d, in the PS SDK. We could therefore use the SCE 3D Audio plug-ins in Wwise to output 3D sounds through the SCE Audio3d Object Panner, without applying any other HRTF plug-in to those busses; otherwise, the sound would be spatialized twice. Fortunately, Wwise's Unlink feature lets us choose plug-ins separately for each platform.
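The routing rule and the stereo-downmix problem can be summarized in a short Python sketch. The `SoundInfo` type and the sound names are hypothetical. Averaging left and right is only a simplified downmix, and actual plug-ins may use different downmix gains. Third-order ambisonics uses (3 + 1)² = 16 channels.

```python
from dataclasses import dataclass

AMBISONICS_3RD_ORDER_CHANNELS = (3 + 1) ** 2  # 16 channels


@dataclass
class SoundInfo:
    name: str
    is_3d_point_source: bool
    channel_config: str  # e.g. "Mono", "Stereo", "Quad", "Ambisonics"


def route(sound: SoundInfo) -> tuple[str, str]:
    """Return (bus type, bus channel configuration) per the split described above."""
    if sound.is_3d_point_source:
        return "HRTF", "Ambisonics 3rd order"
    return "Non-HRTF", sound.channel_config  # keep the original layout


def downmix_to_mono(left: list[float], right: list[float]) -> list[float]:
    """Simplified downmix: average L and R before rendering as a point source."""
    return [(left_s + right_s) / 2 for left_s, right_s in zip(left, right)]
```

A sample that exists only in the left channel, such as (1.0, 0.0), becomes 0.5 in the mono mix. That mono signal is then rendered to both ears, so the original left-only image is lost.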

Finally, we split the SoundBanks by level and scene. Instead of loading one large SoundBank, each level loads the General SoundBank plus a separate SoundBank for that level. For multi-language projects, however, it's better to keep voice assets in SoundBanks separate from other sound effects. Otherwise, the same sound effects are generated again in every language folder, which needlessly increases the total size of the SoundBanks. How SoundBanks are split can differ completely from project to project. The more finely they are split, the less memory pressure there is, but the more complex the integration with the game code becomes. So find the split that best suits your own situation.
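The cost of mixing voices and sound effects in one localized bank is simple arithmetic, sketched below with hypothetical sizes and bank names. When sound effects share a bank with voices, they are generated once per language folder; kept separate, they are generated only once.

```python
def total_bank_size(sfx_mb: float, voice_mb_per_language: float,
                    languages: int, separate_voice_banks: bool) -> float:
    """Total generated SoundBank size across all language folders."""
    if separate_voice_banks:
        # SFX are generated once; only the voice banks exist per language.
        return sfx_mb + languages * voice_mb_per_language
    # SFX bundled with voices are duplicated into every language folder.
    return languages * (sfx_mb + voice_mb_per_language)


def banks_to_load(level: str) -> list[str]:
    """Per-level loading: the shared General bank plus the level's own bank (hypothetical names)."""
    return ["General", f"Level_{level}"]
```

With 100 MB of sound effects, 20 MB of voices per language, and three languages, the combined banks total 360 MB, versus 160 MB when voices are kept separate.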

Thanks for reading. I hope this blog is helpful. Please feel free to share your ideas or comments below. I'll leave you with the theme song that plays at the end of Immortal Legacy. Enjoy!

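旋转的罗盘 (Revolving Compass)
Songwriter & Composer: Eddy Liu
Singer: Dubi

Pupils dilating
Crows circling in the sky
Breathing has stopped
No more struggle
The demon's roar surrounds me, yet I'm not afraid
The end of the darkness is my home
The compass of fate can never stop
The most dazzling flowers always bloom on cliffs
Who is here guarding the immortal legend?
When the dark clouds clear, will there be rosy clouds?
A broken heart, a languid melody in my ears
I try to see him clearly, but he leaves only his back
What can't be grasped is called fate
Let us listen quietly
Falling petals
Spinning bullets
Like tick-tock, tick-tock, tick-tock, tick-tock
It's my Dream
Who is singing that melody in my ear?
Let us stop the slaughter
Yet memories slowly erode away
Tears frozen on my cheeks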
