Introduction

This is the second article in a three-part tech blog series by Jater (Ruohao) Xu, sharing his work on Reverse Collapse: Code Name Bakery. In the first article, he dives into using Wwise to drive in-game cinematics. Stay tuned for Part 3 in the coming weeks!

Custom Wwise Listener Position Projection System for Tilted 2D View

Tech Blog Series | Part 2

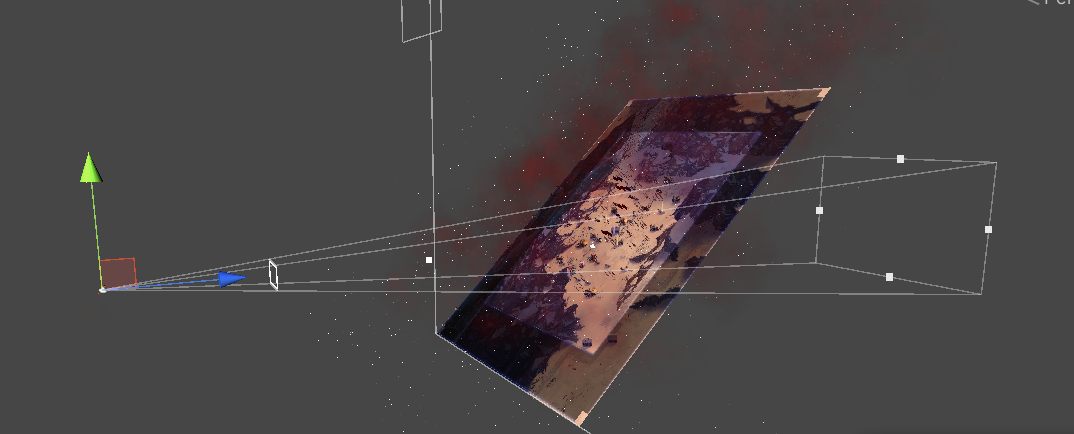

Reverse Collapse features a distinctive art style, and one of the artistic choices that makes the game stand out is its use of tilted 2D level maps. At first glance, the game presents a 2D top-down camera view. Behind the scenes, however, each level map is carefully built with a slight tilt relative to the direction the camera is facing.

Implementing 3D audio for a typical top-down view is relatively straightforward. With a tilted 2D top-down view like the one in Reverse Collapse, however, the default 3D audio system may not produce accurate results. Our initial attempt with the default setup led to attenuation bugs, especially when moving the camera from left to right or from top to bottom.

To address this, we needed a custom system that adjusts the default 3D audio behavior so that it works correctly with the tilted 2D top-down view.

The image above highlights the issue we encountered: from the perspective of the camera view frustum, the map is tilted, so the distances from the camera to sound sources on the map no longer match the flat layout the player sees, which makes the default audio attenuation inaccurate.

The solution is a simple projection system built from Unity game objects, scripts, and Wwise RTPCs, which establishes a projected X, Y, and Z coordinate system used specifically for audio. We move the audio listener from its default position on the main camera to the converted position that accounts for the map's rotation. This approach works directly with the default Wwise audio attenuation. In addition, the custom coordinate system lets us handle special cases that require manual adjustment of the attenuation range through Wwise RTPCs.
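The conversion math is not spelled out here, so the following is only one possible sketch. It assumes the map is tilted about the world X axis by a known angle around a pivot point (both hypothetical parameters) and expresses a world-space position in the map's untilted frame by applying the inverse of the tilt rotation. It uses .NET's System.Numerics types instead of Unity's so it runs outside the engine; in Unity, Transform.InverseTransformPoint on the map's transform performs a similar conversion (and also accounts for scale).

```csharp
using System;
using System.Numerics;

// Hypothetical helper (assumption, not the project's code): converts a
// world-space point into the frame of a map tilted about the world X axis.
public static class TiltProjection
{
    // System.Numerics works in radians.
    static float ToRadians(float degrees) => degrees * (float)Math.PI / 180f;

    // Undo the map's tilt around mapPivot so the returned X/Y/Z follow the
    // map's own axes rather than the world axes.
    public static Vector3 ToMapSpace(Vector3 worldPoint, Vector3 mapPivot, float tiltDegrees)
    {
        Quaternion tilt = Quaternion.CreateFromAxisAngle(Vector3.UnitX, ToRadians(tiltDegrees));
        Quaternion undoTilt = Quaternion.Inverse(tilt);
        return Vector3.Transform(worldPoint - mapPivot, undoTilt);
    }
}
```

For example, with a 90-degree tilt, a point one unit along world Z from the pivot maps to one unit along the map's Y axis. A real setup would take the tilt from the map's actual transform rather than a hard-coded angle.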

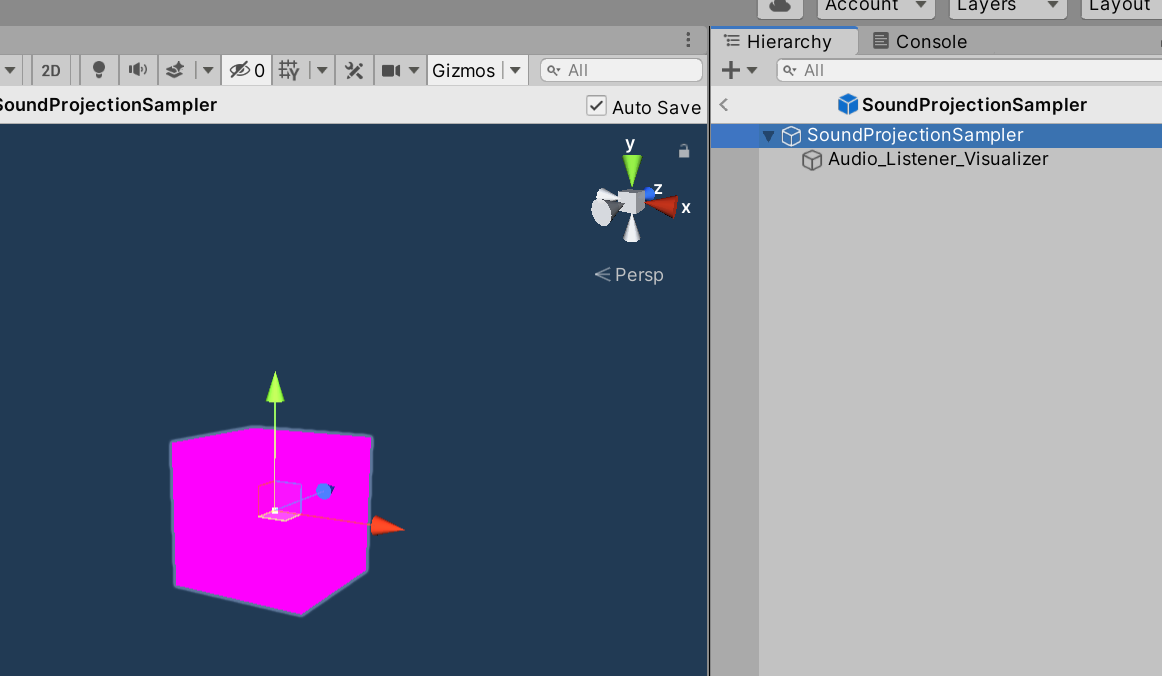

To achieve this, we first set up the projected location, shown as the magenta cube in the video below, as a prefab game object. This game object should be attachable to any camera at a fixed offset distance from the main camera. Making it a prefab is essential for our project because the game has multiple camera setups, so we need to be able to install the projection system as a child of any camera's game object, or switch it between cameras, at runtime. Note that this step may differ from project to project.
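To illustrate why a fixed-offset child follows whichever camera it is attached to, here is a minimal sketch, again using System.Numerics in place of Unity types. The child's world position is the camera's position plus the local offset rotated by the camera's orientation (scale is ignored). The names FixedOffsetFollower and WorldPosition are hypothetical; in Unity itself, reparenting with Transform.SetParent and setting localPosition gives the same result without computing it by hand.

```csharp
using System.Numerics;

// Hypothetical sketch: where a child object with a fixed local offset ends up
// in world space when parented to a camera (scale ignored).
public static class FixedOffsetFollower
{
    public static Vector3 WorldPosition(Vector3 cameraPosition, Quaternion cameraRotation, Vector3 localOffset)
    {
        // Rotate the offset into the camera's orientation, then translate it
        // by the camera's position.
        return cameraPosition + Vector3.Transform(localOffset, cameraRotation);
    }
}
```

Because only the parent changes when the prefab is moved to another camera, the sampler keeps the same offset relative to whichever camera is active.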

After setting up the prefab game object for the projected location, the next step is to add the AkAudioListener.cs script, which ships with the Wwise Unity Integration, to the prefab's parent game object. This designates it as the game's audio listener. We then remove the default audio listener from the MainCamera game object.

The image above shows the prefab setup for the special audio listener game object used in the sound projection system; we named it SoundProjectSampler.

The video above demonstrates how the map looks from the camera view, which is the source of the problem, and showcases the final working system in the engine.

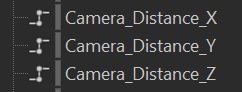

In Wwise, we set up RTPCs to receive the coordinate data from Unity, which lets us apply the sound modifications we want. The appropriate range for each RTPC depends on the unit size of the map in each game; in our case, we set the range from 0 to 100.

On the camera object, we attach a script and make these RTPC calls inside its LateUpdate() function. Update() would also work, but Unity calls LateUpdate() only after every Update() in the frame has run, so any camera movement for that frame has already been applied when we read the sampler's position. This ordering keeps the audio coordinates in sync with the visuals.

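```csharp
// CameraDistance_X/Y/Z hold the RTPC names (or IDs) and soundProjectionSampler
// references the SoundProjectSampler object; both are defined elsewhere in the script.
void LateUpdate()
{
    Vector3 samplerPosition = soundProjectionSampler.transform.position;
    AkSoundEngine.SetRTPCValue(CameraDistance_X, Normalization(samplerPosition.x, 0.0f, 100.0f));
    AkSoundEngine.SetRTPCValue(CameraDistance_Y, Normalization(samplerPosition.y, 0.0f, 100.0f));
    AkSoundEngine.SetRTPCValue(CameraDistance_Z, Normalization(samplerPosition.z, 0.0f, 100.0f));
}
```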

Note that the snippet above uses a helper function, Normalization(), which converts a float value within a given minimum and maximum range to the range 0 to 1. We highly recommend having a helper like this, since it gives every RTPC we work with the same unified range. It simply follows the standard normalization formula:

Normalized Value = (Value To Be Normalized - Lower Bound) / (Upper Bound - Lower Bound)

Implementations of this formula are easy to find in many programming languages, including C# and C++, so we will skip that part here.
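For reference, a minimal C# sketch of such a helper might look like the following. The containing class name AudioMath is hypothetical, and clamping the result to 0 to 1 is an added design choice (not part of the formula above) so that positions outside the expected map range never push the RTPC value out of range.

```csharp
using System;

// Hypothetical container class for the Normalization() helper.
public static class AudioMath
{
    // Maps value from [lowerBound, upperBound] to [0, 1] using
    // (value - lowerBound) / (upperBound - lowerBound), then clamps the result.
    public static float Normalization(float value, float lowerBound, float upperBound)
    {
        if (upperBound == lowerBound)
        {
            throw new ArgumentException("upperBound must differ from lowerBound.");
        }

        float normalized = (value - lowerBound) / (upperBound - lowerBound);

        // Clamping is an assumption: keeps out-of-range positions at 0 or 1.
        return Math.Max(0f, Math.Min(1f, normalized));
    }
}
```

With the 0 to 100 range used in the RTPC calls, a sampler at x = 25 yields 0.25, and a sampler at x = 150 is clamped to 1.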

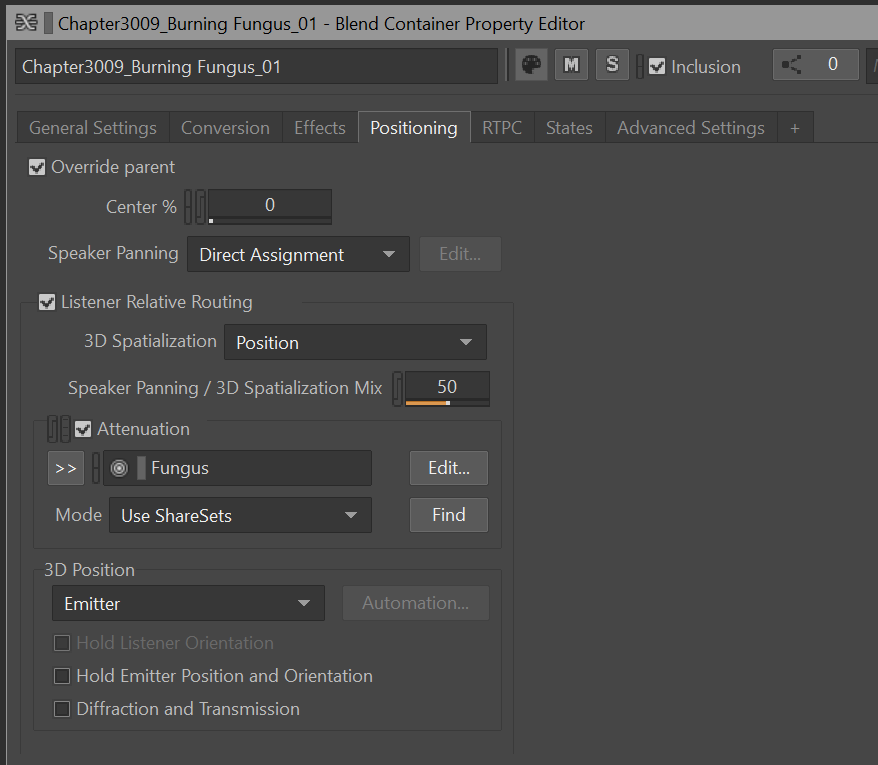

With these RTPCs hooked up, we get accurate audio results from the default Wwise attenuation system. We also have the flexibility to fine-tune each sound by applying the X, Y, and Z coordinate RTPCs directly to the audio sources themselves. This allows sound designers to set up their own attenuation, or to layer it on top of the default attenuation system.

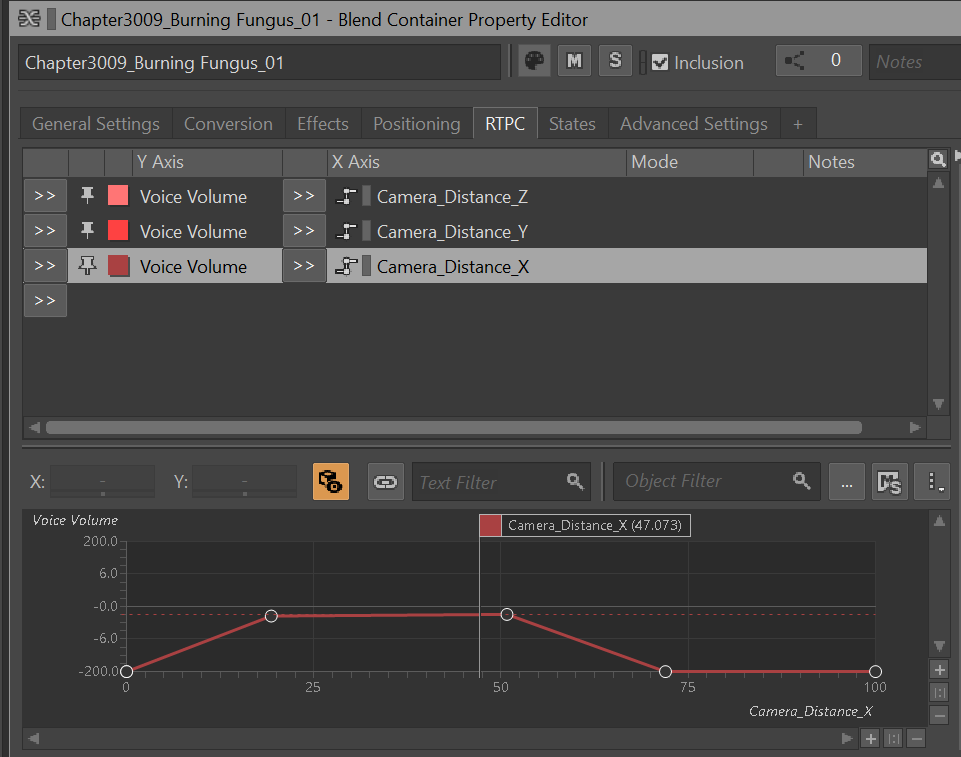

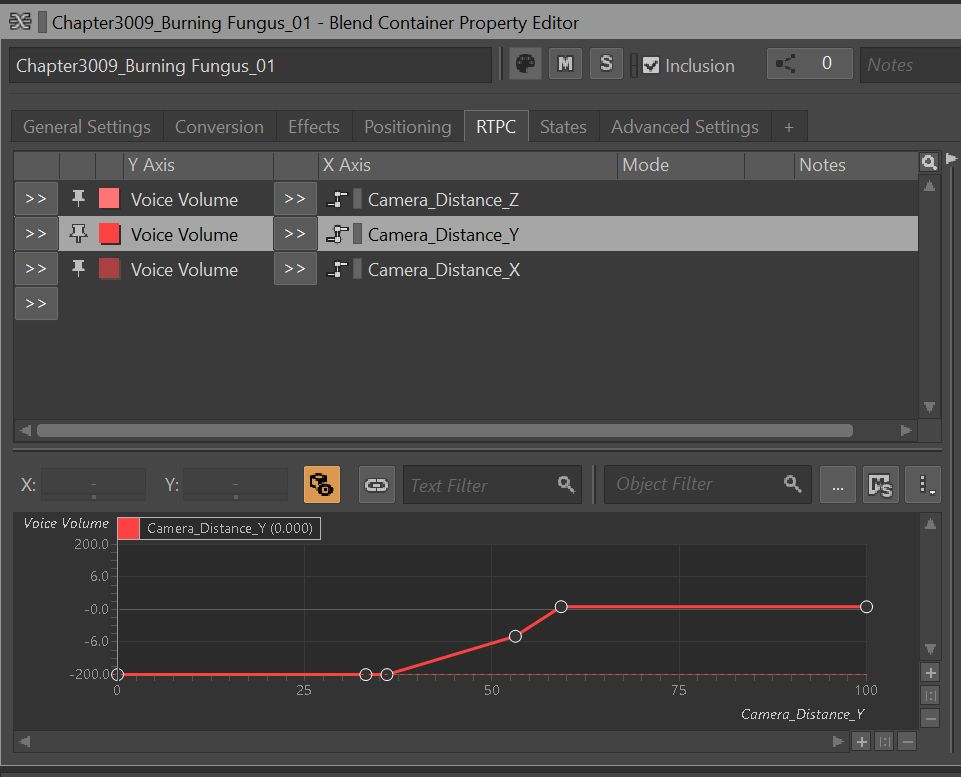

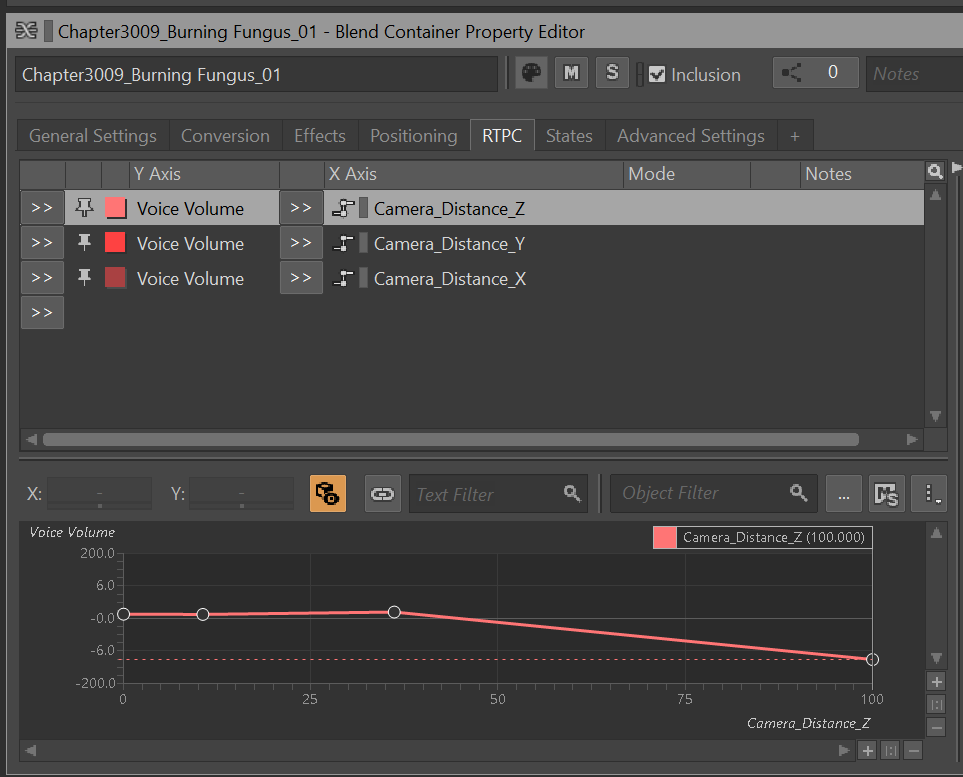

The example demonstrates how the attenuation of the fungus sound is tuned when the fungi are ignited by grenades in the 3009 gameplay level. The fungus sound is first set up as a 3D sound using Wwise's default attenuation settings. On top of that, the attenuation is also driven by the projected coordinates we created, which allows further fine-tuning based on the precise game map units.

In addition to working with existing attenuation profiles, the X, Y, and Z coordinate RTPCs can also create attenuation effects on their own for game-object-scoped sounds. Sound designers can fine-tune a sound along each axis of the map, making precise adjustments that produce accurate acoustic results at unusual map angles such as those found in Reverse Collapse.

This flexibility empowers sound designers to craft immersive audio experiences that complement the game's visuals and enhance the overall gameplay atmosphere.
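In Wwise, per-axis attenuation like this is authored as RTPC curves rather than written in code. Purely to illustrate the idea, the sketch below evaluates a hypothetical piecewise-linear curve that maps a normalized axis coordinate (0 to 1) to a volume offset in dB. The class name, method name, and curve points are assumptions; Wwise curves also support non-linear segment shapes, and this is not Wwise's implementation.

```csharp
using System;

// Hypothetical illustration of a linear RTPC-style curve: normalized axis
// coordinate (x) in, volume offset in dB out. Not Wwise's implementation.
public static class AxisAttenuationCurve
{
    // points must be sorted by x in ascending order.
    public static float Evaluate((float x, float db)[] points, float x)
    {
        if (points == null || points.Length == 0)
        {
            throw new ArgumentException("At least one curve point is required.");
        }

        int last = points.Length - 1;

        // Hold the end values outside the curve's range.
        if (x <= points[0].x) return points[0].db;
        if (x >= points[last].x) return points[last].db;

        // Find the segment containing x and interpolate linearly within it.
        for (int i = 1; i <= last; i++)
        {
            if (x <= points[i].x)
            {
                var (x0, y0) = points[i - 1];
                var (x1, y1) = points[i];
                float t = (x - x0) / (x1 - x0);
                return y0 + t * (y1 - y0);
            }
        }

        return points[last].db; // Not reached for sorted input.
    }
}
```

With points (0, -24 dB), (0.5, 0 dB), and (1, -24 dB) on one axis, a sound is loudest when the listener sits at the middle of that axis and drops to -12 dB at 0.25 or 0.75.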

Disclaimer: The code snippets in this article are reconstructed generic versions intended solely for illustrative purposes. The underlying logic has been verified to work correctly; however, project-specific API calls and functions have been omitted from the examples due to potential copyright restrictions.
