Hello, I’m Carlo, a music composer and sound designer for video games. Today, we’ll dive into using Wwise for an interactive live performance piece.

I recently had the chance to work alongside performer Tiziana Contino on a project called “Classic Dark Words”. The piece is part of a larger project called "Typo Wo-man", which translates gestures associated with a body into letters that become a sort of “bodily alphabet”, which is then interpreted as a performative act/dance, to tell a story that only the body can express.

This alphabet was created entirely with the performer’s body, which became a typographic sign of expression, a recording of the moods of a body. The alphabet then became a glossary of terms that were translated to an app, developed alongside the performance, through which participants could contribute. Using the app, the audience could interact by building narrations that Tiziana Contino performed with her body, making the performance unique each time. I provided the links between the visual and auditory contexts in real time, through my music and Wwise. Both of us interacted with the words written by the audience through a tablet that displayed the content of the app on the computer. Here’s a video showcasing an example of a performance:

Behind the Music for Classic Dark Words

I created the musical performance for Classic Dark Words with the help of the glossary developed by Tiziana Contino. I found a relationship between the planets and some other astronomical objects, such as the Sun and the Moon. I searched through various esoteric books and found a correspondence between these astronomical objects and the modes of the major scale (Ionian, Dorian, Phrygian, Lydian, Mixolydian, Aeolian and Locrian).

I then asked myself,

“How can I change modes during the performance while I play the keyboard and shape synth sounds?”

I found the answer in Wwise.

But first, let's talk a little about music theory so that we can understand the metaphorical relationship between these modes and astronomical objects.

A Little Music Theory

In music theory, the scale degree corresponds to the position of a specific note in the scale, relative to the root of the scale. As an example, let’s take the C major scale (C - D - E - F - G - A - B). Every note in the scale has its function, and this function does not change if the root changes. So we can find:

C: Tonic

D: Supertonic

E: Mediant

F: Subdominant

G: Dominant

A: Submediant

B: Leading Tone (called the Subtonic when it sits a whole step below the tonic)

A major scale’s defining quality, on the other hand, is its pattern of Whole (W) and Half (H) steps. For example, for the C major scale, the pattern is as follows: W-W-H-W-W-W-H.

This pattern of whole and half steps determines the mode of a scale (in this case, Ionian). If we keep the same notes but change the root to F (F - G - A - B - C - D - E), the order of whole and half steps becomes W-W-W-H-W-W-H. Note that we didn’t change B to Bb, as we would for an F major scale. This is important because the B natural is the characteristic note here that determines the mode (in this case, Lydian).
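To make the white-key idea concrete, here is a minimal Python sketch (not part of the original project; the function names are my own placeholders) that starts the same seven notes from a different root and reads off the resulting pattern of whole and half steps:

```python
# Pitch classes (semitones above C) of the seven white keys.
WHITE_KEYS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
NOTE_ORDER = ["C", "D", "E", "F", "G", "A", "B"]


def white_key_scale(root):
    """Return the seven white-key notes, starting from `root`."""
    start = NOTE_ORDER.index(root)
    return NOTE_ORDER[start:] + NOTE_ORDER[:start]


def step_pattern(notes):
    """Return the W/H pattern of a scale, including the step back to the octave."""
    steps = []
    for current, following in zip(notes, notes[1:] + notes[:1]):
        semitones = (WHITE_KEYS[following] - WHITE_KEYS[current]) % 12
        steps.append("W" if semitones == 2 else "H")
    return "-".join(steps)


for root in ("C", "F"):
    scale = white_key_scale(root)
    print(root, " ".join(scale), step_pattern(scale))
```

Starting from C gives the Ionian pattern and starting from F gives the Lydian one, without touching a single black key.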

Isn’t that simple? All we have to do is change the order of the whole and half steps, and this changes our universe; not only in the musical sense, but also in terms of the perception and emotion of the music. Every mode has its flavor, sensation, and emotion:

Ionian (W-W-H-W-W-W-H): very positive, joyful, celebratory

Dorian (W-H-W-W-W-H-W): ancient, meditative, mellow

Phrygian (H-W-W-W-H-W-W): mysterious, exotic, mystic

Lydian (W-W-W-H-W-W-H): surreal, ethereal, floating

Mixolydian (W-W-H-W-W-H-W): energetic, optimistic

Aeolian (W-H-W-W-H-W-W): soulful, quiet

Locrian (H-W-W-H-W-W-W): chaotic, tense, hypnotic
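Each pattern in this list is simply the Ionian pattern started from a different position. As a small illustration (a sketch with helper names of my own choosing, not something used in the performance), the rotation and the resulting semitone offsets above the root can be computed like this:

```python
IONIAN = ["W", "W", "H", "W", "W", "W", "H"]
MODE_NAMES = ["Ionian", "Dorian", "Phrygian", "Lydian",
              "Mixolydian", "Aeolian", "Locrian"]


def mode_pattern(name):
    """Rotate the Ionian pattern so that it starts on the mode's degree."""
    degree = MODE_NAMES.index(name)
    return IONIAN[degree:] + IONIAN[:degree]


def mode_semitones(name):
    """Semitone offsets of the seven scale degrees above the root."""
    offsets, total = [0], 0
    for step in mode_pattern(name)[:-1]:  # the last step only returns to the octave
        total += 2 if step == "W" else 1
        offsets.append(total)
    return offsets


for name in MODE_NAMES:
    print(f"{name:<11}{'-'.join(mode_pattern(name))}  {mode_semitones(name)}")
```

The offsets make the characteristic notes easy to spot: Lydian is the only mode with a 6 (the raised fourth), and Locrian is the only one with both a 1 and a 6 (the lowered second and fifth).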

Let’s take a step back to the astronomical objects and discuss the relationship between them and the various modes.

Musical associations with astronomical objects

According to Roman mythology, each planet or astronomical object possessed a unique energy or quality. For example, Jupiter was always associated with joy, optimism and expansion. So why not create music in Mixolydian, with its energetic and optimistic sound? This is the link that I was looking for!

Indeed, I ended up composing seven tracks for Classic Dark Words:

Ionian -> Sun -> Heal, title Healing Sun

Dorian -> Saturn -> Ancient, title Ancient Saturn

Phrygian -> Venus -> Mystic, title Mystic Venus

Lydian -> Mars -> Fast, title Fast Mars

Mixolydian -> Jupiter -> Growing, title Growing Jupiter

Aeolian -> Moon -> Quiet, title Quiet Moon

Locrian -> Mercury -> Worlds, title Worlds of Mercury
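If you wanted to keep this mapping next to a project as data, one possible way (purely illustrative; the `Track` type and its field names are assumptions of mine) is a small lookup table:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    mode: str      # major scale mode
    body: str      # associated astronomical object
    keyword: str   # mood keyword from the list above
    title: str     # track title


TRACKS = [
    Track("Ionian", "Sun", "Heal", "Healing Sun"),
    Track("Dorian", "Saturn", "Ancient", "Ancient Saturn"),
    Track("Phrygian", "Venus", "Mystic", "Mystic Venus"),
    Track("Lydian", "Mars", "Fast", "Fast Mars"),
    Track("Mixolydian", "Jupiter", "Growing", "Growing Jupiter"),
    Track("Aeolian", "Moon", "Quiet", "Quiet Moon"),
    Track("Locrian", "Mercury", "Worlds", "Worlds of Mercury"),
]

# Index the tracks by mode so a mode name leads straight to its track.
TRACK_BY_MODE = {track.mode: track for track in TRACKS}

print(TRACK_BY_MODE["Mixolydian"].title)
```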

Here's a YouTube Playlist for the seven tracks:

Composing the music & Wwise implementation

Composing seven tracks isn’t so hard, especially when you have a lot of time. But in this case, considering that I decided to use Wwise to manipulate the modes/moods in real time, I found myself simply composing eight bars for each track.

I was planning to use a common video game music composition technique called vertical layering.

Vertically layered compositions consist of a single track with multiple intentions. For example, our character is in the forest, and the exploration music is playing… Imagine, only pads and a few string chords… but what the player doesn’t know is that there are several tracks of percussion on mute, just waiting to be layered in for when combat begins.

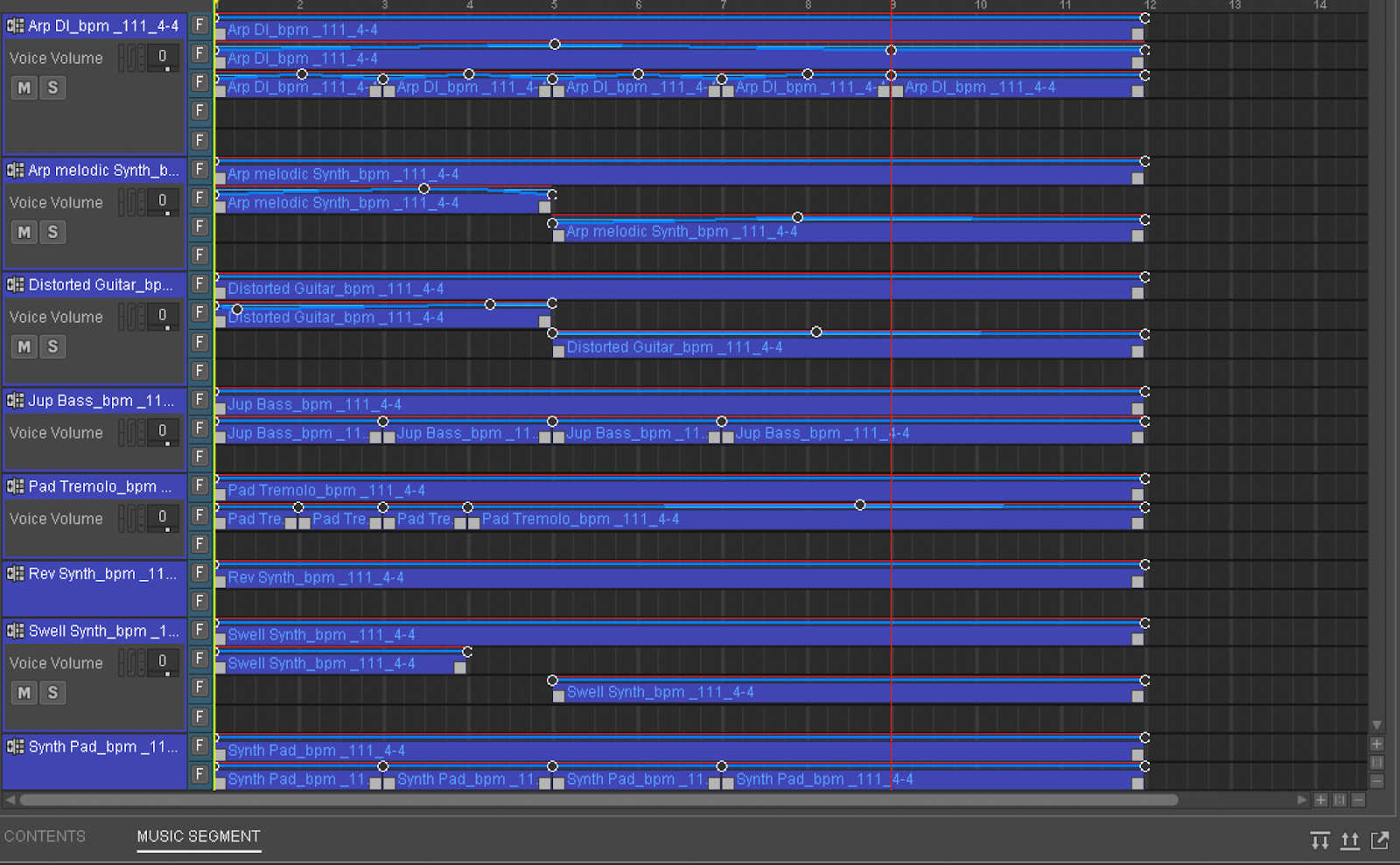

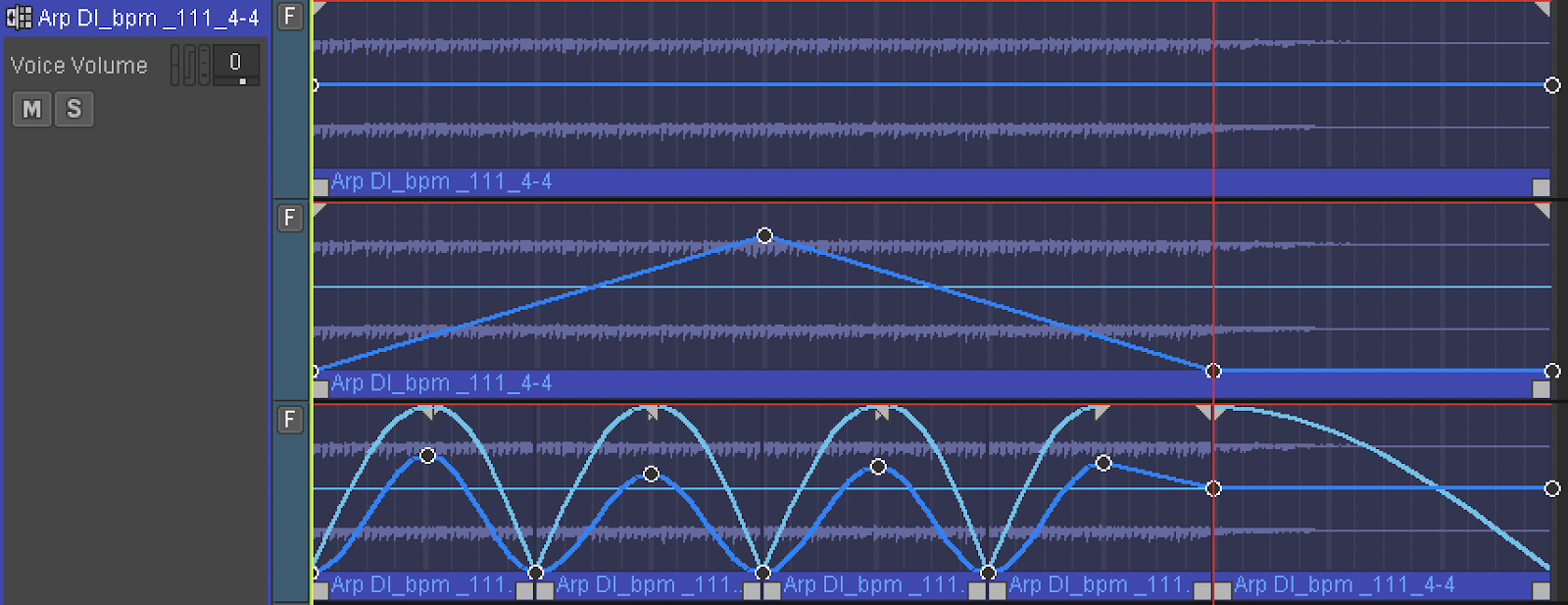

When our character meets an enemy, depending on how we set up vertical layering (Switch, RTPC), the combat music layer fades in and we can listen to the exploration music and the tribal percussion reinforcing the battle. This technique was exactly what I needed for the performance. Since I wasn’t able to handle changing Switch groups or modulating RTPCs (in truth, I decided to use RTPCs for something else that I’ll explain later), I chose to give Wwise a little bit of randomness with a layered approach using sub-tracks.
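To picture the general idea of RTPC-driven vertical layering, here is a toy Python model. It is only a sketch of the concept, not Wwise code: the layer names, the 0-100 "intensity" parameter, and the fade thresholds are all made-up values for the forest example.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    name: str
    fade_start: float  # intensity at which the layer starts to fade in
    fade_end: float    # intensity at which the layer reaches full volume

    def gain(self, intensity):
        """Linear gain (0.0 to 1.0) of this layer for a given intensity."""
        if intensity >= self.fade_end:
            return 1.0
        if intensity <= self.fade_start:
            return 0.0
        return (intensity - self.fade_start) / (self.fade_end - self.fade_start)


# Hypothetical layers: exploration layers always play, percussion waits for combat.
LAYERS = [
    Layer("pads", 0, 0),
    Layer("strings", 0, 0),
    Layer("percussion", 40, 60),
]


def mix(intensity):
    """Return the gain of every layer for one intensity value."""
    return {layer.name: layer.gain(intensity) for layer in LAYERS}


for intensity in (0, 50, 100):
    print(intensity, mix(intensity))
```

All layers run in sync the whole time; only their gains change, which is what keeps the combat percussion locked to the exploration music when it fades in.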

The Music Segment sub-tracks are amazing tools to use to create variation in a single 30-second piece.

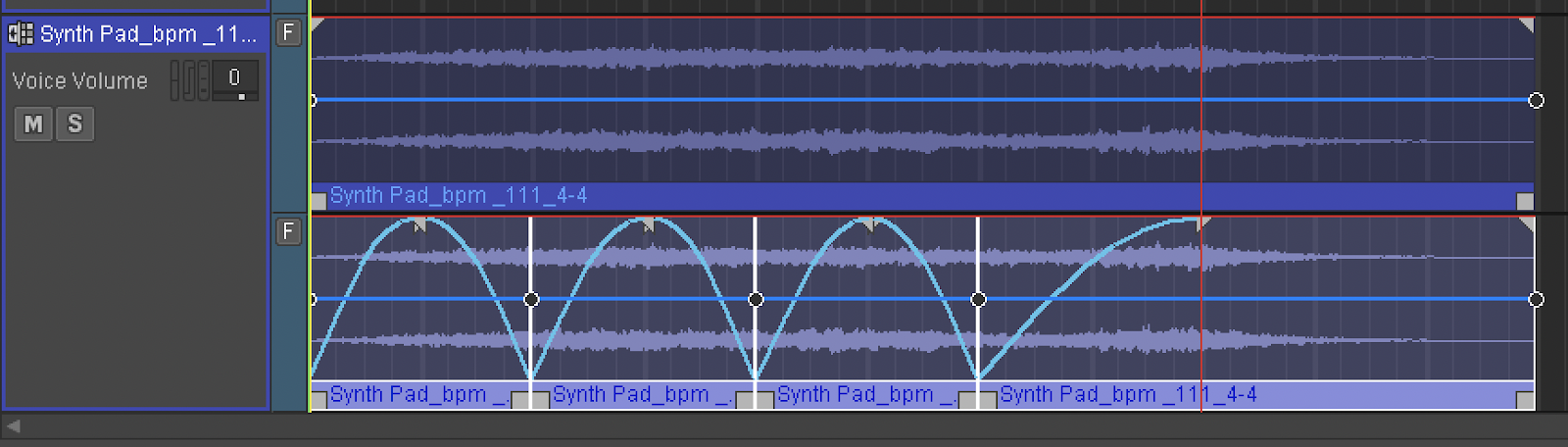

In particular, there’s one track that is “always on”, meaning that it’s constantly playing. This synth track is present in all the compositions as a glue for the base sound; it’s the base structure of the chords that determines the mode. I used the same track in one sub-track because it was exported with its dynamics. In another, I used Wwise to create a fade-in/out.

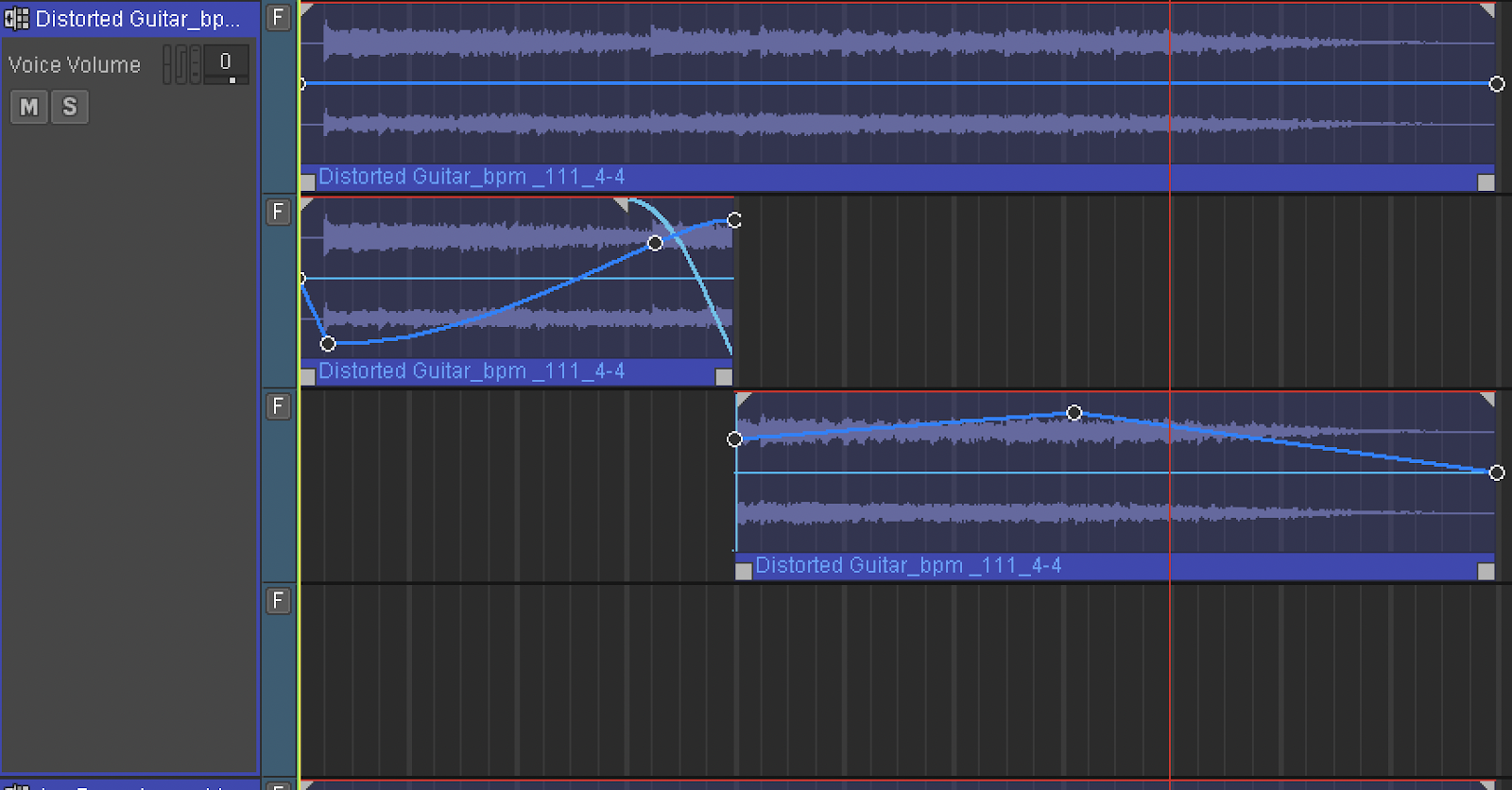

In other cases, I used automation for the volume and low-pass/high-pass filters too. I duplicated the same track and split it into two equal halves. In the first split track, you can hear the guitar in the first four measures; in the second, you can hear it in the last four measures.

For my purposes, I didn’t use Music Segment sequence-tracks because I wanted it to be really random with less repetition.
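The difference between the two approaches can be sketched in a few lines of Python. This is a simplified model under my own assumptions (one sub-track chosen per track each time the segment loops; the track names are placeholders, and the sub-track descriptions are taken from the text above), not a description of Wwise internals:

```python
import random

# Sub-track descriptions taken from the text; the track names are placeholders.
SUB_TRACKS = {
    "synth_base": ["exported with its own dynamics", "fade-in/out drawn in Wwise"],
    "guitar": ["plays in the first four bars", "plays in the last four bars"],
}


def pick_random_step(tracks, rng):
    """Pick one sub-track per track, independently and at random."""
    return {name: rng.choice(options) for name, options in tracks.items()}


def pick_sequence_step(tracks, loop_index):
    """Pick sub-tracks in a fixed order, which repeats every few loops."""
    return {name: options[loop_index % len(options)]
            for name, options in tracks.items()}


rng = random.Random()  # pass a seed here for a reproducible run
for loop_index in range(4):
    print(loop_index, pick_random_step(SUB_TRACKS, rng))
```

With a fixed order, the same combinations come back on a predictable cycle; with random picks, every loop can pair the sub-tracks differently, which is the kind of variation I was after.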

It’s important to use the same audio clip while changing parameters to make the clip sound different.

After setting up all the Music Segment sub-tracks in all modes, I then moved on to creating a Music Playlist Container to make the Music Segment infinitely loopable.

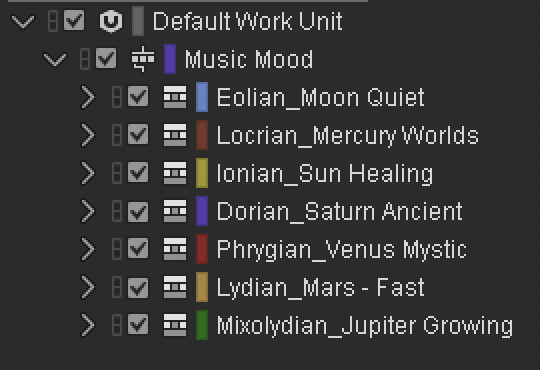

I also did another thing that I highly recommend: color coding everything!

I’ll give you two reasons for this piece of advice:

- In a large project, color coding is essential for organization and productivity.

- If you work in a team, especially in the video game industry, you probably work with other composers and/or sound designers. Having the same color coding helps you out when merging work units from multiple contributors.

In this case, I color coded my Music Playlist Container for a first impression of the mood.

As you can see, after I put all of the Music Playlist Containers inside a Music Switch Container, I needed something that would make it possible to change the playlist with a simple click. For the transition, I used States in Wwise. In a video game, States describe conditions that depend on gameplay, such as the player character being “Alive”, “Dead”, “Victorious”, or “Defeated”, and these game states can be used to change the music.

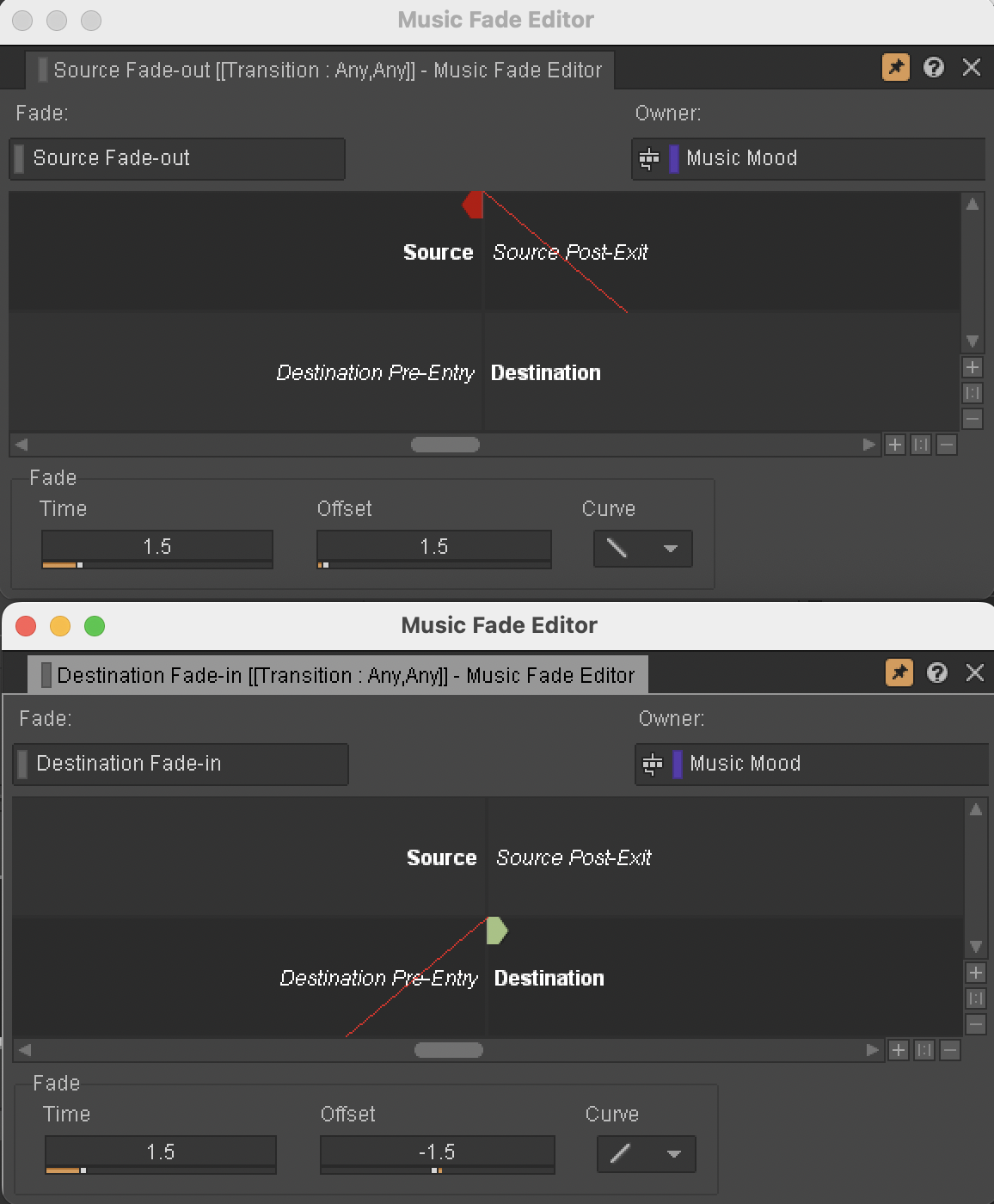

For this project, I created States for every mode. This allowed me to smoothly transition between Music Segments in the Music Playlist Container. When looking at the image below, notice that I didn’t use a unique rule for transitions; instead, the (default) “Any to Any” transition has been set to Exit Source at Next Bar along with the fade-out/in timing.
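To get a feel for what exiting at the next bar means in time, the wait between a State change and the actual switch can be estimated with simple arithmetic. The sketch below is illustrative only: the tempo and time signature are placeholder values (the ones used in the project are not stated here), and it assumes that a change landing exactly on a bar line waits for the following one.

```python
import math


def seconds_until_next_bar(position_s, tempo_bpm, beats_per_bar=4):
    """Time left until the next bar line, for a playback position in seconds."""
    bar_s = beats_per_bar * 60.0 / tempo_bpm      # duration of one bar
    bars_elapsed = math.floor(position_s / bar_s)  # full bars already played
    return (bars_elapsed + 1) * bar_s - position_s


# Placeholder values: 120 BPM in 4/4 gives bars that last 2 seconds.
for position in (0.5, 5.3, 7.9):
    wait = seconds_until_next_bar(position, tempo_bpm=120)
    print(f"State changed at {position:.1f}s -> transition in {wait:.2f}s")
```

In other words, the change is never instant, but it never takes longer than one bar either, which keeps the new mode entering on a musically sensible boundary.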

This is why my music performance was focused on playing transitions, creating synths during the performance and elaborating on the words surfaced through the app by people participating in the event to contribute to the music, mood, modes and expressions.

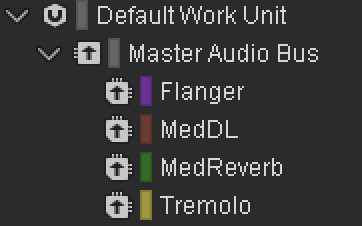

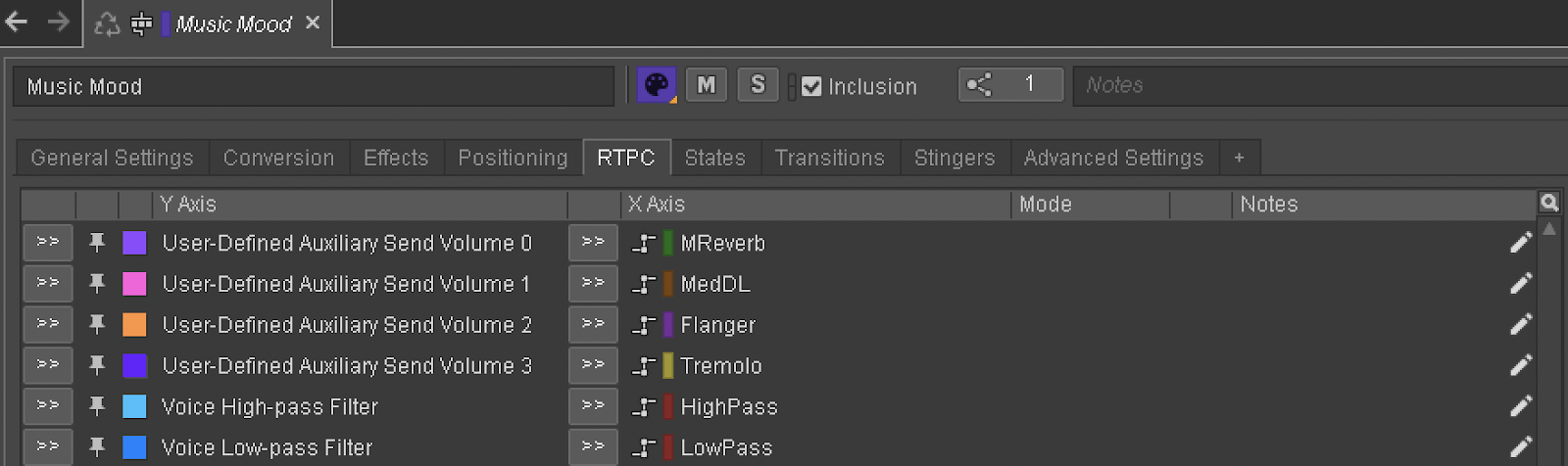

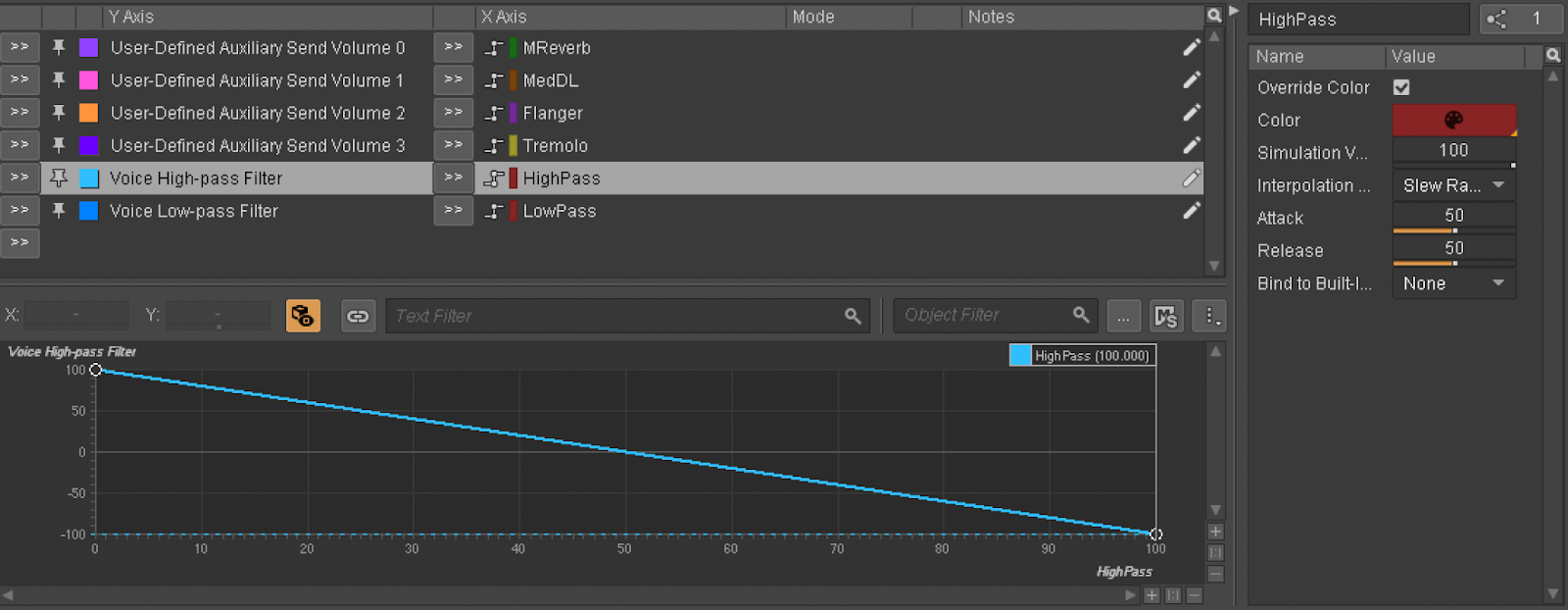

To have more creative control of the audio performance, I used RTPCs (Real-Time Parameter Controls) to change the volume of the reverb, delay, flanger, and tremolo. I also included a high-pass and low-pass control. At one point I had to stop putting in more FX because it’s so easy to go overboard with this stuff. I also thought about manipulating the pitch, but decided it didn’t make sense at that moment for the purpose of the entire performance.

After I created the Auxiliary Bus for each FX, I used the RTPCs linked to the Music Playlist Container, as you can see in the image below.

In order to avoid the effect jumping straight to the target value, while allowing for more control of the high/low-pass filters (and in general for all FX), I preferred to have the Game Parameter Interpolation set to Slew Rate with an Attack and Release set to 50. This created a smooth transition between values when the RTPC changed.
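The effect of a slew rate can be modeled in a few lines. This is my own simplified sketch, assuming that Attack and Release are maximum rates of change in units per second (Attack when the value rises, Release when it falls); it is not taken from the Wwise implementation.

```python
def slew_step(current, target, attack, release, dt):
    """Move `current` toward `target`, limited to a maximum rate per second."""
    if target > current:
        return min(target, current + attack * dt)
    return max(target, current - release * dt)


def simulate(start, target, attack=50.0, release=50.0, dt=0.5, steps=5):
    """Return the interpolated values after each time step of `dt` seconds."""
    values, current = [], start
    for _ in range(steps):
        current = slew_step(current, target, attack, release, dt)
        values.append(current)
    return values


# Jumping the parameter from 0 to 100 takes two seconds at 50 units per second.
print(simulate(0.0, 100.0))
print(simulate(100.0, 0.0, steps=2))
```

Under these assumptions, a sudden mouse movement from 0 to 100 becomes a two-second sweep instead of an abrupt jump.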

Game Parameters for high-pass and low-pass were created with a range of 0-100 to use when simulating these filters, like a send value for the mix.

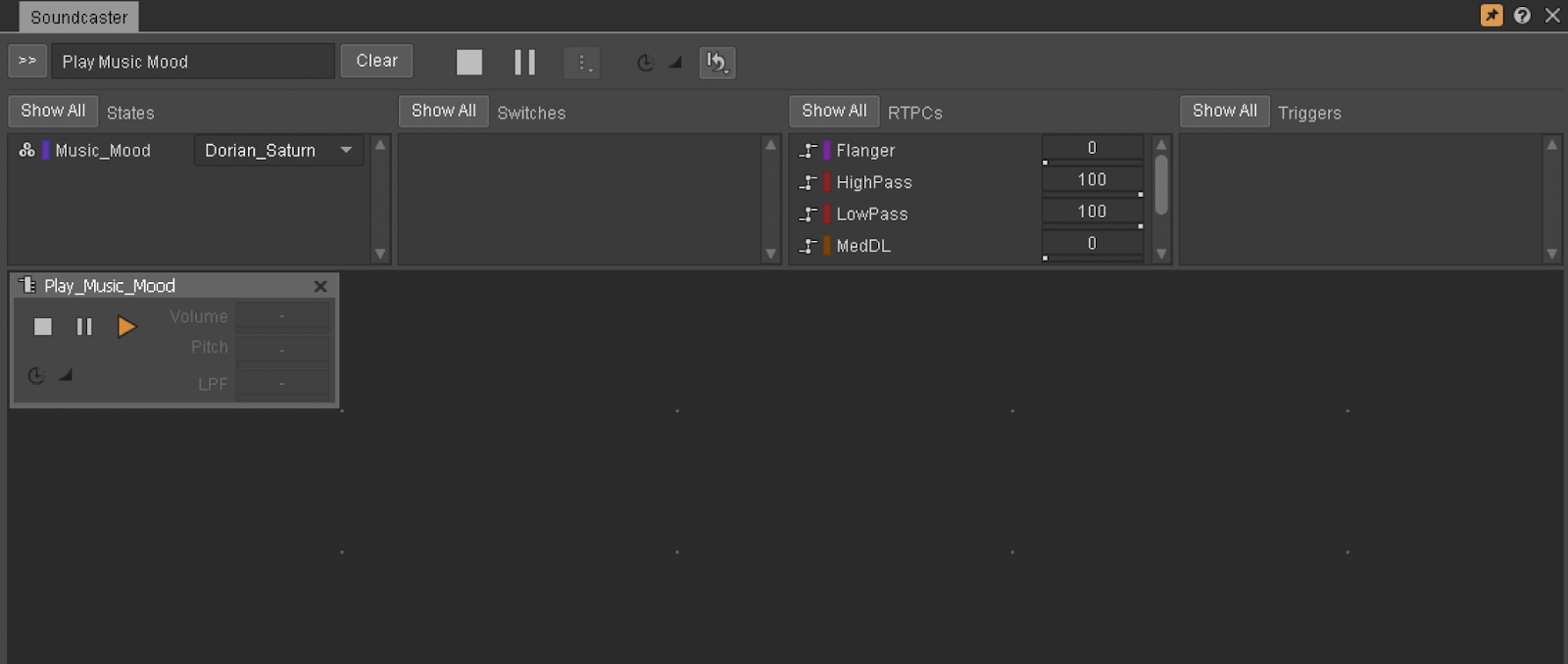

Then came testing all of that in the Soundcaster session.

The Soundcaster session was useful not only for pre-performance testing but also as my main music control system during the performance. I was able to start the “Play_Music_Mood” Event, which references the Music Playlist Container, and change the States to switch between modes. Additionally, I could change different RTPC values with my mouse. All of these changes happened in real time, while Tiziana was performing, and while I was simultaneously playing the MIDI Keyboard.

One important thing that I want to point out is how the randomness of the Music Segment sub-tracks helped me during the performance. In one case, for example, when the word “Anxiety” was written on the app, I naturally changed States to play the Locrian mode as soon as possible. I also added a low-pass filter using an RTPC and, to my surprise, the drum ended up sounding like a heartbeat. The heartbeat was irregular, since the selected sub-track was one that I had edited before, which just added to the feeling of anxiety. This is the magic of randomness combined with creativity!

Conclusions and Next Steps

After reading this, I hope you’ll be inspired to use Wwise in other creative and uncommon ways! Of course, this was just a small illustration of a large project that can be very adaptive to performance. I’m already thinking of adding various transition segments between modes, more adaptive elements, expanding the Music Playlist Container, and more for future performances!

If you want to listen to the music, check out the Spotify version. While it’s not as adaptive as the original live performance, it’s still an immersive version of my interpretation of “Classic Dark Words”. The linear form of the music used in this performance is here: https://open.spotify.com/intl-it/album/2GDOP0O3EapUtSwc4rXIzb?si=x9mG1MdBQ7eRmziGDgB0xQ
