Introduction

My name is Máté Moldován: sound designer, composer, audio implementer, and production manager for video games. In this article I share my findings and technical implementation tips to give game audio professionals a summary of the currently available resources on making games accessible for blind players through dynamic audio implementation. The same techniques can make games more interesting for all players by communicating the gameplay experience through clear, logical design using Audiokinetic’s Wwise.

As this topic comes up more and more often in game development articles, this article breaks down the individual components of audio in games that can enhance accessibility. By the end, you should have a deeper understanding of the types of sound and audio behavior systems necessary to include in a game to improve the gaming experience of visually-impaired (VI) and blind players. You will also gather some tricks and tips on how to achieve this in Wwise.

All information conveyed in this document is an altered / extended version of my article previously published on A Sound Effect, which you can find here: https://www.asoundeffect.com/game-audio-blind-accessibility/

Why Should You Care About Blind Accessibility?

Video games have always given people an escape from everyday struggles. Players can relax, improve their cognitive and problem-solving skills, find inspiration, and even become motivated to perform task after task when playing a game. Today’s game stores have all kinds of genres available to meet everyone’s needs: action, adventure, action-adventure, role playing (RPG), action RPG, simulation, strategy, puzzle, sports, and the list goes on and on. It’s basically limitless, and everyone can find their favorite.

The visuals of these new worlds and characters in the latest games are astonishingly realistic. While awesome graphics can be achieved using freely available game engines (e.g. Unreal Engine 4 and 5, Unity, CryEngine), not all gamers can benefit from the realistic visuals.

A blind or visually-impaired person relies less on the visuals and more on the auditory and haptic feedback of their environment. Because games wish to place the player into their own virtual world, for a blind player to be part of it, games need to try different techniques and systems to make the behavior of audio more realistic and helpful, acting as a navigational tool to improve the player’s immersive experience.

Let’s see how this has been done in recent games and how the enhanced audio behaviors improve the overall gameplay, as well as how these systems can be recreated within Wwise.

Sound Categories

Before you create sound assets for the game you’re working on, you will likely have a spotting session (either alone or with the directors/lead developers) to get the vibe of the game. This is a perfect opportunity to specify the different categories of sound the game needs and start thinking about how these categories can serve the gameplay experience of visually-impaired players.

The most common categories are dialogue, sound effects, ambience, and music:

- Dialogues exist mostly to drive the narrative and enhance immersion in role playing. It is advisable to design dialogues so that they won’t be annoying for either sighted or blind players; that way, “accessibility dialogues” can be included in the game by default. Additionally, further guiding dialogue lines can be implemented in the game with an on/off toggle in the game’s menu.

- Sound Effects (and UI) give real-time auditory feedback of user actions, mostly to point out interactable game objects. It should be possible to set these functions with an on/off toggle in the game’s menu.

- Ambience creates a bed for all other in-world sounds to improve immersion with the game world. Well-designed, detailed, and interactive ambiences should be included in the game by default.

- Music supports the narrative and gives a dynamic feel to the game, and improves gameflow and immersion. Well-designed, dramatic or playful but definitely interactive music should be included in the game by default.

These sound categories help us separate the individual tasks of making a game accessible for blind players. To further break down these categories, you will need to define the game mechanics that will be used the most by the player.

Dialogues

Navigation / Traversal

Dialogues in games are generally not designed to be used as an accessibility tool, although in many cases character dialogues can increase the immersion and navigation of a VI player by explaining the situation the player is in. NPCs, loudspeaker announcements, creatures (and many other things) can suggest directions to the player and can be included in the game by default. Also, game states, such as fighting/roaming, can be supported with pre- and post-fight dialogue cues (e.g. "All right, let's do this!" / "Phew, they almost got me there!"). If the states don’t change often, they can be separated with short in-game cinematics (as in The Last of Us Part 2) that set the scene with dialogue. The timing and volume of these state change indicator voice lines are crucial.

Example of a bad implementation:

I have played games that used this technique. However, they also kept the ‘player character’s thoughts’ audible during fighting states, where they were very difficult to hear: the fighting state increased the music volume, but the volume of the ‘inner thoughts’ voice lines wasn’t raised with it (not to mention that during a fight, the last thing a player pays attention to is the ‘player character’s inner thoughts’). This implementation error not only made the writers’ work useless, but also made the voice lines counterproductive and the fight state unnecessarily confusing. The error here wasn’t only with the volume implementation, but with the implementation logic itself. Instead of playing the ‘character’s thoughts’ over the fight music, they could be paused using the Pause command in Wwise’s Event Editor when the fight music event triggers, and then resumed using the Resume command in the Event Editor when the roaming state becomes active again.
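The pause/resume logic above can be sketched on the game side. This is a minimal Python sketch rather than Wwise API code: the `InnerThoughtsController` class, the state names, and the action strings are hypothetical stand-ins for whatever would post the Pause and Resume Events in a real integration.

```python
class InnerThoughtsController:
    """Decides when the inner-thoughts voice line should be paused or resumed."""

    def __init__(self):
        self.paused = False
        self.actions = []  # log of actions a real game would forward to the audio engine

    def on_state_changed(self, new_state):
        # Pause once when combat starts, resume once when roaming returns.
        if new_state == "combat" and not self.paused:
            self.paused = True
            self.actions.append("pause_inner_thoughts")
        elif new_state == "roaming" and self.paused:
            self.paused = False
            self.actions.append("resume_inner_thoughts")


controller = InnerThoughtsController()
for state in ["roaming", "combat", "combat", "roaming"]:
    controller.on_state_changed(state)
print(controller.actions)
```

Guarding on the `paused` flag keeps repeated state notifications from posting duplicate pause or resume actions.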

Before the player can move around in the game world, they enter it through the game’s menu, which means that a blind player needs information to navigate both the menu and the game world. Mortal Kombat 11 is a great example of accessible menu navigation, as the game has its own dedicated narrated menu, which makes navigation for a blind player a much simpler task than ever before.

Having a dedicated, easily accessible space for accessibility options in the game’s menu is a huge help for VI players, just like in FIFA 21.

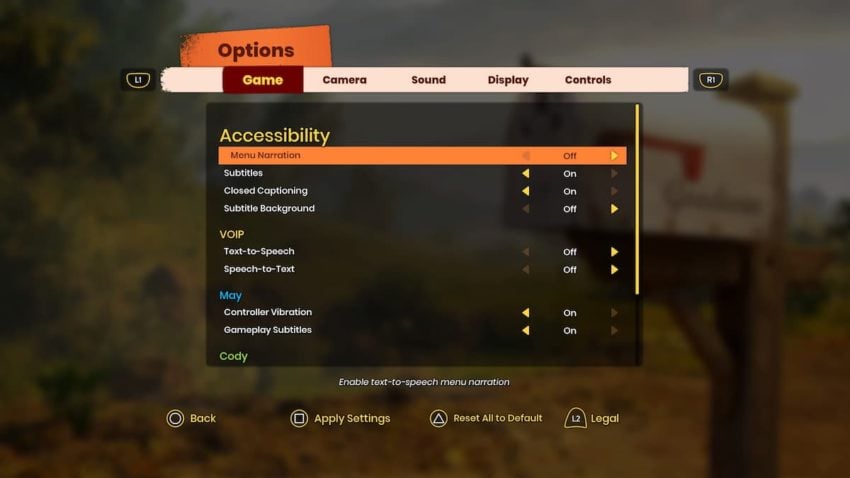

Even better is if the game starts with the accessibility settings when it’s launched for the first time, just like in It Takes Two.

The Accessibility menu pops up when the player launches It Takes Two for the first time

Puzzle / Objective Hints

If the game has puzzles or the player needs to complete objectives to finish the game, these tasks will need smart hints that actually tell the player what needs to be done. Dialogues are a quick way to suggest hints in puzzle levels, as well as in scenes that are not supported with other sounds, or in not-too-obvious situations. The dialogue can be the main character talking in their head, a narrator/storyteller (interactive audio description), a conversation with another in-game character/NPC (the Uncharted series and Red Dead Redemption 2 are both great examples) or a text-to-speech function.

Combat / Quick Reflex Hints

If the core game mechanic is based on shooting and fighting, then additional audible combat/quick reflex hints are needed. Well-timed warning shouts can also be useful in games that have multiple supporting characters (JRPGs, multiplayer shooters, etc.) or a chatty main character (e.g. Spider-Man, Uncharted series). These can also be confusing if the fight scene is already well populated with sound effects and loud music; thus timing, volume, a healthy priority system for your sounds, and a reasonable ducking/sidechaining system must be done right, so the execution sounds as natural as possible.

Further Notes for Dialogue

- When text-to-speech is active in the game, it has to be ducked down by any in-world dialogue. The in-world dialogues are considered a higher priority than the text-to-speech function. You can set the ducking parameters in the Audio Bus Property Editor - Auto-ducking tab.

- UI narration is one of the most important voice aspects that allows blind gamers to play a game. Not only do the menu font characters need to be recognised, but the gameplay UI characters as well. The player needs to know how many bullets they have, how much health they have, their special powers, etc.

- If possible, have a separate dedicated button on the keyboard or controller to toggle text-to-speech on/off, or make all buttons assignable and create an option for text-to-speech toggle.
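The ducking behavior from the first note can be illustrated with a small gain envelope. This is only a sketch of the idea: in Wwise the equivalent values are set on the bus in the authoring tool, and the function name, the -12 dB depth, and the fade times below are illustrative assumptions, not recommended settings.

```python
def tts_duck_db(t, dialogue_start, dialogue_end,
                duck_db=-12.0, fade_out=0.2, fade_in=0.5):
    """Attenuation in dB applied to text-to-speech at time t (seconds).

    Assumes the in-world dialogue line lasts at least fade_out seconds.
    """
    if t <= dialogue_start or t >= dialogue_end + fade_in:
        return 0.0  # no dialogue nearby: text-to-speech at full level
    if t < dialogue_start + fade_out:
        # Ramp down as the dialogue line begins.
        return duck_db * (t - dialogue_start) / fade_out
    if t <= dialogue_end:
        return duck_db  # hold the ducked level while dialogue plays
    # Ramp back up once the dialogue line has ended.
    return duck_db * (1.0 - (t - dialogue_end) / fade_in)


for t in (0.5, 1.1, 2.0, 3.25, 4.0):
    print(t, round(tts_duck_db(t, dialogue_start=1.0, dialogue_end=3.0), 2))
```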

Sound Effects

Navigation / Traversal

Sound effects have a big role in traversal accessibility. Functional audio cues, such as a ledge guard to prevent falling along with audio and vibration feedback, enhanced listening modes to highlight nearby enemies and objectives within a radius of the player, and other useful traversal and combat-related cues can drastically increase the accessibility of any game. These are especially important in navigation assistance, both in-game and in the game’s menu. For menu navigation, speech and UI narration are preferred; however, sound effects can still carry cognitive information, such as a sound for toggling something on or going deeper into the menu (e.g. PlayStation’s menu UI sounds), and the reversed version of the same sound when toggling something off or exiting the menu.

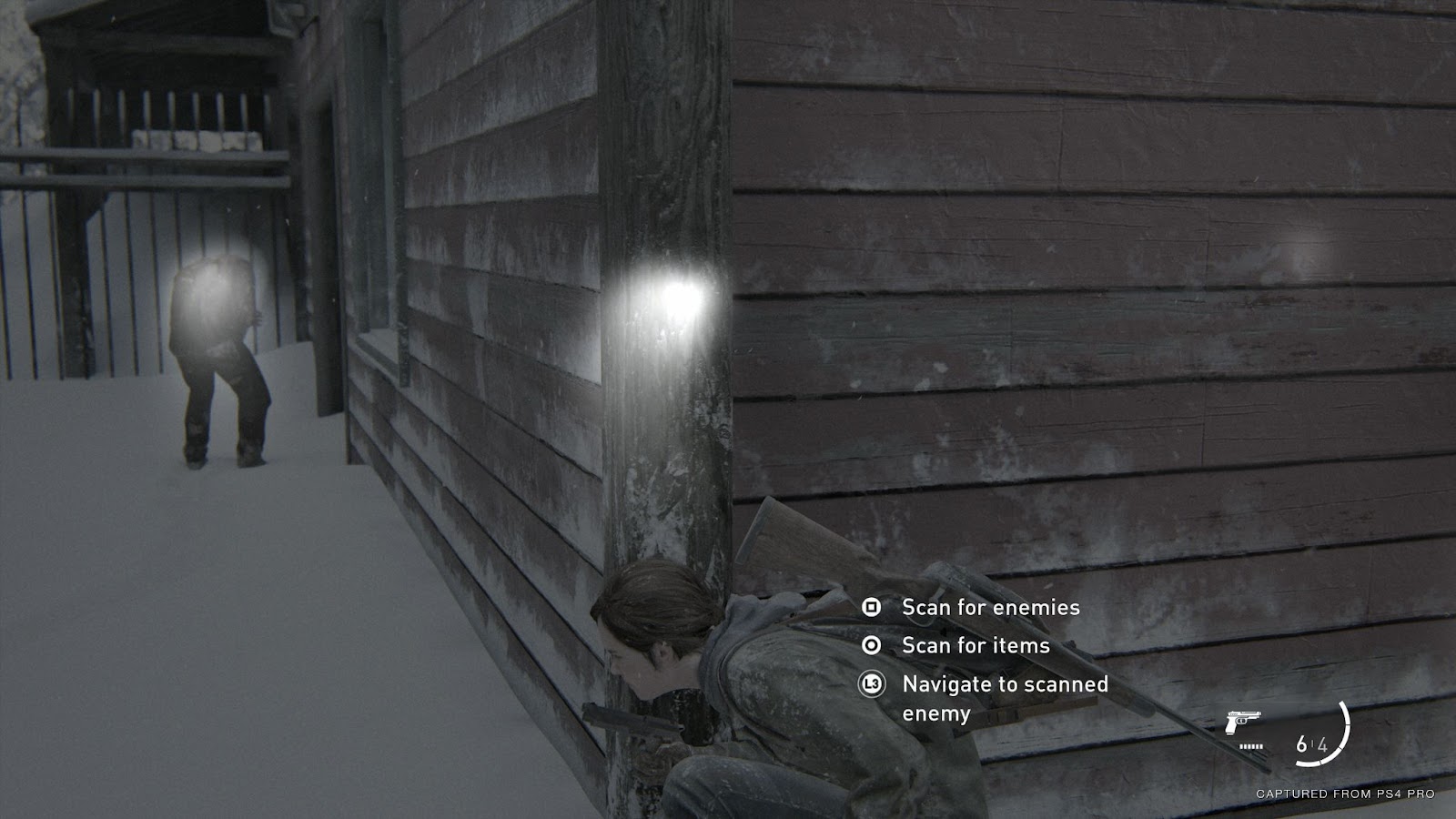

For in-game navigation, the most commonly used audio cue is a sonar-style sound played from the direction of the next objective or correct path, so the player can locate it by ear (with 2D stereo panning or 3D object-based sounds, as in The Last of Us Part 2, or TLOU2). If an obstacle on the way to the objective prevents the player’s smooth navigation, it needs to be marked with additional audio cues to advise the player, as in TLOU2.

Enhanced listening mode in The Last of Us Part 2. When triggered, a sonar sound sweeps through the area around the player, and nearby enemies become more audible, as all other ambient sounds are being filtered out while the mode is active.

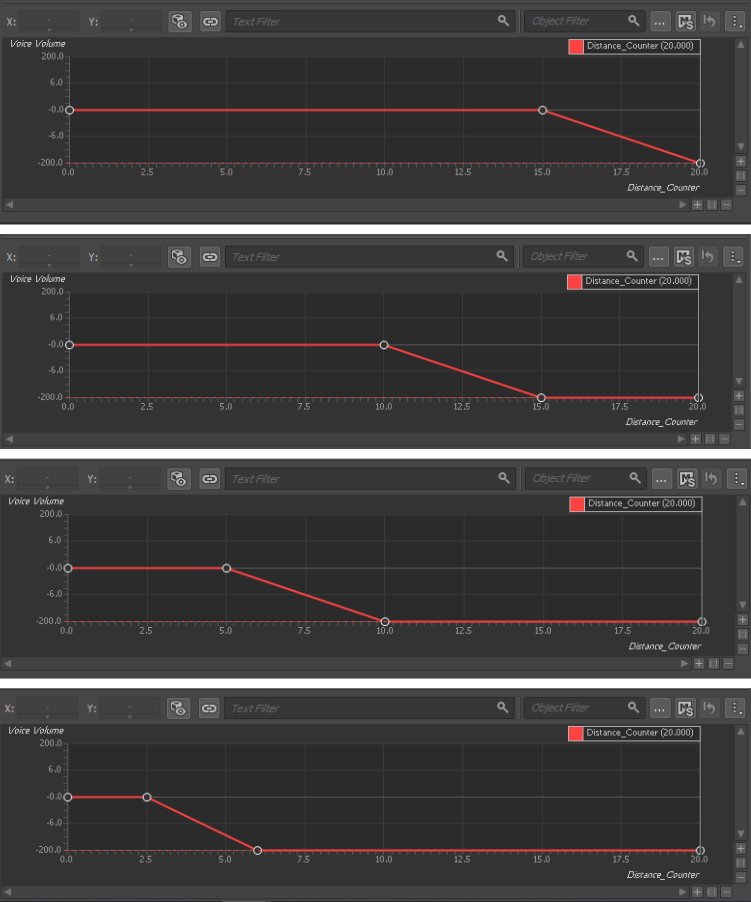

When the player is close to an interactable/collectible object, using an attenuated audio cue that loops until the object has been collected or interacted with is the best currently available option to point the player toward the required object. For 3D object-based sounds, you can get creative by attaching a long amplitude to the distance parameter so that the closer you get, the sharper the sound becomes, highlighting that the player is getting closer to the object. TLOU2 uses a transient-less, soft looping UI beep, and once you are close enough, the loop event stops and plays the same sound but with its transient. If the player leaves the trigger-box radius and the objective wasn't fulfilled, the subtle looping beep starts again.
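The loop/confirm behavior described above can be modeled as a small state machine. The sketch below is one possible reading of that behavior, not TLOU2's actual implementation: the `ProximityCue` class, the two radii, and the event names are all hypothetical.

```python
class ProximityCue:
    """Soft loop inside the trigger radius, beep with transient when close enough."""

    def __init__(self, trigger_radius, interact_radius):
        self.trigger_radius = trigger_radius
        self.interact_radius = interact_radius
        self.state = "idle"      # "idle", "looping" or "near"
        self.collected = False
        self.events = []         # events a real game would post to the audio engine

    def update(self, distance):
        if self.collected:
            return
        if distance <= self.interact_radius:
            target = "near"
        elif distance <= self.trigger_radius:
            target = "looping"
        else:
            target = "idle"
        if target == self.state:
            return
        if self.state == "looping":
            self.events.append("stop_soft_loop")
        if target == "looping":
            self.events.append("start_soft_loop")
        elif target == "near":
            self.events.append("play_beep_with_transient")
        self.state = target

    def collect(self):
        # Once the object is collected, the cue never plays again.
        if self.state == "looping":
            self.events.append("stop_soft_loop")
        self.collected = True
        self.state = "idle"


cue = ProximityCue(trigger_radius=10.0, interact_radius=2.0)
for d in [15.0, 8.0, 5.0, 1.5, 4.0, 12.0]:
    cue.update(d)
print(cue.events)
```

Walking away from the object without collecting it moves the cue from "near" back to "looping", which restarts the soft loop as described.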

For other objects along a pathway (like a door, a narrow squeeze-through, a ledge, or water), the UI audio cue can be accompanied by a sound that reminds the player of the interactable object (e.g. a UI “Heads Up!” sound is followed by a door creak when the player approaches an unlocked door, or by the rattle of a lock if it is locked). Discussions concerning virtual movement, navigation, and action indicator sounds, aka iconosonics, seem to be underrepresented in the game audio industry, so allow me to explain them in a little more detail.

In video games, the omnipresent nature of sound makes it an incredibly powerful tool in communicating information and supporting visual cues that otherwise would be imperceptible for the player. Speech is a great way to support player navigation in games. However, it can take more time to deliver and for its language to be decoded by the listener compared to sound effects and music, which are capable of incorporating embodied information. Sound effects and music are therefore the perfect tools to create "language-free" action indicator sounds, thereby making training in games quicker. In order for an iconosonic effect to be effective, it has to be triggered at the same time as the event it represents, helping the player learn and ascribe meaning to the sound, the same way that the human brain learns new words as pictures and sounds. Repetition is important when using iconosonics because each time the sounds are played, they improve the learning and recognition of this new language. The use of genre-valid sounds is also important from an aesthetic point of view: players are much more likely to remember sounds from games of the same genre, so the brain creates the cognitive link quicker. A perfect example of this is the “alert” sound effect from the game Metal Gear Solid, which has a very similar “remade” sound in the game Cyberpunk 2077, used for the same purpose: to alert the player that they have been spotted by enemies.

To make object selection quicker, sound designers of The Last of Us Part II used a ‘heads up!’ stinger derived from the UI sound family before playing the action indicator sound, thereby giving a distinctive signal before the actual iconosonic was played, helping the listener cognitively separate it from background sounds.

To ensure the player receives audible feedback about whether their action was successful or not, confirmation sounds need to play when the job is done or when the next target is reached (suggesting “Good job!” *shoulder tap*). Nudging sounds can be played after cutscenes and in-game cinematics when the player needs to be told that an action is required (like pressing forward or pressing a specific button). In the following video, after the player reads a letter, the game uses a subtle ‘woop woop’ sound to tell them that they can now move on.

These can easily be set in Wwise by adding the nudging sound events to be triggered by state changes.

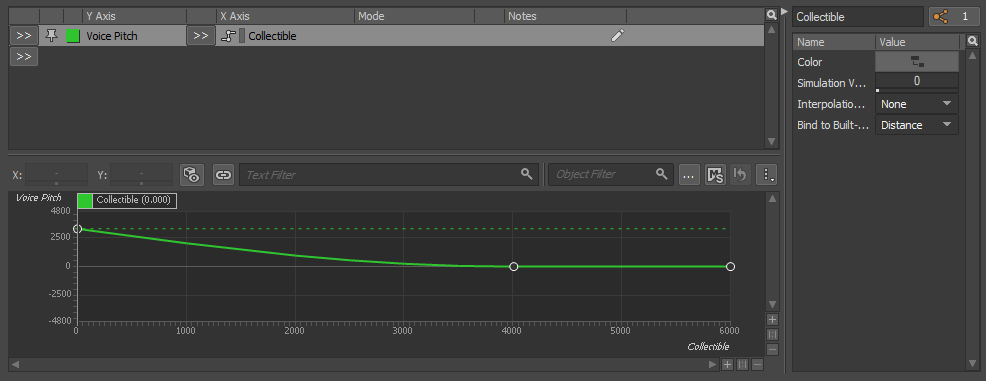

A good example of unintentional player navigation accessibility can be found in Gears 5, in which the player needs to protect a fabricator in Horde mode. The fabricator's locational ping plays back at a pitch that changes based on the player's distance: the closer the player gets to the fabricator, the higher the ping's pitch becomes. To achieve this, drive the Voice Pitch property of the ping sound with an RTPC, so that the pitch follows the player's distance. You can also bind the RTPC to the Distance built-in parameter to implement this system without the need for coding knowledge.
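The distance-to-pitch mapping is normally drawn as an RTPC curve in the Wwise authoring tool. As a rough illustration of what such a curve computes, here is a linear version in Python; the function name, the 50-unit range, and the one-octave (1200 cents) span are assumptions chosen for the example, not values taken from Gears 5.

```python
def distance_to_pitch_cents(distance, max_distance=50.0,
                            min_cents=0.0, max_cents=1200.0):
    """Map player distance to a pitch offset in cents: closer means higher."""
    # Clamp so distances outside the expected range stay at the curve's end points.
    clamped = min(max(distance, 0.0), max_distance)
    closeness = 1.0 - clamped / max_distance  # 0.0 when far, 1.0 at the object
    return min_cents + closeness * (max_cents - min_cents)


for d in (50.0, 25.0, 0.0):
    print(d, distance_to_pitch_cents(d))
```

A real curve does not have to be linear; an RTPC curve can be shaped freely so that the pitch change is most noticeable in the last few meters.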

The rising pitch sound can be replaced by a loop that becomes faster as the player gets closer to the item. Place the looping audio in the Interactive Music Hierarchy and apply an RTPC with the Playback Speed parameter on your item.
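For the speed-based variant, the same distance value can drive how quickly the loop repeats. The sketch below expresses that as the time between pings; `ping_interval`, the 40-unit range, and the interval limits are hypothetical values for illustration only.

```python
def ping_interval(distance, max_distance=40.0, slowest=1.0, fastest=0.25):
    """Seconds between pings: long when far away, short when close."""
    clamped = min(max(distance, 0.0), max_distance)
    t = clamped / max_distance  # 0.0 at the item, 1.0 at max distance
    return fastest + t * (slowest - fastest)


def equivalent_playback_speed(interval, base_interval=1.0):
    """Playback speed multiplier that would shorten a base loop to this interval."""
    return base_interval / interval


for d in (40.0, 20.0, 0.0):
    interval = ping_interval(d)
    print(d, interval, equivalent_playback_speed(interval))
```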

In Hades (2020), the majority of the gameworld objects are 3D spatially-positioned sounds: enemies make noises while fighting, and music and sound effects are ducked down when dialogues are active. After killing enemies, collectibles play a looping heartbeat sound until the player collects them. These are all good examples of helping player navigation in games, even though they are not necessarily accessibility related.

Puzzle / Objective Hints

As previously explained, for nearby interactable/collectible objects, objective hints can play looping cues or one-shot-style sounds. These sounds need to work closely with the game mechanics and cognitive logic, and they can be 2D UI sounds or 3D objects, depending on the style of the game.

These hints should be separate from the traversal sound family, as they suggest more quiz-like activities. They don't necessarily fall under the accessibility category, as they are used in many games (like the Uncharted series). However, while a developer usually triggers these puzzle hint sounds after a period of time or after a number of wrong actions, they can be triggered immediately or after the first missed action when the accessibility function is turned on. For instance, to stay silent on the first and second attempts but play the hint on the third, you can use a Sequence Container in Wwise and place Wwise Silence in the first two slots, so the sound will only play when the player has repeated their action three times. This is a difficult function to get right, because the developers still want to challenge the player, and the VI player also wants to be challenged. It is therefore important for the developer to bear in mind that some elements of the puzzle/objective will probably need to be explained to the player so they fully understand what is expected of them.
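The Sequence Container trick can be modeled as a playlist that advances one slot per trigger. The Python sketch below only imitates that behavior: `HintSequence`, `build_hint_sequence`, and the `"puzzle_hint"` name are hypothetical, `None` stands in for a silent slot, and wrapping back to the first slot after the last one is an assumption about how the container is configured.

```python
class HintSequence:
    """Steps through a playlist, returning one slot per trigger."""

    def __init__(self, slots):
        self.slots = list(slots)
        self.index = 0

    def trigger(self):
        slot = self.slots[self.index]
        # Advance and wrap around, so the pattern repeats for later attempts.
        self.index = (self.index + 1) % len(self.slots)
        return slot


def build_hint_sequence(accessibility_on):
    if accessibility_on:
        # Accessibility mode: the hint plays on the very first wrong action.
        return HintSequence(["puzzle_hint"])
    # Default: two silent slots, then the hint on the third wrong action.
    return HintSequence([None, None, "puzzle_hint"])


default_hints = build_hint_sequence(accessibility_on=False)
print([default_hints.trigger() for _ in range(4)])

accessible_hints = build_hint_sequence(accessibility_on=True)
print([accessible_hints.trigger() for _ in range(2)])
```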

Combat / Quick Reflex Hints

Just like the dialogue cues, these combat sound effects can be confusing if the fight scene is already well populated with in-world sound effects and loud music, thus timing must be done right. These sound effects need to be distinguished from other combat sounds so as to have a 'heads up!' kind of feel to them to cut through the already busy mix. Therefore, it is probably wise to keep these hints 2D stereo panned or mono. The other approach is to attach the sound to a button logically, like a dodge button that needs to be pressed at the perfect moment. In these cases, if the dodge button (in a fighting state) is the same as the crouch/prone button (in a roaming state), then a more aggressive version of the accessibility traversal button sound can be played, as we are just borrowing the traversal function's button sound for a button that is more important in a combat scene.

Aim Assist

Highlighting enemies in a scope when aiming can also be done with an audio cue, for instance a constant looping tone or noise that turns on while aiming and whose pitch and volume increase when there's an enemy in the crosshairs, similar to a metal detector. An RTPC that drives Pitch based on the enemy's distance (or azimuth) from the crosshairs is also a good solution. Based on my research, TLOU2 is so far the best game at using audible aim assist, playing an audio cue when there’s an enemy located in the crosshairs. In recent shooter games, bullets that successfully hit the target get an extra layer on top of the original gun firing sound: a short white noise or high-frequency sound that works as feedback for successful bullet hits. There are multiple ways of achieving this: it can be a switch that is attached to the scoring system (and selects the high-frequency sample when a target is hit) or a volume parameter placed on the high frequency bullet sounds that only opens up (making the sample audible) when a target has been hit. It can also be coded directly in the game engine to separate the empty and successful bullet hits into two individual events. Additionally, when an enemy is killed, a dedicated “kill stinger” can be played to give feedback to the player of the successful attack. This is very important for a VI player as they have no other way of knowing when an enemy dies.
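Both ideas above, the metal-detector style aim tone and the layered hit feedback, can be sketched as two small functions. Everything here is illustrative: the function names, the 30-degree cone, the pitch and volume ranges, and the layer names are assumptions, and in a Wwise project the mappings would typically live in RTPC curves and switches rather than in code.

```python
def aim_cue(offset_degrees, cone_degrees=30.0):
    """Return (pitch_cents, volume_db) for the aim tone.

    offset_degrees is the angle between the crosshairs and the nearest enemy.
    The tone is highest and loudest when the enemy is dead center.
    """
    offset = min(abs(offset_degrees), cone_degrees)
    closeness = 1.0 - offset / cone_degrees  # 0.0 at the cone edge, 1.0 on target
    pitch_cents = closeness * 1200.0
    volume_db = -24.0 + closeness * 24.0
    return pitch_cents, volume_db


def shot_layers(hit, killed):
    """Pick the sound layers to play for one shot."""
    layers = ["gun_fire"]
    if hit:
        layers.append("hit_confirm_hf")    # short high-frequency confirmation layer
    if hit and killed:
        layers.append("kill_stinger")      # dedicated feedback for a kill
    return layers


print(aim_cue(-15.0))
print(shot_layers(hit=True, killed=True))
```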

Punches, kicks, and other fight moves should also sound different when they reach their target, as opposed to when they hit the air. Mortal Kombat 11's most appreciated accessibility feature (apart from the narrated menu) is the fact that every single fight move has a distinct sound. This allows VI players to compete and beat their sighted friends – therefore, they can enjoy the game together!

Music

Navigation / Traversal

Certain places can be separated by music states. For instance, let’s say there's a beach somewhere, then there's a mountain, then a village; these can all be separated by music states. Additional layers of music can be spatialized (3D object based) and attenuated, thereby suggesting path openings. For instance, music was designed and implemented very cleverly as part of a warning signal in the game A Plague Tale: Innocence (2019). When the player is roaming in the game, the music is generally rhythmless and behaves more like a calm ambience bed to match the stealth nature of the game. As the player moves closer to enemies, certain instrument layers of the music change drastically, and rhythmic cello and percussion become gradually louder and more frequent in the mix. In a short time, the music that was used as a narrative coloring tool instantly becomes a built-in accessibility audio cue that helps the navigation of both sighted and VI players in the game world. You can also suggest new objectives by luring the player closer by spatializing the instrumentation (e.g. four layers of a flute chord progression aligned with the music's BPM with a volume RTPC applied to it, so the closer the player gets to the objective, the more layers of the flute join the music).
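The layered-flute example can be reduced to a function that decides how many layers are audible at a given distance. This is a simplified sketch with hard steps instead of smooth volume curves; `active_flute_layers` and the 40-unit range are hypothetical.

```python
import math


def active_flute_layers(distance, max_distance=40.0, total_layers=4):
    """Number of flute layers that should be audible at this distance."""
    clamped = min(max(distance, 0.0), max_distance)
    closeness = 1.0 - clamped / max_distance  # 0.0 when far, 1.0 at the objective
    # ceil() brings in the first layer as soon as the player enters the range.
    return math.ceil(closeness * total_layers)


for d in (40.0, 30.0, 20.0, 10.0, 0.0):
    print(d, active_flute_layers(d))
```

In practice each layer would more likely have its own volume curve on the same RTPC, so that layers fade in rather than switch on abruptly.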

There are other clever compositional tools, like the rising arpeggio in the movie Up when the balloons lift the house, and a falling arpeggio when the house falls back to the ground. They’re all situation dependent, but no one should underestimate the power of well-composed (and implemented) music, a.k.a. ‘Mickey Mousing’, which can act as a narrative tool (Rayman Legends does it very well, and it makes the gameplay super fun).

Puzzle / Objective Hints

Just like sound effects, music can suggest player actions and motivate players to make decisions in a quiz-like environment. As Hans Zimmer explains when discussing the question / answer method in his masterclass (https://www.masterclass.com/classes/hans-zimmer-teaches-film-scoring), these compositional techniques can serve an incredibly helpful and entertaining function in games as long as they are well implemented. And of course, if the player is in a puzzle state, the right music can inspire and stimulate them to solve puzzles with joy. (https://www.youtube.com/watch?v=Uc32TpW7Zpw&ab_channel=GarryThompsonGarryThompson)

Combat / Roaming Hints

The majority of action-driven, shooter, and stealth games change their music between three main states: Combat, Roaming and Cutscenes. Switching between musical states can be made more interesting with the use of transition tracks. This is probably the most valuable cue for a VI player: it helps them realize which game state they are in and which game state is approaching, which gives them time to grab their weapon. It is advisable to use variations in the music layers/instruments, which can be randomly repeated to keep the music interesting. Similarly, the music changes between two main states in the game Hades (2020), which are roaming and combat; however, there is a third state where there is no – or very quiet – music, as the game channels the player’s focus onto the game’s story using dialogues.

Ambience

Navigation / Traversal

In a 3D game, if the ambience and sound effects are done well with attention to detail, they already provide an environment that is easy to navigate. Spatial rooms, convolution reverb, an accurate amount of reverb on the dialogues, and audio objects – all these things together can greatly help in making the game environment easy to navigate. A beach, for instance, will have water sounds, seagulls, boats, and perhaps people; while a cave will have a long reverb, echo, slow dripping water but be generally quiet. The Wwise Spatial Audio plugin can be extremely useful when building (mostly indoor) environments that need accurate sonic conditions.

Wwise Spatial Audio plugin used in Unreal Engine 4

The environment in games can be designed to behave like a navigational tool: wind, a river, an echo in a cave, or a little creature that the player needs to follow can all be very helpful for VI players. For instance, in a cave’s corridor, the wind’s pitch, strength, and volume can tell the player where the wind is coming from, suggesting the direction of the cave’s exit. The wind’s pitch value can be modulated by its azimuth parameter that is dependent on the player’s position, and the volume can be gradually increased towards the exit. Using a Blend container in Wwise, the soft wind can fade into a strong wind audio sample based on the player’s distance to the exit, making this “wind machine” act as navigational feedback.
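The soft-to-strong wind crossfade can be illustrated with a simple gain calculation. The sketch uses an equal-power (sine/cosine) crossfade, which is a choice made for this example; the actual crossfade shape is whatever you draw in the Blend Container, and `wind_blend` and the 60-unit range are hypothetical.

```python
import math


def wind_blend(distance_to_exit, max_distance=60.0):
    """Return (soft_gain, strong_gain) as linear gains between 0.0 and 1.0."""
    clamped = min(max(distance_to_exit, 0.0), max_distance)
    x = 1.0 - clamped / max_distance  # 0.0 deep in the cave, 1.0 at the exit
    # Equal-power crossfade: the summed power stays constant along the way.
    soft_gain = math.cos(x * math.pi / 2.0)
    strong_gain = math.sin(x * math.pi / 2.0)
    return soft_gain, strong_gain


for d in (60.0, 30.0, 0.0):
    soft, strong = wind_blend(d)
    print(d, round(soft, 3), round(strong, 3))
```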

Puzzle / Objective Hints

The accurate use of reverb and filtering can play a big role in puzzle solving if the level design takes sound spaces into account. For instance, in the game Superliminal (2019), when an item grows huge, its sound becomes lower and heavier as opposed to when it’s small and higher pitched. When these sound effects are routed through the room’s space, the reverb and echo will change with the size of the object, thereby suggesting its size.

Combat / Quick Reflex Hints

In games where the environment can be used in combat scenes, for instance to drown an enemy in water or push them off a ledge, the environment can suggest and represent these abilities with sound, so the player can cognitively connect the sounds with the action required.

Further Audio Events That Can Improve Accessibility

- Provide individual sliders for audio channels in the game’s menu: music, sound effects, dialogues, accessibility sounds (or audio cues), and a mono/stereo slider (for hearing impaired players). For example, this allows blind players to decrease the level of music when they find it difficult to understand the dialogues. There’s an ongoing debate about the ideal default levels of these volume sliders, but in most games, they all start at 100% except the text-to-speech slider, which is usually set to 50% or 80% by default.

- Ensure sound / music choices for each key object / event are distinct from each other.

- Provide separate volume controls or mutes for effects, speech, and background / music.

- Provide a stereo / mono toggle.

- Keep background noise to a minimum during speech.

- Simulate binaural recording.

- Use distinct sound / music design for all objects and events.

- Provide a pingable sonar-style audio map.

- Provide pre-recorded voiceovers for all text, including menus and installers.

- Use surround sound (this probably also means spatially positioned 3D game objects with accurate attenuation curves).

- Provide a voiced GPS (advanced).

- Ensure no essential information is conveyed by sounds alone.

- Provide an audio description track.

Conclusion

It is clear that there is no universal way of making games more accessible for the visually impaired. In fact, the use and implementation of accessibility audio cues are so diverse that it was advisable to separate the subject into four main categories. By using dynamic music, a game can communicate the game’s state and narrative to players. Moreover, it was shown that music can also be used to support game mechanic-related messages with the use of musical stingers and warnings to help player navigation. By using well-localized audio cues with accurate convolution reverb, attenuation curves, and spatial information, navigation can be simplified for VI players, and the ‘pingable’ sonar sound was found to be the most effective navigational tool within the sound effects category. All the solutions detailed above can be implemented in games using Wwise, and the sample projects are a perfect place to prototype these accessibility systems. https://www.audiokinetic.com/education/samples/

The majority of indie game developers are simply not aware that there are existing regulations in the game industry that their games need to comply with. I hope the information conveyed in this article can be shared among game developers and game audio professionals to develop games with accessibility features included with the aim to support VI players’ gaming experience in the future. And remember: these are only guidelines and existing techniques to encourage sound designers in the industry to come up with their own solutions for creating accessible audio systems.

Literature and Useful Links

- The Last of Us Part II is currently the most accessible game available on the market (Venkat, 2020), so it’s worth having an observation session to see how and why the audio team dealt with blind accessibility in the game. The available accessibility settings can be found on the following website: https://www.playstation.com/en-us/games/the-last-of-us-part-ii/accessibility/

- CVAA guidelines relating to audio (21st Century Communications and Video Accessibility Act): http://gameaccessibilityguidelines.com/full-list/

- Full list of game accessibility requirements: http://gameaccessibilityguidelines.com/full-list/

- About game accessibility generally: https://www.game-accessibility.com/about/

- Accessibility on a budget: https://www.gamesindustry.biz/articles/2017-10-09-accessibility-on-a-budget

- Audio Description organizing principles: https://www.acb.org/adp/guidelines.html

- What it’s like to play games when you’re colorblind: https://kotaku.com/what-its-like-to-play-games-when-youre-colorblind-1606030489

- Accessibility in gaming should be the rule, not the exception: https://www.gamesindustry.biz/articles/2016-05-27-accessibility-in-gaming-should-be-the-rule-not-the-exception

- Accessibility and “difficulty” aren’t the same thing: https://www.gamesindustry.biz/articles/2019-04-11-accessibility-and-difficulty-arent-the-same-thing
