Please read Part 1 of this blog first.

4. Real Application - Soundscape Design for Detroit: Become Human

4.1 Design Ideas

4.1.1 Background Setting

Detroit: Become Human is a third-person action-adventure game developed by Quantic Dream. The plot follows three androids: Kara, who escapes her owner to explore her newfound sentience; Connor, whose job is to hunt down sentient androids like Kara; and Markus, who devotes himself to releasing other androids from servitude. These three characters can live or die depending on the dialogue and action choices made by the player.

Writer and director David Cage wanted the story to be grounded in the real world, so the development team chose Detroit in 2038 because of its great past: the city was once a powerful and wealthy place, then went through very hard times, and is now being revitalized. In the game, they thought of Detroit as the fourth playable character, and they tried to bring this character to life with a soundscape that evolves throughout the story instead of staying static [1]. This also reflects the interactive nature of the game's soundscape. For example, in the early stages of the game, the city is sunny and bright. As the story unfolds, it becomes rainy and darker. Near the end, the snow arrives and covers everything. In soundscape production, these changes of time and season therefore need to be driven through the synchronization mechanism of the sound engine.

As for the design of the in-game city scenes, the production team extrapolated Detroit's revival to 2038 and imagined what the urban environment would be like by then. In the team's vision, technology will become more and more invisible and elegant, as well as more visual [2]. As the finished game shows, the designs of the androids’ clothing and of the buses and trams in the city are pleasingly simple, and the e-zines and computer interfaces that frequently appear in the game have a flat design. When it came to modeling vehicles, buildings and androids, the art team didn’t want them to be too futuristic to be believable, because 2038 is not far from now. So while technological development is highlighted, authenticity needs to be retained: everything has to be based on life as it is today [2]. Therefore, the future soundscape of Detroit also needs to retain some sounds of the past, and the soundmarks of Detroit must be preserved. The goal is to create something familiar that the player can identify with in this future setting.

After Detroit's local government went bankrupt in 2013, many tech startups were brought in to revitalize the economy. Detroit's wide streets, vacant factories and low land costs made it an android development center in-game. The graphic director Christophe Brusseaux described Detroit as an industrial wasteland [2]. In such a social context, the future soundscape of Detroit in-game needs to present a sense of space, and create an atmosphere with a combination of machinery and rust.

As in other cyberpunk works, rainy weather is a major element of the game. Kara's awakening and escape, Markus's appearance and rebirth, and many other important episodes all happen on rainy nights. As a result, the sound of rain becomes a key part of the soundscape. Based on the three characteristics of the game soundscape (see 4.1.2), there should be enough rain assets to avoid repetition, and the density and loudness of these assets during playback should change along with the ups and downs of the plot. It’s also important to control the level and position of the rain sound while mixing, both to avoid auditory fatigue and distraction and to make sure the rain never masks other sounds.

4.1.2 Characteristics of the Game Soundscape

Previously, I introduced the soundscape concept and the sound design for cyberpunk films. However, when applying these theories to game soundscape design, it’s also necessary to take into account the specificity of games. Unlike sound designers for movies, game sound designers cannot arrange the audio assets on a fixed timeline, because the sound effects in-game are controlled by the player. Three characteristics need to be considered during game soundscape design: instantaneity, interactivity and spatiality.

a.) Instantaneity

Originally, all sounds were originals. They occurred at one time and in one place only. Tests have shown that it is physically impossible for nature’s most rational and calculating being to reproduce a single phoneme in his own name twice in exactly the same manner [3]. One of the core properties of sound is its ephemerality - it does not endure long past its production, and even a recording is but a subjective representation of reality [4]. In the game, the player may enter the same game scene several times, but the soundscape experienced each time should be different. This requires a system with randomly playing sound assets in the game sound engine.

b.) Interactivity

In a particular game environment, the player requires sounds with different purposes [5]. The listening experience should vary when the weather, surroundings or player’s perspective changes. Therefore, the game soundscape needs to be adjusted in a timely manner, and the sound assets triggered by the player's operation must be handled carefully.

c.) Spatiality

Sonic elements in the game soundscape can convey the shape and characteristics of the space through their loudness, frequency, reverberation, phase and other features, and render the narrative space of the game effectively. For example, in a game scene within a forest, sometimes the player cannot see birds flying through the air due to obstructed vision; however, it would make more sense to have bird chirps playing in the background. Bird chirps from various directions with different reverberation and delay can help the player understand the terrain and dimension of the scene. According to the Acoustic Niche Hypothesis (ANH) theory mentioned before, the player is even able to identify the ecology in the scene through these sound sources that are not in sight. When the player switches to another scene, the new soundscape can help the player immerse themselves immediately in that scene. While depicting the narrative environment, the game soundscape has the ability to guide, orient and transform the space to ensure that the player gets a comprehensive experience in-game [6].
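To make the instantaneity requirement more concrete, here is a minimal Python sketch of a random playback system that never repeats the most recently played assets. It is not Wwise code; it only models the kind of behavior a Random Container can be configured for. The class name, the asset names and the `avoid_last` parameter are assumptions made for illustration.

```python
import random
from collections import deque


class NoRepeatPicker:
    """Pick assets at random while avoiding the most recently played ones."""

    def __init__(self, assets, avoid_last=2, seed=None):
        if avoid_last >= len(assets):
            raise ValueError("avoid_last must be smaller than the number of assets")
        self.assets = list(assets)
        self.recent = deque(maxlen=avoid_last)  # history of the last picks
        self.rng = random.Random(seed)

    def next(self):
        # Only assets that are not in the recent history are eligible.
        candidates = [a for a in self.assets if a not in self.recent]
        choice = self.rng.choice(candidates)
        self.recent.append(choice)
        return choice


# Hypothetical asset names, used only for this example.
picker = NoRepeatPicker(["rain_01", "rain_02", "rain_03", "rain_04"], avoid_last=2, seed=7)
sequence = [picker.next() for _ in range(12)]
```

With four assets and `avoid_last=2`, every pick differs from the two picks before it, so the player entering the same scene twice is unlikely to hear the same order of sounds.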

In terms of sound quality, a good soundscape is first and foremost a high-fidelity soundscape - a sonic environment in which sound signals and keynote sounds do not overlap with each other frequently [7]. Its obvious characteristic is that masking seldom occurs, i.e., sounds of different frequencies can be clearly heard by the player. In such a soundscape, the environment information can be effectively conveyed, and the player can recognize certain properties, characteristics and states of the sonic environment based on the sounds that are heard. In addition, a diversity of sound effects can indeed create a rich listening experience. However, redundant auditory information is as useless to the player as a lack of information [8]. Therefore, while shaping the game soundscape, the sonic elements should be subtle and reasonable, rather than abrupt and cluttered.

4.2 Sound Design

Let’s take Shades of Color, Chapter 3 of Detroit: Become Human, for example. Here is the flowchart for this chapter:


Figure 5: The Flowchart for Chapter 3

Keynote sounds in-game consist of three main components: biophony, geophony, and anthropophony. These sounds can be used to reflect the well-organized and sophisticated living environment, convey the environment information to the player, and lay a foundation for the spatiality of the soundscape. While walking from the open plaza to the roofed shopping arcade, the player should hear the wind being shaped by the terrain, which changes its timbre; while passing through the roofed area on a rainy day, the player should hear the rain falling on the roof.

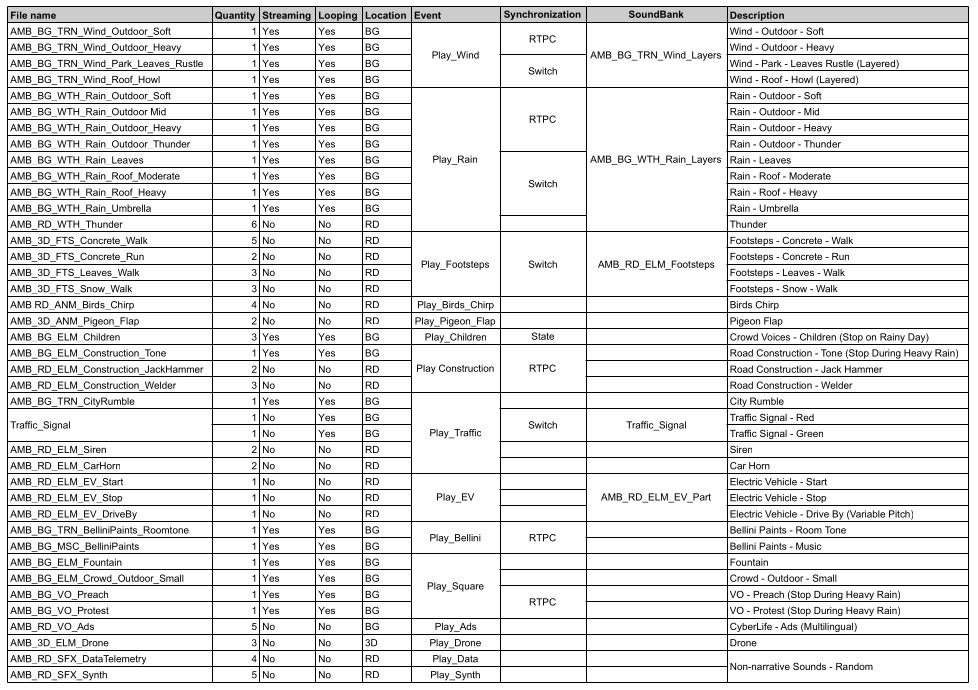

During gameplay, the player may stay in one location for a long time, or pass through the same area repeatedly. Therefore, the soundscape needs to be variable, expressive and transient. Eventually, the designer divided the soundscape elements into two general layers: one for looped sounds within the area, which reflects the basic characteristics of the environment, such as the wind or rain sounds, or the crowd voices in the park; the other for random sounds, which enhances these characteristics, such as the random thunder sounds during a thunderstorm, or the intermittent machinery sounds at a construction site.
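The two-layer idea can be illustrated with a small Python sketch: the looped bed is assumed to play continuously, and the function below only decides when the random one-shots fire on top of it. The function name, the gap values and the thunder asset names are all hypothetical; in Wwise, this behavior would normally be authored in the tool rather than coded by hand.

```python
import random


def schedule_one_shots(duration_s, min_gap_s, max_gap_s, assets, seed=None):
    """Return (time, asset) pairs for random one-shots layered over a looped bed."""
    rng = random.Random(seed)
    events = []
    # The first one-shot also waits for a random gap, so scenes do not
    # always open with the same sound at the same moment.
    t = rng.uniform(min_gap_s, max_gap_s)
    while t < duration_s:
        events.append((t, rng.choice(assets)))
        t += rng.uniform(min_gap_s, max_gap_s)
    return events


# Hypothetical thunderstorm: a rain loop runs for 120 s (not modeled here),
# and thunder claps are scattered over it every 8 to 25 seconds.
thunder_events = schedule_one_shots(
    120.0, 8.0, 25.0, ["thunder_far", "thunder_mid", "thunder_close"], seed=3
)
```

Because both the interval and the asset are randomized, the same area sounds slightly different each time the player passes through it, while the loop keeps the basic character of the environment stable.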

Subtle changes in the keynote sounds can also affect the player's emotional development. While walking through the city from Markus' perspective, the player learns about the social environment and the situation of androids, which results in an emotional progression. At first, the player sees the housekeeping androids getting along with the elderly and children in the Henry Ford Commemorative Park. When the conversation with the hot dog vendor is triggered and the preacher and protesters start yelling, the player begins to understand that androids are actually discriminated against and oppressed in this society. Therefore, in the park soundscape, the wind sounds, birds chirping, children crying and laughing, and the randomly looped footsteps of NPCs running and walking complement the scenery in the picture. The harmonious social landscape and lush natural environment give the player a pleasant first impression. In the plaza soundscape, however, the sounds of the protesters and the preacher make the player want to rebel against the human infringement of android rights.

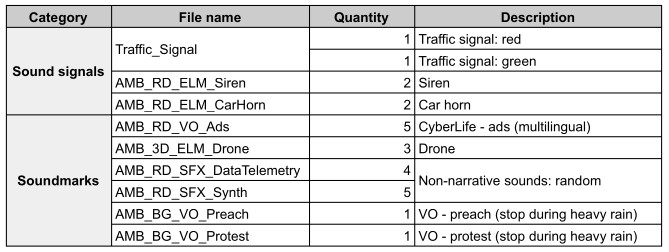

Figure 6: Keynote Sounds

The sound signals in-game mainly include traffic signals, car horns and sirens. Car horns and sirens are played less frequently, and they are processed with low-pass filter and delay effects to create a sense of distance and space while keeping the sound signals from turning into noise.
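As a rough illustration of why a low-pass filter and a delay suggest distance, the Python sketch below applies a one-pole low-pass filter and a simple feedback echo to a list of samples. This is a textbook simplification and not the processing chain used in the game; the cutoff frequency, delay length, feedback amount and the `horn` test signal are assumptions.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate=48000):
    """Attenuate content above cutoff_hz with a one-pole low-pass filter."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction of the way to the input
        out.append(y)
    return out


def add_echo(samples, delay_samples, feedback=0.4):
    """Mix a decaying, delayed copy of the signal back into itself."""
    out = list(samples) + [0.0] * delay_samples  # leave room for one echo tail
    for i in range(delay_samples, len(out)):
        out[i] += feedback * out[i - delay_samples]
    return out


# Hypothetical "car horn": a harsh signal alternating at the highest frequency.
horn = [1.0, -1.0] * 100
distant_horn = add_echo(one_pole_lowpass(horn, cutoff_hz=1000.0), delay_samples=50)
```

The filtered horn loses most of its high-frequency energy, and the echo adds a trace of the surrounding space, which is what makes the sound read as far away rather than simply quiet.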

The sound signals in-game highlight the technology and fusion characteristics of the cyberpunk soundscape, while expanding the off-picture space and allowing the player to focus on Markus' perspective. In a 2038 city, technology is everywhere: the drones flying over the plaza, the electric vehicles passing through the street, the electronic billboards beside the sidewalk... All of these are represented in the soundscape. Among them, the noises of electronic malfunctions play randomly as non-narrative sounds. The electronic devices found everywhere make these random sounds plausible, yet their playback does not depend on whether a corresponding sound source is actually near the player, which adds a sense of futurism and richness to the soundscape. The CyberLife store next to the plaza sells androids, and its multilingual advertising messages let the player hear the diversity of the city.

Figure 7: Sound Signals and Keynote Sounds

By the way, the music playing in the Bellini Paints store is "Trois Gymnopedies" performed by British electronic music pioneer Gary Numan. Originally composed by French composer Erik Satie in 1888, "Gymnopedies" was reinterpreted by Gary Numan in 1980 in new wave style. The song reflects the fusion of culture, time and space as well as the use of technology, which is in line with the characteristics of the cyberpunk soundscape.

4.3 Production Process

During game audio production, the sound designer can use the sound engine to design the audio architecture and playback mechanism without any help from the programmer, which can streamline the workflow. Let’s take Wwise as an example and use its playback rules and parameter settings to implement the instantaneity, interactivity and spatiality characteristics of the cyberpunk soundscape.

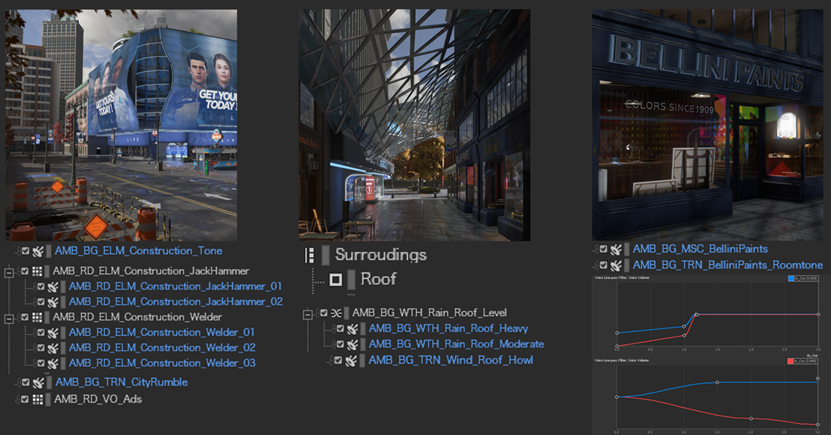

Keynote sounds in-game such as the wind or rain sound are controlled with Switches. For example, the wind blowing between roofed buildings will produce more prominent mid-high frequency sound with a higher pitch; when it rains, a layer of rain falling on the roof will be added to the keynote sounds, while ducking the sound of rain falling on the ground.

Figure 8: Using sounds to convey the environment information

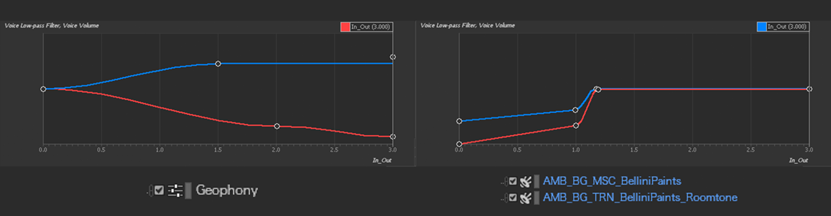

As shown below, the X-axis indicates the player's position (0 for outdoor, 3 for indoor), and the Y-axis indicates the cutoff frequency and relative level of the low-pass filter. When the player enters the Bellini Paints store, the level of outdoor geophony will decrease, and high frequencies are properly filtered; the level of indoor room tone and music will increase, and low frequencies increase gradually.

Figure 9: The X-axis indicates the player's position
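The curves described above can be approximated with piecewise-linear interpolation. The Python sketch below is only a model of that mapping: the curve points, the dB and Hz values and the function names are invented for illustration and are not taken from the actual project.

```python
def interpolate(curve, x):
    """Evaluate a piecewise-linear curve given as sorted (x, y) points."""
    if x <= curve[0][0]:
        return curve[0][1]
    if x >= curve[-1][0]:
        return curve[-1][1]
    for (x0, y0), (x1, y1) in zip(curve, curve[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Hypothetical curves over the player's position (0 = outdoor, 3 = indoor).
OUTDOOR_VOLUME_DB = [(0.0, 0.0), (3.0, -18.0)]
OUTDOOR_LPF_CUTOFF_HZ = [(0.0, 20000.0), (1.0, 8000.0), (3.0, 2000.0)]
INDOOR_VOLUME_DB = [(0.0, -48.0), (3.0, 0.0)]


def mix_for_position(position):
    """Return the mix settings for a given player position."""
    return {
        "outdoor_volume_db": interpolate(OUTDOOR_VOLUME_DB, position),
        "outdoor_lpf_cutoff_hz": interpolate(OUTDOOR_LPF_CUTOFF_HZ, position),
        "indoor_volume_db": interpolate(INDOOR_VOLUME_DB, position),
    }


halfway = mix_for_position(1.5)  # the player is standing in the doorway
```

Driving all three curves from a single position value keeps the outdoor and indoor layers in step, so the transition into the store feels continuous instead of switching abruptly.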

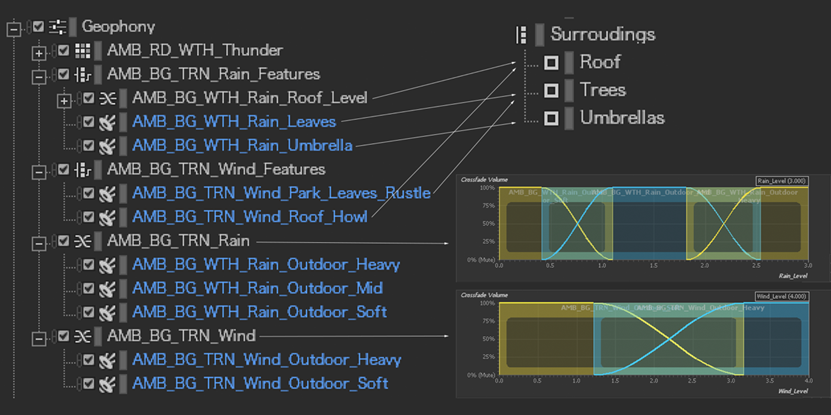

The interactivity characteristic is expressed in a variety of ways and is mainly reflected in the keynote sounds. Both wind and rain sounds change based on the player's surroundings. As shown below, when the player stands under a tree in the park, a layer of leaves blowing in the wind or rain falling on the leaves is added to the wind or rain sound; when someone holding an umbrella walks by, that layer switches to the sound of rain falling on the umbrella. This can be done with Switches. The wind or rain sound that is looped in the Blend Container also responds to weather changes, which can be done with Game Parameters.

Figure 10: Interaction system for geophony
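The Switch logic described above boils down to a lookup from the current weather and surroundings to an extra layer. The Python sketch below models that lookup only; the enum values and asset names are hypothetical, and in Wwise the mapping would be set up in a Switch Container rather than written in code.

```python
from enum import Enum


class Surrounding(Enum):
    OPEN = "open"
    UNDER_TREE = "under_tree"
    NEAR_UMBRELLA = "near_umbrella"


# Hypothetical mapping from (weather, surrounding) to the extra layer to play.
LAYER_BY_SWITCH = {
    ("rain", Surrounding.UNDER_TREE): "rain_on_leaves",
    ("rain", Surrounding.NEAR_UMBRELLA): "rain_on_umbrella",
    ("wind", Surrounding.UNDER_TREE): "wind_in_leaves",
}


def active_layers(weather, surrounding):
    """Return the base loop plus the extra layer selected by the switch, if any."""
    layers = [f"{weather}_base_loop"]
    extra = LAYER_BY_SWITCH.get((weather, surrounding))
    if extra is not None:
        layers.append(extra)
    return layers


under_tree_in_rain = active_layers("rain", Surrounding.UNDER_TREE)
```

Only the extra layer changes when the surroundings change; the base loop keeps running, so the switch never leaves an audible hole in the soundscape.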

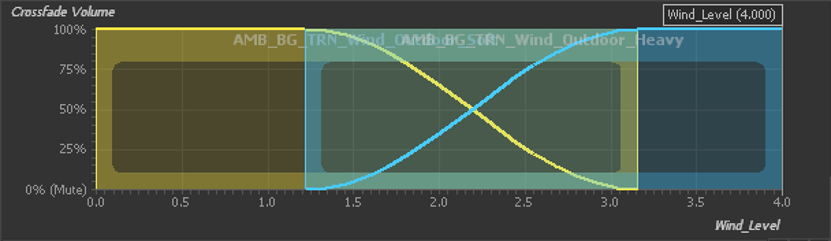

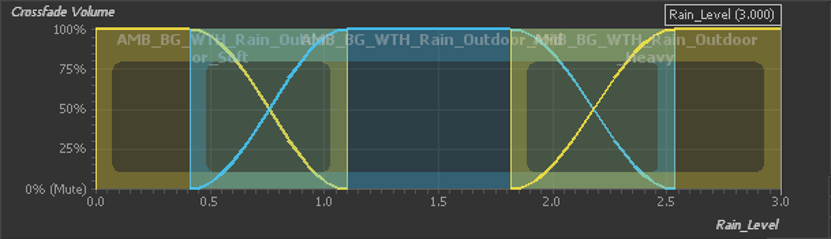

As shown below, the horizontal axis indicates the rain or wind level, and the vertical axis indicates the crossfade volume. When the wind changes from weak to strong, the wind asset crossfades from Soft to Heavy; the rain asset crossfades between Soft, Mid and Heavy depending on the rain level.

Figure 11: The transition of wind sound

Figure 12: The transition of rain sound
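The crossfade between the Soft, Mid and Heavy rain assets can be modeled as follows. This Python sketch assumes a rain level from 0 to 100 and an equal-power crossfade between neighboring layers; both the level range and the crossfade shape are assumptions, since the actual curves are only visible in the figures.

```python
import math


def crossfade_gains(level, layers):
    """Return {name: gain} for layers given as (name, center) sorted by center."""
    gains = {name: 0.0 for name, _ in layers}
    if level <= layers[0][1]:
        gains[layers[0][0]] = 1.0
        return gains
    if level >= layers[-1][1]:
        gains[layers[-1][0]] = 1.0
        return gains
    for (lo_name, lo), (hi_name, hi) in zip(layers, layers[1:]):
        if lo <= level <= hi:
            t = (level - lo) / (hi - lo)  # 0 at the lower layer, 1 at the upper
            gains[lo_name] = math.cos(t * math.pi / 2)
            gains[hi_name] = math.sin(t * math.pi / 2)
            return gains
    return gains


# Hypothetical rain layers and the rain level at which each is fully audible.
RAIN_LAYERS = [("rain_soft", 0.0), ("rain_mid", 50.0), ("rain_heavy", 100.0)]
gains_at_25 = crossfade_gains(25.0, RAIN_LAYERS)
```

At a rain level of 25, the Soft and Mid layers play at equal gain and the Heavy layer is silent. An equal-power shape keeps the perceived loudness roughly constant across the transition, which a plain linear fade would not.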

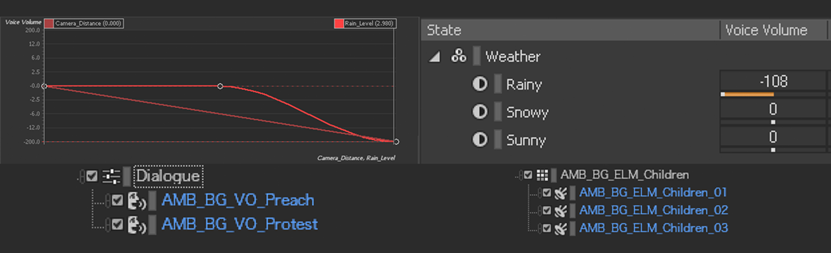

To achieve more realistic results, the sounds of children playing and of protesters yelling respond to rain in different ways. A protest can still take place in rainy weather, so the sound of the protesting crowd gradually diminishes as the rain intensifies. This can be achieved with RTPCs. Children in the park, however, stop playing on a rainy day, so the frolicking sound is switched off immediately. This can be achieved with States.

Figure 13: The crowd voices will respond to weather changes
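The difference between the two mechanisms is that an RTPC is continuous while a State is discrete. The Python sketch below contrasts them; the function names, the 0 to 100 rain range, the -24 dB floor and the state names are assumptions chosen for the example.

```python
def protest_volume_db(rain_level, min_db=-24.0):
    """RTPC-style control: fade the protest crowd down as rain goes from 0 to 100."""
    clamped = max(0.0, min(100.0, rain_level))
    return min_db * clamped / 100.0


def children_playing(weather_state):
    """State-style control: the children's voices are simply on or off."""
    return weather_state != "rainy"


# Hypothetical snapshot: moderate rain has just started.
snapshot = {
    "protest_volume_db": protest_volume_db(50.0),
    "children_playing": children_playing("rainy"),
}
```

In this snapshot the protest is attenuated by 12 dB but still audible, while the children's layer is already off, which matches the behavior described above.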

To avoid repetition, a blank sound asset is added to the Birds Chirp Random Container. The asset is used to create a proper white space. It has a higher playback probability than other sounds. By doing so, the assets can be divided into the smallest units and then combined organically. This takes advantage of the Randomization and playback rules to make the soundscape more realistic.
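The effect of the blank asset can be simulated with a weighted random pick. In the Python sketch below, the weights and asset names are invented for illustration; the only point it demonstrates is that giving silence a higher weight than any single chirp thins out the bird calls.

```python
import random

# Hypothetical container contents: (asset name, playback weight).
BIRD_CONTAINER = [
    ("silence", 50),
    ("bird_chirp_01", 10),
    ("bird_chirp_02", 10),
    ("bird_chirp_03", 10),
    ("bird_chirp_04", 10),
    ("bird_chirp_05", 10),
]


def pick(container, rng):
    """Pick one asset, with probability proportional to its weight."""
    names = [name for name, _ in container]
    weights = [weight for _, weight in container]
    return rng.choices(names, weights=weights, k=1)[0]


rng = random.Random(11)
picks = [pick(BIRD_CONTAINER, rng) for _ in range(1000)]
silence_ratio = picks.count("silence") / len(picks)
```

With these weights, about half of the picks are silent, so the chirps arrive in irregular bursts separated by gaps of varying length instead of as a constant stream.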

5. Conclusion

A key to shaping the cyberpunk world through sound is building the environment, i.e. soundscape design. Cyberpunk works are usually set in a society with highly developed technologies, a collapsed social structure, environmental pollution caused by war, and so on. Such a society is very different from today's world. Therefore, in order to let the player empathize with the character’s experiences during gameplay, it’s necessary to immerse them in a specific social environment. This makes the soundscape design for the environment particularly important.

In soundscape design, the first thing to consider is the connection between sounds, the player and the environment, as well as the arrangement of keynote sounds, sound signals and soundmarks in the soundscape. To do this, we need to retain the authenticity and add some creativity at the same time. On one hand, it’s necessary to extend the diverse sounds that exist in the current environment into the future time and space. The goal is to create something familiar which the player can identify with, and establish a connection between the future world and the real world in-game. On the other hand, we need to build a new sound ecosystem, and design sonic elements that may appear in the future world based on the background setting of the game. Meanwhile, we have to pay attention to the atmosphere of the soundscape in order to reflect the darkness characteristic of the cyberpunk world.

During sound production, it’s necessary to pre-process the assets in a DAW, and prepare enough assets that can be chosen to play. While implementing the soundscape playback, we need to leverage the synchronization feature in Wwise to define the interaction mechanism with all interaction dimensions in mind. This way the instantaneity, interactivity and spatiality characteristics of the game soundscape can be reflected in the game.

Appendix

Appendix 1
