The Wwise 2019.1 release introduced the ability to trigger Wwise Events from music cues in Music Segments, which lets you fire off Events at specific points in your interactive music timeline. This provides a simple method for implementing "music-driven animation", as demonstrated by a Unity/Wwise project titled "cbx2".

The app is based on photo display software I wrote music for many moons ago. There was an ambient background track and ten looping motifs to accompany the photos as they passed by. The "tiles" were designed for "serendipitous sync": trigger them at any time, and there's a good chance interesting polyrhythms and harmonies will result.

I still had the original MIDI data on a backup disk, so I re-engineered the audio files in Pro Tools and loaded them into the Wwise Interactive Music system.

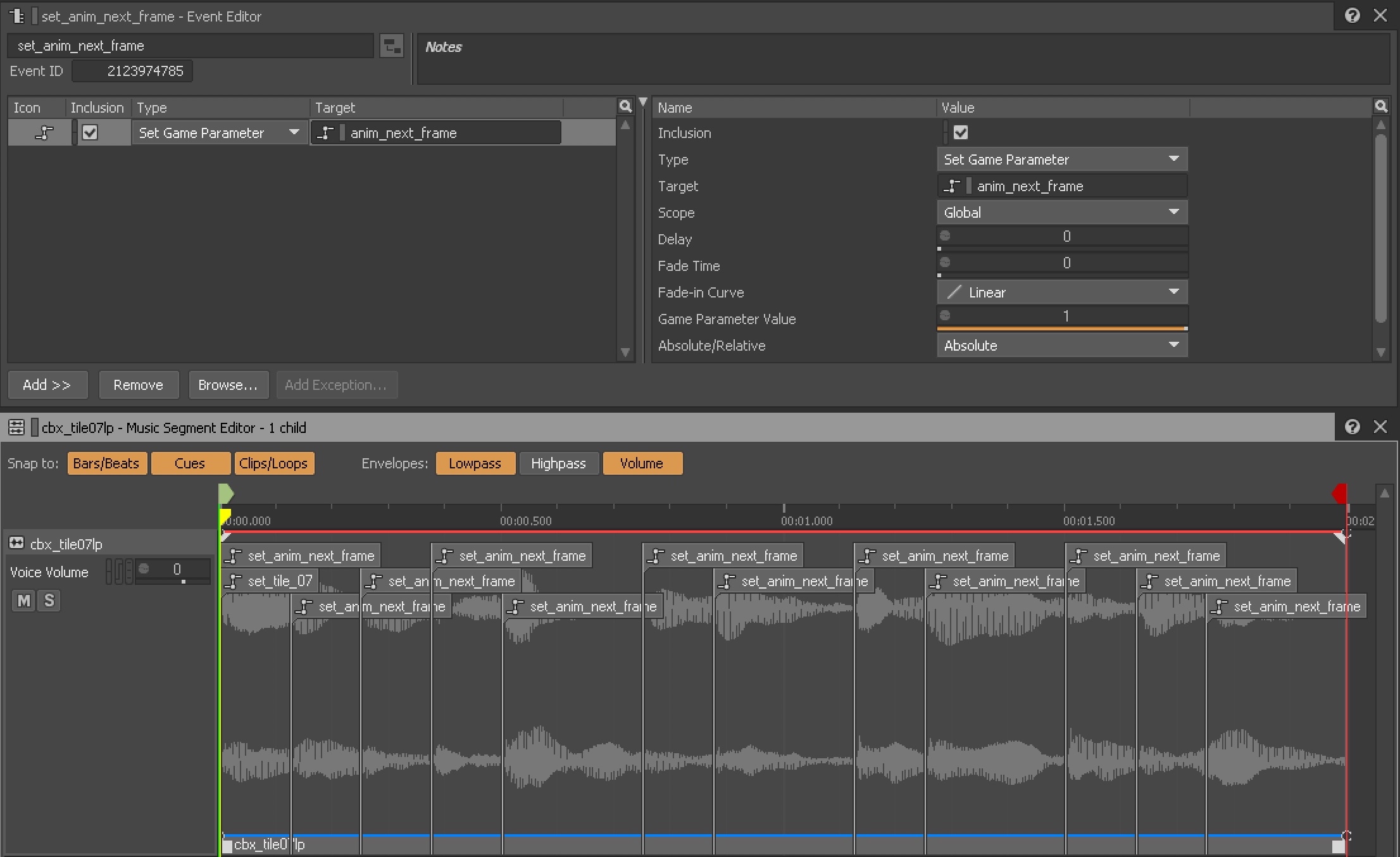

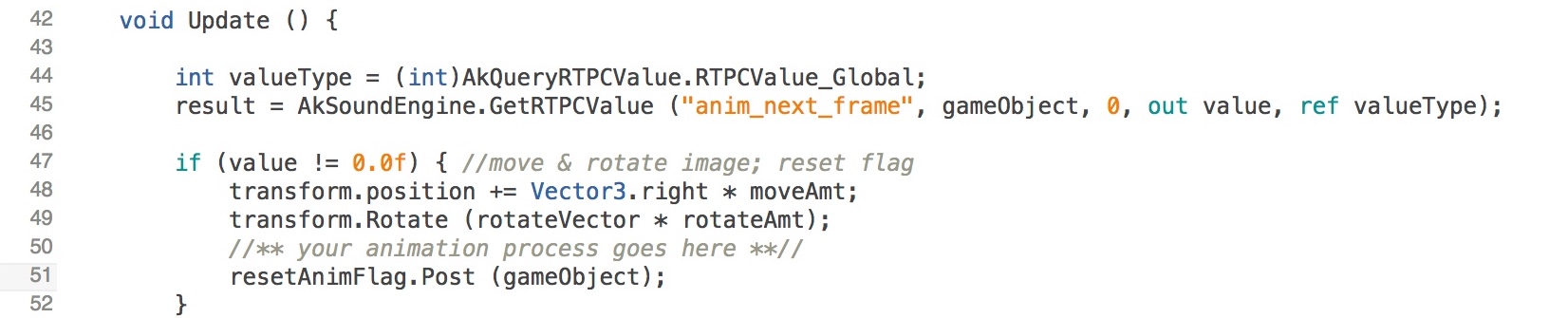

I then added multiple music cues at various points in the Music Segments, placed according to the rhythm of each phrase; each cue triggers the "set_anim_next_frame" Event. The Event simply sets the "anim_next_frame" RTPC to 1. In Unity, the RTPC is read in the Update loop (once per frame); when it changes to 1, the next frame of animation is triggered and the RTPC flag is reset to 0.
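A minimal sketch of that polling pattern, written in Python rather than Unity's C#. `RtpcStore` is a hypothetical stand-in for the sound engine's RTPC values (in the real project, Wwise holds the value and the Unity script reads and resets it through the Wwise integration), and `Sprite.next_frame` mimics the demo's "move right and rotate" step with arbitrary step sizes.

```python
from dataclasses import dataclass
from typing import Dict


class RtpcStore:
    """Hypothetical stand-in for the sound engine's global RTPC values.

    In the demo, a music cue fires the "set_anim_next_frame" Event, and
    that Event sets the "anim_next_frame" RTPC to 1.
    """

    def __init__(self) -> None:
        self._values: Dict[str, float] = {}

    def set(self, name: str, value: float) -> None:
        self._values[name] = value

    def get(self, name: str) -> float:
        return self._values.get(name, 0.0)


@dataclass
class Sprite:
    x: float = 0.0
    angle_deg: float = 0.0
    frames_advanced: int = 0

    def next_frame(self, dx: float = 10.0, dtheta: float = 15.0) -> None:
        # One "animation frame": move right and rotate (arbitrary step sizes).
        self.x += dx
        self.angle_deg = (self.angle_deg + dtheta) % 360.0
        self.frames_advanced += 1


def update(rtpcs: RtpcStore, sprite: Sprite) -> None:
    """Runs once per rendered frame, like a Unity MonoBehaviour's Update()."""
    if rtpcs.get("anim_next_frame") >= 1.0:
        sprite.next_frame()
        rtpcs.set("anim_next_frame", 0.0)  # reset the flag for the next cue


rtpcs = RtpcStore()
sprite = Sprite()
update(rtpcs, sprite)               # no cue yet: nothing happens
rtpcs.set("anim_next_frame", 1.0)   # simulates a music cue firing its Event
update(rtpcs, sprite)               # advances exactly one frame
update(rtpcs, sprite)               # flag was reset: no extra frame
```

One consequence of polling a flag once per frame: if two cues land within the same rendered frame (about 16.7 ms at 60 fps), the second one sets a flag that is already 1, so only one animation frame advances. For cues spaced on a musical grid at typical tempos this is unlikely to matter, but very dense cue patterns might call for a different signaling approach.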

And that's basically all there is to it!

NOTE: Obviously, I am not an animator. Each "animation" frame is just a "move right and rotate" command on a flat image. The arcane graphic alchemy required to create interactive 3D sprites is beyond my purview -- but one imagines a talented animator could use this technique in conjunction with a composer to produce "picture sync to music" features (rather than "music sync to picture", like every movie you've ever seen).

Combined with keyframes and other techniques, music cues could enable a variety of animation effects:

- Your character does a complex victory dance in sync with whatever level music is playing.

- The ninja fight moves match the tempo of the music, even when the tempo changes.

- Your art piece is generated by an interactive music score.

- The emotional context of a scene transitions when the accompanying music changes tone.

- The path through the maze changes every twelve bars.

- Use different music cue types to control left/right arms, legs, and other bones individually.

- Adaptive tap dancing, for a chorus line of kangaroos and penguins.

- Cartoon footsteps and pratfalls controlled by Carl Stalling-esque musical motifs.

- .insert.your.concept.here.
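The idea of using different cue types for individual limbs could be wired up with one flag per body part. The sketch below assumes hypothetical RTPC names (`anim_left_arm`, `anim_right_arm`, `anim_legs`), each set to 1 by its own Event fired from its own kind of music cue, plus a toy `Rig` that just counts pose changes per part. A plain dict stands in for the sound engine's RTPC values.

```python
from typing import Callable, Dict


class Rig:
    """Toy rig that counts how many poses each body part has stepped through."""

    def __init__(self) -> None:
        self.pose_index: Dict[str, int] = {"left_arm": 0, "right_arm": 0, "legs": 0}

    def advance(self, part: str) -> None:
        self.pose_index[part] += 1


def make_handlers(rig: Rig) -> Dict[str, Callable[[], None]]:
    # Hypothetical RTPC names; each would be set to 1 by its own Event,
    # fired from its own kind of music cue.
    return {
        "anim_left_arm": lambda: rig.advance("left_arm"),
        "anim_right_arm": lambda: rig.advance("right_arm"),
        "anim_legs": lambda: rig.advance("legs"),
    }


def update(flags: Dict[str, float], handlers: Dict[str, Callable[[], None]]) -> None:
    """Once per frame: run the handler for every raised flag, then clear it."""
    for rtpc_name, handler in handlers.items():
        if flags.get(rtpc_name, 0.0) >= 1.0:
            handler()
            flags[rtpc_name] = 0.0


rig = Rig()
handlers = make_handlers(rig)
flags = {"anim_left_arm": 1.0, "anim_legs": 1.0}  # two cue types fired this frame
update(flags, handlers)                           # left arm and legs each step once
```

Keeping the mapping in a table means adding a new body part is one more entry, not a new branch in the update loop.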

For me, the most interesting part of this technique is that the composer is in charge of the rhythm of the animation. The graphics would be designed to move from one keyframe to the next (as is common with interactive characters), but the timing would be set by the music, not the other way around.
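To illustrate the music setting the timing, here is a sketch of a cue-driven keyframe player under stated assumptions: each keyframe is a single number (say, a joint angle), and each cue starts a short, fixed-length linear transition (`tween_ms`, a hypothetical value) toward the next keyframe. The class and its names are illustrative only. Note that the code has no notion of tempo; if the music speeds up, cues simply arrive sooner and the animation follows.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CueDrivenKeyframes:
    keyframes: List[float]           # one value per keyframe, e.g. a joint angle
    tween_ms: float = 80.0           # hypothetical fixed transition length
    index: int = 0                   # keyframe currently targeted
    cue_time_ms: Optional[float] = None
    start_value: float = 0.0         # pose at the moment the last cue arrived

    def pose(self, now_ms: float) -> float:
        """Current value, moving linearly toward the targeted keyframe."""
        target = self.keyframes[self.index]
        if self.cue_time_ms is None:
            return target  # no cue yet: rest on the first keyframe
        t = min(1.0, max(0.0, (now_ms - self.cue_time_ms) / self.tween_ms))
        return self.start_value + (target - self.start_value) * t

    def on_cue(self, now_ms: float) -> None:
        """Called when a music cue arrives: the music decides when to move on."""
        self.start_value = self.pose(now_ms)  # avoid a jump if a tween is unfinished
        self.index = (self.index + 1) % len(self.keyframes)
        self.cue_time_ms = now_ms


kf = CueDrivenKeyframes(keyframes=[0.0, 90.0, 45.0])
kf.on_cue(1000.0)   # cue at 1000 ms: start moving toward 90
kf.on_cue(1500.0)   # next cue: move toward 45
kf.on_cue(1520.0)   # a faster passage: cue arrives mid-transition
```

Starting each transition from the current pose, rather than from the previous keyframe, keeps the motion continuous when cues arrive faster than `tween_ms`.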

The technique does require the composer to enter music cues in the Music Segment(s) by hand, rather than relying on a DSP algorithm to detect beats, tempo, etc. This could become tedious for long, complex pieces, but cues can be copied and pasted, and the segment's snap-to grid makes it easy to place them at rhythmically appropriate points.
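The snap-to grid does this arithmetic for you in the Wwise editor, but the relationship is simple enough to sketch. Assuming a single fixed tempo and time signature, one grid step lasts 60000 / BPM / steps-per-beat milliseconds, and copy-pasting one bar's rhythm across several bars just offsets it by whole bars. The function below is hypothetical, purely for illustration, and not part of any Wwise tool.

```python
from typing import List, Sequence


def cue_positions_ms(bpm: float, bars: int, steps_per_beat: int,
                     beats_per_bar: int, pattern: Sequence[int]) -> List[float]:
    """Grid-snapped cue times for one bar's rhythm, repeated over several bars.

    `pattern` lists grid steps within a bar (0 = downbeat). Assumes one fixed
    tempo and time signature for the whole segment.
    """
    step_ms = 60_000.0 / bpm / steps_per_beat
    steps_per_bar = steps_per_beat * beats_per_bar
    positions: List[float] = []
    for bar in range(bars):
        for step in pattern:
            if not 0 <= step < steps_per_bar:
                raise ValueError(f"step {step} is outside a {steps_per_bar}-step bar")
            positions.append((bar * steps_per_bar + step) * step_ms)
    return positions


# 120 BPM, sixteenth-note grid (4 steps per beat), 4/4, cues on steps 0, 3 and 6
print(cue_positions_ms(bpm=120, bars=2, steps_per_beat=4, beats_per_bar=4, pattern=[0, 3, 6]))
# → [0.0, 375.0, 750.0, 2000.0, 2375.0, 2750.0]
```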

To quote Brian Schmidt, "Art is not a batch process," so a certain amount of obsessive-compulsive behavior when setting cues might come in handy (as it often does for a variety of audio tasks). But the reward for this kind of detailed tagging would be animation sequences that stay locked to the music, even when the music changes: no re-timing, re-editing, or re-recording necessary.

For more detail, or if you'd like to incorporate this technique into your next game or interactive music experience, the Unity/Wwise projects, plus a video of the app in action, are available at:

www.twittering.com/demo/cbx2

- pdx
