Overview

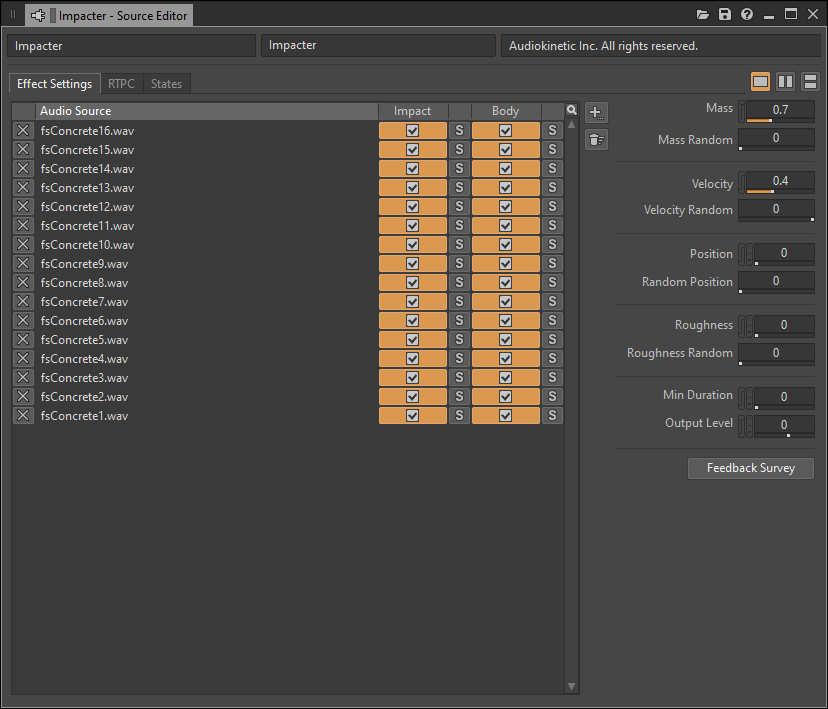

Impacter is a new source plug-in inspired by the spirit of the original SoundSeed Impact plug-in. It lets designers load "impact"-type sound files into the Wwise authoring application, analyze them, and save them as synthesis models. The runtime plug-in can then reconstruct the original sound or, more interestingly, generate sound variations through an intuitive method based on physical parameters and cross synthesis.

The Mass, Velocity, and Position parameters model, respectively, the size of the struck object producing the sound, the force of the impact, and the acoustic response of the location where the object is struck. Each of these parameters can also be randomized.

Cross synthesis works by loading several sound files, each of which the plug-in splits into two components, the "Impact" and the "Body" (see below), and then recombining those components across different sounds. The Impact or the Body of each file in the sound list can be individually included or excluded in order to generate specific combinations.

Although the workflow is similar to that of the previous Impact plug-in, several substantial improvements have been made. The plug-in no longer requires an external analysis tool, because Wwise handles the audio analysis directly in the background. Cross-synthesis combinations were possible in the previous Impact plug-in, but they carried a risk of unstable output and large gain discrepancies. By contrast, the new Impacter plug-in remains stable for any cross-synthesis combination. The user interface has also been redesigned to make it easier to work with groups of sounds, to the point that the random playlist can in some cases be used like a more complex Random Container. Finally, the parameters have been carefully designed to be more intuitive and more physically meaningful for the sounds they process.

Motivations

Impacter grew out of research into creative transformation methods applied to compressed sounds. Designing a general, universal audio representation suited to manipulation turned out to be quite difficult, because the pursuit of greater flexibility often proved incompatible with rich and interesting transformations.

Focusing on one specific family of sounds, on the other hand, made far more expressive manipulations possible. Impact sounds were a natural first choice, because they can be manipulated through intuitive parameters that correspond to physical properties. As a complement to our work on spatial audio, which aims to give sound designers creative control over the behavior of acoustic physics (sound propagation), we imagined a synthesizer that would allow similar work on the sound produced by physical events such as collisions.

We hope to open up room for innovation by letting sound designers work with existing sound material rather than synthesizing it from scratch. That way, they can apply game-physics-style transformations to their favorite foley recordings! We also took into account that most of the best sound and sample libraries provide impacts in groups rather than as single sounds, so it seemed important to develop a workflow for manipulating groups of footsteps, bullet impacts, collisions, and so on. Cross synthesis is a sensible and efficient tool for letting sound designers work with sets of impact sounds and explore the possible variations between them.

Definitions

Before exploring Impacter in more detail, it is important to establish a few definitions and concepts related to the algorithms underlying the plug-in:

A source-filter model is a sound synthesis method based on passing an excitation source signal through a filter. Voice or speech is commonly modeled this way: a glottal excitation (air passing through the vocal cords) is shaped into vowel sounds by a filter (the vocal tract). Some classic analysis methods, such as linear predictive coding (LPC), decompose an input signal into a filter and a residual excitation signal.
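To make the LPC decomposition concrete, here is a minimal pure-Python sketch of the textbook autocorrelation method with a Levinson-Durbin recursion. It is an illustration of the general technique only, not Impacter's analysis code; the function names and the test signal are assumptions chosen for the example.

```python
import math

def autocorrelation(x, max_lag):
    """r[lag] = sum over n of x[n] * x[n - lag], for lag = 0..max_lag."""
    return [sum(x[n] * x[n - lag] for n in range(lag, len(x)))
            for lag in range(max_lag + 1)]

def lpc(x, order):
    """Levinson-Durbin recursion. Returns [1, a1, ..., ap], the coefficients
    of the inverse (whitening) filter A(z) = 1 + a1 z^-1 + ... + ap z^-p."""
    r = autocorrelation(x, order)
    a = [1.0] + [0.0] * order
    err = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / err                      # reflection coefficient
        prev = a[:]
        for j in range(1, i):
            a[j] = prev[j] + k * prev[i - j]
        a[i] = k
        err *= 1.0 - k * k                  # remaining prediction error
    return a

def residual(x, a):
    """Excitation signal: run x through the inverse filter A(z)."""
    return [sum(a[k] * x[n - k] for k in range(len(a)) if n - k >= 0)
            for n in range(len(x))]

def synthesize(e, a):
    """Run the excitation back through the all-pole filter 1 / A(z)."""
    y = []
    for n in range(len(e)):
        y.append(e[n] - sum(a[k] * y[n - k]
                            for k in range(1, len(a)) if n - k >= 0))
    return y

# Hypothetical test signal: a single decaying resonance.
signal = [math.exp(-n / 50.0) * math.sin(2 * math.pi * 0.1 * n)
          for n in range(200)]
coeffs = lpc(signal, 2)
excitation = residual(signal, coeffs)
rebuilt = synthesize(excitation, coeffs)
```

Passing the residual back through the all-pole filter recovers the input up to floating-point error, which is the property that lets a source-filter model both reconstruct a sound and swap its source or filter for another.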

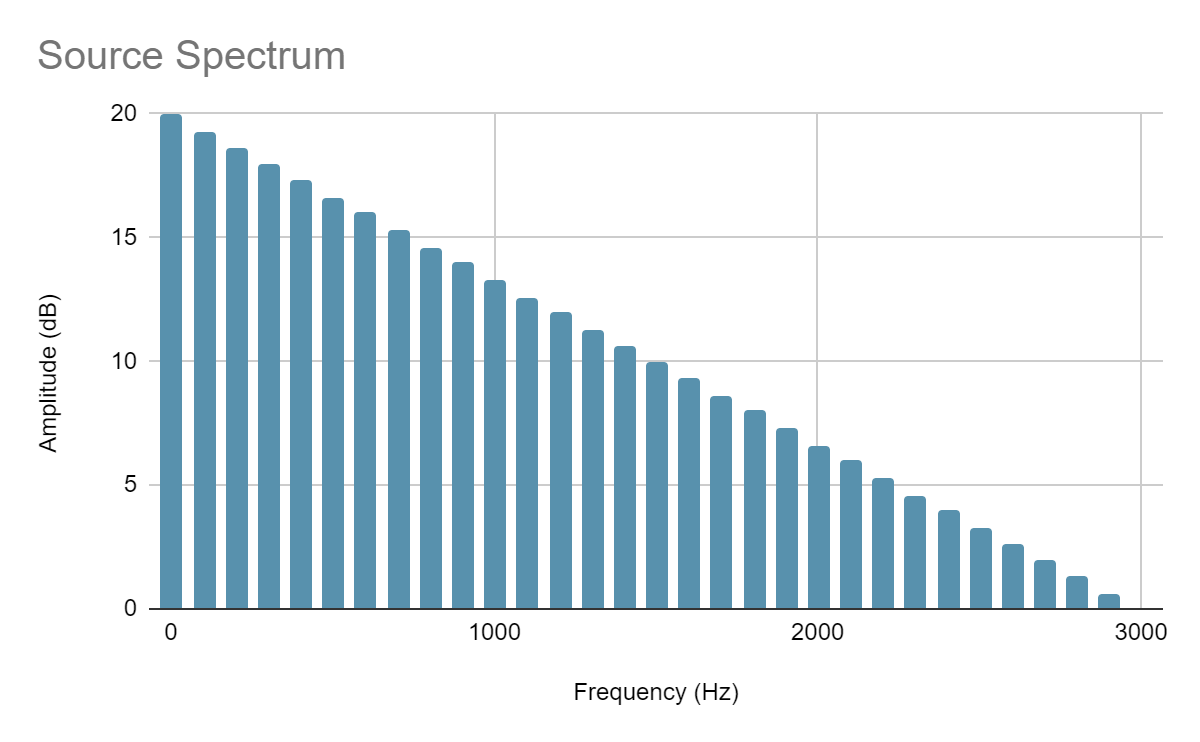

Image 1. Example of the spectrum of a sound source and of the filter function that shapes it. The source spectrum corresponds to the glottal excitation, and the shape of the filter function corresponds to the shape of the vocal tract.

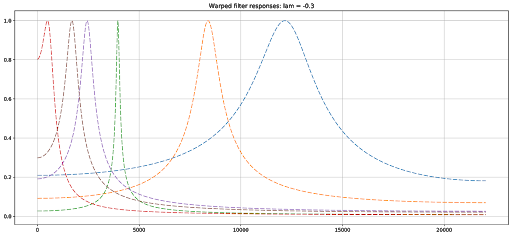

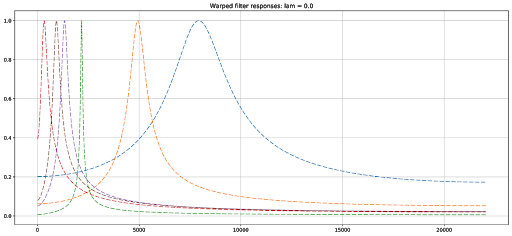

Frequency warping is a non-uniform scaling applied in the spectral domain. It takes frequencies on a linear scale and redistributes them along another scale, often compressing the lower part of the spectrum toward 0 and stretching the upper part to fill the remaining space, or vice versa. Frequency warping can be applied directly to the frequencies of a set of sinusoids, or to the coefficients of a filter that corresponds to a curve in the frequency domain.
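One common way to realize such a warp is the phase response of a first-order allpass filter. The sketch below applies that map to a list of sinusoid frequencies. The choice of the allpass map, the name `warp_frequency`, and the `warp` coefficient are assumptions made for illustration; the warping function Impacter actually uses is not specified here.

```python
import math

def warp_frequency(freq_hz, warp, sample_rate):
    """Map freq_hz through the phase response of a first-order allpass.

    warp must lie strictly between -1 and 1. A value of 0 leaves the
    frequency unchanged, negative values compress the low end toward 0,
    and positive values stretch it upward. 0 Hz and Nyquist stay fixed.
    """
    omega = 2.0 * math.pi * freq_hz / sample_rate
    warped = omega + 2.0 * math.atan2(warp * math.sin(omega),
                                      1.0 - warp * math.cos(omega))
    return warped * sample_rate / (2.0 * math.pi)

# Hypothetical partial frequencies of an analyzed impact, in Hz.
partials = [200.0, 800.0, 3200.0, 12000.0]
lowered = [warp_frequency(f, -0.3, 48000.0) for f in partials]
```

Because the map is monotonic and pins both ends of the spectrum, the partials keep their order while their spacing changes, which is what distinguishes warping from a plain pitch shift.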

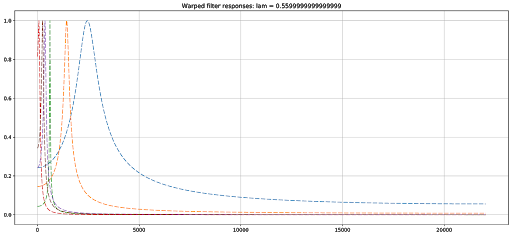

Image 2. Typical example of the different frequency responses of a filter used in Impacter and modified by frequency warping.

Put simply, the activation of different acoustic modes in a physical material corresponds to the presence of energy in different regions of the spectrum. For a synthesis algorithm like Impacter, this affects the position and gain of each filter band and each sinusoid. By analogy with the physical domain, the principle of acoustic modes rests on the fact that an excited material (one that is struck) exhibits particular modes depending on where on its surface the impact occurs. An impact at the center of a membrane, for example, activates a minimum of the available acoustic modes, whereas an impact near the edge activates more of them. When it comes to controlling this acoustic behavior through parameters, as we will see below, it is often sufficient to model the distance from the center of a surface. In a simplified model, the acoustic behavior is approximately the same anywhere on the membrane as long as the distance from the center stays the same. This point is particularly important for understanding how the Position parameter works in Impacter.
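The dependence on distance from the center can be illustrated with a toy model. On an ideal circular membrane, every mode whose angular order m is greater than 0 has a node at the center, and close to the center its amplitude grows roughly like the m-th power of the radius. The sketch below uses that power law as a stand-in for the true mode shapes. It is an assumption made for illustration, not Impacter's actual mapping, and the names and threshold are hypothetical.

```python
def mode_gains(position, angular_orders):
    """Toy excitation gain per mode for a strike at `position`.

    position: 0.0 at the center of the surface, 1.0 at the edge.
    angular_orders: the angular order m of each mode (0 = axisymmetric).
    Gain is position ** m, so only m = 0 modes sound at the center.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    return [position ** m for m in angular_orders]

def active_mode_count(position, angular_orders, threshold=0.05):
    """Number of modes whose toy gain reaches the (arbitrary) threshold."""
    return sum(1 for g in mode_gains(position, angular_orders)
               if g >= threshold)

# Hypothetical set of eight modes, described only by angular order.
orders = [0, 1, 2, 3, 0, 1, 2, 4]
counts = [active_mode_count(p, orders) for p in (0.0, 0.25, 0.5, 0.75, 1.0)]
```

The gain depends only on the distance from the center, never on the angle, which mirrors the simplification described above.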

There is also a correlation between the size of an object and the number of acoustic modes it can support. A larger object has more acoustic modes, with more of them at low frequencies, which can produce greater spectral density when the modes are excited at different locations. As a rule, the perception of an increase in object size comes with a drop in pitch. This is due to the appearance of new modes at low frequencies, which tend to change the fundamental frequency of the impact sound. Research [1] has shown that frequency warping gives a useful approximation of a change in object size, reproducing the increasing density of modes in the low end of the spectrum while keeping a fixed number of modes generated by the synthesis algorithm. This is very useful for modeling the effect of a size change without asking the algorithm to do more work to synthesize more modes.

Synthesis and analysis

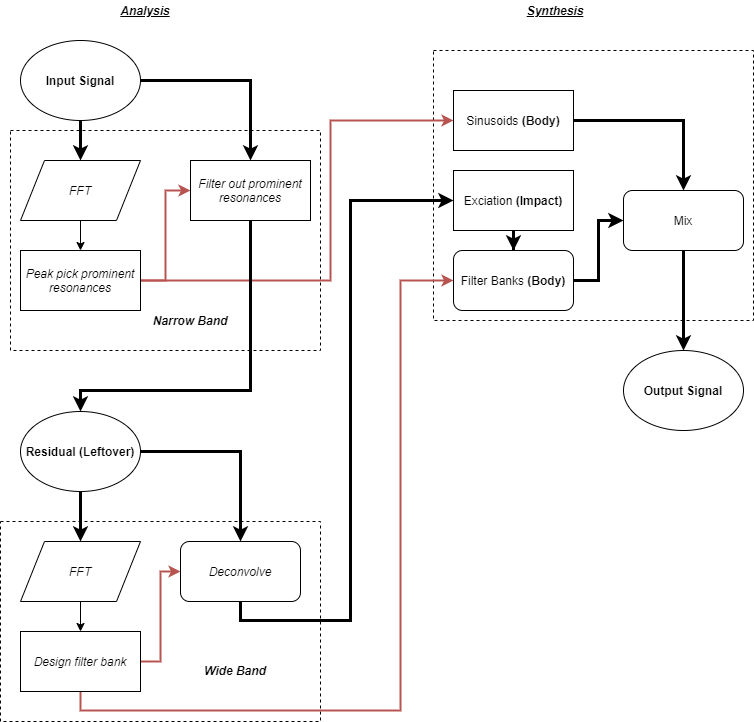

Impacter's synthesis algorithm combines a sinusoidal model with a source-filter model. In other words, any original input sound is reconstructed at runtime from a combination of sinusoids and an excitation signal passed through a filter bank. Conceptually, we associate the excitation signal of the source-filter component with Impacter's "Impact", while the filter bank of the source-filter model and the sinusoids are associated with the "Body".

The analysis that Impacter runs in the background is a two-stage algorithm. The first stage extracts the frequency and envelope information for the sinusoidal synthesis model. The second stage extracts a filter bank and a residual excitation signal, which are used in the source-filter synthesis model.

The first stage, called "narrow band" analysis, performs peak picking on the input signal to isolate the most resonant frequencies of the impact. These resonant peaks are then filtered out of the original sound to produce a residual, and their envelope and frequency information is stored as parameters for the sinusoidal synthesis model. In the second stage, the residual left by the narrow band analysis goes through a "wide band" analysis, which extracts a filter bank for the source-filter model. The final excitation signal is produced by deconvolving the residual with the filter bank.
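The peak-picking step of the narrow band stage can be sketched as follows. This is a simplified illustration that uses a naive DFT and a plain local-maximum test. The function names, the synthetic input, and the selection rule are assumptions, and the real analysis also has to estimate envelopes and remove the peaks from the signal.

```python
import cmath
import math

def magnitude_spectrum(x):
    """Magnitudes of DFT bins 0..N/2, computed naively for clarity."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(n // 2 + 1)]

def pick_peaks(mags, count):
    """Bin indices of the `count` strongest local maxima, in ascending order."""
    peaks = [k for k in range(1, len(mags) - 1)
             if mags[k] > mags[k - 1] and mags[k] >= mags[k + 1]]
    peaks.sort(key=lambda k: mags[k], reverse=True)
    return sorted(peaks[:count])

# Hypothetical input: two decaying resonances centered on bins 20 and 45.
N = 256
impact = [math.exp(-t / 100.0) * (math.sin(2 * math.pi * 20 * t / N)
                                  + 0.5 * math.sin(2 * math.pi * 45 * t / N))
          for t in range(N)]
resonant_bins = pick_peaks(magnitude_spectrum(impact), 2)
```

Each selected bin would then become one sinusoid in the Body, with whatever remains of the signal passed on to the wide band stage.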

Image 3. Diagram of the analysis and synthesis system used by Impacter.

Resynthesis

All the work done up front by the analysis algorithm inside Wwise greatly reduces the work the synthesis algorithm has to do at runtime: mixing a few sinusoids with the excitation signal passed through a biquad filter avoids the need for an FFT! Rest assured, too, that this system guarantees a faithful reconstruction of the original signal, and no part of the input signal is lost.
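The runtime cost described above can be sketched in a few lines: a biquad is five multiply-adds per sample, and each sinusoid is one oscillator with an envelope. The example below assumes a parallel bank of band-pass biquads (coefficients from the widely used Audio EQ Cookbook formulas) and exponentially decaying partials. The filter topology, the function names, and all parameter values are assumptions for illustration, not Impacter's actual design.

```python
import math
import random

def bandpass_biquad(freq_hz, q, sample_rate):
    """Band-pass biquad (0 dB peak gain), normalized so that a0 = 1.
    Returns ((b0, b1, b2), (a1, a2))."""
    w0 = 2.0 * math.pi * freq_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    return ((alpha / a0, 0.0, -alpha / a0),
            (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0))

def run_biquad(x, b, a):
    """Direct form I: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for s in x:
        out = b[0] * s + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        x2, x1 = x1, s
        y2, y1 = y1, out
        y.append(out)
    return y

def decaying_sine(freq_hz, amp, decay_s, n, sample_rate):
    """One Body partial: a sinusoid with an exponential envelope."""
    return [amp * math.exp(-t / (decay_s * sample_rate))
            * math.sin(2.0 * math.pi * freq_hz * t / sample_rate)
            for t in range(n)]

def resynthesize(excitation, filters, partials, sample_rate):
    """Sum the filtered excitation (Impact) and the sinusoids (Body)."""
    out = [0.0] * len(excitation)
    for b, a in filters:
        for i, s in enumerate(run_biquad(excitation, b, a)):
            out[i] += s
    for freq, amp, decay in partials:
        for i, s in enumerate(decaying_sine(freq, amp, decay, len(out), sample_rate)):
            out[i] += s
    return out

SR = 48000.0
rng = random.Random(0)
# Hypothetical excitation: a short noise burst followed by silence.
burst = [rng.uniform(-1.0, 1.0) * math.exp(-t / 64.0) for t in range(512)] + [0.0] * 1536
bank = [bandpass_biquad(f, 5.0, SR) for f in (500.0, 1800.0, 6000.0)]
output = resynthesize(burst, bank, [(220.0, 0.5, 0.05), (610.0, 0.3, 0.03)], SR)
```

Everything here runs in the time domain, sample by sample, which is why no FFT is needed at runtime.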

Manipulations

This hybrid synthesis model offers many ways to manipulate timbre during synthesis. The manipulations can be applied together or individually.

1. The filter bank coefficients and the frequency of each sinusoid can be transformed by frequency warping to change the timbre and perceived pitch in a non-uniform way.

2. The excitation signal can be resampled (raising or lowering its pitch) independently of the spectral profile of the filter bank and of the positions of the sinusoids.

3. The envelope of each sinusoid can be stretched or shortened to match the duration of the resampled excitation signal, or to decouple the resonances from the impact sound even further.

4. Specific sinusoids and filter bands can be switched on or off, which allows detailed, intuitive shaping of the sound, or can be used to model how acoustic modes are excited depending on where the impact occurs.

5. FM (frequency modulation) synthesis can be applied to each sinusoid. Research has shown that FM synthesis can be used to control the perceived roughness of an impact sound. [2]
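As an illustration of the last manipulation in the list, the sketch below applies simple sinusoidal FM to a single partial. The modulation index acts as a hypothetical roughness control: at 0 the partial is a pure sinusoid, and larger values spread energy into sidebands around the carrier. The function name and parameter values are assumptions, and the way Impacter maps its own parameter onto FM is not specified here.

```python
import math

def fm_sinusoid(carrier_hz, mod_hz, index, num_samples, sample_rate):
    """sin(2*pi*fc*t + index * sin(2*pi*fm*t)) sampled at sample_rate."""
    out = []
    for n in range(num_samples):
        t = n / sample_rate
        phase = (2.0 * math.pi * carrier_hz * t
                 + index * math.sin(2.0 * math.pi * mod_hz * t))
        out.append(math.sin(phase))
    return out

# Hypothetical partial at 440 Hz, modulated at 70 Hz.
smooth = fm_sinusoid(440.0, 70.0, 0.0, 1024, 48000.0)
rough = fm_sinusoid(440.0, 70.0, 2.0, 1024, 48000.0)
```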

Audio examples

Manipulations 1, 2, and 3 are all controlled by Impacter's Mass parameter.

Mass:

Manipulation 4 is used in several ways to provide control over the Velocity and Position parameters.

Velocity:

For the Position parameter, a value between 0 and 1 can be chosen to simulate the distance from the center of the surface being struck.

Position:

Finally, manipulation 5 is the basis for setting the roughness of the sound (the Roughness parameter).

Roughness:

Cross synthesis

Impacter can load and synthesize several source audio files, which makes it possible to perform cross synthesis using different components of each source. This is enabled by the hybrid sinusoidal and source-filter model. Although there are many ways to combine the components of the synthesis model, the most convincing cross-synthesis result comes from combining the excitation signal of one sound (the "Impact") with the filter bank and sinusoids of another (the "Body"). Impacter's interface therefore lets you select an Impact and a Body separately.

Caution

Be careful: combining sounds that are too dissimilar can often produce a sinusoidal resonance that feels detached from the impact sound. This happens either because the peaks found by the narrow band analysis correlate too little with the spectrum of the impact, or because the impact sound lacks the initial energy that the filter bank emphasizes. Cross synthesis gives the best results when swapping sounds from the same family. A set of n footstep sounds from one recording session, for example, can quickly be extended to n² micro-variations, even before any parameter is changed.
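The growth from n sounds to n² variations is simply the number of (Impact, Body) pairs. A minimal sketch, in which the file names and the include and exclude arguments are hypothetical and only stand in for the per-file toggles in the sound list:

```python
from itertools import product

def cross_synthesis_pairs(sounds, use_impact=None, use_body=None):
    """All (impact_source, body_source) pairs.

    use_impact / use_body: optional sets naming the sounds whose Impact
    or Body is enabled. None means every sound is enabled.
    """
    impacts = [s for s in sounds if use_impact is None or s in use_impact]
    bodies = [s for s in sounds if use_body is None or s in use_body]
    return list(product(impacts, bodies))

# Hypothetical footstep set from one recording session.
footsteps = ["step_01", "step_02", "step_03", "step_04"]
all_pairs = cross_synthesis_pairs(footsteps)
one_body = cross_synthesis_pairs(footsteps, use_body={"step_01"})
```

With four files, `all_pairs` holds 16 combinations, 4 of which pair a sound with itself and so reproduce the originals. Restricting the Body to a single file leaves 4.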

Conclusion and uses

Impacter was designed to offer new, experimental ways of designing sounds for game audio. Focusing on one specific class of sounds (impacts) allowed us to build a plug-in that can be used in many ways. For a group of sounds that needs several variations, the random permutation offered by cross synthesis, together with Impacter's internal parameter randomization, gives behavior comparable to a more complex Random Container. If the sound designer has access to RTPCs or Events tied to physics parameters in the game engine (collision, velocity, size), these can easily be connected to the physical parameters of the foley sounds for intuitive manipulation.

Although the Impacter plug-in is currently offered to our users as an experimental prototype, its architecture is reliable and stable. We are confident that Impacter will fit naturally into your creative workflow without errors or difficulty. At runtime it relies on a stable, lightweight synthesis algorithm that will not strain your memory or CPU budget.
