Introduction

HDR (High Dynamic Range) is a very effective Wwise feature for mixing and managing the dynamic range of your project's output. I've been lucky enough to use Wwise for most of my career in game audio, and I have gradually been diving into the world of HDR, more and more deeply in recent times. Although the feature has existed since at least Wwise 2013.1, I think there are still many ways to discover how useful it is and to customize how it is used on a project. I hope that after reading this article you will understand a little better how HDR works, and that you will come away with a few ideas about how to manage and implement it so that the games you work on can benefit from it.

Why use Wwise HDR?

You may be wondering why you should use Wwise HDR on your project when you already have other mixing tools at your disposal. The way HDR works, once the system is set up and its parameters are defined, the game can practically mix itself! It can also be far more transparent than traditional side-chaining and compression setups, which rely on signal detection and on attack and release times to compress the dynamic range. It also makes it easy to establish and manage relative mix relationships between sounds, instead of mixing in absolute terms, which can sometimes end up unintentionally additive.

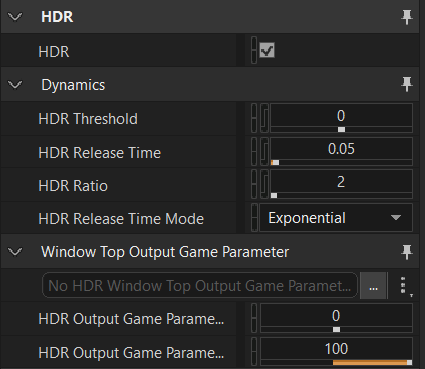

HDR Threshold and HDR Ratio

To determine what participates in the HDR system and how it is affected, Wwise provides two extremely important parameters: the HDR Threshold and the HDR Ratio. Both are set in the Master-Mixer Hierarchy, on the bus where the HDR system is enabled.

I recommend enabling the HDR system and setting these parameters in as few places as possible. On every project I've worked on, there was only one active HDR instance in the Master-Mixer Hierarchy. This makes the HDR system much easier to manage and reason about: as long as a sound is routed to that bus, or to any child of that HDR parent bus, it follows this single set of HDR rules with no complicated exceptions. Every project has its own needs, of course, but I've found it best to keep the behavior as predictable and simple as possible, which matters more and more as games (and their audio complexity) have kept growing in recent years.

HDR Threshold

This parameter sets the level above which an active sound starts compressing the dynamic range of your game's mix, or more precisely, starts attenuating the volume of the other sounds below it. Wwise uses the sound's final Voice Volume to decide whether it exceeds the threshold. This includes any downstream Voice Volume and Bus Volume adjustments, but not gain adjustments made with Make-Up Gain. I'll come back to the best ways to manage Voice Volume and Make-Up Gain later in this article.

HDR Ratio

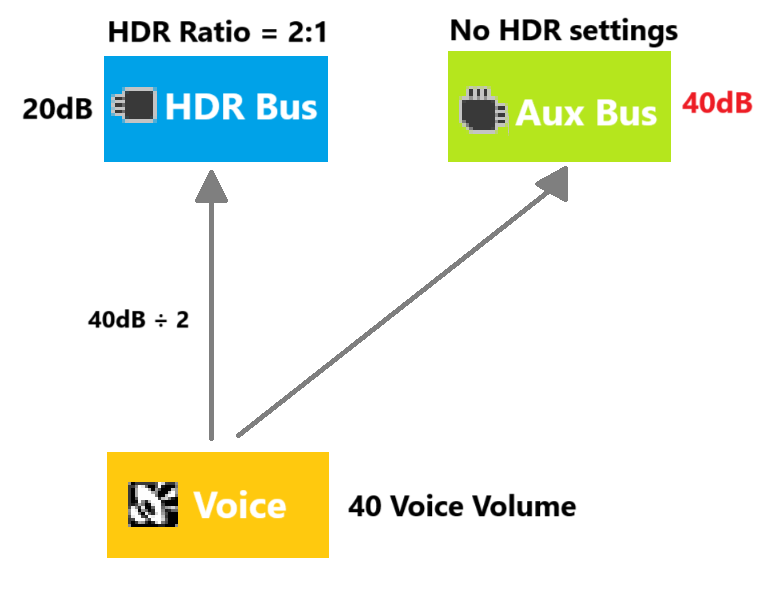

The ratio determines how much the sound's final output is reduced at the bus once it exceeds the HDR threshold. In that sense, the HDR system works like a traditional compressor. For example, if a sound has a final Voice Volume of 20 and your HDR Threshold is 0, the sound sits 20 dB above the HDR threshold. If the HDR ratio is set to 2:1, the sound will only come out 10 dB louder. So what happens to the remaining 10 dB? This is where HDR Envelopes come into play, along with the relative relationship between the other sounds in your game. I'll come back to this later.
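
To make the arithmetic concrete, here is a minimal Python sketch of the level a sound ends up at when it plays alone on the HDR bus. It is a simplified model written for this explanation, not Audiokinetic's implementation: it treats every value as a plain dB number, ignores HDR envelopes, and the function names are hypothetical.

```python
def hdr_output_level(voice_volume: float, threshold: float, ratio: float) -> float:
    """Level (dB) of a sound heard alone on an HDR bus, before Make-Up Gain."""
    if voice_volume <= threshold:
        # At or below the threshold, HDR leaves the sound's level untouched.
        return voice_volume
    # Above the threshold, the excess is divided by the ratio, like a compressor.
    return threshold + (voice_volume - threshold) / ratio


def hdr_excess(voice_volume: float, threshold: float, ratio: float) -> float:
    """dB taken off the sound itself: the part HDR turns into attenuation of other sounds."""
    return voice_volume - hdr_output_level(voice_volume, threshold, ratio)


print(hdr_output_level(20, 0, 2))  # 10.0: only 10 dB louder
print(hdr_excess(20, 0, 2))        # 10.0: the "remaining" 10 dB
```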

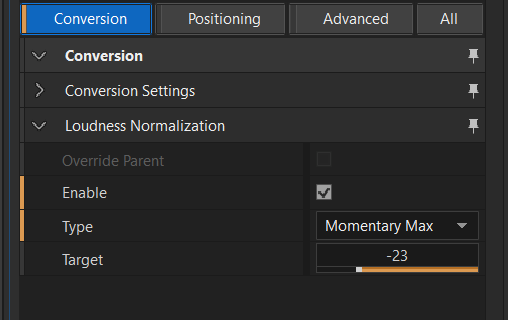

Using Voice Volume as an absolute loudness value

Wwise uses a sound's Voice Volume to determine how it behaves in the HDR system, so it is very tempting to dive into HDR with the idea that "loudness = importance". However, depending on how your assets are normalized (or not), two different sounds that both have a Voice Volume of 20 may not be equally loud. If you intend HDR to represent how loud a sound actually is, it's best to use Wwise's Loudness Normalization feature so that Voice Volume becomes an absolute representation of the sound's loudness rather than an arbitrary value. The Wwise default target is -23 LUFS, so you can easily enable normalization across the whole project so that all your content sits at -23 LUFS when its Voice Volume is set to 0.

Momentary Max normalization can be a good choice for many sounds, especially short ones or those with more transient content (explosions, weapon fire, magic spells, etc.). Integrated normalization is better suited to longer or more static sounds (projectile flight loops, ambient beds, emitters, etc.). From there, your HDR Threshold becomes an absolute value in decibels instead of an arbitrary number. For example, if your HDR Threshold is set to a Voice Volume of +5, any sound louder than -18 LUFS will have its gain reduced according to the HDR Ratio and will also attenuate other, quieter sounds. This approach is closer to how our ears perceive things in real life and helps create an intuitive mixing environment.
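
Assuming every asset goes through Loudness Normalization with the default -23 LUFS target, converting a Voice Volume to loudness is a simple addition. The hypothetical helper below only restates that mapping; it is not a Wwise function.

```python
DEFAULT_TARGET_LUFS = -23.0  # Wwise's default Loudness Normalization target


def voice_volume_to_lufs(voice_volume_db: float, target_lufs: float = DEFAULT_TARGET_LUFS) -> float:
    """Loudness of a normalized asset played at a given Voice Volume.

    Assumes normalization (Momentary Max or Integrated, whichever the asset uses)
    already brought the asset to `target_lufs` at a Voice Volume of 0.
    """
    return target_lufs + voice_volume_db


hdr_threshold = 5.0
print(voice_volume_to_lufs(hdr_threshold))  # -18.0: anything louder gets compressed
print(voice_volume_to_lufs(0.0))            # -23.0
```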

Rethinking Voice Volume

Although the previous way of using Wwise's HDR system is the quickest, it may not fit your game's needs. What if you rethought how Voice Volume is used in your project to push the limits of what HDR can do for your game's mix?

With Wwise HDR, you can treat Voice Volume as a way to express a sound's importance, which doesn't necessarily match its loudness. For example, one sound may need to be attenuated to make room for a more important one, but that attenuation won't happen if both sounds have the same absolute loudness. This is quite common in multiplayer games, where teammates fire weapons and use abilities that should be loud to match the impact of the action and the visuals, while an enemy may use the same ability or fire the same weapon. With the approach described in "Using Voice Volume as an absolute loudness value", you would have to either drastically lower the volume of your teammates' abilities and weapons, or raise the enemy versions even further to get the mix relationship you want. This creates large volume disparities between sounds and becomes harder and harder to maintain as the sound hierarchy grows. Moreover, depending on their listening environment, players may not be able to take full advantage of a mix with a wider dynamic range.

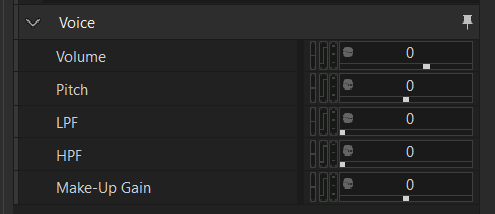

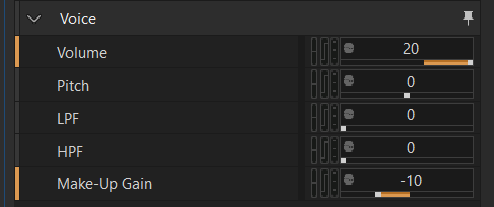

Using Make-Up Gain

Working this way, the Voice Volume you assign to a sound becomes an arbitrary "importance" value rather than a way to dictate its loudness or gain. If you go this route, it's best to use Make-Up Gain as the main tool for bringing a sound's loudness to where you want it. Make-Up Gain (like Loudness Normalization) does not affect a sound's Voice Volume, and therefore has no effect on how the sound behaves in the HDR system.

When you use Voice Volume this way, you will often set values above the HDR threshold. To keep the final output loudness the same as before, apply a compensating negative Make-Up Gain equal to the relative gain increase the sound ends up with once the HDR ratio is applied. For example, if your HDR threshold is 0, your HDR ratio is 2:1, and a sound is set to a Voice Volume of 20, its final output gain increases by 10 dB. To keep the gain where it was before you changed the Voice Volume, set the sound's Make-Up Gain to -10 dB.
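
The compensation can be computed rather than worked out by hand. This sketch reuses the same simplified single-sound model (no other sound moving the HDR window, no envelope); the function names are illustrative and not part of any Wwise API.

```python
def hdr_output_level(voice_volume, threshold, ratio):
    """Level (dB) of a sound heard alone on the HDR bus, before Make-Up Gain."""
    if voice_volume <= threshold:
        return voice_volume
    return threshold + (voice_volume - threshold) / ratio


def compensating_makeup_gain(new_voice_volume, old_voice_volume, threshold, ratio):
    """Make-Up Gain (dB) that cancels the change in heard level caused by a Voice Volume move."""
    before = hdr_output_level(old_voice_volume, threshold, ratio)
    after = hdr_output_level(new_voice_volume, threshold, ratio)
    return before - after


print(compensating_makeup_gain(20, 0, threshold=0, ratio=2))  # -10.0
print(compensating_makeup_gain(20, 0, threshold=0, ratio=4))  # -5.0
```

If the sound already has a Make-Up Gain, the result is an offset to add to the existing value rather than a replacement for it.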

A handy tip here: Wwise lets you make relative adjustments by typing "+" or "-" after the number you enter. For example, if a sound already has a Make-Up Gain of -8, instead of working out the new value in your head, you can simply type "10-" and the new value will be 10 dB lower than the previous one (here, -18).

Relative mixing

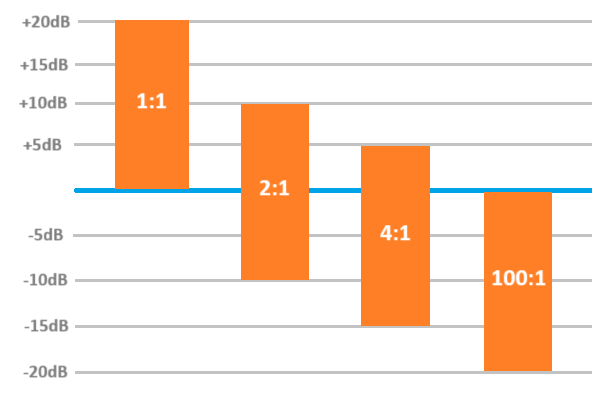

One of the most important things to keep in mind when setting Voice Volume values is how the HDR Ratio comes into play. The ratio determines how much gain reduction is applied to a sound whose Voice Volume exceeds the HDR threshold, and whatever gain reduction is applied directly affects how much that sound attenuates the others. The balance between the loudness increase and the attenuation applied to other sounds can be compared to an iceberg floating in the water: what sits above the surface is actually heard as louder, while what sits below the surface pushes the other sounds down.

In the example above, the sound has a Voice Volume of 20. With an HDR ratio of 1:1, no gain reduction is applied, so the sound is 20 dB louder. With a 2:1 ratio, the sound is only 10 dB louder, but it lowers other sounds by 10 dB. With a 4:1 ratio, it is only 5 dB louder and attenuates other sounds by 15 dB. With a 100:1 ratio (the maximum Wwise allows), the sound is essentially 0 dB louder and attenuates other sounds by 20 dB. When choosing the HDR ratio for the bus, pick one that makes the mixing process easier. For that reason, 2:1 is a good choice: it lets you take advantage of the attenuation behavior while keeping Make-Up Gain calculations easy to do on the fly.
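
The iceberg split for each ratio comes down to one line of arithmetic: of the dB above the threshold, 1/ratio is heard and the rest pushes other sounds down. A small sketch under the same simplified model, with a hypothetical function name:

```python
def iceberg(voice_volume, threshold, ratio):
    """Split the dB above the threshold into (heard louder, pushed onto other sounds)."""
    above = max(voice_volume - threshold, 0.0)
    louder = above / ratio
    ducking = above - louder
    return louder, ducking


for ratio in (1, 2, 4, 100):
    louder, ducking = iceberg(20, 0, ratio)
    print(f"{ratio}:1 -> louder by {louder:.1f} dB, others ducked by {ducking:.1f} dB")
```

At 100:1 the split is 0.2 dB heard and 19.8 dB of attenuation, which is the "essentially 0 dB louder" case.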

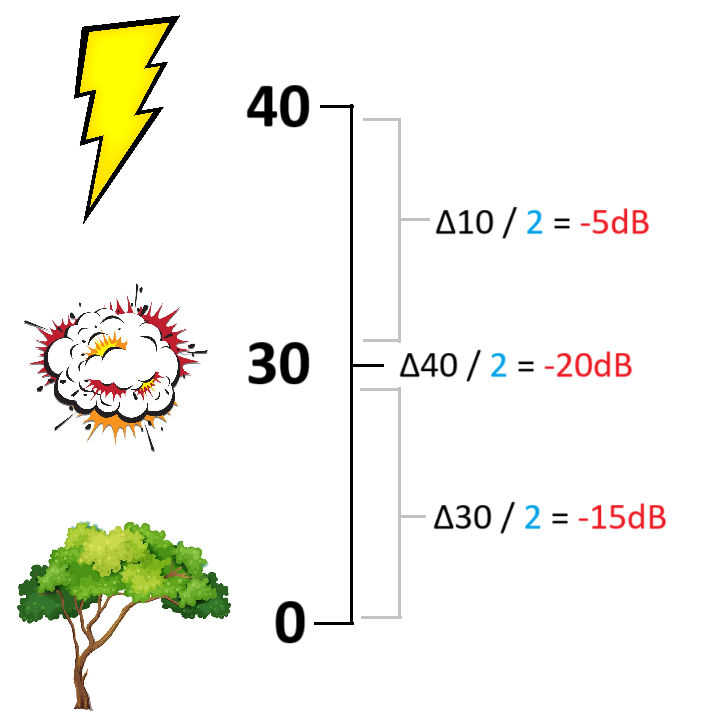

So what happens when several sounds play above the HDR threshold at the same time? When the HDR system decides how to attenuate other sounds, it looks at the relative difference in Voice Volume between sounds rather than at each sound's absolute Voice Volume. Suppose three sounds are playing at once: a lightning spell cast by the player (Voice Volume 40), an explosion from an enemy (Voice Volume 30), and an ambient bed (Voice Volume 0).

In the illustration above, the HDR ratio is 2:1 and the HDR threshold is 0. Since the player's lightning spell has a Voice Volume of 40, it only attenuates the enemy explosion by 5 dB, because the difference between their Voice Volumes is 10. The ambience, on the other hand, is attenuated by 20 dB, since the difference between it and the most important sound is 40. If the enemy explosion played on its own, it would attenuate the ambience by 15 dB, since their Voice Volume difference would be 30.
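
The numbers above are consistent with a simple rule: a sound is attenuated by its Voice Volume gap to the loudest active sound, scaled by (1 - 1/ratio). The sketch below encodes that rule. It is my reading of the worked example, not Audiokinetic's implementation, and it ignores HDR envelopes (every sound is assumed to be at the peak of its envelope).

```python
def ducking_db(voice_volume, loudest_voice_volume, threshold, ratio):
    """Attenuation (dB, positive) a sound receives from the loudest active sound.

    Sounds at or below the threshold are treated as sitting at the threshold.
    """
    top = max(loudest_voice_volume, threshold)
    own = max(voice_volume, threshold)
    return (top - own) * (1 - 1 / ratio)


sounds = {"player_lightning": 40, "enemy_explosion": 30, "ambience": 0}
loudest = max(sounds.values())
for name, voice_volume in sounds.items():
    print(name, ducking_db(voice_volume, loudest, threshold=0, ratio=2))
# player_lightning 0.0 / enemy_explosion 5.0 / ambience 20.0
```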

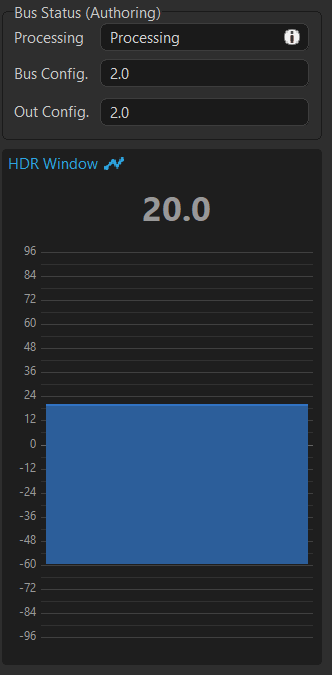

HDR Window

It's important to note that several sounds with high Voice Volume values will not produce additive attenuation. Sounds above the HDR Threshold move the HDR Window, described in more detail in the Understanding HDR documentation. A sound can never be attenuated beyond what the top of the HDR window defines, and that top essentially corresponds to the highest active Voice Volume at any given moment. This makes HDR a great alternative to more traditional attenuation methods based on Wwise Meters and RTPCs, which can be additive by nature depending on the complexity of the systems involved. Keep in mind that when the HDR window moves, the Volume Threshold also shifts up proportionally, which can momentarily change the point at which a quieter sound is sent to a Virtual Voice or cut.
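
To see why the window avoids stacking, compare the HDR rule with a naive stand-in for chained side-chain ducks, where each loud sound ducks independently and the reductions add up. Both functions are hypothetical simplifications with no envelopes and no attack or release; the additive one only caricatures Meter/RTPC ducking chains and does not reproduce any particular setup.

```python
def hdr_ducking(voice_volume, active_voice_volumes, threshold, ratio):
    """HDR-style: only the top of the window (the loudest active sound) matters."""
    top = max([threshold, *active_voice_volumes])
    return (top - max(voice_volume, threshold)) * (1 - 1 / ratio)


def additive_sidechain_ducking(voice_volume, active_voice_volumes, threshold, ratio):
    """Naive alternative: every louder sound ducks on its own and the results stack."""
    own = max(voice_volume, threshold)
    return sum(
        (max(v, threshold) - own) * (1 - 1 / ratio)
        for v in active_voice_volumes
        if v > own
    )


loud_sounds = [40, 30]  # player lightning spell and enemy explosion
print(hdr_ducking(0, loud_sounds, threshold=0, ratio=2))                 # 20.0
print(additive_sidechain_ducking(0, loud_sounds, threshold=0, ratio=2))  # 35.0
```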

Keep in mind that as long as a sound sits at or below the HDR threshold, sounds above the threshold compute the difference as if that sound were exactly at the HDR threshold. So if the player's lightning spell, with a Voice Volume of 40, played at the same time as a sound with a Voice Volume of -15, the latter would be attenuated by 20 dB, with the relative Voice Volume difference treated as 40 rather than 55. For this reason, and because of the Volume Threshold and HDR window behavior described above, it's advisable to mix with Voice Volume for any sound meant to stay below the HDR threshold. This ensures the sound is always attenuated as intended, while still benefiting from the Voice Volume based voice optimization system.
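
A compact way to express this: the whole HDR window, and every voice with it, is shifted down by (top - threshold) × (1 - 1/ratio), where the top is the loudest active Voice Volume, or the threshold if nothing exceeds it. This model reproduces the article's numbers, but it is my simplification, not Audiokinetic's code, and the names are hypothetical. It shows that below the threshold the amount of attenuation doesn't depend on Voice Volume at all, so Voice Volume behaves like an ordinary fader there.

```python
def heard_level(voice_volume, active_voice_volumes, threshold, ratio):
    """Level (dB) of a sound once the HDR window has moved to the loudest active sound."""
    top = max([threshold, *active_voice_volumes])
    window_shift = (top - threshold) * (1 - 1 / ratio)
    return voice_volume - window_shift


def ducking_received(voice_volume, other_voice_volumes, threshold, ratio):
    """How much quieter the sound is with the other sounds playing than on its own."""
    alone = heard_level(voice_volume, [voice_volume], threshold, ratio)
    together = heard_level(voice_volume, [voice_volume, *other_voice_volumes], threshold, ratio)
    return alone - together


print(heard_level(-15, [40], threshold=0, ratio=2))       # -35.0
print(ducking_received(-15, [40], threshold=0, ratio=2))  # 20.0, not 27.5
print(ducking_received(-5, [40], threshold=0, ratio=2))   # 20.0 as well
```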

Keeping the pipeline clean

Because Wwise uses a sound's final Voice Volume to determine its behavior in the HDR system, you need to be aware of every cumulative change that may be applied to the sound as it travels through the audio pipeline. This includes parent Actor-Mixers, RTPCs, States, Randomizers, and any other mechanism that modifies Voice Volume. Make sure there are no unintended Voice Volume changes that could affect the sound's HDR behavior. The Voice Inspector is a great way to debug what is happening to a sound and to see everything that may be affecting its Voice Volume. It can help you understand all the ways Voice Volume is being modified in your project, which you may want to clean up, or even use to your advantage!

Also note that relative Bus Volume and Output Bus Volume changes affect a sound's final value as far as HDR is concerned, if those changes happen before the signal reaches the HDR bus. For example, if your sound has a Voice Volume of 20 but its Output Bus (or a bus downstream of it) has a Bus Volume of -4, the sound's final value will be 16. This also applies to auxiliary buses! So if you send your HDR sound to an auxiliary bus that has a positive or negative Bus Volume, on that bus or on a downstream bus, the sound's auxiliary send may be adjusted differently depending on that value, the HDR ratio, and your HDR threshold.
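
One way to keep track of all this is to think of a sound's HDR value as a sum of offsets. The data class below is a bookkeeping sketch, not a Wwise API; the field names are hypothetical, and it assumes every offset is a plain dB value that simply adds up before the signal reaches the HDR bus (a Randomizer, for instance, contributes whatever value was rolled for that instance).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HdrVoicePath:
    """Hypothetical summary of what feeds a voice's HDR value (all values in dB)."""
    voice_volume: float
    # Parent Actor-Mixers, RTPCs, States, Randomizer draws, ...
    inherited_offsets: List[float] = field(default_factory=list)
    # Output Bus and any other bus the signal crosses before reaching the HDR bus.
    bus_volumes_before_hdr: List[float] = field(default_factory=list)
    # Make-Up Gain changes the heard level but is ignored by HDR.
    makeup_gain: float = 0.0

    def hdr_value(self) -> float:
        return self.voice_volume + sum(self.inherited_offsets) + sum(self.bus_volumes_before_hdr)


print(HdrVoicePath(20, bus_volumes_before_hdr=[-4]).hdr_value())  # 16

# An unnoticed +6 dB RTPC can lift a sound meant to stay under a 0 dB threshold.
footsteps = HdrVoicePath(-2, inherited_offsets=[6, -1])
print(footsteps.hdr_value() > 0)  # True
```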

For all these reasons, it's best to experiment and decide whether or not to use HDR as early as possible, so that you keep better control over Voice Volume gain adjustments and set a precedent for the rest of the team. If you adopt HDR in the middle of production, you may face a long battle to make your sound routing clean and consistent given how your pipeline affects Voice Volume. Taking advantage of the Query feature and writing your own custom scripts can be a great help for moving unwanted Voice Volume offsets over to Make-Up Gain, so that you can start from a clean slate and reset your Voice Volumes where you want them.

If you want to preserve the integrity of your HDR system while changing the output volume of these sounds, Make-Up Gain can be very helpful! For example, if you have an RTPC tied to the SFX volume slider in your audio settings, you can drive Make-Up Gain instead of Voice Volume so that relative mix relationships stay intact even as the overall amplitude goes down. You can also use the Wwise Gain plug-in for a similar effect, but keep in mind that if you place it in the Master-Mixer Hierarchy, the effect only processes the sound's Output Bus path and has no effect on other voice routing, such as Game-Defined Auxiliary Sends. We'll come back to the voice pipeline in more detail in the next section. Also keep in mind that Wwise's Loudness Normalization feature has no effect on HDR.

Where HDR is concerned, if you decide to make additional Voice Volume changes for whatever reason, prefer subtractive changes over additive ones. It's one thing to take a sound above the HDR threshold and dynamically lower its value because you deliberately want to change its place in the relative mix hierarchy. An additive Voice Volume change, however, can push a sound above the HDR threshold, and if that sound was never meant to play above it, this can cause unintended side effects.

As for your pipeline's gain structure, you may choose to modify Voice Volume deliberately, to dynamically change how a sound, or even a whole category of sounds, behaves in the mix based on certain variables. Enemy "threat" is one example. Imagine you have an enemy sound with a given Voice Volume, but you can fight several of those enemies at once: not every instance of that sound needs the same importance in the mix. By tying various gameplay variables to RTPCs, you can modify Voice Volume and Make-Up Gain to prioritize the sounds that need it and reduce the "sonic importance" of those that don't.

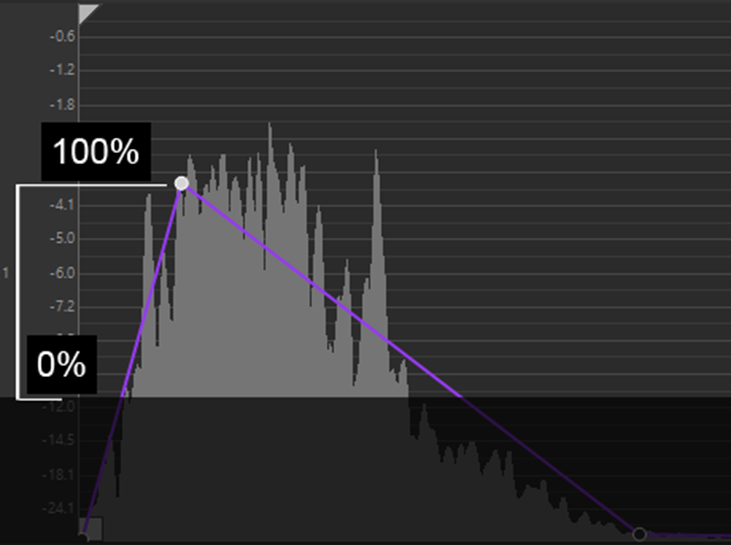

In this image, you can see that the "enemy threat" level lowers Voice Volume as the enemy becomes less threatening, while raising Make-Up Gain. Given the HDR ratio, this ensures the sound doesn't become audibly quieter in the mix, but it causes less attenuation on other sounds and is therefore attenuated more often in return.
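
The curve in the image isn't given numerically, so the sketch below assumes hypothetical linear RTPC curves: a 10 dB Voice Volume drop at zero threat, and a Make-Up Gain boost of that drop divided by the ratio. It also assumes the enemy sound stays above the HDR threshold over the whole curve, where each dB of Voice Volume removed only costs 1/ratio dB of heard level.

```python
def hdr_output_level(voice_volume, threshold, ratio):
    if voice_volume <= threshold:
        return voice_volume
    return threshold + (voice_volume - threshold) / ratio


def threat_offsets(threat, max_voice_volume_drop=10.0, ratio=2.0):
    """Hypothetical linear RTPC curves: threat in [0, 1] -> (Voice Volume offset, Make-Up Gain offset)."""
    threat = min(max(threat, 0.0), 1.0)
    voice_volume_offset = -max_voice_volume_drop * (1.0 - threat)
    # Give back only what the HDR ratio actually took off the heard level.
    makeup_gain_offset = -voice_volume_offset / ratio
    return voice_volume_offset, makeup_gain_offset


def enemy_mix(threat, base_voice_volume=20.0, threshold=0.0, ratio=2.0):
    """Return (heard level alone, attenuation applied to a sound sitting at the threshold)."""
    vv_offset, makeup = threat_offsets(threat, ratio=ratio)
    voice_volume = base_voice_volume + vv_offset
    heard = hdr_output_level(voice_volume, threshold, ratio) + makeup
    ducking = max(voice_volume - threshold, 0.0) * (1 - 1 / ratio)
    return heard, ducking


for threat in (1.0, 0.5, 0.0):
    print(threat, enemy_mix(threat))
# 1.0 (10.0, 10.0) / 0.5 (10.0, 7.5) / 0.0 (10.0, 5.0)
```

The heard level stays at 10 dB across the curve while the attenuation the enemy imposes on others shrinks from 10 dB to 5 dB.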

HDR and auxiliary sends

It's important to keep in mind how HDR can affect your auxiliary sends. Remember that HDR settings are defined at the bus level in the Master-Mixer Hierarchy. This means the HDR threshold and HDR ratio only apply to the signal routed to the bus on which HDR is enabled. Game-Defined and User-Defined Auxiliary Sends set up in the Actor-Mixer Hierarchy are routed to buses and audio paths that exist outside your main HDR bus. Because of this, you can end up with an unwanted effect where the gain of a sound's dry signal is reduced by the HDR ratio while its wet send is not. For example, if a sound with a Voice Volume of 40 is routed to an HDR-enabled bus with a 2:1 HDR ratio, its actual output gain will be +20 dB. But if that same sound is sent to an auxiliary bus without HDR enabled on it or on a parent bus, the full Voice Volume of 40 is sent into that bus, which means the sound's wet signal will be 20 dB louder than its dry signal.
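
The dry/wet mismatch can be sketched with the same simplified single-sound model. The function names are hypothetical, and the send level itself is left out so that only the HDR effect shows.

```python
def hdr_output_level(voice_volume, threshold, ratio):
    if voice_volume <= threshold:
        return voice_volume
    return threshold + (voice_volume - threshold) / ratio


def dry_and_wet(voice_volume, threshold, ratio, aux_path_has_hdr):
    """Levels (dB) of the dry path and of an aux send, ignoring the send level itself."""
    dry = hdr_output_level(voice_volume, threshold, ratio)
    # Without HDR on the aux bus or one of its parents, the send carries the full Voice Volume.
    wet = dry if aux_path_has_hdr else voice_volume
    return dry, wet


dry, wet = dry_and_wet(40, threshold=0, ratio=2, aux_path_has_hdr=False)
print(dry, wet, wet - dry)  # 20.0 40 20.0
print(dry_and_wet(40, threshold=0, ratio=2, aux_path_has_hdr=True))  # (20.0, 20.0)
```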

To fix this, overriding the auxiliary send values to compensate, the way you would with Make-Up Gain, isn't practical. Instead, you can simply enable HDR with the same threshold and ratio settings on the auxiliary bus's parent bus: any sound sent to a child of that parent bus will then obey the HDR rules and have its send gain reduced exactly the same way as its dry signal. You can also place your auxiliary buses under the same parent bus as your other HDR buses, so that they inherit the same HDR settings from that shared parent. Note that if you send non-HDR content to these HDR auxiliary buses, Wwise recognizes that their dry signal doesn't have HDR enabled and won't apply HDR behavior to those sounds' auxiliary routing.

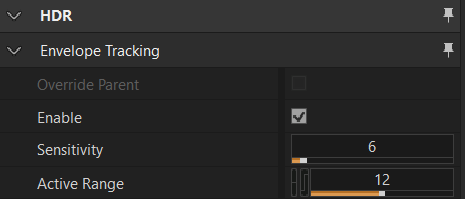

Sensitivity and Active Range

Once a sound is above the HDR threshold, how it affects other sounds during its playback is dictated by its HDR Envelope. While relative Voice Volume dictates how much another sound is attenuated, the HDR envelope determines how that attenuation unfolds over time. If Envelope Tracking isn't enabled for a sound whose Voice Volume is above the HDR threshold, the attenuation of other sounds is applied at full strength for the sound's entire duration.

To make the attenuation better match the sound's properties, enable Envelope Tracking so you can customize this behavior. To do so, navigate to the sound or Actor-Mixer where you want to set the property, go to the HDR section of the Property Editor, and tick the Enable checkbox.

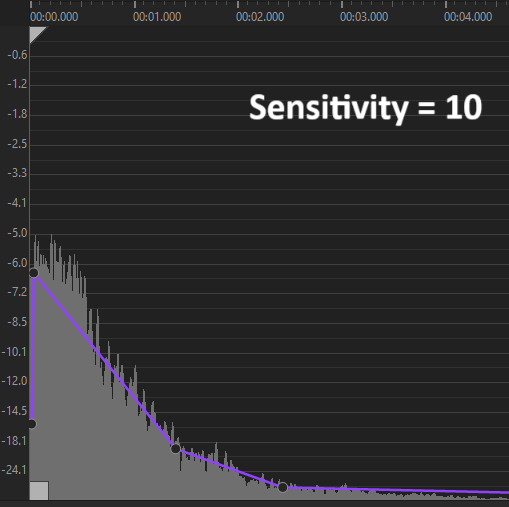

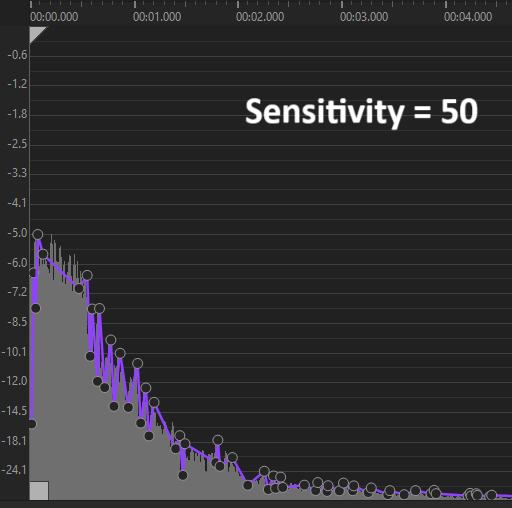

Sensitivity

The Sensitivity parameter determines how many points are drawn automatically on the sound's HDR envelope. Lower values are easier to edit and control but offer less granular detail, while higher values offer more detail but are harder to edit afterwards and risk capturing parts of the sound you don't want in the envelope. Find a default sensitivity value that gets as close as possible to the envelope you want to hear, then edit it manually from there. I've found that sensitivity values between 4 and 12 tend to give the best results, but what works best for your project may vary.

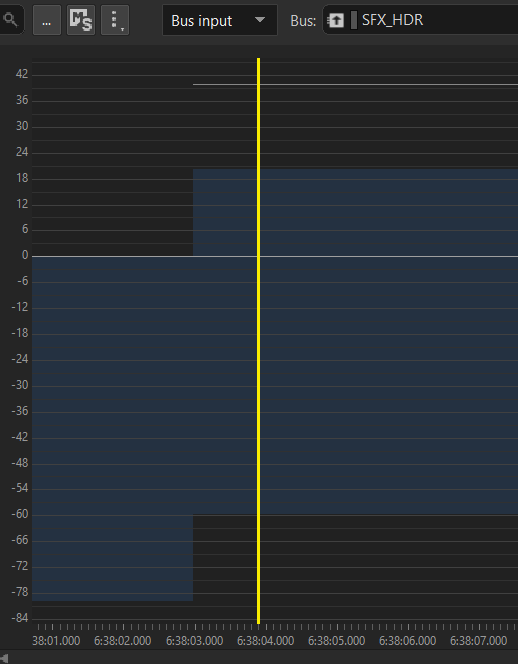

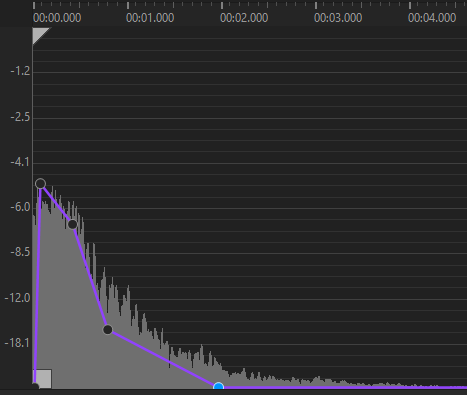

To edit a sound's HDR envelope as representatively as possible, switch the Source Editor view from Peak to RMS, since the system generates the envelope points automatically from the sound's RMS level. The RMS view also makes it easier to see the sound's overall energy over time if you decide to edit the envelope so that it captures more precisely what you need.
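
For intuition on why the RMS view is the better reference, here is a sketch of a windowed peak meter next to a windowed RMS meter. The window length is an arbitrary choice: the article doesn't say which window or smoothing Wwise uses to draw its envelope points, so treat this as an illustration of the idea only.

```python
import math


def windowed_levels_db(samples, window, floor_db=-96.0):
    """Return (peak_db, rms_db) for each non-overlapping window of a mono signal in [-1, 1]."""
    def to_db(x):
        return 20 * math.log10(x) if x > 0 else floor_db

    levels = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        peak = max(abs(s) for s in chunk)
        rms = math.sqrt(sum(s * s for s in chunk) / len(chunk))
        levels.append((to_db(peak), to_db(rms)))
    return levels


# A single-sample click reads 0 dB on the peak meter but about -6 dB in RMS,
# while a sustained full-scale square wave reads 0 dB on both.
click = [1.0, 0.0, 0.0, 0.0]
square = [1.0, -1.0, 1.0, -1.0]
print(windowed_levels_db(click + square, window=4))
```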

Active Range

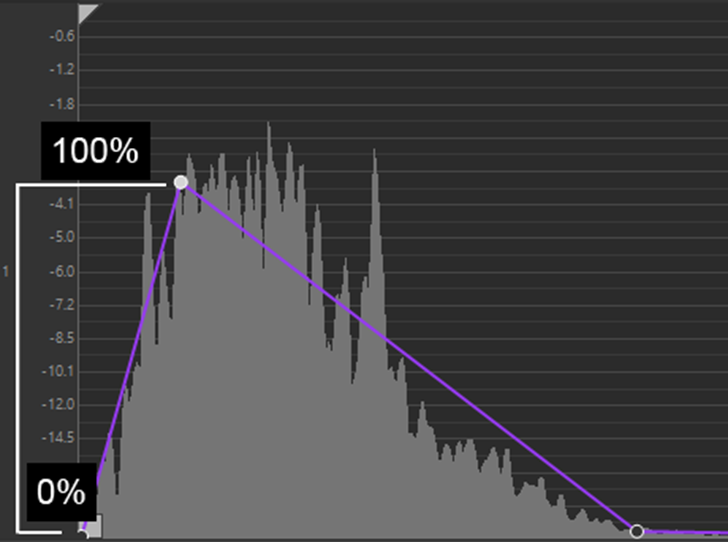

The Active Range property of an HDR envelope is a threshold that determines how much of the envelope is used. Picture cutting off the top of a mountain: the active range determines how much gets cut, starting from the summit.

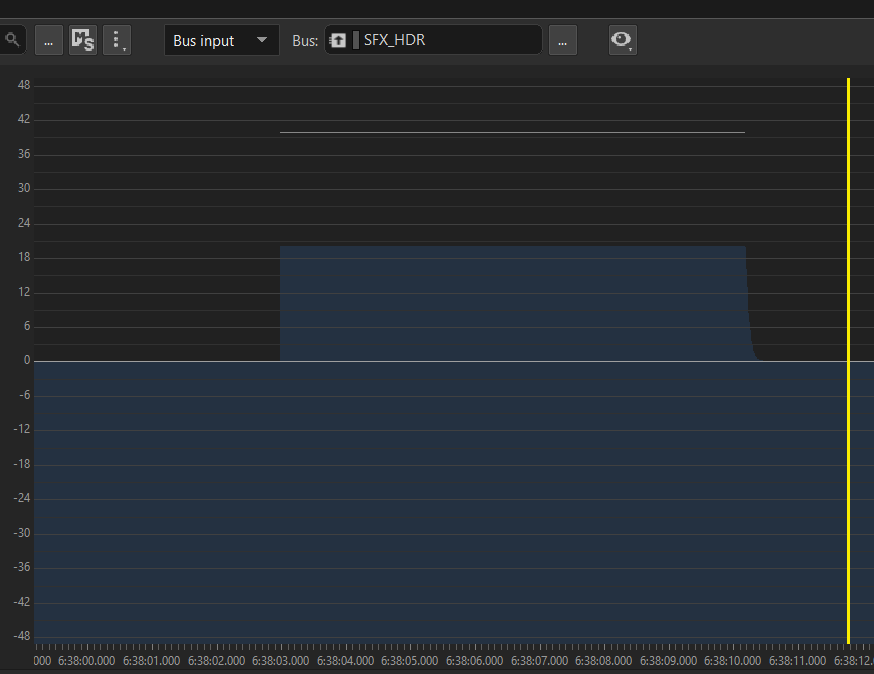

Let's start with this auto-generated HDR envelope. You can see that its peak sits at roughly -3.5 dB. The envelope's position is relative, not absolute: it doesn't dictate how much attenuation the sound causes, which is defined solely by the sound's final Voice Volume. Instead, think of the span between the envelope's lowest and highest points as representing 0 to 100% of the possible attenuation. So if the Voice Volume is set to 20, the bottom of the envelope corresponds to 0 dB of attenuation and the top to 10 dB (with a 2:1 HDR ratio).
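
Read that way, the envelope is only a shape that gets rescaled to the sound's full attenuation amount. The sketch below assumes a linear rescaling in dB between the envelope's lowest and highest points; the article only gives the two end points (0% and 100%), so the in-between mapping is my assumption, and the names are hypothetical.

```python
def envelope_to_ducking(envelope_db, voice_volume, threshold, ratio):
    """Rescale envelope points: lowest point -> 0 dB of attenuation, highest -> the full amount."""
    full = max(voice_volume - threshold, 0.0) * (1 - 1 / ratio)
    lowest, highest = min(envelope_db), max(envelope_db)
    span = highest - lowest
    if span == 0:
        # A flat envelope applies the full amount for its whole length.
        return [full for _ in envelope_db]
    return [full * (e - lowest) / span for e in envelope_db]


points = [-35.0, -3.5, -12.0, -35.0]  # hypothetical envelope points (dB)
print(envelope_to_ducking(points, voice_volume=20, threshold=0, ratio=2))
# The -3.5 dB peak maps to 10.0 dB of attenuation and the -35 dB ends to 0.0.
```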

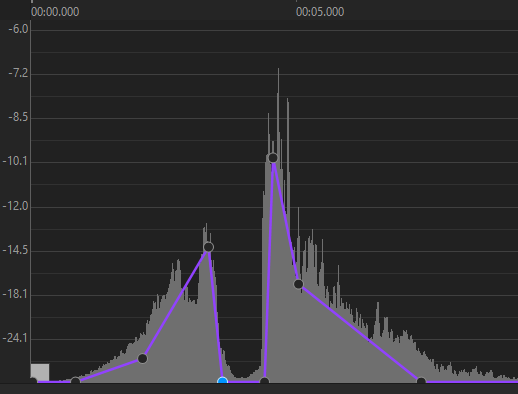

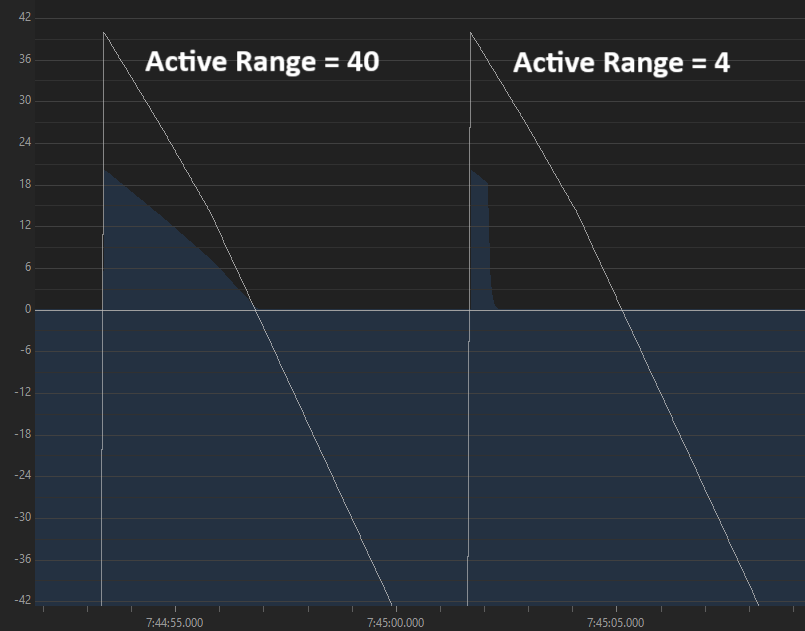

When adjusting a sound's active range, it's best to choose a value that lets you use the whole envelope. For example, an active range of 30 or more uses essentially the entire envelope: starting from the envelope's peak (-3.5) and subtracting 30 gives -33.5, and since the Source Editor's RMS view only goes down to about -35 dB, we end up using virtually all of the envelope that was drawn. If you choose a lower active range, the HDR system uses a much smaller portion of the envelope to produce the attenuation. You can see this in the example above: the white line represents the sound's Voice Volume and its generated envelope, while the blue shape represents the HDR window as dictated by the HDR threshold and HDR ratio. The sound with an active range of 40 uses the full duration of the generated HDR envelope, while the same sound with an active range of 4 uses much less of it. This can be useful if your auto-generated envelopes tend to capture a lot of tail or release that you don't necessarily want attenuating other sounds.

Another useful tool in the Wwise toolbox is giving a sound an active range of 0. This is very handy when you decide a sound is important enough to have a Voice Volume above the threshold, but you don't want it to actively attenuate other sounds. It acts as a kind of "HDR bypass". It's particularly useful for priority sounds such as ambient loops that matter for the narrative or for certain gameplay moments, or for sounds like enemy footsteps, which you don't necessarily want attenuating other sounds but which benefit from a Voice Volume that keeps them from being attenuated as much as other sounds. Keep in mind that simply disabling envelope tracking doesn't stop a sound from attenuating others: it just uses the sound's full duration for the attenuation instead. With the active range set to 0, no part of the envelope is used. In that case the envelope's shape doesn't really matter, but it can still be wise to create a meaningful one in case you later want the sound to have an active range again.
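
The "cut the top off the mountain" behavior from the two paragraphs above can be sketched as a filter over sampled envelope points. This is a simplified model with a hypothetical explosion envelope; a real HDR envelope is a curve drawn in the Source Editor, not a list of values.

```python
def active_portion(envelope_db, active_range):
    """Indices of envelope points kept once the envelope is cut `active_range` dB below its peak."""
    if active_range <= 0:
        # Active Range 0: no part of the envelope is used, so the sound attenuates nothing.
        return []
    floor = max(envelope_db) - active_range
    return [i for i, level in enumerate(envelope_db) if level > floor]


# Hypothetical explosion envelope sampled at regular intervals (RMS dB values).
explosion = [-35.0, -10.0, -3.5, -6.0, -12.0, -20.0, -28.0, -34.0]
print(active_portion(explosion, 40))  # [0, 1, 2, 3, 4, 5, 6, 7]: the whole envelope
print(active_portion(explosion, 30))  # [1, 2, 3, 4, 5, 6]: floor at -33.5 dB
print(active_portion(explosion, 4))   # [2, 3]: only the body of the blast
print(active_portion(explosion, 0))   # []: the "HDR bypass"
```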

Creating an envelope

Wwise does a very convincing job of auto-generating HDR envelopes, but you may want to go further and draw them by hand to have full control over the mix. To build an attenuation system that is as transparent as possible, being able to judge subjectively which parts of a sound matter most, and how they change over time, can be an indispensable part of your toolkit.

In the Source Editor, try to start from an HDR envelope that matches the sound's overall energy as closely as possible. As mentioned above, a sensitivity value between 4 and 12 can be a good starting point. Here are a few tips for adjusting your HDR envelopes by hand:

Example 1

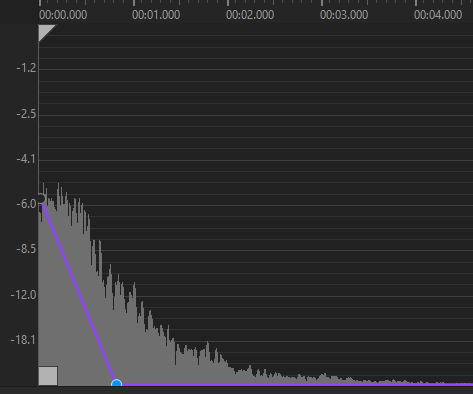

Taking the same explosion sound, the envelope above starts immediately, with no attack time, so the attenuation kicks in instantly. As a result, the gain reduction applied to other sounds may become audible before the explosion's energy takes over. Even if it only lasts a few milliseconds, that can be enough to make the attenuation noticeable. This envelope also ends very early, so the attenuation of other sounds may be a little too short to convey the weight of the explosion. Everything depends on context, but it won't be easy to get more attenuation out of this sound without editing its envelope. The opposite case is easier: you can always reduce a sound's active range to use a smaller portion of a longer envelope.

Example 2

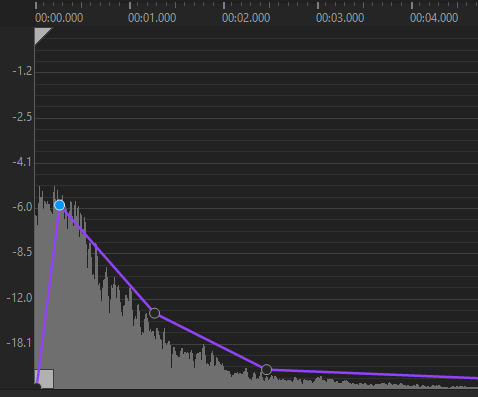

In this example, the envelope's attack is too slow, so the mix won't leave enough room for the explosion's transient. The attenuation itself probably won't be noticeable, but the transient risks being masked by other sounds. For the rest of the sound's duration, the amount of attenuation may be too large relative to the sound's RMS level. I always find it best to "hide" the attenuation behind the sound's RMS volume so that the gain reduction is masked. Finally, the explosion's tail keeps attenuating other sounds long after the important part of the sound is over, which can keep them turned down for longer than intended.

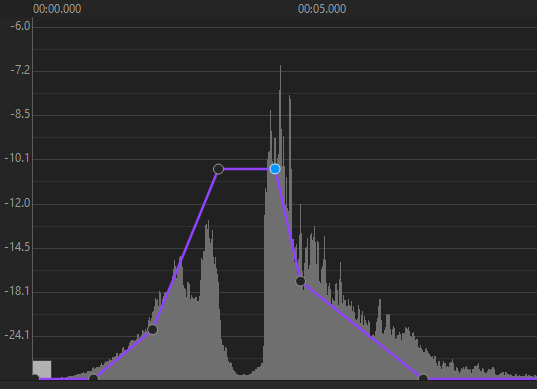

Example 3

For this explosion sound, here is an ideal envelope. As you can see, its attack isn't immediate. Even a slight attack on the envelope can make the attenuation more transparent, which is especially valuable if you're attenuating other sounds by 20 dB or more. Although it's hard to see in a simple screenshot, most of the sound's full-frequency content sits near the start, which is exactly where most of the envelope's shape is concentrated. Attenuation is generally more transparent when there is plenty of frequency content to mask the gain reduction, so concentrating the envelope where the energy is most prominent helps hide the gain reduction applied to other sounds. Toward the end, even though the tail is still fairly audible, ending the HDR envelope deliberately early lets the other sounds come back up under cover of the more important sound, so you never notice that they were attenuated or that they are coming back.

Changing perspective

HDR can be a very transparent system, but sometimes you may want to use it in a way that is deliberately not transparent. Take this charge-and-attack sound, for example. Normally, you would try to follow the shape of its RMS volume as closely as possible.

However, you can also use the envelope to create an obvious attenuation moment for dramatic effect. By extending the envelope's peak into a region where there is no signal to hide it, you create a gap just before the attack lands. That makes for a more dynamic moment than letting the attenuation stop right before the attack, which would lessen the sound's impact.

As the saying goes, you have to know the rules to break them. As long as the result sounds good, you can use HDR for more expressive mixing decisions instead of focusing only on transparency.

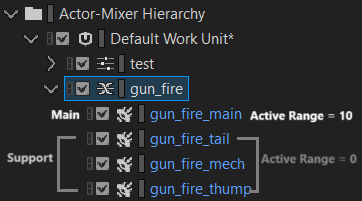

Layered approach

There are many scenarios in which a single sound event is made up of several sound layers. This might be a single parent Blend Container with several child sounds, or an Event that triggers several sounds across the Actor-Mixer Hierarchy. Either way, things can get complicated if every layer has its own HDR envelope, because the combined result can be messy and hard to control. In that case, it is better to designate one layer as the "primary" layer, which receives an Active Range value. The other layers, although they are meant to play in parallel, do not need to duck anything, so you can designate them as "supporting" layers and set their Active Range to 0.

Different contexts call for different approaches, so feel free to experiment with both what makes the most sense in your workflow and what sounds best! For example, you might want a specific layer (such as "gun_fire_tail") to be intentionally ducked; giving it a Voice Volume that is relatively lower than that of the other parallel layers will get you a better result.
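If you track your layers in a spreadsheet or export them from the project, a check like the following can flag events that do not have exactly one primary layer. This is a minimal Python sketch: the `Layer` record, its fields, and the event and layer names are hypothetical, and reading the values out of Wwise is left out.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    voice_volume_db: float
    active_range_db: float  # 0 means this layer does not duck anything

def check_single_primary(event_name, layers):
    """Return warnings if an event does not have exactly one primary (ducking) layer."""
    primaries = [layer.name for layer in layers if layer.active_range_db > 0]
    if len(primaries) == 1:
        return []
    if not primaries:
        return [f"{event_name}: no primary layer (every Active Range is 0)"]
    return [f"{event_name}: several primary layers {primaries}; set supporting layers to 0"]

if __name__ == "__main__":
    # Hypothetical layered gunshot: the tail has a lower Voice Volume on purpose,
    # but it was also given an Active Range by mistake.
    gun_fire = [
        Layer("gun_fire_body", voice_volume_db=12.0, active_range_db=15.0),
        Layer("gun_fire_mech", voice_volume_db=12.0, active_range_db=0.0),
        Layer("gun_fire_tail", voice_volume_db=6.0, active_range_db=10.0),
    ]
    for warning in check_single_primary("Play_gun_fire", gun_fire):
        print(warning)
```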

The pink noise test

To fine-tune your attenuation setup and your HDR envelopes, it helps to test how a sound ducks other sounds in context. To test the worst-case scenario, set up a pink noise loop whose Voice Volume is at or below your HDR threshold. By playing it in the Soundcaster, or even implementing it in your game, you can hear how other sounds get ducked when sounds above the HDR threshold play. This also helps you identify problems with your HDR envelopes and make sure that, even in the least ideal situations, your HDR ducking sounds as intended.
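If you need a pink noise file to import for this test, a minimal sketch like the following can generate one with only the Python standard library. It uses the Voss-McCartney approximation (summing random generators that are refreshed at octave-spaced rates), normalizes the result to a target RMS level and writes a 16-bit mono WAV. The file name, duration and -18 dBFS target are arbitrary assumptions; where the loop sits relative to your HDR threshold is still set with its Voice Volume in Wwise.

```python
import math
import random
import struct
import wave

def pink_noise(num_samples, rows=16, seed=0):
    """Voss-McCartney pink noise: each row is refreshed half as often as the previous one."""
    rng = random.Random(seed)
    values = [rng.uniform(-1.0, 1.0) for _ in range(rows)]
    running_sum = sum(values)
    out = []
    for n in range(num_samples):
        if n > 0:
            # Index of the lowest set bit of n: row 0 on odd n, row 1 every 4 samples, etc.
            row = (n & -n).bit_length() - 1
            if row < rows:
                running_sum -= values[row]
                values[row] = rng.uniform(-1.0, 1.0)
                running_sum += values[row]
        white = rng.uniform(-1.0, 1.0)  # extra white term fills in the top octave
        out.append(running_sum + white)
    return out

def normalize_rms(samples, target_dbfs):
    """Scale samples so their RMS equals target_dbfs (full scale = 1.0)."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    gain = 10 ** (target_dbfs / 20) / rms
    return [x * gain for x in samples]

def write_wav_16bit(path, samples, sample_rate=48000):
    """Write mono float samples as a 16-bit PCM WAV, clamping to [-1, 1]."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(sample_rate)
        clipped = (max(-1.0, min(1.0, x)) for x in samples)
        wav.writeframes(b"".join(struct.pack("<h", int(x * 32767)) for x in clipped))

if __name__ == "__main__":
    sr = 48000
    noise = normalize_rms(pink_noise(10 * sr), target_dbfs=-18.0)  # 10-second file
    write_wav_16bit("pink_noise_loop.wav", noise, sr)
```

The file is not crafted to loop seamlessly, but with broadband noise the seam is rarely noticeable; a short crossfade in your editor removes any doubt.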

You can hear an example of this test in the following excerpt from the talk I gave at Airwiggles' AirCon24.

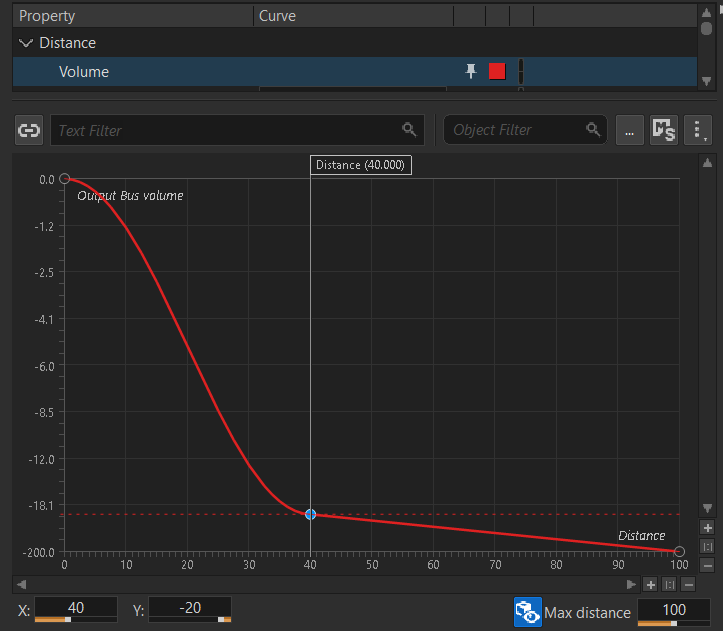

HDR and Distance Attenuation

Because the Wwise HDR system is driven by the Voice Volume parameter, attenuation curves (Distance Attenuation) have a direct effect on HDR. This can be a good thing: an explosion playing far away will naturally sit lower in the HDR hierarchy because its Voice Volume is reduced with distance. You still need to be aware, though, of how your HDR ratio affects gain reduction while the Voice Volume remains above the threshold. When you build volume reduction into an attenuation curve, you will probably draw a curve that lowers the Voice Volume with distance. However, as soon as you assign that attenuation ShareSet to a sound above the HDR threshold, the volume reduction over distance is compressed by the HDR ratio until the sound finally reaches the HDR threshold. You can read more about this behavior in the official documentation.

For example, suppose a sound has a Voice Volume of +20 dB, your HDR threshold is 0 dB, your project uses a 10:1 HDR ratio, and the attenuation curve reaches -20 dB at 40 distance units. Over those first 40 units, the sound's apparent volume drops by only 2 dB (the 20 dB of attenuation divided by the 10:1 ratio). Once the sound passes the 40-unit mark, it continues to attenuate over distance as the curve dictates. This can be another deciding factor in which HDR ratio you choose: lower ratios produce much more predictable behavior with Distance Attenuation curves, so keep that in mind. Sounds routed to busses with higher HDR ratios may require more specific attenuation ShareSets to compensate, so be aware of this potential impact on your workflow.
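To see where the 2 dB comes from, here is a simplified static model in Python. It assumes the HDR system behaves like a compressor on the loudest voice: with threshold T and ratio R, a voice at Voice Volume V above T is heard at T + (V - T) / R, and the remaining (V - T)(1 - 1/R) is applied as gain reduction to the other voices on the HDR bus. It ignores envelopes, the HDR release time and interactions between several loud voices, and the linear 0.5 dB-per-unit attenuation curve is an assumption chosen to match the example above.

```python
def hdr_output_db(voice_volume_db, threshold_db=0.0, ratio=10.0):
    """Apparent level of the loudest voice under a simplified static HDR model."""
    if voice_volume_db <= threshold_db:
        return voice_volume_db
    return threshold_db + (voice_volume_db - threshold_db) / ratio

def hdr_ducking_db(loudest_voice_volume_db, threshold_db=0.0, ratio=10.0):
    """Gain reduction (positive dB) applied to the other voices on the HDR bus."""
    over = max(0.0, loudest_voice_volume_db - threshold_db)
    return over * (1.0 - 1.0 / ratio)

def linear_attenuation_db(distance, slope_db_per_unit=0.5):
    """Hypothetical attenuation curve: -0.5 dB per distance unit (-20 dB at 40 units)."""
    return -slope_db_per_unit * distance

if __name__ == "__main__":
    voice_volume = 20.0
    for ratio in (2.0, 10.0):
        print(f"ratio {ratio:g}:1")
        for distance in (0, 20, 40, 60):
            v = voice_volume + linear_attenuation_db(distance)
            print(f"  {distance:>2} units: heard at {hdr_output_db(v, 0.0, ratio):+.1f} dB, "
                  f"others ducked by {hdr_ducking_db(v, 0.0, ratio):.1f} dB")
```

Under this model, the first 40 units at 10:1 only move the heard level from +2.0 dB to +0.0 dB, while at 2:1 the same distance covers 10 dB. Beyond 40 units both ratios follow the curve exactly, which is the predictability trade-off described above.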

Managing the hierarchy

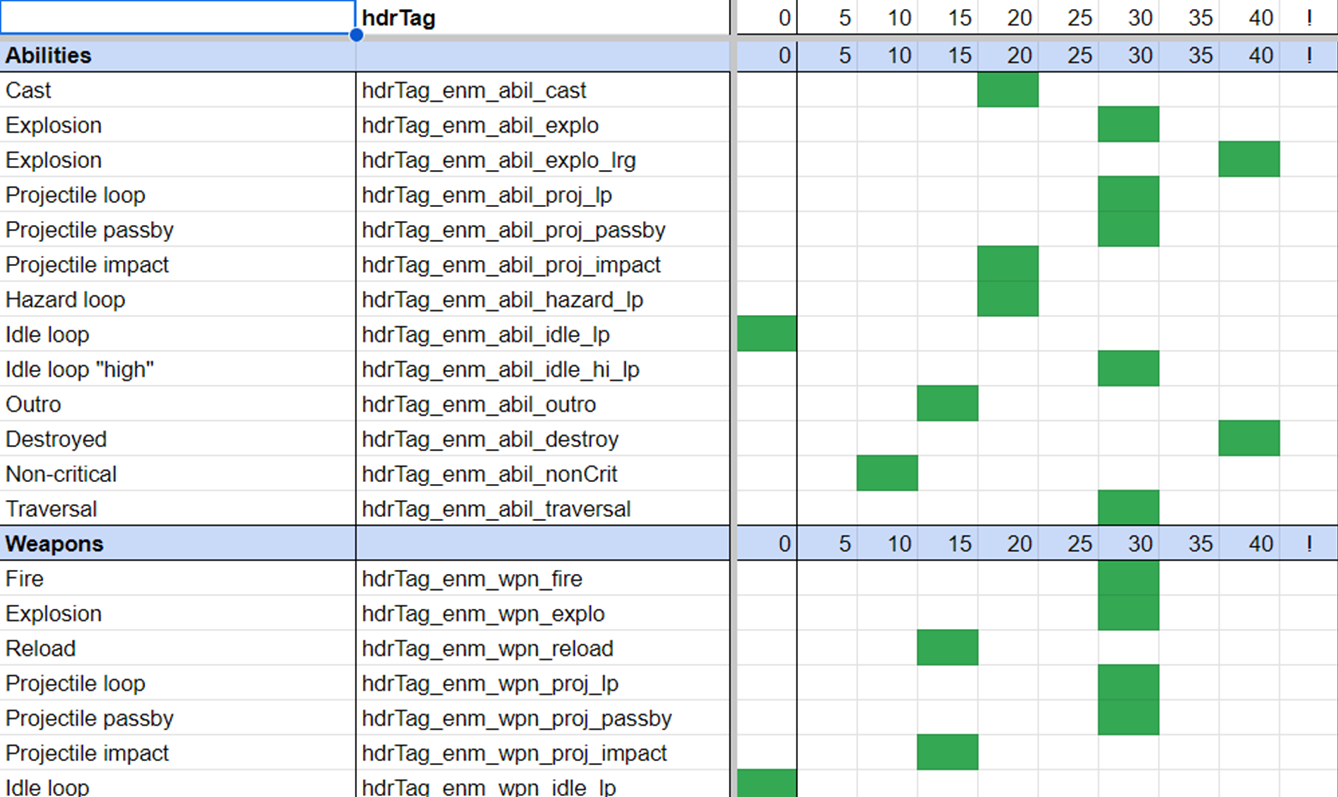

To manage the Voice Volume parameter this way, you need an easy way to track the different categories of sounds and where they sit relative to one another in terms of sonic importance. One of the best ways to do this is to create a table that tracks the Voice Volume values of all your sounds.

To easily work out how the sounds in your game will react to one another, it is best to define a limited number of broad sound categories rather than working sound by sound. Broad categories are much easier to manage, especially if you want to change their Voice Volume ("sonic importance") later on. It may take a while to determine which categories the table needs to represent, and you will constantly find cases that call for new ones. Even so, simply visualizing this hierarchy is extremely useful: it is easy to get lost in the weeds when mixing a game, so having an overview of the mix relationships between sounds is invaluable.
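The table can live in any spreadsheet, but as a sketch of the idea, here it is as plain Python data. It sorts categories by Voice Volume and, using a simplified static model (a voice above the threshold ducks everything else by (V - T)(1 - 1/R)), estimates how much each category ducks the rest when it is the loudest thing playing. The category names, values, 0 dB threshold and 4:1 ratio are hypothetical.

```python
HDR_THRESHOLD_DB = 0.0  # hypothetical project threshold
HDR_RATIO = 4.0         # hypothetical project ratio

# Hypothetical HDR categories and their Voice Volume values (dB).
CATEGORIES = {
    "player_spell_cast": 18.0,
    "explosion_large": 24.0,
    "enemy_weapon": 12.0,
    "footsteps": 0.0,
    "ambience_bed": -6.0,
}

def ducking_db(voice_volume_db, threshold_db=HDR_THRESHOLD_DB, ratio=HDR_RATIO):
    """Gain reduction applied to other voices when this category is the loudest one."""
    over = max(0.0, voice_volume_db - threshold_db)
    return over * (1.0 - 1.0 / ratio)

def hierarchy_rows(categories):
    """Return (name, Voice Volume, ducking applied to others), loudest category first."""
    ordered = sorted(categories.items(), key=lambda item: item[1], reverse=True)
    return [(name, vv, ducking_db(vv)) for name, vv in ordered]

if __name__ == "__main__":
    print(f"{'category':<20}{'voice vol':>10}{'ducks others':>16}")
    for name, vv, duck in hierarchy_rows(CATEGORIES):
        print(f"{name:<20}{vv:>+10.1f}{duck:>13.1f} dB")
```

Changing a category's importance later then comes down to editing one value in one row.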

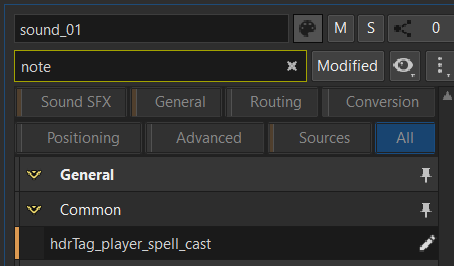

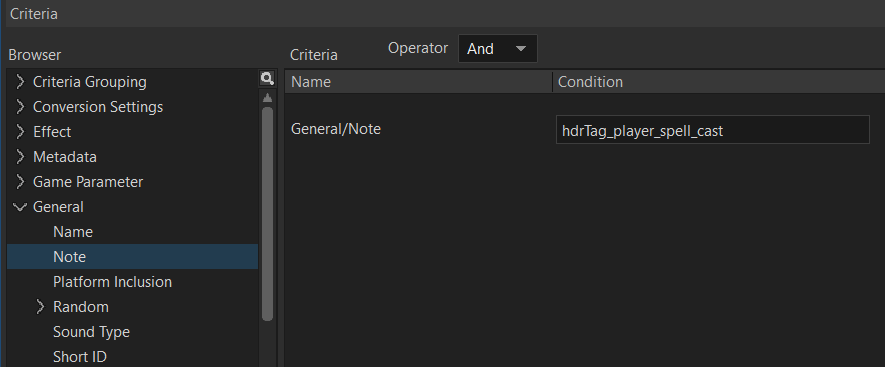

Using Notes for HDR categories

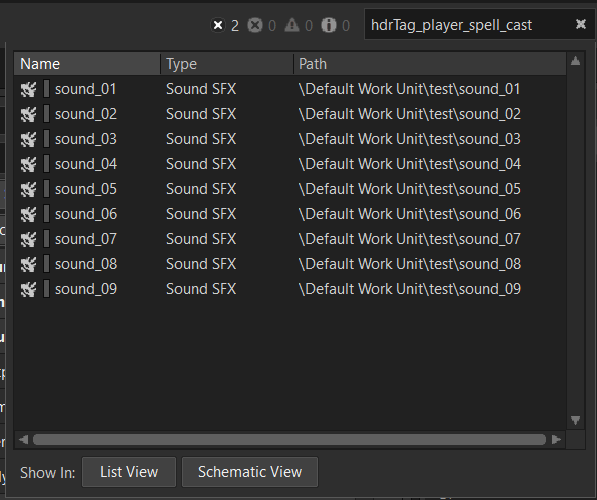

To get the most out of this tool, you need a way to quickly find the sounds that belong to a specific category. One way to do this is to use the Notes field. For example, if you write something like "hdrTag_player_spell_cast" in the Notes field and then search for that exact string in the Wwise search bar, every object containing that note will appear. For this workflow to be effective, write the tag in the Notes field of the object where you intend to set the Voice Volume for the sound. Depending on the case, that might be a parent Actor-Mixer, a Random Container, or an individual sound.

You can also use the Query Editor to search for notes containing "hdrTag".

Once you have a list of objects matching that Notes entry, you can easily select them all and view their Voice Volume and Make-Up Gain values in the List View, in the Query Editor's results window (Query Results), or open them in the Multi-Editor. In the List View, it is best to configure the columns to show only what is relevant to HDR; here, that means displaying only the Voice Volume and Make-Up Gain properties. Below, you can see how to use the List View to set the HDR "sonic importance" of several sounds at once very quickly.

Because of the way projects are naturally organized, many sounds can share the same HDR category without living in the same part of the Actor-Mixer Hierarchy. Being able to manage their shared properties globally in this way can save a lot of time and make the game's mix more consistent. It also makes iteration easier, since a Voice Volume value that seemed right at first may need to change later in production. Having a fast, reliable way to move a sound category within your mix hierarchy will save you plenty of headaches when iterating on the game's mix.
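To keep those shared values consistent, you could export the tagged objects (for example by copying name, notes and property columns out of the List View or Query Results) and run a quick check over them. The Python sketch below groups objects by their hdrTag and flags tags whose members disagree on Voice Volume or Make-Up Gain. The record layout, the tag regular expression and the sample objects are assumptions, and the sketch does not talk to Wwise directly.

```python
import re
from collections import defaultdict

# Assumed convention: a tag is "hdrTag_" followed by letters, digits or underscores.
TAG_PATTERN = re.compile(r"\bhdrTag_\w+")

def group_by_hdr_tag(objects):
    """Map each hdrTag found in an object's notes to the objects carrying it."""
    groups = defaultdict(list)
    for obj in objects:
        for tag in TAG_PATTERN.findall(obj["notes"]):
            groups[tag].append(obj)
    return dict(groups)

def inconsistent_tags(groups, tolerance_db=0.01):
    """Return {tag: sorted distinct (Voice Volume, Make-Up Gain) pairs} for tags whose members disagree."""
    flagged = {}
    for tag, members in groups.items():
        volumes = [m["voice_volume"] for m in members]
        gains = [m["makeup_gain"] for m in members]
        if max(volumes) - min(volumes) > tolerance_db or max(gains) - min(gains) > tolerance_db:
            flagged[tag] = sorted({(m["voice_volume"], m["makeup_gain"]) for m in members})
    return flagged

if __name__ == "__main__":
    # Hypothetical exported objects.
    objects = [
        {"name": "Fireball_Cast", "notes": "hdrTag_player_spell_cast", "voice_volume": 18.0, "makeup_gain": 0.0},
        {"name": "IceShard_Cast", "notes": "hdrTag_player_spell_cast", "voice_volume": 15.0, "makeup_gain": 0.0},
        {"name": "Footstep_Player", "notes": "hdrTag_footsteps", "voice_volume": 0.0, "makeup_gain": 0.0},
    ]
    for tag, values in inconsistent_tags(group_by_hdr_tag(objects)).items():
        print(tag, values)
```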

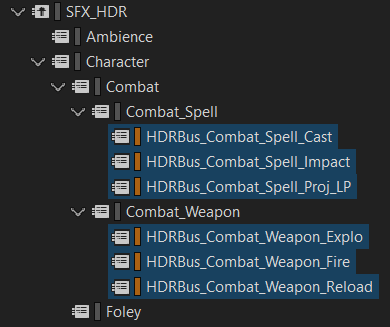

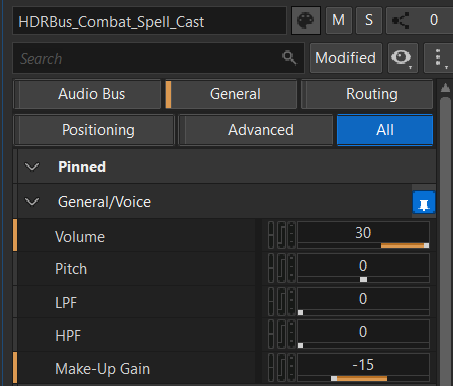

Using busses for HDR categories

An alternative way to manage your HDR categories is to use the Wwise Master-Mixer Hierarchy to handle the Voice Volume and Make-Up Gain settings, rather than the Notes-based method described above. This means that every HDR sound category you create is associated with a bus. Instead of using the List View to manage the Voice Volume and Make-Up Gain of every sound carrying a given HDR tag, you simply route a sound to the audio bus for the appropriate HDR category and set Voice Volume and Make-Up Gain at the bus level.

You can still organize your Master-Mixer Hierarchy in whatever way makes the most sense for your project's mix, then create new child busses that serve as the place where Voice Volume and Make-Up Gain are set. Keep in mind that you still need to track any Voice Volume changes on the audio busses downstream of these HDR sub-busses, but this is easier to manage when everything lives in one place. To set the priority, or sonic importance, of an HDR category, simply adjust the Voice Volume and Make-Up Gain in its bus properties. It is also a good idea to name and/or color these busses so that other sound designers know what they do, because any sound assigned to one of them will have its Voice Volume pushed above the threshold. For that reason, make sure every sound assigned to one of these HDR sub-busses has its HDR envelope enabled. It is also wise to check a sound's final Voice Volume before assigning it to one of these busses, to make sure no unintended cumulative Voice Volume offsets are present that would conflict with the bus values.
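Because Voice Volume offsets add up along a sound's path (the sound itself, its parent containers and Actor-Mixers, then the busses it is routed through), that final check is a simple sum. The Python sketch below assumes you have already collected the per-level offsets for one sound; the hierarchy names and values are hypothetical, as is the rule that only the HDR category bus should carry a non-zero Voice Volume.

```python
from dataclasses import dataclass

@dataclass
class Level:
    name: str
    voice_volume_db: float
    is_hdr_category_bus: bool = False

def final_voice_volume(path):
    """Voice Volume offsets are additive along the path from the sound to the HDR bus."""
    return sum(level.voice_volume_db for level in path)

def unintended_offsets(path):
    """List non-zero Voice Volume offsets that are not on the HDR category bus itself."""
    return [(level.name, level.voice_volume_db)
            for level in path
            if level.voice_volume_db != 0.0 and not level.is_hdr_category_bus]

if __name__ == "__main__":
    # Hypothetical path for one sound, from the sound object up to the HDR parent bus.
    path = [
        Level("Sound: Fireball_Cast_01", 0.0),
        Level("Random Container: Fireball_Cast", -3.0),  # leftover tweak
        Level("Actor-Mixer: Player_Spells", 0.0),
        Level("Bus: HDR_player_spell_cast", 18.0, is_hdr_category_bus=True),
        Level("Bus: SFX_HDR", 0.0),
    ]
    print(f"final Voice Volume: {final_voice_volume(path):+.1f} dB")
    for name, offset in unintended_offsets(path):
        print(f"check {name}: {offset:+.1f} dB adds to the category bus value")
```

Here the sound ends up at +15 dB instead of the +18 dB the category bus implies, and the leftover -3 dB on the Random Container is the offset to investigate.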

Because Voice Volume and Make-Up Gain are now set in a single place rather than in several, it is much faster and easier to iterate on these values after the fact, with less risk of error. It also becomes easier to adjust Voice Volume and Make-Up Gain dynamically at runtime, since you know exactly which absolute Voice Volume values will be modified by RTPCs, States, and so on. With the other methods, if you choose to modify Voice Volume (and therefore HDR "importance") at the bus level, the sounds output to that bus may have different Voice Volume values, which means the mix and HDR behavior changes may be inconsistent across those sounds.

Final touches

When you start implementing Wwise HDR in your project, there are a few points to consider to make the process as smooth as possible.

Because HDR can mimic the way our ears perceive sound, or even be used for something more subjective, it is usually best to focus the HDR system on SFX only. Since HDR uses volume to mix sounds dynamically, it may not be as transparent when mixing other content such as dialogue and music, so it is best to leave those out of the HDR system. In addition, some non-diegetic SFX, such as UI/HUD sounds, as well as cinematics, may not work well with HDR. Try to settle this as early as possible, so you know which content will be included in the system and which will be excluded.

It is also common to use different mixing methods within the same project. While most of a game's SFX might participate in the HDR system, it is not unusual to keep using sidechain systems based on Wwise Meter instances, particularly for interactions between SFX, dialogue, and music. There is no single right way to do things, so feel free to experiment with whatever combination of methods works for your project!

Because working with the Wwise HDR system can get complex, it is best not only to keep things as organized as possible, but also to work and communicate constantly with your team about the system: what to expect from it and how to use it to your advantage. Once everything is set up properly, the game will handle a multitude of mixing situations with ease! Getting there, however, takes a lot of planning and organization, so keep checking the integrity of your HDR setup to make sure it continues to serve your game's audio mix.

Further learning

If you found this article useful, be sure to check out the Helldivers 2 video that dives deeper into dynamic mixing for more on HDR and beyond!
