App Annie's State of Mobile 2021 report identifies PUBG-like, shooter, and MOBA games, all of which emphasize social interaction, as the most popular game categories and the main driver of increased gameplay time. Voice chat in blockbuster games such as PUBG, Call of Duty, and Free Fire has already become a habit for players, and innovative social games such as Roblox and Among Us are widely popular among Gen Z.

Although multiplayer gaming and social interaction have become mainstream, deeply integrating voice into gameplay and recreating a real-world experience for players remains a challenge.

The Wwise + GME solution not only makes it easy to integrate voice chat into a game but also maximizes immersion. This article introduces the solution from three angles: its strengths, its technical implementation, and the voice features it enables.

What is the Wwise + GME solution?

Game Multimedia Engine (GME) is a one-stop voice solution tailored for games. It provides a rich set of features, including multiplayer voice chat, voice messaging, speech-to-text conversion, and speech analysis. You add voice features to your game by calling the GME SDK's APIs.

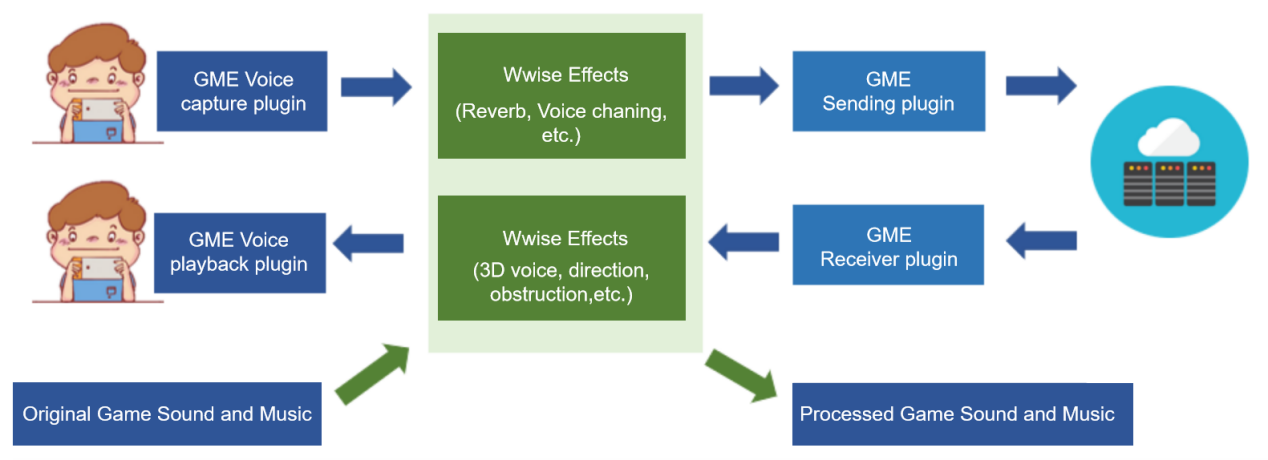

With traditional standalone voice SDKs, voice integration is designed independently of the game's sound effects. In contrast, for games built on the Wwise sound engine, the Wwise + GME solution brings voice features into the sound design process itself. Wwise's powerful audio processing and control capabilities can be applied to voice, which gives sound designers far more room to design voice features while also improving sound quality. Below is the basic flowchart:

As shown above, GME's plugins route both the local voice stream (the player's voice captured by the mic) and the voice streams received over the network (teammates' voices to be played back locally) into the Wwise audio pipeline, where they are abstracted as basic Wwise audio sources. This design is what gives the Wwise + GME solution its unique strengths over traditional standalone voice SDKs.

Unique strengths of the Wwise + GME solution

1. Unified design for voice and game sound effects:

In a Wwise project, GME voice streams connect seamlessly to the Wwise audio pipeline, and voice integration becomes part of the Wwise sound design process, avoiding the audio conflicts that can occur when integrating a separate voice SDK. On the game client, sending and receiving GME voice streams are abstracted as Wwise event triggers. This keeps these operations consistent with the standard Wwise development workflow, which is more straightforward than conventional API-call-based integration.

2. Effective solution to degraded sound quality and sudden volume changes after mic-on:

With traditional standalone voice SDKs, degraded game sound quality after mic-on, sudden volume changes, and dry, unprocessed voice are all common pain points in the industry. In particular, the sound quality of the entire game drops to phone-call level (a mono signal at a low sample rate) as soon as the mic is turned on, which severely harms the game experience. The Wwise + GME solution effectively solves this degradation caused by the device switching volume types. This greatly improves sound quality: players keep the game's original sound effects during smooth voice chat and can still locate other players by sound.

3. Powerful design capabilities for unlimited gameplay and creativity:

The Wwise + GME solution opens up a vast design space for game voice features. Because all voice streams flow into the Wwise audio bus, Wwise's rich sound processing and control capabilities can be applied to voice, and each voice stream can be customized individually. This makes gameplay more immersive and fun and lets players communicate naturally.

Technical implementation

For each player, voice chat involves two audio stream linkages: the upstream linkage, in which the local mic captures the player's voice and the server distributes it to remote teammates, and the downstream linkage, in which all teammates' voices are received from the server, mixed, and played back on the local device.

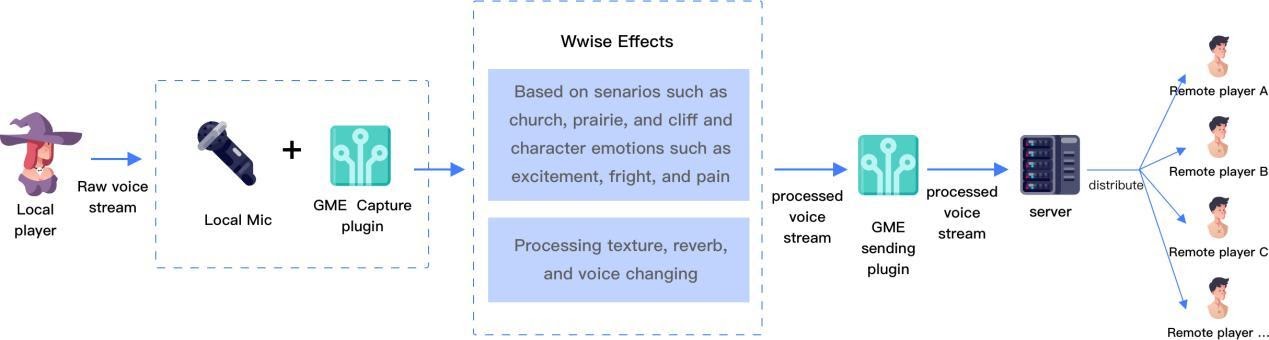

Upstream linkage:

The player's local voice stream is passed to the Wwise engine through the GME capture plugin. Using Wwise's rich sound processing capabilities, the game can process the stream according to the in-game environment and its own needs, applying operations such as texture processing, reverb, and voice changing. For example, if the player's character is in a church, the voice stream with church reverb applied is sent through the GME sending plugin to the server and then on to remote players. Similarly, if the game enables voice changing, remote players receive the stream after it has passed through the real-time voice-changing algorithm.

Upstream linkage processing flowchart

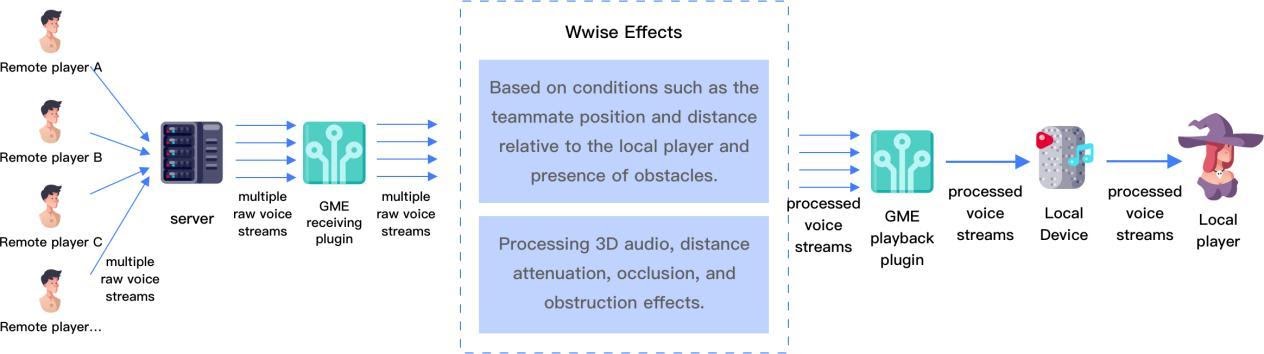
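To make the upstream order of operations concrete (capture, apply effects, send), here is a minimal Python sketch. It is not GME or Wwise code: all names are hypothetical, the feedback comb filter is a crude stand-in for a Wwise reverb, the ring modulator is a simple stand-in for voice changing, and the `send` callback merely represents the GME sending plugin. In a real project these effects are authored in Wwise rather than hand-written.

```python
import math
from typing import Callable, List

Frame = List[float]               # one block of mono PCM samples in [-1.0, 1.0]
Effect = Callable[[Frame], Frame]


def make_comb_reverb(delay_samples: int, feedback: float) -> Effect:
    """Feedback comb filter y[n] = x[n] + feedback * y[n - delay]: a crude 'room' effect."""
    delay_line = [0.0] * delay_samples  # circular buffer that persists across frames
    pos = 0

    def process(frame: Frame) -> Frame:
        nonlocal pos
        out = []
        for x in frame:
            y = x + feedback * delay_line[pos]  # delay_line[pos] holds y[n - delay]
            delay_line[pos] = y
            pos = (pos + 1) % delay_samples
            out.append(y)
        return out

    return process


def make_ring_modulator(carrier_hz: float, sample_rate: int) -> Effect:
    """Multiply the voice by a sine carrier: a classic 'robot voice' effect."""
    phase = 0.0
    step = 2.0 * math.pi * carrier_hz / sample_rate

    def process(frame: Frame) -> Frame:
        nonlocal phase
        out = []
        for x in frame:
            out.append(x * math.sin(phase))
            phase = (phase + step) % (2.0 * math.pi)  # keep the phase bounded
        return out

    return process


def process_upstream(mic_frame: Frame, effects: List[Effect],
                     send: Callable[[Frame], None]) -> None:
    """Run the captured frame through the effect chain in order, then hand it to `send`."""
    frame = mic_frame
    for fx in effects:
        frame = fx(frame)
    send(frame)


# Usage: an impulse through a 'church' comb filter, collected locally instead of sent.
sent: List[Frame] = []
church = make_comb_reverb(delay_samples=3, feedback=0.5)
process_upstream([1.0] + [0.0] * 6, [church], sent.append)
print(sent[0])  # [1.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.25]
```

Because each effect keeps its own state between calls, the same chain can be fed frame after frame, which is how a real-time capture path behaves.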

Downstream linkage:

Unlike the upstream linkage, which carries only one local voice stream, the downstream linkage generally receives multiple voice streams from all teammates, which are passed to the Wwise engine through the GME receiving plugin. The game can then process each received stream according to the speaking player's in-game situation, including their position relative to the local player, their distance, and any obstacles in between. Wwise mixes the processed streams and plays them back on the local device. For example, if teammate A is standing in front of and to the left of the local player, the local player hears A's voice from the front left; if teammate B jumps behind a rock, the local player hears B's voice obstructed and reflected by the rock. Likewise, the voices of approaching players grow louder and those of departing players fade.
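The per-teammate processing and mixing described above can be sketched in plain Python as follows. This is an illustrative model only, not the Wwise or GME API: `RemoteVoice`, `spatialize`, and `mix_downstream` are hypothetical names, and the inverse-distance law, constant-power stereo pan, and fixed obstruction gain are simplifying assumptions. Wwise's own attenuation curves, 3D positioning, and obstruction model are considerably richer.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RemoteVoice:
    samples: List[float]      # mono voice frame received from one teammate
    x: float                  # metres to the listener's right (negative = left)
    y: float                  # metres in front of the listener (negative = behind)
    obstructed: bool = False  # e.g. the teammate is behind a rock


def spatialize(v: RemoteVoice, ref_dist: float = 1.0,
               obstruction_gain: float = 0.5) -> Tuple[List[float], List[float]]:
    dist = math.hypot(v.x, v.y)
    # Inverse-distance attenuation: full volume inside ref_dist, quieter further away.
    gain = 1.0 if dist <= ref_dist else ref_dist / dist
    if v.obstructed:
        gain *= obstruction_gain           # assumed flat cut; real obstruction also filters
    azimuth = math.atan2(v.x, v.y)         # 0 = straight ahead, +pi/2 = hard right
    pan = math.sin(azimuth)                # -1 (left) .. +1 (right); front/back not distinguished
    theta = (pan + 1.0) * math.pi / 4.0    # constant-power pan law
    gl, gr = gain * math.cos(theta), gain * math.sin(theta)
    return [s * gl for s in v.samples], [s * gr for s in v.samples]


def _clip(s: float) -> float:
    return max(-1.0, min(1.0, s))          # avoid overflow after summing several voices


def mix_downstream(voices: List[RemoteVoice],
                   frame_len: int) -> Tuple[List[float], List[float]]:
    left = [0.0] * frame_len
    right = [0.0] * frame_len
    for v in voices:
        l, r = spatialize(v)
        for i in range(min(frame_len, len(l))):
            left[i] += l[i]
            right[i] += r[i]
    return [_clip(s) for s in left], [_clip(s) for s in right]


# Usage: teammate A stands 2 m to the listener's left.
teammate_a = RemoteVoice([1.0], x=-2.0, y=0.0)
left, right = mix_downstream([teammate_a], frame_len=1)
print(left, right)  # [0.5] [0.0]
```

A constant-power pan keeps perceived loudness roughly stable as a voice moves across the stereo field, which a simple linear pan would not.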

Downstream linkage processing flowchart

Whereas traditional standalone voice SDKs offer only a conference-call-like voice experience, the Wwise + GME solution processes voice according to the game scene and takes the voice experience to a whole new level: immersive voice tailored to each game scenario. The demo video below shows some basic uses of the Wwise + GME solution. If you watch it on your phone, please wear headphones, because it uses binaural virtual sound field technology.

In the demo video, the gray robot facing you is a teammate talking to you through GME. 3D audio, voice changing, and reverb are applied to the voice chat. All voices in the video are real-time recordings of the voice stream sent by the remote player, not post-production synthesis.


More voice features

The unique design of the Wwise + GME solution makes it possible to implement voice features as part of the game's sound design. Below are some suggested voice processing features; many more remain for audio designers to create.

Sending ambient sound or accompaniment:

The Wwise + GME solution can send not only the player's voice but also other audio streams to the voice server. The most obvious application is karaoke, where the accompaniment is sent along with the singer's voice. Ambient sound is another: if a player's character is standing in rain or wind, immersion requires that the sound of the rain or wind be mixed into the player's voice when they talk with a teammate. Other uses include sending sound emojis based on the player's progress in the game to make voice chat more fun.
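A minimal sketch of mixing an ambient loop, such as rain, into the outgoing voice before it is sent. The class name, the fixed mix gain, and the hard clipping are assumptions for illustration only; with Wwise + GME this mixing would be set up on the Wwise side rather than written by hand.

```python
from typing import List


class LoopedBed:
    """Hypothetical looping ambience (e.g. rain) mixed into the outgoing voice."""

    def __init__(self, samples: List[float], gain: float) -> None:
        if not samples:
            raise ValueError("ambient loop must not be empty")
        self.samples = samples
        self.gain = gain
        self.pos = 0  # read position persists across frames so the loop is seamless

    def mix_into(self, voice: List[float]) -> List[float]:
        out = []
        for x in voice:
            y = x + self.gain * self.samples[self.pos]
            self.pos = (self.pos + 1) % len(self.samples)
            out.append(max(-1.0, min(1.0, y)))  # hard clip to the valid sample range
        return out


rain = LoopedBed([0.2, -0.2], gain=0.5)
print(rain.mix_into([0.0, 0.0, 0.0]))  # [0.1, -0.1, 0.1]
print(rain.mix_into([0.0]))            # [-0.1]: the loop continues where it left off
```

Keeping the read position in the object, rather than restarting the loop every frame, avoids an audible click at each frame boundary.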

Simulating voice reflection and diffraction:

To create an immersive voice experience, voice rendering must take the actual game scene into account. The texture processing, attenuation, voice changing, reverb, and 3D positioning mentioned above are only basic processing features. To better simulate how voice travels from speaker to listener in a game scene, you can process voice chat with the reflection, diffraction, occlusion, and obstruction models provided by Wwise; this kind of processing is exactly what the ultimate metaverse voice experience calls for.

Processing character personality and status:

To make game voice more fun, specially designed DSP processing can be applied to the voice as a character's personality and status change. For example, if a character is hit by an enemy and loses HP in battle, distortion, stuttering, or vibrato can be added to the voice to convey pain; when the character defeats an enemy or picks up an item, high-pass filtering or speed-up can be applied to convey excitement.
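As a rough illustration, the sketch below maps a character's status to a voice effect: tanh soft-clipping as the "in pain" distortion and a first-order high-pass filter as the "excited" effect. The thresholds, cutoff, drive amount, and function names are all hypothetical choices for illustration, not part of Wwise or GME.

```python
import math
from typing import Callable, List

Frame = List[float]


class OnePoleHighPass:
    """First-order high-pass: y[n] = a * (y[n-1] + x[n] - x[n-1]), a = RC / (RC + dt)."""

    def __init__(self, cutoff_hz: float, sample_rate: int) -> None:
        rc = 1.0 / (2.0 * math.pi * cutoff_hz)
        dt = 1.0 / sample_rate
        self.a = rc / (rc + dt)
        self.prev_x = 0.0
        self.prev_y = 0.0

    def process(self, frame: Frame) -> Frame:
        out = []
        for x in frame:
            y = self.a * (self.prev_y + x - self.prev_x)
            self.prev_x, self.prev_y = x, y  # filter state carries over to the next frame
            out.append(y)
        return out


def soft_clip(frame: Frame, drive: float) -> Frame:
    """tanh distortion, normalised so that full scale (+/-1.0) stays at +/-1.0."""
    norm = math.tanh(drive)
    return [math.tanh(drive * x) / norm for x in frame]


def effect_for_status(hp_ratio: float, just_won: bool,
                      sample_rate: int = 16000) -> Callable[[Frame], Frame]:
    """Hypothetical status-to-effect mapping.

    Call once per status change and reuse the returned callable for every frame,
    so the high-pass filter keeps its state between frames.
    """
    if hp_ratio < 0.3:  # badly hurt: gritty, distorted voice
        return lambda frame: soft_clip(frame, drive=4.0)
    if just_won:        # excited: thinner, brighter voice
        return OnePoleHighPass(cutoff_hz=300.0, sample_rate=sample_rate).process
    return lambda frame: list(frame)  # normal status: leave the voice untouched


hurt = effect_for_status(hp_ratio=0.1, just_won=False)
print(hurt([0.0, 1.0, -1.0]))
```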

Side-chaining:

Side-chaining is an essential technique in audio mixing: one signal controls the processing of another. The purpose of adding voice to a game is to enhance in-game social interaction, so voice must reach listeners clearly. When a player speaks, the focus of the game's mix should shift from the sound effects to the voice, just as a radio DJ lowers the music while speaking and restores it afterward. In the Wwise + GME solution, all voice streams are sent to the Wwise audio bus, which makes side-chaining possible in games. For example, you can place a Wwise Meter where voice is received and dynamically control the volume of other sound effects based on the Meter's value.
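The ducking behaviour described above, where the level of received voice drives the volume of other game sounds, can be illustrated in plain Python. This is a hedged sketch, not Wwise's Meter plug-in: `rms_db` and `VoiceDucker` are hypothetical names, and the threshold, duck depth, and per-frame attack/release smoothing are assumed values. In Wwise the equivalent would be configured in the authoring tool rather than coded.

```python
import math
from typing import List


def rms_db(frame: List[float], floor_db: float = -120.0) -> float:
    """RMS level of a frame in dBFS, similar in spirit to what a level meter reports."""
    if not frame:
        return floor_db
    rms = math.sqrt(sum(x * x for x in frame) / len(frame))
    return max(floor_db, 20.0 * math.log10(rms)) if rms > 0.0 else floor_db


class VoiceDucker:
    """Side-chain ducking: the voice level controls the gain applied to game sound effects."""

    def __init__(self, threshold_db: float = -40.0, duck_db: float = -12.0,
                 attack: float = 0.5, release: float = 0.1) -> None:
        self.threshold_db = threshold_db
        self.duck_gain = 10.0 ** (duck_db / 20.0)  # -12 dB is roughly x0.25
        self.attack = attack    # fraction of the remaining gap closed per frame when ducking
        self.release = release  # slower recovery once the player stops talking
        self.gain = 1.0

    def update(self, voice_frame: List[float]) -> float:
        speaking = rms_db(voice_frame) > self.threshold_db
        target = self.duck_gain if speaking else 1.0
        coeff = self.attack if target < self.gain else self.release
        self.gain += coeff * (target - self.gain)
        return self.gain

    def apply(self, sfx_frame: List[float]) -> List[float]:
        return [s * self.gain for s in sfx_frame]


ducker = VoiceDucker()
talk, silence = [0.5] * 160, [0.0] * 160
for _ in range(20):
    ducker.update(talk)     # SFX gain falls toward about 0.25 while the player speaks
for _ in range(100):
    ducker.update(silence)  # and recovers toward 1.0 afterwards
```

A fast attack and slow release mirror the radio-DJ behaviour: the music drops as soon as speech starts and comes back gradually, instead of pumping between words.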

The demo video below showcases several capabilities of the Wwise + GME solution, such as reflection, obstruction, and side-chaining applied to GME voice. It switches between first-person, third-person, and top-down views, and the green robot is your teammate talking to you through GME. As the robot's position and surroundings change, the corresponding processing is applied to its voice (as described in the video subtitles). Voice chat processed this way delivers a truly immersive gaming experience. All voices in the video are real-time recordings of the voice stream sent by the remote player, not post-production synthesis.

(As the demo uses binaural virtual sound field technology, please wear headphones for the best effect.)

Summary

The Wwise audio middleware and the GME game voice solution improve game quality from different angles. Wwise greatly increases the efficiency of developing interactive sound effects for a better game audio experience, while GME enhances in-game social interaction for higher player retention. Combined, the two create a synergy that multiplies their benefits. The Wwise + GME solution will become a powerful tool for game sound designers to create the most realistic, vivid, and creative sound and voice effects in games.
