This article gives a quick overview of what I see as the main benefits of using Wwise, particularly when working on higher-budget games.

Let's start by summarizing the benefits of using audio middleware in any project:

- Your project will sound better.

- Your project will use resources more efficiently.

- Your project will require less programming time.

When playing a game, audio is half of the experience, and game audio is about much more than simply triggering sounds. Unfortunately, its scope is often limited, and implementation ends up as a minor task that nobody wants to deal with as the project nears its end. Programming audio is not easy, and it can become a problem if no resources are dedicated to it. Wwise is a crucial development tool because it delivers the three major benefits listed above quickly and with no real drawbacks, improving both the audio implementation pipeline and the quality of the overall project.

Less programming time

Wwise has its own interface, which lets sound designers organize assets and create mechanics completely independently of the game engine. It empowers them to build complex systems very quickly, with no programming required. We are talking about hours upon hours saved on writing custom scripts and on constant back-and-forth with programmers who are already busy with core gameplay. Sound designers now handle by themselves a big chunk of work that programmers would normally have to do.

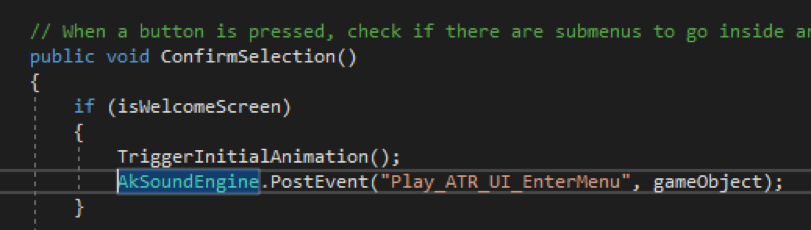

1) Here is what a basic Wwise implementation looks like:

The PostEvent function will be the most frequently written line of code. It triggers the corresponding Event in the sound engine, and that Event can do many things at once, as defined in Wwise.

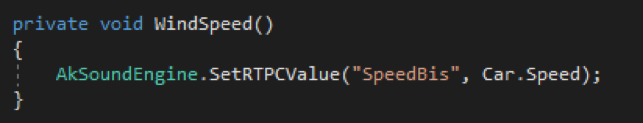
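To make the division of labor concrete, here is a toy model in Python of what posting an Event means: the Event is authored data (a list of actions), and the game code only posts its name. This is not the Wwise API; the `ToySoundEngine` class, the action types, and the Event and sound names are all hypothetical. In the Unity integration, the real call is `AkSoundEngine.PostEvent(eventName, gameObject)`.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str        # hypothetical action types: "play", "stop", "set_switch"
    target: str      # the sound, container, or switch group the action applies to
    value: str = ""  # extra data, e.g. the switch state to set


@dataclass
class ToySoundEngine:
    """Toy model: Events are authored data, the game only posts their names."""
    events: dict[str, list[Action]] = field(default_factory=dict)
    playing: dict[str, set[str]] = field(default_factory=dict)            # object -> sounds
    switches: dict[tuple[str, str], str] = field(default_factory=dict)   # (object, group) -> state

    def post_event(self, event_name: str, game_object: str) -> None:
        sounds = self.playing.setdefault(game_object, set())
        for action in self.events.get(event_name, []):
            if action.kind == "play":
                sounds.add(action.target)
            elif action.kind == "stop":
                sounds.discard(action.target)
            elif action.kind == "set_switch":
                self.switches[(game_object, action.target)] = action.value


# The sound designer authors this side (in Wwise, not in code).
engine = ToySoundEngine()
engine.events["Play_Engine_Start"] = [
    Action("play", "Engine_Starter"),
    Action("play", "Engine_Idle_Loop"),
    Action("set_switch", "Gear", "Neutral"),
]

# The single line the game code needs.
engine.post_event("Play_Engine_Start", "Car")
print(sorted(engine.playing["Car"]))  # ['Engine_Idle_Loop', 'Engine_Starter']
```

The point of the design is that the sound designer can add, remove, or reorder actions inside the Event without the programmer's line ever changing.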

2) The second most used function is SetRTPCValue.

The RTPC (Real-Time Parameter Control), another time-saving feature, is the foundation of complex dynamic audio systems.

In the following example, I link Car.Speed, a real value in the code (ranging from 0 to 30), to SpeedBis, an RTPC I created in Wwise.

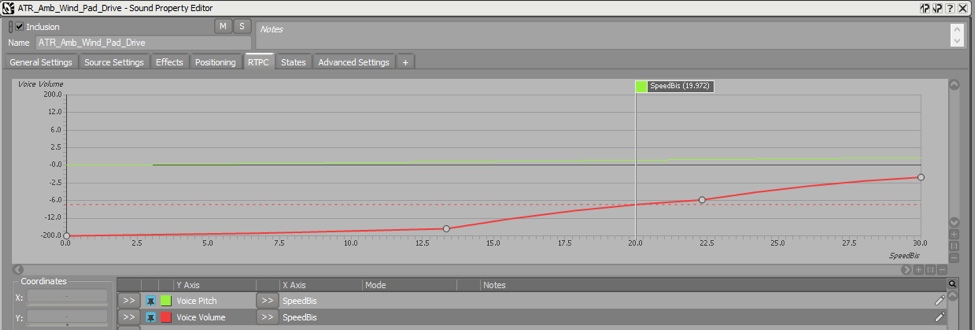

Every time Car.Speed changes, the RTPC value in Wwise updates in real time. In the game, the wind asset gets gradually louder as the car speeds up, and I then tweak the levels in real time while playing. A 5 to 10 minute job!
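In Wwise, the mapping from the RTPC value to the wind's volume is a curve drawn in the authoring tool; the game only sends the raw value (in the Unity integration, through `AkSoundEngine.SetRTPCValue("SpeedBis", speed, gameObject)`). The Python sketch below mimics a piecewise-linear curve so the arithmetic is visible. The curve points (-96 dB at speed 0, -12 dB at 15, 0 dB at 30) are made-up example values, and Wwise curves can also use non-linear shapes that this sketch ignores.

```python
from bisect import bisect_right

# Hypothetical RTPC curve: (SpeedBis value, wind volume in dB).
SPEEDBIS_TO_WIND_DB = [(0.0, -96.0), (15.0, -12.0), (30.0, 0.0)]


def evaluate_curve(points: list[tuple[float, float]], x: float) -> float:
    """Linear interpolation between curve points, clamped at both ends."""
    xs = [px for px, _ in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # index of the first point strictly to the right of x
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)


def wind_volume_db(car_speed: float) -> float:
    """Read the wind volume off the curve; out-of-range speeds are clamped."""
    return evaluate_curve(SPEEDBIS_TO_WIND_DB, car_speed)


for speed in (0.0, 7.5, 15.0, 22.5, 30.0):
    print(f"speed {speed:>4}: wind {wind_volume_db(speed):6.1f} dB")
```

Reshaping how the wind responds to speed then means moving curve points, which is exactly the live tweaking described above, with no change to the game code.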

3) The only times I need to talk to a programmer are to ask which value I can use for my mechanic, or where we can put the PostEvent call for my Event. This takes a couple of minutes, with no script needed whatsoever. Occasionally I need more from the programmer when I want to push my work to the most advanced level possible; this is rare and takes maybe 20 to 30 minutes.

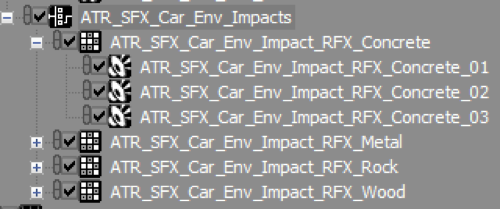

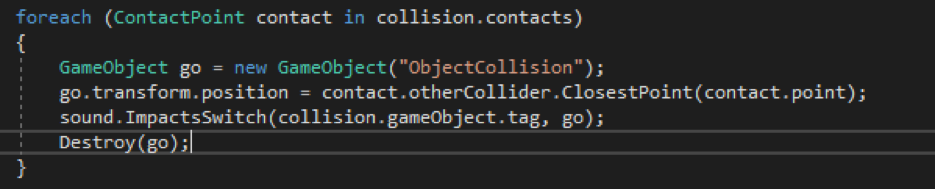

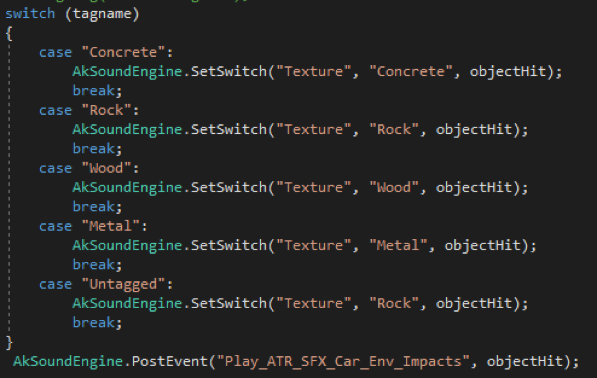

Quick case: the car hits the environment (the map uses four textures). When an impact occurs (OnCollisionEnter), the Event is posted. It goes through the Switch Container on the left, which selects the appropriate assets to play according to the tag. This works fine; the only issue is that the Event is posted on the Game Object the script is attached to. In that case, the sound plays on the car itself, so the impact gets no audio spatialization. I want my sound to be 3D, so the Event must trigger at the point of impact.

With the help of a programmer, we created a custom method that spawns a Game Object at the collision, then sends the tag information to the method I already had, with objectHit as the impact point where the sound plays. This enabled the 3D positioning set up in Wwise.

It took longer, but no more than 20 minutes. Although it required some code, the task remained enjoyable, since we were polishing an existing feature rather than creating a new one.
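The logic of that fix can be sketched as a toy model in Python: a Switch Container keyed by surface tag picks the asset, and the sound is emitted from a temporary emitter at the contact point instead of from the car. The surface names, asset names, fallback surface, and the `Vec3`/`spawn_impact_emitter` helpers are hypothetical, and the sketch assumes the listener follows the car. In the Unity integration, the equivalent steps would use `AkSoundEngine.SetSwitch` and `AkSoundEngine.PostEvent` on the spawned Game Object.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def distance_to(self, other: "Vec3") -> float:
        return math.dist((self.x, self.y, self.z), (other.x, other.y, other.z))


# Hypothetical Switch Container: one switch state (and asset) per surface tag.
IMPACT_SWITCH = {
    "Asphalt": "Impact_Asphalt",
    "Dirt": "Impact_Dirt",
    "Metal": "Impact_Metal",
    "Wood": "Impact_Wood",
}
DEFAULT_SURFACE = "Asphalt"  # assumed fallback for untagged colliders


@dataclass
class ImpactEmitter:
    position: Vec3
    sound: str


def spawn_impact_emitter(surface_tag: str, contact_point: Vec3) -> ImpactEmitter:
    """Create a temporary emitter at the contact point and resolve the switch."""
    state = surface_tag if surface_tag in IMPACT_SWITCH else DEFAULT_SURFACE
    return ImpactEmitter(position=contact_point, sound=IMPACT_SWITCH[state])


# Assumption: the listener follows the car, so a sound posted on the car is
# always at distance 0, while a sound at the contact point has a real position.
listener = Vec3(0.0, 0.0, 0.0)
car = Vec3(0.0, 0.0, 0.0)
hit = spawn_impact_emitter("Metal", Vec3(3.0, 0.0, 4.0))
print(hit.sound, hit.position.distance_to(listener))  # Impact_Metal 5.0
print(car.distance_to(listener))                      # 0.0
```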

4) Scripting basic functions that I can set up in two clicks in Wwise took days upon days. Randomization, linking float or integer values, and managing how audio assets are used, for example, all required a lot of time, and even with all that work, custom scripts can fail and hurt performance. Ideally, programmers should focus on the core features of the game rather than spending hours, days, or months developing systems that a sound engine like Wwise already provides.
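As an example of the randomization logic that otherwise has to be scripted by hand, here is a minimal Python sketch of a random container that never repeats any of its last N picks, similar in spirit to the avoid-repeat option of Wwise's Random Container. The class, method, and asset names are hypothetical, and Wwise's actual selection algorithm may differ.

```python
import random
from collections import deque


class RandomContainer:
    """Pick a random child, never repeating any of the last `avoid_last` picks."""

    def __init__(self, children: list[str], avoid_last: int, seed: int = 0) -> None:
        if not 0 <= avoid_last < len(children):
            raise ValueError("avoid_last must be smaller than the number of children")
        self.children = children
        self.recent = deque(maxlen=avoid_last)  # oldest pick drops off automatically
        self.rng = random.Random(seed)

    def pick(self) -> str:
        candidates = [c for c in self.children if c not in self.recent]
        choice = self.rng.choice(candidates)
        self.recent.append(choice)
        return choice


footsteps = RandomContainer(["Step_01", "Step_02", "Step_03", "Step_04"], avoid_last=2, seed=7)
sequence = [footsteps.pick() for _ in range(12)]
print(sequence)
```

Even this small piece needs validation, state, and a seeded generator to be testable; in Wwise it is a checkbox and a number.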

Using resources more efficiently

Wwise offers deep profiling when connected to a Unity session, or even to a build, and of course provides live mixing and tweaking. Unity allows live mixing, but not changing values or other parameters that affect audio at run time. The Wwise profiler displays detailed data that ultimately helps optimize CPU and memory usage. Wwise not only enables advanced mechanics and better-sounding systems without coding, it also makes optimization easier.

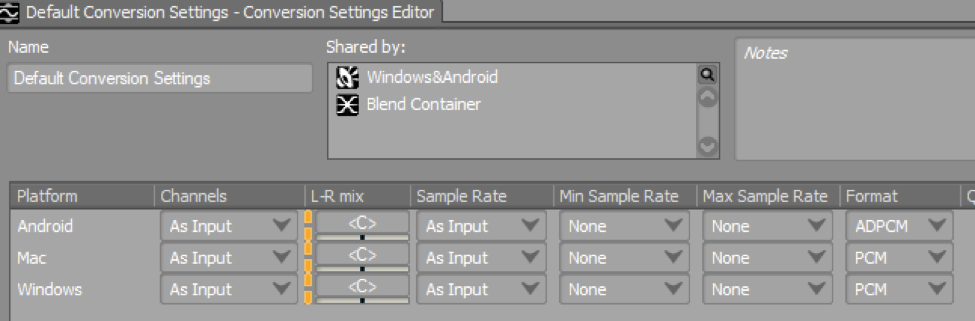

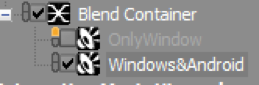

1) Compressing audio is necessary to save memory.

We can set a different compression for each platform our project will ship on, choosing the best settings for each one, and we can exclude assets that a given platform does not need.
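To put numbers on this: the uncompressed (PCM) size of a sound is sample rate x channels x bytes per sample x duration, so one minute of 48 kHz, 16-bit stereo ambience takes 11,520,000 bytes (about 11.5 MB). The Python sketch below does that arithmetic and applies per-platform compression ratios; the platform names and ratios are illustrative assumptions, not Wwise's actual codec figures, which depend on the codec and quality settings chosen.

```python
def pcm_bytes(sample_rate_hz: int, channels: int, bits_per_sample: int, seconds: float) -> int:
    """Uncompressed PCM size: sample rate x channels x bytes per sample x duration."""
    return int(sample_rate_hz * channels * (bits_per_sample // 8) * seconds)


# Hypothetical per-platform compression ratios (1.0 means kept as PCM).
# Real ratios depend on the codec and quality chosen for each platform.
PLATFORM_RATIOS = {"PC": 1.0, "Console": 4.0, "Mobile": 10.0}

ambience = pcm_bytes(48_000, 2, 16, 60)  # one minute of 48 kHz, 16-bit stereo
print(f"PCM: {ambience:,} bytes")
for platform, ratio in PLATFORM_RATIOS.items():
    print(f"{platform}: {ambience / ratio / 1_000_000:.2f} MB")
```

Multiplied across hundreds of assets, the gap between platforms is why per-platform conversion settings matter.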

2) SoundBanks are extremely useful. Organizing our work into groups of data lets us deploy audio in a smarter way.

In practice, this means loading only the assets we need at a specific moment or in a specific map, which gives fine-grained control over memory usage and better performance. Language localization is another neat built-in feature: Unity and Unreal do not provide the same built-in ability to switch from one language to another, replacing dialogue and other voices with the appropriate localized versions.
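Loading banks per map is essentially reference counting: a bank stays in memory while at least one map or system still needs it. The Python sketch below models only that bookkeeping; the bank names, sizes, map layout, and the `BankManager` class are hypothetical, and the actual loading would go through the Wwise SoundBank API.

```python
from collections import Counter
from typing import Optional

# Hypothetical bank sizes (MB) and the banks each map needs.
BANK_SIZES_MB = {
    "Init": 1.0, "Common": 12.0, "Vehicles": 30.0,
    "Map_Desert": 45.0, "Map_City": 60.0, "VO_English": 25.0,
}
MAP_BANKS = {
    "Desert": ["Common", "Vehicles", "Map_Desert"],
    "City": ["Common", "Vehicles", "Map_City"],
}


class BankManager:
    """Reference-counted loading: a bank stays resident while anything needs it."""

    def __init__(self) -> None:
        self.refs = Counter()

    def load(self, bank: str) -> None:
        self.refs[bank] += 1  # a real engine would load the data on the first reference

    def unload(self, bank: str) -> None:
        self.refs[bank] -= 1
        if self.refs[bank] <= 0:  # last user gone: free the memory
            del self.refs[bank]

    def memory_mb(self) -> float:
        return sum(BANK_SIZES_MB[bank] for bank in self.refs)

    def change_map(self, old: Optional[str], new: str) -> None:
        # Load the new map's banks before unloading the old ones, so banks
        # shared by both maps (Common, Vehicles) are never dropped and reloaded.
        for bank in MAP_BANKS[new]:
            self.load(bank)
        if old is not None:
            for bank in MAP_BANKS[old]:
                self.unload(bank)


banks = BankManager()
banks.load("Init")        # global bank, kept for the whole session
banks.load("VO_English")  # localized voice bank for the current language
banks.change_map(None, "Desert")
print(banks.memory_mb())  # 113.0
banks.change_map("Desert", "City")
print(banks.memory_mb())  # 128.0
```

Swapping languages follows the same pattern: unload one voice bank and load another, with the rest of the game untouched.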

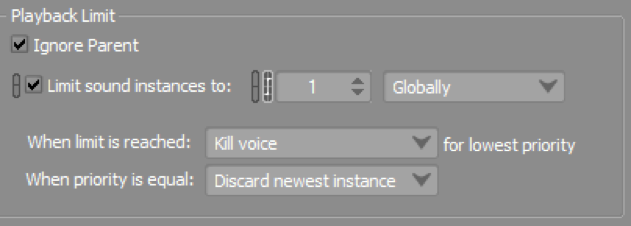

3) The playback limit is an essential performance setting, protecting us from bugs and problems that faulty scripts would otherwise cause.

No need to complain when a script fires an Event a thousand times for some reason: most of the time, we can solve the issue on our own by limiting the number of instances the file or container can play. This saves programmers' time, and therefore project resources. This feature is a MUST HAVE, and living without it is painful.
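The idea behind a playback limit fits in a few lines: a container allows at most N simultaneous instances, and each request beyond that displaces an existing one. The Python sketch below models a "discard the oldest instance" policy; the class, the limit of 4, and the method names are hypothetical. In Wwise, the limit and what happens when it is reached are configured in the authoring tool, not in code.

```python
from collections import deque


class PlaybackLimiter:
    """Allow at most `limit` live instances; discard the oldest when exceeded."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.active = deque()  # instance ids, oldest on the left
        self.next_id = 0
        self.discarded = 0

    def post(self) -> int:
        if len(self.active) >= self.limit:
            self.active.popleft()  # stop the oldest instance
            self.discarded += 1
        self.next_id += 1
        self.active.append(self.next_id)
        return self.next_id


impacts = PlaybackLimiter(limit=4)
for _ in range(1000):  # a faulty script firing the Event 1000 times
    impacts.post()
print(len(impacts.active), impacts.discarded)  # 4 996
print(list(impacts.active))                    # [997, 998, 999, 1000]
```

However many times the script misfires, the audio cost stays bounded at four voices.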

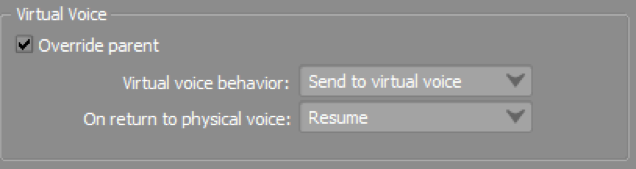

When the instance limit is reached, we can send the extra audio to a virtual voice. This is very resource-friendly, as the engine stops processing the audio and only performs volume computation until the sound is needed again. The same applies when an asset falls below the volume threshold set in the project settings (e.g. a 3D object too far from the listener, or constant audio running in the background, like the RPM changes of a car). Without virtualization, such a sound would be inaudible but still processed, needlessly taking up one of the hardware's active voices. Sending this 'unheard' audio to virtual voices frees those resources.
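The threshold-based decision can be illustrated with a simple distance attenuation: each voice's volume is recomputed on every update, and voices below the threshold stop being rendered while their volume keeps being tracked. The Python sketch assumes an inverse-distance rolloff (about -6 dB per doubling of distance) and a -80 dB threshold; both are hypothetical stand-ins for the attenuation curves and volume threshold configured in Wwise, and the voice names are made up.

```python
import math

VOLUME_THRESHOLD_DB = -80.0  # assumed project-wide volume threshold


def attenuation_db(distance: float, ref_distance: float = 1.0) -> float:
    """Inverse-distance rolloff: about -6 dB per doubling beyond ref_distance."""
    return -20.0 * math.log10(max(distance, ref_distance) / ref_distance)


def update_voices(voices: dict[str, tuple[float, float]]) -> dict[str, str]:
    """Map each voice (source dB, distance) to 'physical' or 'virtual'.

    Volume is recomputed on every update, even for virtual voices, so a voice
    that comes back above the threshold becomes physical again.
    """
    status = {}
    for name, (source_db, distance) in voices.items():
        volume_db = source_db + attenuation_db(distance)
        status[name] = "virtual" if volume_db < VOLUME_THRESHOLD_DB else "physical"
    return status


voices = {
    "Wind_Near": (0.0, 2.0),                # about -6 dB: rendered
    "Engine_RPM_Distant": (-20.0, 5000.0),  # about -94 dB: virtual
    "Radio_Far": (-30.0, 200.0),            # about -76 dB: rendered
}
print(update_voices(voices))
# The distant car drives closer: its voice is rendered again.
voices["Engine_RPM_Distant"] = (-20.0, 50.0)
print(update_voices(voices)["Engine_RPM_Distant"])  # physical
```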

Together, these adjustments to Wwise's performance settings let you use memory and processing power efficiently.

Using a game engine without a sound engine means relying on scripting for all of this, and we all know that poorly executed audio can be very heavy on performance.

Sounding better

You can create the most amazing audio assets in your DAW (Digital Audio Workstation) using the best sound libraries and recordings, but that is irrelevant if the assets are not properly implemented. Great tools naturally improve the quality of a project: when you have excellent control over your work, it shows in the final result.

Programmers simply do not have the time to handle all of our requests and fulfill our audio needs. If, as a sound designer, you have to constantly go to programmers for minor details, you may be forced to focus mainly on the bigger picture and leave out finer details that need more attention. Unity, for example, does not let you adjust the volume of individual assets. This becomes a problem when a custom script uses an array of audio files that all share a single audio source. Good work is about precision and demands attention to detail in everything you put in the game, and being unable to tweak those settings efficiently can take a toll on the mix. The same applies to pitch, filters, and other effects, which in Unity you can only apply to a mixer or an audio source. And since we want to keep the number of audio sources to a minimum, individual control over files ends up compromised.
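In a shared-source setup, per-file control has to be rebuilt by hand, typically as a per-clip table of offsets combined with the source's settings at play time. The Python sketch below shows that bookkeeping; the clip names, offsets, and the 0-1 volume range are assumptions for illustration, while the conversions themselves are standard (gain = 10^(dB/20), pitch ratio = 2^(cents/1200)).

```python
import math


def db_to_gain(db: float) -> float:
    """Convert a dB offset to a linear gain factor."""
    return 10.0 ** (db / 20.0)


def cents_to_ratio(cents: float) -> float:
    """Convert a pitch offset in cents (100 per semitone) to a playback-rate ratio."""
    return 2.0 ** (cents / 1200.0)


# Hypothetical per-clip offsets (dB, cents) for clips sharing one audio source.
CLIP_OFFSETS = {
    "Door_Slam_01": (0.0, 0.0),
    "Door_Slam_02": (-3.0, -100.0),  # a bit too loud and a semitone too high
    "Door_Slam_03": (2.5, 50.0),
}


def play_settings(clip: str, source_volume: float, source_pitch: float = 1.0) -> tuple[float, float]:
    """Combine the shared source's volume/pitch with the clip's own offsets."""
    offset_db, offset_cents = CLIP_OFFSETS.get(clip, (0.0, 0.0))
    # Assumes a 0-1 linear volume range, so boosts are capped at 1.0.
    volume = min(1.0, source_volume * db_to_gain(offset_db))
    pitch = source_pitch * cents_to_ratio(offset_cents)
    return volume, pitch


for clip in CLIP_OFFSETS:
    print(clip, play_settings(clip, source_volume=0.8))
```

Note the cap: with a 0-1 volume range, a quiet clip can only be boosted if the shared source runs below full volume, which is exactly the kind of compromise that per-object volume in Wwise avoids.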

I have mostly mentioned mutual benefits, to make this document relevant to everyone. Middleware of course brings many conveniences to sound designers, but there are also results that you cannot obtain without Wwise, or without spending a lot of time and effort.

Quick and easy work leads to better work overall!

More resources!
