This article will try to shed light on how we use Wwise and other tools at Rovio in free-to-play (F2P) mobile game audio development, and strategies we use to improve workflows and efficiency.

Introduction

Some may think that mobile game audio is a smaller-scale version of audio development for PC/console, but the truth is that mobile game audio has its own unique characteristics and challenges. In my experience working as a sound designer at a large mobile developer, I see that this is especially true for the free-to-play (F2P) model, in which games are developed more as an ongoing service than as a one-off product.

The most typical challenge of live service game development is the practice of developing and maintaining the product with constant updates over its lifetime, which can span several years. This requires solid infrastructure and scalable tools, which in turn demand a certain level of foresight and preparedness. In this sense, mobile games and some multiplayer desktop games have some similarities.

Another characteristic of mobile game development, especially in larger companies, is the number of concurrent projects or overlapping development cycles. Generally, in PC/console development, the project scale is larger, the development cycle is longer, and there’s usually a single project that is actively worked on. In mobile, this is reversed: there are several smaller projects with shorter development times.

Here we have a picture of an audio team whose efforts are spread thin over multiple projects with varied technical and artistic needs, and in different stages of production. The team is also burdened by maintaining live products with continuous updates. Add to that the iterative and test-driven nature of F2P games, where any game can have the risk of being canceled due to poor performance or a myriad of other reasons, and new prototypes popping up like mushrooms. It’s not hard to imagine that such a team needs smart and efficient workflows to cope with all these challenges. In this article, I will try to shed light on how we have been solving some of these challenges at Rovio from the tech perspective, including how we take advantage of Wwise and other tools, with examples from some of our recent projects, such as the latest Angry Birds title.

Alice in Templateland

When handling multiple projects, the biggest help comes from standardizing the tools we use, so that workflows and improvements can be carried over across projects. Learning can be synergistic, allowing knowledge to be built on top of what is learnt using the same systems previously. On top of that, it’s crucial to use scalable, future-proof and convenient tools when considering maintaining projects throughout the course of years.

Arguably the most central tool in a game audio designer’s arsenal is the middleware, or any other implementation platform (native or custom game engine tools, etc.). It defines fundamental aspects of our work, such as how we prepare assets for integration, how we build playback systems and behaviors, and what our technical limitations are. Using the same implementation tool in multiple projects allows us to reuse similar approaches, such as dynamic mixing, asset packaging (SoundBanks), templates and presets.

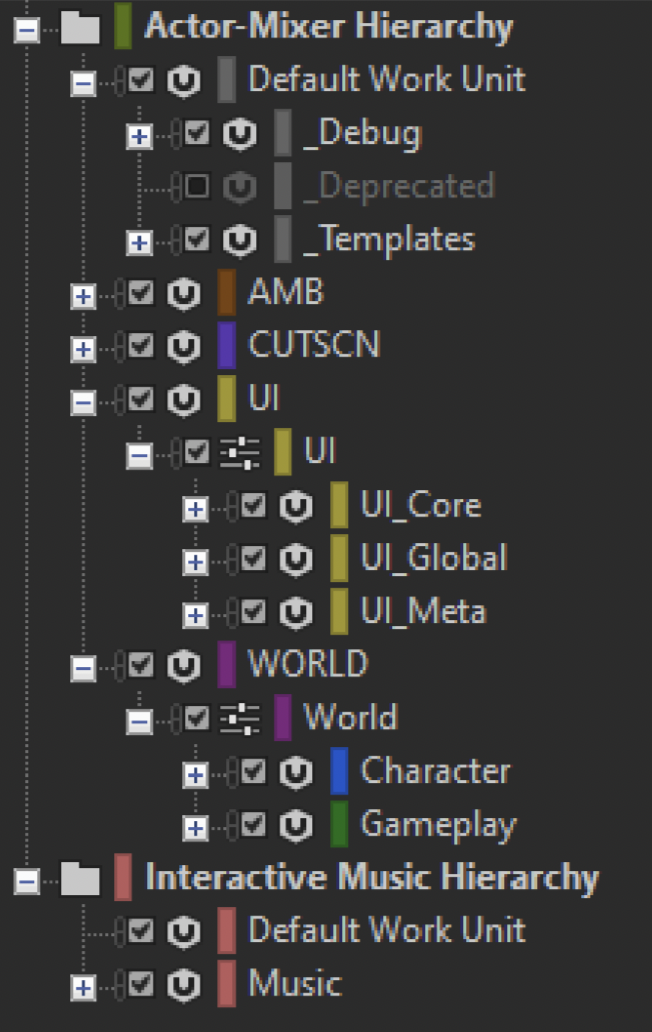

Since we’ve been using Wwise as our go-to middleware for a while now, we maintain a template Wwise project in a repository as a blank(ish) project to use as a basis for any new project. The template project has certain settings to speed up the initial setup process, such as a generic mixer hierarchy, debug containers and events, template actor-mixers and container presets, some common game syncs, side-chain RTPCs, conversion settings, ShareSets, etc. A template project custom-tailored for your typical needs is a good starting point, and faster than starting from scratch. There’s usually a bit of customizing needed for the specific project, but that’s actually a good thing, because it lets us question our methods and potentially improve them, while still letting us start a few steps ahead. The template is regularly updated with the latest improvements we make in recent projects, so our next project can start up to date with the latest developments in our implementation approach.

Wwise template project

In the past, I’ve tried taking a previous Wwise project and making a template out of it for the next project, but I find this approach very tedious and error-prone. Plus, it prevents you from having a fresh approach and trying new things, making you fall back on your familiar ways of working.

That’s a Wrap

Having a standard middleware is great, but in reality, it’s not always a possibility. Every project has its own needs and dynamics, and in certain cases you may find yourself using a different set of tools. Different middleware (or no middleware?), a completely different engine, or just maintaining legacy projects using different tools…

We have a custom tool for Unity in our arsenal called AudioWrapper to help cope with such scenarios. It was initially designed by my former colleague Filip Conic to act as a wrapper class between the game engine and audio middleware. By using AudioWrapper, instead of relying only on the middleware-specific integration, we are able to make the audio code middleware-agnostic, keeping the audio coding experience similar across all projects. With a wrapper like this, regardless of the tools we use, we have a common interface to work with. It makes it easier to onboard people to multiple projects, not only audio personnel but also other developers who may not be familiar with the third-party API.

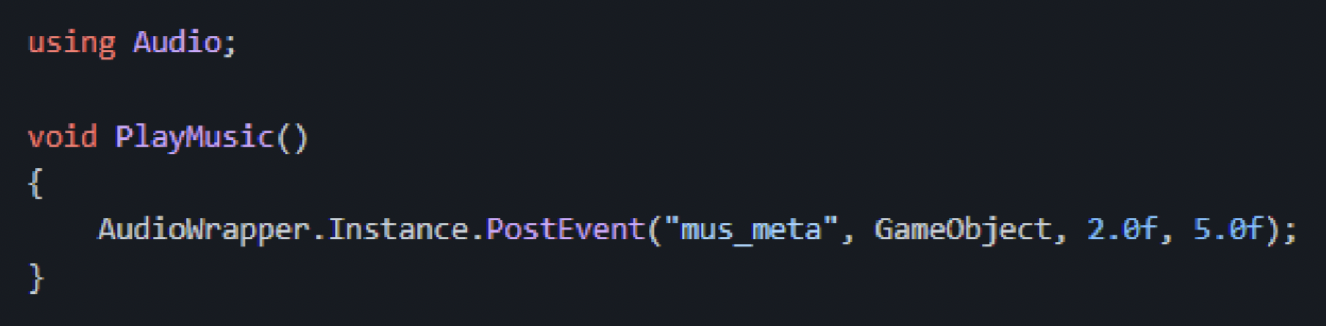

AudioWrapper usage example

Another important thing AudioWrapper does is standardize the way we approach integrating audio on a higher level. The way every game is set up is different, and integrating sounds through code allows us to standardize our methods compared to using GUI-based integration tools, regardless of how the game entities and logic are built. This is a choice we made in order to use a similar implementation workflow on every project, covering how sounds and music are triggered and loaded/unloaded, and how events are handled.

A bonus advantage of using a wrapper is the option to easily swap middleware mid-development. This is a common scenario where a prototype starts with a native tool for simple and fast iteration, and later, when the project gains momentum, switches to a middleware like Wwise to take advantage of convenient or advanced features. Even though the main tool has changed, syntax is still the same and the entire audio-related code needs little change to work out-of-the-box.
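To make the wrapper idea concrete, here is a minimal sketch in Python rather than the Unity code of the actual tool. Every name in it (`AudioBackend`, `LoggingBackend`, `NativeBackend`, `MiddlewareBackend`, and the methods of this `AudioWrapper` class) is hypothetical and chosen for illustration only. The real AudioWrapper API is not shown in this article, and the backends below only record calls instead of playing sound.

```python
from abc import ABC, abstractmethod


class AudioBackend(ABC):
    """Hypothetical interface that each audio tool adapter would implement."""

    @abstractmethod
    def post_event(self, event_name, emitter_id):
        ...

    @abstractmethod
    def load_bank(self, bank_name):
        ...


class LoggingBackend(AudioBackend):
    """Stand-in adapter: records calls so the sketch runs without an engine."""

    prefix = "backend"

    def __init__(self):
        self.log = []

    def post_event(self, event_name, emitter_id):
        self.log.append(f"{self.prefix}:play:{event_name}:{emitter_id}")

    def load_bank(self, bank_name):
        self.log.append(f"{self.prefix}:load:{bank_name}")


class NativeBackend(LoggingBackend):
    prefix = "native"


class MiddlewareBackend(LoggingBackend):
    prefix = "middleware"


class AudioWrapper:
    """Single entry point for game code; the backend behind it can change."""

    def __init__(self, backend):
        self._backend = backend

    def set_backend(self, backend):
        # Swapping tools mid-development only touches this one place.
        self._backend = backend

    def play(self, event_name, emitter_id=0):
        self._backend.post_event(event_name, emitter_id)

    def load(self, bank_name):
        self._backend.load_bank(bank_name)
```

Game code would only ever call `audio.play("ui_click")` or `audio.load("Core")`, so replacing `NativeBackend()` with `MiddlewareBackend()` leaves those call sites untouched.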

Let’s Just Cool Down

The wrapper approach comes with some overhead of developing and maintaining an additional tool, but its benefits definitely justify the cost in our case. It also makes it that much easier to build on top of the middleware features for our own needs.

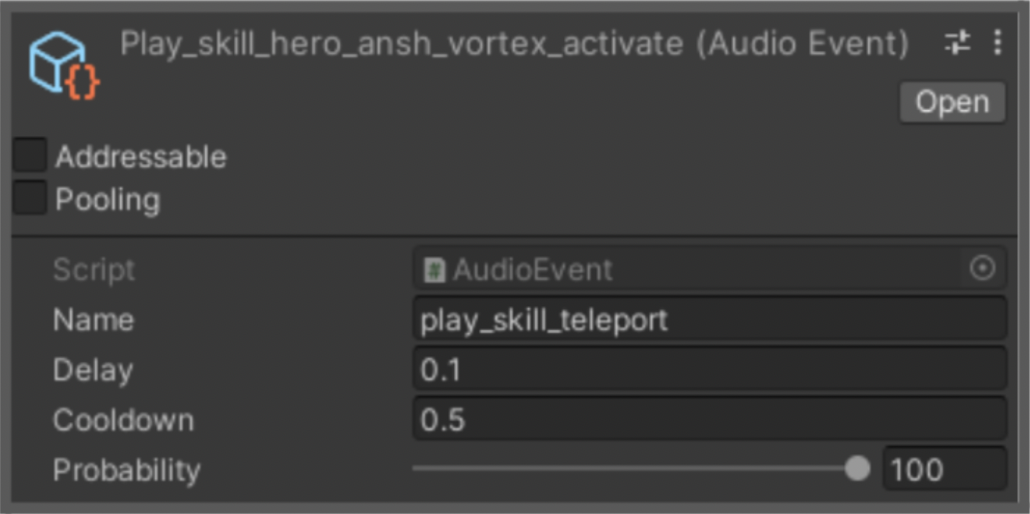

Darkfire Heroes, a mobile RPG, had a ton of characters and actions, causing many simultaneous voices to play. Due to the more casual nature of mobile games, there’s already a certain difficulty in motivating players to keep the sound on. Therefore, making the sound less cluttered and more pleasant to listen to was all the more important. We also wanted to unmask the most important information for the player at any given time, making the game more enjoyable without constantly having to focus on visual information. Voice limiting and dynamic mixing options in Wwise go a long way, but I wanted an additional layer of control, so we wrapped Wwise events with a few extra features as an extension to AudioWrapper. It started with an event cooldown system, which allowed us to throttle the number of subsequent events within an adjustable time window. Once we had that working, it was easy to add further controls, such as probability and delay. With these additional control points, we were able to fine-tune settings at the event (macro) level as well as the event action and voice (micro) levels, which gave us tremendous flexibility to create clarity in the mix.
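The cooldown, probability and delay controls described above can be sketched roughly as follows. This is an illustration in Python, not the Darkfire Heroes implementation: `EventSettings` and `EventGate` are hypothetical names, and the sketch assumes the simplest form of throttling, where at most one trigger per event name is let through in each cooldown window.

```python
import random
import time
from dataclasses import dataclass
from typing import Optional


@dataclass
class EventSettings:
    cooldown: float = 0.0     # seconds during which repeat triggers are dropped
    probability: float = 1.0  # chance (0..1) that a trigger is allowed to play
    delay: float = 0.0        # seconds to wait before the event is posted


class EventGate:
    """Decides whether a requested event should reach the middleware."""

    def __init__(self, clock=time.monotonic, rng=random.random):
        self._clock = clock       # injectable so the gate can be tested
        self._rng = rng
        self._last_played = {}    # event name -> time of last accepted trigger

    def request(self, name: str, settings: EventSettings) -> Optional[float]:
        """Return the time at which to post the event, or None if dropped."""
        now = self._clock()
        last = self._last_played.get(name)
        if last is not None and now - last < settings.cooldown:
            return None  # still cooling down
        if self._rng() >= settings.probability:
            return None  # lost the probability roll
        self._last_played[name] = now
        return now + settings.delay
```

A caller would post the event to the audio engine at the returned time and skip it when the result is `None`. In this sketch a trigger that loses the probability roll does not start a cooldown, which is one of several reasonable design choices.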

An example AudioEvent scriptable object from Darkfire Heroes

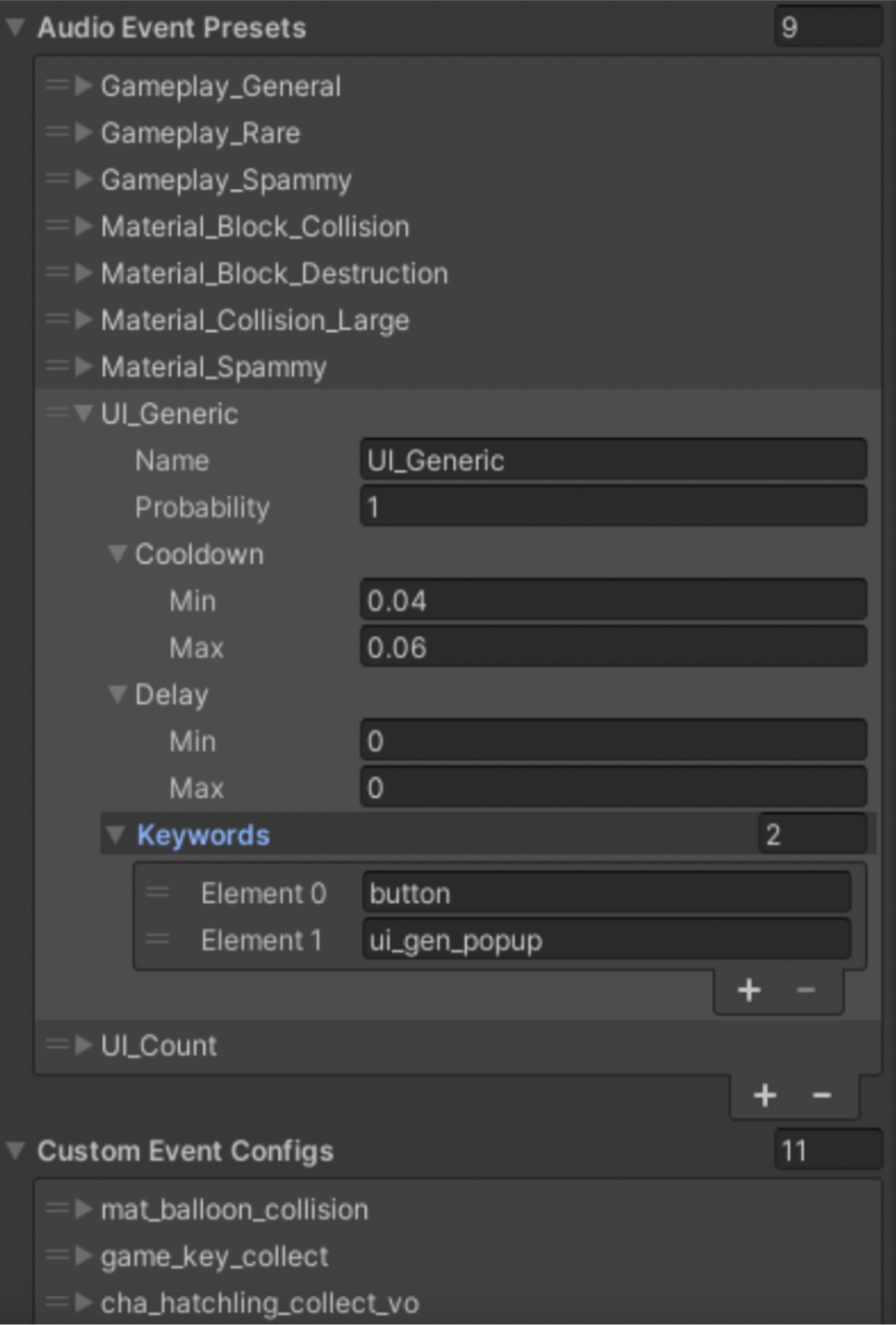

In Angry Birds: Journey, my former colleague Mikko Kolehmainen took the cooldown system from Darkfire Heroes and turned it into something we call Audio Event Presets. In this iteration, instead of wrapping middleware events, we specify event handles (strings) with settings similar to before (cooldown, delay, probability and potentially more), and any matching events will adhere to those settings. This system has been far less hassle to maintain, and it makes bulk changes much easier to apply. As a bonus, all of this data is stored as an XML file that is pre-loaded to improve performance (in the earlier version, the data was fetched at run-time). On the other hand, it requires a very consistent naming scheme; but then again, who is against a good, consistent naming convention anyway (other than a few evil blasphemers)? That’s the next best thing after a good color convention!
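As a rough illustration of string-matched presets loaded once from XML, consider the sketch below. The XML schema, the attribute names and the wildcard matching are all assumptions made for this example; the article does not describe the actual file format or matching rules used in Angry Birds: Journey.

```python
import fnmatch
import xml.etree.ElementTree as ET

# Hypothetical preset file; the first matching pattern wins.
PRESETS_XML = """
<presets>
  <preset pattern="enemy_*_hit" cooldown="0.2" probability="0.8" delay="0.0"/>
  <preset pattern="ui_*" cooldown="0.05" probability="1.0" delay="0.0"/>
</presets>
"""


def load_presets(xml_text):
    """Parse the presets once, up front, into (pattern, settings) pairs."""
    root = ET.fromstring(xml_text.strip())
    presets = []
    for node in root.findall("preset"):
        settings = {
            "cooldown": float(node.get("cooldown", "0")),
            "probability": float(node.get("probability", "1")),
            "delay": float(node.get("delay", "0")),
        }
        presets.append((node.get("pattern"), settings))
    return presets


def find_preset(event_name, presets):
    """Return the settings of the first pattern matching the event name."""
    for pattern, settings in presets:
        if fnmatch.fnmatchcase(event_name, pattern):
            return settings
    return None  # no preset: the event plays with default behavior
```

With a consistent naming scheme, a single entry such as `enemy_*_hit` covers every enemy hit event at once, which is what makes bulk changes cheap in this kind of setup.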

Audio Event Preset usage in Angry Birds: Journey

Mix It in the Fix

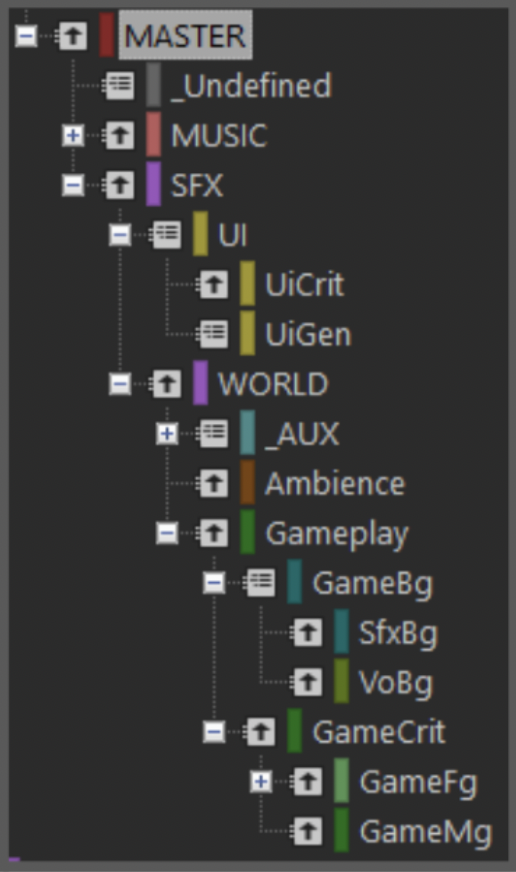

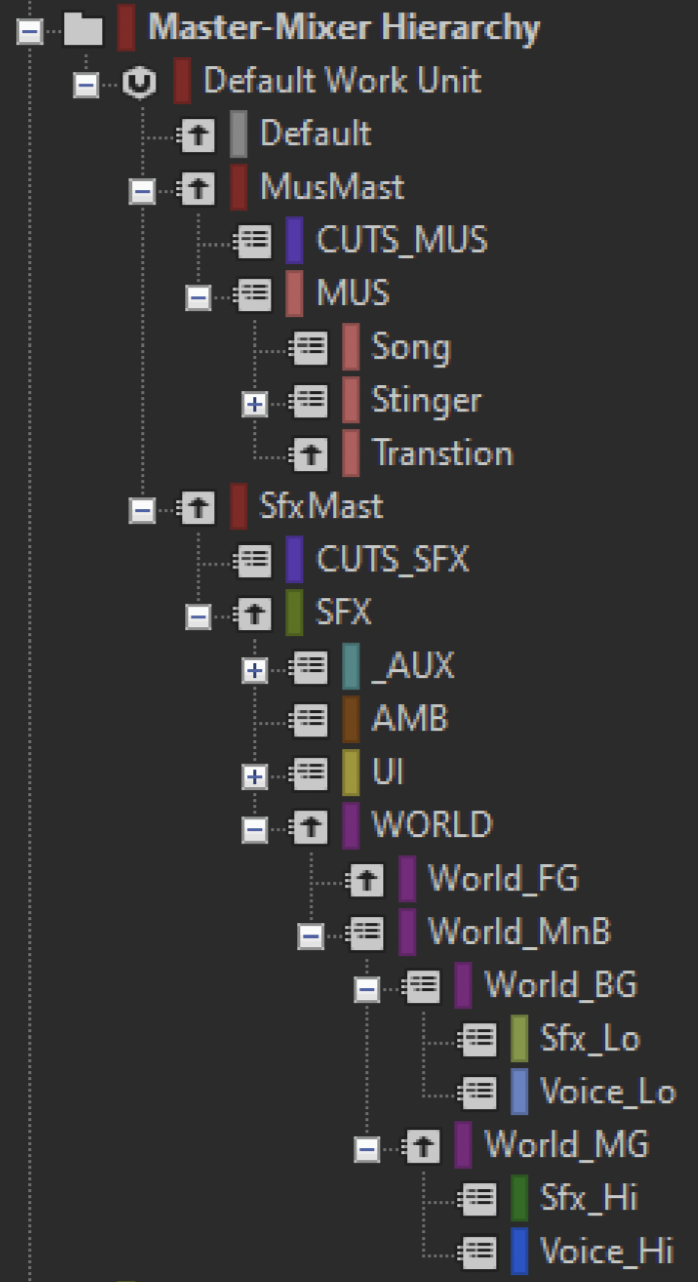

Event and voice limiting are only one side of the coin on the journey to a clear mix. On the other side is dynamic mixing, which I see as a tool for filtering and prioritizing the constant flow of rather unpredictable voices into the most intelligible, pleasant and clear soundscape possible. As such, a good mix starts from a good mixer hierarchy, which dynamically responds to the game events and resulting sounds.

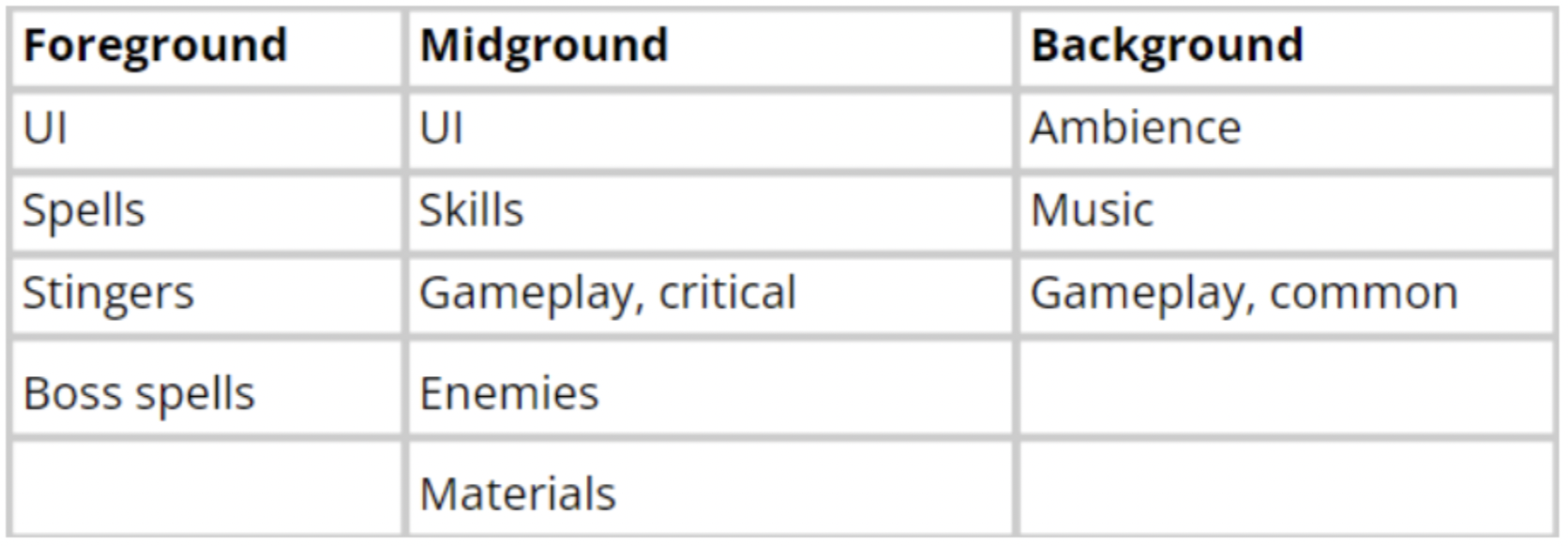

We build our mixer structures around priorities. Instead of only using busses that follow the sound types (ambience, music, gameplay, etc.), we utilize a structure that follows the priority of the sound. We’ve used simple categories such as background (BG), mid-ground (MG) and foreground (FG) to distinguish between priorities. Different priority groups interact with each other through states, auto-ducking, and RTPCs used as side-chain driven dynamic controls.

Mixer structure example

For example, in a shooter game, weapons may be high priority, enemy movement medium priority, and player movement low priority. This approach allows for keeping the mixer structure and bus names more generic, which makes it easier to take advantage of the mixer template described earlier. We still have custom busses for each individual project, but the connections between the main busses remain the same, making it very convenient to have a decent-sounding mix from the get-go. Most of the time, all it needs is a bit of customization.
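The interaction between priority groups can be pictured with a small numeric sketch. It is not a description of how Wwise computes ducking or of our actual bus settings: the threshold, the maximum duck amount and the function names are illustrative assumptions, and the model simply lets each bus be attenuated by the loudest bus of higher priority.

```python
PRIORITY_ORDER = ["FG", "MG", "BG"]  # highest priority first


def duck_amount_db(sidechain_db, threshold_db=-30.0, max_duck_db=-6.0):
    """Map a side-chain meter level (dBFS) to an attenuation in dB.

    Below the threshold nothing is ducked; between the threshold and
    0 dBFS the attenuation grows linearly up to max_duck_db.
    """
    if sidechain_db <= threshold_db:
        return 0.0
    amount = (sidechain_db - threshold_db) / (0.0 - threshold_db)
    return max_duck_db * min(1.0, amount)


def mix_offsets(levels_db):
    """Return the volume offset (dB) applied to each priority bus."""
    offsets = {}
    for index, bus in enumerate(PRIORITY_ORDER):
        higher = PRIORITY_ORDER[:index]
        # The strongest (most negative) duck from any higher bus wins.
        offsets[bus] = min(
            (duck_amount_db(levels_db[name]) for name in higher),
            default=0.0,
        )
    return offsets
```

For instance, with the foreground at 0 dBFS and the mid-ground silent, both the mid-ground and background busses receive the full 6 dB cut, while a foreground at -15 dBFS cuts them by 3 dB.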

Mixing priority example from Darkfire Heroes

It’s Alive

The work is not done in an F2P mobile game when it’s shipped. In fact, one can say it’s just starting. “Shipping” a mobile game usually refers to the global launch, and that is not an end, but the beginning of the game’s live services. The game has already been in soft-launch for a while to begin with.

What this multi-release model means for audio developers is that the overall scope of content and features is produced in parts and rolled out gradually at each milestone. Soft-launch, where the game is released only in a restricted number of regions, is analogous to the alpha stage in premium games. Towards global launch, the game will get closer and closer to beta through continuous updates and iterations. The audio work may be ramped up either gradually during this period, or, more often than not, by having a big sprint closer to the launch date. The goal is to go gold by that date, but in reality, some features may ship at beta quality at this point. This is possible thanks to the next phase of the game’s release schedule: live service.

During live-ops, the game is polished, improved, fixed and expanded through regular (usually monthly or bi-monthly) updates. This is a chance for the audio team to polish and fix any content that was not up to the standards due to time constraints. Not only that, new features and content will be added on each update: A new mechanic, a new character, a new chapter, a new music track, etc.

This is a great opportunity to have another look at the sound and improve any imperfections. A great advantage live service games have is that it’s much easier to react to player feedback and make necessary changes via these initial updates. You can imagine the first few updates after the game’s release keeping the audio team quite busy, and the workload slowly ramping down towards the later updates. These updates are also an opportunity to dive deeper into the core themes of the game and explore broader artistic avenues. The first few updates will also test the scalability of your systems and structures, and this is a good time to review your workflow and take notes for future improvements.

All the advantages aside, the live-ops model requires the audio team to be ready to deliver content every month, and not for just one game but many! Not to mention the work required for new games.

The key to handling the ongoing updates like a boss is to have a good plan and solid communications. As a start it's important to gauge the upcoming content scope well, and allocate resources accordingly. A timeline of all upcoming milestones is key. All audio needs should be gathered from producers promptly, and requirements on the audio side communicated.

Once a solid production backbone is established, what comes next is tools and conventions that enable updating the game without causing issues or creating friction. Within Wwise, this may look like having the hierarchy, SoundBank structure, templates and the naming convention (as always) built with scalability in mind. Taking advantage of Wwise Work Units to isolate the content is a smart choice here. The workflow should be clear and easy enough that even newcomers can jump into the project without friction and implement new assets in a way that is consistent with the rest of the content.

The next important step is to test the content in various stages. The first step is testing within the game engine. Once we have made sure that all the new content works without errors (or, even more importantly, without accidentally breaking other parts of the game), it’s time to test the build on a device. Before testing on a device, we try to test the build itself to see if there are any low-level issues. This can be achieved by keeping the audio work on a separate branch in the version control tool. Once the build is proven to be error-free, it can be tested on device by a member of the audio team and/or the QA team. This is also a good place to get feedback from the creative director of the feature (presumably, you’ve already done this before implementing the content using video captures of gameplay, but nothing can replace the actual gameplay experience).

Following these steps will dramatically reduce the chance of facing issues in live-ops updates. At any rate, setting a deadline for audio development as a safety margin before the feature-complete date (at least a week’s time) will give you enough headroom to deal with any emerging issues (which surely will happen one way or another).

Optimal Limits

Game audio has moved past many of the technical limitations of ye olde times, but mobile still has room to grow in this area. Lower memory and CPU budgets to accommodate lower-grade devices, as well as small package size limits, are still relevant issues. Therefore, it’s important to touch upon some of the optimization strategies we’ve been using.

In older generations of mobile gaming, I remember using brutal methods like reducing music to mono, using half sample rates like 22.05 kHz, using the lowest quality conversion settings, or even stricter methods the audiophile in me is ashamed to tell… We’re lucky that we don’t have to be that strict these days. At Rovio, we try not to limit our creative ideas within any technical boundaries. At the least, we start with open-ended ideas, and later fine-tune them to fit realistic memory and processing limits. For example, in Darkfire Heroes, we had over 100 minutes of original music in the game, not to mention the tons of different characters and spells!

Darkfire Heroes soundtrack

The most obvious strategy is to use conservative conversion settings. The trick is to know where to keep settings tight and where to keep them loose. I like to start with answering a few simple questions before deciding what kind of conversion I will apply to a sound:

- How often is the sound played?

- How long is the sound?

- How significant is the sound for the player experience?

- What are the spectral and spatial qualities of the sound?

Answering these questions will help me to reach an optimal point between quality and performance. If the sound is played frequently, it will take a heavier toll on the CPU, and would benefit from quicker loading. On the other hand, if it’s a sound that the player will hear all the time, the quality should be decent. A rarely played sound can usually tolerate lower quality, because it will only be a passing detail. Then again, if that sound has a significant meaning for the gameplay experience, it needs to feel rewarding and special. For instance, I may want to keep it stereo and with full sample-rate to get all the details across.

The answers will change from game to game, as the priorities and goals will always be different, but they will surely point you in the right direction. Based on our typical games, we identified a few different presets that cover the usual conversion scenarios in our Wwise template. This way, it’s easy to quickly achieve a good starting point for performance, and tweak parameters such as sample rate and compression quality as needed. Since we can’t reduce the bit depth in Wwise (but we can lower the sample rate), we prefer to export files at 48 kHz and 16-bit (sometimes 24-bit).
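The four questions above can be turned into a simple decision helper. The sketch below is only an illustration of that reasoning: the preset values (48, 32 and 24 kHz, the quality labels, the 30-second cut-off) are made-up numbers rather than our actual template presets, and treating long files as candidates for stronger compression is an assumption of this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConversionChoice:
    sample_rate_hz: int
    channels: int
    quality: str  # illustrative label, not a Wwise setting name


def choose_conversion(plays_often, significant, is_wide, has_high_freq,
                      duration_s):
    """Pick a starting point for conversion from the four questions."""
    if significant:
        # Rewarding, special moments keep full rate and stereo if needed.
        return ConversionChoice(48000, 2 if is_wide else 1, "high")

    # Frequently heard or bright sounds keep a decent sample rate.
    rate = 32000 if (plays_often or has_high_freq) else 24000
    # Sounds heard all the time should still sound decent.
    quality = "medium" if plays_often else "low"
    if duration_s > 30.0:
        # Assumption: long, non-critical files dominate package size.
        quality = "low"
    return ConversionChoice(rate, 1, quality)
```

A rare, dull, short one-shot would come out as 24 kHz mono at low quality, while a key reward sound with a wide stereo image would keep 48 kHz stereo. Either result is only a starting point to tweak by ear.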

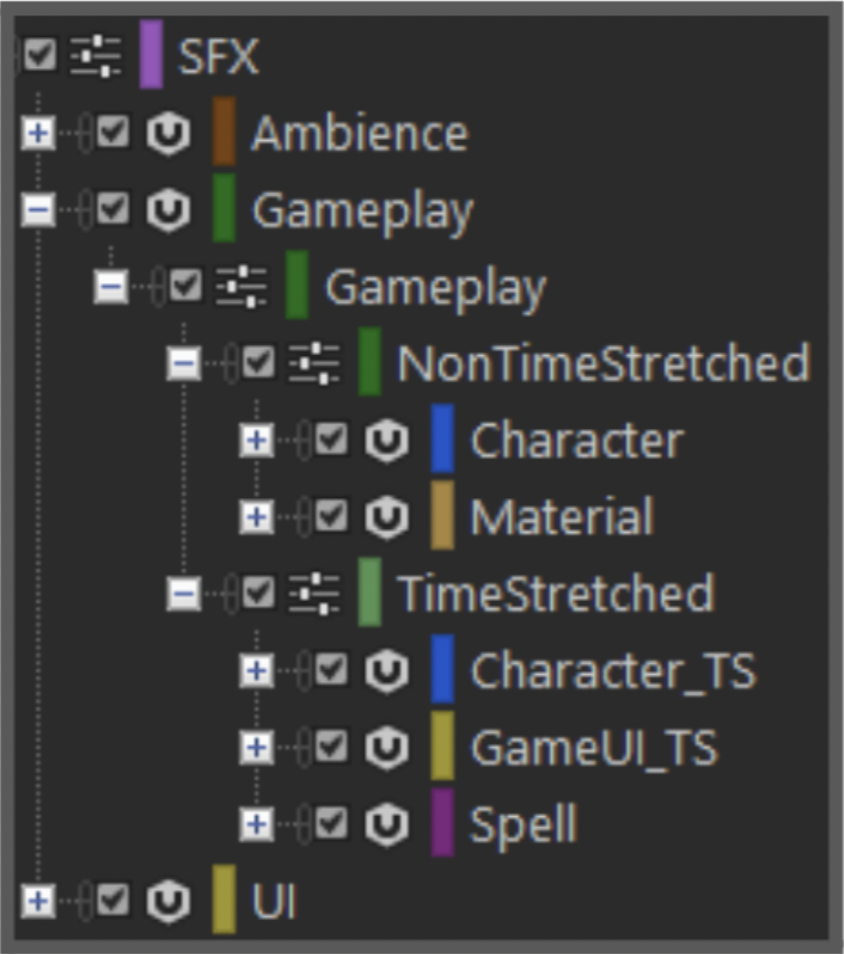

Another aspect of optimization comes from controlling the number of concurrent voices and processes. We have good priority and voice limiting settings in place, but the event cooldown system mentioned before is a huge helper too. Besides that, it’s also important to set up the actor-mixer hierarchy in a way that takes full advantage of property inheritance and limits redundant usage of actor-mixers or properties. The same applies to the master-mixer hierarchy. For example, we structured the whole actor-mixer hierarchy in Darkfire Heroes around a time-stretch plug-in, so that the plug-in instance is not duplicated on all voices. It made the project structure a bit tedious to navigate, but helped the performance tremendously.

Darkfire Heroes actor-mixer hierarchy

We also use other small tricks, like modifying frequently used RTPCs to have only linear curves instead of Béziers. However, I think the biggest bang for the buck comes from designing the assets and the Wwise project to be scalable from the get-go. This involves things like creating shareable assets that can be used for multiple purposes, but it can also mean knowing where to limit the asset creation in the first place. For example, in Darkfire Heroes, we added custom voices for each enemy type, but not for enemy variations (fire and ice goblins used the same voice). For attack and hit sounds, however, we used only a shared group. This way, we were able to create a custom feeling for each enemy type, and still stay within our memory budget. It was also a good strategy to cope with the content demands for the upcoming updates by limiting the amount of content we have to deliver.

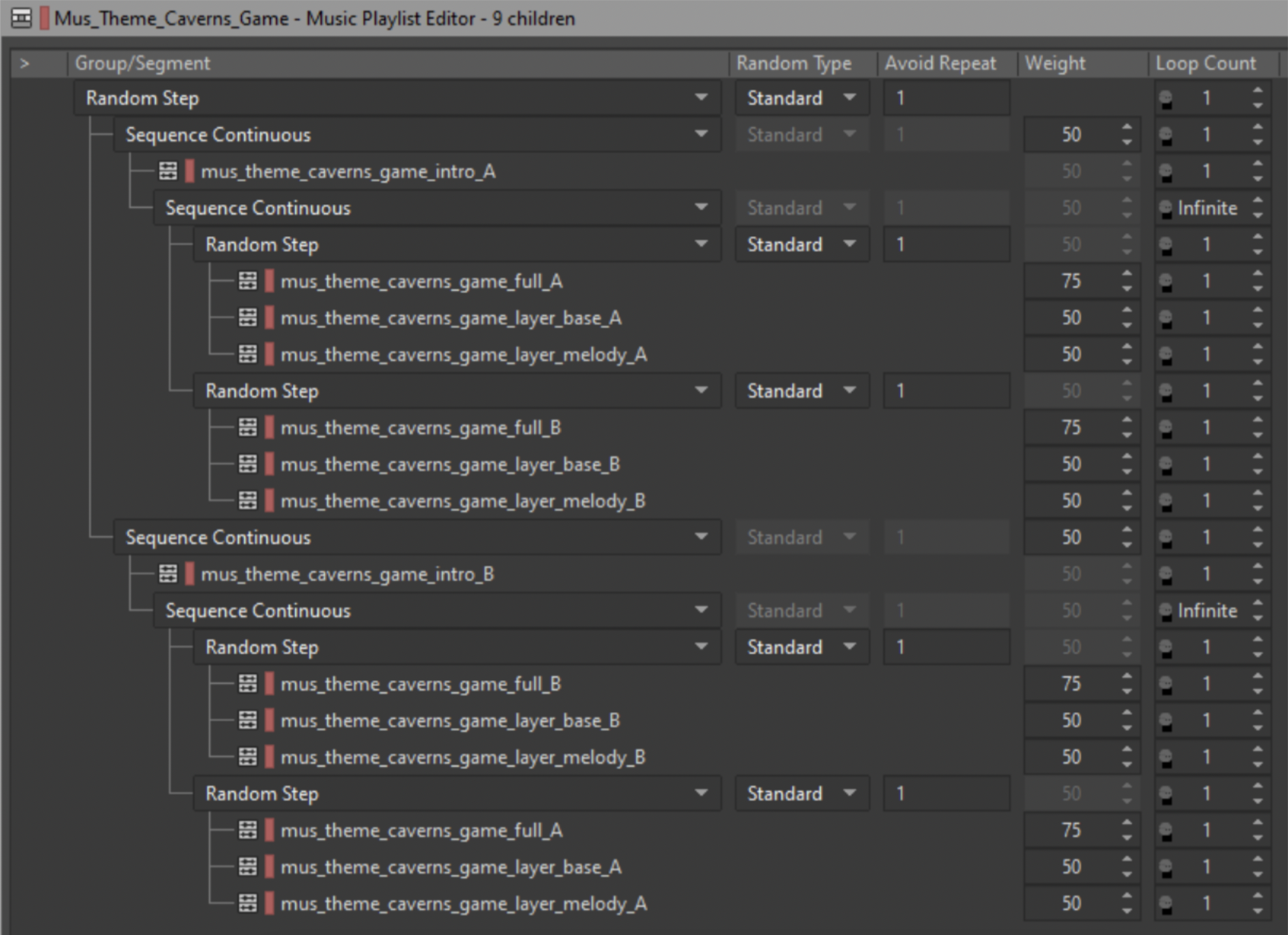

Last but not least, having a good SoundBank setup is a big helper, especially when it comes to large files like music and ambience. We usually have a core package comprising a few SoundBanks, such as meta and core gameplay elements, characters, UI, etc., that is downloaded during the initial install. Later content, like new chapters with new music and ambience, is contained in separate SoundBanks as downloadable packages. This way it is possible to have more than an hour of unique music content. When it comes to musical or ambience variety within the game, we achieve this by using a layered and segmented approach. Most of our music and ambience have several layers and segments that are randomized to reduce repetitiveness. Ambiences in Angry Birds: Journey are set up in an especially dynamic way to create an ever-changing feeling, with many randomized parameters, such as reverb send, LFO, envelopes and filters. Some layers in the ambience affect each other through RTPCs, creating a dynamic environment. Each gameplay music track has four intro variations to make it feel different every time the player lands on a level, and the track starts from point A or B, with the possibility of playing one of the three different layers, which creates a ton of variety.
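To get a feel for how quickly this variety adds up, the gameplay music setup can be modeled as three independent random choices. The sketch assumes that exactly one intro, one start point and one of the three layers is picked each time, which gives 4 × 2 × 3 = 24 distinct openings per track. The names are placeholders, not the actual Wwise object names.

```python
import itertools
import random

INTROS = ["intro_1", "intro_2", "intro_3", "intro_4"]
START_POINTS = ["A", "B"]
LAYERS = ["layer_1", "layer_2", "layer_3"]

# Every combination the player could hear under the stated assumptions.
ALL_VERSIONS = list(itertools.product(INTROS, START_POINTS, LAYERS))


def pick_version(rng):
    """Pick one intro, one start point and one layer independently."""
    return (rng.choice(INTROS), rng.choice(START_POINTS), rng.choice(LAYERS))
```

Calling `pick_version(random.Random())` at the start of each level returns one of the entries in `ALL_VERSIONS`. If a layer could also be left out entirely, the count would rise to 4 × 2 × 4 = 32.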

Angry Birds: Journey Icy Caverns chapter ambience export from Wwise

Angry Birds: Journey Icy Caverns chapter music

Gameplay music setup in Angry Birds: Journey

Conclusion

Mobile games are sometimes seen as the smaller siblings of PC/console games. Regardless, mobile game audio can be as deep and intricate, or as simple and sweet, as you want it to be. It’s true that it has its own limitations and challenges. However, the most crucial limitations usually come from the game itself, not the platform. The games that give designers the broadest range of creative possibilities tend to produce the most imaginative sound, whereas nondescript projects can put you in an artistically dull corner. All other limitations are just problems that can be solved with the right thinking and the right tools.
