In the previous article, "The Challenges of Immersive Reverberation in VR", we covered why immersive reverberation is so challenging in virtual reality. In this series, we will dive deeper and take an extensive look at past, current, and emerging reverberation techniques, reviewing them from an immersive and spatial perspective.

Artificial reverberation is a type of audio effect that aims to add a diffuse sense of space to an audio signal, typically one captured in an acoustically damped recording studio. By simulating some aspects of sound propagation in a room, a reverberation effect can be used to control the aesthetic characteristics of the auditory space. For efficiency, the simulation usually relies on a greatly simplified model of the acoustic phenomena. Since immersion was not a requirement when they were created, most reverberation algorithms reduce spatial cues to a tunable but otherwise static output on each channel. As a result, these algorithms can be difficult to extend to interactive applications.

Audiokinetic spatial audio team members testing out new and upcoming Wwise spatial audio features

Now that complex audio output systems capable of simulating sounds coming from any direction are becoming the norm for VR and other immersive platforms, it's time to rethink how we render reverberation on these emerging technologies. Spatial cues drawn from distinct echoes should follow plausible sound propagation paths through the virtual geometry, both in time and in direction. For instance, in headphone reproduction, it is now common practice to filter the direct sound through a Head-Related Transfer Function (HRTF): a set of filters reproducing the frequency response and time delays that mimic how a sound reaches each of our ears from various directions and distances. An ideal spatial reverberator should therefore carry enough spatial information about individual reflections, or at least a portion of them, to enable the same filtering process for each discrete reflection. Reverberation algorithms should also adapt as a source and a listener move around geometrically complex spaces. Finally, these newly desired perceptual cues shouldn't sacrifice the aesthetic versatility we've become accustomed to with reverberation algorithms.
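
To make per-reflection filtering concrete, here is a minimal Python sketch. It assumes each early reflection is described by a delay, a gain, and an arrival azimuth. In place of a measured HRTF, it uses a deliberately crude, hypothetical `toy_hrir` function that models only an interaural time difference (Woodworth's spherical-head approximation) and a simple level difference. A real renderer would look up measured HRIRs for each direction instead.

```python
import math

SAMPLE_RATE = 48_000      # Hz
HEAD_RADIUS_M = 0.0875    # assumed average head radius
SPEED_OF_SOUND = 343.0    # m/s in air


def toy_hrir(azimuth_rad):
    """Hypothetical stand-in for a measured HRIR pair, returned as (left, right).

    Models only an interaural time difference (Woodworth approximation) and a
    crude level difference. Azimuth is counterclockwise from straight ahead,
    so positive angles are on the listener's left.
    """
    lateral = math.asin(math.sin(azimuth_rad))  # fold front/back onto [-pi/2, pi/2]
    itd_s = HEAD_RADIUS_M / SPEED_OF_SOUND * (abs(lateral) + math.sin(abs(lateral)))
    itd = round(itd_s * SAMPLE_RATE)            # ITD rounded to whole samples
    far_gain = 1.0 - 0.5 * abs(math.sin(lateral))
    near = [1.0] + [0.0] * itd                  # near ear: no extra delay
    far = [0.0] * itd + [far_gain]              # far ear: delayed and attenuated
    return (near, far) if lateral >= 0 else (far, near)


def render_reflections(dry, reflections, length):
    """Filter every discrete reflection through its own HRIR pair and sum them.

    `reflections` is a list of (delay_samples, gain, azimuth_rad) tuples.
    """
    left = [0.0] * length
    right = [0.0] * length
    for delay, gain, azimuth in reflections:
        for ear, hrir in zip((left, right), toy_hrir(azimuth)):
            for k, h in enumerate(hrir):
                if h == 0.0:
                    continue
                for n, x in enumerate(dry):
                    idx = n + delay + k
                    if idx < length:
                        ear[idx] += gain * h * x
    return left, right


# A single impulse reflected from a wall on the listener's left, 100 samples later.
left, right = render_reflections([1.0], [(100, 0.5, math.pi / 2)], 200)
```

The reflection reaches the left ear at sample 100. It reaches the right ear about 31 samples later at a quarter of the amplitude. Each reflection gets its own direction-dependent filtering rather than a shared static output.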

There is a wealth of amazingly rich reverberation effects out there that will undoubtedly remain crucial for media production for years to come. The goal is not to replace them entirely. Ultimately, however, the sound designer's toolbox should include effects that can be informed by the virtual geometry to convey spatial cues. With this in mind, let's review some of the techniques behind the most common reverbs today and consider how they could support spatial cues.

Physical reverberation

Introduced in the 1930s, echo chambers were the first attempt to add artificial reverberation to studio recordings. The dry sound was simply played through a loudspeaker in a physical room and recorded again with a microphone, capturing the room's reverberation in the process. These weren't just any rooms, however: they had to be built without parallel walls to prevent flutter echoes [5]. Because the effect was bound to the physical properties of the space, tweaking the reverberation meant modifying the acoustics of the room. To that end, materials such as carpet could be added to influence both the frequency response and the reverberation time of the effect. Different microphone placements were also used to adjust the dry-to-wet ratio, and multi-band equalization later became a common way to correct the frequency response of the wet signal. Obviously, cost and portability were limiting factors for this effect. Yet it's difficult to achieve reverberation that sounds more natural than sound propagating in a real room. This probably explains why echo chambers remained common practice well into the 1970s, and why some still exist today.

![Echo chamber with no parallel walls [1]](http://info.audiokinetic.com/hubfs/Picture1-14.png)

Echo chamber with no parallel walls [1]

First music recording using an echo chamber [2][3]

Around the same period, spring and, later, plate reverberation were introduced. These also rely on natural sound propagation, but they send the sound through a much smaller physical medium: metal plates and springs transmit and disperse the sound energy. The sound travels through the metal, reaches the edges of the material, and reflects back and forth as it would in any space. These devices take advantage of the fact that sound travels much faster in metals like steel. For instance, sound travels at around 6,096 meters per second in steel, roughly 18 times faster than in air (340 m/s). Steel also has damping properties that yield reverberation times similar to those we would expect from rooms. Together, these factors allow a much smaller unit to create a rich density of echoes. These devices also have properties of their own. For instance, sounds at different frequencies travel at different speeds in steel, a phenomenon called dispersion. This leads to small but audible frequency chirps in the reverberation, which have since become a praised artifact in music production.
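
Using the figures quoted above, a quick back-of-the-envelope calculation shows why a small plate or spring can produce so many echoes. The 2 m path length is an arbitrary illustrative value. The calculation follows the simplified reasoning of a single propagation speed and ignores dispersion.

$$
\frac{c_{\text{steel}}}{c_{\text{air}}} = \frac{6096\ \text{m/s}}{340\ \text{m/s}} \approx 17.9
$$

$$
t_{\text{steel}} = \frac{2\ \text{m}}{6096\ \text{m/s}} \approx 0.33\ \text{ms}, \qquad t_{\text{air}} = \frac{2\ \text{m}}{340\ \text{m/s}} \approx 5.9\ \text{ms}
$$

In one second, a wave can therefore cross a 2 m steel path about 3,048 times (6096 / 2), versus about 170 times for the same distance in air.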

Frequency response of a spring reverb [4]


Spring reverb on a modular synth

From a spatial perspective, physical reverberation is clearly not the ideal method. But let's entertain the idea anyway. We could stream the content to a physical space mimicking the virtual one, one space per listener. A loudspeaker would move around to follow the sound source, while a binaural microphone would move to match the listener's position and orientation. Although this would likely introduce some latency and be highly impractical, it sets the theoretical bar for perceiving spatial reverberation.


Delay-based method

Following the evolution of music production, reverberation eventually moved to electronic devices. A delay is a continuous memory, called a delay line in the digital world, that stores a signal and plays it back shortly after. In its simplest form, a delay reverb is a single delay whose output is fed back into its input, producing a series of decaying echoes. Controls are usually provided for the length of the delay and for an attenuation factor that adjusts the decay. One of the earliest commercial delay-based reverberation units was the Roland Space Echo, which stored the signal on magnetic tape and played it back after an adjustable delay.
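
The simplest delay reverb described above can be sketched in a few lines of Python. This is a minimal sketch, assuming the dry signal is mixed with the recirculating echoes, so that y[n] = x[n] + g·y[n − M]. The function and parameter names are illustrative.

```python
def feedback_delay(dry, delay_samples, feedback, length):
    """Single recirculating delay: y[n] = x[n] + feedback * y[n - delay_samples]."""
    if delay_samples < 1:
        raise ValueError("delay_samples must be at least 1")
    if abs(feedback) >= 1.0:
        raise ValueError("|feedback| must be below 1 for the echoes to decay")
    out = [0.0] * length
    for n in range(length):
        x = dry[n] if n < len(dry) else 0.0
        echo = out[n - delay_samples] if n >= delay_samples else 0.0
        out[n] = x + feedback * echo  # each trip around the loop is attenuated
    return out


# An impulse produces an echo every 4 samples, each half as loud as the previous one.
echoes = feedback_delay([1.0], delay_samples=4, feedback=0.5, length=13)
```

The delay length controls the echo spacing. The feedback gain plays the role of the attenuation factor that sets the decay.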

Roland Space Echo RE-201

To increase the density of echoes, several recirculating delays can be combined. They can be placed either in parallel, for independent control, or in series, for a quick buildup of echo density.
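
The difference between the two arrangements is easy to see by counting echoes in the impulse response. The sketch below assumes two recirculating delays of 7 and 11 samples with a feedback of 0.7. These are arbitrary values kept tiny for readability. In parallel, the echo patterns simply add. In series, every echo from the first delay is re-echoed by the second, so the density grows much faster.

```python
def comb(signal, delay, feedback, length):
    """Recirculating delay: y[n] = x[n] + feedback * y[n - delay]."""
    out = [0.0] * length
    for n in range(length):
        x = signal[n] if n < len(signal) else 0.0
        out[n] = x + (feedback * out[n - delay] if n >= delay else 0.0)
    return out


def parallel(signal, delays, feedback, length):
    """Independent delays whose outputs are summed."""
    branches = [comb(signal, d, feedback, length) for d in delays]
    return [sum(samples) for samples in zip(*branches)]


def series(signal, delays, feedback, length):
    """Each delay processes the output of the previous one."""
    out = signal
    for d in delays:
        out = comb(out, d, feedback, length)
    return out


def echo_count(response):
    """Number of non-zero samples, i.e. distinct echoes in the response."""
    return sum(1 for v in response if v != 0.0)


impulse = [1.0]
par = parallel(impulse, [7, 11], 0.7, 60)
ser = series(impulse, [7, 11], 0.7, 60)
print(echo_count(par), echo_count(ser))  # prints: 14 30
```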

One of the challenges of this type of reverberation is controlling the frequency response of the system. Ideally, some frequencies should decay faster than others, mimicking the frequency-dependent absorption of the different materials in a room. However, when a signal is mixed with a slightly delayed copy of itself, the two copies add up at some frequencies and cancel out at others. The result is a comb filter, which attenuates a series of frequencies determined by the length of the delay.
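
The comb filter effect can be checked numerically. The sketch below assumes the simplest case: a signal summed with one copy delayed by M samples (y[n] = x[n] + x[n − M]). Its magnitude response is |1 + e^(−j2πfM/fs)| = 2|cos(πfM/fs)|. The notches therefore fall at odd multiples of fs/(2M). With a 1 ms delay at 48 kHz, that gives 500 Hz, 1.5 kHz, 2.5 kHz, and so on.

```python
import cmath
import math


def comb_magnitude(freq_hz, delay_samples, fs):
    """|H(f)| for y[n] = x[n] + x[n - M], evaluated on the unit circle."""
    z_inv_m = cmath.exp(-2j * math.pi * freq_hz * delay_samples / fs)  # e^{-j w M}
    return abs(1.0 + z_inv_m)


def notch_frequencies(delay_samples, fs, max_hz):
    """Frequencies where the delayed copy cancels the original: (2k + 1) * fs / (2M)."""
    spacing = fs / delay_samples
    f, notches = spacing / 2, []
    while f <= max_hz:
        notches.append(f)
        f += spacing
    return notches


FS = 48_000
M = 48  # 1 ms delay
print(notch_frequencies(M, FS, 3_000))         # [500.0, 1500.0, 2500.0]
print(round(comb_magnitude(500, M, FS), 6))    # 0.0: full cancellation
print(round(comb_magnitude(1_000, M, FS), 6))  # 2.0: full reinforcement
```

A longer delay moves the notches closer together. This is why the coloration changes with the delay length.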

Over the years, multiple signal processing techniques have been developed to allow the use of delays while retaining control over the tonal texture of the output. In 1958, the forefather of modern artificial reverberation, Manfred R. Schroeder, published the design of a reverberator using a new type of cascaded filter called the all-pass. These filters have the special property of passing all frequencies of the original signal with equal gain, even though they combine it with delayed copies of itself. Only the phase of each frequency is altered. This was a great breakthrough, allowing the frequency response of the reverberator to be controlled independently. It led to most of the artificial reverberators still in use today, which combine various filters and delay lines in countless ways to create reverberation effects.
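
Here is a minimal sketch of a Schroeder-style all-pass section, using the common difference equation y[n] = −g·x[n] + x[n − M] + g·y[n − M]. Its transfer function is H(z) = (−g + z^(−M)) / (1 − g·z^(−M)). The delay and gain values are arbitrary. The checks at the end illustrate the property described above. The magnitude response is 1 at every frequency. The impulse response is a train of echoes, yet it carries exactly the energy of the input impulse.

```python
import cmath
import math


def schroeder_allpass(signal, delay, g, length):
    """y[n] = -g*x[n] + x[n - delay] + g*y[n - delay]."""
    out = [0.0] * length
    for n in range(length):
        x = signal[n] if n < len(signal) else 0.0
        x_delayed = signal[n - delay] if 0 <= n - delay < len(signal) else 0.0
        y_delayed = out[n - delay] if n >= delay else 0.0
        out[n] = -g * x + x_delayed + g * y_delayed
    return out


def allpass_magnitude(freq_hz, delay, g, fs):
    """|H(f)| with H(z) = (-g + z^-M) / (1 - g z^-M), evaluated on the unit circle."""
    z_inv_m = cmath.exp(-2j * math.pi * freq_hz * delay / fs)
    return abs((-g + z_inv_m) / (1.0 - g * z_inv_m))


h = schroeder_allpass([1.0], delay=5, g=0.5, length=500)
print(h[0], h[5], h[10])       # -0.5 0.75 0.375: a decaying train of echoes
print(sum(v * v for v in h))   # energy of the echo train, ~1.0
print([round(allpass_magnitude(f, 5, 0.5, 48_000), 9) for f in (100, 1_000, 7_000)])
```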

Multichannel delay networks were first introduced by Gerzon in the 1970s. They were further developed in the early 1990s by audio pioneer Jean-Marc Jot [6] and formalized into one of the most popular delay-based reverberation designs: the Feedback Delay Network (FDN). The original intention was to create a design that could yield signals for multiple channels at once. This also streamlined the handling of multiple recirculating delays and tone control. The multichannel aspect of this design was not intended to localize sounds. Instead, it aimed to deliver slightly different signals to each speaker, minimizing the comb filtering artifacts that can occur when identical signals from various speakers recombine slightly out of phase.

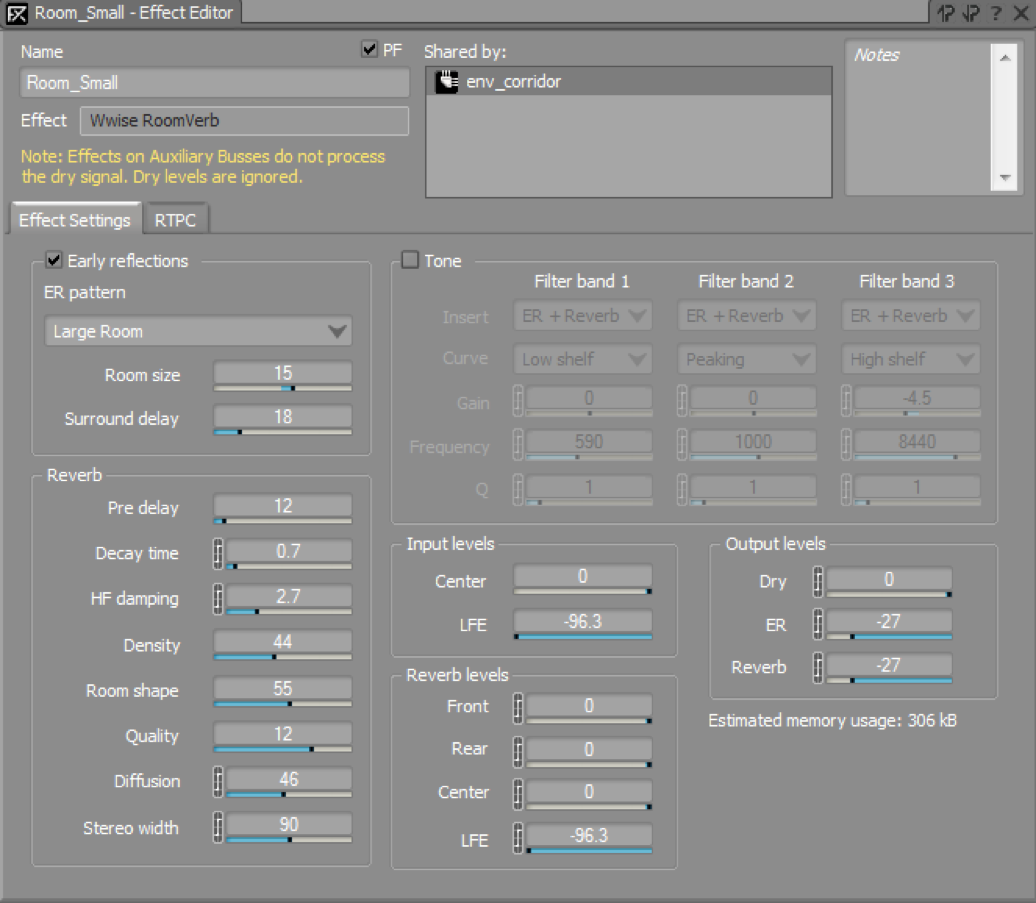
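
A compact FDN can be sketched in a few lines of Python. This is a minimal illustration under assumed parameters, not the RoomVerb implementation. It uses four delay lines with hypothetical, mutually prime lengths. The feedback matrix is a Householder matrix A = I − (2/N)·11ᵀ, one common orthogonal choice that mixes every line into every other. Each line has a gain g_i = 10^(−3·M_i / (T60·fs)), chosen so the signal loses 60 dB after the target decay time T60. Each delay line feeds its own output channel, so every channel receives a slightly different signal.

```python
def fdn(dry, delays, t60_s, fs, length):
    """Minimal N-channel feedback delay network with a Householder feedback matrix.

    Returns one output signal per delay line (one per channel).
    """
    n_lines = len(delays)
    # Gain per line so a signal loses 60 dB after t60_s seconds of recirculation.
    gains = [10 ** (-3 * m / (t60_s * fs)) for m in delays]
    buffers = [[0.0] * m for m in delays]  # circular delay lines
    ptrs = [0] * n_lines
    outputs = [[0.0] * length for _ in delays]
    for n in range(length):
        x = dry[n] if n < len(dry) else 0.0
        # Read each line's oldest sample: it was written exactly m samples ago.
        taps = [buffers[i][ptrs[i]] for i in range(n_lines)]
        attenuated = [g * t for g, t in zip(gains, taps)]
        # Householder matrix: (A v)_i = v_i - (2 / N) * sum(v)
        correction = 2.0 / n_lines * sum(attenuated)
        for i in range(n_lines):
            outputs[i][n] = taps[i]
            buffers[i][ptrs[i]] = x + attenuated[i] - correction
            ptrs[i] = (ptrs[i] + 1) % delays[i]
    return outputs


def energy(sig, start, stop):
    return sum(v * v for v in sig[start:stop])


FS = 8_000
DELAYS = [149, 211, 263, 293]  # hypothetical, mutually prime lengths in samples
channels = fdn([1.0], DELAYS, t60_s=0.5, fs=FS, length=FS)
print(channels[0][149], channels[1][211])  # first arrival on each channel: 1.0 1.0
print(energy(channels[0], 0, 2000) > energy(channels[0], 6000, 8000))  # True: the tail decays
```

Because the feedback matrix is orthogonal, all of the decay comes from the per-line gains. This keeps the decay time controllable independently of the mixing.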

Wwise RoomVerb is a good example of an FDN algorithm that is still popular today. One known limitation of FDNs is that they can be slow to build up a reasonable density of echoes at the beginning. This is why RoomVerb offers the option of supplementing the effect with predefined early reflection patterns of various rooms. These are simply static echo patterns that provide a higher density of echoes at the beginning, while the algorithm's recirculating delays build up density naturally.
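
Static early reflection patterns of this kind can be implemented as a tapped delay line: a fixed list of delays and gains applied to the dry signal. Its output is then summed with the late reverberation. The sketch below assumes a hypothetical three-tap pattern. It does not reproduce RoomVerb's actual patterns, which are not described here.

```python
def early_reflections(dry, taps, fs, length):
    """Tapped delay line: each (delay_ms, gain) tap adds one delayed, weighted copy."""
    out = [0.0] * length
    for delay_ms, gain in taps:
        d = round(delay_ms * fs / 1000)
        for n, x in enumerate(dry):
            if n + d < length:
                out[n + d] += gain * x
    return out


def mix(*signals):
    """Sum equal-length signals sample by sample, e.g. early reflections + late tail."""
    return [sum(samples) for samples in zip(*signals)]


FS = 8_000
PATTERN = [(5.0, 0.8), (7.5, -0.6), (12.0, 0.5)]  # hypothetical (ms, gain) taps
er = early_reflections([1.0], PATTERN, FS, 200)
print([(n, v) for n, v in enumerate(er) if v != 0.0])  # [(40, 0.8), (60, -0.6), (96, 0.5)]
```

In a full reverberator, `er` would be combined with the output of the late-reverberation stage, for example `mix(er, late_tail)`, where `late_tail` is a hypothetical signal of the same length.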

Within the context of immersion, delay-based reverberators can be somewhat limited. The efficient compromise they use to accumulate lots of echoes, feeding the output back into the input of the system, doesn't allow each echo to be placed individually in space and time. This limitation is somewhat acceptable, because as the echoes accumulate, we lose the perceptual capacity to differentiate and locate them. In fact, at high density, close repetitions of the signal tend to merge into a diffuse texture that we call late reverberation or diffuse reverberation. The main issue, therefore, lies with the early reflections, whose density is still low enough for us to perceive them individually. These early reflections are largely specular reflections. More on this in a follow-up article.


Room impulse response convolution

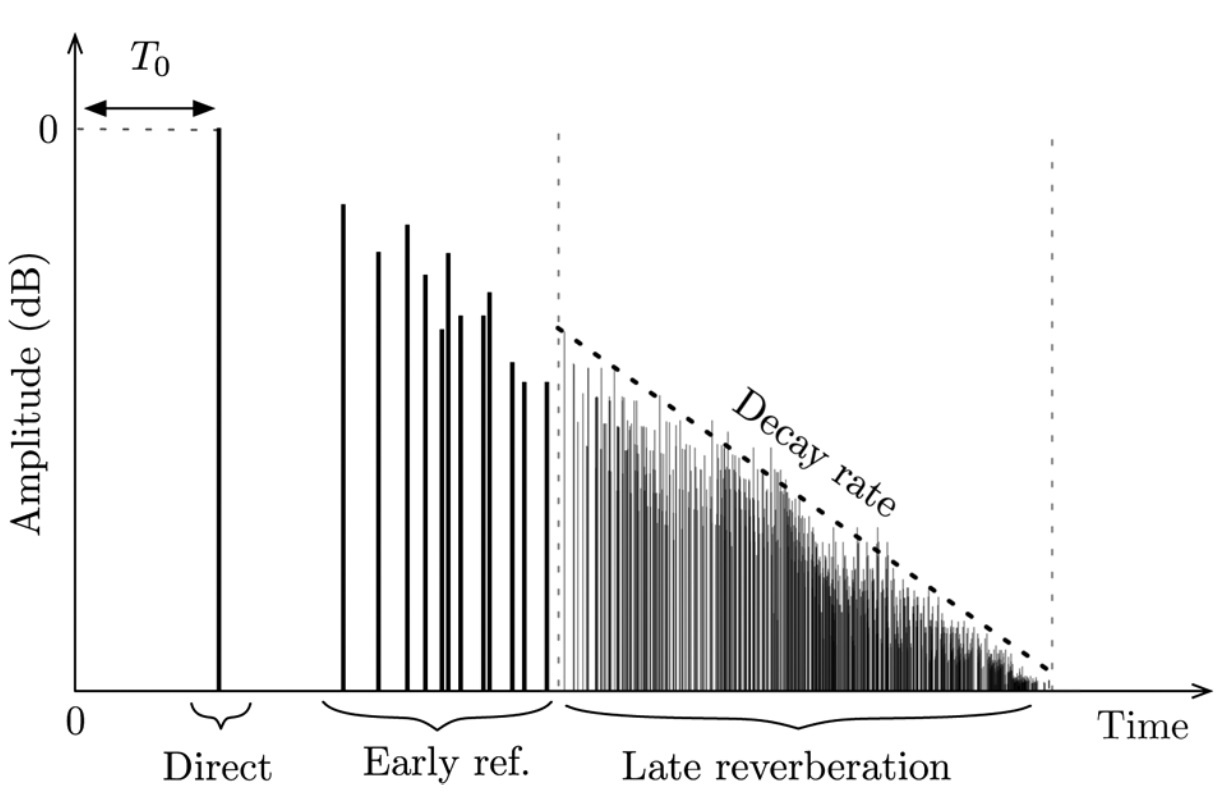

Through convolution, the reverberation of a room can effectively be recorded and applied to any input signal. Convolution is a mathematical operation that combines two signals to form a third one. In the case of convolution reverb, the dry signal is convolved with the pre-recorded impulse response of a space. This effectively copies the signal once for every sample of the impulse response, delaying and weighting each copy accordingly. In signal processing, an impulse response is the signal that comes out of a system after an impulse is fed into it. A room impulse response (RIR) can be thought of as a recording of the echo pattern of a room. RIRs can easily be captured with regular recording equipment, provided you have access to a relatively quiet room. Since an RIR comes from the recording of an actual space, this technique can render much more complex and realistic reverberation effects. However, a longer RIR, representing a longer reverb tail, requires more computation and more memory. A good-quality delay-based reverberator also needs many delay lines, but its cost does not grow with the length of the tail in the same way, since the decay is produced by recirculation. Convolution is therefore usually considered more efficient for short reverberation, while a delay-based reverberator becomes the better solution for longer ones. This is why both are still common in today's video game productions.
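
The "delay, weight, and copy" view of convolution translates directly into code. Below is a minimal direct (time-domain) convolution in Python, plus a helper estimating its cost. The helper makes the dependence on RIR length explicit: one multiply-add per RIR sample, for every output sample.

```python
def convolve(dry, rir):
    """Direct convolution: every RIR sample adds one delayed, weighted copy of the input."""
    out = [0.0] * (len(dry) + len(rir) - 1)
    for n, x in enumerate(dry):
        for k, h in enumerate(rir):
            out[n + k] += x * h
    return out


def multiply_adds_per_second(rir_seconds, fs):
    """Cost of direct convolution: RIR length in samples, times output samples per second."""
    return round(rir_seconds * fs) * fs


print(convolve([1.0, 2.0], [1.0, 0.0, 0.5]))  # [1.0, 2.0, 0.5, 1.0]
print(multiply_adds_per_second(2.0, 48_000))  # 4608000000
```

A 2-second RIR at 48 kHz thus costs about 4.6 billion multiply-adds per second of audio when computed directly. Real-time engines generally use FFT-based, often partitioned, convolution to reduce this. Even so, the cost still grows with the length of the RIR.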

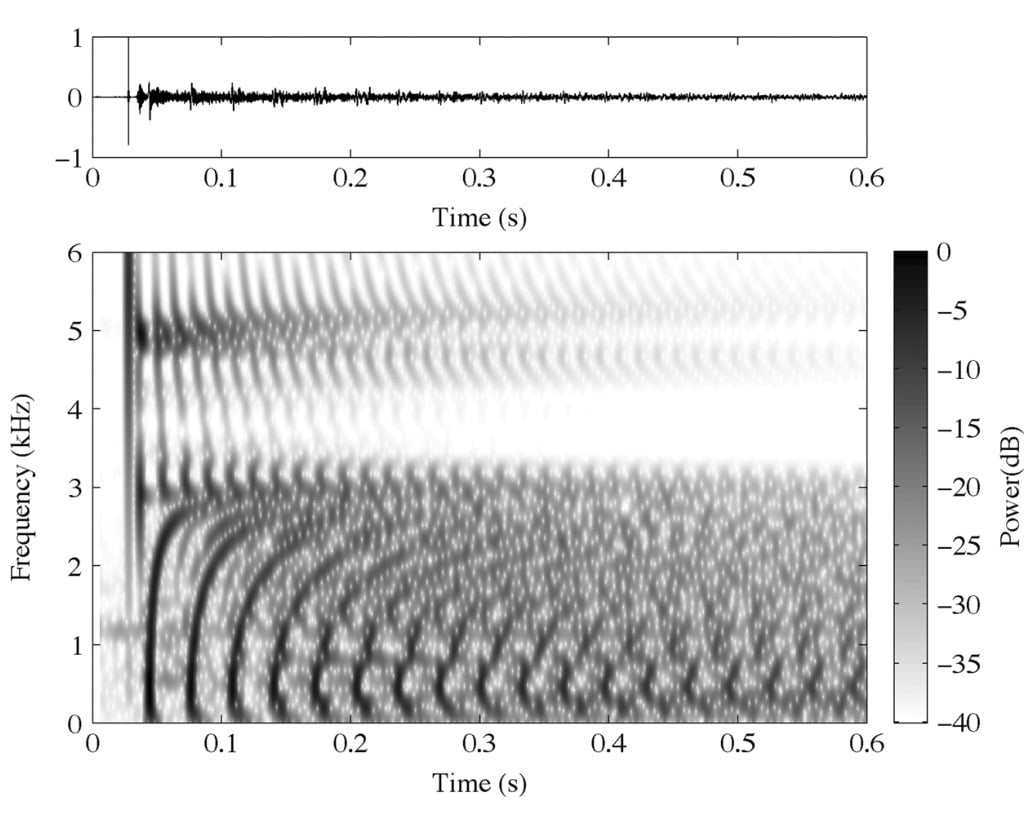

Representation of an impulse response [7]

A common method for capturing an impulse response is to set up a microphone in a room with little ambient noise. You then record a loud sound rich in transients that can excite the room across many frequencies. A loud clap or the pop of a balloon is commonly used for this. A frequency sweep can also be used to ensure that all frequencies are covered. In this case, a simple deconvolution process is applied afterwards to reconstruct the impulse response from the recording. For more information on recording impulse responses, see Varun Nair's in-depth 2012 article [8]. Nowadays, good libraries of impulse responses exist, such as Audio Ease [9], so it is not always necessary to record spaces physically.
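
The sketch below illustrates the sweep method in an idealized, noise-free setting. It assumes a linear sine sweep and plain spectral division (H = Y / X) as the "simple process". Real measurements typically use a logarithmic (exponential) sweep with a dedicated inverse filter, and must cope with noise and loudspeaker nonlinearity. The room's response here is simulated, and all names are illustrative.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) discrete Fourier transform (fine for short illustrative signals)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
            for f in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n
            for k in range(n)]


def linear_sweep(length):
    """Sine sweep whose instantaneous frequency rises linearly from 0 Hz to Nyquist."""
    return [math.sin(math.pi * n * n / (2 * length)) for n in range(length)]


def convolve(x, h):
    out = [0.0] * (len(x) + len(h) - 1)
    for n, xn in enumerate(x):
        for k, hk in enumerate(h):
            out[n + k] += xn * hk
    return out


def recover_impulse_response(sweep, recording, ir_length):
    """Deconvolve by spectral division: H = Y / X.

    The sweep is zero-padded to the recording's length so that the circular
    convolution implied by the DFT matches the linear convolution in the recording.
    """
    n = len(recording)
    x_spec = dft(sweep + [0.0] * (n - len(sweep)))
    y_spec = dft(recording)
    h = idft([y / x for x, y in zip(x_spec, y_spec)])
    return [v.real for v in h[:ir_length]]


# Simulate "playing" the sweep in a hypothetical room and recording the result.
room_ir = [0.0, 1.0, 0.0, 0.4, 0.0, 0.0, -0.2, 0.1]
sweep = linear_sweep(64)
recording = convolve(sweep, room_ir)
estimate = recover_impulse_response(sweep, recording, len(room_ir))
print([round(v, 6) for v in estimate])  # approximately room_ir
```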

As an example of the possibilities offered by convolution reverb, the following recording was made using an impulse response of the most reverberant place known. This place is usually restricted from public access, and certainly not available for simply playing and recording various instruments.

![Longest reverberation in the world in an old oil tank in Scotland [10]](http://info.audiokinetic.com/hubfs/Screen%20Shot%202017-02-06%20at%209.56.17%20AM.png)

Longest reverberation in the world in an old oil tank in Scotland [10]

By nature, the reverberation pattern provided by convolution reverb is completely static. As with the delay-based method, this technique does not allow interaction with the space: walking in the middle of a long corridor will sound the same as standing close to a corner. It is possible to record multiple impulse responses, one for each desired source-listener position. However, the amount of data generated quickly becomes prohibitive for most applications.
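
A back-of-the-envelope estimate in Python shows why this becomes prohibitive. Every number is a hypothetical assumption:

- a 10 m × 10 m room sampled on a 0.5 m grid in two dimensions
- every grid point usable as both a source and a listener position
- 2-second RIRs at 48 kHz
- four channels (for example, first-order ambisonics)
- 32-bit float samples

```python
def rir_dataset_bytes(width_m, depth_m, grid_m, rir_seconds, fs, channels, bytes_per_sample):
    """Storage needed for one RIR per (source position, listener position) pair."""
    points = (round(width_m / grid_m) + 1) * (round(depth_m / grid_m) + 1)
    pairs = points * points  # every source position combined with every listener position
    samples_per_rir = round(rir_seconds * fs)
    return pairs * samples_per_rir * channels * bytes_per_sample


total = rir_dataset_bytes(10, 10, 0.5, 2.0, 48_000, channels=4, bytes_per_sample=4)
print(f"{total / 1e9:.1f} GB")  # 298.7 GB
```

Even this coarse, flat grid lands in the hundreds of gigabytes for a single room. Halving the grid spacing multiplies the total by roughly 16.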

Until recently, the standard has been to record impulse responses with mono or stereo microphone setups, which yield a static spatialization at run-time. The echoes coming from the left wall at the time of the recording will always come out of the left speaker after convolution. However, following the trend of using ambisonics to record and transmit spatial audio data, it is also possible to record an impulse response with an ambisonics microphone. This captures sound propagation arriving from all directions, and later allows the listener's perspective to be rotated interactively. This is a tremendous leap forward for the spatial representation of reverberation. Nonetheless, one key spatial limitation remains. Because the microphone and the sound source were at fixed locations during the recording, they cannot be moved interactively during playback, unless multiple listener-emitter positions are recorded.
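
Rotating a first-order ambisonic signal about the vertical axis only mixes its X and Y components, which is why head rotation is cheap to support. Below is a minimal sketch. It assumes the usual ambisonic axes (X forward, Y left, Z up), azimuth measured counterclockwise, and SN3D-style first-order channels W, X, Y, Z with W = 1. The rotation is a fixed linear mix of channels. It can therefore be applied either to the ambisonic RIR itself or, equivalently, to the convolved output. Decoding to speakers or binaural is left out.

```python
import math


def encode_first_order(azimuth_rad, elevation_rad=0.0):
    """Direction gains (W, X, Y, Z) of a unit plane wave, assuming SN3D-style W = 1."""
    ce = math.cos(elevation_rad)
    return (1.0, math.cos(azimuth_rad) * ce, math.sin(azimuth_rad) * ce, math.sin(elevation_rad))


def compensate_head_yaw(w, x, y, z, head_yaw_rad):
    """Counter-rotate the sound field when the listener turns by head_yaw_rad (left = positive).

    A source at azimuth phi ends up at phi - head_yaw_rad; W and Z are unaffected.
    """
    c, s = math.cos(head_yaw_rad), math.sin(head_yaw_rad)
    return (w, c * x + s * y, -s * x + c * y, z)


def rotate_ambisonic_ir(ir, head_yaw_rad):
    """Apply the same rotation to every sample of a 4-channel (W, X, Y, Z) impulse response."""
    rotated = [compensate_head_yaw(*frame, head_yaw_rad) for frame in zip(*ir)]
    return [list(channel) for channel in zip(*rotated)]


# A reflection arrives from straight ahead; the listener then turns 90 degrees to the left,
# so the reflection should now come from the listener's right (azimuth -90 degrees).
front = encode_first_order(0.0)
ir = [[0.0, g, 0.0] for g in front]  # hypothetical 3-sample IR with one reflection at n = 1
turned = rotate_ambisonic_ir(ir, math.radians(90))
print([round(ch[1], 6) for ch in turned])  # [1.0, 0.0, -1.0, 0.0]: W, X, Y, Z at n = 1
```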

Convolution reverb is not limited to real physical spaces. Impulse responses can also be simulated through various techniques approximating how waves propagate. More on this in the next article.


For more information on classic reverberation algorithms from a signal processing perspective, I recommend this extensive review article by Välimäki et al.: Fifty Years of Artificial Reverberation [4].

While delay-based and convolution reverberators are the two most common types of reverberators in video games today, neither offers a clear solution for rendering interactive reverberation. We will need to explore new techniques to inform and spatialize reverberation effects. In the next article, we will focus on modern reverberation techniques that aim to approximate sound propagation at a much more detailed level. In the meantime, feel free to reach out with comments and questions, and I will try to address them in one of the follow-up articles.

Footnotes:

[1] https://www.gearslutz.com/board/attachments/so-much-gear-so-little-time/306216d1345733523-sinatras-vocal-chain-chamber.jpg

[2] http://www.harmonicats.com/Press_circa_1947.html

[3] http://www.uaudio.com/blog/emt-reverb-history/

[4] V. Välimäki, J. D. Parker, L. Savioja, J. O. Smith, J. S. Abel, “Fifty years of artificial reverberation”, IEEE Transactions on Audio, Speech and Language Processing, vol. 20, no. 5, pp. 1421–1448, July 2012. Available at: https://aaltodoc.aalto.fi/bitstream/handle/123456789/11068/publication6.pdf

[5] http://recordinghacks.com/2011/06/04/flutter-echo/

[6] https://ccrma.stanford.edu/~jos/cfdn/Feedback_Delay_Networks.html

[7] V. Välimäki, J. D. Parker, L. Savioja, J. O. Smith, J. S. Abel, “Fifty years of artificial reverberation”, IEEE Transactions on Audio, Speech and Language Processing, vol. 20, no. 5, pp. 1421–1448, July 2012. Available at: https://aaltodoc.aalto.fi/bitstream/handle/123456789/11068/publication6.pdf

[8] http://designingsound.org/2012/12/recording-impulse-responses/

[9] https://www.audiokinetic.com/products/wwise-add-ons/audio-ease/

[10] https://acousticengineering.wordpress.com/2014/08/01/acoustic-analysis-of-playing-the-worlds-longest-echo/

Photography credits: Bernard Rodrigue - 'Audiokinetic spatial audio team' image

Comments

Jeff Ali

February 08, 2017 at 05:26 am

It seems impulse responses captured with Ambisonics microphones, then processed as convolution reverb, get really close to the ideal. But aside from using multiple locations, what other techniques can be used to give the listener the sense of moving through space?

Benoit Alary

February 08, 2017 at 11:55 am

You are correct Jeff, there are indeed some things that can be done to work with a static ambisonics IR but still move around. Stay tuned, I don't wanna spoil the next articles!