This blog post is about Wreckage Systems by 65daysofstatic. Wreckage Systems is a live broadcast of infinite music. You can watch it HERE or listen to the audio-only version HERE. It is not a game, but it is built in Unity and all of the audio runs in Wwise. It is partly a para-academic research project, partly a pandemic contingency plan conjured up by a band suddenly unable to tour. It is a stubbornly utopian attempt to find new ways to write and present music, and an excuse to make lots of flavours of noise that wouldn’t necessarily make it into 65daysofstatic’s more conventional output. I’ll get into the Wwise guts of it down below, but first a bit of background as to why it exists at all.

BACKGROUND

Back in 2014, my band 65daysofstatic was asked to compose the soundtrack to No Man’s Sky. This meant writing not only a regular soundtrack album full of linear, song-shaped pieces of music, but also constructing an infinitely long, dynamic soundtrack to match the infinitely large, procedurally-generated universe that Hello Games were creating.

We were the composers, but the heavy-lifting of creating the No Man’s Sky audio framework was the responsibility of audio director (and probable wizard) Paul Weir, so the technical specifics of how those infinite soundscapes were actually built in the game is not really our story to tell. Nevertheless, as a band we were eager to make the most of the generative nature of the soundtrack and so we threw ourselves into a crash course on interactive music techniques and hustled our way into getting deeply involved in the process. We came out the other side armed with myriad ideas about making nonlinear music and a catalogue of new existential worries about the fundamental nature of music.

Around the same time, serendipitously, I was working on a PhD exploring algorithmic composition strategies in the context of popular music (i.e., how can you apply these kinds of things to a noisy live band like mine?). These two strands, game music development and applying experimental algorithmic music research to 65daysofstatic, ran in parallel over a few years and opened up a lot of new terrain that I was eager to explore.

Despite having spent my entire adult life using computers to make music, by the end of all this I had developed a deeply critical, often sceptical approach to anything that could broadly be described as applying algorithmically-driven, procedural or generative techniques to composition. Not because I don’t think computers are capable of making interesting structures of sound autonomously, but because it became clear to me that the actual sounds that come out of speakers are just one very small part of what makes music music. You can give a computer all the machine-learned, neurally-networked artificial hyper-telligence you like, but you’re never going to be able to give it intent. You’re never going to be able to give it a broken heart, or a surplus of romance. It’s never going to understand the joy of making messy noise in a tiny room with its friends, or dancing all night to repetitive beats.

And so to Wreckage Systems. An ongoing project in which we broadcast an endless stream of ever-changing music onto the internet in an attempt to grapple with this dialectic: ‘computers can never really know what it’s like to embed music with meaning; let’s use computers to make meaningful music autonomously’.

Is Wreckage Systems an artistic expression about the unreasonable demands on contemporary artists to churn out endless musical content? Maybe. Is it an attempt to see how far musicians can push automated music before it loses all meaning? Probably. Is it an assemblage of massive algorithmic bangers, sad piano soundscapes, glitchy breakbeats, free jazz loops, field recordings, procedural synth melodies, and a whole host of other generative music systems all smashed together in a Unity project powered by Wwise? Absolutely.

If you’re interested in blog posts that go further in explaining the philosophy and artistic thinking behind Wreckage Systems, and more detailed breakdowns of individual compositional techniques we used, then please do take a look at our Wreckage Systems Patreon, which is the model we chose to fund this curious musical experiment. For a preview, there’s an unlocked post that discusses more about our experience on No Man’s Sky HERE. If a little bit of utopian Marxism is more your kind of vibe then there’s an audacious application of historical materialism in relation to the project HERE. Otherwise, the remainder of this post will look specifically at the Wwise project that drives the sixty-five different autonomous music systems that exist inside the Wreckage Systems broadcast.

OVERALL STRUCTURE

In total, there are sixty-five different ‘Wreckage Systems’ in the stream. Each system has its own set of sounds and behaviours within Wwise, and will often have accompanying custom scripts in Unity to extend its behaviour. Because Wreckage Systems is not a game, and because it is only available to the public as an audio/video stream rather than as software, we had the huge luxury of designing everything around the audio output, rather than needing to think about disk space, DSP threads, polyphony, audio quality, and so on. It didn’t need to be a shippable product in any conventional sense.

To this end, more or less every Wreckage System has its own SoundBank within Wwise, full of as much high quality audio as is required. There’s an additional SoundBank that’s always loaded for general background rumbles, static hisses and occasional UI sounds.

The broadcast loop on the live stream acts like a series of radio transmissions coming out of and then falling back into static. When a new system comes online, it loads the SoundBank, gets going, and crossfades out of the static to the music the system is making. As the system draws to a close, it crossfades back to the static, unloads the SoundBank of the completed system, and repeats the cycle. Systems rarely stick around for more than 7-8 minutes, so if you watch the broadcast for at least that long and follow what the log is saying you should be able to see this in action. (Oh, it occasionally hacks itself too).
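The order of operations in that cycle can be sketched in a few lines. This is a Python stand-in, not the project's Unity code: the step names are made up for illustration, and picking the next system at random is an assumption, since the selection logic isn't described here.

```python
import random

# Hypothetical step names mirroring the cycle described above.
CYCLE_STEPS = [
    "load_soundbank",
    "start_system",
    "crossfade_static_to_music",
    "run_system",
    "crossfade_music_to_static",
    "unload_soundbank",
]


def broadcast_steps(system_names, rng, cycles):
    """Yield (step, system_name) pairs for the requested number of cycles."""
    for _ in range(cycles):
        # Assumption: the next system is chosen at random.
        name = rng.choice(system_names)
        for step in CYCLE_STEPS:
            yield step, name


if __name__ == "__main__":
    for step, name in broadcast_steps(["Papour", "Malign"], random.Random(), 1):
        print(f"{step}: {name}")
```

The point of the ordering is that a SoundBank is only resident while its system is audible, and the always-loaded static covers the gaps on either side.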

TYPES OF WRECKAGE SYSTEMS

Each of the 65 different systems is based around a concept we call a ‘constellation heart’. Here is a short video from our Patreon that explains the idea in a non-technical way.

Essentially, what we were getting at in this video is that, where possible, we didn’t let the main audio framework we had built limit the main musical idea for any given system. The ‘constellation heart’ of each system was the core of the idea, the thing that makes that particular system unique, and was something we needed to protect at all costs to stop the project becoming 65 differently-flavoured generic generative soundscapes. Sometimes the ‘constellation heart’ of a system is as simple as a particular set of unique samples. Sometimes it is that the system might have taken days or weeks to make because it’s full of Interactive Music Switch and Playlist Containers with custom stings and transitions that are not generalisable to any other piece of music. Often, it means that there’s an additional set of custom scripts in Unity that, rather than just starting or stopping Wwise Events, are extending the behaviours of the system, perhaps by generating MIDI patterns, or using various callback commands on Events to create music on the fly. Despite doing whatever we could to protect this idea of the constellation heart, it was still necessary to generalise how all of the systems operated to some degree.

Therefore, at the top level of all the systems is a very generic set of Game Sync States. There are seven of them:

- Beginning

- State_00

- State_01

- State_02

- State_03

- State_04

- Ending

A system can choose to ignore these States by assigning the same things to all of them, but every system starts in its Beginning State and ends on its Ending State. If the system is making use of different States then it can choose to move through them by using a custom sequencer unique to that system, or by using a generic sequencer that switches between them randomly over the course of however long the system decides to run for.
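A generic sequencer of this kind might look roughly like the following Python sketch. The State names come from the list above; the hold times (30 to 90 seconds per State) and the function name are assumptions made for illustration, not values from the project.

```python
import random

MIDDLE_STATES = ["State_00", "State_01", "State_02", "State_03", "State_04"]


def generic_state_sequence(total_seconds, rng, min_hold=30.0, max_hold=90.0):
    """Return a list of (start_time_seconds, state_name) pairs.

    Always opens on 'Beginning' and closes on 'Ending'; in between it
    picks middle States at random, holding each for a random duration.
    """
    schedule = [(0.0, "Beginning")]
    t = rng.uniform(min_hold, max_hold)  # assumed hold-time range
    while t < total_seconds:
        schedule.append((t, rng.choice(MIDDLE_STATES)))
        t += rng.uniform(min_hold, max_hold)
    schedule.append((total_seconds, "Ending"))
    return schedule


if __name__ == "__main__":
    for start, state in generic_state_sequence(420.0, random.Random()):
        print(f"{start:7.1f}s  {state}")
```

A system with its own custom sequencer would replace the random choice with whatever logic suits that piece, while keeping the fixed Beginning and Ending bookends.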

Beyond this top level mechanic, what the systems actually look like in Wwise can be broken down as follows.

ACTOR-MIXER SYSTEMS

The relatively simple systems are the ones that tend to sound more ambient or soundscape-y. This music tends to be generated using a balance of Random Containers and Blend Containers in the Actor-Mixer Hierarchy. They can sometimes be as basic as a handful of long synth samples looping randomly. (After too many years listening to really bad algorithmic music, I am a strong believer that you can go a long way with incredibly simple randomisation as long as the core melodies or sounds are interesting enough.)

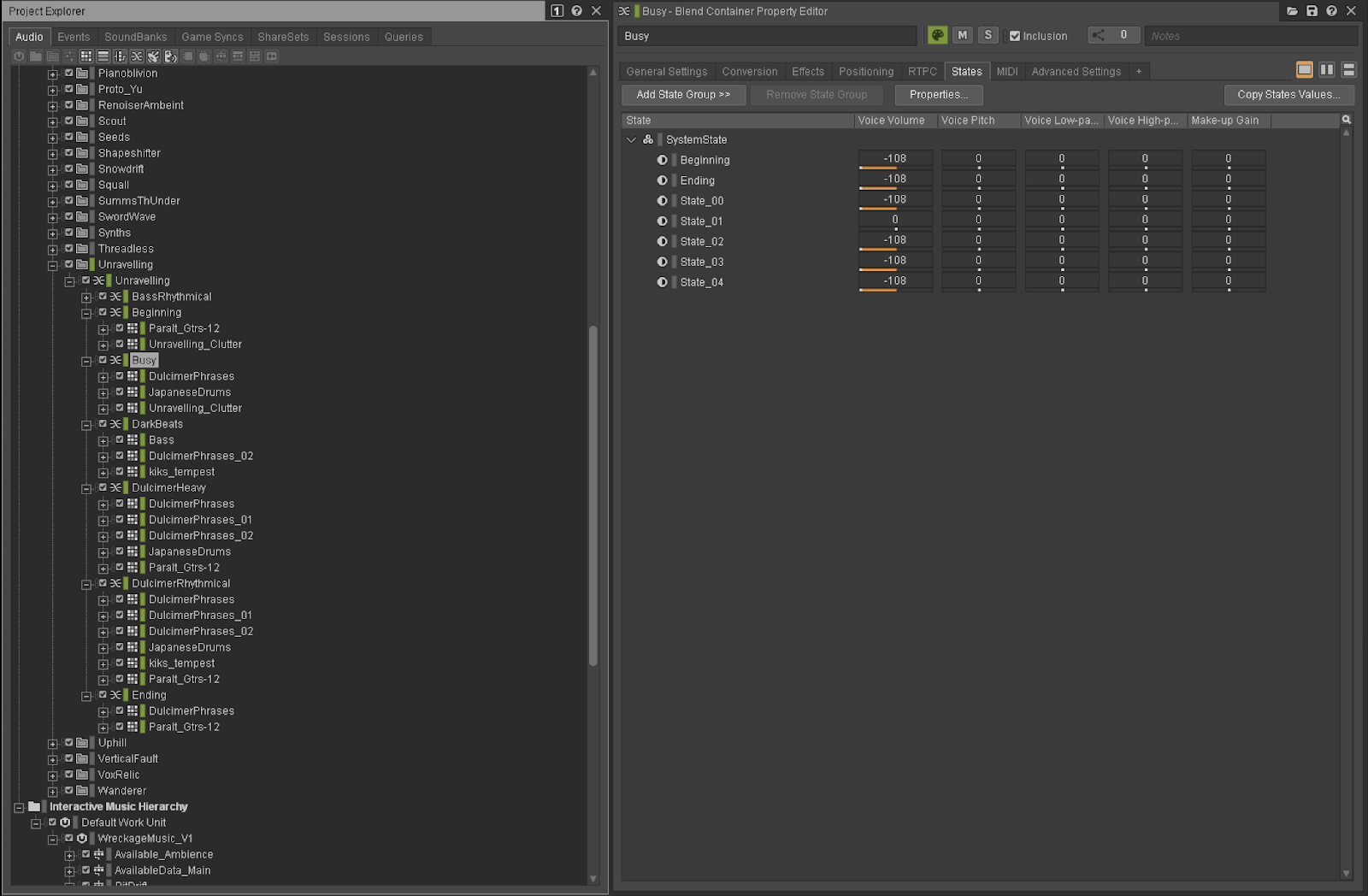

Wwise is also great for layering up simple randomisation to create more complex variation. For example, here is a system called Unravelling.

On the left you can see how it has been built. Everything lives in a single Blend Container that is triggered when the system starts. The child Blend Containers are different parts of the song. The right of the screenshot shows that the highlighted container, ‘Busy’, is only audible during ‘State_01’. In the case of Unravelling, the other containers represent other states. Other systems might have containers that exist in more than one state, or have states that are made up of different mixes of multiple containers at once.

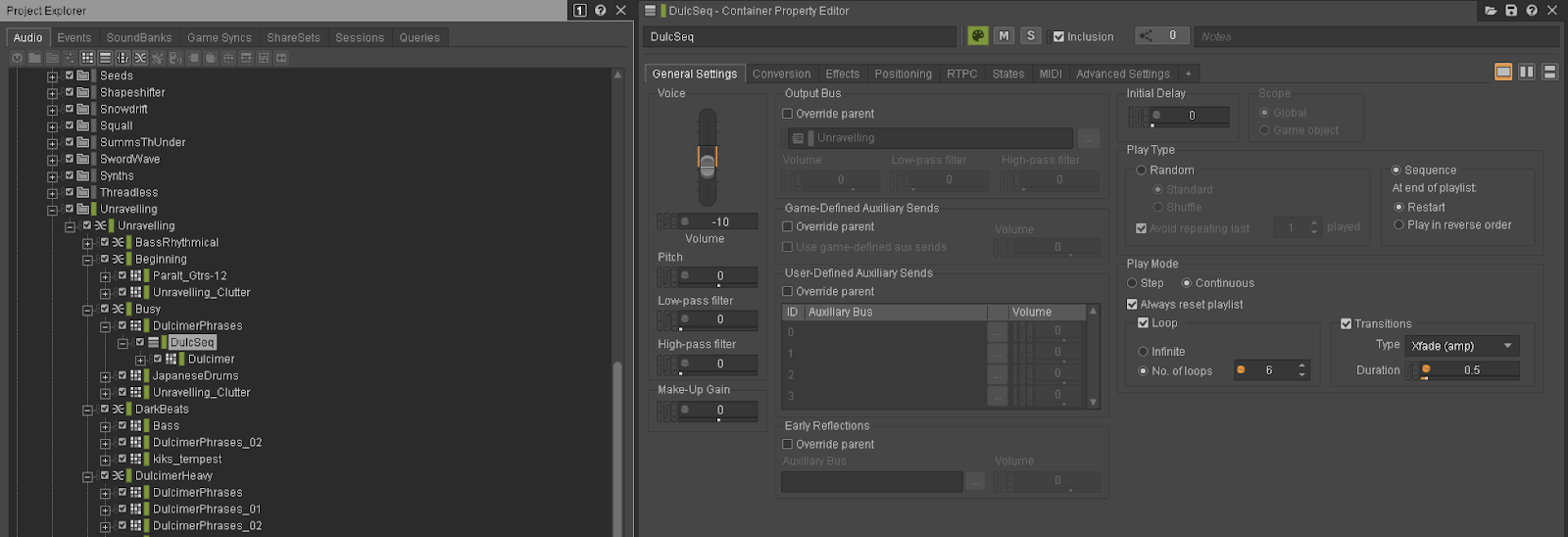

Taking a closer look at ways we create variation, expanding the ‘DulcimerPhrases’ container shows how it works. At the core is a Random Container that plays a single note sample from a pool of dulcimer note samples that are all within the same scale. Its parent is a Sequence Container that loops 6 times with a max offset of 2, and a crossfade amount of 0.5 with a min offset of -0.2. The parent container of this is the DulcimerPhrases container, which triggers every 8 seconds with a max offset of 8. What this all adds up to is that once every 8 to 16 seconds, it is going to play a short pattern of between 6 and 8 dulcimer notes with a little variation in the timing to make it sound more human.
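To make the nesting concrete, here is a rough Python simulation of the event stream those three layers produce. It is only a sketch of the arithmetic, not how Wwise works internally. In particular, it treats the 0.5 value with its -0.2 offset as a 0.3 to 0.5 second gap between note onsets, which is a simplifying assumption, and the sample names are placeholders.

```python
import random


def dulcimer_phrase_events(duration_seconds, note_pool, rng):
    """Return (time_seconds, sample_name) note events for one run."""
    events = []
    phrase_start = 0.0
    while phrase_start < duration_seconds:
        # Sequence layer: 6 loops with a max offset of 2 gives 6 to 8 notes.
        note_count = 6 + rng.randint(0, 2)
        t = phrase_start
        for _ in range(note_count):
            # Random layer: any single note sample from the same-scale pool.
            events.append((round(t, 3), rng.choice(note_pool)))
            # Assumed spacing: 0.5 s with an offset of up to -0.2 s.
            t += 0.5 + rng.uniform(-0.2, 0.0)
        # Outer layer: trigger every 8 s with a max offset of 8 s.
        phrase_start += 8.0 + rng.uniform(0.0, 8.0)
    return events


if __name__ == "__main__":
    pool = ["dulcimer_D3", "dulcimer_E3", "dulcimer_G3", "dulcimer_A3"]
    for time_s, sample in dulcimer_phrase_events(40.0, pool, random.Random()):
        print(f"{time_s:6.2f}s  {sample}")
```

Three small random ranges stacked on top of each other are enough that no two phrases line up the same way, even though each layer is trivial on its own.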

As you can see, this container sits alongside others in a Blend Container, and this blend is one particular state of the system. Other states have different samples, different hierarchies, different ways of working. Some contain loops, others are single percussion samples repeating at a fixed rate... The possibilities are essentially endless.

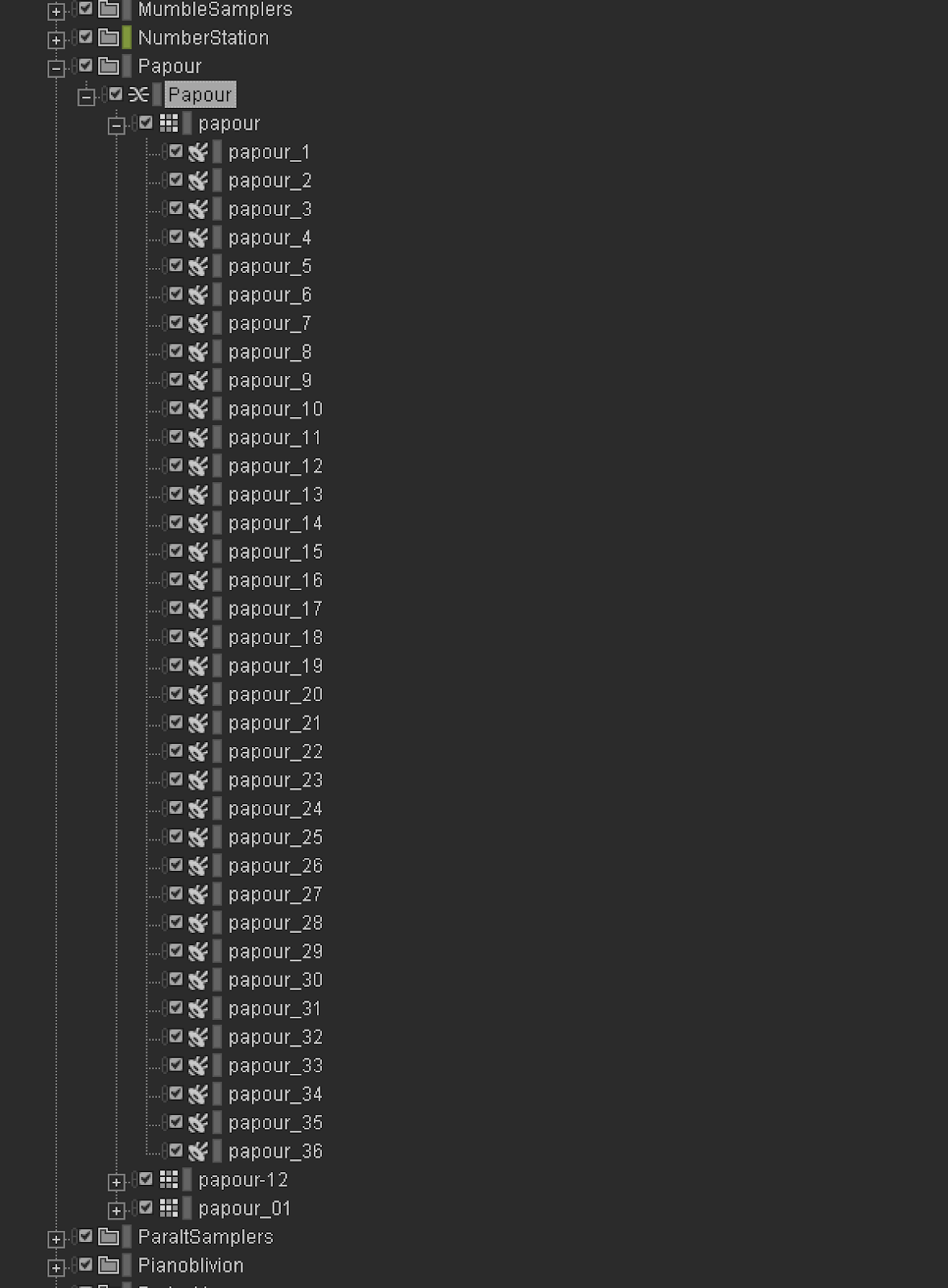

In contrast, here is a simpler system called Papour:

This system doesn’t use states. It has three Random Containers, each filled with the same 36 samples. Each container has a different amount of Low-pass filter and a different Trigger rate between 7 and 20 seconds, and the middle container is pitched down an octave. The samples themselves are long, Blade Runner-esque synth notes recorded at 65HQ with live filter sweep variations, full of grit and reverb. It is all put through another massive reverb bus in Wwise, and voilà! Instant sci-fi soundscapes.
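As a data structure, a system like this is little more than three rows of settings. The Python sketch below shows the shape of it. The specific trigger rates, filter amounts, layer names and sample names are invented for illustration; only the 36 shared samples, the 7 to 20 second range and the octave drop on the middle layer come from the description above.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    trigger_seconds: float  # hypothetical value inside the 7-20 s range
    low_pass_percent: int   # hypothetical filter amount
    pitch_cents: int        # -1200 cents is one octave down


LAYERS = [
    Layer("layer_a", 7.0, 10, 0),
    Layer("layer_b", 13.0, 35, -1200),  # the middle container, an octave down
    Layer("layer_c", 20.0, 60, 0),
]

# All three layers draw from the same pool of 36 samples.
SAMPLES = [f"synth_note_{i:02d}" for i in range(36)]


def papour_events(duration_seconds, rng):
    """Return sorted (time_seconds, layer_name, sample_name) triggers."""
    events = []
    for layer in LAYERS:
        t = 0.0
        while t < duration_seconds:
            events.append((t, layer.name, rng.choice(SAMPLES)))
            t += layer.trigger_seconds
    return sorted(events)


if __name__ == "__main__":
    for time_s, layer_name, sample in papour_events(60.0, random.Random()):
        print(f"{time_s:5.1f}s  {layer_name}  {sample}")
```

Because the trigger rates don't divide evenly into one another, the layers drift in and out of alignment, which does a lot of the work of keeping such a simple setup from sounding like a loop.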

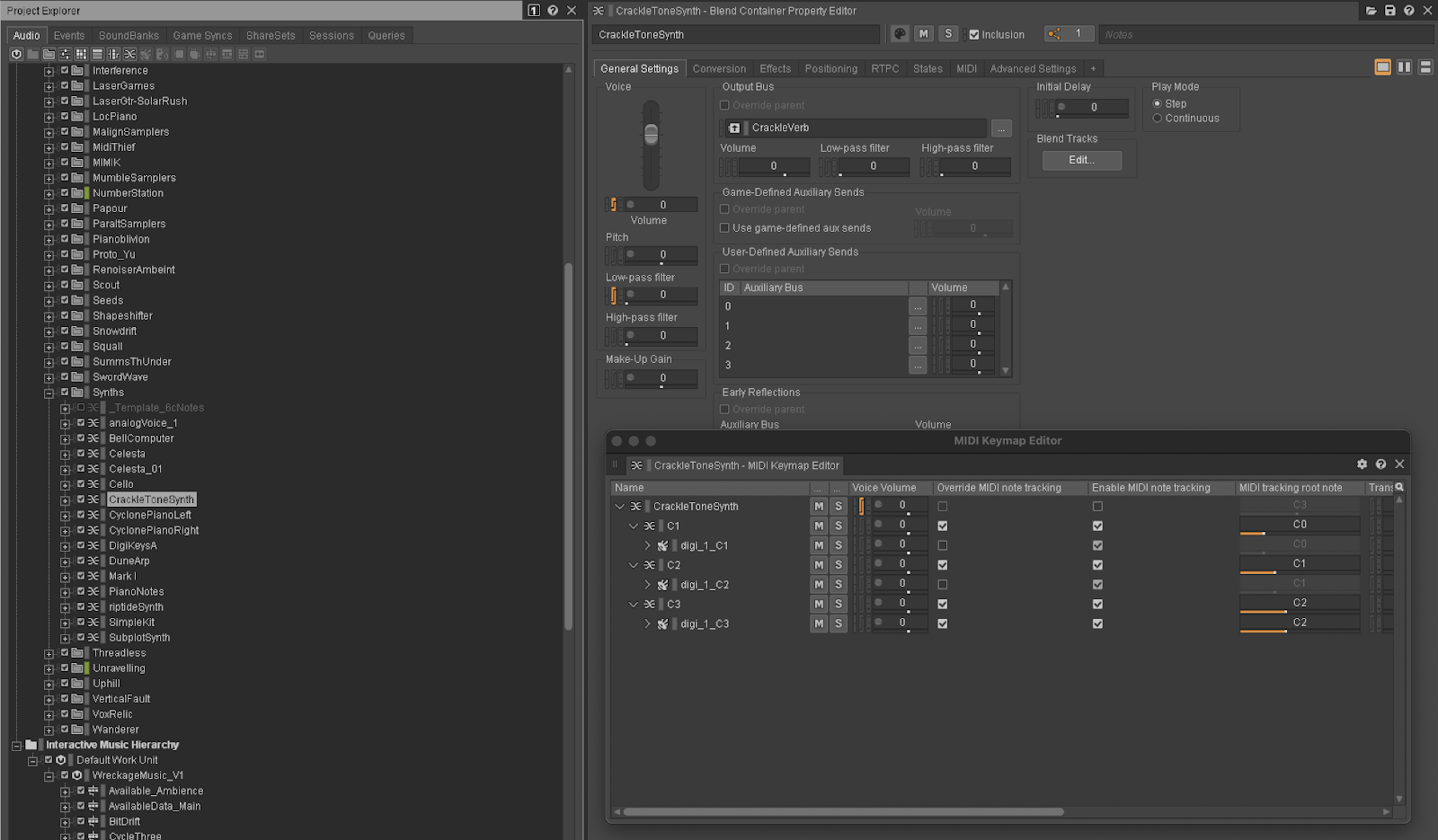

Other Actor-Mixer systems, for example Mist_Crackle, are equally streamlined in terms of audio content and complexity but are used in a different way, existing in Wwise as sampled instruments.

Some instruments are mapped across the whole range using many samples (like the main piano which is used by several systems). Others, like the ‘CrackleToneSynth’ shown above, are more stripped down. This one uses just three sampled notes mapped across the whole range of notes, put through some nice reverb.
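Stretching a few samples across a whole keyboard comes down to picking the nearest recorded note and repitching it. The sketch below shows that arithmetic in Python using the standard equal-temperament ratio of 2^(semitones/12). The three root notes are hypothetical, and in practice Wwise handles this mapping itself; this is only here to show what is being asked of each sample.

```python
# Hypothetical root notes for the three recorded samples (MIDI numbers).
ROOT_NOTES = [48, 60, 72]


def map_note(midi_note):
    """Return (root_note, semitone_shift, playback_ratio) for a MIDI note.

    The nearest root sample is chosen; ties go to the lower root.
    """
    root = min(ROOT_NOTES, key=lambda r: abs(midi_note - r))
    semitones = midi_note - root
    ratio = 2.0 ** (semitones / 12.0)  # equal-temperament pitch ratio
    return root, semitones, ratio


if __name__ == "__main__":
    for note in (36, 55, 60, 67, 84):
        root, shift, ratio = map_note(note)
        print(f"note {note}: sample {root}, shift {shift:+d} st, ratio {ratio:.3f}")
```

With only three roots, notes far from a sample are shifted by up to an octave or more, which colours the sound noticeably. For a crackly ambient instrument, that colouring is arguably part of the character.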

For systems like this, rather than using the generic sequencer in Unity that cycles through states, there will be custom scripts that create musical patterns in one way or another, and then send them to Wwise as MIDI notes. Mist_Crackle, being an ambient soundscape where precise tempo is neither important nor desired, uses a coroutine loop in Unity that draws upon a pool of possible MIDI note numbers, and with some simple randomisation creates a soundscape made from slow, ambient melodies and lower chord progressions.
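The shape of such a loop is sketched below in Python, with a generator standing in for the Unity coroutine. The note pools, the wait times and the one-in-four chance of a chord are all invented for illustration. In the real system each yielded item would become a wait followed by MIDI notes sent to Wwise.

```python
import random

# Hypothetical pools of MIDI note numbers.
MELODY_POOL = [62, 65, 67, 69, 72, 74]
CHORD_ROOTS = [38, 41, 43, 45]


def mist_crackle_events(rng, steps):
    """Yield (wait_seconds, midi_notes) pairs for a slow, untempoed texture."""
    for _ in range(steps):
        # No fixed tempo: just wait a loosely random amount of time.
        wait_seconds = rng.uniform(2.0, 6.0)
        if rng.random() < 0.25:
            # Occasionally play a low chord: root, fifth and octave.
            root = rng.choice(CHORD_ROOTS)
            notes = [root, root + 7, root + 12]
        else:
            # Otherwise play a single melody note from the pool.
            notes = [rng.choice(MELODY_POOL)]
        yield wait_seconds, notes


if __name__ == "__main__":
    for wait_seconds, notes in mist_crackle_events(random.Random(), 8):
        print(f"wait {wait_seconds:4.1f}s then play {notes}")
```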

INTERACTIVE MUSIC SYSTEMS

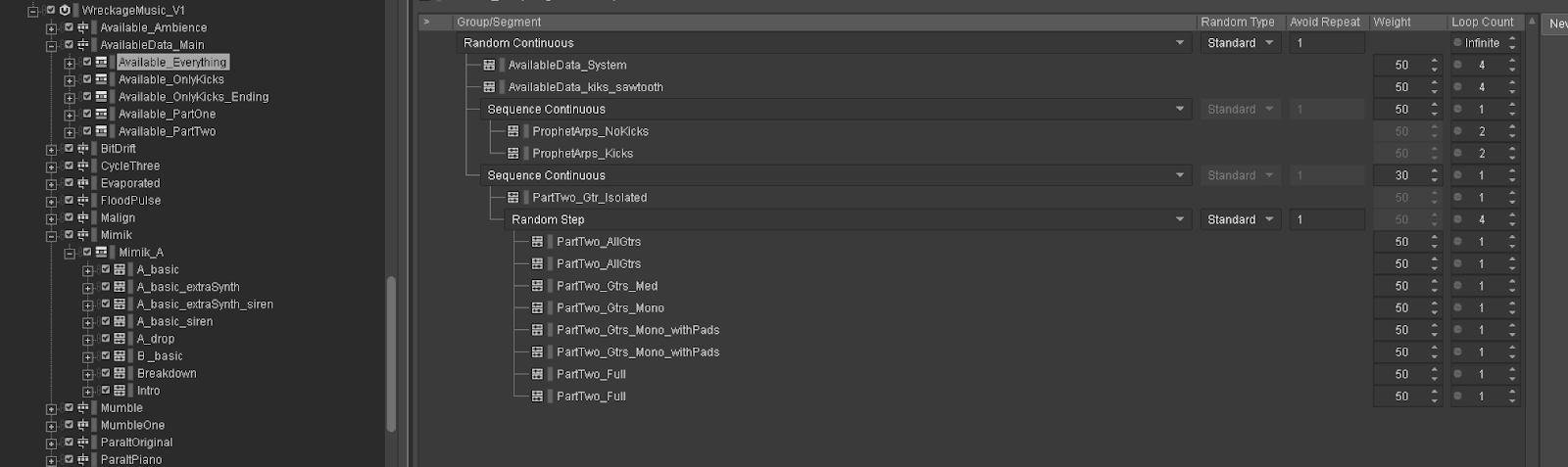

Other systems use the kinds of interactive music approaches more common to Wwise, making use of horizontal and vertical mixing techniques. I won’t go into too much detail here because, for users of Wwise, this is probably the most common use case for composing interactive music. Here is an example of what one of the interactive music-based systems looks like:

This system, Available Data, is based on a linear, concrete song that was written as part of the Wreckage Systems research. The recorded version is a 12 minute banger of beats and synths and guitars and noise. (You can listen to it HERE if you want.) Its Wreckage System equivalent is designed to manifest as equally long, equally banging variations on the theme every time it makes an appearance. The screenshot above shows the kind of structuring going on. Everything lives inside a Switch Container, and the various Playlists are full of different tracks and sub-tracks. There are a lot of custom stings for transitions, and over on the Unity side is a custom sequencer that moves through the states using a weighted probability. This took a lot of balancing, but the result is that every iteration of Available Data reliably moves through a loose skeleton of two parts (like the original), but hits new sections, loops and variations each time.
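A weighted-probability sequencer with a two-part skeleton can be sketched as a small transition table. Everything in this Python example is hypothetical: the weights, the split of States into two parts and the function name are made up to show the technique, not taken from the actual Available Data sequencer.

```python
import random

PART_ONE = {"State_00", "State_01"}
PART_TWO = {"State_02", "State_03", "State_04"}

# Hypothetical weights: each State lists where it may go next.
# Nothing in part two leads back to part one, which gives the skeleton.
WEIGHTS = {
    "Beginning": {"State_00": 3, "State_01": 1},
    "State_00": {"State_00": 1, "State_01": 3, "State_02": 1},
    "State_01": {"State_00": 2, "State_02": 3},
    "State_02": {"State_03": 3, "State_04": 1},
    "State_03": {"State_02": 1, "State_04": 3},
    "State_04": {"State_03": 1, "State_04": 1, "Ending": 3},
}


def walk(rng, max_steps=64):
    """Return one path from 'Beginning' to 'Ending' through the table."""
    state = "Beginning"
    path = [state]
    while state != "Ending" and len(path) < max_steps:
        options = WEIGHTS[state]
        state = rng.choices(list(options), weights=list(options.values()))[0]
        path.append(state)
    if path[-1] != "Ending":
        path.append("Ending")  # safety cap so a run always finishes
    return path


if __name__ == "__main__":
    print(" -> ".join(walk(random.Random())))
```

The balancing work mentioned above would live in the numbers: nudging one weight changes how long the piece lingers in a section, and therefore the whole arc of a performance.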

COMBINATION SYSTEMS

A lot of the systems exist as some kind of combination of Wwise Events that use Actor-Mixer Containers and some kind of foundational loops or structure in the Interactive Music Hierarchy.

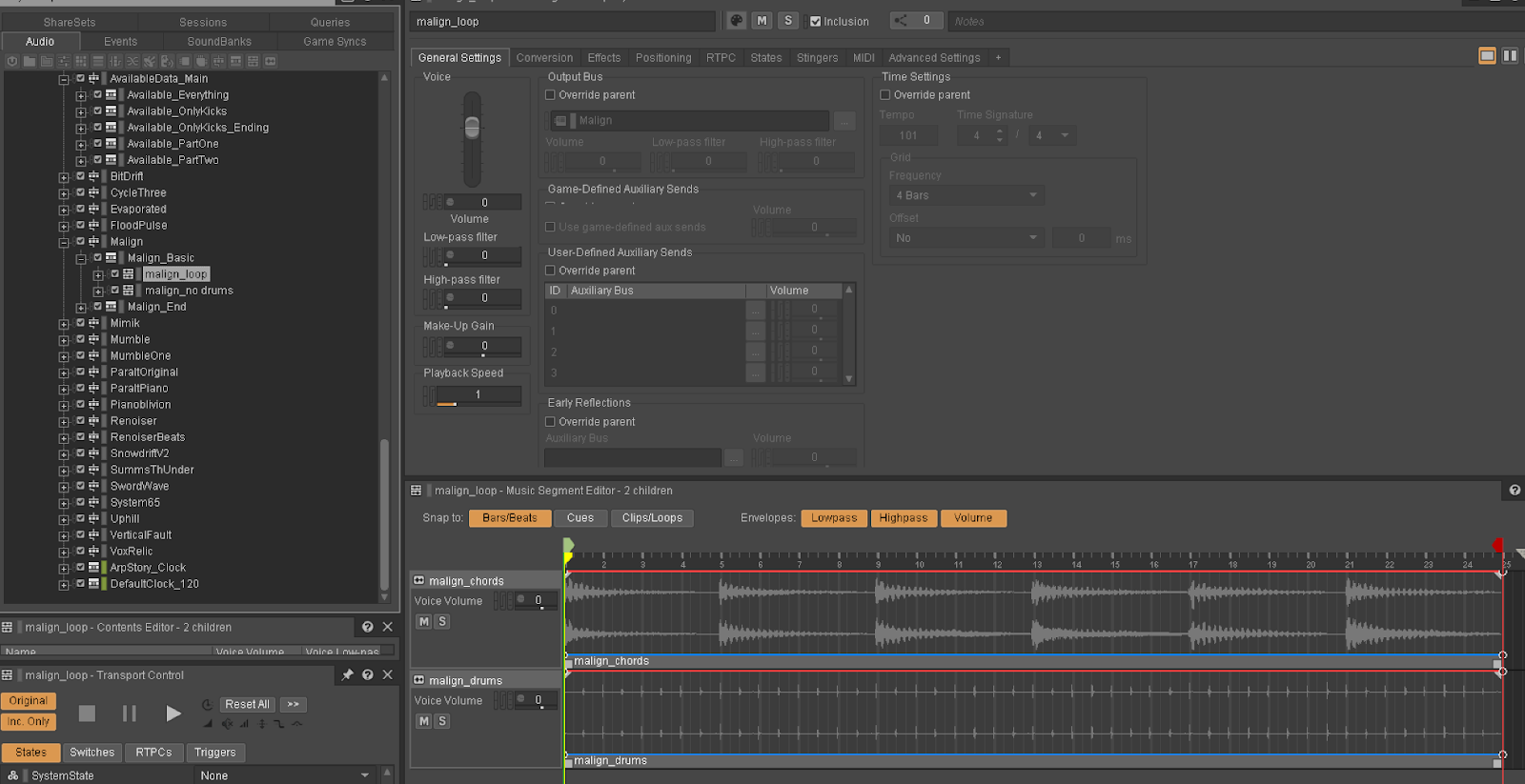

The Malign system is the doom jazz soundtrack for those times you’re a depressed cyberpunk detective, drinking away your sorrows in some kind of dimly lit cyberbar. It is based on a very simple, deliberately repetitive loop running in an Interactive Music Playlist Container. Crucially, Unity triggers this loop with callbacks. It then uses these callbacks to keep track of the start of each new loop, and at the start of each loop it decides whether or not it’s going to make a little melodic pattern on an electric piano, or guitar, or neither.

If it decides to play guitar, then it triggers a phrase from a large collection of samples via a Wwise Event triggering a Random Container. If it decides to play an electric piano, it uses some custom code to create a new melodic pattern using MIDI note numbers, and then plays it over the subsequent loop by scheduling MIDI notes and posting them to a Wwise Event pointed at a sampled electric piano instrument.
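The per-loop decision can be sketched as a single function that runs whenever a new loop begins. This is a Python illustration rather than the Unity script. The weights, the note pool, the eight-step grid and the event name `Play_Malign_Guitar_Phrase` are all hypothetical, and the piano notes are returned as time offsets from the start of the loop they will be played over.

```python
import random

PIANO_SCALE = [50, 53, 55, 57, 60, 62]  # hypothetical MIDI note pool
STEPS_PER_LOOP = 8                       # hypothetical rhythmic grid


def plan_loop(loop_seconds, rng):
    """Decide what, if anything, to play over one pass of the loop."""
    choice = rng.choices(["guitar", "piano", "neither"], weights=[1, 1, 2])[0]
    if choice == "guitar":
        # One event; the Random Container behind it picks the phrase.
        return {"instrument": "guitar", "event": "Play_Malign_Guitar_Phrase"}
    if choice == "piano":
        # Build a short pattern: four notes on distinct grid steps.
        step_seconds = loop_seconds / STEPS_PER_LOOP
        steps = sorted(rng.sample(range(STEPS_PER_LOOP), 4))
        notes = [(i * step_seconds, rng.choice(PIANO_SCALE)) for i in steps]
        return {"instrument": "piano", "notes": notes}
    return {"instrument": "neither"}


if __name__ == "__main__":
    rng = random.Random()
    for loop_index in range(6):
        print(loop_index, plan_loop(8.0, rng))
```

Weighting 'neither' more heavily than either instrument is one simple way to leave space in the music, so the melodies read as occasional gestures rather than constant chatter.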

Using callbacks in this way is subject to some minor tempo fluctuations because of Unity’s variable frame rates, but in the context of a system that sounds like Malign, the small timing looseness helps humanise the melodies. (Experiments using the same technique to generate high bpm breakbeat patterns were less successful.)
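A bit of arithmetic shows why the same looseness hurts fast material more. The Python sketch below assumes that a callback is only acted on at the next frame update, so a note can be late by up to one frame. That is a simplification, and the tempos and frame rate are illustrative rather than taken from any particular system. At 30 frames per second a frame lasts about 33 ms; a sixteenth note at 60 bpm lasts 250 ms, while at 170 bpm it lasts about 88 ms.

```python
def worst_case_jitter_fraction(bpm, frames_per_second, subdivisions_per_beat=4):
    """Return worst-case lateness as a fraction of one rhythmic step.

    Assumes a note can be delayed by at most one full frame.
    """
    step_seconds = 60.0 / bpm / subdivisions_per_beat
    frame_seconds = 1.0 / frames_per_second
    return frame_seconds / step_seconds


if __name__ == "__main__":
    for bpm in (60, 170):
        fraction = worst_case_jitter_fraction(bpm, 30)
        print(f"{bpm} bpm at 30 fps: up to {fraction:.0%} of a sixteenth note")
```

Under these assumptions the worst case is roughly 13% of a step at 60 bpm but nearly 38% at 170 bpm, which is the difference between a relaxed feel and a beat that audibly stumbles.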

CONCLUSION

These examples broadly cover how most of the systems are built, but as they hopefully show, the whole point of this project was to find a balance between some kind of generalisable, scalable framework and a system that allows for custom behaviours to be integrated on a system-by-system basis. What Wreckage Systems has shown us as a band (and this is a purely subjective view) is that in this age of automating everything, when it comes to making meaningful art, going the long way round and brute forcing it is, if anything, becoming increasingly important. By all means, use computers to find, build, and use tools to help you churn through all that painful mediocrity so you can find the diamonds in your subconscious faster. But doing that work, that merciless editing and curating away of the stuff that’s not good enough: that is composition. And that curation, that intentionality, that’s the constellation heart. It is forever ungeneralisable.
