In this series, we are taking an extensive look at past, current, and emerging reverberation techniques and reviewing them from an immersive and spatial perspective. In the previous articles, we covered the reasons why immersive reverberations are so challenging in virtual reality and some of the techniques behind classic reverberation algorithms. In this article, we review some of the current trends in acoustic simulations that can be used to create spatial reverberation effects.

Acoustic simulation of sound propagation on a metal plate

As we saw in the previous articles, although classic reverberation techniques are still very relevant today, we need to start looking at ways to make them more immersive. Just as reverberation techniques have evolved in the past to meet new production needs, virtual reality is now pushing the limits of how we want to listen to reverberant virtual spaces.

Thanks to binaural algorithms, we can now perceive the direction of incoming sounds on headphones better than ever. While binaural algorithms will continue to improve, refining the proximity effect and offering personalized Head-Related Transfer Function (HRTF) filters, we also need to ensure that reverberation carries these spatial cues well after the initial direct sound. Classic reverberation algorithms are able to efficiently increase the density of echoes over time, but in the process they lose the interactivity that is crucial to convey spatial cues. This is especially true of early reflections, which carry important perceptual information. To achieve this goal, we need to start using the geometry of virtual spaces to inform simulation algorithms.

Using headphones makes it easier to render spatial audio effects as the listener is always located in the center of the speakers [1]

As we look beyond traditional reverberation, we will examine various methods for the auralization of virtual soundscapes, in other words, for listening to virtual reverberant spaces. Auralization of virtual acoustics is a research field that aims to simulate sound propagation by borrowing notions from physics and graphics. To obtain more realistic simulations that can carry spatial information, we need to take another look at how sound propagates in an enclosed space and how we can simulate it.

![The SATosphere in Montreal](http://info.audiokinetic.com/hubfs/Blog_Images/Benoit%20blog%202/Picture2.png)

Spatial sound is also needed for multi-channel setups, such as the SATosphere in Montreal which has 157 speakers [2]

Sound propagation is an amazingly complex phenomenon. Increasing the accuracy of the simulation will mean increasing the amount of computation required. Therefore, it’s important to consider where these methods excel, what their shortcomings are, and what perceptual ramifications can result from each approach.

The propagation of sound

The energy released by a rapid vibration, which we perceive as sound, travels through different materials as a mechanical wave. This kinetic energy, which originates from the initial vibrating object (the vocal cords, for instance), is passed on to the particles of a surrounding medium, such as air. The particles themselves never travel far: each one merely passes its kinetic energy on to its neighbours, and the energy gradually dissipates depending on the viscosity of the medium.

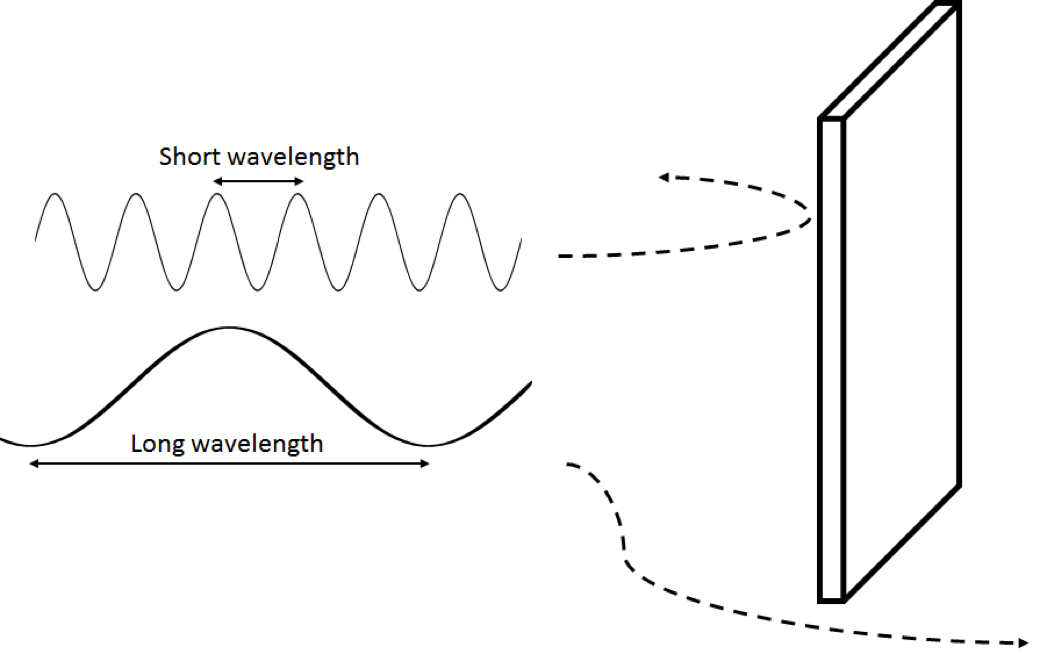

Sound generally travels faster through denser media, such as water or solids, mainly because their particles are more tightly coupled than those of air, while a heavier material will dissipate more energy because it takes more energy to set its particles in motion. Another property, called acoustic impedance, determines how much of the incoming sound energy is transmitted into a material and how much is reflected at its surface, and this balance varies with frequency. The energy that isn’t transmitted from the air into a wall material is reflected back and contributes to the reverberation of a room. This explains why a low-density material, such as a fibrous material, can still be good at reducing reverberation in a recording studio: its impedance is close enough to that of air that sound enters it instead of reflecting, and the energy is then dissipated within the fibres. The surface texture of the material also guides the direction of the reflections. It is important to note that all of these phenomena vary with the wavelength of a sound, meaning they are frequency-dependent. A long wavelength is perceived as a low-frequency sound, while a short wavelength is perceived as a high-frequency sound.
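The link between frequency and wavelength is simply λ = c / f. The short Python sketch below (the function name is ours, and the speed of sound is assumed constant at 343 m/s) shows how the audible range of roughly 20 Hz to 20 kHz maps to wavelengths of about 17 m down to about 17 mm.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C, treated as a constant here


def wavelength(frequency_hz: float, speed: float = SPEED_OF_SOUND) -> float:
    """Return the wavelength in meters: lambda = c / f."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


# Low frequencies have long wavelengths, high frequencies short ones.
for f in (20, 100, 1_000, 20_000):
    print(f"{f:>6} Hz -> {wavelength(f):.4f} m")
```

A 100 Hz tone is therefore a few meters long, comparable to walls and doorways, while a 10 kHz tone is only a few centimeters long.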

The easiest way to illustrate the frequency-dependent nature of sound is to consider another key element of sound propagation: diffraction. Through diffraction, known as obstruction in game audio, a sound wave can bend around obstacles and through openings to reach a listener who has no direct line of sight to the emitting source. This real-life behavior is the reason a low-pass filter is usually used to simulate obstruction. When a wavelength shorter than an obstacle reaches it, the sound is reflected. However, when the wavelength is longer than the obstacle, the sound bends around it. So, if you imagine those two frequencies combined in one signal, traveling towards the same obstacle, a listener behind it will only hear the lower frequency (longer wavelength). This effect gets trickier with more complex shapes. For instance, the diffraction of sound around our head is so complex that we need to measure it by recording an impulse sound with in-ear microphones. This is how HRTF filters are captured to create binaural effects. Therefore, the size, shape, density, acoustic impedance, and texture of a material all affect how sound, at different frequencies, travels through a space.
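To make the low-pass approximation of obstruction concrete, here is a minimal Python sketch. It assumes a simple one-pole low-pass filter and a hypothetical cutoff heuristic of our own (cutoff = c / obstacle size, i.e. frequencies whose wavelength exceeds the obstacle are passed). This is a common game-audio shortcut, not a physical diffraction model.

```python
import math
from typing import List, Sequence

SPEED_OF_SOUND = 343.0  # m/s in air (approximate)


def obstruction_cutoff_hz(obstacle_size_m: float) -> float:
    """Hypothetical heuristic: frequencies whose wavelength is longer than the
    obstacle are treated as bending around it, so cutoff = c / size."""
    return SPEED_OF_SOUND / obstacle_size_m


def one_pole_lowpass(signal: Sequence[float], cutoff_hz: float,
                     sample_rate: int = 44_100) -> List[float]:
    """One-pole low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in signal:
        y += a * (x - y)
        out.append(y)
    return out


sr = 44_100
cutoff = obstruction_cutoff_hz(2.0)  # a 2 m wide obstacle -> 171.5 Hz
low = [math.sin(2 * math.pi * 50 * n / sr) for n in range(sr // 2)]
high = [math.sin(2 * math.pi * 5_000 * n / sr) for n in range(sr // 2)]
for name, sig in (("50 Hz", low), ("5 kHz", high)):
    filtered = one_pole_lowpass(sig, cutoff, sr)
    # Measure the peak over the second half, after the filter has settled.
    print(name, "peak behind the obstacle:", round(max(abs(v) for v in filtered[sr // 4:]), 3))
```

The 50 Hz tone passes almost untouched, while the 5 kHz tone is strongly attenuated, which is the "muffled" quality players associate with sounds behind walls.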

A short wavelength tends to reflect from an obstacle, while a long wavelength will diffract around it.

In contrast, light propagates through an entirely different process. As a form of electromagnetic wave, light does not need a medium: it can travel through a vacuum, and in transparent materials it passes between their particles. A denser material will therefore slow down its propagation and can even block it. Furthermore, the wavelengths of light we can see are much shorter than the wavelengths of sound we can hear. Indeed, our eyes capture light waves between 400 and 700 nanometers long, whereas we hear sound waves between roughly 17 millimeters and 17 meters long. Moreover, sound travels much more slowly, at about 343 meters per second in air, while light travels at almost 300 million meters per second. This explains why we need to worry so much about the delay of reverberant sounds. All of these differences illustrate why light and sound waves interact so differently with various materials and obstacles, especially through diffraction. That being said, diffraction isn’t as prominent in an enclosed space, which makes light propagation a great starting point for approximating sound waves.
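To put the speed difference in numbers, the Python sketch below computes travel times over the same distance (function names are ours; the sample rate of 48 kHz is an assumed example). Over a 34.3 m reflection path, sound takes about a tenth of a second, long enough to be heard as a distinct delay, while light takes roughly a hundred nanoseconds.

```python
SPEED_OF_SOUND = 343.0        # m/s in air (approximate)
SPEED_OF_LIGHT = 299_792_458  # m/s in vacuum


def travel_time_s(distance_m: float, speed_m_s: float) -> float:
    """Time for a wave to cover distance_m at the given speed."""
    return distance_m / speed_m_s


def delay_samples(distance_m: float, sample_rate: int = 48_000) -> int:
    """Propagation delay of sound over distance_m, rounded to whole samples."""
    return round(travel_time_s(distance_m, SPEED_OF_SOUND) * sample_rate)


d = 34.3  # meters, e.g. a reflection path in a large hall
print(f"sound: {travel_time_s(d, SPEED_OF_SOUND) * 1e3:.1f} ms "
      f"({delay_samples(d)} samples at 48 kHz)")
print(f"light: {travel_time_s(d, SPEED_OF_LIGHT) * 1e9:.1f} ns")
```

A delay line of a few thousand samples per reflection is exactly what reverberation engines have to budget for, whereas light's delay is negligible for rendering.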

Ray-based methods

Ray-based acoustics are sound propagation simulation techniques that take their cue from how light travels. In this approach, sound travels in straight lines and, with a little linear algebra, you can easily calculate the direction of sound reflections based on the position of the walls. Delays can be added to these models to account for the slow speed of sound, and filters can simulate how walls absorb different frequencies. Usually performed on simplified geometry, ray-based methods offer a fast and fairly accurate way of simulating the early reflections of higher frequencies. However, real rooms tend to be more complex than these simplified models: sound reflects off many more surfaces, creating a richer density of echoes than the simulation produces. This is why late reverberation is not well reproduced with these techniques. Also, when a space becomes more geometrically complex, the diffraction of low-frequency waves becomes a much more prominent factor. Provided we know the incoming direction of the different reflection rays, this diffraction can be simulated separately.
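The "little linear algebra" amounts to mirroring the ray direction about the wall's normal, r = d − 2(d·n)n, and the frequency-dependent absorption can be approximated by scaling per-band energy. The Python sketch below shows both; the function names and the absorption coefficients are hypothetical example values.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def reflect(direction: Vec3, normal: Vec3) -> Vec3:
    """Specular reflection of a ray direction off a plane: r = d - 2 (d . n) n.

    The normal is normalized first so the formula holds for any non-zero normal.
    """
    length = math.sqrt(sum(c * c for c in normal))
    n = tuple(c / length for c in normal)
    d_dot_n = sum(d * c for d, c in zip(direction, n))
    return tuple(d - 2.0 * d_dot_n * c for d, c in zip(direction, n))


def absorb(band_energies: Sequence[float], absorption: Sequence[float]) -> List[float]:
    """Scale the energy in each frequency band by (1 - alpha) for that band.

    The absorption coefficients passed in are hypothetical example values.
    """
    return [e * (1.0 - a) for e, a in zip(band_energies, absorption)]


ray = (1.0, -1.0, 0.0)          # travelling down towards the floor
floor_normal = (0.0, 1.0, 0.0)
print(reflect(ray, floor_normal))  # (1.0, 1.0, 0.0)
# Three bands (low, mid, high): this imaginary wall absorbs more high frequencies.
print(absorb([1.0, 1.0, 1.0], [0.1, 0.3, 0.6]))
```

Applying `absorb` at every bounce is a crude stand-in for a per-wall filter; a real engine would typically use more bands or an actual filter on the audio signal.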

The two main variants of ray-based methods are the image source method and ray tracing. The image source method calculates the reflection path of a sound off each wall. The process can continue to include higher-order reflections, when a reflection is itself reflected off another wall. In ray tracing, rays are sent in various directions and reflected when they reach an obstacle. Following each reflection from a surface, multiple new rays are generated in different directions. Random directions can be chosen to simulate sound being scattered by a wall’s texture or a corner, for instance [4]. This approach is useful with more complex geometry but requires more computation. In both cases, it is possible to limit the depth of the algorithms to favor early reflections. Since we know the exact path of each ray, based on the position of a sound source and a listener, ray-based methods allow us to interactively position a sound both in space and in time. To simulate the distance traveled by a sound, a delay is introduced based on the speed of sound in air.
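As an illustration of the image source method, the Python sketch below computes the direct path and the six first-order reflections in a rectangular ("shoebox") room. It assumes axis-aligned walls, a single frequency-independent reflection coefficient of 0.8, and 1/r distance attenuation; the names are ours. Each image source is the real source mirrored across one wall, so its straight-line distance to the listener equals the length of the reflected path.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

SPEED_OF_SOUND = 343.0  # m/s
Point = Tuple[float, float, float]


@dataclass
class Arrival:
    path: str       # "direct" or the wall that was hit, e.g. "x=0"
    delay_s: float  # propagation time from source to listener
    gain: float     # 1/r spreading times the wall reflection coefficient


def first_order_image_sources(room: Point, source: Point, listener: Point,
                              reflection_coeff: float = 0.8) -> List[Arrival]:
    """Direct path plus the six first-order reflections in a shoebox room whose
    walls are the planes x=0, x=Lx, y=0, y=Ly, z=0 and z=Lz."""
    images = [("direct", source)]
    for axis, name in enumerate("xyz"):
        for wall in (0.0, room[axis]):
            mirrored = list(source)
            mirrored[axis] = 2.0 * wall - source[axis]  # mirror across the wall
            images.append((f"{name}={wall:g}", tuple(mirrored)))

    arrivals = []
    for path, position in images:
        distance = math.dist(position, listener)
        gain = 1.0 / max(distance, 1e-6)  # avoid division by zero at the source
        if path != "direct":
            gain *= reflection_coeff  # assumed frequency-independent absorption
        arrivals.append(Arrival(path, distance / SPEED_OF_SOUND, gain))
    return sorted(arrivals, key=lambda a: a.delay_s)


for a in first_order_image_sources((10.0, 8.0, 3.0), (2.0, 3.0, 1.5), (7.0, 3.0, 1.5)):
    print(f"{a.path:>7}: {a.delay_s * 1000:6.2f} ms, gain {a.gain:.3f}")
```

Higher-order reflections follow by mirroring the image sources again; in non-rectangular rooms each image also needs a visibility check, which is where the cost grows quickly.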

The following video is courtesy of Brian Hamilton [4], a member of the Acoustics and Audio Group at the University of Edinburgh. It shows the propagation of a sound source over time using a ray-based method.

Ray-based sound propagation simulation

Wave-based modeling

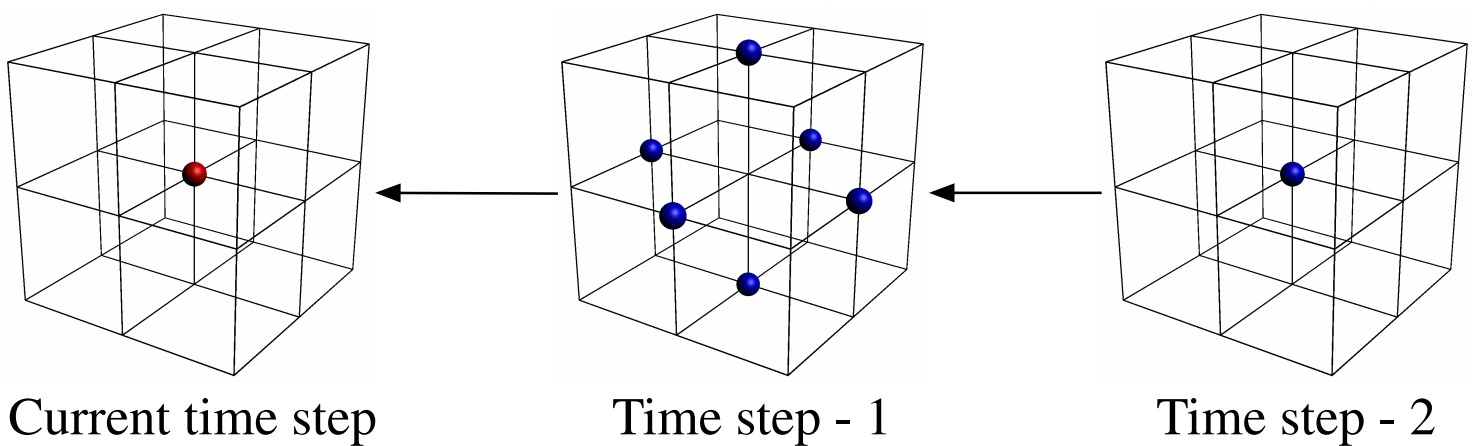

As we saw previously, sound doesn’t really travel like light. So, another family of methods aims to simulate sound wave propagation more accurately. For instance, using a numerical method called the Finite-Difference Time-Domain (FDTD) method, it is possible to simulate something closer to how sound energy is transmitted from one particle to another. Instead of actual particles, we divide the three-dimensional space into a grid with fixed spacing.

The key notion behind this method is that the sound pressure at any given point influences the points around it in the near future. Based on this simple principle, some information about the transmitting medium, and a lot of math, the sound pressure at every point can be calculated from the values at the surrounding points at previous time steps. In other words, if you know where the sound was, you can predict where it’s going to be. This also means the value of every point on the grid must be recalculated at every time step: for the full audible bandwidth, that means 44,100 time steps per second. The grid spacing is chosen according to the desired sampling rate. Indeed, simulating high frequencies requires a finer grid to accurately capture the behavior of short wavelengths.
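The tie between sampling rate and grid spacing comes from the stability (Courant) condition of the standard explicit FDTD scheme: with time step T = 1/fs, the spacing h must satisfy c·T/h ≤ 1/√d in d dimensions. The Python sketch below (function name ours, speed of sound assumed constant) computes the coarsest stable spacing in 3D. This bound is only a lower limit on h; because the scheme's numerical dispersion grows toward high frequencies, finer grids are often used in practice.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air (approximate)


def min_grid_spacing(sample_rate_hz: float, dimensions: int = 3,
                     speed: float = SPEED_OF_SOUND) -> float:
    """Smallest grid spacing h allowed by the Courant condition of the standard
    explicit FDTD scheme: c * T / h <= 1 / sqrt(dimensions), with T = 1 / fs."""
    return speed * math.sqrt(dimensions) / sample_rate_hz


for fs in (44_100, 22_050, 11_025):
    h = min_grid_spacing(fs)
    print(f"{fs:>6} Hz -> time step {1e6 / fs:6.1f} us, grid spacing >= {h * 1000:5.1f} mm")
```

Halving the sampling rate doubles the allowed spacing, which is the lever the next paragraphs use to trade bandwidth for computation.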

The neighbouring points, in time and space, used to compute the sound pressure of a single point in the FDTD method [5]

In FDTD, to play a source, we simply inject the signal into the model at a specific point over the necessary time steps: the signal’s amplitude over time is converted to sound pressure at each step. Similarly, we position a listener by reading the calculated sound pressure over time at a specific point to form an audio signal. In FDTD, the sound vibration is simulated at every point simultaneously, regardless of the listener’s position.
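The Python sketch below puts these pieces together on a small 2D grid instead of a 3D one to stay short. Each step updates every interior point from its four neighbours at the current step and its own value at the previous step; the source signal is added at one point and the listener reads pressure at another. The function name, the zero-pressure edges (which make every boundary a perfect, phase-inverting reflector), and the Courant number of 0.7 are simplifying assumptions, not a production scheme.

```python
import math
from typing import List, Sequence, Tuple


def simulate_fdtd_2d(nx: int, ny: int, steps: int,
                     source: Tuple[int, int], receiver: Tuple[int, int],
                     source_signal: Sequence[float],
                     courant: float = 0.7) -> List[float]:
    """Minimal 2D FDTD sketch of the scalar wave equation on a square grid."""
    if courant > 1.0 / math.sqrt(2.0):
        raise ValueError("courant number must be <= 1/sqrt(2) for stability in 2D")
    lam2 = courant * courant
    prev = [[0.0] * ny for _ in range(nx)]
    curr = [[0.0] * ny for _ in range(nx)]
    sx, sy = source
    rx, ry = receiver
    output = []
    for n in range(steps):
        nxt = [[0.0] * ny for _ in range(nx)]  # edges stay at zero pressure
        for i in range(1, nx - 1):
            for j in range(1, ny - 1):
                neighbours = curr[i + 1][j] + curr[i - 1][j] + curr[i][j + 1] + curr[i][j - 1]
                nxt[i][j] = (2.0 * curr[i][j] - prev[i][j]
                             + lam2 * (neighbours - 4.0 * curr[i][j]))
        # "Play" the source: add the current input sample at the source point.
        if n < len(source_signal):
            nxt[sx][sy] += source_signal[n]
        prev, curr = curr, nxt
        # "Listen": read the pressure at the receiver point.
        output.append(curr[rx][ry])
    return output


# An impulse at the source yields an impulse response at the receiver.
response = simulate_fdtd_2d(40, 40, 80, source=(10, 10), receiver=(20, 10), source_signal=[1.0])
first = next(i for i, v in enumerate(response) if v != 0.0)
print(f"first non-zero receiver sample at step {first}")  # step 10
```

Because the update only looks at direct neighbours, the disturbance can spread at most one grid cell per time step, so the receiver ten cells away stays silent for the first ten steps.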

Depending on the size of the room and the required minimum wavelength, the computation can take anywhere from several minutes to several hours. So, how could we ever use this method in real time? The simulation can be performed offline, using an impulse as the sound source to generate an impulse response (IR) (see convolution reverb in the previous article). This process creates a series of IRs that can later be used in real time through convolution. For spatial audio purposes, it is possible to generate higher-order ambisonics IRs, but, just as with pre-recorded room impulse responses, the storage space required to represent many emitter-listener positions can be prohibitive.
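At runtime, using the precomputed data boils down to choosing an IR and convolving the dry signal with it. The Python sketch below uses a hypothetical IR bank keyed by listener position and a direct-form convolution for clarity; real-time engines typically use partitioned FFT convolution instead, and the bank layout is purely an assumption.

```python
import math
from typing import Dict, List, Sequence, Tuple

Position = Tuple[float, float, float]


def convolve(dry: Sequence[float], ir: Sequence[float]) -> List[float]:
    """Direct-form convolution: out[n] = sum_k dry[n - k] * ir[k]."""
    if not dry or not ir:
        return []
    out = [0.0] * (len(dry) + len(ir) - 1)
    for n, x in enumerate(dry):
        for k, h in enumerate(ir):
            out[n + k] += x * h
    return out


def nearest_ir(ir_bank: Dict[Position, List[float]], listener: Position) -> List[float]:
    """Pick the precomputed IR whose listener position is closest (hypothetical bank)."""
    key = min(ir_bank, key=lambda pos: math.dist(pos, listener))
    return ir_bank[key]


# Hypothetical bank with two precomputed listener positions and tiny IRs.
bank = {
    (1.0, 1.0, 1.5): [1.0, 0.0, 0.5],
    (4.0, 1.0, 1.5): [0.8, 0.3, 0.1],
}
wet = convolve([1.0, 0.5], nearest_ir(bank, (1.2, 1.1, 1.5)))
print(wet)  # [1.0, 0.5, 0.5, 0.25]
```

Every extra listener position adds another IR to store, which is the storage problem described above, multiplied further by the number of ambisonic channels.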

Since accurate results at higher frequencies require a small grid spacing, about a few millimeters to simulate all audible frequencies, we can save on computation by using a coarser grid. This method is therefore better suited to simulating the propagation of lower frequencies. Using a lower sampling rate saves on both computational cost and storage space. The good news is that high frequencies don’t usually travel very far anyway, since air and wall absorption attenuate them quickly.
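A back-of-the-envelope estimate shows how quickly the savings add up. In 3D, the number of grid points grows with the cube of the sampling rate and the number of time steps grows linearly with it, so the work scales roughly with fs⁴. The Python sketch below assumes a hypothetical 10 m × 8 m × 3 m room and the coarsest grid allowed by the stability condition of the standard scheme.

```python
import math
from typing import Sequence, Tuple

SPEED_OF_SOUND = 343.0  # m/s (approximate)


def fdtd_cost(room_dims_m: Sequence[float], sample_rate_hz: int,
              speed: float = SPEED_OF_SOUND) -> Tuple[int, int]:
    """Rough size of a 3D FDTD run at the stability limit.

    Returns (number of grid points, point updates per simulated second).
    """
    spacing = speed * math.sqrt(3.0) / sample_rate_hz  # coarsest stable spacing
    points = 1
    for length in room_dims_m:
        points *= math.ceil(length / spacing)
    return points, points * sample_rate_hz


room = (10.0, 8.0, 3.0)  # hypothetical room dimensions in meters
full_points, full_updates = fdtd_cost(room, 44_100)
low_points, low_updates = fdtd_cost(room, 11_025)
print(f"44.1 kHz:   {full_points:.2e} points, {full_updates:.2e} updates per simulated second")
print(f"11.025 kHz: {low_points:.2e} points, {low_updates:.2e} updates per simulated second")
print(f"cost ratio: {full_updates / low_updates:.0f}x")  # close to 4**4 = 256
```

Dropping the sampling rate by a factor of four cuts the work by roughly two orders of magnitude, which is why low-frequency-only simulations are so attractive.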

A wave-based simulation is also good at simulating frequency-dependent sound diffraction around complex geometry, something that a ray-based method can’t do on its own. Generally speaking, wave-based methods are also considered better at generating late reverberation, since the density of echoes is more accurately simulated. That being said, just as not all real spaces have desirable reverberation, some simulations may not result in great-sounding acoustics from a media production perspective. The long rendering times also make for slow turnarounds that can complicate the tuning process.

The FDTD approach is quite literal in how it simulates wave propagation, but other wave-based methods exist that can approximate part of the physics to offer faster simulations. Therefore, the actual benefits and limitations can vary from method to method.

Besides direct IR convolution, the generated data can also be used in other ways. For instance, it is possible to render a low-frequency simulation of the sound propagation, up to 500 Hz, and use the resulting data to analyze how the amplitude of the reverberation decays over time. This information can then be used to set the parameters of a classic reverberation algorithm [6]. This is a good way to automate the parametrization of reverberation, which can be useful in large virtual worlds containing many different rooms. Microsoft implemented this approach in a Wwise plug-in for the recent Gears of War 4. A talk on this project will be given at the upcoming Game Developers Conference (GDC) [7].
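One common way to analyze the decay of an IR is Schroeder backward integration followed by a line fit, and a decay time can then be mapped onto the feedback gain of a recirculating delay in a classic reverberator. The Python sketch below shows that chain; it is a generic technique, not necessarily what [6] or the Gears of War 4 plug-in does, and the function names and the −5 to −25 dB fit range (a "T20"-style estimate) are assumptions.

```python
import math
from typing import List, Sequence


def energy_decay_curve_db(ir: Sequence[float]) -> List[float]:
    """Schroeder backward integration: remaining energy at each sample, in dB re. total."""
    edc = [0.0] * len(ir)
    remaining = 0.0
    for i in range(len(ir) - 1, -1, -1):
        remaining += ir[i] * ir[i]
        edc[i] = remaining
    total = edc[0] if edc else 0.0
    if total == 0.0:
        raise ValueError("impulse response has no energy")
    return [10.0 * math.log10(e / total) if e > 0.0 else -math.inf for e in edc]


def estimate_t60(ir: Sequence[float], sample_rate: int,
                 start_db: float = -5.0, end_db: float = -25.0) -> float:
    """Least-squares line fit of the decay curve between start_db and end_db,
    extrapolated to a 60 dB decay."""
    curve = energy_decay_curve_db(ir)
    pts = [(i / sample_rate, lvl) for i, lvl in enumerate(curve) if end_db <= lvl <= start_db]
    if len(pts) < 2:
        raise ValueError("impulse response does not decay over the requested range")
    mean_t = sum(t for t, _ in pts) / len(pts)
    mean_l = sum(lvl for _, lvl in pts) / len(pts)
    slope = (sum((t - mean_t) * (lvl - mean_l) for t, lvl in pts)
             / sum((t - mean_t) ** 2 for t, _ in pts))  # dB per second
    return -60.0 / slope


def comb_feedback_gain(delay_samples: int, t60_s: float, sample_rate: int) -> float:
    """Feedback gain that makes a recirculating delay line decay by 60 dB in t60_s."""
    return 10.0 ** (-3.0 * delay_samples / (t60_s * sample_rate))


# Synthetic IR with a known 0.5 s decay, standing in for simulation output.
fs = 8_000
synthetic_ir = [10 ** (-3.0 * n / (0.5 * fs)) for n in range(fs)]
t60 = estimate_t60(synthetic_ir, fs)
print(f"estimated T60: {t60:.3f} s")
print(f"feedback gain for a 1000-sample delay: {comb_feedback_gain(1000, t60, fs):.4f}")
```

With a real simulated IR, the same analysis would usually be run per frequency band, giving one decay time per band to drive the reverberator's damping filters.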

The following video, also courtesy of Brian Hamilton [4], demonstrates how the wave-based method renders sound propagation. Note how the inside corner generates several new wave fronts.

Wave-based sound propagation modeling

Here are two other simulations [4] that illustrate the differences between ray-based and wave-based acoustic simulation.

Ray-based acoustics modeling

Wave-based acoustics modeling

So, which one of these methods is the best? Both! Although the wave-based approach appears better for complex simulations, the ray-based method can still offer great sound when used properly. While the physics behind sound propagation can get quite complex, greatly simplifying the process can still produce great sound. We therefore need to make sure the extra computation required for a full simulation is worthwhile from a perceptual perspective. Interestingly, the strengths of ray-based and wave-based methods complement each other: ray-based methods are great at simulating high frequencies and early reflections, while wave-based modeling performs better for low frequencies, late reverberation, and diffraction around complex geometry.

In the next article, we will cover how classic reverberation, ray-based, and wave-based methods can be mixed together to form various new hybrid reverberators that have the right flexibility and efficiency for interactive spatial effects.

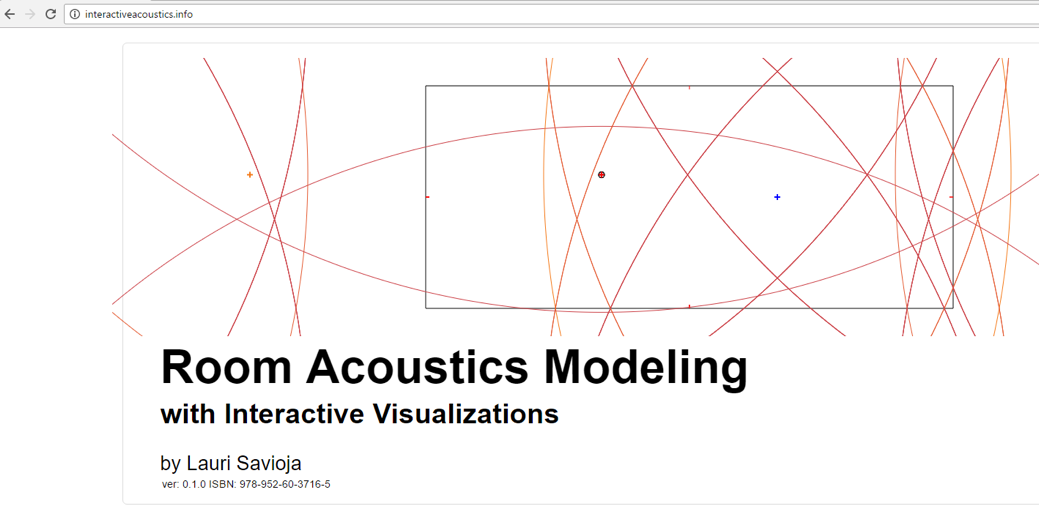

For more information on virtual acoustics, I recommend this great interactive textbook [8], which demonstrates some of the techniques discussed above through interactive animations.

Interactive text book [8]

The University of Edinburgh’s Acoustics and Audio group also has an interesting website [9] with a lot of noteworthy information on wave-based simulation and how it can be used to model not just rooms but musical instruments as well.

Footnotes:

[1] http://sat.qc.ca/en/albums/symposium-ix-2016#lg=1&slide=14

[2] http://sat.qc.ca/en/albums/symposium-ix-2016#lg=1&slide=17

[3] http://interactiveacoustics.info/html/GA_RT.html

[4] http://www.acoustics.ed.ac.uk/group-members/brian-hamilton/

[5] C. Webb, "Computing virtual acoustics using the 3D finite difference time domain method and Kepler architecture GPUs", In Proceedings of the Stockholm Music Acoustics Conference, pages 648-653, Stockholm, Sweden, July 2013.

[6] Nikunj Raghuvanshi and John Snyder. 2014. Parametric wave field coding for precomputed sound propagation. ACM Trans. Graph. 33, 4, Article 38 (July 2014), 11 pages. http://dx.doi.org/10.1145/2601097.2601184

[8] http://interactiveacoustics.info/

[9] http://www.ness.music.ed.ac.uk/
