In a recent project, our company got the chance to prototype an acoustic simulation in Wwise for a client's future office space, in a building that had not yet been built. Our task was to help the client understand how the new building would sound: more specifically, how areas such as open office environments and closed meeting rooms would sound at different times of the day, with varying intensities. Our task was not to measure the building acoustically or to produce data-exact results of how the building resonates, but rather to simulate how it feels and could sound at different spots in the building. We had fun exploring the potential of using Wwise for simulating acoustics.

Setup

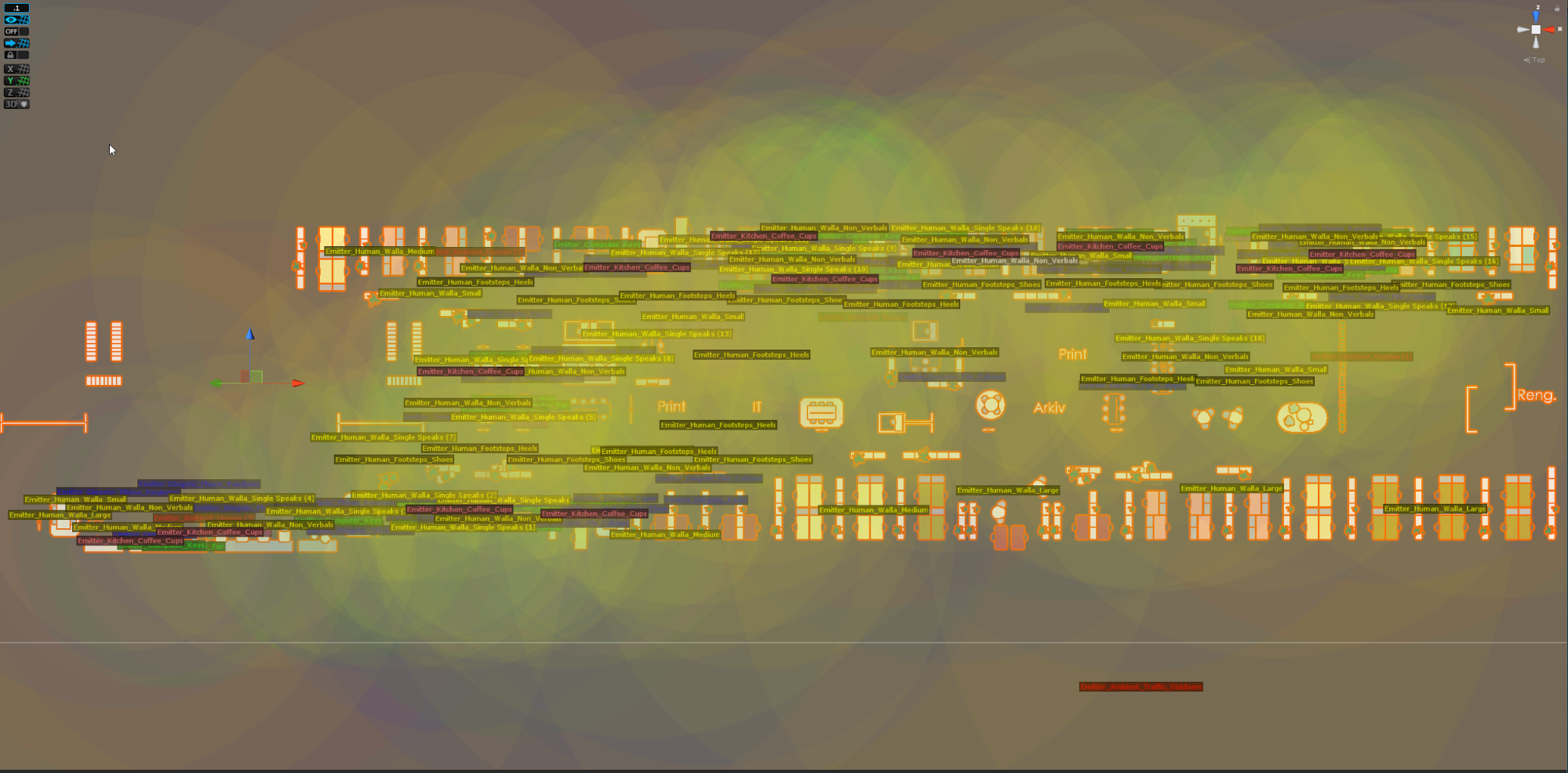

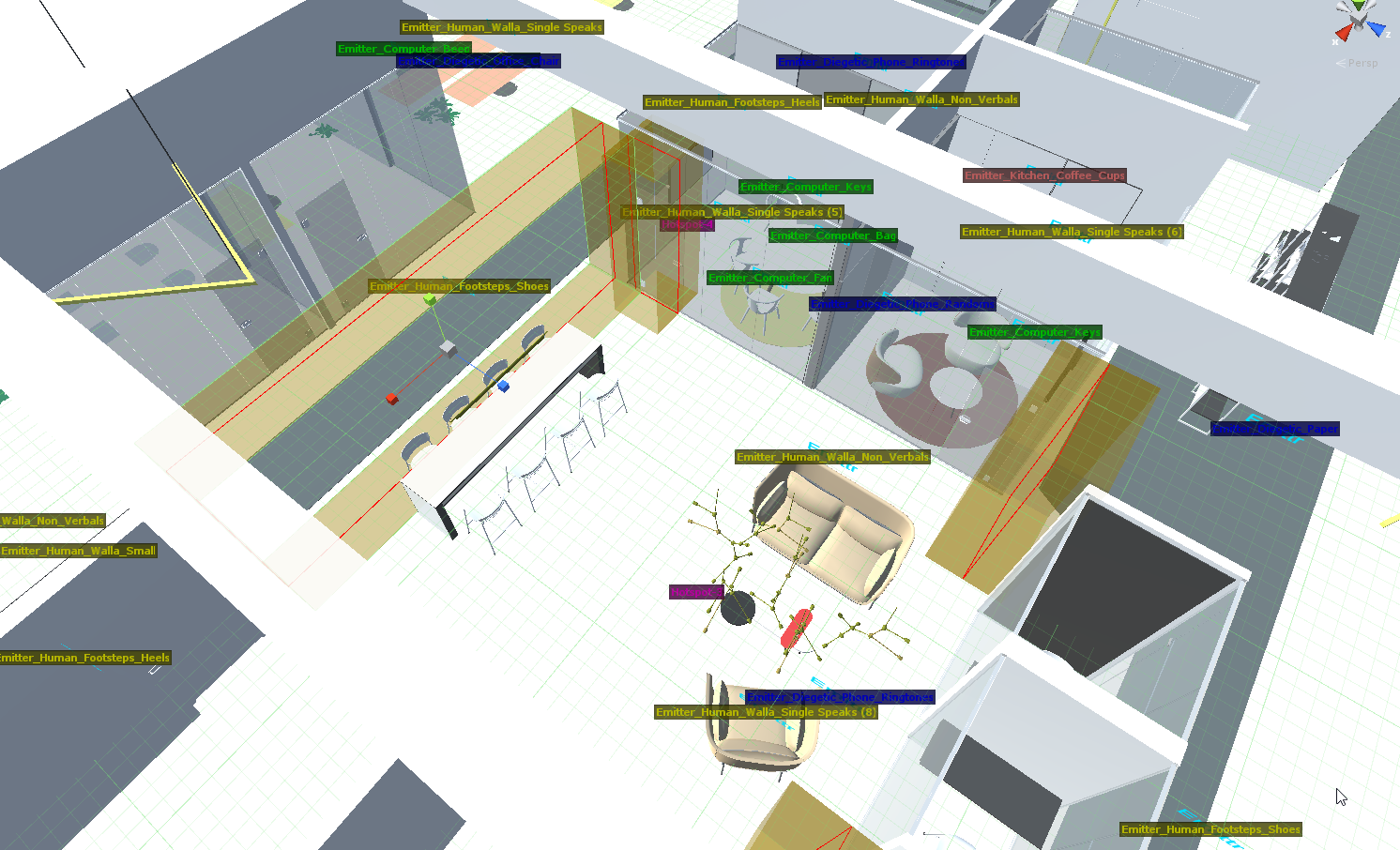

It was important to us that the client could relate to the soundscape, so we reconstructed a believable office environment, sound by sound, with a large number of emitters. By placing single sounds (e.g. coffee cups, office chair creaks, ventilation) in realistic positions all around the virtual office, we had a foundation for a dynamic acoustic simulation.

Gizmos in Unity of emitters spread out on the office floor. Colors represent different sound categories.

These single emitters also kept the development phase flexible. With a short deadline and plenty of stakeholders having a say on where and what to present to the client, we could easily teleport around in the ocean of emitters to wherever it made sense, both visually and auditorily, to show off the building to the client. Choosing this path of flexibility, however, does require more work upfront to control the sound mix, much as in open-world games.

Wwise Reflect

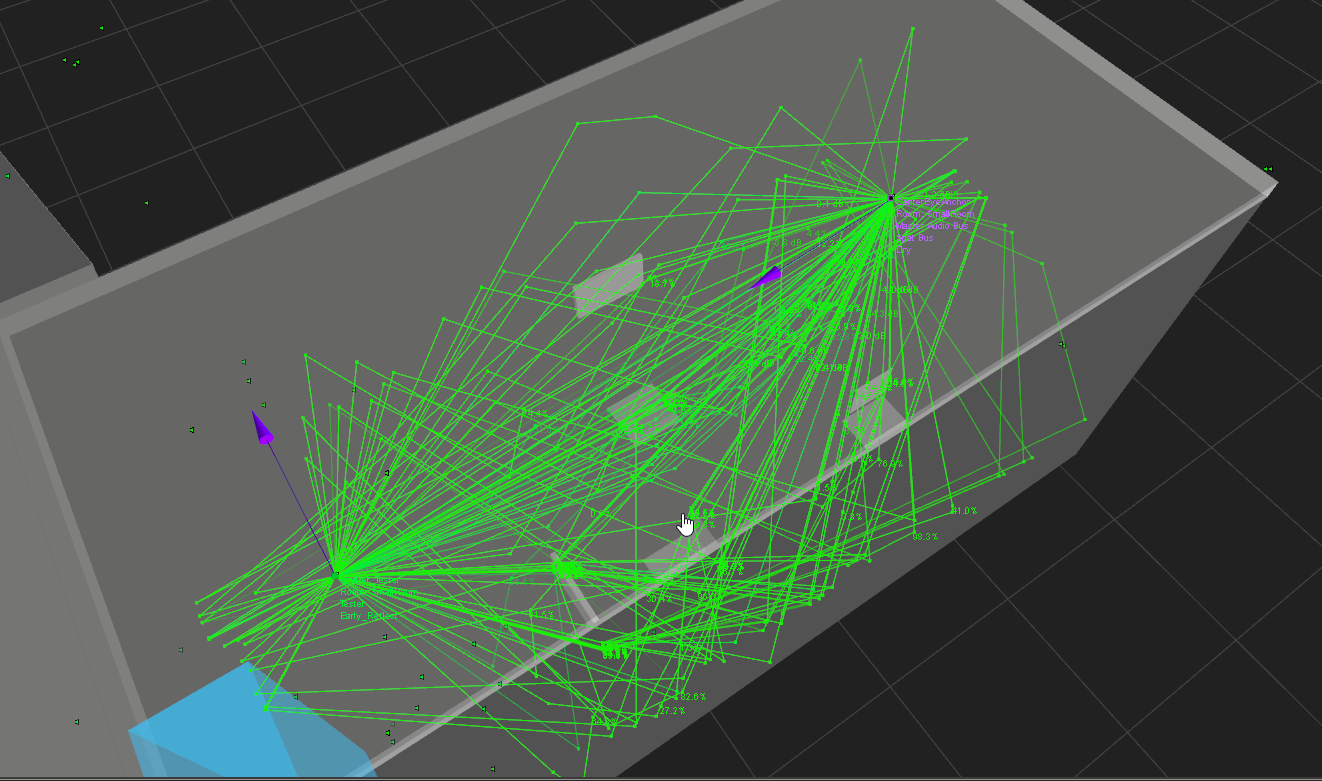

2nd order reflections of one emitter travelling to the listener. For each bounce off a surface, the sound is absorbed partly by the defined acoustic material on the surface.
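As a rough illustration of the caption above, the energy left in a reflection path can be approximated by multiplying the reflected fraction (1 minus the absorption coefficient) for each surface the sound hits. This is a simplified sketch and not how Wwise Reflect computes its output; the function names and coefficient values are hypothetical, and distance attenuation is ignored.

```python
import math


def remaining_energy(absorption_coefficients):
    """Fraction of sound energy left after bouncing off each surface in turn.

    Each coefficient is the fraction absorbed by one surface (0.0 to 1.0).
    Hypothetical helper for illustration; distance attenuation is ignored.
    """
    energy = 1.0
    for alpha in absorption_coefficients:
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("absorption coefficient must be between 0 and 1")
        energy *= 1.0 - alpha
    return energy


def energy_to_db(fraction):
    """Convert an energy fraction to a level change in decibels."""
    return 10.0 * math.log10(fraction) if fraction > 0 else float("-inf")


# 2nd order path: an assumed carpet (0.30) followed by assumed glass (0.05).
left = remaining_energy([0.30, 0.05])
print(f"{left:.3f} of the energy remains ({energy_to_db(left):.2f} dB)")
```

The more absorbent the materials along the path, the quieter the reflection that reaches the listener.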

For the client presentation, we chose to listen to the office from 6 hotspots in the simulation, each showing a different auditory challenge. The hotspots were not fixed positions, as the user could choose to move around. In practice, though, the freedom to move was used mostly for triangulating sounds. Being able to lean forward while turning the head helps a lot in understanding the environment and getting a sense of directionality. Wwise Reflect helped create spatial awareness.

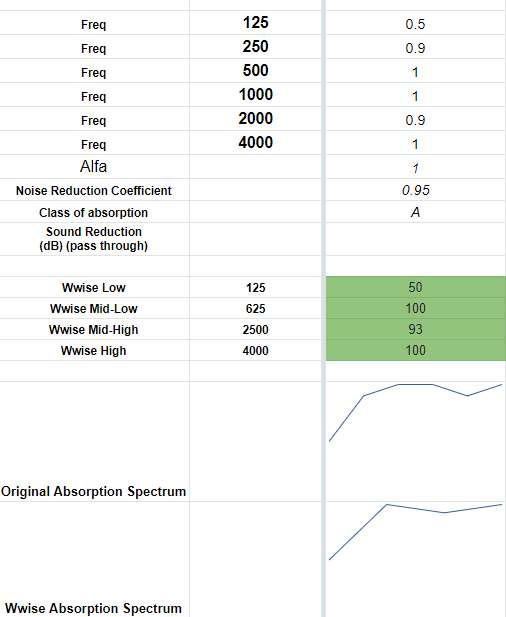

Wwise Reflect focuses on early reflections, which help a user understand room size and shape. A nifty little conversion spreadsheet let us convert real acoustic material coefficients into Wwise, which made it possible to use those exact materials and let Wwise bounce early reflections off them.
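A conversion like the one described could look roughly like the sketch below. It assumes that material data comes as absorption coefficients per octave band and that the target is four absorption percentages (low, mid-low, mid-high, high). The grouping of octave bands into those four bands, the function name, and the sample values are assumptions for illustration, not the contents of our spreadsheet.

```python
# Assumed grouping of standard octave bands (Hz) into four texture bands.
# This grouping is illustrative; check it against the Wwise documentation.
BAND_GROUPS = {
    "low": (125, 250),
    "mid_low": (500, 1000),
    "mid_high": (2000,),
    "high": (4000,),
}


def to_texture_percentages(octave_coefficients):
    """Average octave-band absorption coefficients (0..1) into four percentages (0..100)."""
    result = {}
    for band, frequencies in BAND_GROUPS.items():
        values = [octave_coefficients[f] for f in frequencies]
        mean = sum(values) / len(values)
        # Clamp to the valid range before scaling to a percentage.
        result[band] = round(min(max(mean, 0.0), 1.0) * 100.0, 1)
    return result


# Example values for a hypothetical carpet; not measured data.
carpet = {125: 0.05, 250: 0.10, 500: 0.20, 1000: 0.40, 2000: 0.60, 4000: 0.65}
print(to_texture_percentages(carpet))
```

Averaging is the simplest way to merge bands; a weighted mean could be used instead if some octave bands matter more for the material in question.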

Wwise Convolution

In our experience, the characteristics of a room come from its late reflections: the reverb. To simulate this in Wwise, we turned to Wwise Convolution Reverb, which essentially takes a (real) reverb and applies it to dry sounds. In our situation, we could not go and record the room reverberation, because the building was not built yet. Instead, we built 3D models of the areas around the 6 hotspots and used a third-party tool to generate first-order ambisonic impulse responses (IRs) for each of them. The impulse responses could be imported into Wwise Convolution to create late reflections on the sounds in those areas. This meant we could get a good understanding of the acoustical characteristics from the digital model. The 3D models for generating the IRs were simple, without too many small details, as the third-party software had its limitations.
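To make the idea of applying an impulse response to a dry sound concrete, here is a minimal sketch of direct convolution on a single channel. It is for illustration only: a production convolution reverb typically uses FFT-based processing for efficiency, and the sample values below are made up.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution of a dry signal with an impulse response.

    Every input sample triggers a scaled copy of the impulse response,
    and the overlapping copies are summed into the output.
    """
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            out[i + j] += sample * tap
    return out


# Made-up data: two clicks in the dry signal, a short decaying "room".
dry = [1.0, 0.0, 0.0, 0.5]
ir = [1.0, 0.5, 0.25]
wet = convolve(dry, ir)
print(wet)
```

Each click in the dry signal is followed by the decaying tail of the impulse response, which is what gives the dry sound the character of the room.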

Model of one hotspot for generating simulated impulse response. Orange cone is the listener position for the impulse response.

Obviously, the IR is only as good as its model, so we put a disclaimer on these reflections when the client listened to them, as the models lacked details that would exist in a real situation. These could be smaller objects like monitors, mouse pads, plants, lights, etc., which contribute to a shorter reverb because they absorb sound and stop it travelling. Even with the simpler models, the convolution reverb sounded quite real, and the calculated reverberation times aligned roughly with our expectations. After visually comparing the calculations to similarly shaped real-world rooms, we felt comfortable presenting the late reflections to the client with the disclaimer.
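One common way to sanity-check a reverberation time is Sabine's formula, RT60 ≈ 0.161 · V / A, where V is the room volume in cubic metres and A is the sum of each surface area times its absorption coefficient. The sketch below assumes that formula; it is not necessarily what the third-party tool computes, and the room dimensions and coefficients are hypothetical.

```python
def sabine_rt60(volume_m3, surfaces):
    """Estimate RT60 in seconds using Sabine's formula: 0.161 * V / A.

    surfaces is a list of (area_m2, absorption_coefficient) pairs.
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / total_absorption


# Hypothetical 6 m x 4 m x 3 m meeting room; coefficients are illustrative.
volume = 6.0 * 4.0 * 3.0
bare_room = [
    (24.0, 0.30),  # carpeted floor
    (24.0, 0.10),  # ceiling
    (60.0, 0.05),  # walls
]
# Same room with 10 m2 of extra absorbing objects (furniture, plants, ...).
furnished_room = bare_room + [(10.0, 0.50)]

print(f"bare: {sabine_rt60(volume, bare_room):.2f} s")
print(f"furnished: {sabine_rt60(volume, furnished_room):.2f} s")
```

The furnished variant comes out shorter, which matches the point above: details missing from a simplified model tend to make the simulated reverb longer than the real one.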

Controlling emitters with Rooms & Portals

With over 170 emitters spread out in a virtual office building that had over 130 acoustically reflective surfaces, we culled the emitters that were too far away from the listener. We could have used the built-in virtualization in Wwise, but for the sake of a visual overview we simply hid non-playing emitters via Unity scripts. Even then, plenty of emitters would still be playing. Using Wwise Rooms and Portals to address this saved resources: by separating emitters into different auditory rooms that corresponded to the rooms of the 3D model, we could minimize the CPU resources spent on playing these sounds.
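The distance culling itself is simple. The project used Unity scripts for it; the sketch below shows the same idea in Python so it can be run on its own. The `Emitter` class, the function name, and the 15 m threshold are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Emitter:
    name: str
    position: tuple  # (x, y, z) in metres
    active: bool = True


def cull_by_distance(emitters, listener_position, max_distance):
    """Mark emitters inactive when farther than max_distance; return the active ones."""
    for emitter in emitters:
        distance = math.dist(emitter.position, listener_position)
        emitter.active = distance <= max_distance
    return [e for e in emitters if e.active]


# Hypothetical scene: two nearby sounds and one at the far end of the floor.
emitters = [
    Emitter("coffee_cup", (2.0, 0.0, 1.0)),
    Emitter("chair_creak", (5.0, 0.0, 3.0)),
    Emitter("ventilation", (40.0, 2.5, 10.0)),
]
audible = cull_by_distance(emitters, (0.0, 0.0, 0.0), max_distance=15.0)
print([e.name for e in audible])
```

Running the check each time the listener teleports to a new hotspot keeps the active set small without touching the emitter placement.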

Sounds from other rooms are channeled through portals, which limits reverb processing.

When emitter sounds from other rooms travel through portals towards the listener, the portal itself becomes the emitter of these sounds. This is where the system saves resources, because it does not have to process reflections of the sounds in other rooms. Well, at least not to the same extent as for the emitters that are in the same room as the listener.

Client presentation

For the presentation, we used an Oculus Rift and a pair of Beyerdynamic DT770 Pro headphones in a quiet demo room. Using headphones meant we needed a spatialization plugin on the bus chain to properly filter emitters and create believable directionality. We used Resonance Audio for Wwise, although other spatializers, like the Oculus Spatializer, are recommended as well. Spatializers tend to color the sounds, as HRTF models never truly fit any one person but are usually based on an average ear model. Still, the gain is larger than the pain.

The presentation yielded 5 important conclusions about the acoustics that the client could carry forward in designing the interior of the office. The conclusions revolved around having more absorbing materials in some of the meeting rooms and more obstructions in the open office environments. The real takeaway from testing the simulation and from the presentation was the importance of introducing a workplace culture around sound and noise in the open environments. After hearing, via the simulation, how easily sounds can propagate and affect several workers, the client had a foundation for consciously deciding which noises are okay and which are not, and for making workers aware of it. For example, chatting and telephone calls made standing up should take place in other parts of the building, while discussing work sitting down at the desks could avoid disturbing others thanks to the physical obstructions in place.

Other technical learnings from the project

- Make sure to route buses so that you can solo the dry direct sound, the early reflections, and the late reflections separately. Furthermore, the new 3D Meter visualiser made it really easy to understand the mix while profiling. This also helped the client understand, visually and auditorily, how sounds are reflected and what the consequences are. See a video of the 3D meters in action here.

- Build and test on hardware early. VR and spatializers are more resource hungry, so monitor plugins with the Wwise Advanced Profiler. It was critical to run at a smooth 90 FPS on the visual side, so that there was as little visual disturbance as possible and the focus could stay on the audio.

- Be aware of the many possibilities and challenges of new features in Wwise. As Wwise is advanced, expect setting up Reflect with Rooms and Portals to be rather complex, but something that yields great results in the end.

- Wwise Reflect sounded pretty cool and helped us understand how some of the rooms in the model would require more absorbing materials on the actual walls in order to lower early reflections. Play around with it!

Comments

John Grothier

February 27, 2019 at 06:21 am

Great work! I am just finishing university and it is very inspiring to see people take advantage of this software and offer new, creative application to different professional fields. I'll be following awe's work from now on.

jean-michel shanghai

March 04, 2019 at 11:30 am

Thanks for this article! Any chance you might have a link to a video from which we could hear a binaural rendering with multiple audio sources (voices, coffee cups, office chair creaks, air conditioning, etc) while moving throughout the space?

Louis-Xavier Buffoni

March 06, 2019 at 10:29 am

Awesome! I wish I could see your Wwise project and profiling in action!