Part 1: Distance Modeling and Early Reflections

Part 2: Diffraction

Part 3: Beyond Early Reflections

In the previous blog posts, we looked at how designers can hook into acoustic phenomena pertaining to the early part of the impulse response (IR), and author the resulting audio to their liking using Wwise Spatial Audio. It is now time to do the same with the later part of the IR.

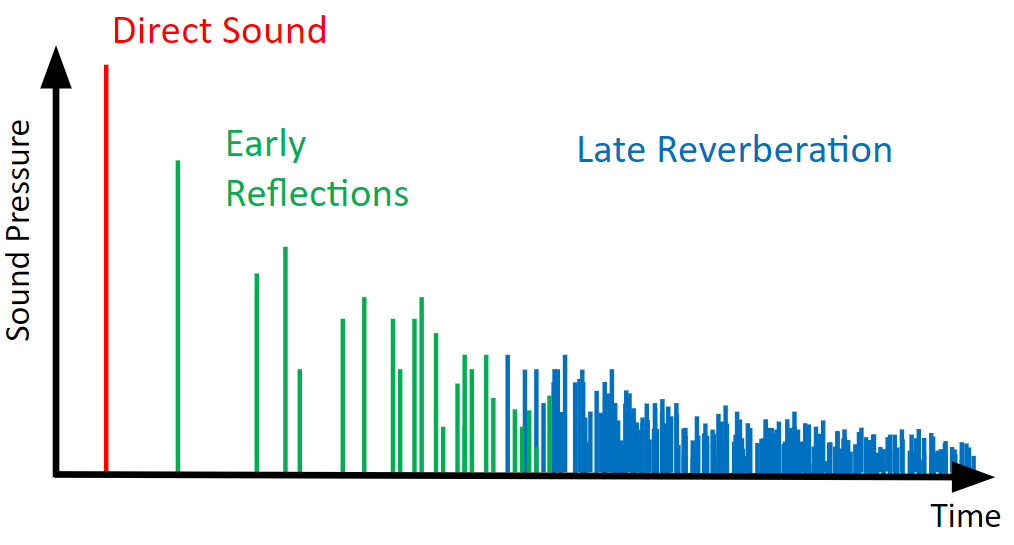

Figure 1 - Impulse response [20]

Environmental Sends with Rooms and Portals

The Wwise Spatial Audio approach to late reverberation design is very similar to the traditional way of authoring environments using auxiliary sends, that is, by tweaking the settings of your favorite reverb effects to match a game’s visuals and aesthetics. In fact, it is identical; the only difference is that the engine spatializes the reverbs for you and brings them to life through Rooms and Portals.

Rooms are a lightweight extension of the traditional auxiliary send method, in which you dedicate an auxiliary bus with a reverb effect to each environment or environment type, and send voices to the bus of the environment they (or the listener) are in. Portals act as connectors between rooms. Sounds excite their respective room and propagate through a network of rooms and portals towards the listener. In terms of signal flow, they send to their room's auxiliary bus and reach the listener via a chain of auxiliary sends. Portals handle diffraction, as well as the spatialization and spread of adjacent rooms’ reverberant fields. Our teammate Nathan provides a great overview of the system in his blog [21].
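To make the "chain of auxiliary sends" concrete, here is a minimal sketch that treats rooms as graph nodes and portals as edges, then finds which room buses a voice passes through on its way to the listener. The room names and the `PORTALS` list are hypothetical, and a breadth-first search (fewest portals) is an assumption made for illustration; it is not how Wwise Spatial Audio actually computes its paths, which also accounts for diffraction and multiple routes.

```python
from collections import deque

# Hypothetical level layout: each portal connects two rooms.
PORTALS = [("Outside", "Hallway"), ("Hallway", "Kitchen"), ("Hallway", "Bedroom")]


def build_graph(portals):
    """Adjacency list of rooms; portals are bidirectional."""
    graph = {}
    for a, b in portals:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    return graph


def send_chain(graph, emitter_room, listener_room):
    """Rooms whose auxiliary buses a voice traverses, emitter's room first.

    Breadth-first search returns the path crossing the fewest portals.
    An empty list means no portal path reaches the listener's room.
    """
    previous = {emitter_room: None}
    queue = deque([emitter_room])
    while queue:
        room = queue.popleft()
        if room == listener_room:
            path = []
            while room is not None:
                path.append(room)
                room = previous[room]
            return path[::-1]
        for neighbor in graph.get(room, []):
            if neighbor not in previous:
                previous[neighbor] = room
                queue.append(neighbor)
    return []


print(send_chain(build_graph(PORTALS), "Kitchen", "Bedroom"))
```

Running it prints `['Kitchen', 'Hallway', 'Bedroom']`: the voice sends to the Kitchen bus, which sends to the Hallway bus through one portal, which in turn sends to the Bedroom bus heard by the listener.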

Spatial Audio Paradigms

The raycasting engine discussed in the first two blog posts of this series and the rooms and portals system described above follow two distinct paradigms, each with its own design workflows and performance considerations. The former follows a paradigm which we shall call Geometrical Acoustic Simulation, and the latter follows another paradigm which we shall call Reverb Effect Control from Acoustic Parameters.

Geometrical Acoustic Simulation

Geometrical Acoustic Simulation consumes triangles and materials, and designers’ controls are essentially material absorption, as well as distance and diffraction curves (in the case of Wwise).

The cost of ray-based methods grows exponentially as they are extended to render the later part of the IR. In fact, naive extensions of these methods even fail to reproduce late reverberation because of insufficient echo density, resulting in a “grainy” or “sandy” sound. Indeed, in real life, “surface scattering and edge diffraction components tend to overshadow specular reflections after a few orders of reflection” [22]. This means that, in the end, many more wavefronts reach the listener than simple ray-based models predict, and than the simplified geometry they use allows.
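The exponential growth is easy to see by counting specular paths the brute-force way. The sketch below counts image sources in a naive image-source tree: the first bounce can hit any wall, and every later bounce any wall except the one just hit. This is a textbook upper bound before pruning invisible or coincident images, not a description of what Wwise Reflect actually computes; the function names are hypothetical.

```python
def naive_image_sources(num_walls, order):
    """Image sources of exactly `order` specular bounces, before any pruning."""
    if order == 0:
        return 1  # the direct path
    # First bounce: any wall. Each later bounce: any wall but the previous one.
    return num_walls * (num_walls - 1) ** (order - 1)


def total_image_sources(num_walls, max_order):
    """All image sources from the direct path up to and including `max_order`."""
    return sum(naive_image_sources(num_walls, k) for k in range(max_order + 1))


# A six-walled shoebox, as in Audio 1 below.
print(naive_image_sources(6, 16))  # 183105468750
```

For a six-walled room, first order yields 6 images, second order 30, and 16th order about 1.8e11, which is why practical engines prune, cap the order, or switch to other techniques for the tail.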

Overcoming this difficulty by increasing the level of geometric detail down to the microscopic scale is impractical. Instead, ray-based approaches often add a stochastic component to their models to obtain more plausible results, just as graphics rely on shaders and diffuse lighting techniques, and not on meshes alone, to look good.
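One common form of stochastic component is to replace the sparse high-order reflections with noise shaped by an exponential decay. The sketch below generates such a broadband tail, assuming only a target reverberation time (RT60, the time for a 60 dB drop, i.e. a factor of 1000 in amplitude). The function names are hypothetical, and real renderers typically shape the noise per frequency band and match it to the simulated energy; this only shows the principle.

```python
import math
import random


def decay_envelope(t_s, rt60_s):
    """Amplitude envelope that falls by 60 dB (a factor of 1000) after rt60_s seconds."""
    return math.exp(-math.log(1000.0) * t_s / rt60_s)


def stochastic_tail(rt60_s, duration_s, sample_rate, seed=0):
    """White Gaussian noise shaped by the exponential decay envelope.

    A fixed seed keeps the output reproducible.
    """
    rng = random.Random(seed)
    n = int(duration_s * sample_rate)
    return [rng.gauss(0.0, 1.0) * decay_envelope(i / sample_rate, rt60_s)
            for i in range(n)]


tail = stochastic_tail(rt60_s=1.2, duration_s=1.5, sample_rate=48000)
```

Unlike a sparse set of specular taps, every sample of this tail carries energy, which is what avoids the "grainy" texture of Audio 1.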

Audio 1 - IR made of 16th-order, specular-only reflections. It was generated by feeding a digital impulse into a hacked version of Wwise Reflect, using a slightly trapezoidal shoebox room with diffraction disabled.

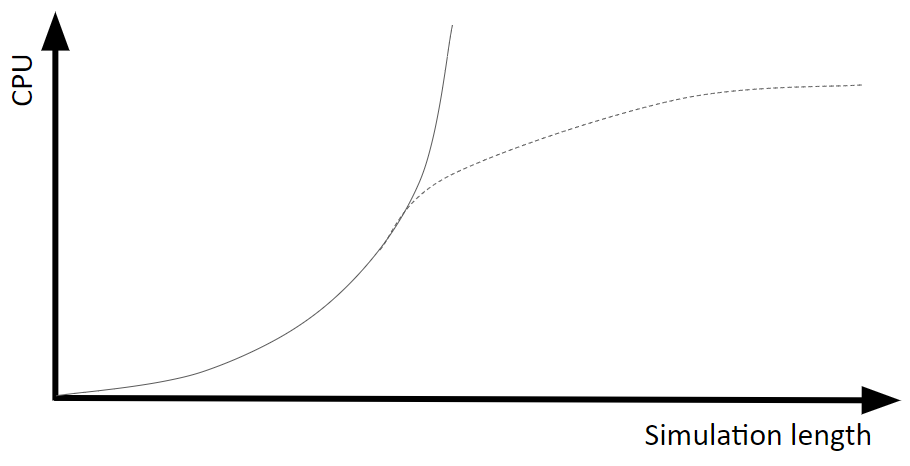

Figure 2 - Illustration of the CPU cost versus simulation length. Simulation length loosely correlates with reverberation time, although a longer simulation also means that rays interact with more geometry. The solid line represents the brute-force approach and shows that computing late reverberation accurately is exponentially more costly. The dotted line shows how approximations help keep performance under control in practice.

The sound propagation technology from Oculus follows this paradigm. It uses ray tracing to compute high-order phenomena and render both early reflections and late reverberation [23]. Presumably, it uses several techniques to avoid the exponential complexity of the latter. Designers mostly interact with material properties to tweak the sound.

Reverb Effect Control from Acoustic Parameters

This second paradigm consumes “acoustic parameters” and renders IRs using reverb effects. Designers’ controls are reverb effect settings and send levels, and acoustic parameters come into play to modulate these settings at run-time. This approach is fairly cheap, as most of the CPU is spent processing the reverb effect rather than computing sound propagation.
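The run-time part of this paradigm boils down to mapping an acoustic parameter onto an effect setting through a designer-authored curve, much like an RTPC. Below is a minimal sketch assuming a purely linear, clamped curve and a hypothetical "openness" parameter in [0, 1] driving a send level in dB; actual Wwise RTPC curves offer other segment shapes, and the parameter name is illustrative only.

```python
from bisect import bisect_right


def eval_curve(points, x):
    """Evaluate a piecewise-linear curve through (x, y) points sorted by x.

    Inputs outside the curve's range are clamped to the end points.
    """
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Hypothetical designer curve: acoustic "openness" -> reverb send level (dB).
SEND_CURVE = [(0.0, 0.0), (0.5, -6.0), (1.0, -24.0)]
print(eval_curve(SEND_CURVE, 0.75))  # -15.0
```

The designer owns the curve's shape, while the acoustic parameter only decides where on the curve the game currently sits, which is why this approach is so cheap at run-time.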

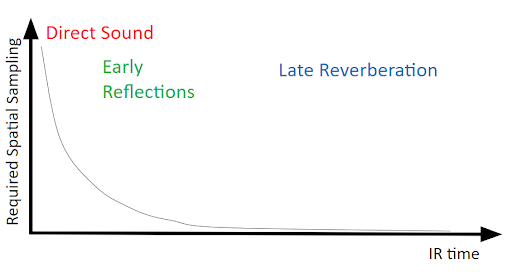

By definition, acoustic parameters depend on the location of the listener and/or emitters in the game. But their nature, and the process by which they are obtained, vary greatly across solutions. The required spatial sampling varies as well, but it normally obeys the following rule of thumb: more information is required to inform a reverb effect about the early part of the IR than about the late part.

Indeed, the early part of the IR consists of audio events whose directionality is noticeable, and this directionality strongly depends on the location of the emitter and listener in the environment. For example, the directionality of the direct (diffracted) path depends entirely on their relative positions and on any obstacles between them. The directionality of early reflections depends on the same factors, but also on their proximity to nearby walls. On the other hand, the late part of the IR has weak directionality and consists of wavefronts that hit the listener from apparently random directions. A coarser sampling of the space is thus sufficient for it.

Figure 3 - Illustration of the spatial sampling density of acoustic parameters required to accurately render different parts of the impulse response with reverb effect control by geometry-informed acoustic parameters. The early part requires a much finer sampling than the late part, which is somewhat insensitive to emitter and listener positions, provided that they are in the same room/environment and reasonably close to each other. Higher spatial density does not necessarily mean higher CPU usage, but it does mean more memory, and more work to derive the parameterization.
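The sensitivity of early reflections to wall proximity can be checked with a single image source. This 2D, top-down sketch mirrors the emitter across an infinite wall on the plane x = wall_x and compares the arrival direction of the direct path with that of the first-order reflection. Both helper names are hypothetical, and the sketch skips the visibility test a real image-source method needs (checking that the reflection point lies on the actual wall segment).

```python
import math


def azimuth_deg(listener, point):
    """Direction of `point` seen from `listener`, in degrees, counterclockwise from +x."""
    return math.degrees(math.atan2(point[1] - listener[1], point[0] - listener[0]))


def mirror_across_wall_x(emitter, wall_x):
    """First-order image source of `emitter` for an infinite wall at x = wall_x."""
    return (2.0 * wall_x - emitter[0], emitter[1])


listener, emitter = (0.0, 0.0), (0.0, 4.0)
print(azimuth_deg(listener, emitter))                                 # 90.0
print(azimuth_deg(listener, mirror_across_wall_x(emitter, 2.0)))      # 45.0
print(round(azimuth_deg(listener, mirror_across_wall_x(emitter, 1.0)), 2))  # 63.43
```

Moving the wall from 2 m to 1 m leaves the direct path unchanged but swings the reflection from 45° to about 63.4°, which is why parameters for the early IR need a fine spatial sampling.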

The solution developed by Microsoft’s Project Acoustics is one of reverb effect control by geometry-informed acoustic parameters. The parameterization process is automatic and uses offline wave-based simulations. The extracted parameters are used to drive reverb effects and send levels [24]. Since the solution covers the complete IR, including the direct path, a different set of parameters is computed for each pair of emitter and listener positions on a fairly fine grid (on the order of a fraction of a meter).

In contrast, the acoustic parameters of Wwise Spatial Audio rooms essentially boil down to which auxiliary bus is assigned to a given room. This assignment is not currently derived from geometry; instead, designers make it manually, based on their sense of what that geometry should sound like. Also, there is only one set of acoustic parameters per room, so, referring to the previous discussion, the spatial sampling is very coarse.

The Dual Approach of Wwise Spatial Audio

The following table summarizes the discussion above.

| | Geometrical Acoustic Simulation | Reverb Effect Control from Acoustic Parameters |
|---|---|---|
| Designer control | Distance and diffraction curves, material absorption | Reverb settings and send levels |
| Engine input | Geometry and tagged materials | Acoustic parameters, somehow derived from the geometry |
| Cost | CPU cost is relatively high and increases exponentially with the length of the simulation | Cheap run-time; parameterization sampling needs to be increasingly fine to capture the early IR |

Table 1 - Comparison of the Geometrical Acoustic Simulation and Reverb Effect Control from Acoustic Parameters paradigms.

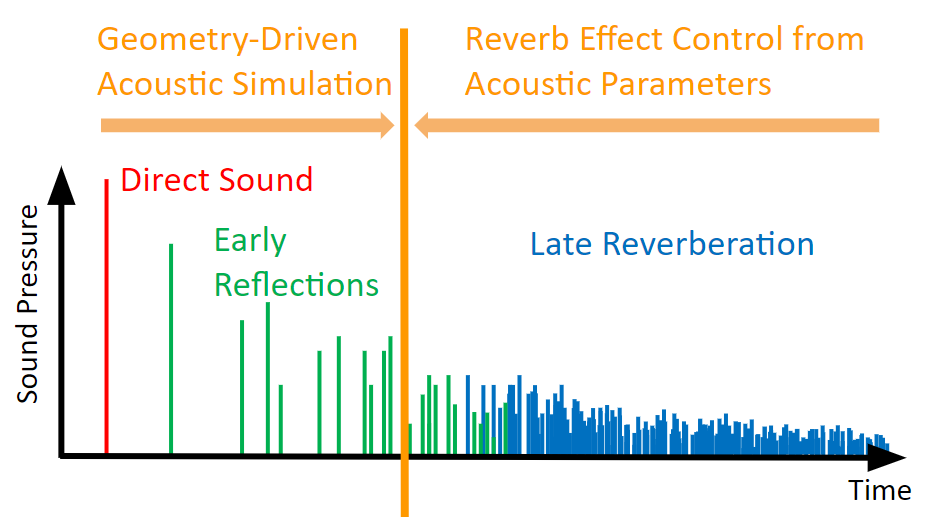

Wwise Spatial Audio tries to get the best of both worlds. Using Spatial Audio Rooms, it favors the reverb control approach for late reverberation, which keeps the number of acoustic parameters minimal. Meanwhile, it relies on geometry-driven simulation only for the direct path [25] and early reflections, where the CPU cost of this approach is lower.

Figure 4 illustrates this duality.

Figure 4 - Dual approach. Wwise Spatial Audio favors geometry-driven simulation for the early part of the IR where CPU cost is low, and reverb control for the late part where required spatial sampling is coarse.
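Conceptually, the dual approach splits the IR in time: simulated specular taps cover the early part and a reverb covers the rest. The sketch below assembles such a hybrid IR from a list of hypothetical `(delay_s, gain)` taps and a precomputed tail, using a hypothetical crossover time. It is only a picture of Figure 4; in Wwise the two parts are not summed into one IR but produced by separate signal paths (early reflections from the geometry engine, late reverberation from the room's reverb on its auxiliary bus).

```python
def hybrid_ir(early_taps, late_tail, crossover_s, sample_rate):
    """Combine sparse early taps and a dense late tail around a crossover time.

    early_taps: (delay_s, gain) pairs, e.g. from a geometric simulation.
    late_tail:  samples from a reverb, placed starting at the crossover.
    Taps at or after the crossover are dropped: the tail takes over there.
    """
    crossover = int(round(crossover_s * sample_rate))
    ir = [0.0] * (crossover + len(late_tail))
    for delay_s, gain in early_taps:
        i = int(round(delay_s * sample_rate))
        if i < crossover:
            ir[i] += gain
    for j, sample in enumerate(late_tail):
        ir[crossover + j] += sample
    return ir


ir = hybrid_ir([(0.0, 1.0), (0.002, 0.5), (0.02, 0.3)], [0.1, 0.1], 0.01, 1000)
```

Moving the crossover later shifts work from the cheap reverb to the costly simulation, which is the trade-off discussed in the Outlook below.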

Reverb Rotation

I said earlier that there is only one set of acoustic parameters per room, which means that rooms don’t take the positions of emitters and listeners within them into account to inform reverberation. However, rooms also have a defined orientation, which means they can take their orientation relative to the listener into account. And they do, effortlessly, thanks to the magic of 3D busses.

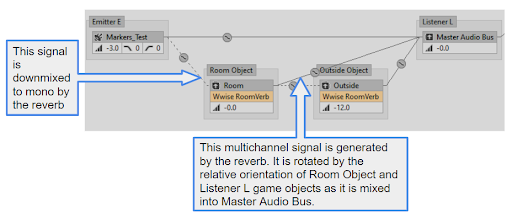

Let me explain. Reverbs output a multichannel signal on the auxiliary bus. This auxiliary bus is tied to the Room game object, whose orientation is defined by the game. When the listener is inside that room, the auxiliary bus signal acts as a source with a spread close to 100%. When the bus's 3D Spatialization is set to Position and Orientation mode, the sound field is rotated according to the orientation of the listener relative to the room [26]. The surround sound emanating from the reverb thus feels tied to the world instead of to the listener.

Figure 5 - Screenshot of the Voice Graph in the Wwise Profiler, showing 3D busses and where rotation of the multichannel reverb output signal based on the relative orientation of the room and listener game objects occurs. Note that all emitters sent to a room reverb are downmixed to mono by the reverb, thus losing their individual directionality [27].
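For a first-order Ambisonics (B-format) signal, rotating the sound field about the vertical axis is a small matrix operation on the X and Y channels. The sketch below shows the idea, assuming x points forward, y to the left, azimuths increase counterclockwise, and the relative yaw is the listener's yaw minus the room's yaw. It is a generic illustration of sound field rotation, not Wwise's internal 3D bus implementation; the function names are hypothetical. W and Z are unaffected by a yaw rotation, and the result holds for both SN3D and FuMa normalization since X and Y share the same weight.

```python
import math


def relative_yaw(listener_yaw_rad, room_yaw_rad):
    """Listener yaw expressed in the room's frame."""
    return listener_yaw_rad - room_yaw_rad


def rotate_foa_yaw(w, x, y, z, yaw_rad):
    """Rotate one first-order B-format frame to compensate a listener yaw.

    A source at room-frame azimuth phi ends up at phi - yaw_rad relative to
    the listener: X' = X cos + Y sin, Y' = Y cos - X sin.
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return w, c * x + s * y, c * y - s * x, z


# A reflection straight ahead in the room; the listener turns 90 degrees left,
# so the reflection should now come from the listener's right (Y close to -1).
print(rotate_foa_yaw(1.0, 1.0, 0.0, 0.0, relative_yaw(math.pi / 2, 0.0)))
```

If the room and listener share the same yaw, the rotation is the identity, which is why an isotropic reverb sounds the same however the listener turns.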

Now, why would you rotate the sound field output by a reverb? Most artificial reverberators, by design, produce an isotropic signal (that is, energy arriving equally from all directions), so if you rotate them you should not hear any difference. This is the case with the LR module of the Wwise RoomVerb, for example. However, the ER module of that same reverb effect produces patterns with clear directionality, even if they do not stem from the game’s geometry. Also, studies show that real-life reverberation may be anisotropic, for example in a corridor or tunnel [28]. So if you loaded a multichannel IR recorded in a real tunnel into the Convolution Reverb on a Spatial Audio Room bus, the orientation of the recorded tunnel would follow that of the room in the game. The effect may be subtle, but a reverb whose orientation stays dynamically consistent with the graphics should noticeably improve immersion. Can you hear it?

Audio 2 - Two binaural renderings of the first-order Ambisonics IR recording of the Innocent Railway Tunnel found on the OpenAir website [29]. Both were rendered using the Wwise Convolution Reverb followed by the Google Resonance binaural plug-in, but one has undergone a rotation. Can you tell the difference? Can you guess the orientation of the tunnel?

Outlook / Future Work

Blurring the Line Between Early Reflections and Late Reverberation

In our experience, every audio team takes its own approach to integrating spatial audio into its game, opting into different features and using different workflows, aesthetics, and systems, and teams strive to develop these in ways that are unique to their game and give it an edge. With Wwise Spatial Audio, we hope to facilitate this. Referring back to the two paradigms presented above, we will thus be working on both fronts:

A. Simulation: Being able to simulate the complete IR from the geometry and material characteristics.

B. Reverb Control: Further inform reverb effects about game geometry.

Hopefully you will end up with more flexibility in setting the crossover between these two paradigms, one that fits best with your games’ systems and aesthetics as well as your team’s workflows and preferences.

A. Simulation

The first step toward simulating the complete IR is to keep improving the performance of our raycasting engine. On this note, we have already developed a feature, which we are eager to share with you in the next release of Wwise, that lets you tame the performance of the geometry engine to fit your budget.

B. Reverb Control

A shortcoming of our approach compared to others is that rooms and portals are distinct from geometry and need to be built manually.

1. Geometry pre-processing: We are working very hard to lessen this issue in our Unreal Engine integration. We are adding room and portal components and Blueprint support, and you might have glimpsed some cool tools being developed to simplify geometry into rooms and portals at our recent livestream event [30].

2. We are also working on providing a good “first guess” for mapping rooms to reverbs. The goal is to extract high-level reverberation features from room shapes to get you up and running as fast as possible, while still allowing you to tweak rooms further if needed.

And we will be providing more tools to inform your reverbs of geometry via RTPC. Stay tuned!
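As an example of a high-level reverberation feature that can be read straight off a room's shape, Sabine's classic formula estimates RT60 from the room's volume and total absorption. The sketch below assumes a box-shaped room with a single mean absorption coefficient; the dimensions and the coefficient are made-up inputs, and this is not a description of the tools Audiokinetic is developing. Sabine's estimate also assumes a diffuse field and fairly low absorption, so it is only a starting point that a designer would then refine, for instance by feeding it to a reverb's decay time through an RTPC.

```python
def sabine_rt60(length_m, width_m, height_m, mean_absorption):
    """Sabine estimate of RT60 (seconds) for a box-shaped room.

    RT60 = 0.161 * V / A, with V the volume (m^3) and A the equivalent
    absorption area (m^2 sabins) = surface area * mean absorption coefficient.
    """
    volume = length_m * width_m * height_m
    surface = 2.0 * (length_m * width_m + length_m * height_m + width_m * height_m)
    return 0.161 * volume / (surface * mean_absorption)


# A 10 m x 8 m x 3 m room with fairly absorbent surfaces (hypothetical inputs).
print(round(sabine_rt60(10.0, 8.0, 3.0, 0.2), 3))  # 0.721
```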

Simplification and Generalization

I started this blog series by quoting Simon Ashby: “[Designers are] in control of the mixing rules, but the system has the final word.” This means that you are given freedom to "reinterpret" physical quantities (e.g. distance, diffraction angle) into audio signal processing, through various tools and curves. And we have seen how important it is to allow you to do so. But afterwards you need to hope that it works well in all circumstances. Does it? How do we help you ensure that it does? Potential avenues are:

- Give even more control, by providing tools to “override” problematic cases;

- Improve the underlying acoustic models so that they generalize more naturally;

- Provide simpler controls to "bend the rules", albeit perhaps less flexible ones.

These avenues pull in very different directions. Needless to say, we need you to be part of the conversation to steer development in the direction that suits you.
