In this white paper, we aim to provide an overview of different efforts, pioneered by researchers in both industry and academia, to develop advanced use cases where sound is used in innovative ways to enhance automotive Human-Machine Interfaces (HMI) and improve the overall user experience. We will also discuss how converting data from on-board systems, such as navigation or advanced driver assistance systems (ADAS), into sound cues can lead to a safer driving experience in complex driving situations.

We will review the technical elements used to realize auditory displays, as well as a few application domains such as infotainment HMI, safety, and guidance. Finally, we will extract high-level software requirements for integrating these use cases into automotive system software stacks.

Background: Auditory Displays in the Automotive Context

Before discussing auditory displays, we need to establish some basic terminology. The term sonification refers to the process (algorithm or technique) used to transform data into an acoustic signal in order to convey information. Auditory displays are systems that use sonification but also take into account the broader context, including the user, the audio interfaces (such as loudspeakers or headphones), and the situational context (background noise, user task, and so on).

In the automotive context, this means that real-time information from the embedded systems either directly generates sound or modifies the rendering of other audio streams, giving users dynamically varying information that represents selected aspects of the real-time data. Based on this acoustic feedback, the user may react, take appropriate action, or change behavior, which in turn affects the data received from the car systems. The result is a feedback loop that can be used, for example, to guide users towards better driving habits.
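As an illustration of the simplest form of sonification, parameter mapping, the sketch below maps one data value onto pitch. The function names, the 220 Hz to 880 Hz range, and the choice of following distance as the data source are all hypothetical. The mapping is exponential so that equal steps in the data produce equal musical intervals.

```python
def map_to_pitch(value, v_min, v_max, f_min=220.0, f_max=880.0):
    """Map a data value onto a frequency in Hz (parameter-mapping sonification).

    The value is clamped to [v_min, v_max] and mapped exponentially, so equal
    steps in the data give equal pitch intervals (pitch perception is roughly
    logarithmic in frequency).
    """
    if v_max <= v_min:
        raise ValueError("v_max must be greater than v_min")
    x = min(max(value, v_min), v_max)
    t = (x - v_min) / (v_max - v_min)      # normalized position in [0, 1]
    return f_min * (f_max / f_min) ** t    # geometric interpolation


def gap_tone(gap_m):
    """Hypothetical example: a shorter following distance gives a higher tone.

    The scale is inverted so that 5 m maps to 880 Hz and 50 m maps to 220 Hz.
    """
    return map_to_pitch(55.0 - gap_m, 5.0, 50.0)
```

Calling `gap_tone` on every new sensor reading and feeding the result to an oscillator closes the loop described above: the driver hears the tone rise, backs off, and the tone falls again.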

Automotive Human-Machine Interface Evolution

As the automotive market shifts towards connected and software-defined cars, it becomes clear that the car is no longer an isolated bubble. With SMS, email, social networks, and weather and traffic information continuously available, and with the growing number of on-board systems and applications for multimedia and navigation combined with ADAS, many different functions now compete for the driver’s attention.

Unfortunately, the majority of these systems require a form of visual attention that can significantly increase the driver’s cognitive load in unsafe ways. In fact, studies have shown that visual inattention is the main factor behind 78% of crashes and 65% of near crashes (NHTSA, 2007).

So, what can be done to address visual overload situations? The answer lies in offloading some of the tasks to other senses where cognitive bandwidth is available. The auditory channel is indeed an effective modality to inform users about ongoing secondary processes without hindering the primary task (Belz, 1999).

“Each of our senses can be thought of as a "channel" through which information is received and processed. Each channel has limited capacity in terms of the amount of information that can pass through it at any one time.” (Auditory Display Wiki)

Understanding the Auditory System

In order to properly design auditory displays, we must first understand the strengths and weaknesses of the auditory system.

Strengths

Unlike vision, the auditory system is always active (even during sleep) and does not require any specific precondition (such as the lighting that vision depends on). Another advantage of auditory perception is that it does not require the user to face a particular direction or to focus visual attention on a specific location.

Hearing is a peripheral sense that can detect sounds coming from all directions with reasonably good localization accuracy. Perhaps as a vestige of evolution, sound is extremely effective at grabbing our attention, because humans have always relied on hearing to detect potential threats. Furthermore, our auditory system can naturally filter out unimportant sounds, enabling us to locate and attend to specific sounds even against a noisy background. The natural alerting properties of sound also suggest applications for audio in multimodal displays, such as focusing attention on key areas of complex visual displays (Eldridge, 2005).

Unlike some other senses (smell or taste, for example), hearing is highly temporal: when the stimulus goes away, the associated sensation immediately goes away too. Hearing is also relatively resistant to fatigue, and it can easily carry multiple layers of information at once, thanks to the multidimensional nature of sound (pitch, loudness, spatial location, timbre, etc.).

Weaknesses

Like other senses, hearing also has some disadvantages when used as an information channel. Understanding these limitations is key in avoiding pitfalls when designing auditory displays.

For sounds to be understood, they must obviously first be heard. Interfering sounds from the environment or from simultaneous speech communication can mask the intended acoustic message. Good design practice therefore recommends separating sounds that may be played at the same time along dimensions such as frequency, spatial location, and sound quality (timbre), so that each can be perceived distinctly without masking effects.
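One way to check frequency separation during design is to compare cues on a perceptual frequency scale rather than in raw hertz. The sketch below uses the Glasberg and Moore (1990) ERB-rate scale and flags pairs of cues whose dominant frequencies are closer than a minimum number of ERBs. Reducing each cue to a single dominant frequency is a simplification, and the 3-ERB threshold and cue names are hypothetical design values, not a standard.

```python
import math


def erb_rate(freq_hz):
    """Glasberg & Moore (1990) ERB-rate scale: number of ERBs below freq_hz."""
    return 21.4 * math.log10(1.0 + 0.00437 * freq_hz)


def well_separated(f1_hz, f2_hz, min_erbs=3.0):
    """Heuristic: are two dominant frequencies at least min_erbs apart?

    min_erbs is a hypothetical design threshold, not a standardized value.
    """
    return abs(erb_rate(f1_hz) - erb_rate(f2_hz)) >= min_erbs


def find_conflicts(cues, min_erbs=3.0):
    """Return (name, name) pairs of cues whose dominant frequencies are too close."""
    names = sorted(cues)
    conflicts = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if not well_separated(cues[a], cues[b], min_erbs):
                conflicts.append((a, b))
    return conflicts


# Hypothetical cue palette: dominant frequency of each cue in Hz.
CUES = {"lane_departure": 1000.0, "blind_spot": 1100.0, "nav_ping": 2500.0}
```

With this palette, `find_conflicts(CUES)` reports only the blind spot and lane departure cues, which sit less than one ERB apart and would be prone to masking each other if played together.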

We can discriminate between thousands of different real-life sounds; however, our ability to remember the meaning of abstract sounds is limited. It is therefore recommended to limit the number of sounds used in any given context, typically to between 5 and 8 (Jordan, 2009).

Because we cannot easily shut off our hearing, as we can with vision by closing our eyes or simply looking elsewhere, frequently played sounds can become annoying. Good design is therefore key to avoiding annoyance while still meeting the functional objective of the sound message, such as alerting the driver.

Choosing and Combining Modalities

A finding common to a wide range of research is that different modalities are complementary. In many cases, reinforcing messages (particularly alerts) across modalities (e.g., visual, auditory, and haptic) produces a more cohesive message that the user is more likely to understand accurately and quickly.

When a single modality is used, it is important to know which tasks or purposes each modality suits best (Sanders, 1993). The auditory modality is well suited to events that require the user's immediate attention (e.g., alerts), since a sense of urgency is relatively easy to build into sound design. Auditory displays should focus on short, simple messages that refer to time-dependent information.
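A common sound design heuristic is that higher pitch, faster pulse repetition, and more pulses are heard as more urgent. The sketch below encodes that heuristic as a mapping from an urgency level to a pulse pattern. The `AlertPattern` type and all numeric ranges are hypothetical tuning choices for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertPattern:
    pitch_hz: float   # fundamental frequency of each pulse
    pulse_ms: int     # duration of each pulse
    gap_ms: int       # silence between pulses
    repeats: int      # number of pulses per burst


def alert_pattern(urgency: float) -> AlertPattern:
    """Map urgency in [0, 1] to a pulse pattern (hypothetical ranges).

    Higher urgency gives a higher pitch, shorter gaps (a faster rhythm),
    and more pulses per burst.
    """
    u = min(max(urgency, 0.0), 1.0)
    pitch = 500.0 + u * 1500.0       # 500 Hz .. 2000 Hz
    gap = round(400 - u * 320)       # 400 ms .. 80 ms
    repeats = 1 + round(u * 4)       # 1 .. 5 pulses
    return AlertPattern(pitch_hz=pitch, pulse_ms=100, gap_ms=gap, repeats=repeats)
```

Keeping urgency as a single continuous input lets the same sound family scale from a gentle notification to a critical warning, rather than designing unrelated sounds for each level.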

Sonification Techniques

Commonly Used Sounds

Commonly encountered sounds in auditory displays can be grouped into a few categories.

The first type of sounds are called auditory icons. These sounds have an obvious and well-known association with a physical reference object. The shutter sound played on virtually every smartphone when taking a picture (even though there is no longer a physical shutter) is a good example of an auditory icon. The advantage of this type of sound is that the user does not need to learn its meaning. Its disadvantages, however, are limited design flexibility (since the association with the object must be preserved) and the fact that such a reference does not always exist (Stevens & Keller, 2004).

A second category of commonly used sounds in auditory displays is earcons (the auditory equivalent of visual icons). These abstract, often synthetic, sounds can be designed specifically for each application and are built from short, recurring melodic and rhythmic motifs ranging from simple to complex. Typical examples include HMI feedback sounds for touchscreen interaction (e.g., home, back, selection, accept, and cancel), speech recognition (e.g., listening on/off), and so on (Blattner, Greenberg, & Sumikawa, 1989).
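Because earcons are built from motifs, related sounds can be derived from one another by simple transformations, which helps users learn a whole family at once. The sketch below is loosely inspired by the idea of earcon families: a motif is a list of (MIDI note, duration) pairs, and family members are derived by transposition or reversal. The motif, the transformations chosen, and the names `ACCEPT`, `CANCEL`, and `LISTEN_ON` are hypothetical.

```python
from typing import List, Tuple

Note = Tuple[int, int]  # (MIDI note number, duration in ms)


def motif(root: int, intervals: List[int], durations: List[int]) -> List[Note]:
    """Build a short melodic motif from a root note and semitone intervals."""
    if len(intervals) != len(durations):
        raise ValueError("intervals and durations must have the same length")
    return [(root + i, d) for i, d in zip(intervals, durations)]


def transpose(earcon: List[Note], semitones: int) -> List[Note]:
    """Derive a related earcon by shifting every note, keeping the rhythm."""
    return [(n + semitones, d) for n, d in earcon]


def reverse(earcon: List[Note]) -> List[Note]:
    """Play the motif backwards, e.g. to pair 'accept' with 'cancel'."""
    return list(reversed(earcon))


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


# Hypothetical HMI family sharing one short three-note shape.
ACCEPT = motif(72, [0, 4, 7], [60, 60, 120])  # rising major triad from C5
CANCEL = reverse(ACCEPT)                      # same notes, falling
LISTEN_ON = transpose(ACCEPT, 5)              # same shape, a fourth higher
```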

Another important sound category is speech feedback (usually produced with text-to-speech technology), used for example in navigation or whenever more complex user guidance is necessary. Field experts have proposed several other sound categories, but the ones above account for the vast majority of sounds heard in the automotive context.

Spatial Auditory Displays

Humans can perceive sound location accurately, which allows auditory displays to carry additional information in the spatial dimension using three-dimensional sound positioning algorithms. In ADAS collision avoidance, for example, sound localization cues can rapidly indicate where a hazard is located outside the car. Spatial characteristics can also be used to position sound feedback from different systems within the car, helping users identify which system they are interacting with (e.g., the instrument cluster versus the head unit).

For alert sounds, using the spatial dimension does more than convey location: when the sound is played from a location that makes sense in the context of the signal, it is also easier to distinguish from other sounds and more attention-grabbing (Jacko, 2012).
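Full three-dimensional rendering (for example with HRTF-based binaural processing or vector base amplitude panning) is beyond a short example, but the basic idea of steering a cue towards a hazard can be shown with pairwise constant-power amplitude panning in the horizontal plane. The sketch assumes a hypothetical four-loudspeaker cabin layout with speakers at 45° intervals from straight ahead; the layout and function name are illustrative.

```python
import math

# Hypothetical four-speaker cabin layout: azimuth in degrees, clockwise,
# 0 = straight ahead of the driver.
SPEAKERS = [("FR", 45.0), ("RR", 135.0), ("RL", 225.0), ("FL", 315.0)]


def pan_gains(azimuth_deg: float) -> dict:
    """Pairwise constant-power panning between the two nearest speakers.

    Returns a gain per speaker. The squared gains sum to 1, so perceived
    loudness stays roughly constant as the source moves around the cabin.
    """
    az = azimuth_deg % 360.0
    gains = {name: 0.0 for name, _ in SPEAKERS}
    n = len(SPEAKERS)
    for i in range(n):
        name_a, az_a = SPEAKERS[i]
        name_b, az_b = SPEAKERS[(i + 1) % n]
        span = (az_b - az_a) % 360.0      # angular gap between the pair
        offset = (az - az_a) % 360.0      # source position relative to speaker a
        if offset <= span:
            t = offset / span             # 0 at speaker a, 1 at speaker b
            gains[name_a] = math.cos(t * math.pi / 2)
            gains[name_b] = math.sin(t * math.pi / 2)
            return gains
    return gains  # not reached for a closed ring of speakers
```

For a hazard detected straight ahead (`pan_gains(0.0)`), the front-left and front-right speakers share the cue equally; for one to the left (`pan_gains(-90.0)`), the cue moves to the left pair.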

Automotive Application Examples

HMI Audio Feedback

Sound, as an immersive phenomenon perceived in the background, is well suited to unifying the various subsystems into a more cohesive HMI. Simply adding unobtrusive sound feedback with a common aesthetic across applications can produce a more holistic user experience.

Auditory Guidance Examples

Navigation systems frequently use text-to-speech technology to give drivers turn-by-turn guidance instructions. Speech is ideally suited to such complex instructions, and panning should be consistent with the instructions (for example, "turn left" should be heard on the left side) to reinforce comprehension. Additional sounds can further enhance this important interaction with the driver. For example, continuous feedback on the time remaining before a maneuver, combined with sound localization cues, can further clarify the direction information. This augmented auditory guidance frees the driver from having to look at the navigation system to determine precisely where to turn. It is particularly useful where exits are closely spaced or roundabouts are complicated, where it can greatly simplify the navigation task and therefore enhance safety.
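The continuous "time remaining" feedback described above can be sketched as a repeating tick whose rate increases as the maneuver approaches, panned towards the turn direction. The function name, the 10-second horizon, and the interval range are hypothetical tuning values, not taken from any particular navigation system.

```python
from typing import Optional, Tuple


def guidance_cue(distance_m: float, speed_mps: float, turn: str,
                 horizon_s: float = 10.0,
                 min_interval_s: float = 0.15,
                 max_interval_s: float = 1.0) -> Optional[Tuple[float, float]]:
    """Return (tick_interval_s, pan) for an upcoming turn, or None if silent.

    pan is -1.0 for full left and +1.0 for full right. Ticks speed up as
    the time remaining before the maneuver shrinks. All thresholds are
    hypothetical tuning values.
    """
    if turn not in ("left", "right"):
        raise ValueError("turn must be 'left' or 'right'")
    if speed_mps <= 0.0:
        return None                       # stationary: no countdown
    time_left = distance_m / speed_mps
    if time_left > horizon_s:
        return None                       # too far away to start the cue
    t = max(time_left, 0.0) / horizon_s   # 1.0 at the horizon, 0.0 at the turn
    interval = min_interval_s + t * (max_interval_s - min_interval_s)
    pan = -1.0 if turn == "left" else 1.0
    return interval, pan
```

Basing the cue on time rather than distance keeps the countdown equally useful at city and motorway speeds.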

Similarly, lane guidance information and traffic notifications can be provided with dedicated acoustic cues that are designed to be unobtrusive and to blend well with entertainment content, so that drivers can keep their eyes on the road at all times.

Entertainment Processing

One interesting concept is to modify the existing entertainment content, instead of adding new sounds, so that information is perceived intuitively without being obtrusive, which makes users more likely to accept such systems (Hammerschmidt, 2016). For example, front/back panning of entertainment content can notify drivers when they exceed the speed limit, gradually guiding them towards safer driving habits. Slightly degrading the audio quality of the entertainment signal (for example, by removing high frequencies) can signal more severe situations. Similar concepts can also be used to improve driving performance, for example by giving the driver energy-efficiency information.
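The sketch below illustrates this kind of entertainment processing as a mapping from speed-limit excess to two rendering parameters: a front/back fader position and, for larger excesses, a low-pass cutoff. It is loosely modeled on the idea described above; the thresholds, the linear curves, and the 16 kHz to 4 kHz sweep are hypothetical and are not values from Hammerschmidt (2016).

```python
def entertainment_shaping(speed_kmh: float, limit_kmh: float,
                          max_excess_kmh: float = 20.0,
                          severe_excess_kmh: float = 15.0):
    """Return (fader, lowpass_hz) for the entertainment stream.

    fader: 0.0 = normal front/back balance, 1.0 = fully shifted to the rear.
    lowpass_hz: None for full bandwidth; otherwise a cutoff that falls as the
    excess grows beyond the 'severe' threshold. All constants are hypothetical.
    """
    excess = max(0.0, speed_kmh - limit_kmh)
    fader = min(excess / max_excess_kmh, 1.0)
    if excess <= severe_excess_kmh:
        return fader, None
    # Beyond the severe threshold, sweep the cutoff from 16 kHz down to 4 kHz
    # over the next 10 km/h of excess.
    k = min((excess - severe_excess_kmh) / 10.0, 1.0)
    return fader, 16000.0 - k * 12000.0
```

The audio engine would apply the fader and filter to the existing music stream, so no new sound is introduced until the situation becomes more severe.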

Active Safety Applications

In addition to user guidance, auditory displays have an important role to play in various active safety systems such as ADAS, since auditory warnings can capture and orient attention more effectively than visual warnings (Ho & Spence, 2005).

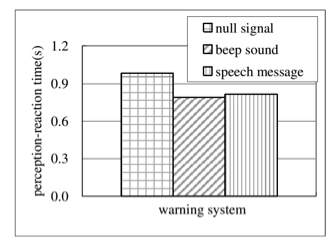

Reaction Time for Collision Avoidance Systems

Many studies have investigated how much different auditory display signals improve reaction time, a key safety metric. Overall, they conclude that drivers generally respond 100 to 200 milliseconds faster than when no sound is used at all. For collision warnings, tones are recommended over speech because they yield faster reactions; speech takes longer to process.
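To put 100 to 200 milliseconds in perspective, the short calculation below converts the reaction time saved into the distance the car covers during that time at a few example speeds (distance = speed × time, with km/h divided by 3.6 to get m/s). It ignores braking dynamics and only illustrates the order of magnitude.

```python
def distance_saved_m(speed_kmh: float, time_saved_s: float) -> float:
    """Distance traveled during the reaction time a warning saves."""
    return speed_kmh / 3.6 * time_saved_s


for v in (50, 100, 130):
    lo, hi = distance_saved_m(v, 0.1), distance_saved_m(v, 0.2)
    print(f"{v} km/h: {lo:.1f} m to {hi:.1f} m")
# 50 km/h: 1.4 m to 2.8 m
# 100 km/h: 2.8 m to 5.6 m
# 130 km/h: 3.6 m to 7.2 m
```

At motorway speeds, the faster response corresponds to several meters of additional margin before braking begins.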

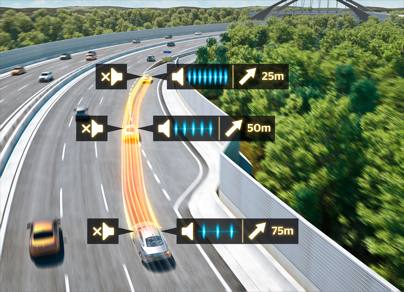

ADAS Collision Avoidance Examples

For blind spot warning systems, auditory displays that overlay the turn-signal indicator sound, for example to signal the presence of a vehicle in the overtaking lane (in the blind spot), can be very effective at enhancing safety. Spatial location can also be used in systems such as lane departure warning and park distance control (or surround view applications).
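The two functions below sketch the decision logic for these cues. The first overlays a warning on the turn-signal tick only when the driver signals towards an occupied blind spot, returning the side from which to play it. The second is a hypothetical park distance mapping in which beeps get faster as the obstacle gets closer, turning into a continuous tone at very short range. Function names, ranges, and intervals are illustrative assumptions.

```python
from typing import Optional


def blind_spot_cue(turn_signal: Optional[str], left_occupied: bool,
                   right_occupied: bool) -> Optional[str]:
    """Return the side on which to overlay a warning on the turn-signal tick.

    turn_signal is 'left', 'right', or None. A warning is raised only when
    the driver signals towards an occupied blind spot.
    """
    if turn_signal == "left" and left_occupied:
        return "left"
    if turn_signal == "right" and right_occupied:
        return "right"
    return None


def park_beep_interval_s(distance_m: float, max_range_m: float = 1.5,
                         continuous_below_m: float = 0.3) -> Optional[float]:
    """Hypothetical park distance mapping: closer obstacle, faster beeps.

    Returns None when nothing is in range and 0.0 for a continuous tone.
    """
    if distance_m >= max_range_m:
        return None
    if distance_m <= continuous_below_m:
        return 0.0
    t = (distance_m - continuous_below_m) / (max_range_m - continuous_below_m)
    return 0.1 + t * 0.7  # 0.1 s near the obstacle .. 0.8 s at the edge of range
```

Routing the returned side to the matching cabin loudspeaker (or the sensor's corner, for parking) adds the spatial dimension mentioned above.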

Engine Sound Generation

For a long time, thermal engines have naturally provided a form of implicit auditory feedback that we came to rely on, whether as pedestrians noticing a vehicle coming our way or as drivers gauging the speed and acceleration of the vehicle. With the advent of electric drivetrains, much of this acoustic feedback has vanished. From the perspective of noise pollution and ride comfort, this is a welcome change, but it also brings new challenges for pedestrian safety and for the driver's awareness of the environment.

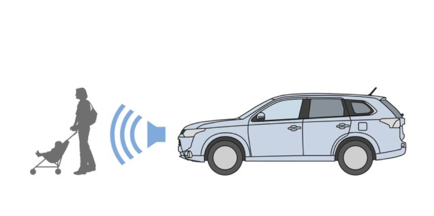

Exterior Sound for Safety (AVAS)

A report from the U.S. National Highway Traffic Safety Administration showed that hybrid electric vehicles are 37% more likely to be involved in crashes with pedestrians and 57% more likely to be involved in crashes with cyclists (NHTSA, 2011). For this reason, many regions have already introduced regulations requiring electric vehicles to include an acoustic vehicle alerting system (AVAS). These systems, equipped with an external loudspeaker, generate exterior sounds at low speed to alert pedestrians. To do so, real-time information (such as speed) is taken from the car's embedded systems to drive interactive sound synthesis algorithms that produce sound signatures customized for each model.
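As an illustration of how speed can drive an exterior sound, the sketch below raises the pitch of an AVAS signature with speed and fades it out near an upper speed limit, above which tire and road noise are assumed to make the vehicle audible on its own. The base pitch, the 20 km/h cutoff, and the fade width are hypothetical parameters; actual operating ranges are set by the applicable regulations and each manufacturer's sound design.

```python
def avas_params(speed_kmh: float, base_pitch_hz: float = 400.0,
                cutoff_kmh: float = 20.0, fade_kmh: float = 5.0):
    """Return (pitch_hz, gain) for an exterior alert sound.

    Pitch rises with speed so pedestrians can hear the vehicle speeding up.
    Gain fades linearly to zero over the last fade_kmh below cutoff_kmh.
    All constants are hypothetical, not regulatory values.
    """
    v = max(speed_kmh, 0.0)
    pitch = base_pitch_hz * (1.0 + v / cutoff_kmh)  # doubles at the cutoff
    if v >= cutoff_kmh:
        return pitch, 0.0
    fade_start = cutoff_kmh - fade_kmh
    gain = 1.0 if v <= fade_start else (cutoff_kmh - v) / fade_kmh
    return pitch, gain
```

In a real system, the returned values would be smoothed over time and applied to a designed sound signature rather than a bare tone.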

Interior Sound for Comfort

Because sound plays a key role in how users perceive a vehicle, electric vehicle manufacturers are also starting to include interior sound generation technologies. These replace the speed and acceleration feedback previously provided by thermal engines, enhancing the driving experience with a sound signature that matches each brand's requirements.
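A common way to structure such interior sound is around engine orders: a virtual engine speed is derived from vehicle data, and each order k contributes a partial at k × RPM / 60 Hz. The sketch below derives a hypothetical virtual RPM from vehicle speed and lets the accelerator position boost the higher orders to convey acceleration. The RPM scaling, idle value, chosen orders, and amplitude curve are all illustrative assumptions.

```python
def virtual_engine_partials(speed_kmh: float, throttle: float,
                            rpm_per_kmh: float = 40.0, idle_rpm: float = 800.0,
                            orders=(2.0, 4.0, 6.0)):
    """Return [(frequency_hz, amplitude), ...] for a synthetic engine tone.

    A hypothetical virtual RPM is derived from vehicle speed. Each engine
    order k produces a partial at k * rpm / 60 Hz. Throttle in [0, 1]
    boosts the higher orders to convey acceleration.
    """
    rpm = idle_rpm + rpm_per_kmh * max(speed_kmh, 0.0)
    th = min(max(throttle, 0.0), 1.0)
    partials = []
    for i, k in enumerate(orders):
        freq = k * rpm / 60.0
        # Lowest order at a fixed level; higher orders grow with throttle.
        amp = 1.0 if i == 0 else (0.5 ** i) * (0.4 + 0.6 * th)
        partials.append((freq, amp))
    return partials
```

Each partial would typically feed an oscillator or a granular sample player; the brand-specific character comes from the designer's choice of orders, curves, and source material.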

Software Requirements

We have discussed numerous applications of auditory displays in the automotive environment. From these applications, we can extract a set of common technology requirements for successfully integrating auditory displays in cars.

Clearly, the capability to dynamically build complex audio graphs with sound synthesis heuristics (looping, sequencing, voice management, and so on) and DSP algorithms will be required. An interactive audio API is required to feed the continuous stream of real-time information provided by the embedded systems to the audio rendering engine.
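The toy sketch below shows the shape of these two requirements together: an audio graph built from processing nodes, and a parameter API through which vehicle data reaches the renderer. It is a single-threaded, plain-Python illustration with hypothetical class and parameter names. A production engine would render in a real-time audio thread, hand parameters across threads safely, and smooth parameter changes, none of which is shown here.

```python
import math
from typing import Dict, List


class Node:
    """A processing node that reads named parameters for each audio block."""

    def process(self, block: List[float], params: Dict[str, float]) -> List[float]:
        raise NotImplementedError


class Sine(Node):
    """Sine oscillator whose frequency is read from the 'freq' parameter."""

    def __init__(self, sample_rate: float = 48000.0):
        self.sr = sample_rate
        self.phase = 0.0

    def process(self, block, params):
        freq = params.get("freq", 440.0)
        out = []
        for _ in block:
            out.append(math.sin(self.phase))
            self.phase = (self.phase + 2 * math.pi * freq / self.sr) % (2 * math.pi)
        return out


class Gain(Node):
    """Scales the incoming block by the 'gain' parameter."""

    def process(self, block, params):
        g = params.get("gain", 1.0)
        return [x * g for x in block]


class Graph:
    """Serial chain of nodes plus a parameter store fed by vehicle data."""

    def __init__(self, nodes: List[Node]):
        self.nodes = nodes
        self.params: Dict[str, float] = {}

    def set_param(self, name: str, value: float) -> None:
        # Called from the vehicle-data side, e.g. when a new speed value arrives.
        self.params[name] = value

    def render(self, frames: int) -> List[float]:
        block = [0.0] * frames
        for node in self.nodes:
            block = node.process(block, self.params)
        return block
```

For example, `Graph([Sine(), Gain()])` with `set_param("freq", 1000.0)` and `set_param("gain", 0.5)` renders a 1 kHz tone at half amplitude; changing the parameters between blocks is how continuous vehicle data would steer the sound.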

To allow manufacturers to differentiate these new audio systems from those of competitors, audio designers will need data-driven systems that fully decouple sound design from engineering integration within the embedded systems. Quick design iteration cycles, as well as tuning of these systems in the context of the car, will also require real-time editing capabilities for workflow efficiency.
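One way to achieve this decoupling is to let the designer own a data file that describes how vehicle inputs map to sound parameters, while the embedded code only evaluates whatever the file describes. The sketch below assumes a hypothetical JSON format in which each parameter names an input signal and a piecewise-linear curve; the format, parameter names, and values are illustrative.

```python
import json
from bisect import bisect_right

# Hypothetical design file authored by a sound designer, not by engineers.
DESIGN_JSON = """
{
  "avas_pitch": {"input": "speed_kmh", "curve": [[0, 400], [10, 550], [20, 800]]},
  "avas_gain":  {"input": "speed_kmh", "curve": [[0, 1.0], [15, 1.0], [20, 0.0]]}
}
"""


def evaluate_curve(points, x):
    """Piecewise-linear interpolation over sorted (x, y) points, clamped at both ends."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def load_design(text):
    """Parse the designer's mapping description."""
    return json.loads(text)


def compute_params(design, inputs):
    """Evaluate every designed parameter from the current vehicle inputs."""
    return {name: evaluate_curve(spec["curve"], inputs[spec["input"]])
            for name, spec in design.items()}
```

Because the engineering side only calls `compute_params`, re-reading the file when it changes would let designers retune curves in the car without rebuilding the embedded software.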

Finally, it will be necessary to prototype and simulate such systems outside the embedded platform for early concept development, and to migrate these concepts efficiently to production platforms without reimplementing them.

Conclusion

To meet the new requirements that auditory displays bring to the automotive context, the automotive sector should look at how other industries have previously addressed similar challenges. The gaming industry, in particular, has pioneered the field of interactive audio for more than 15 years and has developed software tools and processes to design such systems efficiently.

References

- Auditory Display Wiki. (n.d.). http://wiki.csisdmz.ul.ie/wiki/Auditory_Display.

- Belz. (1999). A New Class of Auditory Warning Signals for Complex Systems: Auditory Icons.

- Blattner, M., Greenberg, R., & Sumikawa, D. (1989). Earcons and Icons: Their Structure and Common Design Principles.

- Eldridge, A. (2005). Issues in Auditory Display.

- Fagerlonn. (2011). Designing Auditory Warning Signals to Improve the Safety of Commercial Vehicles.

- Fung. (2007). The study on the Influence of Audio Warning Systems on Driving Performance Using a Driving Simulator.

- Hammerschmidt. (2016). Slowification: An in-vehicle auditory display providing speed guidance through spatial panning.

- Hermann. (2008). Taxonomy and definitions for sonification and auditory display.

- Hermann. (2011). The Sonification Handbook.

- Ho, C., & Spence, C. (2005). Assessing the Effectiveness of Various Auditory Cues in Capturing a Driver's Visual Attention.

- Jacko, J. (2012). Human Computer Interaction Handbook: Fundamentals, Evolving Technologies, and Emerging Applications.

- Jordan. (2009). Design and Assessment of Informative Auditory Warning Signals for ADAS.

- Lundqvist. (2016). 3D Auditory Displays For Driver Assistance Systems.

- Moreno. (2013). The Value of Multimodal Feedback in Automotive User Interfaces.

- Nees. (2011). Auditory Displays for In-Vehicle Technologies.

- NHTSA. (2006). The Impact of Driver Inattention On Near-Crash/Crash Risk.

- NHTSA. (2007). Analyses of Rear-End Crashes and Near-Crashes in the 100-Car Naturalistic Driving Study to Support Rear-Signaling Countermeasure Development.

- NHTSA. (2011). Incidence Rates of Pedestrian and Bicyclist Crashes by Hybrid Electric Passenger Vehicles.

- Sanders. (1993). Human Factors in Engineering and Design.

- Stanton. (1999). Human factors in auditory warnings.

- Stevens, P., & Keller, C. (2004). Meaning from environmental sounds: types of signal-referent relations and their effect on recognizing auditory icons.
