Introduction

Automation is a common approach on large projects.

In a team with hundreds of people, things can easily go wrong during repeated communication and back-and-forth collaboration. An automated workflow reduces the error rate and improves production efficiency.

In industrial production, project teams typically set up tools like build machines and packagers for automated operations, combined with a notification system that publishes the results on a status page and notifies the people involved. This makes quality control and the whole workflow more efficient.

In audio production, we can also automate some manual operations to reduce human intervention and communication. For example, the build machine can package SoundBanks automatically. This avoids the conflicts that arise when multiple people generate SoundBanks locally, and so minimizes upload issues.

As for Wwise automation, the WAAPI (Wwise Authoring API) functions provided by Audiokinetic make it easier for developers to automate operations inside Wwise (there are lots of articles on WAAPI, so I won't go into details here). You can also combine WAAPI with other tools to build an automation chain and deploy it on the packager, making the whole workflow more compact and efficient.

Here, I will describe how to create temporary VO assets automatically with WAAPI and TTS.

Issues in VO Creation & Optimization Ideas

During VO creation, you may encounter time-consuming configuration and high iteration frequency, especially when there is a high demand for VO assets. Without any tool support, the game designer and the sound designer will have to communicate, operate and upload over and over. The whole process is repetitive, monotonous, error-prone and time-consuming.

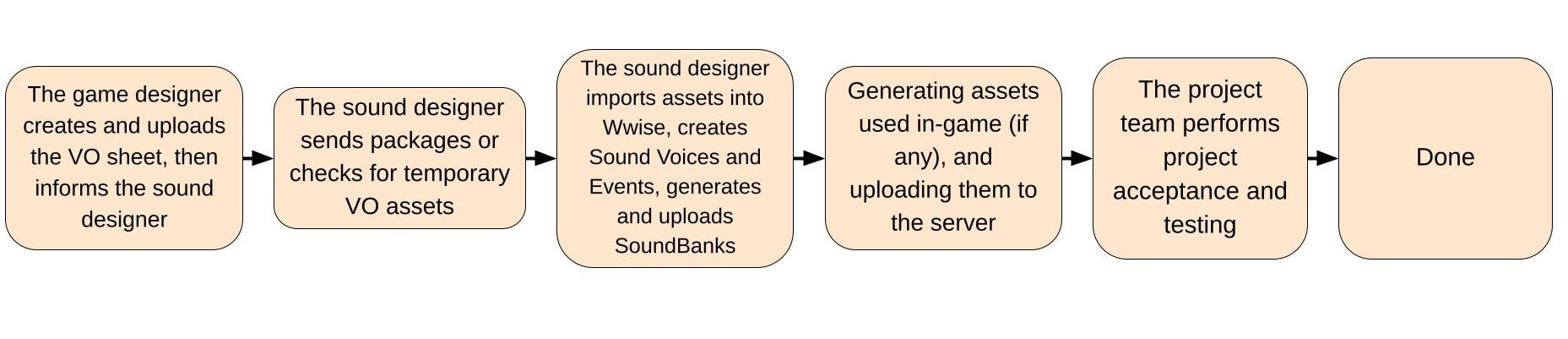

1. The game designer uploads the VO sheet and informs the sound designer;

2. The sound designer adds the assets in Wwise, configures them, uploads them and synchronizes them to the engine;

3. The project team performs project acceptance and testing.

Solving Configuration Issues

In industrial production, modularization is a common approach that can minimize duplicated work, reduce debugging costs, and facilitate coordination and rapid verification. It allows everyone on the team to focus on their own work, thus increasing development efficiency. The general idea is to integrate text, audio, art and other elements into a single module for unified configuration. Once the game designer has finished the text, the VO configuration is finished too, so there is no need to configure VOs separately. Events can be named according to specific rules, as agreed with the game designer in advance.

Solving Communication, Wwise Operation and Uploading Issues

When it comes to "avoiding repeated communication", the most obvious solution is to let the machine handle as much of the human coordination as possible. The collaboration in VO production happens to be very repetitive, so in most cases it can be replaced with an automation workflow.

Here is how I create VO assets automatically: first, identify the repetitive manual operations; then, build an automation workflow around them; finally, encapsulate each step of the workflow into a command line. These command lines involve WAAPI, Microsoft TTS and other related APIs, and are run through uploading and deployment tools like Perforce and Jenkins.

Deploying the Automation Workflow

Setting Rules

1. Specifying the naming convention for Events;

2. Confirming that the header of the VO sheet includes information such as VO name, language, timbre, tone and content;

3. Creating separate Work Units in the Audio and the Events tabs, and creating a folder for AI-generated wav files within the Originals directory;

4. Auditioning and selecting tones provided by TTS, or training your own tone model.
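These rules matter because they fix the paths that the later WAAPI import step relies on. As a rough sketch, assuming a hypothetical `Play_` prefix for Events, hypothetical Work Units named `VO_Temp`, and a hypothetical `TTS` folder under Originals, the arguments for `ak.wwise.core.audio.import` might be assembled like this. The hierarchy names are those of the Wwise versions I am assuming here and may differ in yours, and `run_import` requires the `waapi-client` package and a running Wwise instance with WAAPI enabled.

```python
# Hypothetical project conventions; replace with the rules agreed on by the team.
AUDIO_WORK_UNIT = "\\Actor-Mixer Hierarchy\\VO_Temp"  # Work Unit in the Audio tab
EVENT_WORK_UNIT = "\\Events\\VO_Temp"                 # Work Unit in the Events tab
ORIGINALS_SUBFOLDER = "TTS"                           # folder for AI-generated wav files
EVENT_PREFIX = "Play_"                                # Event naming convention


def event_name(vo_name: str) -> str:
    """Derive the Event name from the VO name in the sheet."""
    return f"{EVENT_PREFIX}{vo_name}"


def build_import_args(wav_dir: str, vo_names: list, language: str) -> dict:
    """Build the arguments for ak.wwise.core.audio.import (no Wwise needed)."""
    imports = []
    for name in vo_names:
        imports.append({
            "audioFile": f"{wav_dir}\\{name}.wav",
            # Create or reuse a Sound Voice object inside the agreed Work Unit.
            "objectPath": f"{AUDIO_WORK_UNIT}\\<Sound Voice>{name}",
            "originalsSubFolder": ORIGINALS_SUBFOLDER,
            # Create a Play Event for the imported object.
            "event": f"{EVENT_WORK_UNIT}\\{event_name(name)}@Play",
        })
    return {
        "importOperation": "useExisting",
        "default": {"importLanguage": language},
        "imports": imports,
    }


def run_import(args: dict):
    """Send the import request to a running Wwise instance."""
    from waapi import WaapiClient  # imported lazily so the helpers above work without it

    with WaapiClient() as client:
        return client.call("ak.wwise.core.audio.import", args)
```

For example, `build_import_args("C:\\VO\\out", ["NPC_Greeting_01"], "English(US)")` yields one import entry whose Event path ends in `Play_NPC_Greeting_01@Play`. Keeping the payload construction separate from the WAAPI call makes the naming rules easy to check on a machine without Wwise.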

Tools Needed

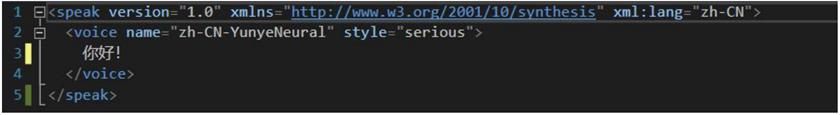

1. The tool for converting the VO sheet into SSML (Speech Synthesis Markup Language) format (recognizable by Microsoft TTS);

Example of the output target

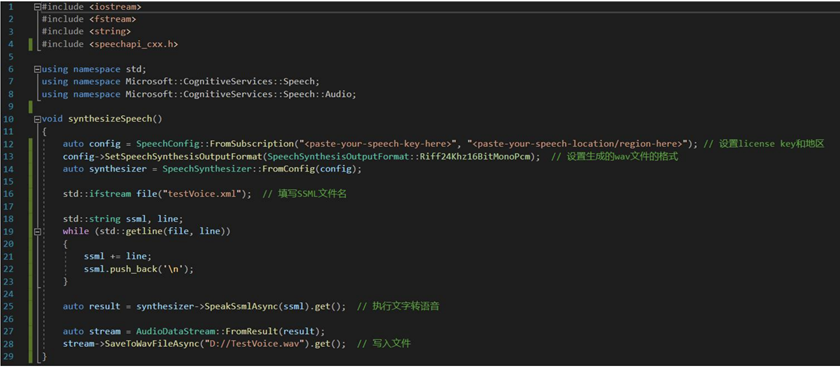

2. The TTS (text-to-speech) tool;

(You need to register and apply for a license key on the official website)

Line 12: Setting the license key & region

Line 13: Setting the format of the generated wav file

Line 16: Filling in SSML file names

Line 25: Performing the TTS conversion

Line 28: Writing to files

3. The WAAPI tool for automatically importing assets, creating Sound Voices and Events;

4. The version control integration (Git, Perforce, etc.), together with the notification mechanism and the process deployment;

5. The frontend playback logic.
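To make the first tool in the list more concrete, here is a minimal sketch of a VO sheet to SSML converter. It assumes the sheet is exported as CSV with hypothetical column names `name`, `language`, `timbre`, `tone` and `content`, that the timbre column holds a TTS voice name, and that the tone column holds a speaking style for the `mstts:express-as` element. Your sheet layout and mapping will likely differ.

```python
import csv
import io
from xml.sax.saxutils import escape, quoteattr

# Hypothetical column names; match them to the header of your own VO sheet.
REQUIRED_COLUMNS = ("name", "language", "timbre", "tone", "content")


def row_to_ssml(row: dict) -> str:
    """Turn one VO sheet row into an SSML document string."""
    return (
        '<speak version="1.0" '
        'xmlns="http://www.w3.org/2001/10/synthesis" '
        'xmlns:mstts="https://www.w3.org/2001/mstts" '
        f'xml:lang={quoteattr(row["language"])}>'
        f'<voice name={quoteattr(row["timbre"])}>'
        f'<mstts:express-as style={quoteattr(row["tone"])}>'
        f'{escape(row["content"])}'  # escape &, < and > in the spoken text
        '</mstts:express-as></voice></speak>'
    )


def sheet_to_ssml(csv_text: str) -> dict:
    """Map each VO name in the sheet to its SSML string."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"VO sheet is missing columns: {missing}")
    return {row["name"]: row_to_ssml(row) for row in reader}
```

Each resulting string can be written to its own SSML file named after the VO, which keeps the wav file, the Wwise object and the Event traceable back to the same row of the sheet.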

Deploying the Build Machine

To use the build machine, you need to install the Wwise environment and deploy the following processes to the machine:

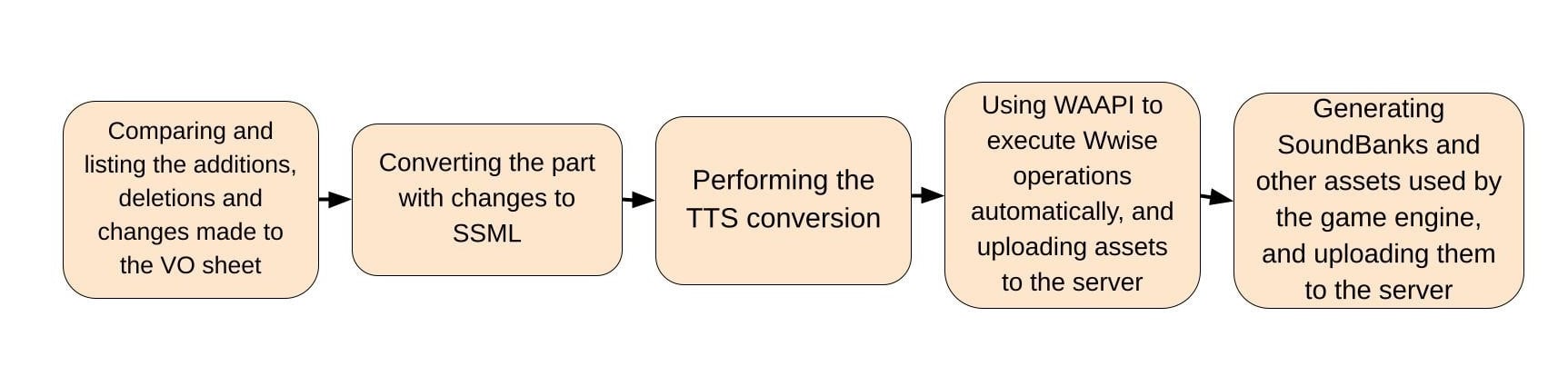

1. Comparing and listing the additions, deletions and changes made to the VO sheet;

2. Converting the part with changes to SSML;

3. Performing the TTS conversion;

4. Using WAAPI to create and update Sound Voice objects within the Work Units specified in Wwise, generate the associated Events and upload them to the server;

5. Generating SoundBanks and other assets used by the game engine in certain directories and uploading them to the server.

When the server detects the VO sheet uploaded by the game designer, it will execute the above processes automatically.
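The first step of this process, comparing two revisions of the VO sheet, could look roughly like the sketch below. It assumes both revisions are available as CSV text and that a hypothetical `name` column uniquely identifies each VO line. Only the added and changed entries then need to go through SSML conversion and TTS.

```python
import csv
import io


def load_sheet(csv_text: str, key: str = "name") -> dict:
    """Index the rows of a VO sheet by the column that identifies each line."""
    return {row[key]: row for row in csv.DictReader(io.StringIO(csv_text))}


def diff_sheets(old: dict, new: dict) -> dict:
    """List the VO names that were added, removed or changed between revisions."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    # A row counts as changed if any of its columns differs.
    changed = sorted(k for k in new.keys() & old.keys() if new[k] != old[k])
    return {"added": added, "removed": removed, "changed": changed}
```

Regenerating only the added and changed lines keeps the TTS and SoundBank generation time proportional to the size of the edit rather than to the size of the whole sheet.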

Optimized Workflow

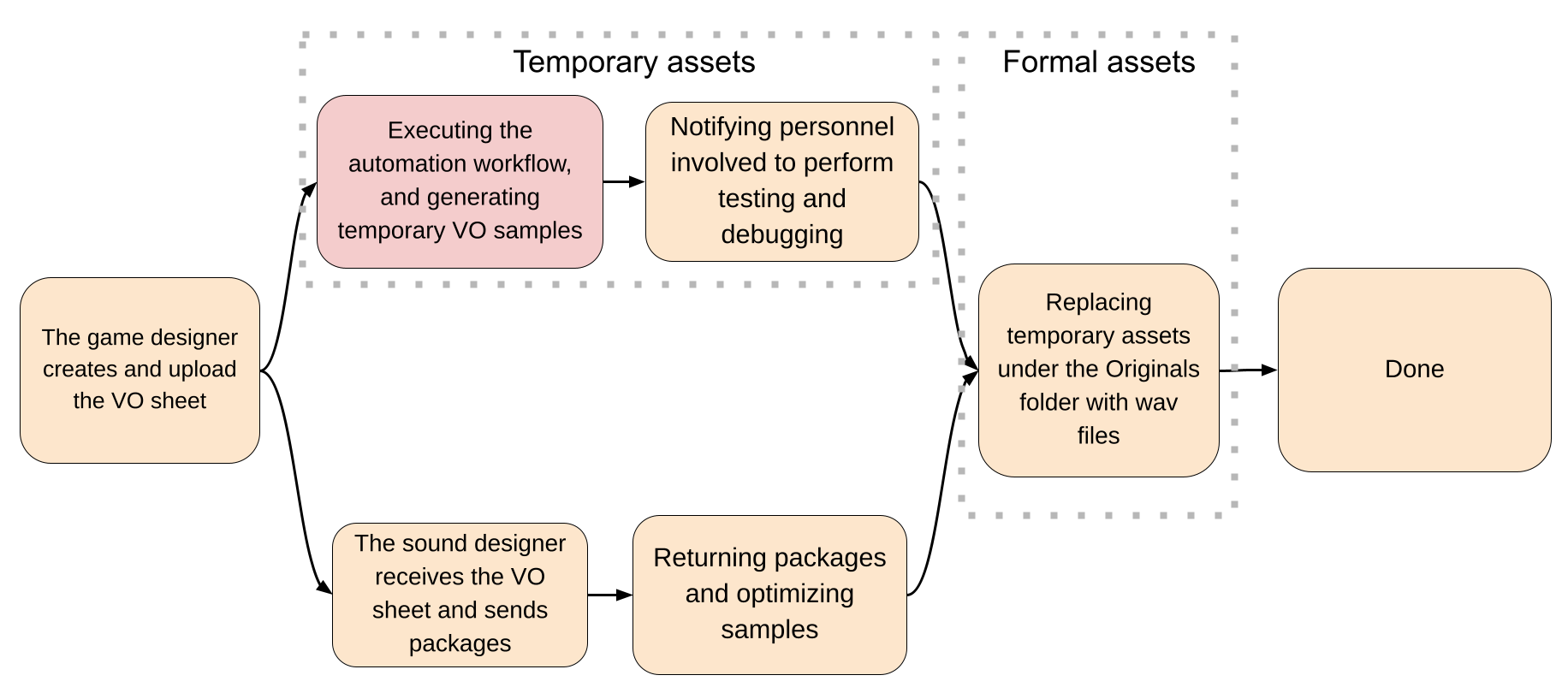

1. In daily production, after the game designer creates and uploads the VO sheet, the build machine executes the automation workflow, and you can then audition the temporary VO assets;

2. VO testing and debugging can be done in parallel with package distribution.

Potential Issues

The automation workflow can be time-consuming and may use up build resources if there are many VO lines. You may want to run it at a convenient time (e.g. at night). With abundant build resources, however, it can run as soon as the VO sheet is uploaded.
