Immersive art could be viewed as an inherently redundant term, in that art itself requires immersion within an artwork to appreciate it. Even so, the term can be applied to a lot of things. For the sake of this blog, I’m going to stick to digital and performance art. These days, VR/AR/XR are considered forms of immersive art. One could argue that music shows are a sort of immersive art whenever they use other elements to complement the experience (pyrotechnics, visuals behind a band, stages made out of LEDs, and so on). Modern video games, in my opinion, are a form of immersive art, as they use a lot of stimuli to appeal to the senses of the player, whether through story or world building. Since this is such a broad term, the question "how do you create immersive art?" can only bring broad answers. Depending on how you see it, the term “immersion” might seem too vague, but that vagueness gives you a lot of wiggle room in how you approach the creation of the artwork, without going into esoteric concepts.

This brings me to the point of this article: my take on how I made a piece of immersive artwork, and my struggles to balance my starting ideas with the technology and knowledge available. Over the past year, I had the opportunity to pursue an artistic residency at the Société des arts technologiques (SAT) in Montreal for the SATosphere. For those who are not aware, the SATosphere is a 13-meter-high dome with 157 speakers grouped in 32 clusters. It is mostly used for 360° shows with variations on the media used, such as 3D audio, interactive art based on physical movements, and installations.

I come from a musical background. I studied film before college, but it was always secondary to my passion for audio and music. I was at the head of two music labels, always trying to push the more experimental side of electronic music without alienating anybody. Over the years, I have always strived to reach new goals with performances, mainly to entertain myself while entertaining others. For example, I integrated oddities like Wiimotes and DIY synthesizers in public performances, and I performed music in an anonymous 31-speaker configuration. As for my experience with Unity, I pretty much got into it last January without knowing much about 3D modeling, scripting, or even using Unity as a regular user. I hope this blog will inspire others to start using these tools for projects that are not necessarily video games.

Drichtel

Drichtel is the result of my past months of research and work on audiovisual synchronicity. It is an A/V performance show where music is performed live and the visuals are made in Unity. The different instruments are spatialized in real time in the dome with Wwise through the movement of game objects that are visible or invisible. Beyond positioning, the synchronicity of visuals and music is represented through another core aspect of the performance: each instrument is represented by an element in the world, and affects the visuals depending on how it is played. Written like this, it sounds like I’m making a visualizer for a media player, but the idea was to have a world with “concrete” objects and concrete events reacting to the musical performance. This approach provides a lot of flexibility in terms of how you can perform the music, set the positioning, and change the timeline of visuals. Changes after each show could be made and rendered pretty quickly in comparison to a more linear setup with 3D modeling or animation software. This also helps a lot with the process of “humanizing” the performance, where little details are not necessarily fixed, but rather have their own organic movements.

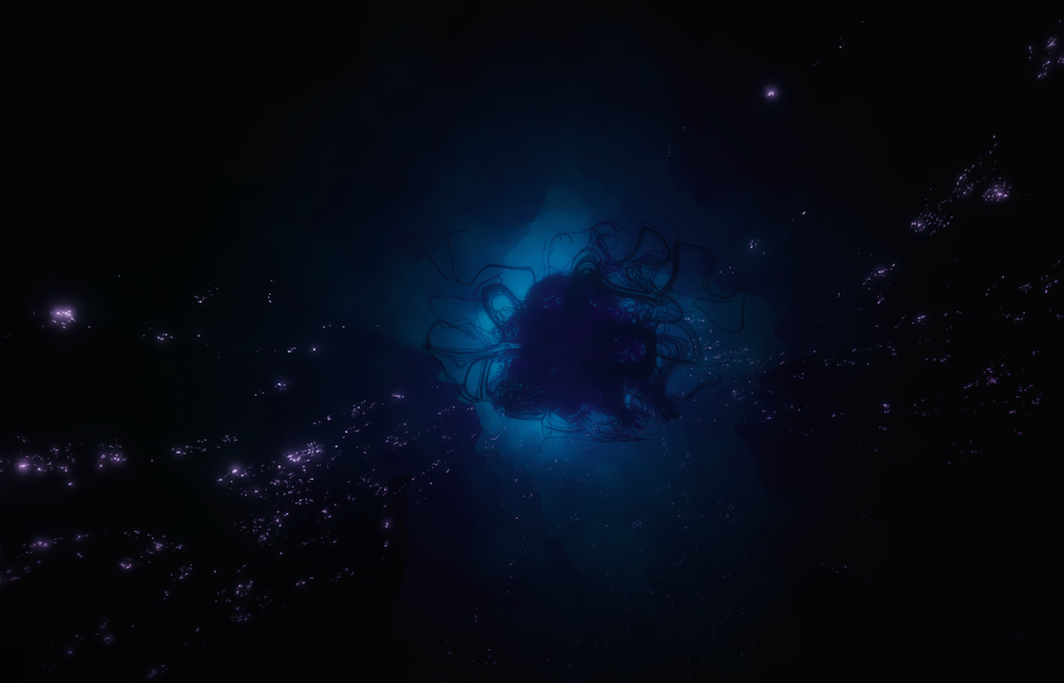

On the less technical side, the idea of Drichtel was to create a world inspired by concepts used in H.P. Lovecraft's literature, without directly referencing the mythos itself. We’re talking non-Euclidean geometry, destroyed ancient cities, and a feeling of dread for the spectator. The obvious part is that Drichtel is a bad anagram of eldritch. However, the idea of an underwater-like experience comes from the fact that I was playing a lot of Subnautica at that moment. From peaceful shallow waters to anxiety-inducing depths, its game structure had a huge influence on the type of visuals I wanted to use.

I also knew that some people had previously used Unity for projects in the SATosphere, but without using the full potential of the environment to create a universe. As Subnautica was made in Unity, it convinced me that I could come up with visuals that I would like, using an engine that had already proved to work in this space.

Setup

I had the following equipment for this show:

- 2 computers (one for music and one for Unity)

- Hardware synthesizers and audio equipment (Mutable Instruments Ambika, Mutable Instruments Shruthi, Vermona DRM MK3, Audioleaf Microphonic Soundbox)

- A MIDI Controller (Livid Instruments CNTRL-R)

- 1 audio interface for audio inputs, 2 audio interfaces for MADI/MIDI communication

Then, the pipeline for all of this:

- I sequenced my music and hardware synthesizers in real-time via Ableton.

- The instruments would then be separated into different outputs. In my case, sounds that MUST be in stereo (like the main drums, or reverbs of sounds) or that were aesthetically the same were grouped together. Sounds that were easier to spatialize in real time (arpeggio synth, single percussion, etc.) were in their own mono outputs. If they had creative send effects (like a pad made out of a long reverb driven by an arpeggio synth), the send channels would be routed to a more static stereo setup that could move once in a while but would mostly stay in place to give the impression of depth in the dome.

- These outputs were sent over MADI to the second computer, which handled Unity/Wwise. I also sent some MIDI triggers to that computer to sync elements (for instance, gigantic buildings moving each time a note was sent to my drum machine).

- I integrated Wwise in Unity and used an experimental sink plug-in to handle ASIO. The ASIO sink receives audio streams and outputs them to any sound card. With that in place, I created objects in Wwise and set their positioning to be updated in real time in Unity.

- Then I assigned these objects to either invisible objects (in the case of stereo outputs) or visible objects. For example, I could position one of my arpeggio synths on floating rocks in a scene. Those rocks would be moved randomly by the MIDI note triggers I sent which, in effect, would move the sound's position as well.

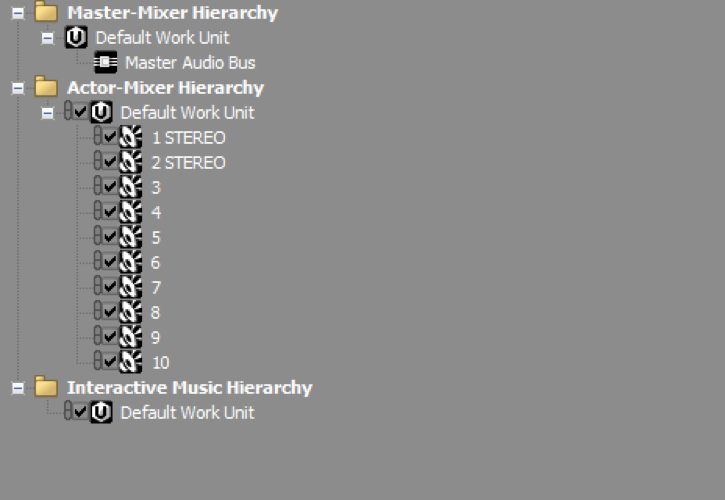

- Some of these audio objects had a Wwise Meter, changing an RTPC value in real time. Using Klak and a custom node for RTPCs, some elements were audio-reactive to the volume of what was played in real time.

- Then everything was sent to the dome's sound card and output card.

So, in summary:

Ableton/Synths -> MADI/MIDI -> Unity/Wwise -> Out
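To make the audio-reactive step of this pipeline more concrete, here is a minimal sketch in plain Python of what happens between a meter reading and a visual parameter. It is not the Klak node or the Wwise API used in the show: the function names, the -48 dB floor, and the attack/release coefficients are all assumptions picked for illustration.

```python
def meter_db_to_unit(level_db, floor_db=-48.0, ceil_db=0.0):
    """Map a meter level in dB to a clamped 0..1 value.

    floor_db and ceil_db are assumed bounds, not values from the show.
    """
    unit = (level_db - floor_db) / (ceil_db - floor_db)
    return min(1.0, max(0.0, unit))


class Smoother:
    """One-pole smoothing with separate attack and release speeds,
    so a visual parameter rises quickly and falls back slowly."""

    def __init__(self, attack=0.5, release=0.1, value=0.0):
        self.attack = attack
        self.release = release
        self.value = value

    def update(self, target):
        # Use the attack coefficient when rising, release when falling.
        coeff = self.attack if target > self.value else self.release
        self.value += coeff * (target - self.value)
        return self.value


def scale(unit, lo, hi):
    """Stretch a 0..1 value onto the range a visual parameter expects."""
    return lo + unit * (hi - lo)


if __name__ == "__main__":
    smoother = Smoother()
    # Hypothetical meter readings, one per rendered frame.
    for level_db in [-60.0, -12.0, -6.0, -30.0, -60.0]:
        unit = smoother.update(meter_db_to_unit(level_db))
        print(f"{level_db:6.1f} dB -> light intensity {scale(unit, 0.0, 8.0):.2f}")
```

The slower release is a design choice: without it, a light or a shader value driven directly by the meter tends to flicker with every transient.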

Clips

As examples of the setup, here are some clips of the premiere on June 5.

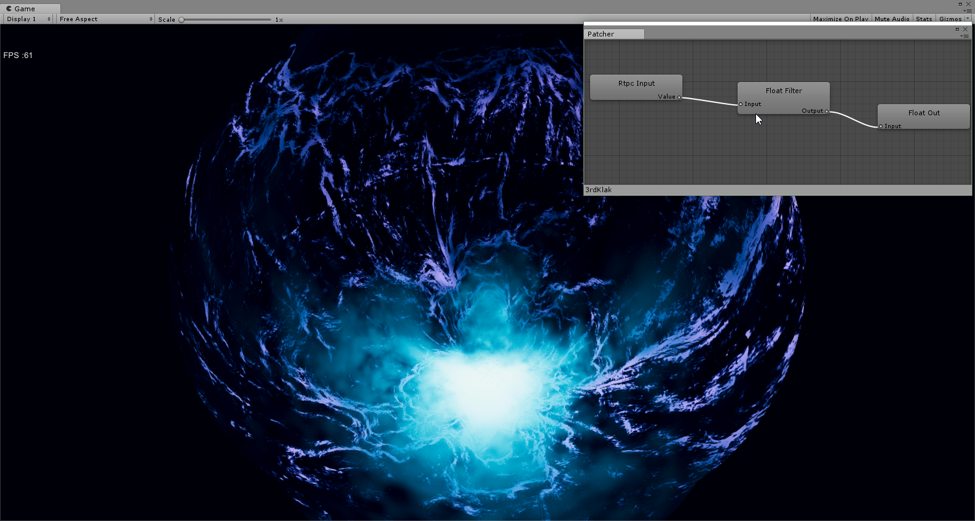

In this clip, we can see the sphere moving around the dome. This sphere represents the dry signal of the arpeggio synth for this song. It is a good example of a dynamically positioned audio stream: the sphere comes from above and underneath the listener object at different moments, and the sound only plays when the sphere appears. The same method was applied in the following clip for the cube displayed at the zenith of the dome.

The variation on this one is that the MIDI notes change the shapes displayed inside that cube. To do this, I duplicated the note sent to my Shruthi, sent the copy to a MIDI channel dedicated to interaction with Unity, and converted it to triggers that output random values. These values would then be sent to a specific shader, changing the form and punctuation of the different shapes within that cube. This same music sequence is also used in another visual element for this specific section of the performance: the strobe’s intensity in the following clip relies on the volume of the Shruthi. Therefore, playing with the filter cutoff frequency of the synthesizer would either lower or increase the intensity of the light.
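The note-to-random-values idea can be sketched in a few lines of plain Python. This is only an illustration of the logic, not the Klak graph used in the show: the channel number and the shader parameter names (`_Form`, `_Twist`) are hypothetical, and the only MIDI facts relied on are that a note-on status byte is `0x90` plus the channel index, and that a note-on with velocity 0 is conventionally treated as a note-off.

```python
import random

NOTE_ON = 0x90
UNITY_CHANNEL = 15  # hypothetical: the channel reserved for visuals (0-based)


def parse_status(status):
    """Split a MIDI status byte into (message kind, channel index)."""
    return status & 0xF0, status & 0x0F


class ShapeRandomizer:
    """Turns note-on messages on one channel into fresh random values,
    one per shader parameter. The pitch is ignored on purpose: the note
    only acts as a trigger."""

    def __init__(self, param_names, seed=None):
        self.rng = random.Random(seed)
        self.params = {name: 0.0 for name in param_names}

    def on_midi(self, status, note, velocity):
        kind, channel = parse_status(status)
        # Ignore other message kinds, other channels, and velocity-0
        # note-ons, which are conventionally note-offs.
        if kind != NOTE_ON or channel != UNITY_CHANNEL or velocity == 0:
            return False
        for name in self.params:
            self.params[name] = self.rng.random()
        return True


if __name__ == "__main__":
    shapes = ShapeRandomizer(["_Form", "_Twist"])
    if shapes.on_midi(0x9F, 60, 100):  # note-on, channel index 15
        print(shapes.params)  # values a shader could read this frame
```

Because the duplicated notes live on their own channel, the synthesizer part can be edited freely without touching the visuals, and the other way around.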

Starting Ideas and What Went Wrong

When starting the project, I had the idea, influenced by the concept of light painting, of using a Wiimote or any other movement-based controller to “paint” the trajectory of a sound. For example, a sphere could move in a looping pattern that follows the camera, carrying a musical instrument along with it. Another idea stemming from this was to assign MIDI notes to XY positions around the dome. I could then point in the direction of specific notes, not only creating a melody on the fly, but also positioning the output of a hardware synthesizer on this sort of grid.

The reason why it didn’t advance further is mostly logistical, and comes down to the fact that this is a one-man operation. The technology itself is not really the issue, as a project like “Trajectories” by Chikashi Miyama proved that an artwork based on positioning sounds in a dome with specific equipment is doable. To compensate for the loss of this element, which would have increased the showmanship of Drichtel, I went with another method, which I’ll elaborate on later in this article.

Another starting idea that I had when designing Drichtel was the use of portals to represent non-Euclidean geometry. Good examples of what I mean are games like Portal and Antichamber. One idea that I would have loved to focus on was to use Wwise Reflect and Portals to create micro-universes in a corridor that the public would fly by at high speed, where sound would actually feel like it is coming from these rooms. I saw two methods to create this:

- Create render textures on part of the corridor that would display different cameras in completely different parts of the level, putting different windows next to each other for these micro-universes. This is used a lot for raymarching and shaders that contain shapes within a shape.

- Create the actual worlds next to the corridor but have a portal in the corridor itself. This way, you could warp to a further part of the corridor that has a different room next to it.

An example of the intended effect of these two methods is to have a tiny room next to a gigantic one, without being able to see the continuity between the two of them: the small room would not appear as contained inside of the big one, but the windows for the two of them would be next to each other.

The problem with the first method is that it eliminates the use of Portals and Reflect. The windows would indeed show another part of the level, but since the emitter would be far from the actual position of the listener, the position of the sound would not match what we see.

This problem could be solved by the second method, but I ended up with two major issues: how would I teleport my rig of 6 cameras smoothly through a portal, and what would the GPU performance cost be? Unfortunately, I did not find the answer to my first question due to lack of time. There would also be a minor issue similar to that of the first method: since the camera rig and the listener would warp from one micro-universe to another, sound obstruction would need to be very precise, and the sound tails would need to be cut short for the sound to make sense, since you would teleport much farther away in an instant.

For a non-dome project that heavily relies on sound, I believe the second method could work really well in a setup with only one camera (or VR). For something that is less dependent on sound accuracy, the first method could work, but I would be wary of the GPU cost of rendering multiple cameras around a level. As a point of reference, for the performance I used a computer with an i7-6700K and a GTX 1080 video card. Due to how the camera rig works, the vertex and edge counts could be multiplied by up to 15 times the actual total for the same scene with one camera, depending on multiple variables (such as the use of volumetric lighting and the number of cameras rendering the same object). I ran the entire thing at about 50 to 60 FPS at 2K resolution without any major stuttering, but I had to do a lot of optimization to get this result.

World Immersion and Synchronicity

One thing I mentioned earlier is the idea of immersion in art. To most people reading this article, this is kind of a given, considering you are most likely coming from the video game world and games rely a lot on world building. The narrative, the photographic direction, the sound design, and the characters all stem from a world that was determined before production. The same can also be said for movies, paintings, and multimedia art forms. Art exhibitions can also be considered world building: you go to a museum or gallery in a setup arranged for the public to focus only on the artworks, to be immersed in them. The question is, how do you approach world building when your artwork’s canvas is the room itself?

You know these 80s cartoons where the protagonist gets sucked into the TV and has adventures with the characters inside a wacky world? So how can you convey to the public that they got sucked into this world without moving one inch from where they sat down at the beginning? Personally, I think that taking some cues from how video games are structured is how you do it. Of course, using a game engine sort of influences the way you’re going to approach it; Unity works by scenes, so you could always go scene by scene. But, even then, passing from one level to another usually brings a continuity (unless you’re making an abstract game like LSD Dream Emulator), so that it does not feel like a random bunch of assets stacked on top of each other. You don’t usually go from Level 1 to the Final Boss, and then get warped to a water level while opening a chest. If that’s the case, you still need to explain why it’s like this, explicitly or implicitly. You need a narrative.

In my case, I usually imply a narrative in my own music, through its progression, mood shifts, or how the different sections make me feel. From one section in a song to another, from one song to another, there’s a silent story told. Based on this, it becomes obvious that the visuals need a continuity that complements this existing direction.

World building is also the reason why I made sure to fill the sections so that looking anywhere in the dome is interesting enough, wherever the camera moves. If I find it boring to look at the back of the dome when we’re going forward, then it is not good enough. Once again, like in a video game, you need to consider uncommon paths the player may take just as much as the ‘happy path’, ensuring a pleasant experience regardless of the player’s choices.

To me, these basic artistic choices are another piece of the puzzle for another goal of Drichtel: synchronicity.

On the topic of synchronicity, there’s the question of how I make myself part of the show. Even though I perform everything in real time on my synthesizers, the reality is that the public will see me as extra noise in the show. Looking at a guy twist some knobs and press some keys is not really the most interesting thing to watch, nor does it bring a lot to the show itself. As I mentioned earlier, this makes showmanship a void to fill. My solution to this is a violin bow for the Microphonic Soundbox. I routed the output of the Microphonic Soundbox to a separate emitter and added an extra Wwise Meter on it to slightly modulate a scanline effect on the main camera. This way, whenever I played background sound ambiences with the bow and metal rods, there would be an impact on what the public sees. The public then understands, even if they don’t know that it’s a live performance, that I am part of the show and I’m not just standing there, staring either at a screen or the ceiling.

Technologies

Klak is a huge part of the puzzle behind Drichtel. It is a node-based environment that can do basic operations within Unity. It is mostly for fast prototyping, or for creative comrades who are looking to use external inputs to control any serialized value in Unity. It is made by Keijiro Takahashi and the beauty of it is that it’s available for use on GitHub. Klak also has different plug-ins that can be added for OSC and MIDI control. For certain elements that were dynamic, yet wouldn’t change much over the course of the performance, MIDI would be used in Klak.

For more sound-reliant objects, though, a node for using RTPC values in Unity was created by Shawn Laptiste, software developer at Audiokinetic. As mentioned in the Setup section, the RTPC would output a value that would then be converted to the format needed by the change I wanted to apply to an object (such as a transform value, float, or RGB). An RTPC out node was also created. These two nodes open the door to a sort of audio-visual sidechaining: a sound playing in real time could move an object, while this value could be sent to another RTPC that would put a low-pass filter onto another sound. This other sound would then change the values of another object, and so on.
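As a rough illustration of that audio-visual sidechaining chain, here is a sketch in plain Python of one frame of it. It does not use the actual Klak nodes or any Wwise API. The object names, the 0 to 100 low-pass range, and especially the formula for how much the filter attenuates the second sound are assumptions made up for the example.

```python
from dataclasses import dataclass


def clamp01(x):
    return min(1.0, max(0.0, x))


def remap(t, lo, hi):
    """Stretch a 0..1 value onto [lo, hi]."""
    return lo + (hi - lo) * t


@dataclass
class Frame:
    rock_height: float  # object moved by sound A
    pad_lowpass: float  # value written back to the audio side for sound B
    pad_level: float    # level of sound B after filtering (toy model)
    glow: float         # second object, driven by sound B


def sidechain_frame(arp_level, pad_input_level, max_height=5.0):
    """One frame of the chain: sound A -> object -> RTPC -> sound B -> object."""
    # 1. RTPC in: the level of sound A (0..1) moves an object.
    rock_height = remap(clamp01(arp_level), 0.0, max_height)
    # 2. RTPC out: the object's height drives a low-pass amount on sound B.
    pad_lowpass = remap(rock_height / max_height, 0.0, 100.0)
    # 3. Toy assumption: a fully closed filter removes 80% of sound B's level.
    pad_level = clamp01(pad_input_level) * (1.0 - 0.8 * pad_lowpass / 100.0)
    # 4. RTPC in again: sound B's metered level drives another object.
    glow = remap(pad_level, 0.0, 2.0)
    return Frame(rock_height, pad_lowpass, pad_level, glow)


if __name__ == "__main__":
    for arp in (0.0, 0.5, 1.0):
        print(arp, sidechain_frame(arp, pad_input_level=1.0))
```

In this sketch, the louder the first sound plays, the higher the first object rises and the dimmer the second object gets, even though nothing links the two objects directly: the link goes through the audio.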

I also used Mandelbulb 3D to generate some 3D fractals. Check it out if you have the chance! There’s a pretty big community of artists using it to render out-of-this-world pictures. What is great about this software is that its latest version contains an option to export your fractals in mesh format for use in 3D modeling software. I would use MeshLab and Blender to fix the vertices and potential errors, and would then import the result in Unity.

As for Unity assets, the main ones that I used were the Volumetric Light asset by Unity, World Creator Standard, Living Particles, and the Unity Post-Processing stack. For the Volumetric Light, Shawn created scripts to use it on a multi-camera setup. The main purpose was to be able to use one component to control the five perspective cameras in Timeline. As a bonus, since the shaders were referenced only once in the master component, it also saved a lot of graphics memory.

Post mortem

In conclusion, what did Drichtel bring to the table? To me, it is a proof of concept and, even though the use of game engines in art wasn’t unheard of, it gives me new tools for approaching art and music. Knowing that you will need to mix your stems on a 32-output setup makes you approach the creative process in a more precise way, as you need to take all the acoustic details of the room into consideration. So, if it’s a proof of concept, what’s the next step? To fully dive into these tools using an artistic approach: use the public as an interactive element of the performance, for example with branching paths chosen by the public; have effects handled by environment portals or the spatial audio tools, to avoid worrying about how it will sound when working on the production of the songs; and make virtual rooms within the actual showroom to emphasize the immersion. In 1896, people would be scared by a film of a train coming at a camera. More than a century later, the requirements for immersion are much higher; fortunately, technology has evolved to meet these requirements.
