The audio team on "Tell Me Why" had many opportunities to enhance unique and memorable narrative sequences. Our cinematic and creative directors were highly attuned to the potential of well-crafted sound to picture, which allowed us to convey critical emotional cues through audio alone.

A good example of our creative process is the Murder Night sequence in Episode 2, along with several scenes in Episode 3, where Alyson's anxiety and guilt become increasingly obvious. We collaborated closely with the camera department to bring an audio-driven rhythm to these scenes, because our cinematic director wanted to express Alyson's anxiety mainly through audio.

Alyson’s anxiety

Starting with Episode 2, our creative director wanted a set of sounds that would tell the player that Alyson is beginning to feel guilty about having killed her mother, Mary Ann, and about never having owned up to the truth. This set of sounds also needed to be reused in Episode 3, when Alyson experiences a panic attack brought on by her anxiety.

Inside Alyson’s Head – Sound Design breakdown

Our cinematic director, Talal Selhami, had a very specific idea in mind, and he did not want to rely on music to express Alyson's anxiety. The overall idea was to create something that sounds like an inner parasite with a very cyclic rhythm: a kind of endless discomfort taking place in Alyson's head, including Mary Ann's voice. This discomfort had to play over the black screens in the cinematics, and its purpose was to build tension. Let's have a look at the main stems we created for it.

Inner Parasite

We started by recording lots of source material, such as scraped piano strings and underwater movements, with the great custom contact mics and hydrophones from Jez riley French. The contact mic was very convenient for what we were after, because we did not need to cheat with plug-ins to make the sound feel "inner". We then simply layered the raw material and added an LFO to create a rhythm that would help the black-screen video build tension throughout the sequence.
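The layering-plus-LFO step can be illustrated with a minimal Python sketch. It assumes mono recordings held as lists of floats and a plain sine LFO used as a tremolo; the function names, rate and depth are hypothetical, and the actual work was done with audio tools rather than code like this.

```python
import math

def layer(*tracks):
    """Sum several mono recordings (lists of floats) sample by sample.

    Tracks are truncated to the shortest one so every sample has all layers.
    """
    length = min(len(t) for t in tracks)
    return [sum(t[i] for t in tracks) for i in range(length)]

def apply_lfo(samples, sample_rate, rate_hz, depth):
    """Amplitude-modulate samples with a sine LFO (tremolo).

    depth is 0..1: 0 leaves the signal untouched, 1 makes the gain swing
    all the way between 0 and 1 once per LFO cycle.
    """
    out = []
    for n, s in enumerate(samples):
        # Sine LFO rescaled from -1..1 to 0..1
        lfo = 0.5 * (1.0 + math.sin(2.0 * math.pi * rate_hz * n / sample_rate))
        # Gain moves between (1 - depth) and 1
        gain = 1.0 - depth * (1.0 - lfo)
        out.append(s * gain)
    return out

# Placeholder data standing in for contact-mic and hydrophone takes
sr = 48000
piano_scrape = [0.1] * sr
underwater = [0.05] * sr
pulse = apply_lfo(layer(piano_scrape, underwater), sr, rate_hz=0.5, depth=0.8)
```

A slow rate (well under 1 Hz) with a high depth gives the kind of breathing, cyclic pulse described above; faster rates start to sound like a mechanical tremolo.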

Mary Ann’s voice

Regarding VO processing, we had a fun time with VCV Rack and the Soundtoys bundle, transforming Mary Ann's voice into something very scary. The intention was to make the voice so terrifying that Alyson would want to run away when she hears it in her head.

We used a very basic patch with the incredible Audible Texture Synth to create a kind of endless loop from Mary Ann's voice lines.

We then merged those loops with intelligible dialogue to add more emotional impact.

Tinnitus

Tinnitus was the last flavor we added to this set of sounds, because it is one of the most recognizable cues in sequences dealing with stress and trauma. For the high-frequency part of the tinnitus, we used recordings of high-pitched metal tones processed through the same VCV patch mentioned above. For the low end, we used a combination of low-pitched wind sounds and taiko percussion, processed only with a low-pass filter.
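As an illustration of the low-end treatment, here is a minimal sketch of a first-order low-pass filter followed by a simple mix with the high layer. The one-pole design, the 120 Hz cutoff and the placeholder signals are assumptions chosen for clarity, not the filter or settings used on the game.

```python
import math

def one_pole_lowpass(samples, sample_rate, cutoff_hz):
    """First-order (one-pole) low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    # Smoothing coefficient derived from the cutoff frequency
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

def mix(a, b, gain_a=1.0, gain_b=1.0):
    """Mix two layers sample by sample, truncated to the shorter one."""
    return [gain_a * x + gain_b * y for x, y in zip(a, b)]

sr = 48000
high_tone = [0.2] * sr                        # placeholder for the processed metal tones
low_layer = [(-1.0) ** n for n in range(sr)]  # placeholder for wind + taiko (pure top end)
tinnitus = mix(high_tone, one_pole_lowpass(low_layer, sr, 120.0))
```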

Silence in Alaska

One of the biggest challenges when designing ambient sounds for Tell Me Why was staying focused on the original mood we felt during the scouting trip: Alaska is quiet, and dealing with silence turned out to be more complicated than we expected.

Character Foley

A quiet environment calls for a high level of detail in the main characters' Foley. We tried to cover a wide range of gestures for their cloth and footstep sounds, recording both with a Sanken COS-11 and a Neumann KMR 81.

Footsteps

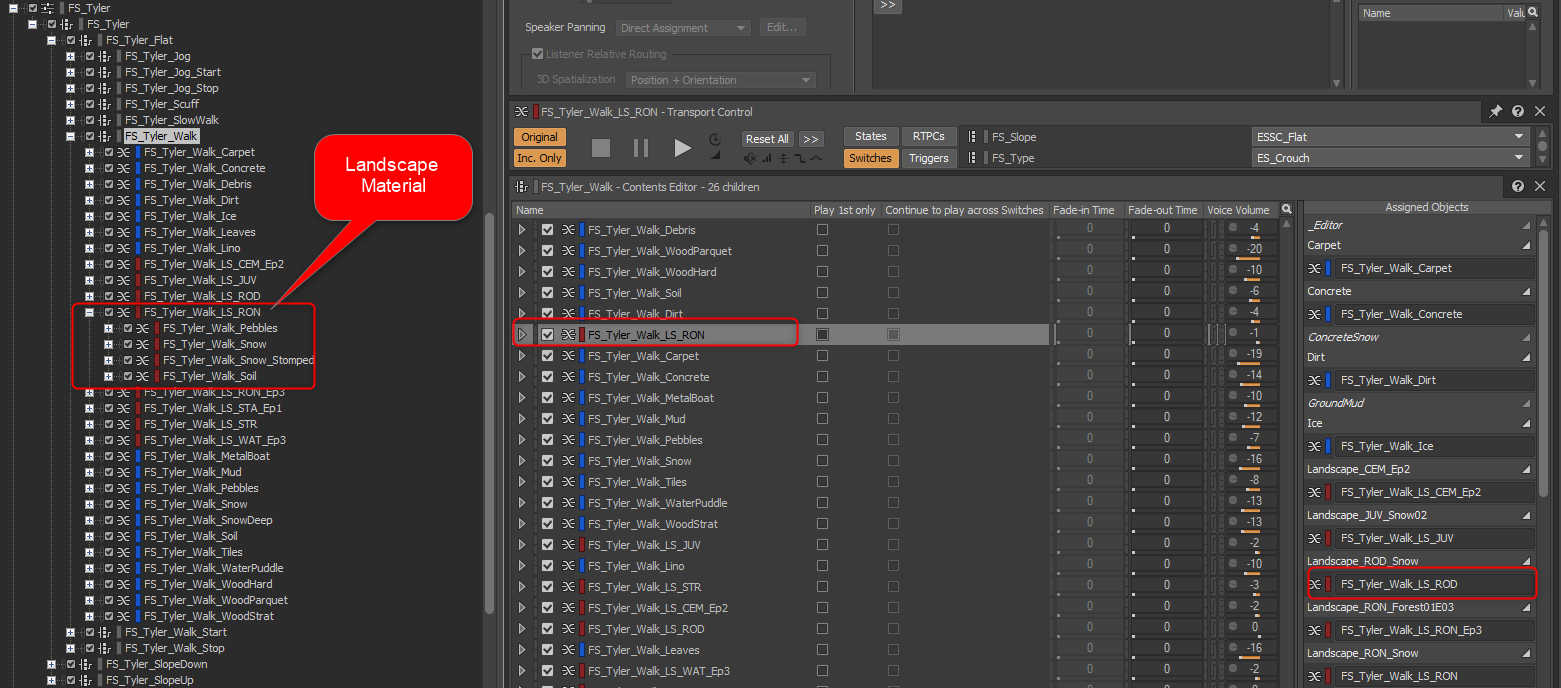

Footsteps depend on the player’s navigation speed and follow the ground materials of the Unreal landscape system.

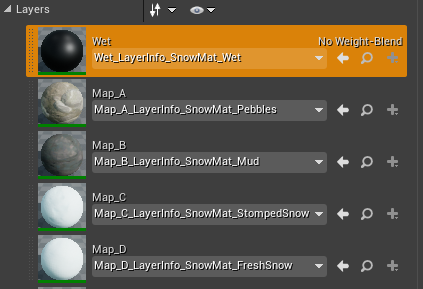

The Unreal Engine 4 Landscape tool allows the creation of massive terrain-based outdoor environments. We can think of a Landscape as a "big complex material" that is sculpted and painted by the environment artist. By complex, we mean a material containing several textures, each of which is associated with a landscape layer:

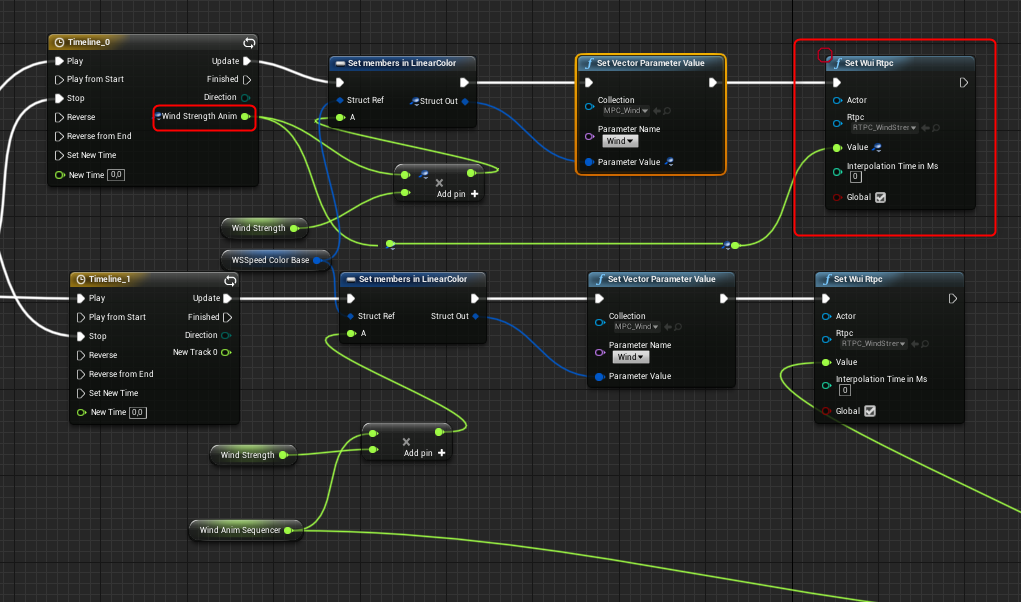

We built a system that gives us one RTPC per landscape layer. Each RTPC represents the painting ratio of its layer, so we know which textures the player is walking on and in what proportion.
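A minimal sketch of the per-layer RTPC idea, assuming the raw layer weights under the player (for example 0 to 255 weightmap values) have already been sampled from the landscape, and that each ratio is expressed on a 0 to 100 RTPC range. The `Landscape_` RTPC naming and both functions are hypothetical. In the engine, each value would then be pushed to Wwise, for instance with `AK::SoundEngine::SetRTPCValue` on the player's game object.

```python
def layer_rtpcs(weights, prefix="Landscape_"):
    """Convert raw landscape layer weights at the player's position into
    one RTPC value per layer, expressed as a painting ratio in percent."""
    total = sum(weights.values())
    if total <= 0:
        # Nothing painted here: every layer RTPC goes to 0
        return {prefix + name: 0.0 for name in weights}
    return {prefix + name: 100.0 * w / total for name, w in weights.items()}

def dominant_layer(weights):
    """Layer with the highest painted weight (ties resolved by dict order)."""
    return max(weights, key=weights.get)

# Half grass, half snow, no pebbles under the player's feet
rtpcs = layer_rtpcs({"Grass": 128, "Snow": 128, "Pebbles": 0})
```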

All the RTPCs then drive a Blend Container, controlling the level of each layer. This system brought lots of variation to the ground materials without requiring new assets for every specific layering of landscape materials.
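To show how per-layer RTPCs can drive the layers of a Blend Container, here is a minimal sketch that maps each 0 to 100 painting ratio to a track volume in dB. The equal-power curve and the -96 dB floor are assumptions for illustration; in Wwise this mapping would be authored as RTPC curves rather than written as code.

```python
import math

def ratio_to_db(ratio_percent, floor_db=-96.0):
    """Map a 0-100 painting ratio to a blend-track volume in dB.

    Uses an equal-power curve: linear gain = sqrt(ratio), so the squared
    gains of all layers add up to 1 when their ratios add up to 100.
    """
    r = max(0.0, min(100.0, ratio_percent)) / 100.0
    if r == 0.0:
        return floor_db  # layer not painted here: effectively silent
    return max(floor_db, 20.0 * math.log10(math.sqrt(r)))

def blend_track_volumes(rtpcs):
    """Volume in dB for each blend track, keyed like the incoming RTPC map."""
    return {name: ratio_to_db(value) for name, value in rtpcs.items()}

volumes = blend_track_volumes({"Grass": 50.0, "Pebbles": 30.0, "Snow": 20.0})
```

With an equal-power curve, the summed energy of uncorrelated layers stays constant as the painting ratios shift, so walking from grass into snow does not cause a dip or bump in overall footstep loudness.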

When the environment artists painted a landscape with grass + pebbles + snow, we did not have to worry about updating our audio assets, because the RTPCs did the job for us.

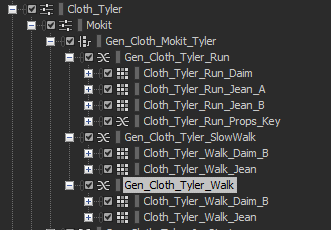

Clothing

Because interior ambiences in "Tell Me Why" are very quiet most of the time, character cloth Foley for navigation was one of our main focuses. We recorded lots of different outfits across a wide range of gestures, including running, walking, getting up, etc. For gestures added later in production, we modified the volume and pitch of existing recordings at runtime. For example, the slow-walk gesture reuses the walk gesture with a different volume and pitch.
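Deriving a new gesture from an existing recording can be sketched as a small lookup table of runtime offsets. The gesture names and the -4 dB / -150 cent values below are hypothetical; the conversions themselves (dB to linear gain, cents to playback-rate ratio) are standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GestureVariant:
    source: str         # recorded gesture asset that is reused
    volume_db: float    # volume offset applied at runtime
    pitch_cents: float  # pitch offset applied at runtime

# Hypothetical table: slow_walk reuses the walk recording, quieter and lower
GESTURES = {
    "walk": GestureVariant("walk", 0.0, 0.0),
    "run": GestureVariant("run", 0.0, 0.0),
    "slow_walk": GestureVariant("walk", -4.0, -150.0),
}

def playback_params(gesture):
    """Return (source asset, linear gain, playback-rate ratio) for a gesture."""
    v = GESTURES[gesture]
    gain = 10 ** (v.volume_db / 20)     # dB -> linear amplitude
    rate = 2 ** (v.pitch_cents / 1200)  # 1200 cents = one octave = 2x rate
    return v.source, gain, rate
```

Because a resampling pitch shift also changes playback speed, a negative pitch offset makes the reused walk cloth sound slightly longer and heavier, which suits a slower gesture.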

Some gestures also play specific prop sounds related to the character. For example, Tyler's jingling keys only play when the player is running or walking on stairs.
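The keys rule can be expressed as a small predicate. This sketch reads the sentence as "running anywhere, or walking on stairs"; the enum, the character check and the function name are hypothetical.

```python
from enum import Enum

class Locomotion(Enum):
    IDLE = "idle"
    WALK = "walk"
    RUN = "run"

def should_play_keys(character, locomotion, on_stairs):
    """Tyler's keys jingle only while running, or while walking on stairs."""
    if character != "Tyler":
        return False
    if locomotion is Locomotion.RUN:
        return True
    return locomotion is Locomotion.WALK and on_stairs
```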

Ambiences

We ended up with two main pillars for the ambience design and integration:

1) Create contrasts between close and distant sounds

We wanted to bring as much relief as possible and create a strong sense of depth that helps the player perceive the space of the environment. To achieve that, we combined baked reverb and in-game reverb, and randomly repositioned ("teleported") SFX emitters around the listener.

3D Automation is set up to pick a random position relative to the listener each time a new sound starts. A user-defined auxiliary send curve on the attenuation then controls how much reverb the SFX receives, depending on how close to or far from the listener it is.

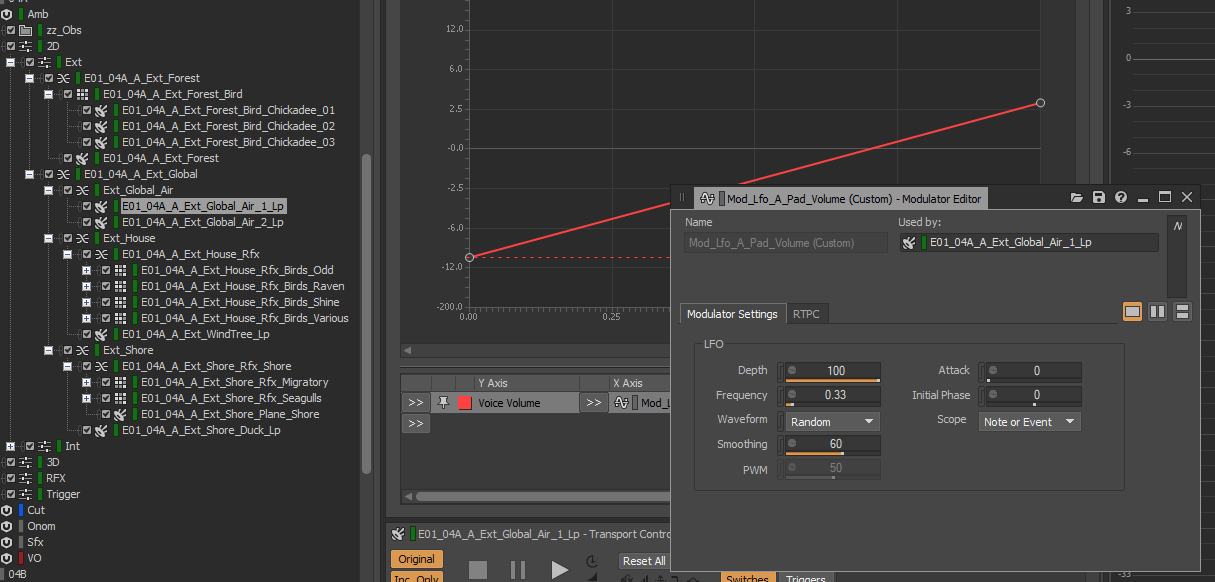
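The random positioning and the distance-driven reverb send can be sketched as follows. The ring-shaped spawn area, the 5 to 60 unit radii and the linear send curve are assumptions for illustration; in Wwise, the positioning comes from 3D Automation and the send level from the user-defined auxiliary send curve on the attenuation.

```python
import math
import random

def random_emitter_position(listener, min_radius, max_radius, rng):
    """Pick a random point on a horizontal ring around the listener,
    as a stand-in for position automation triggered at each new sound."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    radius = rng.uniform(min_radius, max_radius)
    x, y, z = listener
    return (x + radius * math.cos(angle), y + radius * math.sin(angle), z)

def aux_send_level(distance, max_distance):
    """Linear user-defined send curve: 0 at the listener,
    1 (full reverb send) at max_distance and beyond."""
    return max(0.0, min(1.0, distance / max_distance))

def trigger_one_shot(listener, rng, min_radius=5.0, max_radius=60.0, max_distance=60.0):
    """Place a new one-shot and compute its reverb send from the distance."""
    pos = random_emitter_position(listener, min_radius, max_radius, rng)
    send = aux_send_level(math.dist(listener, pos), max_distance)
    return pos, send

rng = random.Random(42)
pos, send = trigger_one_shot((0.0, 0.0, 0.0), rng)
```

Tying the send to distance means the same bird call or branch crack lands dry and present when it spawns close, and washed in reverb when it spawns far away, which is what builds the close/distant contrast.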

2) Make the ambient beds feel consistent but not disturbing

Quiet, wild ambient beds such as wind and air tones tend to become annoying and static while the player explores a map. To avoid that, we added LFO modulators to several bed layers to keep their volume constantly varying. We did this at runtime with Wwise's LFO rather than baking the modulation into the audio files because we found the LFO's frequency and depth settings much easier to tune in real time. It also let us quickly update these settings throughout production.
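A runtime LFO on a bed's volume can be sketched as a control-rate function of time. The bed names, rates, depths and phases are hypothetical, and letting the LFO only dip below the mixed level (never above it) is a design assumption of this sketch, not a description of Wwise's LFO modulator.

```python
import math

def lfo_volume_offset_db(t, rate_hz, depth_db, phase=0.0):
    """Volume offset in dB from a sine LFO at time t (seconds).

    The offset swings between -depth_db and 0 dB, so the bed only ever
    dips below its mixed level. phase is expressed in cycles (0..1).
    """
    lfo = 0.5 * (1.0 + math.sin(2.0 * math.pi * (rate_hz * t + phase)))  # 0..1
    return -depth_db * (1.0 - lfo)

# Hypothetical bed layers: (base level dB, LFO rate Hz, depth dB, phase)
BEDS = {
    "wind_low": (-18.0, 0.07, 4.0, 0.0),
    "air_tone": (-24.0, 0.11, 3.0, 0.35),
}

def bed_levels_db(t):
    """Current level of every bed layer at time t."""
    return {name: base + lfo_volume_offset_db(t, rate, depth, phase)
            for name, (base, rate, depth, phase) in BEDS.items()}
```

Giving each layer a different rate and phase keeps the layers from pulsing in sync, so the combined bed keeps moving without an obvious repeating pattern.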

In addition, we relied on a wind speed RTPC to drive the volume and EQ of the main ambient beds, as well as the frequency of the LFO modulators, according to the wind strength set by the environment artists. All we needed to do was hook up a SetRTPC function to the float that manages the wind speed of the Wind System in the Unreal blueprint.
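The wind-speed mapping can be sketched as one input driving several parameters at once. In the game these mappings live in Wwise RTPC curves; here they are written as clamped linear interpolations, and the speed range as well as the volume, low-pass and LFO-rate values are all hypothetical.

```python
from dataclasses import dataclass

def lerp(a, b, t):
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t

@dataclass(frozen=True)
class BedParams:
    volume_db: float
    lowpass_hz: float
    lfo_rate_hz: float

def wind_rtpc_to_params(wind_speed, max_speed=10.0):
    """Map the wind speed value sent as an RTPC to bed parameters."""
    t = max(0.0, min(1.0, wind_speed / max_speed))  # normalize and clamp
    return BedParams(
        volume_db=lerp(-24.0, -6.0, t),       # stronger wind: louder bed
        lowpass_hz=lerp(800.0, 12000.0, t),   # stronger wind: brighter EQ
        lfo_rate_hz=lerp(0.05, 0.5, t),       # stronger wind: faster swells
    )

calm = wind_rtpc_to_params(1.0)
storm = wind_rtpc_to_params(9.0)
```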

In the final part of our audio diary series, we will look at the technical and artistic decisions we made when mixing and mastering the game in surround sound.


LOUIS MARTIN
