Recently, there has been a lot of hype surrounding spatial audio, but the truth is, “spatial audio” can mean a lot of things and the myriad of available options can be overwhelming. There are many ways to incorporate spatial audio concepts into a game, and these options are not mutually exclusive - one can mix and match various solutions to deliver a customized experience. In future articles on spatial audio, we will cover topics such as the simulation of reflections and diffraction, but today we are going to focus on a single aspect - using “Rooms and Portals” in Wwise. This article explores the benefits of using Wwise’s rooms and portals system, and dives under the hood to show what makes it a useful and powerful tool for delivering a greater sense of immersion in your game. After reading this article, when you are ready to get your hands dirty and learn about the specifics of setting up rooms and portals in Wwise, check out our step-by-step tutorials using either Unity or Unreal.

Anatomy of an Acoustic Portal

For the most part, an acoustic portal is exactly what it sounds like - an abstraction that models sound propagation from one room to another through a geometrically-defined opening. Portals model the interface between two different acoustic environments. Note that the term room is really just shorthand for acoustic environment and may be generalized to refer to an outdoor environment as well.

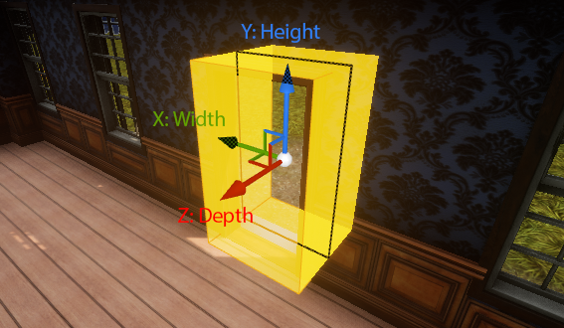

An acoustic portal from the Wwise Audio Lab with labeled X, Y, and Z axes.

In Wwise Spatial Audio, a portal takes the shape of an oriented bounding box; the box is open on each end like a rectangular “tube”. The x-axis defines the width of the portal, the y-axis the height, and the z-axis of the bounding box is “depth” along which the portal transition occurs. While doors and windows are not always thought of as having depth, in Wwise Spatial Audio the z-axis is important for interpolation and to create smooth transitions between the two environments. We will get into more details on how this interpolation along the z-axis works shortly.
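To make the geometry concrete, here is a minimal sketch of an oriented box with the three axes described above. It is not the Wwise SDK: `Vec3`, `Portal`, `toLocal`, and `contains` are hypothetical names chosen for illustration, and the box axes are assumed to be unit length and mutually orthogonal.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
static Vec3 sub(const Vec3& a, const Vec3& b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }

// Hypothetical portal description: an oriented bounding box.
// The axes are assumed to be unit length and mutually orthogonal.
struct Portal {
    Vec3 center;
    Vec3 axisX;       // width direction
    Vec3 axisY;       // height direction
    Vec3 axisZ;       // depth direction, along which the transition occurs
    Vec3 halfExtent;  // half width, half height, half depth
};

// Express a world-space point in the portal's local frame.
Vec3 toLocal(const Portal& p, const Vec3& world) {
    const Vec3 d = sub(world, p.center);
    return { dot(d, p.axisX), dot(d, p.axisY), dot(d, p.axisZ) };
}

// True when the point lies inside the portal box.
bool contains(const Portal& p, const Vec3& world) {
    assert(p.halfExtent.x > 0.0f && p.halfExtent.y > 0.0f && p.halfExtent.z > 0.0f);
    const Vec3 l = toLocal(p, world);
    return std::fabs(l.x) <= p.halfExtent.x &&
           std::fabs(l.y) <= p.halfExtent.y &&
           std::fabs(l.z) <= p.halfExtent.z;
}
```

The local z coordinate returned by `toLocal` is the quantity of interest for transitions: it says how far through the opening a point is, independently of how the portal is oriented in the world.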

Spatializing Diffuse Reverberation

One of the most important roles of a portal is to control transmission of diffuse reverberation. Consider a room containing three sound emitters, connected to a portal, connected to another room where the listener resides. The sound from the three emitters bounces off all the walls of the room enough times that it becomes diffuse - meaning that it has no perceivable directionality. The sounds mix together before being funneled through the portal. From the perspective of the listener in the adjacent room, the portal acts as a single sound emitter, emitting the mixed, reverberated sound from all three emitters.

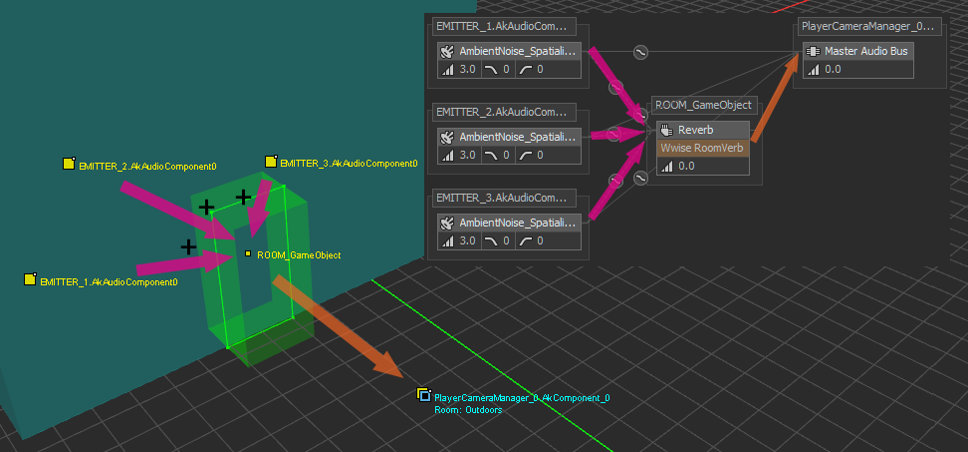

An annotated screen capture from the Wwise 3D object profiler, with the voice graph superimposed top right, shows 3 emitters being mixed onto a room game object (fuchsia arrows), which is then reverberated and spatialized before being mixed onto the listener game object (orange arrow).

From an architectural standpoint, Wwise Spatial Audio is a separate C++ library that sits on top of the Wwise Sound Engine. Portals in Spatial Audio automatically control “3D busses”, which are instantiated on game objects in the Wwise sound engine. While not necessarily complex from a computational perspective, Spatial Audio removes the burden from the game engine and programmer by automatically managing the appropriate connections and performing the necessary bookkeeping tasks. In the case of diffuse reverb, Spatial Audio instructs the sound engine to first perform a mixdown on a specified bus, then apply a reverb effect (or chain of effects, as defined in the tool), and finally position and spatialize the mix at the location of the portal.

Diffraction of the Direct Path

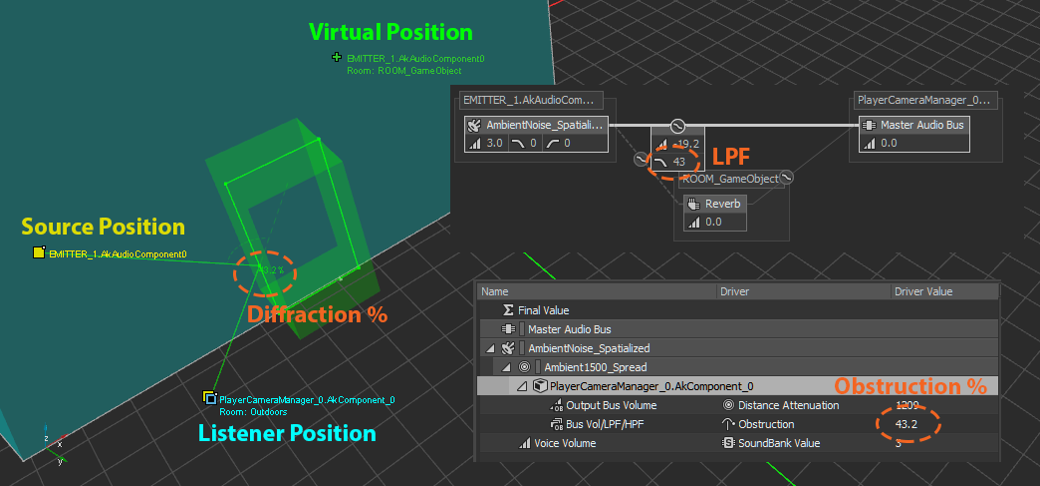

Aside from directing diffuse reverb, portals play a second and perhaps even more important role: simulating diffraction on the direct path. Consider a single point-source sound emitter in a room adjacent to the listener. A portion of the sound doesn’t reflect off of any surface but propagates directly through the portal - this is referred to as the direct path. If the emitter is directly “visible” to the listener, the sound is unobstructed, and the portal (assuming it is large enough) does not affect the direct path. Things get more interesting, however, when the emitter is around the corner. In this case diffraction comes into play, and it is important for modeling both the obstructed sound and the transition between obstructed and unobstructed states. Spatial Audio calculates the angle at which a sound “bends” when it passes through the portal - an acoustic phenomenon called diffraction - and then maps this angle to the obstruction parameter in Wwise. The sound engine applies the attenuation and low-pass filter defined by the obstruction curves in the Wwise project settings. The more a sound bends, the more obstruction is applied, modeling the phenomenon whereby lower audio frequencies are able to bend further around corners.

An annotated screen capture from the Wwise 3D object profiler, with the voice graph and voice profiler superimposed on the right, shows an emitter diffracting through a portal. Notice how the diffraction angle as a percentage is passed to the obstruction value, driving a low-pass filter, and how the virtual position makes the sound appear as if it is coming through the portal, from the perspective of the listener.
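The angle-to-obstruction mapping can be sketched in a few lines. This is an illustration in two dimensions, not the Spatial Audio implementation: `diffractionAngleDeg` and `obstructionPercent` are hypothetical names, and we assume that 0 to 180 degrees of bend maps linearly onto 0 to 100% obstruction.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Angle, in degrees, by which the path emitter -> edge -> listener bends
// at the portal edge. A straight line gives 0 (no diffraction).
float diffractionAngleDeg(Vec2 emitter, Vec2 edge, Vec2 listener) {
    const Vec2 in  { edge.x - emitter.x, edge.y - emitter.y };
    const Vec2 out { listener.x - edge.x, listener.y - edge.y };
    const float lenIn  = std::hypot(in.x, in.y);
    const float lenOut = std::hypot(out.x, out.y);
    assert(lenIn > 0.0f && lenOut > 0.0f);

    float c = (in.x * out.x + in.y * out.y) / (lenIn * lenOut);
    c = std::fmin(1.0f, std::fmax(-1.0f, c));  // guard acos against rounding error
    return std::acos(c) * 180.0f / 3.14159265358979f;
}

// Assumed linear mapping: 0..180 degrees of bend -> 0..100% obstruction.
float obstructionPercent(float angleDeg) {
    const float t = std::fmin(1.0f, std::fmax(0.0f, angleDeg / 180.0f));
    return t * 100.0f;
}
```

With this mapping, a sound that turns a right angle at the door frame would receive 50% obstruction; what that percentage does to volume and filtering is decided by the obstruction curves in the project, not by this code.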

Anatomy (or lack thereof) of a Spatial Audio Room

Let us now take a moment to consider the details of rooms in Spatial Audio. The Spatial Audio library is not directly aware of the position or shape of a room - just its orientation and an assigned aux bus (for diffuse reverb effects). The game is responsible for defining the shape of a room and for performing the necessary containment tests to tell Spatial Audio what room each listener and emitter is in. Doing so permits the flexibility to make rooms as simple or as complex as the game requires.
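As an example of what a game-side containment test might look like, here is a small sketch that uses axis-aligned boxes as room volumes. Everything in it is hypothetical (`RoomVolume`, `findRoom`, the `priority` field, and the `kNoRoom` sentinel); a real game may use convex hulls, trigger volumes, or whatever shape its level design calls for.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical game-side room volume: an axis-aligned box with an ID.
struct RoomVolume {
    std::uint64_t id;
    Vec3 lo;       // minimum corner
    Vec3 hi;       // maximum corner
    int priority;  // higher wins when volumes overlap (e.g. a closet inside a hall)
};

// Hypothetical sentinel meaning "not inside any room volume".
constexpr std::uint64_t kNoRoom = ~std::uint64_t{0};

bool inside(const RoomVolume& r, const Vec3& p) {
    return p.x >= r.lo.x && p.x <= r.hi.x &&
           p.y >= r.lo.y && p.y <= r.hi.y &&
           p.z >= r.lo.z && p.z <= r.hi.z;
}

// Return the ID of the highest-priority room containing the point.
std::uint64_t findRoom(const std::vector<RoomVolume>& rooms, const Vec3& p) {
    const RoomVolume* best = nullptr;
    for (const RoomVolume& r : rooms) {
        if (inside(r, p) && (best == nullptr || r.priority > best->priority)) {
            best = &r;
        }
    }
    return best != nullptr ? best->id : kNoRoom;
}
```

The game would run a test like this whenever an emitter or listener moves, and report the result to Spatial Audio; in the Wwise SDK, that reporting is done with `AK::SpatialAudio::SetGameObjectInRoom`.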

If we go one layer deeper, to inside the Wwise sound engine, a room is simply another game object. The positions of a room game object, as well as the orientation, send levels, occlusion and obstruction, etc, are controlled directly by the Spatial Audio library, and updated continuously according to the relative placement of the connected portals and the listener.

Room Tones and Room Ambience with Spatial Audio

One thing to note here is that room game objects are not just for reverb. It is possible to leverage the room game object’s automatic positioning and post Wwise events, usually room-tones or room-ambiences, directly on the room object. This is a really great way to have a room ambience that surrounds the listener when inside the room, but then attenuates realistically when exiting the room through a portal.

A quick note to programmers: it is possible to post an event on the room game object because the room ID is the same as the game object ID. Just make sure to set AkRoomParams::RoomGameObj_KeepRegistered so that Spatial Audio doesn’t clean up the game object when it thinks it is not being used.

Behavior of the Room Game Object

The behavior of a room game object depends on whether the listener is inside or outside the room; let's first consider the former. The game object is positioned at the exact same point as the listener; however, the orientation of the room object is fixed to the room’s orientation rather than the listener’s. Reverb and ambience that play through the room game object are “counter-rotated”, giving the perception that the surround tracks we hear are fixed to the world around us. This is of particular importance for VR experiences utilizing sound field ambiences. You want the listener, which is commonly controlled by a head-tracking device with a binauralization effect, to be able to explore and focus attention on different aspects of the sound field as they move their head. Games sometimes make the mistake of fixing the ambience to the listener’s orientation, which results in the sound field “following” the listener as they turn their head, and greatly reduces the level of immersion. While arguably less important for reverb effects, which are often diffuse by nature, the same treatment is applied whereby the sound field is pinned to the room’s orientation.
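Reduced to yaw only, the counter-rotation amounts to a single subtraction. The sketch below is an illustration under assumed conventions (angles in degrees, positive yaw turning to the right); `wrapDeg` and `apparentAzimuthDeg` are hypothetical helpers, not SDK functions.

```cpp
#include <cassert>
#include <cmath>

// Wrap an angle in degrees to the range [-180, 180).
float wrapDeg(float a) {
    a = std::fmod(a + 180.0f, 360.0f);
    if (a < 0.0f) {
        a += 360.0f;
    }
    return a - 180.0f;
}

// Azimuth at which the listener hears a channel of the room's sound field.
// channelAzimuthDeg: direction of the channel relative to the room's front.
// roomYawDeg:        world yaw of the room (fixed).
// listenerYawDeg:    world yaw of the listener's head.
float apparentAzimuthDeg(float channelAzimuthDeg, float roomYawDeg, float listenerYawDeg) {
    assert(std::isfinite(channelAzimuthDeg) && std::isfinite(roomYawDeg) && std::isfinite(listenerYawDeg));
    return wrapDeg(channelAzimuthDeg + roomYawDeg - listenerYawDeg);
}
```

If the listener turns 90 degrees to the right, a channel that sat at the front of the room is now heard 90 degrees to the left, which is what keeps the ambience pinned to the world. Fixing the ambience to the listener would correspond to dropping the `listenerYawDeg` term.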

Now let’s consider a room that is adjacent to the listener. It becomes apparent that controlling the audio for each room as a separate game object is a powerful tool. In this case, the game object is still responsible for the sound coming from inside the room, including room ambience and room reverb, but the object is now positioned at the portals that connect to the listener’s current room. If there is more than one portal, Spatial Audio leverages Wwise’s multi-positioning system, so as to not instantiate any extra voices or effect instances.

Portal Spread Factor

Spatial Audio also manipulates each sound position's 'spread' factor - the same spread that can be defined manually in the attenuation settings - so that the angle matches the angle of the portal opening relative to the listener. As the listener moves further and further away from the portal, Wwise’s user-defined attenuation settings kick in to decrease the volume and apply filtering.

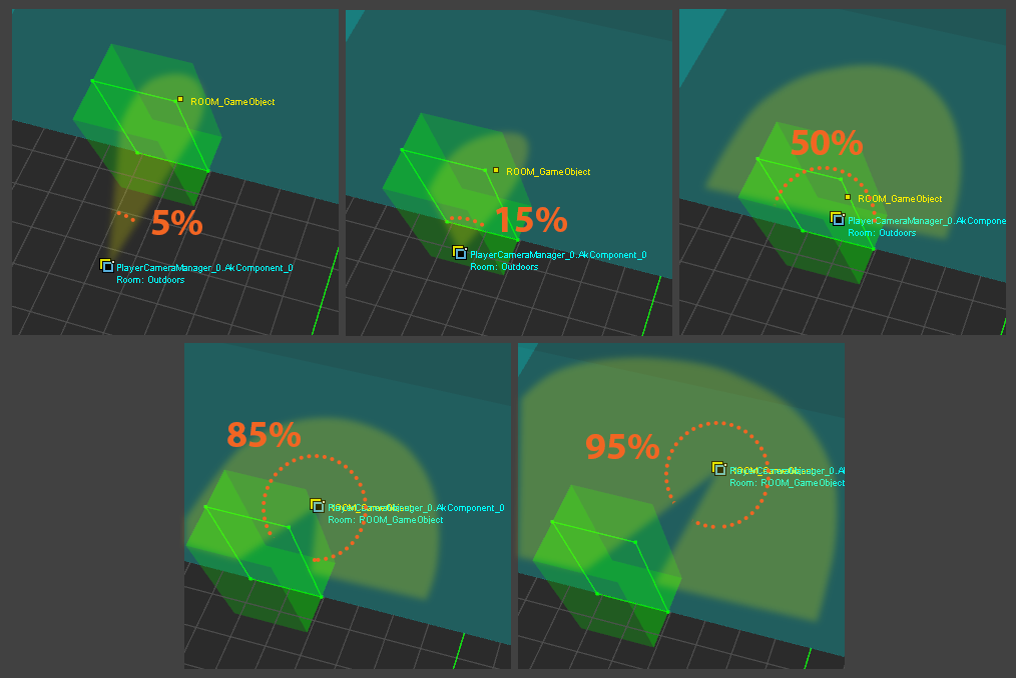

A series of annotated screen captures from the Wwise 3D object profiler show the spread factor of a room game object at various listener positions. Notice how in the top three frames, the room game object is positioned at the portal, but when the listener is inside the room, the object is positioned at the exact same location as the listener.

When transitioning through the portal, the spread angle increases as the listener approaches the portal. When the listener is directly in the “door frame” of the portal, the spread is 50% (or 180 degrees), and as the listener transitions into the room, the sound continues to surround the listener, eventually reaching 100% (or 360 degrees).
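A simplified model of this behavior, for a listener facing the center of the opening, is sketched below. Outside the room, the spread is taken to be the angle subtended by the portal width, which naturally reaches 180 degrees (50%) in the door frame. The ramp from 50% to 100% inside the room is assumed here to be linear over a distance `rampDepth`; that ramp, like the function name `spreadPercent`, is an assumption made for illustration and not a description of the actual implementation.

```cpp
#include <cassert>
#include <cmath>

// Spread, in percent (100% == 360 degrees), of a room's sound as heard
// through a portal of the given width by a listener on the portal's axis.
// signedDistance: > 0 outside the room, 0 in the door frame, < 0 inside.
// rampDepth:      assumed distance over which spread grows from 50% to 100%.
float spreadPercent(float portalWidth, float signedDistance, float rampDepth) {
    assert(portalWidth > 0.0f && rampDepth > 0.0f);
    const float kPi = 3.14159265358979f;

    if (signedDistance >= 0.0f) {
        // Angle subtended by the opening; equals pi when the distance is 0.
        const float angle = 2.0f * std::atan2(0.5f * portalWidth, signedDistance);
        return angle / (2.0f * kPi) * 100.0f;
    }

    // Inside the room: assumed linear ramp from 50% up to 100%.
    const float t = std::fmin(1.0f, -signedDistance / rampDepth);
    return 50.0f + 50.0f * t;
}
```

For a 2-unit-wide portal, a listener 1 unit in front of it would hear a spread of 25% (90 degrees), and the value grows continuously to 50% as the listener steps into the door frame.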

Portal Depth

Way back at the beginning of the article, we described how portals are represented by a 3-dimensional box and not just a flat rectangle. Having depth allows portals to interpolate reverb, room ambience, and even object positions when transitioning between two rooms. It is all too common that games trigger audio transitions on entry of a trigger volume, cross their fingers, and hope that the player continues to travel in the same direction at the same speed, so that the transition syncs up with the player’s movement. Should the player stop or change directions, the sound is no longer in sync with the player’s position. Portals solve this problem by mapping crossfades over space, and not time, and this is what delivers a smooth and realistic transition between two rooms.
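The idea of mapping a crossfade over space can be sketched as a function of the listener's position along the portal's z-axis and nothing else. The names below (`Gains`, `crossfadeGains`) are hypothetical, and the equal-power curve is our own choice for the example; the curve used by Spatial Audio is not specified here.

```cpp
#include <cassert>
#include <cmath>

// Gains for the room behind the portal (-z side) and in front of it (+z side).
struct Gains {
    float back;
    float front;
};

// localZ:    listener position along the portal's depth axis, in the portal's
//            local frame (-halfDepth at the back face, +halfDepth at the front).
// halfDepth: half of the portal's depth.
Gains crossfadeGains(float localZ, float halfDepth) {
    assert(halfDepth > 0.0f);

    // Normalize to 0..1 across the depth of the portal and clamp outside it.
    float t = (localZ + halfDepth) / (2.0f * halfDepth);
    t = std::fmin(1.0f, std::fmax(0.0f, t));

    // Equal-power crossfade (an assumed curve): back^2 + front^2 == 1.
    const float kHalfPi = 1.57079632679f;
    return { std::cos(t * kHalfPi), std::sin(t * kHalfPi) };
}
```

Because the gains depend only on position, a player who stops halfway through the doorway keeps hearing an even blend of both rooms, and one who backs out retraces the same curve in reverse. No timer is involved, so there is nothing to fall out of sync.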

Finally, while singing the praises of Wwise Spatial Audio rooms and portals, it is important to consider the system’s limitations; it is by no means a one-size-fits-all solution. If a level design does not logically divide up into rooms separated by portals, it is best not to shoehorn them in. It is not recommended to try to use portals in complex geometric scenarios such as outdoor environments with many obstacles. These types of scenarios are better handled using Wwise Spatial Audio’s geometric diffraction API. The geometric API is a whole other aspect of Wwise Spatial Audio and, unfortunately, outside the scope of this article. Stay tuned, however, to the Audiokinetic blog, because we will be going over the geometric API and more in future articles. In the meantime, feel free to jump into the documentation to learn about it.

We have gone over a lot of the internals of the rooms and portals system in Wwise Spatial Audio, the details of which are quite complex. If some of these concepts are still unclear, then I encourage you to try it out yourself. Load up one of our demos such as the Wwise Audio Lab, available through the Wwise Launcher, and attach the Wwise authoring tool to the game. All of the processes described above can be observed in action by watching the emitter, listener and room game objects in the 3D game object viewer. In the end, it is best to figure out for yourself what works for you, as there is no match for hands-on experience.

Comments

Michael Duss

November 15, 2019 at 10:07 am

Congrats for the creation of the Wwise Spatial Audio tools so far! Unfortunately I have encountered two major problems using them. First, there seems to be something wrong with the routing of audio from the sound object to the aux bus (reverberation) when applying ak room and therefore using game-defined auxiliary sends. The signal is sent post attenuation instead of pre attenuation, which results in increasing reverberation when approaching the emitter. Is there something I can do about this or is it a technical fault? Second, room ambiance instantiated in ak room isn’t affected by portals as it is supposed to be.