Introduction

Hello. My name is Marc Hasselbalch, and I’m a sound designer from Denmark.

I’ve been given the chance to introduce, and talk a bit about the thought process behind, a game audio implementation tool I’ve created called ReaperToWwise. It is a custom Reaper script that lets the user post events and set game syncs directly to a Wwise session, mostly from the comfort of the Reaper timeline, without having any engine hooked up.

The purpose is somewhat open-ended, but the tool is primarily meant to aid in testing implementation systems and designs at a point in development where a game engine is not hooked up, for one reason or another.

It could be used by sound designers in, say, pre-production, for testing an implementation system, or for spotting cutscenes and cinematics. It could also serve educational purposes, when someone needs some hands-on experience with setting up a Wwise system without relying on the whole scripting and game-engine shebang to handle event triggers.

It’s also not inconceivable that a sound designer could use this to showcase and communicate an implementation system to other team members, but that will be up to the user in the end.

All in all, this tool basically turns your Reaper session into a more flexible and malleable Soundcaster session.

Tool pre-requisites

REAPER

https://www.reaper.fm/

Note: I wrote and tested this tool in Reaper v7.07, but it should be compatible with most Reaper versions where the Lua interpreter is based on Lua 5.3 (Reaper v6.x and earlier, according to their website).

While Reaper v7 introduced Lua 5.4 functionality, I haven't made use of any of it up until now.

Wwise 2019.1+

(Required for installing ReaWwise)

https://www.audiokinetic.com/en/products/wwise/

ReaWwise

(Can be installed via ReaPack inside of Reaper)

https://blog.audiokinetic.com/en/reawwise-connecting-reaper-and-wwise/ (blogpost by Andrew Costa)

ReaWwise is, as per the blogpost, “a new REAPER extension by Audiokinetic that streamlines the transfer of audio assets from your REAPER project into Wwise”.

A lovely addition is that it also exposes raw WAAPI functions to Lua inside of Reaper, which is necessary for ReaperToWwise to work.
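To give a rough idea of what a raw WAAPI call consists of, here is a minimal sketch in Python (not the tool's Lua, and not taken from the ReaperToWwise source). A call is essentially a function URI plus a JSON object of arguments. The URIs and argument names below follow the public WAAPI reference for `ak.soundengine.postEvent` and `ak.soundengine.setSwitch`; the helper `build_waapi_call` and the game object ID are hypothetical and only there for illustration.

```python
import json

# Hypothetical game object ID; a real session would register a game object first.
GAME_OBJECT_ID = 1


def build_waapi_call(uri, **args):
    """Return (uri, json_payload) describing one WAAPI remote procedure call."""
    return uri, json.dumps(args, sort_keys=True)


# Posting an event by name.
post_event = build_waapi_call(
    "ak.soundengine.postEvent",
    event="Footstep",
    gameObject=GAME_OBJECT_ID,
)

# Setting a switch group to one of its switches.
set_switch = build_waapi_call(
    "ak.soundengine.setSwitch",
    switchGroup="GroundTexture",
    switchState="Dirt",
    gameObject=GAME_OBJECT_ID,
)

print(post_event)
print(set_switch)
```

This sketch only builds the payloads; actually sending them requires a WAAPI connection, which inside Reaper is what ReaWwise provides.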

Lokasenna's GUI library v2 for Lua

(Installed via ReaPack inside of Reaper)

It handles the GUI elements.

Tool presentation

Let’s have a look-see at the tool in its version 0.9 form.

ReaperToWwise works by parsing text commands written inside the Notes field of empty items on the Reaper timeline. When the play cursor passes the start of one of these items, the tool posts already existing events and sets game syncs inside Wwise.
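The "play cursor passes the start of an item" idea can be sketched roughly as follows. This is a Python illustration under my own assumptions, not the tool's actual Lua: it assumes the script polls the play position repeatedly and fires every item whose start falls between the previous and the current poll. `NoteItem` and `items_crossed` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class NoteItem:
    start: float  # item start position on the timeline, in seconds
    notes: str    # text from the item's Notes field


def items_crossed(items, prev_pos, cur_pos):
    """Return items whose start lies in the half-open window [prev_pos, cur_pos)."""
    if cur_pos < prev_pos:
        # The cursor jumped backwards (seek or loop): fire nothing on this poll.
        return []
    return [item for item in items if prev_pos <= item.start < cur_pos]


timeline = [NoteItem(0.0, "e/Footstep"), NoteItem(1.0, "e/playRain")]

for prev_pos, cur_pos in [(0.0, 0.5), (0.5, 0.9), (0.9, 1.1)]:
    fired = [item.notes for item in items_crossed(timeline, prev_pos, cur_pos)]
    print(prev_pos, cur_pos, fired)
```

Using a half-open window means that, during continuous playback, consecutive polls never overlap, so each item fires once rather than being skipped or triggered twice.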

To use the script, you need to open both Reaper and your Wwise session.

When opening the script from Reaper, you are greeted by this floating window menu:

You need to connect to the Wwise project on the communication port listed under Project -> Project Settings -> Network (see the communication ports, "Game Discovery Broadcast Port (game side)").

You are of course free to type in a (non-conflicting) port of your choosing.

If successful, you are ready to go.

In the Reaper session, you add an empty item, write in your commands, for example: e/Footstep, and the tool will post the event named "Footstep" to Wwise and will trigger it – if it exists in your Wwise project and is set up correctly.

“e/” is a command denoting that it will try to post an event named “Footstep”.

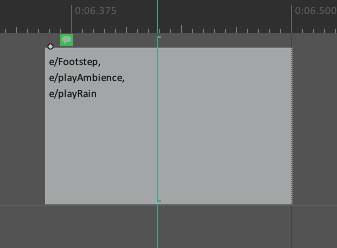

You are able to post more than one event or game sync from a single block by separating each command with a comma and newline.

Such a command could look like:

e/Footstep,
e/playAmbience,
e/playRain

This will post the events named Footstep, playAmbience and playRain.

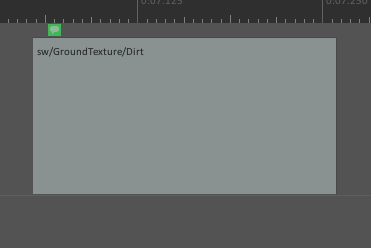
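Splitting a Notes block into its individual commands could look something like the sketch below. It is written in Python rather than the tool's Lua and makes one assumption of its own: it accepts a comma, a newline, or both as the separator, which is more lenient than the "comma and newline" format described above. `split_commands` is a hypothetical helper name.

```python
import re


def split_commands(notes):
    """Split the text of a Notes field into individual command strings."""
    # Split on commas and newlines, then drop the empty pieces left between them.
    parts = re.split(r"[,\n]", notes)
    return [part.strip() for part in parts if part.strip()]


block = "e/Footstep,\ne/playAmbience,\ne/playRain"
print(split_commands(block))
```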

You are also able to set game syncs such as switches.

This is done with the sw/ command, for example: sw/GroundTexture/Dirt

This would set the switch group GroundTexture to the switch named Dirt.

The full list of commands is as follows:

Events:

e/eventName

Posts an event with the given 'eventName'.

Switches:

sw/switchGroup/switchName

Sets a switch with the given 'switchName' from the given 'switchGroup'.

States:

st/stateGroup/stateName

Sets a state with the given 'stateName' from the given 'stateGroup'.

Triggers:

t/triggerName

Posts a trigger with the given 'triggerName'.

RTPCs:

r/rtpcName/rtpcValue

Sets a given RTPC to a given value.
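The command list above maps naturally onto a small lookup table. The following is a minimal Python sketch of such a parser, not the tool's actual Lua code. The names `COMMANDS` and `parse_command` are hypothetical, and the RTPC name "Health" is made up for the example.

```python
# Prefix -> (kind, number of arguments after the prefix)
COMMANDS = {
    "e": ("event", 1),     # e/eventName
    "sw": ("switch", 2),   # sw/switchGroup/switchName
    "st": ("state", 2),    # st/stateGroup/stateName
    "t": ("trigger", 1),   # t/triggerName
    "r": ("rtpc", 2),      # r/rtpcName/rtpcValue
}


def parse_command(text):
    """Parse one command string into a tuple such as ("event", "Footstep")."""
    prefix, *args = text.strip().split("/")
    if prefix not in COMMANDS:
        raise ValueError(f"unknown command prefix: {prefix!r}")
    kind, arity = COMMANDS[prefix]
    if len(args) != arity or any(arg == "" for arg in args):
        raise ValueError(f"{prefix}/ expects {arity} argument(s): {text!r}")
    if kind == "rtpc":
        args[1] = float(args[1])  # the RTPC value is numeric
    return tuple([kind] + args)


for command in ["e/Footstep", "sw/GroundTexture/Dirt", "r/Health/0.5"]:
    print(parse_command(command))
```

Rejecting unknown prefixes and wrong argument counts early keeps a typo in a Notes field from turning into a confusing failure further down the line.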

You are also able to create automation lanes, which will set RTPC values during playback.

To add an automation lane to control a given RTPC, you do the following:

- Press 'Add RTPC Lane' from the tool window.

- Enter the name of the RTPC you want to control and set its minimum and maximum value.

- A separate JSFX plugin will automatically be created and added to the selected Reaper track's FX list.

- An automation lane will automatically be created under the selected track.

- Repeat for every RTPC you need.
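To show why the minimum and maximum matter, here is a rough Python sketch of mapping an automation value onto an RTPC range. It assumes the lane delivers a normalized value between 0 and 1, which is my assumption for illustration; the tool's JSFX plugin may well expose the range directly. `lane_to_rtpc` is a hypothetical name.

```python
def lane_to_rtpc(normalized, rtpc_min, rtpc_max):
    """Map a normalized automation value (0..1) onto [rtpc_min, rtpc_max]."""
    clamped = min(1.0, max(0.0, normalized))  # guard against out-of-range input
    return rtpc_min + clamped * (rtpc_max - rtpc_min)


print(lane_to_rtpc(0.5, 0.0, 100.0))
print(lane_to_rtpc(0.0, -48.0, 0.0))
```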

Note: If you want to set an RTPC value with an empty item while an RTPC lane exists for it, you are advised to disarm that automation lane. Otherwise the lane will keep sending its automation value at every update, interfering with whatever value you set in the empty item.

So at this point it is not possible to have both working at the same time unless you have a specific use-case in mind.

It is important to note that the tool does not (at this point) create events or game syncs from scratch – it can simply trigger and set them if they exist and are set up in your Wwise project beforehand.

And that’s basically it. I suggest you download and try out the tool and see if it meets your needs.

Why did I want - and need - to make this tool?

I started working on the approach when I found that I wanted to - and needed to - showcase technical implementation skills when applying for game audio roles.

But what do you do, when and if you don’t have much in your portfolio dedicated to technical implementation?

You obviously make a technical implementation reel.

But if you’re at a point in your own path where you, like me, perhaps haven’t worked on a shipped title that can be showcased (or maybe you did, but you still can’t showcase it (...yet?)), the choice is usually to make a small game yourself or to use a game-engine demo project as the basis.

I’ve always had a slight aversion towards using game-engine demo projects as the basis for my reels, which is most definitely a fault on my part and not on those who are choosing to do it, because what else is there to do if you are getting frustrated with only showcasing linear redesigns of small video clips of gameplay or cinematics? You need to showcase that you understand and can comfortably work within the non-linear and adaptive framework of games and game audio.

So the idea was then to devise a way to combine the two: linear and non-linear.

My first attempt was an overly convoluted workflow in Reaper, Wwise and Max/MSP, where I placed markers for every single audio event in a linear video clip, exported this list of markers, parsed it in Max and then used MIDI to trigger events, set switches and drive RTPCs in Wwise.

I showcase it here:

Game audio implementation showcase: Dynamic system for linear video in Wwise + Max (October 2023)

While it did work, it was much too complicated and I really wanted to make it shareable at a later time so other sound designers could use it, which would be a hard sell if you had to manage the convoluted extra step of Max.

Some time went by and I wanted to optimize this, removing the need for Max and doing everything solely from within Reaper. So I started to get more into programming Lua, which is one of the languages available for extending the functionality of Reaper, and I devised a new, very simple system with timeline markers. You simply place a marker for an event, name it, say, “Footstep”, and the script will post the name of the marker to Wwise and trigger the event with the corresponding name.

I showcase that version here: Game audio implementation showcase: Wwise event triggers from Reaper markers

It worked quite alright as well, but this was only for posting events. I now needed all the other game syncs, including RTPCs.

Making this tool was a wonderful way to get into Lua, because I had a real, tangible task at hand. Every time I came up with a new idea for a feature, I would most likely have to look up the relevant syntax and logic in the Lua documentation, then script, try things out, get frustrated – and learn.

Little by little a framework started to show itself and while the bugs were plentiful - and still are (just have a look at the repo’s README) - it has grown into a tool that, with the right nudges and workarounds, can actually be used by people other than me, which was also a strong reason for doing this in the first place. I wanted not only to create a tool for my own needs, but also to be able to share it with others who might have the same needs.

There is a strong sense of accomplishment in realizing that your collection of various creative and technical skills, problem-solving and know-how amounts to the possibility of creating tools like these.

I now know that if I need tools of this kind, I can most likely create them.

And if I can’t, I can definitely learn how to do it.

I am not an expert programmer by any measure. Before getting into technical game audio work, my primary experience in programming came from things like Max/Pure Data and SuperCollider.

I like the thinking and resilience needed to learn these corners of technical work.

If you do too, I really recommend just exploring all these lovely corners of the technical game audio work. If there’s something you want to know but don’t know, I suggest you get a project with one or multiple smaller outcome goals and start learning what you need to get there.

Custom tools for game audio are a big area, and very much necessary for particular areas of sound design and implementation. Not only do they assist and save time during development, they also allow us sound designers to channel our energy to where it is most needed.

To my knowledge, ReaWwise - and by extension the exposed Lua functions - has been available since September 2022, so not terribly long all things considered, but this means that this approach has been sitting there for the taking since then.

I suppose it’s just a matter of possibility meets necessity.

Mother of invention and all that jazz.

Around the same time I released the first video showcasing my Reaper marker-based version of this script, Thomas Fritz made a tool with a similar approach, called Wwhisper (and I must admit, a much better name, now that “ReaWwise” was obviously taken).

It shares some thoughts and approaches, but he has focused on somewhat different functionality, which just goes to show that once the building blocks are available, people will take similar routes and build similar structures.

This lively exchange of ideas and approaches is one of the things that make the game audio community very special.

How can you use the tool?

While I had a very specific use-case in mind for myself when creating this tool, it could, as mentioned, be used for various purposes.

My idea was to make dynamic and interactive systems for linear video clips to showcase meaningful experience for game audio roles, but when sharing this on various platforms, other ideas came up, such as someone wanting to get hands-on experience with game audio implementation without the necessity of setting it up in-engine and going down that route. Someone else mentioned that it could be a useful tool for spotting cutscenes for games. There is also the use of communicating and demonstrating a technical idea to other non-audio parts of a game development team.

The use of a tool is not always up to its creator. So please try the tool and use it any way you see fit.

If you have an absolutely odd use-case for the tool, I’d love to know about it. I love when the use of a tool is extended and expanded upon.

Going further

The tool is still not released in v1.0 - simply because I need quite a lot more time to add features and iron out all the bugs and possible nil reference scenarios that I might have overlooked.

As of now, there are some planned features, which, among other things, include a more organized way of handling and processing multiple Reaper empty item events, at the moment dubbed the “Event Manager”. This was a suggestion I got from user feedback, for which I am very grateful.

I really want to further develop this, but time has been slim since releasing v0.9.

I’ll get there eventually.

Where can you get the tool?

You can download the tool from the dedicated GitHub repo, which is located over at: https://github.com/mhasselbalch/MH_ReaperToWwise

Once v1.0 has been finished, it will be released and available via ReaPack inside of Reaper, but I’ve chosen not to do that for the time being.

Goodbye for now

Thank you very much for reading along.

I want to thank all the people who have shown interest in this so far, friends, colleagues and like-minded people, who have been providing feedback and notes and of course a big ‘thank you’ to Audiokinetic for letting me write some words about this.

If this is of interest to you, do not hesitate to reach out, say hello or follow along at https://www.linkedin.com/in/marc-hasselbalch/

Take care.

Comments

Simon Pressey

August 12, 2024 at 04:59 pm

Hi Marc, great to see you taking the initiative to expand on the functionality of WAAPI that was introduced with ReaWwise. We, that is the Audiokinetic team that developed ReaWwise, are very happy to see what you have done and hope it will encourage others to play around with ReaWwise and WAAPI; as you have demonstrated, there is a lot of potential. Bravo!