Creating truly interactive music can be hard. Part of the reason is the nature of music itself: it is complex, and for players to feel that they are taking part in creating it, musical qualities often have to be compromised. Nevertheless, it's an interesting area to explore, and below are a few examples of concepts I worked on for MUR, a recent AR book and game by Step-in-books.

About MUR

The starting point for MUR was a children's book about a bear that can’t sleep. From the outset, we had a clear idea that music should play a bigger role in MUR than it had in previous Step-in-books games. How we would achieve that was yet to be determined, but I knew I wanted to use Wwise to ease the process of implementing and integrating sound and music. Beyond that, the game would be an AR experience for tablets, built with the Vuforia software.

Here’s a short trailer showcasing the MUR experience:

During the first couple of weeks of development, we created several mini-games and puzzles that were mostly music-based but not yet tied to the MUR universe. I sat down with the in-house game designers and programmers, and we brainstormed concepts and ideas, as well as how they could be implemented in Wwise. We ended up with around 10 mini-games and puzzles, selected those we thought would work best, and began fitting them into the MUR universe.

Below, I’ll share some of these concepts and the role music and sound play in them.

Ambient Music

The first full level you enter is an introduction to the MUR universe. You can touch different elements and learn how to control the game, but there are no actual puzzles. I wanted the music to reflect that this level is about exploring the universe, so I decided the music here should be ambient (almost sound design in nature), non-repetitive, and without any particular sense of direction.

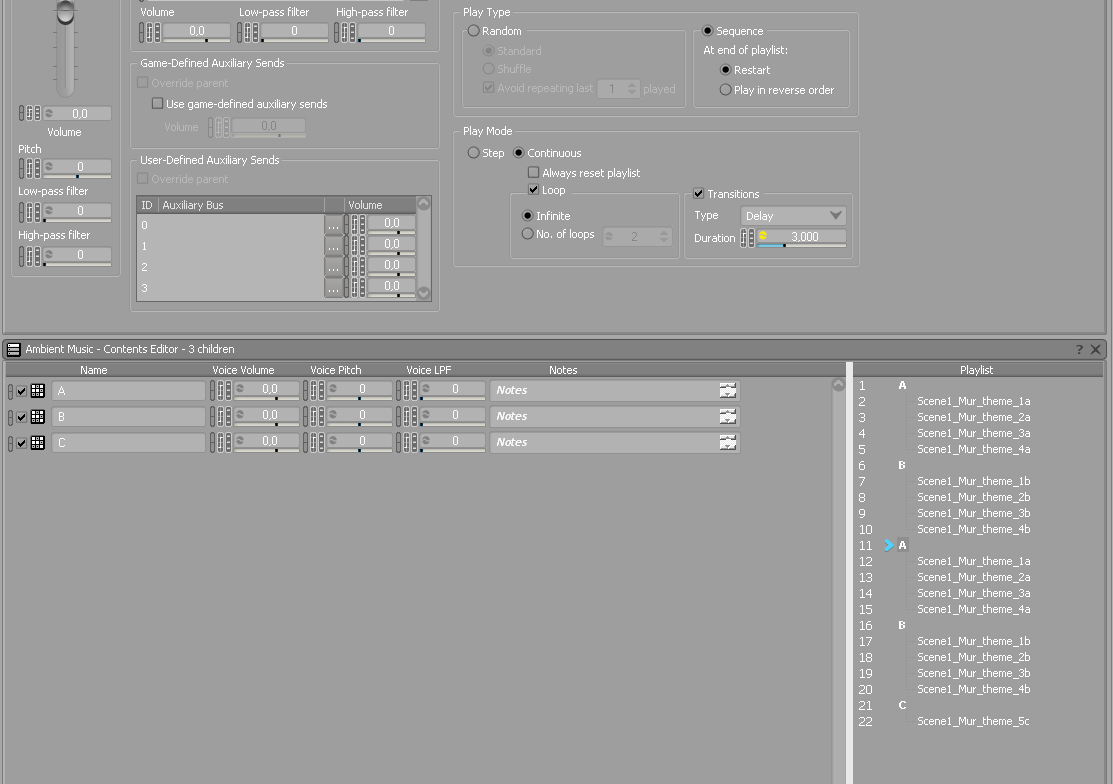

From the main theme, I created 4 different A-parts, 4 different B-parts, and a C-part. Because I wanted the phrases to trigger with random delay times, I didn't use Wwise's interactive music structures; instead, I imported the phrases as Sound SFX objects and arranged them with Sequence Containers and Random Containers.
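
The scheduling idea behind this can be sketched outside Wwise. The Python below is a minimal emulation, not Wwise code: it assumes the sequence plays one A-part, one B-part, and the C-part in that order, that every phrase lasts a fixed 6 seconds, and that the silences between phrases are drawn uniformly from 1 to 5 seconds. The order, lengths, delay range, and the names `PHRASES` and `schedule` are all hypothetical; the article does not specify them.

```python
import random
from dataclasses import dataclass

# Hypothetical phrase pools standing in for the Sound SFX objects.
PHRASES = {
    "A": ["A1", "A2", "A3", "A4"],
    "B": ["B1", "B2", "B3", "B4"],
    "C": ["C1"],
}
# Assumed order of the Sequence Container; each slot behaves like a Random Container.
SEQUENCE = ["A", "B", "C"]


@dataclass
class Event:
    start: float  # seconds since the level started
    phrase: str


def schedule(n_cycles, phrase_length=6.0, min_gap=1.0, max_gap=5.0, seed=None):
    """Build a play list of phrases separated by random silences."""
    rng = random.Random(seed)
    events = []
    t = 0.0
    last = {}  # last phrase picked per slot
    for _ in range(n_cycles):
        for slot in SEQUENCE:
            pool = PHRASES[slot]
            # Avoid replaying the same variation twice in a row when there is a choice.
            choices = [p for p in pool if p != last.get(slot)] or pool
            phrase = rng.choice(choices)
            last[slot] = phrase
            events.append(Event(t, phrase))
            # The next phrase starts after this one ends, plus a random gap.
            t += phrase_length + rng.uniform(min_gap, max_gap)
    return events


for e in schedule(2, seed=7):
    print(f"{e.start:6.2f}s  {e.phrase}")
```

Because the gaps are random and the variations rotate, the same combination of phrase and timing rarely recurs, which is what makes the result feel endless.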

In a way, this creates infinite music. Random delay times naturally work best with ambient music that is rubato (free in tempo, without a steady pulse). The concept could also have been implemented with several layers playing at once, and we could technically have used parameters to change the intensity of the music on the fly when needed. But for this game, I kept it simple.
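
For the layered variant mentioned above, one common approach is to map a single intensity value to the volume of each layer, so that higher intensity brings in more layers. In Wwise this would typically be an RTPC driving a volume curve on each layer; the Python below only emulates such curves. The thresholds, the 0.2 fade width, the -96 dB floor, and the function name are assumptions for illustration.

```python
def layer_volumes_db(intensity, thresholds, fade_width=0.2, floor_db=-96.0):
    """Map an intensity in [0, 1] to a volume in dB for each layer.

    A layer sits at floor_db below its threshold and reaches 0 dB once the
    intensity exceeds threshold + fade_width; in between it fades linearly in dB.
    """
    intensity = min(max(intensity, 0.0), 1.0)
    volumes = []
    for threshold in thresholds:
        x = (intensity - threshold) / fade_width
        x = min(max(x, 0.0), 1.0)  # clamp the fade position to [0, 1]
        volumes.append(floor_db + x * (0.0 - floor_db))  # floor_db at x=0, 0 dB at x=1
    return volumes


# Hypothetical thresholds for three layers.
print(layer_volumes_db(0.5, [0.0, 0.3, 0.6]))
```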

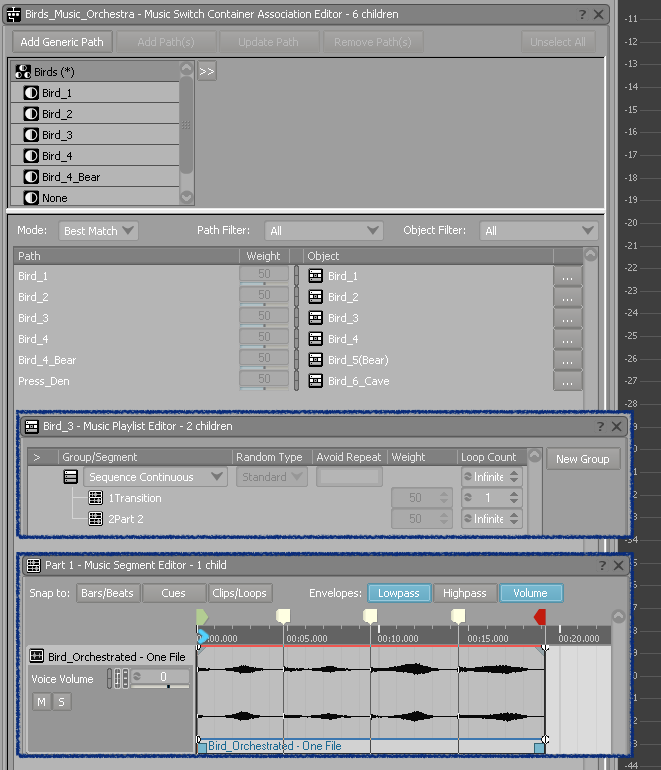

Developing Music

For the second level, we wanted the music to evolve as the player progresses through 4 stages. I didn't want it to sound like a mere combination of musical layers, but like music that really evolves, with transpositions. So I composed 4 pieces of music in different but related keys; each one loops and then moves to the next section through a transition piece. The objective was to give players the sense that they are prompting the music to evolve as they tap through the level. The transitions had to feel snappy enough to keep up with the player's movement within the game, so I created extra custom trigger points for the transition pieces, giving the music more places where a transition could begin.
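
Why extra trigger points make transitions feel snappier can be shown with a small calculation: a requested transition has to wait for the next trigger point in the looping piece, so more trigger points mean a shorter worst-case wait. In Wwise this timing is handled by the music transition rules; the Python below only models the waiting time, and the 16-second loop, the trigger positions, and the function names are hypothetical.

```python
import bisect


def next_transition_start(request_time, trigger_points, loop_length):
    """Return when a transition requested at request_time would begin.

    trigger_points are positions (seconds) inside a loop of loop_length seconds.
    The result is on the same continuous timeline as request_time.
    """
    points = sorted(trigger_points)
    loop_start = request_time - (request_time % loop_length)
    pos = request_time - loop_start
    i = bisect.bisect_left(points, pos)
    if i < len(points):
        return loop_start + points[i]
    # No trigger point left in this pass: use the first one in the next loop.
    return loop_start + loop_length + points[0]


def worst_case_wait(trigger_points, loop_length):
    """Largest gap between consecutive trigger points, including the wrap-around."""
    points = sorted(trigger_points)
    gaps = [b - a for a, b in zip(points, points[1:])]
    gaps.append(loop_length - points[-1] + points[0])
    return max(gaps)


LOOP = 16.0  # hypothetical loop: 8 bars of 4/4 at 120 BPM
print(worst_case_wait([0.0], LOOP))                  # 16.0 (loop start only)
print(worst_case_wait([0.0, 4.0, 8.0, 12.0], LOOP))  # 4.0 (a trigger point every 2 bars)
```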

Another element we added was to give every object and animal a sound effect and a musical voice. For example, touching a squirrel sitting in a tree makes it climb up the tree while a violin plays an ascending major scale; when the squirrel slides down, the scale plays descending. Because some of the environment sounds also follow the music's transpositions, they were easy to control by driving them with the same triggers as the music.
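
Both the squirrel's scale and the transposed environment sounds can be derived from the current stage's key. The sketch below builds an ascending or descending major scale as MIDI note numbers and computes a pitch offset in cents (the unit of Wwise's Pitch property) for a sound that follows the music's transpositions. The four stage keys and all function names are hypothetical; the article only says the keys are different but related.

```python
# Whole (2) and half (1) steps of a major scale.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]

# Hypothetical stage keys as MIDI roots: C4, G4, D4, A4 (a chain of fifths,
# folded back into one octave). The actual keys are not given in the article.
STAGE_ROOTS = {1: 60, 2: 67, 3: 62, 4: 69}


def major_scale(root, ascending=True):
    """One octave of a major scale starting on MIDI note `root`."""
    notes = [root]
    for step in MAJOR_STEPS:
        notes.append(notes[-1] + step)
    return notes if ascending else notes[::-1]


def squirrel_scale(stage, climbing):
    """Ascending while the squirrel climbs, descending while it slides down."""
    return major_scale(STAGE_ROOTS[stage], ascending=climbing)


def transposition_cents(stage, reference_stage=1):
    """Pitch offset in cents for a sound authored in the reference stage's key."""
    return 100 * (STAGE_ROOTS[stage] - STAGE_ROOTS[reference_stage])


print(squirrel_scale(1, climbing=True))   # [60, 62, 64, 65, 67, 69, 71, 72]
print(squirrel_scale(1, climbing=False))  # [72, 71, 69, 67, 65, 64, 62, 60]
print(transposition_cents(2))             # 700
```

Driving the scale and the environment offsets from one stage value mirrors the idea in the text: one trigger changes the stage, and everything that depends on the key follows.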

Interactive Music

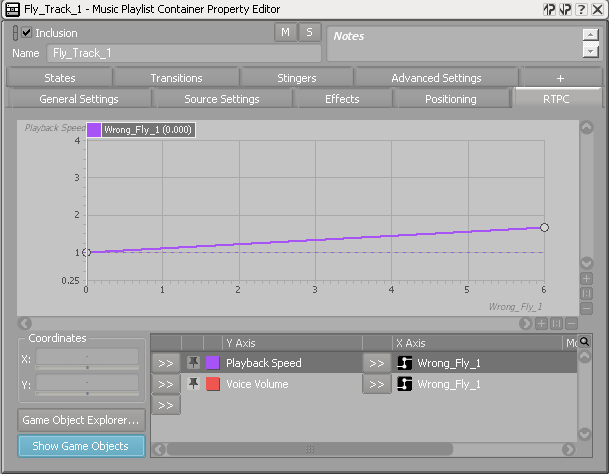

For the third level, we wanted players to feel that they are shaping the music while solving different puzzles. The second puzzle in this level consists of placing 6 fireflies in their 6 correct alcoves, so I created 6 strong music layers, one for each firefly. The layers start playing in sync with their volume turned all the way down, and when you place a firefly in its alcove, the volume of its layer is turned up. Of course, this only happens as confirmation of correct gameplay, when the firefly is placed in the correct alcove. To make it more interactive, I created several layers for each instrument for when its firefly is placed in a 'wrong' alcove, and to make these more interesting, I used a pitch shift that changes depending on which 'wrong' alcove the firefly is placed in.

As a result, a layer in a wrong alcove plays at the wrong pitch and tempo, and once its firefly is put in the correct alcove, the layer plays in sync with the other layers. A nice side effect was lower memory usage, since only one sound file was needed per layer. I did, however, have to run two streams of that file: a 'wrong' one that could be manipulated, and a 'correct' one that stays in sync with the other correct layers. To emphasize when a music layer was correct, I ended up creating a couple of extra layers that were also turned up whenever a firefly was placed in the right alcove.
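
The firefly logic can be summarized as a small state function: for each firefly, decide which of its two streams is audible and how far the 'wrong' stream is pitch-shifted. The sketch below assumes the pitch shift works by resampling (which is why pitch and tempo change together, as the text describes), that the 'wrong' offset grows by 100 cents per alcove away from the correct one, and that -96 dB counts as silent. These values and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional

SILENT_DB = -96.0     # hypothetical "muted" level
CENTS_PER_STEP = 100  # hypothetical: 1 semitone per alcove away from the correct one


@dataclass
class LayerState:
    correct_db: float  # volume of the stream that stays in sync with the other layers
    wrong_db: float    # volume of the separately streamed, pitch-shifted copy
    wrong_cents: int   # pitch offset applied to the "wrong" stream


def playback_rate(cents):
    """Speed factor of a resampling pitch shift; pitch and tempo change together."""
    return 2.0 ** (cents / 1200.0)


def layer_state(alcove: Optional[int], correct_alcove: int) -> LayerState:
    if alcove is None:  # firefly not placed yet: both streams muted
        return LayerState(SILENT_DB, SILENT_DB, 0)
    if alcove == correct_alcove:  # correct: the in-sync stream is turned up
        return LayerState(0.0, SILENT_DB, 0)
    cents = (alcove - correct_alcove) * CENTS_PER_STEP
    return LayerState(SILENT_DB, 0.0, cents)  # wrong: detuned, off-tempo copy


def mix(placements: Dict[int, Optional[int]], solution: Dict[int, int]):
    """Per-firefly layer states, plus the number of correctly placed fireflies
    (a count that could drive the extra emphasis layers)."""
    states = {f: layer_state(placements.get(f), solution[f]) for f in solution}
    n_correct = sum(1 for f in solution if placements.get(f) == solution[f])
    return states, n_correct


solution = {f: f for f in range(6)}  # hypothetical: firefly i belongs in alcove i
states, n_correct = mix({0: 0, 1: 3}, solution)
print(states[0])
print(states[1].wrong_cents, round(playback_rate(states[1].wrong_cents), 3))
print(n_correct)
```

Keeping the 'correct' stream running silently at all times is what lets it come in exactly in sync; the 'wrong' copy is free to drift because it is never expected to line up.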

We played around with some other concepts, such as having Unity receive the tempo from Wwise so that the player has to touch objects in time with the beat. However, because of the age range of the target audience, several puzzles had to be simplified or didn't make it into the game.
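
That beat-based idea never shipped, but the check it needs is simple: given the tempo, measure how far a touch lands from the nearest beat. How MUR would have passed the tempo from Wwise to Unity is not described, so the sketch below simply takes the tempo and the time of the first beat as inputs. The 120 ms tolerance and the function names are hypothetical.

```python
def beat_error(touch_time, tempo_bpm, first_beat=0.0):
    """Signed offset in seconds from the nearest beat (negative = early)."""
    beat_length = 60.0 / tempo_bpm
    beats = (touch_time - first_beat) / beat_length
    return (beats - round(beats)) * beat_length


def is_on_beat(touch_time, tempo_bpm, tolerance=0.12, first_beat=0.0):
    """True if the touch is within `tolerance` seconds of a beat."""
    return abs(beat_error(touch_time, tempo_bpm, first_beat)) <= tolerance


print(is_on_beat(1.02, 120))  # True: 20 ms after the beat at 1.0 s
print(is_on_beat(1.25, 120))  # False: halfway between beats
```

For a young audience, a generous tolerance would matter more than precision, which is in line with the simplifications mentioned above.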

Mixing for Tablets

The game is played on tablets, most likely without headphones, so getting MUR to sound good (and loud) on small speakers was very important.

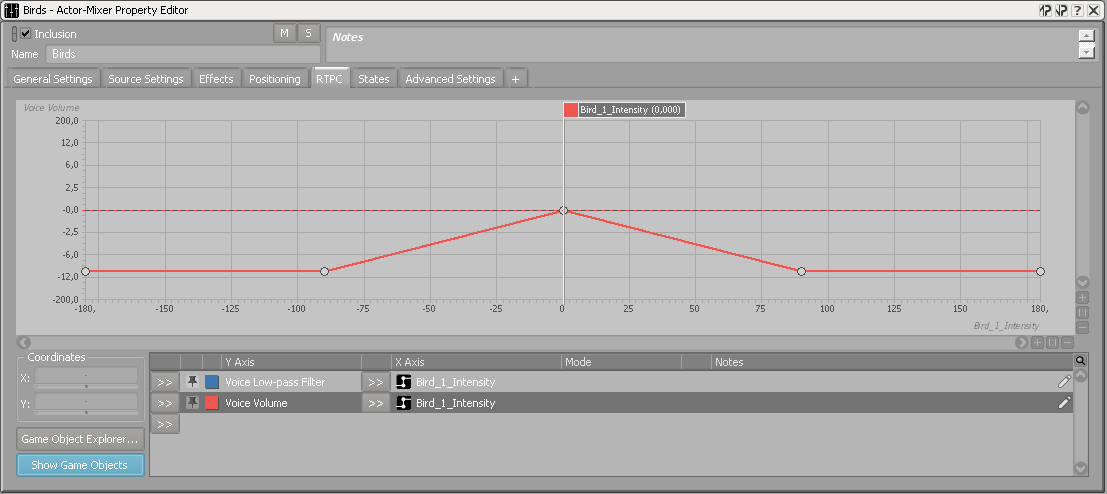

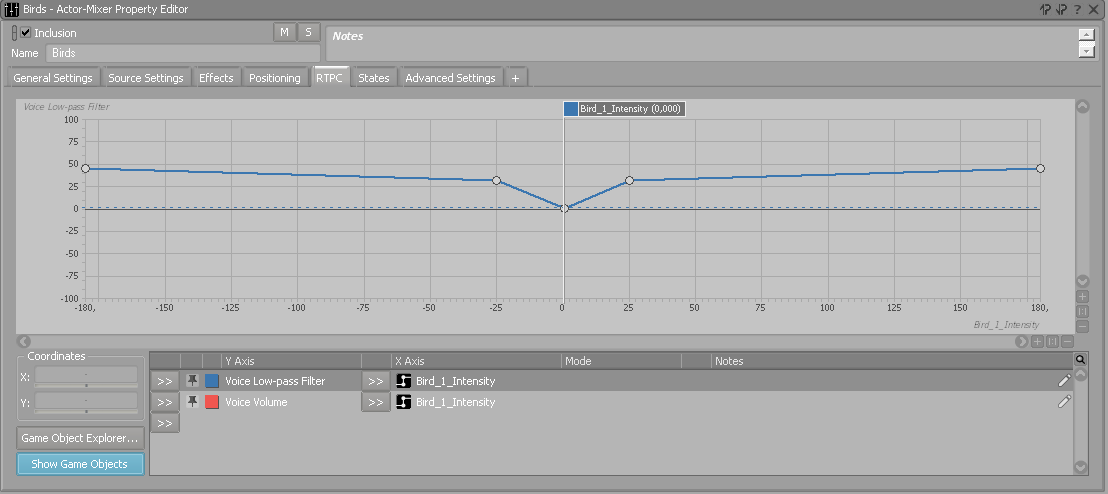

Playing over small speakers also limited what 3D sound could add to the experience. And because some of our objects needed very specific volumes depending on where the player looks and how far away the object is, I used a single RTPC bound to the built-in Azimuth parameter, instead of 3D sound, on several environmental sounds.

For example, the bird whistling in the second level changes its volume, its low-pass filtering, and how often it repeats, depending on the angle of the camera.
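
Based on the description above and the author's reply in the comments (the closer the bird is to the center of the view, the shorter the delay between chirps, with some added randomization), the mapping can be sketched as a function of azimuth. In Wwise each of these would be a curve on the Azimuth-bound RTPC; the Python below only emulates those curves. It assumes azimuth in degrees with 0 straight ahead, and every curve value (the -24 dB range, the 0 to 60 low-pass amount, the 0.3 to 5 second delays, the ±10 % jitter) is hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class BirdParams:
    volume_db: float  # 0 dB when centered, quieter off-screen
    lowpass: float    # 0 = open, higher = more filtered (hypothetical scale)
    delay_s: float    # silence before the next chirp


def bird_params(azimuth_deg, rng=random):
    # Normalize: 0.0 = dead center of the camera view, 1.0 = directly behind.
    off_center = min(abs(azimuth_deg), 180.0) / 180.0
    volume_db = 0.0 - 24.0 * off_center
    lowpass = 60.0 * off_center
    base_delay = 0.3 + 4.7 * off_center  # chirps almost constantly when centered
    jitter = rng.uniform(-0.1, 0.1) * base_delay  # +/-10 % randomization per chirp
    return BirdParams(volume_db, lowpass, base_delay + jitter)


rng = random.Random(42)
for az in (0, 45, 120):
    print(az, bird_params(az, rng))
```

Using one parameter for all three curves keeps them consistent: turning the camera toward the bird makes it louder, brighter, and busier at the same time.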

Mixing through the tablet's speakers (using Wwise's Remote Connection) while tweaking RTPCs and volumes made the process a lot easier.

Another Wwise feature I used a lot was monitoring the number of voices playing simultaneously on the tablet. With the musical games, for example, it would be very bad if sounds got cut off, since that would ruin the sync of the musical layers. I found that up to 30 musical layers could play simultaneously without any problems, but in the end I kept it below 20 as a precaution. We tested on many tablets and found this to be an amount they could handle without us worrying about sounds dropping out. Needless to say, I also set the priority of sound effects lower than that of music for most levels.
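
Wwise handles voice limiting and priorities itself; the Python below only models the rule described above so the budget is easy to reason about: with a cap of 20 voices, the highest-priority requests are kept, so giving music a higher priority than sound effects means effects are the ones dropped. The priority numbers (80 for music, 50 for effects) and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

MAX_VOICES = 20  # the budget settled on after testing


@dataclass(frozen=True)
class Voice:
    name: str
    priority: int  # higher = more important (hypothetical scale)


def audible_voices(requested: List[Voice], limit: int = MAX_VOICES) -> List[Voice]:
    """Keep at most `limit` voices, highest priority first; ties keep request order."""
    ranked = sorted(range(len(requested)), key=lambda i: (-requested[i].priority, i))
    kept = set(ranked[:limit])
    # Return the survivors in their original request order.
    return [v for i, v in enumerate(requested) if i in kept]


music = [Voice(f"layer{i}", 80) for i in range(18)]
sfx = [Voice(f"sfx{i}", 50) for i in range(5)]
playing = audible_voices(sfx + music)
print(len(playing), [v.name for v in playing if v.name.startswith("sfx")])
```

Even though the effects were requested first, all 18 music layers survive and only the surplus effects are dropped, which is the behavior that protects the sync of the musical games.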

Comments

Rudy Trubitt

February 06, 2018 at 02:38 pm

Nice work!

Narie Juerian

February 06, 2018 at 10:21 pm

Wow, Jesper, that's really cool -- a perfect example of how Wwise can contribute to one's interactive music system! I really enjoyed the in-game videos and your explanation as well.

Aleksei Kabanov

February 07, 2018 at 07:08 am

Wow, it's really good work!

Niko Huttunen

February 08, 2018 at 06:35 am

Hi, great post and thank you for sharing it. I was wondering what software you used when mixing through the iPad's speakers? I tried to search for an app called Remote Connection, but no luck. Previously I've been using Airfoil, but options are always welcome. Best, Niko

Dr. Reacto

February 08, 2018 at 10:59 am

I loved reading this article and learning how you used Wwise to create dynamic and interactive music in an AR landscape. I create material for children at http://reactoryfactory.com and http://reactoryfactory.tv and would love to explore these concepts. I was also evaluating using Wwise in a new university program, and now I'm sold after seeing this article. Instead of just being used in game design, it is being used to enrich media and educate young children. Also, mixing through the tablet speaker makes so much sense and should be so obvious, but it could easily be overlooked, as so many recording professionals are used to using near fields and grok boxes. Great article.

Jesper Ankarfeldt

February 09, 2018 at 01:03 pm

Thanks Rudy.

Jesper Ankarfeldt

February 09, 2018 at 01:05 pm

Thanks Narie. Yes, Wwise is a game changer when it comes to more freedom in the sound development, and for me a no-brainer, even on smaller projects.

Jesper Ankarfeldt

February 09, 2018 at 01:14 pm

Hi Niko. My pleasure. It's a built-in function :) There's a button on the top bar called "Remote..." Your computer and the tablet have to be on the same Wi-Fi network. I also used the built-in limiter in Wwise to make the softer parts louder without being as afraid of the loud parts. Best

Jesper Ankarfeldt

February 09, 2018 at 01:32 pm

Thanks Dr. Reacto. I'm really happy the post gave you some input and made sense. For me, if I can convince my developer, Wwise is a no-brainer even on smaller projects. Coming from the sound world, I find that Wwise makes the workflow with sound so much easier, and it also gives sound designers and musicians with limited or no scripting skills a chance to be a bigger, more important part of the team. With the Remote Connect feature, you also get control of your sound, rather than leaving it at the mercy of programmers.

Jesper Ankarfeldt

February 09, 2018 at 02:19 pm

Thank you Aleksei.

Donovan Seidle

February 09, 2018 at 10:49 pm

I’m so inspired by this project!!! It’s just the sort of work I want to be doing! I had a question about the intensity and repetition of the birds, and how specifically the repetition element was handled: decreasing rtpc affecting a delay of a sound container? Super neat. I LOOOVE the firefly implementation.

Niko Huttunen

February 12, 2018 at 05:13 am

Ah! Thanks! I didn't realize that you can connect Wwise also to your tablet. Cool!

Jesper Ankarfeldt

February 13, 2018 at 05:07 pm

Hi Donovan. I'm so glad you found it inspiring! Yes, I used the same RTPC with Azimuth to control the delay. The closer to the center (0) the bird object is, the shorter the delay. On top of that, I also added a bit of randomization to the delay between each sound file. You can hear it quite clearly in the second video, around 8 seconds in. When the bird is dead center, it's chirping almost constantly, and then it slows down when it's out of the camera view.

Jonas Foged Kristensen

June 29, 2019 at 02:56 am

Really inspiring work! Got me thinking in new ways to use Wwise, thanks Jesper!