Mixing voice in games can be a major logistical challenge, with thousands or even hundreds of thousands of lines, often multiplied by the number of fully localized languages.

With a “one-to-one event” VO implementation approach, where VO structure is built in the Actor-Mixer Hierarchy like other sounds, dialogue can become the largest single feature in a Wwise project, quickly dwarfing all others. This can make VO very difficult to manage and can require enormous amounts of manual work whenever there are changes of direction or new mix decisions. Time is rarely a luxury we have in the audio department.

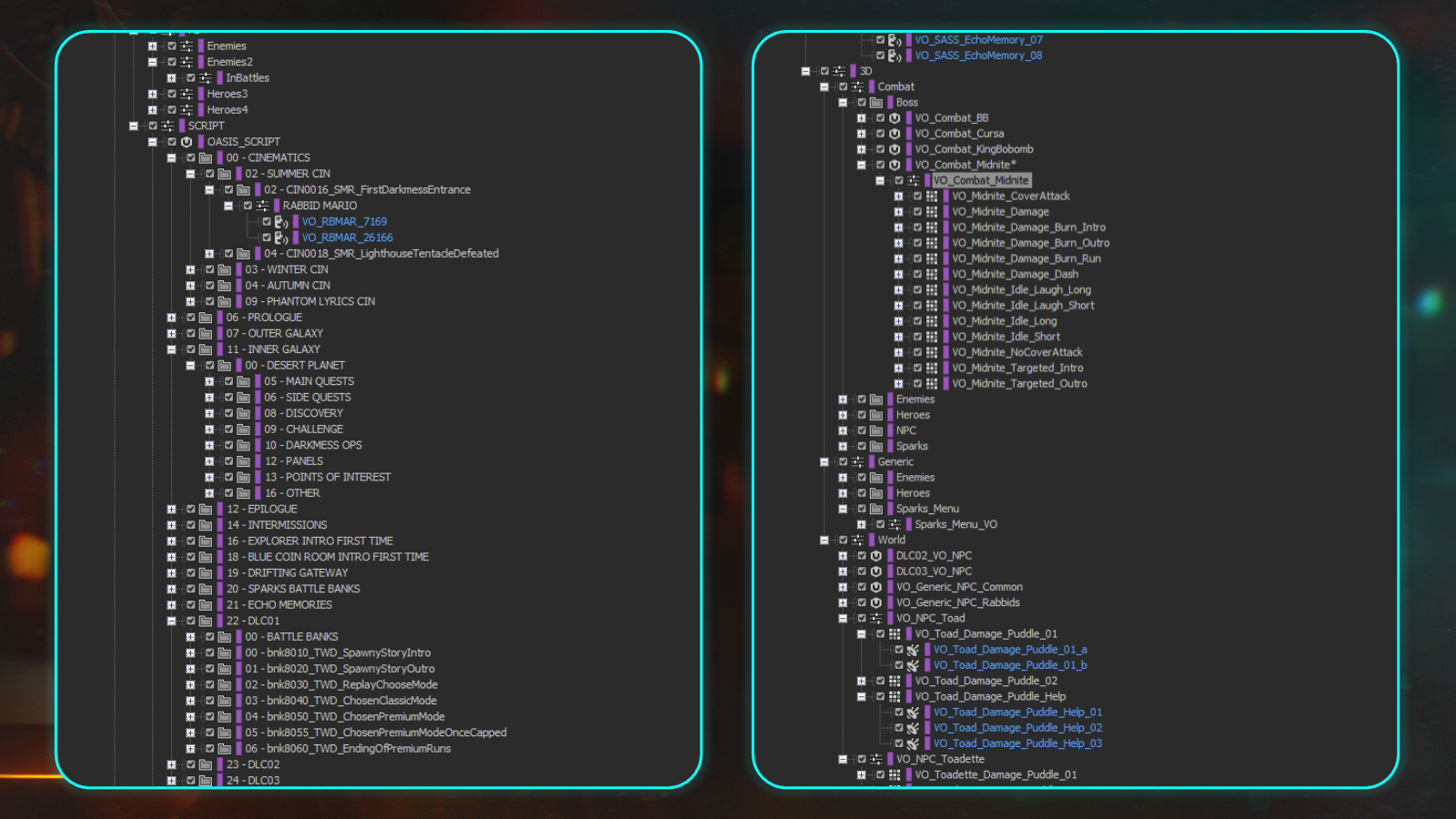

Typical one-to-one event structure, as seen in another Ubisoft Wwise project with approximately 6000 VO assets.

Fortunately, Wwise comes with a way to help us mitigate this problem. External Sources are a powerful feature that can radically reduce manual labor while providing an enormous amount of flexibility. Our Voice Design team on Star Wars Outlaws utilized them extensively in Massive Entertainment’s Snowdrop game engine, and in this article I will be exploring the pros and cons and looking at some examples from our game.

What are External Sources?

External Sources look like any other sound object in the Actor-Mixer Hierarchy with one small difference: they do not have a one-to-one relationship with a source audio file. The audio file is an “external source” which is chosen in the game engine at runtime.

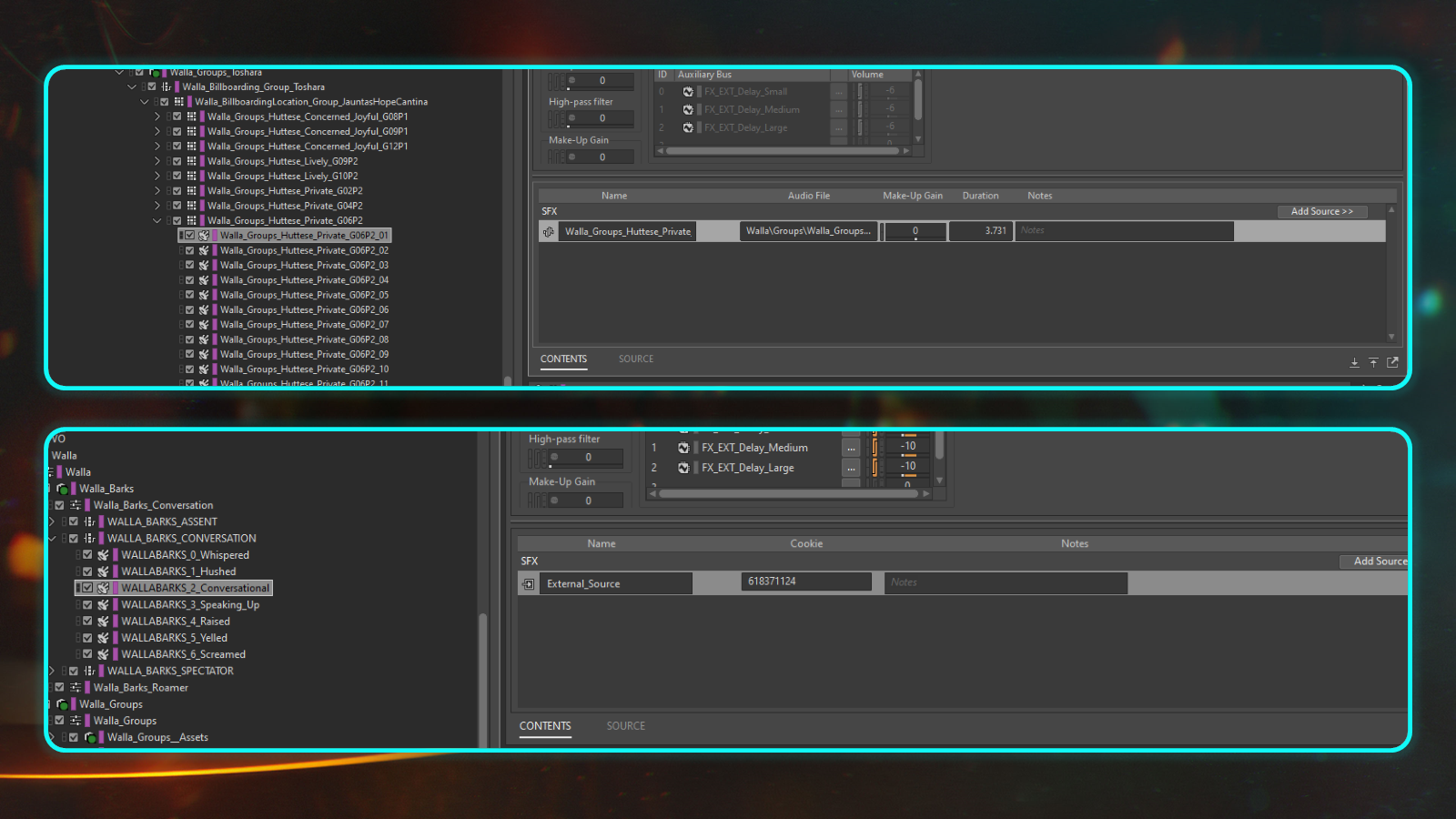

Above, a standard SFX object with an audio file reference, and below it, an External Source object with External Source plugin.

While the events themselves are generated as part of normal SoundBank generation, the audio files are not. Voice assets can still live in the Originals folder with the rest of your content (or alternatively a different location altogether), but must be converted separately from SoundBank generation.

Those assets can now be used with any External Source event for playback. In effect, this event and the sound object it references are like a “template” which any voice line can use, and can be overridden or swapped around at will.

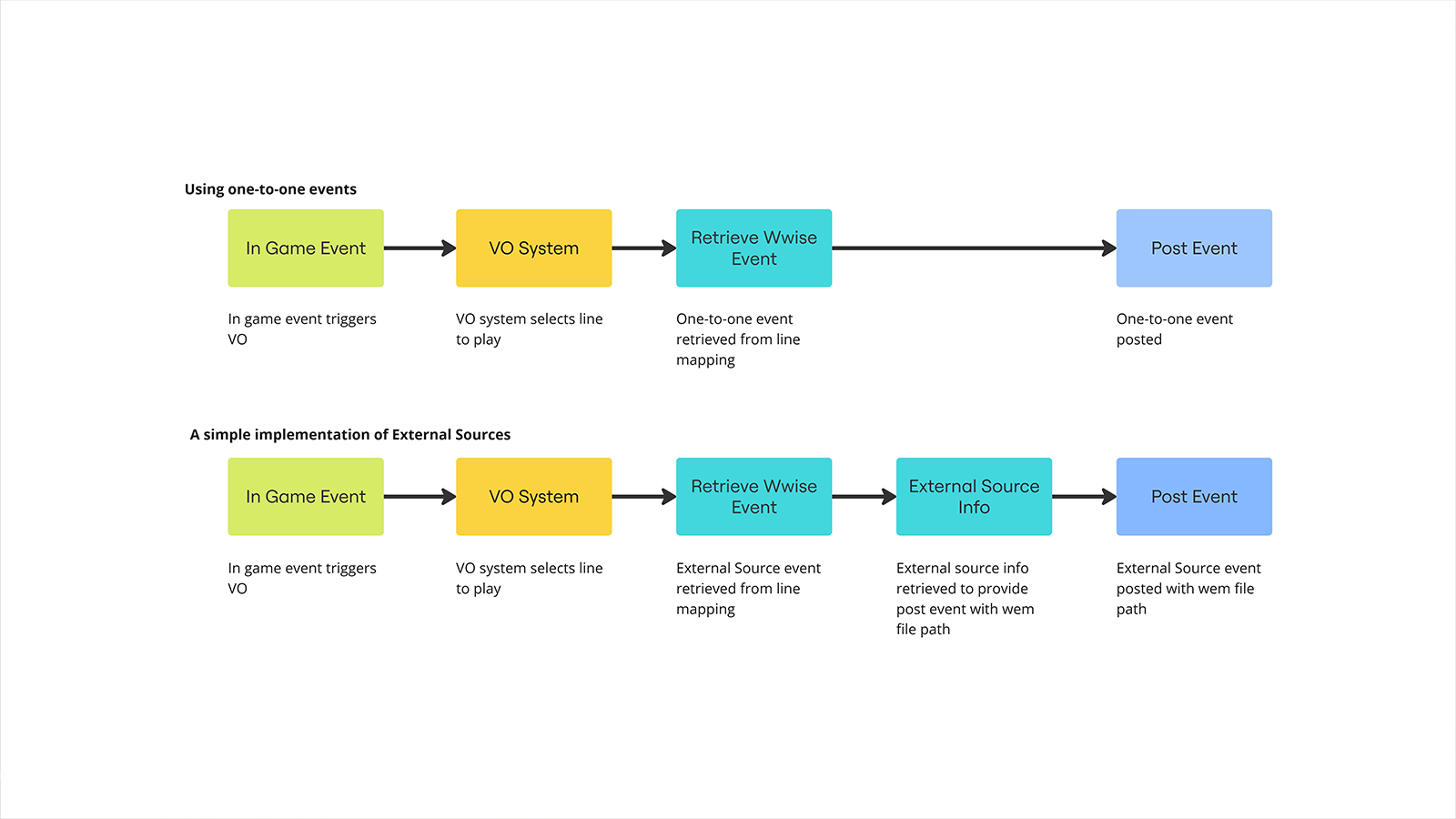

What does it take to set up External Sources and how do you use them? The simplest implementation would require the following to get started:

- A process to generate a Wwise External Sources List file: an XML file detailing the file paths of VO lines.

- A generation step, either as part of SoundBank generation or a separate process using the command line, to convert the wav files listed in the XML into wem files for streaming in game.

- A means for Voice Designers to assign a Wwise External Sources event to each line. This line and event mapping could be done in a Text Database or, at its simplest, a CSV file.

- Your game engine needs to have some sort of VO system which can select the content to play and when to play it. This is a prerequisite for External Sources to be useful. It could be a system which plays lines in story scenes in the correct order referencing the correct in-game characters, or a barks system which can be adapted to handle randomization.

- A function in your game engine to post your VO line using the External Source event defined in the Text Database; this would take the line selected by your VO system, and play the event defined in your mapping with the corresponding wem file.

An example of a simple implementation of External Sources at runtime compared to one-to-one events.
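To make the first and third requirements more concrete, here is a minimal Python sketch that reads a line-to-event mapping from CSV and writes an External Sources List from it. It is only an illustration under stated assumptions: the line IDs, paths, event names, and the `VO_Vorbis` conversion name are hypothetical, and the `ExternalSourcesList` / `Source` element and attribute names reflect my understanding of the list format, so check them against the documentation for your Wwise version.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical line-to-event mapping, as it might be exported from a text database.
MAPPING_CSV = """line_id,wav_path,event
VO_0001,English/Kay/VO_0001.wav,Play_VO_Scripted_3D
VO_0002,English/Nix/VO_0002.wav,Play_VO_Radio
"""


def load_mapping(csv_text):
    """Return {line_id: row} parsed from the CSV text."""
    return {row["line_id"]: row for row in csv.DictReader(io.StringIO(csv_text))}


def build_sources_list(mapping, root_dir, conversion):
    """Build the list document with one <Source> entry per VO line."""
    # Element and attribute names are assumptions about the list schema.
    root = ET.Element("ExternalSourcesList", SchemaVersion="1", Root=root_dir)
    for row in mapping.values():
        ET.SubElement(root, "Source", Path=row["wav_path"], Conversion=conversion)
    return ET.tostring(root, encoding="unicode")


mapping = load_mapping(MAPPING_CSV)
xml_text = build_sources_list(mapping, "Originals/Voices", "VO_Vorbis")
print(xml_text)
```

The same mapping serves both sides of the pipeline: the XML feeds the separate conversion step, while the `event` column is what the game engine would look up when it posts a line.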

It is worth noting that External Sources are used with Sound SFX objects and not Sound Voice objects. Sound Voice objects exist to support localized audio languages; with External Sources, language selection can instead be handled on the game engine side, at the External Source Info step in the flowchart seen above.
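As a rough illustration of that engine-side step, the Python sketch below resolves a line ID and language to a converted file and pairs it with the event from the mapping. Everything here is hypothetical (the folder layout, the `VO_External` cookie name, the event names), and `ExternalSourceInfo` is only a stand-in: in the Wwise SDK the equivalent data is carried by the `AkExternalSourceInfo` structure, whose fields this class does not attempt to mirror.

```python
from dataclasses import dataclass


@dataclass
class ExternalSourceInfo:
    """Stand-in for the data an engine passes to Wwise alongside the event."""
    cookie: str    # identifies which External Source slot to fill
    wem_path: str  # converted file to stream for this playback


# Hypothetical mapping of line IDs to External Source events.
LINE_EVENTS = {"VO_0001": "Play_VO_Scripted_3D", "VO_0002": "Play_VO_Radio"}


def resolve_wem_path(line_id, language):
    """Localization happens here: the language only changes the folder."""
    return f"ExternalSources/{language}/{line_id}.wem"


def build_post_request(line_id, language, cookie="VO_External"):
    """Return (event name, external source info) for the selected line."""
    event = LINE_EVENTS[line_id]
    info = ExternalSourceInfo(cookie=cookie, wem_path=resolve_wem_path(line_id, language))
    return event, info


event, info = build_post_request("VO_0002", "French")
print(event, info.wem_path)
```

Because the event is independent of the language, switching localization in this sketch changes only the file path, never the Wwise structure.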

Ultimately, it depends on what your tooling looks like and how your game engine handles cinematics, gameplay scenes and barks; later on, we’ll take a look at some of the ways we’ve utilized them at Massive Entertainment.

Advantages

The first major advantage is simplified structure: instead of potentially hundreds of thousands of objects in the Actor-Mixer Hierarchy, you could have a couple of dozen, depending on how complex your needs are. While this certainly makes mixing easier, it also eliminates an enormous amount of manual labor. There are features to help with importing files, such as importing media from tab-delimited text files, but there’s always going to be manual work maintaining and refactoring that structure throughout the development cycle. The bigger the structure, the more work there’ll be and the less time you’ll be able to dedicate to creative tasks.

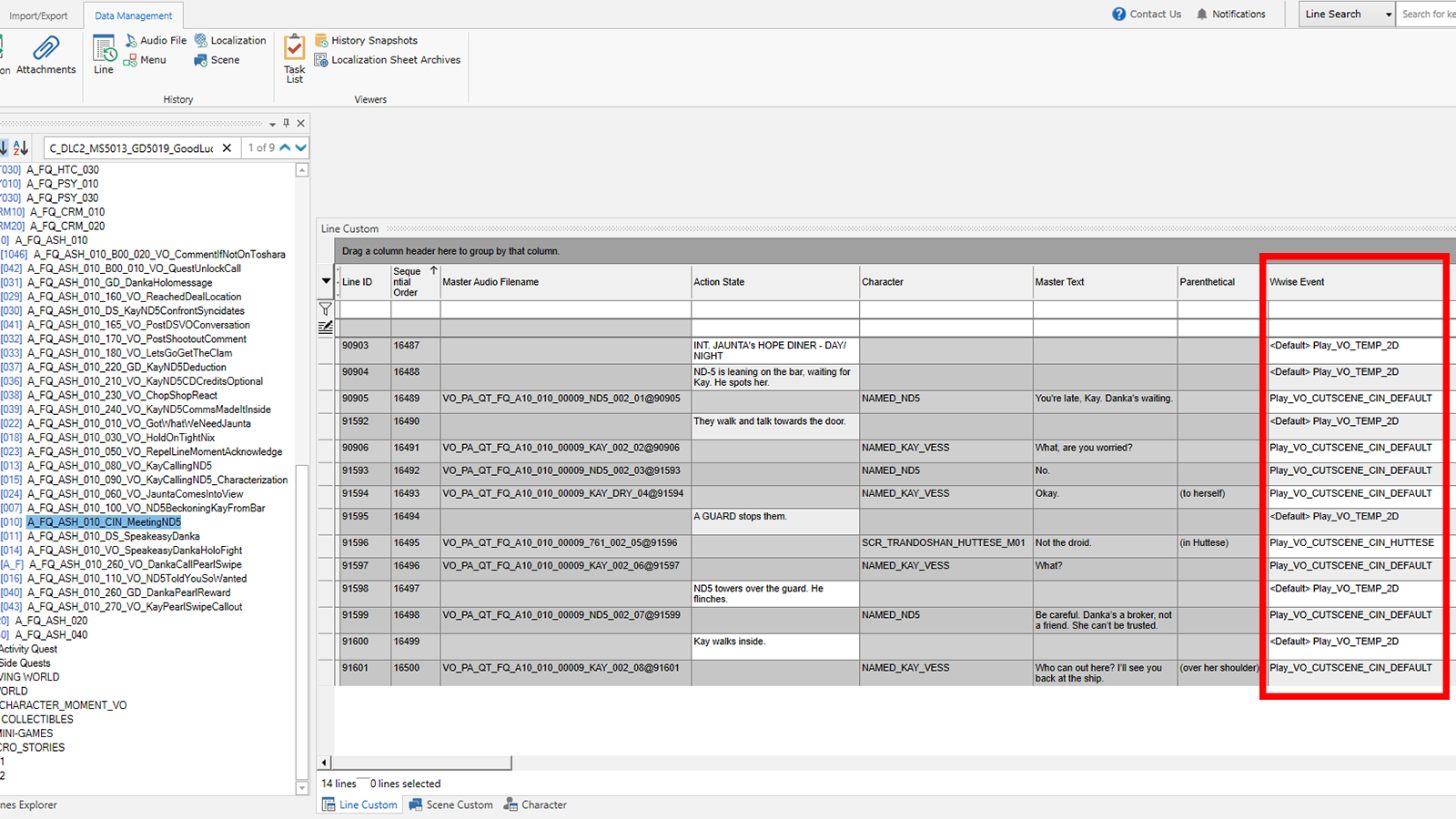

External Source Events can be used as prefab behavior “templates” applied on a line by line basis, as seen highlighted here in Ubisoft’s Oasis Text Database.

This leads us neatly to the next point: iteration. Having lightweight structure enables us to be nimbler, and this greatly speeds up creative decision making. While you can make some precise adjustments at runtime using one-to-one events, broader structural changes which necessitate a complete overhaul can be really time consuming without proprietary tooling to help automate the process.

Inheritance is a powerful feature in Wwise and it is an important tool in managing complexity at scale. This can become difficult to manage manually with a one-to-one event approach to VO implementation due to its large hierarchies of content, and it’s easy to get yourself in a tangle by overriding somewhere deep in the hierarchy and losing track of what is handled where. We’re all human, and where there’s lots of manual work you can guarantee there’ll be a lot of human error. No one is perfect and the larger your VO structure is, the more likely this will become a major problem. Worse still, with large Actor-Mixer structures, problems can remain hidden for a very long time and can be difficult to fix too. The simpler the structure, the faster it will be to fix issues.

Rebuilding your actor-mixer structure from scratch is usually something you will need to do at least a couple of times during a project’s lifecycle, as you figure out what your mix and pre-mix needs are and the best way of managing and organizing your VO. Having a lightweight structure means this becomes a much smaller task, and something that could feasibly be done at a relatively late stage in production if necessary.

Lightweight structure also allows for more regular and more accurate “root and branch” reviews, to ensure everything is where it should be; when you fix an issue, you fix it for every VO line that uses that External Source object. In my finalization pass for our voice pre-mix on Star Wars Outlaws, I checked through every value on every structure before handing over to our Audio Director, Simon Koudriavtsev. With eighty-five thousand VO lines in our game I would not have been able to do this with a one-to-one events approach.

There are also circumstances where you might want to re-use the same content with radically different mix behaviors. In a multiplayer or co-op game you may need to play the same content from two very different perspectives. With a one-to-one event approach or even Dynamic Dialogue, this means creating copies of your structure, which can become a nightmare if you need to build additional structure, refactor existing structure, or even rebuild from scratch. If nothing ever changed then this wouldn’t be a problem, but this is game development we’re talking about!

The moment you create two or more discrete copies of your project structure, you’re making a long-term commitment to do two or more times as much maintenance. External Source Events allow us to use the same base assets but swap the events at runtime depending on the context. On Star Wars Outlaws, we used this extensively for “generic” barks: multi-purpose VO lines to allow us to plug gaps in gameplay feedback or save writing and recording time by generalizing similar situations.

External Sources can also allow the same content to be used with different behavior, removing the need to copy-paste structure in the Actor-Mixer Hierarchy.
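A small Python sketch of the idea, assuming a hypothetical set of perspectives and event names: the converted asset stays the same, and only the External Source event chosen for each listener changes. This is not the Snowdrop implementation, just one way the selection could look.

```python
# Hypothetical mapping from listener perspective to External Source event.
PERSPECTIVE_EVENTS = {
    "speaker": "Play_VO_Bark_FirstPerson",
    "teammate": "Play_VO_Bark_Radio",
    "opponent": "Play_VO_Bark_3D",
}


def event_for(perspective, fallback="Play_VO_Bark_3D"):
    """Choose the event for a perspective, falling back to plain 3D playback."""
    return PERSPECTIVE_EVENTS.get(perspective, fallback)


def plan_playback(wem_path, perspectives):
    """One converted asset, one event choice per listener perspective."""
    return [(event_for(p), wem_path) for p in perspectives]


plan = plan_playback("ExternalSources/English/BARK_RELOAD_01.wem", ["speaker", "opponent"])
for event, path in plan:
    print(event, path)
```

Adding a new perspective in this sketch means adding one event and one dictionary entry, rather than duplicating a branch of the hierarchy.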

Finally, project load times in the Authoring Tool can be massively impacted by the amount of Actor-Mixer structure in the project. If you are using a one-to-one event approach for your VO, it may be a major cause of long wait times whenever you open the project or generate SoundBanks, which is a significant quality-of-life problem for the audio team.

Disadvantages

There are always downsides to any implementation choice and while External Sources come with enormous benefits, there are certainly disadvantages as well. The first and most important is that you become more programmer-dependent. As External Sources do not work out of the box, a new system needs to be set up and maintained and that overhead could be significant if you do not have enough audio programmer support.

Maintenance is an important subject to touch on: using External Sources means having a more complex VO pipeline, which introduces more points of failure. In effect, we are trading the greater possibility of human error that comes with one-to-one events, as discussed earlier, for a system with more components and therefore more opportunities for breakage.

With External Sources not being in common use across the audio department, VO implementation becomes opaque to the wider audio team and that could present a problem for Leads and Directors if you need to reassign staff to provide extra VO support. I’ve worked on multiple projects using External Sources over the last seven years now and there have been many times when a confused Sound Designer reached out to me asking where the VO is!

If your project is reliant on Wwise to provide your VO system needs such as context using Dynamic Dialogue or random container behavior for barks, then you are certainly at a disadvantage. If you use Dynamic Dialogue to provide your barks system needs for instance, then having to build a replacement and develop other technical solutions for randomization is going to increase your startup costs. However, if your project is of a certain size or degree of complexity that might require External Sources, then it is highly likely that you need more sophisticated solutions anyway.

Surgical, per-line adjustments can become difficult to do once your content is integrated. External Sources work best when you are generalizing your approach to mixing VO assets at runtime, rather than working line by line in middleware. You could operate an External Source event per line for certain features where it’s most likely to be needed, but depending on how large the use case is, it could end up counteracting many of the benefits of using External Sources.

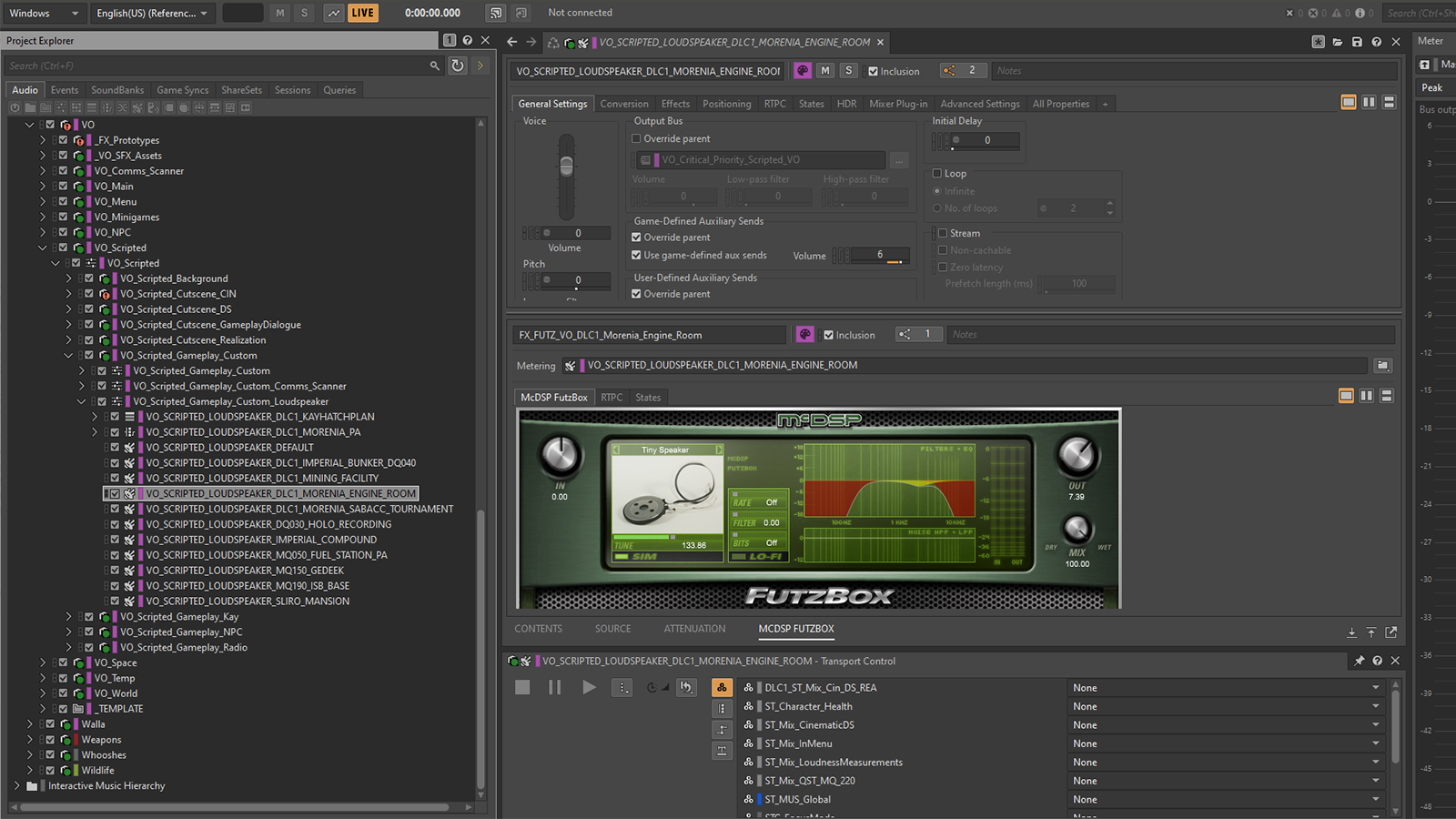

On the same subject, by virtue of External Sources being external, rendering effects in Wwise is not possible. While there’s definitely a performance hit for using runtime effects, this isn’t too much of a problem if you use them sparingly, and you can always adjust mix and pre-mix workflows to account for offline processing. Similarly, when setting up new runtime effects in Wwise, it’s much easier to do if you have content you can actually play directly from the Actor-Mixer Hierarchy! This is straightforward to work around and on Star Wars Outlaws we had an “FX_Prototypes” work unit with some sample lines we could work with, but it’s still an inconvenience worth mentioning.

Lastly, debugging can certainly become more complex as we no longer have the clarity of “one event, one VO line”. The Wwise Profiler now has to provide two pieces of information, the External Source sound object name and the asset name, where previously the sound object name directly reflected the asset name. The extent of this issue can depend on how External Sources have been implemented, but ultimately the complexity must live somewhere; everything is a tradeoff.

Approaches & Examples

I would say there are two main approaches toward using External Sources, the first of which I’ll term “Line Based”, where the event is set on a granular, per-line basis in a Text & Dialogue Database. The second is a “Feature Based” approach, where it is more effective to manage which event is used on a broader context basis at the feature level. On Star Wars Outlaws we used both.

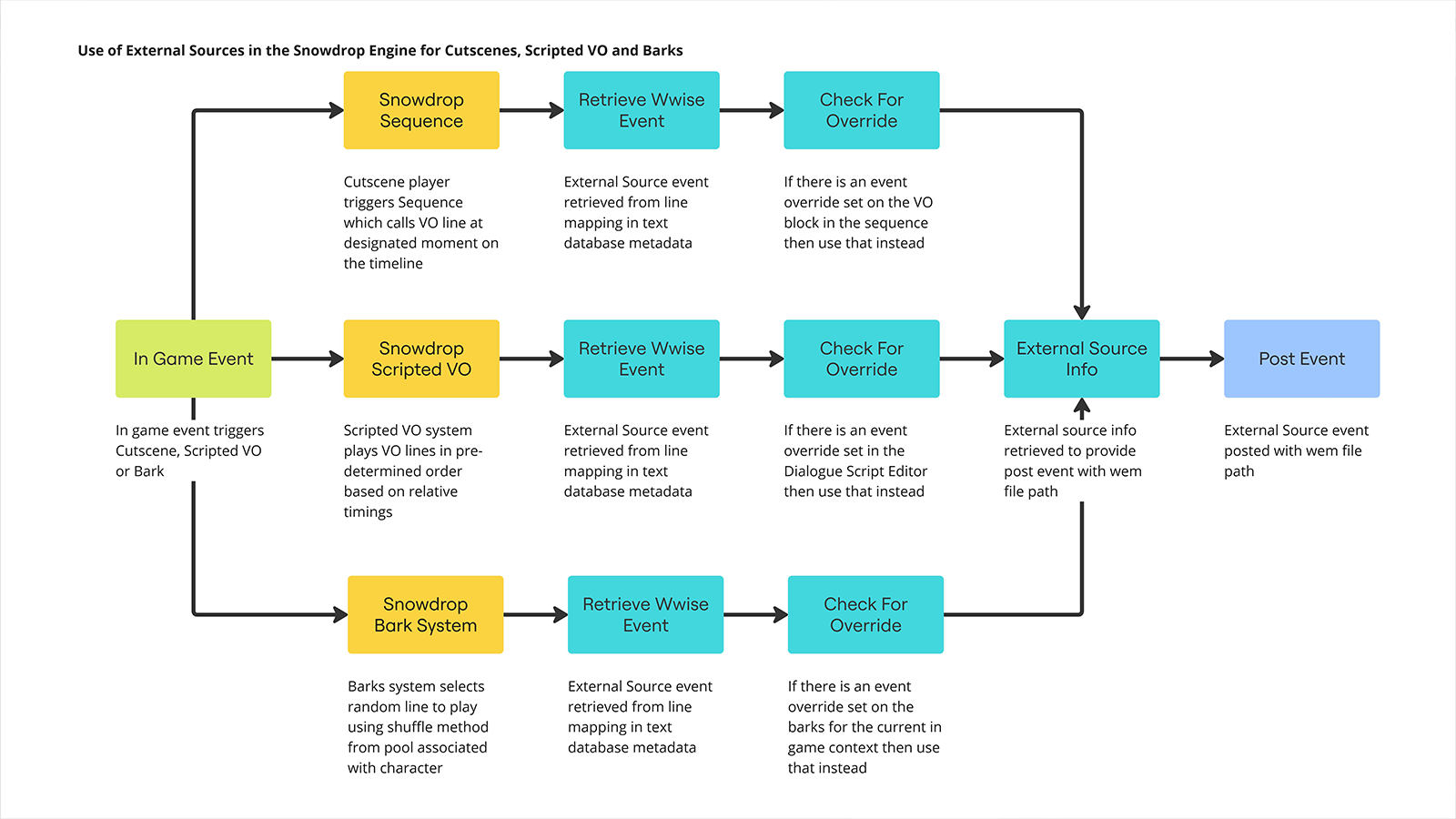

A visualization of how External Sources are used at runtime in the Snowdrop Engine.

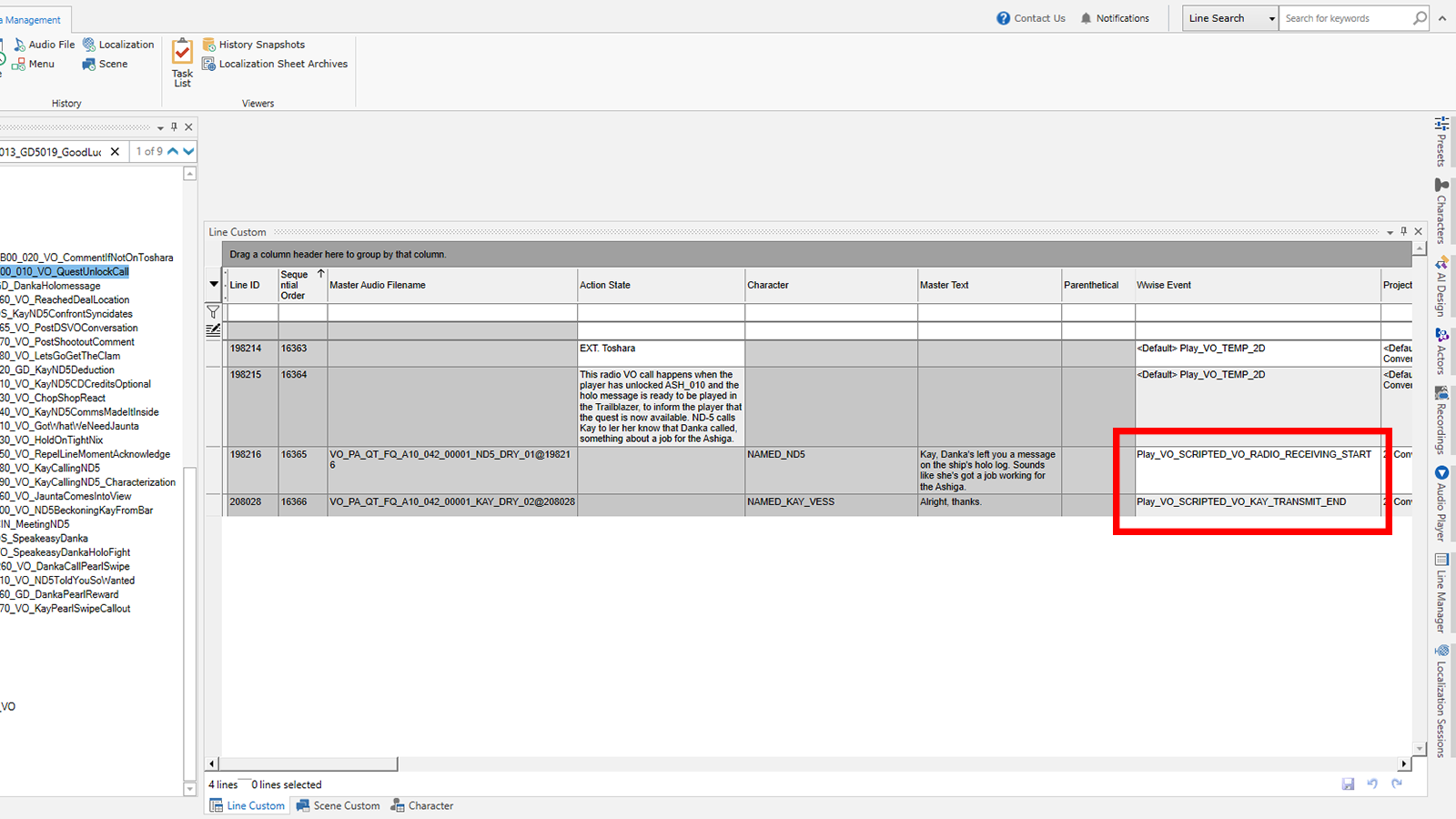

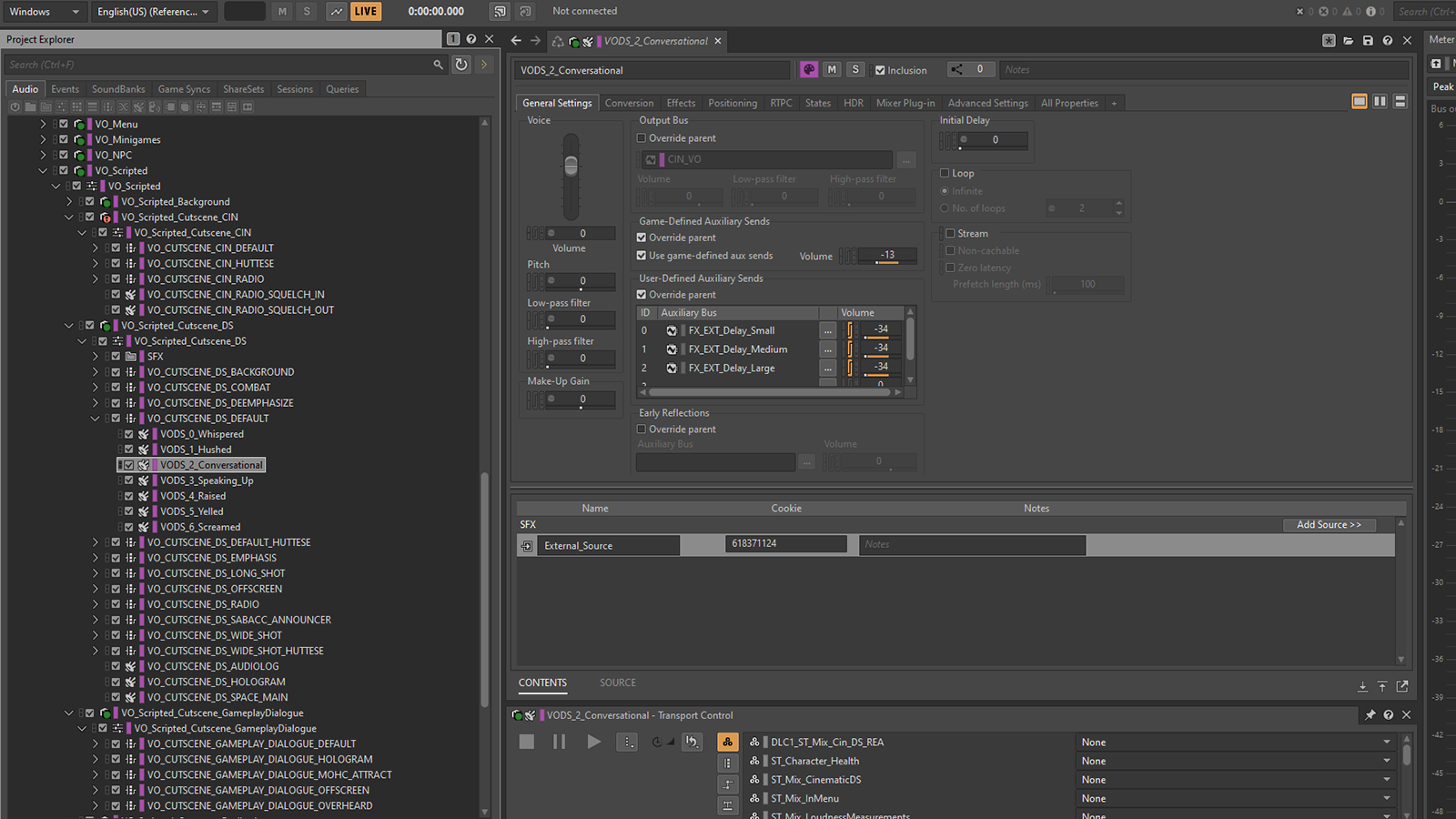

For Scripted VO (our scenes which are played in open gameplay where the player has control over our protagonist), we adopted a Line Based approach. Voice Designers would set these events while making passes on the game’s quests and other story content. As our scenes would only ever play in a single context, this provided us with the fastest way to apply specific audio behavior to each line.

In a Line Based approach, an External Source Event is set on a per line basis in the Text Database, as seen here with a radio conversation in Star Wars Outlaws.

The vast majority of our FX processing, such as loudness or vocal FX, was handled through mastering and baked into our assets instead of being handled at runtime using Wwise effects. This allowed us to generalize a great deal with our strategy for using External Sources. However, not everything can be fixed in the source VO assets, and some things are just better handled at runtime with a custom event.

Wherever we bumped into a problem that couldn’t be resolved with the existing suite of External Source events or through baked-in FX processing and mastering, Voice Designers could quickly create, implement, test and iterate using a new event with custom behavior. Our premix and mix workflows were adapted to accommodate this approach, and mix notes were issued to Voice Designers, which were often implemented on the same day.

Though mastering can take care of a lot, not everything can be generalized at runtime and there were plenty of circumstances where custom events were needed.

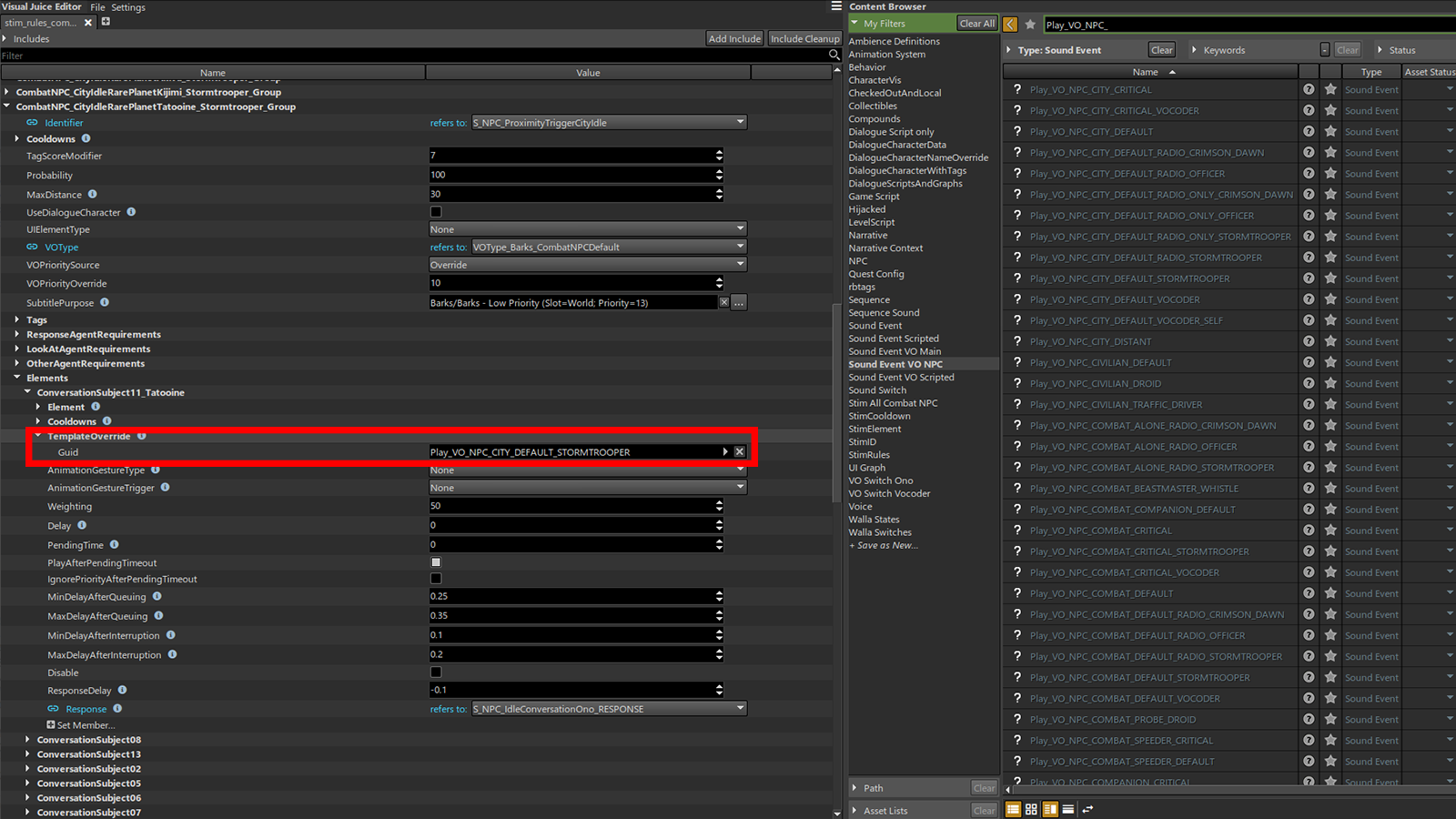

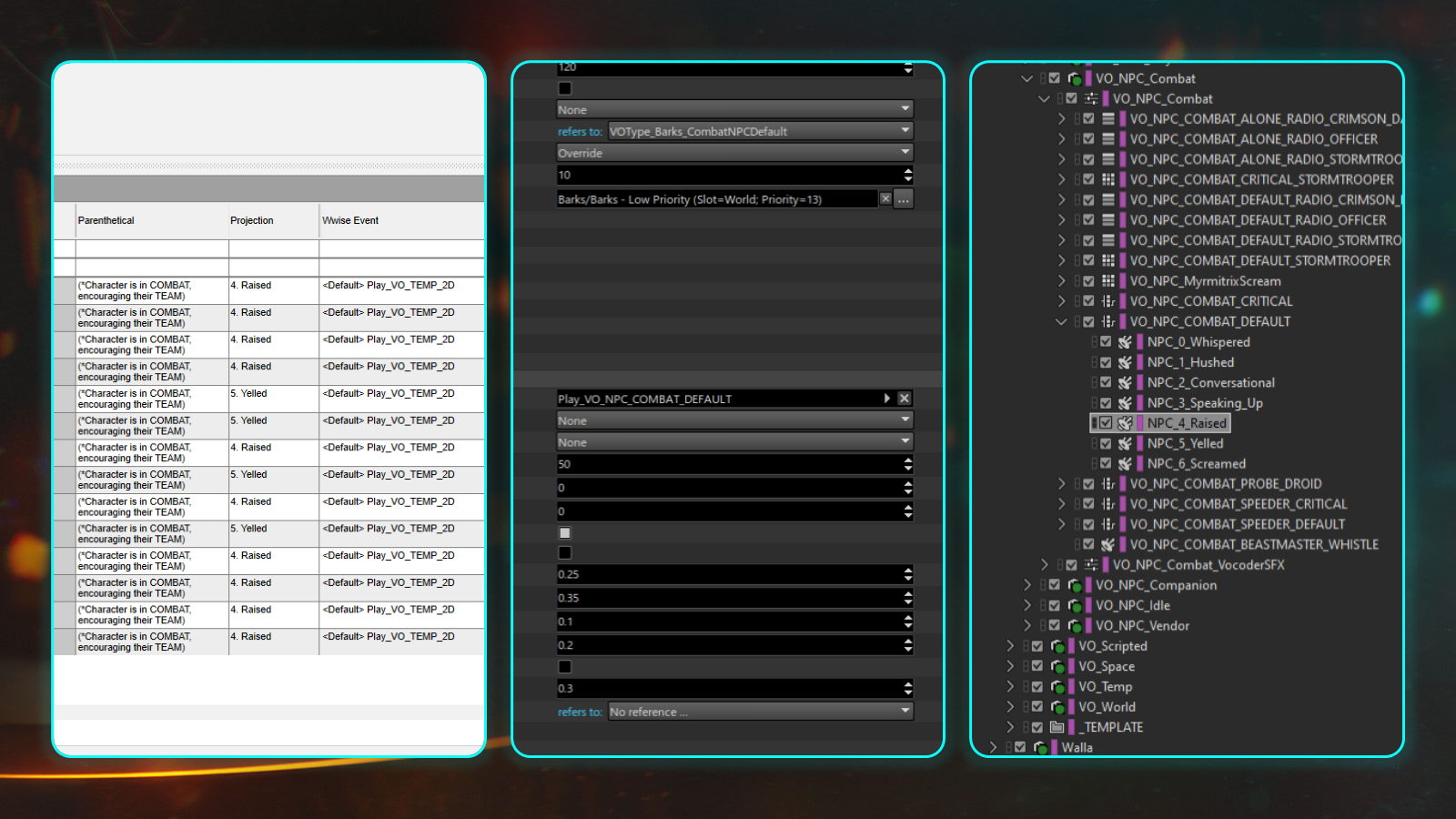

For Barks (our systemic VO which plays a line at random from a pool of content), we used a Feature Based approach. One feature unique to our Barks System is a “Template Override”, which allowed us to specify an External Source event for the pool of content we were using in a specific context, overriding the External Source event set in our Text Database. While you can certainly use Wwise State values to change audio behavior, they don’t give you full control over a number of important features, such as bus routing and positioning.
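The precedence rule can be sketched in a few lines of Python. This is an assumption-laden illustration rather than the actual Barks System: the pool contents, event names, and the `template_override` parameter are all hypothetical.

```python
import random

# Hypothetical pool; each line carries its default event from the text database.
BARK_POOL = [
    {"line_id": "BARK_ALERT_01", "event": "Play_VO_Bark_3D"},
    {"line_id": "BARK_ALERT_02", "event": "Play_VO_Bark_3D"},
    {"line_id": "BARK_ALERT_03", "event": "Play_VO_Bark_3D"},
]


def pick_bark(pool, rng, template_override=None):
    """Pick a random line; a feature-level override beats the per-line event."""
    line = rng.choice(pool)
    event = template_override or line["event"]
    return line["line_id"], event


rng = random.Random(7)  # seeded only so the sketch is repeatable
default_pick = pick_bark(BARK_POOL, rng)
radio_pick = pick_bark(BARK_POOL, rng, template_override="Play_VO_Bark_Radio")
print(default_pick, radio_pick)
```

Since the override swaps the whole event, it also swaps everything attached to the sound object behind it, including bus routing and positioning.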

Cutscenes were an interesting case study, as there was a range of implementations of the Line Based approach depending on the quality level. Simpler “Gameplay Dialogues”, which were our common interactions with NPCs, by and large used a one-size-fits-all approach, as in intimate conversations from a single camera angle Kay and the NPC she is speaking to share a perspective that can be generalized. “Dialogue Scenes” (our fully runtime cutscenes) used a range of perspective-based events reflecting the camera angle to help pull focus, while pre-rendered Cinematics with their hand-authored LCR assets almost all shared a single event as mixing had already been taken care of offline.

Cutscenes used a variety of approaches depending on the feature and gameplay flow.

External Sources can also be used in conjunction with the different types of playback containers in Wwise. Most prominent of these was our use of Switch Containers for projection. Driven by a “projection level” value from our Text Database, a switch selected an External Source object based on the projection level of a line. This allowed us to set auxiliary send levels differently, as a “Yelled” VO line would activate acoustics much more noticeably than a “Hushed” line.
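On the engine side, the projection lookup could be as simple as the following Python sketch. The switch group name, switch values, and line data are hypothetical; in a real integration the resulting pair would be set on the game object before the event is posted.

```python
# Hypothetical switch group and values; real names come from your Wwise project.
SWITCH_GROUP = "VO_Projection"
SWITCH_VALUES = {"hushed": "Hushed", "normal": "Normal", "yelled": "Yelled"}


def projection_switch(line, default="Normal"):
    """Return (switch group, switch value) for a line's projection level."""
    level = str(line.get("projection", "")).strip().lower()
    return SWITCH_GROUP, SWITCH_VALUES.get(level, default)


lines = [
    {"line_id": "BARK_SEARCH_01", "projection": "Hushed"},
    {"line_id": "BARK_ALERT_01", "projection": "Yelled"},
    {"line_id": "BARK_IDLE_01"},  # no value set, falls back to the default
]
for line in lines:
    print(line["line_id"], projection_switch(line))
```

Falling back to a default value keeps lines with missing or mistyped data audible, which matters when the field is filled in by hand for thousands of lines.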

On the left, a projection level value set in our Text Database for a pool of barks and used to set a switch value when the VO was posted. In the center the External Source event used was overridden on the Barks System “rule”. On the right, a Switch Container with an External Source object for each projection level.

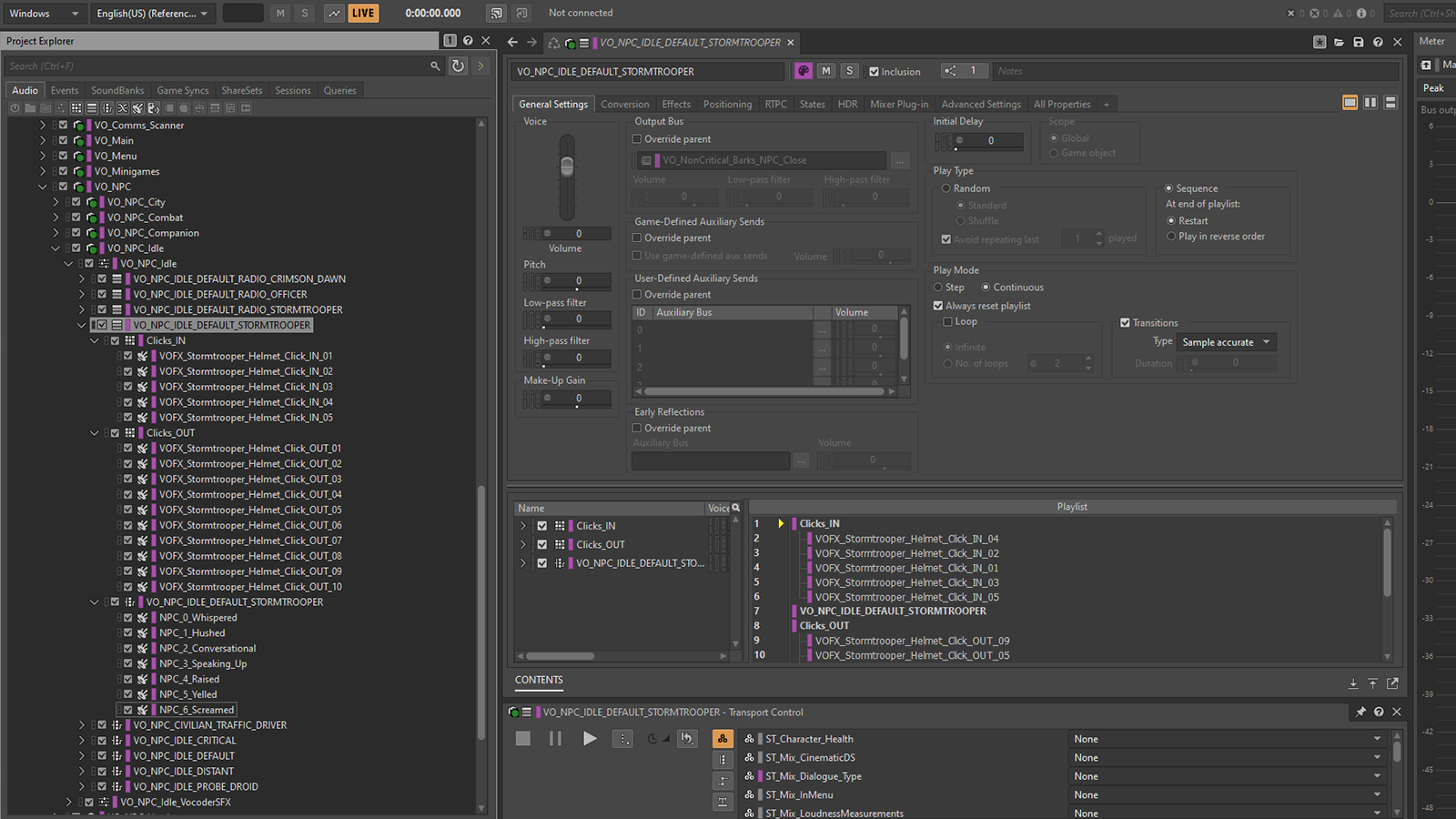

We also found uses for both Blend Containers and Sequence Containers. A Blend Container provided our “3D to radio” processing for companion NPC VO, allowing them to smoothly blend between positional 3D with in-game reverbs, to a dry radio processed object if the player ever moved too far away from them. Sequence Containers were used for radio communications with Kay’s comlink, but also for one-sided radio call idle barks heard from some of our NPCs, as well as the classic Stormtrooper helmet clicks heard in the original Star Wars trilogy.

External Sources are compatible with other container types, such as Sequence Containers, used here for playing helmet clicks for Stormtroopers either side of a VO line.

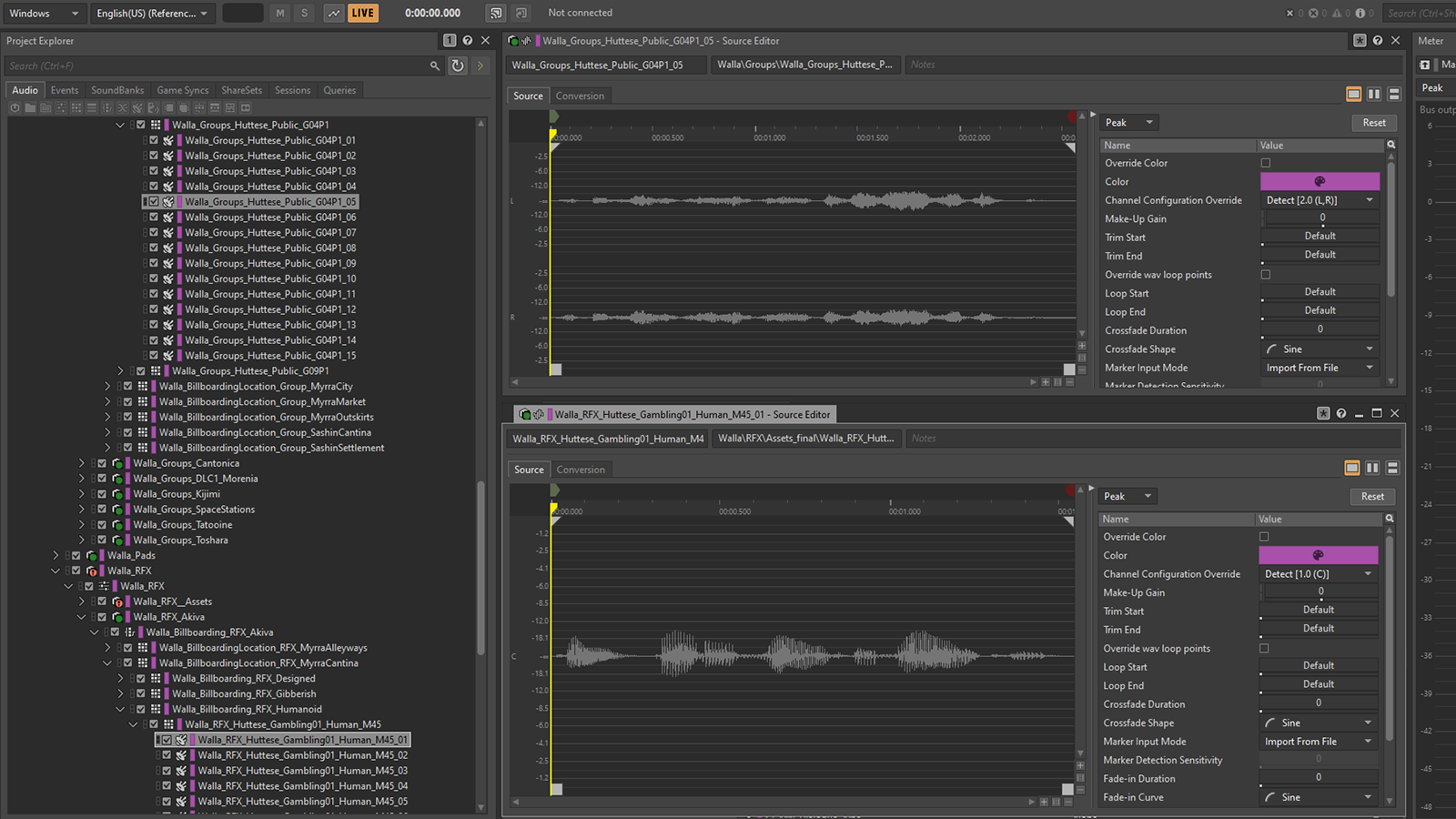

It’s worth noting that not all our voice content used External Source events. Most of our walla content, for example, was integrated as standard Sound SFX objects. The only exception here was the close perspective content, as it required writing and translation, which are closely tied to our VO pipeline and External Sources. Combat NPC efforts and other non-verbal vocalizations were also implemented as standard sound objects as they did not require the subtitling system which is driven by External Sources. Similarly, as our Audio Descriptions for cinematics (an accessibility feature) are speech content that does not require subtitles, these also benefited from the simplicity of one-to-one events.

Not all voice content in Star Wars Outlaws used External Sources; most of the content in our walla system was integrated using standard Sound SFX objects.

Conclusion

I felt compelled to write this article as there aren’t many resources out there providing solid use cases for External Sources or evaluating their benefits and drawbacks. I remember complaining to my friend and former colleague, Chris Goldsmith, about the growing problem of multiplayer VO perspectives on the PvEvP title Hyenas while working at Creative Assembly. He asked if I’d considered using External Sources, and once he explained how they worked (and I understood it!!) my eyes lit up with the possibilities!

While they aren’t the most intuitive feature and can take a bit of time to understand, External Sources can be an enormously powerful way to reduce tedious manual work and create more time for creative choices. In my opinion, they are a must have for large-scale projects.

If you’re on track to have twenty thousand lines or more, or you have a lot of complexity to manage and no proprietary tooling to alleviate some of the problems of one-to-one events, then it’s probably a good idea to start exploring External Sources. However, if you only have a few thousand lines, or rely heavily on Wwise for basic VO system functionality and are short on programming resource, then a one-to-one event approach is almost certainly going to be the best one: it retains surgical control, keeps skills transferable across the team, and saves your precious audio programmer time for bigger rewards.

As always in game development, everything is a tradeoff and nothing is free; if it doesn’t need code, then it probably needs a lot of manual labor and vice versa. I hope this article has helped explain what that curious “Add Source > External Source” option is, and how it could be used to work smarter and carve out more time for creative choices for your Voice Designers.
