Sweet vibration, remember your first time? Maybe you expected it because you bought a Nintendo 64 “Rumble Pak” for GoldenEye (good luck finding items without the pack). Or maybe you were playing a game like Tekken 3 on PlayStation with a new DualShock controller when, at some point, the motors startled you as they began to whir. Today, you’d probably be startled if your controller didn’t vibrate after you got shot in your favorite FPS.

These home console examples are a couple of decades old, and arcades offer examples twice as old as that.(1) Haptic feedback, force feedback, rumble, vibration, or, as we at Audiokinetic call it, motion, has been around for a long time. We now expect some variety of haptic feedback when losing in a fighting game, crashing in a driving game, or approaching some danger point in just about any game.

Not impressed?

Maybe you lump the console motion innovations of the late 90s in with their contemporary Web innovations. You might remember some…interesting…style choices from back then, along the lines of “JavaScript lets me put a flaming trail on my pointer and randomly change the background color!” However, we learned to properly harness Web development powers and became professionally rigorous. Haven’t we done the same for haptic feedback?

It could be that you just don’t care; after all, you’re a sound designer, not a motion designer. Why should a blog from an interactive audio company even address motion? I think the parallels with sound design are so abundant that it’s worth your while to stay with me as I take you through a comparison of the two arts, show you how and why motion belongs in Wwise, give you an overview of using motion, and close with a look at what the future could hold for haptic feedback in our industry.

Haptic Feedback vs Sound

Fundamentally, haptic feedback and sound are both types of vibration. Sound vibration is largely transmitted through the air, then picked up by your ears. Haptic varieties are more typically felt directly on the body, especially the hands.

In sound design, one is concerned with rhythm, waves, and their amplitude and frequency. Motion designers share these basic building blocks. Understanding how to set these attributes allows a designer to give the right feeling, pun intended, to complement the game situation. This depends on what you are trying to convey or, quite possibly, why you are trying to convey it.
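To make that shared vocabulary concrete, here is a minimal Python sketch of a haptic pattern described with the same three parameters a sound designer uses: amplitude, frequency, and rhythm (an on/off pulse pattern). It only generates normalized sample values; the function name and its parameters are hypothetical and not part of any Wwise API. Many rumble motors accept only a strength value, so treat the frequency here as a conceptual signal rather than a hardware setting.

```python
import math

def vibration_pattern(amplitude, frequency_hz, pulse_s, gap_s, duration_s, sample_rate=1000):
    """Return samples of a sine vibration gated on and off in a simple rhythm.

    amplitude: peak strength, 0.0 to 1.0
    frequency_hz: frequency of the vibration itself
    pulse_s / gap_s: length of each 'on' pulse and each silent gap, in seconds
    """
    period = pulse_s + gap_s
    samples = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        on = (t % period) < pulse_s  # rhythm: are we inside a pulse?
        value = amplitude * math.sin(2 * math.pi * frequency_hz * t) if on else 0.0
        samples.append(value)
    return samples

# A heartbeat-like pattern: short, fairly strong pulses separated by longer gaps.
heartbeat = vibration_pattern(0.8, 60, pulse_s=0.1, gap_s=0.4, duration_s=1.0)
```

Changing only the rhythm (pulse and gap lengths) already changes the message, from a calm heartbeat to an urgent alarm, much as it would for a sound.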

Normally, for both sound and motion, you are passing information to a player. It may only be another detail in the immersive process, trying to make things realistic. It may also indicate very specific things, which you probably wouldn’t notice in reality. Wouldn’t life be easier if we got indicators like “look here”, “the enemy’s this way”, or “you dropped your wallet”? These attention points can be, and generally are, displayed visually with colored cues, highlighting, text, and other in-game elements or within HUDs. However, visuals can become so overwhelming that recourse to another sense is invaluable. That’s often where sound design comes in. Yet, sometimes a cacophony of sounds drowns out the information too. That’s where motion design, another arrow in your quiver, fills an important role.

Motion can be purely complementary to audio. For many games, motion reinforces audio by just vibrating along with the sound object. This is easy to do. In Wwise, as we’ll get to later, just send an SFX to a motion bus with an Auxiliary Send. Motion can also stand on its own. No audio source is necessary; more thought about design, however, is.

Informing a player with a visual cue like a written message is straightforward and commonly used. It works. A similar strategy for sound design would be a voice-of-god narration. It too can work. However, audio can be more jarring; audio reactions are both faster and stronger than visual reactions. Therefore, subtlety is key to successful sound design. Guess what? Tactile reactions are faster and stronger still than audio reactions.(2) Subtlety is even more essential for motion. Studies also show that intermodal feedback, combining audio and tactile, is additive; using them together results in the strongest and fastest reactions, especially when accounting for ambient background interference.(3) So, let’s quickly look at some ways you use sound and think about how motion may fit in too.

Audio accompaniment and VR

Within a game, we’ve come to expect audio in certain places: a click or beep as you move through menu options and appropriate audio associated with game physics, from running and jumping to massive explosions.

Expectations are even higher with Virtual Reality, which depends on strong sensory immersion. Focus is put on the visuals; however, as Audiokinetic VP Jacques Deveau reported to Develop magazine, “Since the player can view all in 360 degrees, care and attention to audio detail is critical to making an immersive experience,”(4) and “poorly implemented audio can quickly break the virtual reality experience.”(5)

Motion in the former cases may not be necessary, although the explosion would be lacking. In the case of VR, I think the quoted statements work just as well if we replace “audio” with “motion.” Poorly done work could take away from the game by overpowering the other sensory components. Well done work, on the other hand, will contribute to the immersion.

Attention to subtle details can have a huge impact on the quality of a game. The visual component still takes precedence over audio, and audio takes precedence over motion. Nevertheless, we should all agree that “it only takes a few seconds of playing your favorite game on mute to realize how important sound is when it comes to creating a captivating experience.”(6) So, why dismiss haptic feedback?

The simple answer is that motion is more primitive than audio. We have substantially less control over it, yet we need it to be subtler than audio. Increasingly, however, opportunities are appearing to increase the sophistication of haptic feedback within video games.

Use in Wwise

Early on, Audiokinetic understood that adding haptic feedback to the sound design pipeline would work.(7) At first, all we supported was the rumble you get along with an existing sound. We then added separate Motion FX and Motion Bus objects to allow designers to synthesize motion without sound. This required the Motion Generator plug-in, but the design flow remained the same as audio.

With Wwise 2017.2, we simplified the workflow for motion by dropping the separate objects. By simply changing its Audio Device, any Audio Bus can now be a motion bus and any Sound SFX object can output motion by sending it to a motion bus.

We still needed better platform-specific settings for the plug-in. So, with 2018.1, we introduced Wwise Motion Source.

Setting it up

Wwise Motion Source is a premium plug-in that, as its name suggests, replaces the audio source of an SFX. It comes pre-installed with Wwise; however, while you can play around with it in the authoring tool(8), you must license it to use it within a game(9) or even to build SoundBanks that contain it.

Using Wwise Motion Source within a game also requires integrating the Wwise Motion plug-in in the Wwise sound engine.(10) Have your audio programmer set things up as described in the SDK documentation’s Integrating Wwise Motion page.

Notably, the Wwise Motion plug-in “can be seen as the link between the sound engine and a motion-ready device.”(11) It predefines support for different controllers and platforms. For most users, this should be adequate. Wwise is, nevertheless, designed to be flexible. If ambitious audio programmers want to define their own motion plug-in, many atypical controllers and platforms could also be supported.

There are differences among even the standard controllers defined in the Wwise Motion plug-in. An Xbox One controller has trigger actuators, whereas a PS4 controller does not. If you’ve ever shot a gun, maybe the powerful bang it emits sticks with you. You might equally be impressed by the forceful recoil. Whether you have a full surround sound system with woofer or just a stereo output, mentally the bang connects with your in-game actions. A vibration on the trigger, however, might make a much better mental connection with the recoil than would a more general controller vibration. Maybe if you don’t have a trigger actuator on your controller you wouldn’t even want to add motion for shooting a weapon. Getting shot, in contrast, might call for a more general motion—nothing specific to the trigger.

Wwise Motion Source accommodates multiple controllers and platforms. To ensure your project works well with them, test your setup early on. Let’s start with a simple example using an Xbox 360 controller on Windows(12), so that you can test things directly in the Wwise authoring tool.

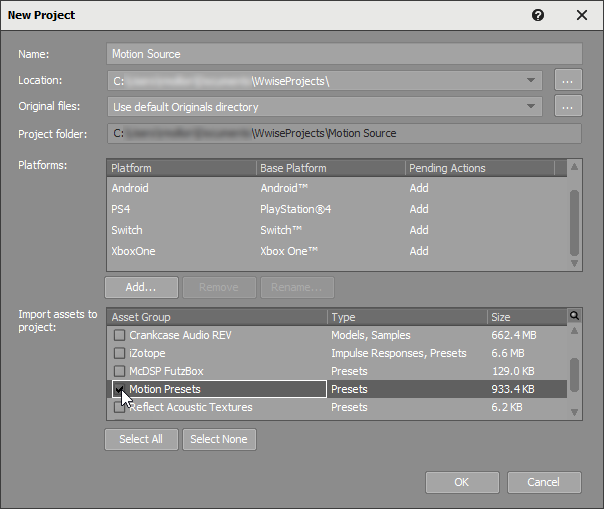

- Open a new Wwise 2018.1 project.(13)

- Add all five of the base platforms that support Wwise Motion: Windows, Android, PS4, Switch, and Xbox One.(14) (It’s not important for this example but will be useful in our next ones.)

- Add only the Motion Presets asset.

- Click OK.

- Close the License Manager.(15)

- Create a Sound SFX object. I’ve called it Motion Test.

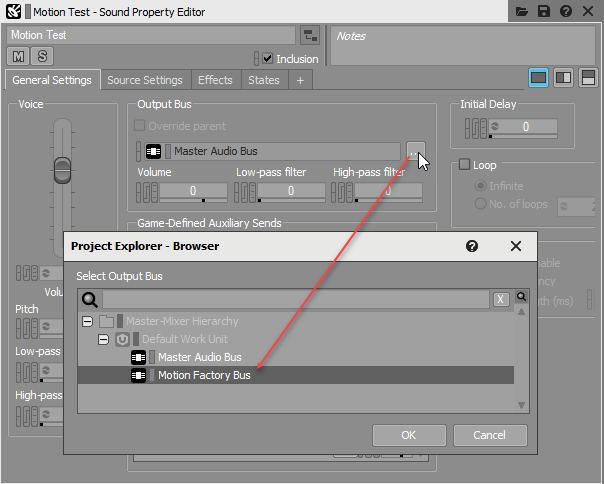

- In the Motion Test Property Editor, change the Output Bus to Motion Factory Bus, which is already set up to use the Default_Motion_Device as its Audio Device.

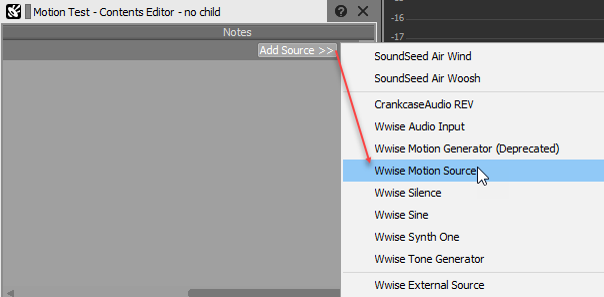

- In the Motion Test Contents Editor, add a Motion Source.(16) I’ve named it Motion Source Test.

- Double-click the Motion Source Test to prompt its Source Editor.

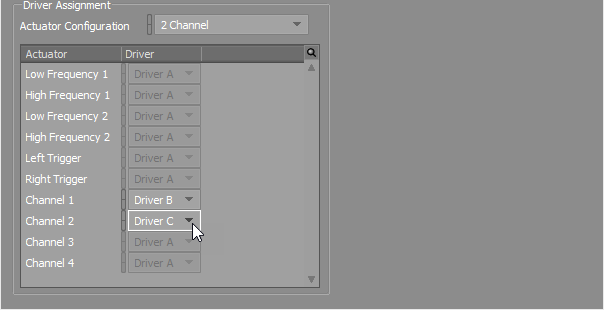

- Since the Xbox 360 controller has two actuators, choose the 2 Channel Actuator Configuration. You’ll see that the Driver lists are activated for Channel 1 and Channel 2.

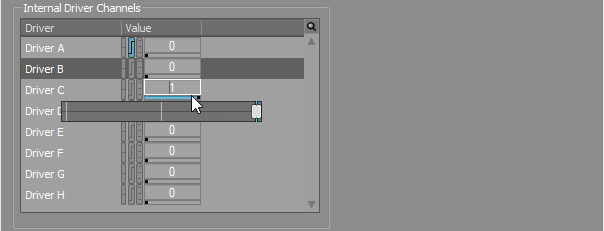

- As Driver A is set up with an RTPC curve by default, select Driver B for Channel 1 and Driver C for Channel 2 so that they are different.

- With your game controller in your hands, press the spacebar to play Motion Test in the Transport Control. Nothing should happen because the Values for the two Drivers are still 0.

- For Driver B, set the Value field to 1.

- With your game controller in your hands, press the spacebar to play Motion Test in the Transport Control. You should feel a strong short rumble.(17) This is the Xbox 360 controller’s low frequency actuator.

- Return Driver B’s Value field to 0. Then, for Driver C, set the Value field to 1.

- With your game controller in your hands, press the spacebar to play Motion Test in the Transport Control. You should feel a gentler short whirl. This is the Xbox 360 controller’s high frequency actuator.
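The test above boils down to a lookup: each channel of the Actuator Configuration points at one internal driver, and that driver’s value decides how hard the channel’s actuator is driven. The following Python sketch models that mapping under my own simplifying assumptions; the class and field names are hypothetical and are not Wwise’s internal data structures.

```python
from dataclasses import dataclass, field

@dataclass
class ActuatorConfiguration:
    """Hypothetical model: channel number -> driver letter."""
    name: str
    channel_drivers: dict

@dataclass
class MotionSource:
    """Hypothetical model of a Motion Source's driver values (0.0 to 1.0)."""
    driver_values: dict = field(default_factory=dict)

    def output(self, config):
        # Each channel takes the value of its assigned driver; unset drivers count as 0.
        return {
            channel: max(0.0, min(1.0, self.driver_values.get(driver, 0.0)))
            for channel, driver in config.channel_drivers.items()
        }

# Mirrors the steps above: Channel 1 -> Driver B, Channel 2 -> Driver C.
xbox360 = ActuatorConfiguration("2 Channel", {1: "B", 2: "C"})
source = MotionSource({"B": 0.0, "C": 0.0})
print(source.output(xbox360))  # {1: 0.0, 2: 0.0}: nothing happens
source.driver_values["B"] = 1.0
print(source.output(xbox360))  # {1: 1.0, 2: 0.0}: low frequency actuator at full strength
```

Keeping drivers separate from channels is what lets the same design be re-routed to a controller with a different number of actuators.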

Effectively, the same process can be applied to test the Actuator Configuration of any game controller or other motion device. Of course, to test controllers with more actuators, such as those for the Switch and Xbox One, you’ll have to remote-connect to a game or some kind of test playground set up for those platforms. For the following examples, we’ll use the predefined Actuator Configurations, knowing that Wwise Motion will translate the driver values for each supported device. They are set up to work with their namesake platforms even though we won’t be able to fully test them within the authoring tool. We’ll also see how Wwise’s platform linking lets us respond to platform-specific differences in motion design.

Motion from audio

Arguably, creating motion from audio is even easier to do in Wwise than our simple Wwise Motion Source test above.

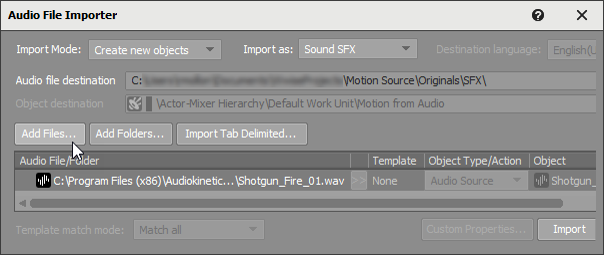

- Create a Sound SFX in Wwise. I named it Motion from Audio.

- Import a short but loud source file. I grabbed a gunshot sound from the Wwise Integration Demo.

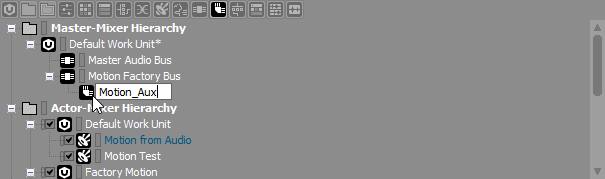

- Going up to the Master-Mixer Hierarchy, create a new Auxiliary Bus under your Motion Factory Bus. I called mine Motion_Aux.

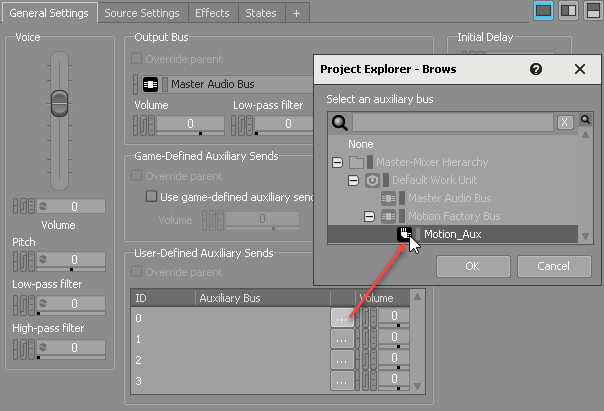

- Returning to the Motion from Audio Property Editor, add Motion_Aux as a User-Defined Auxiliary Send.

- With your game controller in your hands and your sound output on, press the spacebar to play Motion from Audio in the Transport Control. You should both hear the shot and feel an accompanying strong rumble. That’s it!

Not satisfied with the motion response? Need a little more strength? Need a little less? Adjust your Volume in the Motion_Aux Auxiliary Bus. You are also free to play with the sound as you like. Change the Volume or Pitch, add an HPF or an Effect, or whatever else we sound designers do to deliver the right output. In its own way, motion will follow along with it.

Unfortunately, with today’s hardware, you may not notice a great difference on the motion side. As you can imagine, or try out if you please, replacing the Motion from Audio’s gunshot source file with a song or voice source file, say some wonderful classical sonata or a stirring speech, just won’t output motion in any desirable way. To gain more control, use a motion-specific SFX.
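Why does a gunshot translate well while a sonata does not? The details of Wwise’s own audio-to-motion conversion aren’t covered here, so this Python sketch only illustrates the general idea under a simple assumption: the audio’s amplitude envelope (the peak level per short block) is used as the rumble strength. The signals and thresholds are made up for illustration.

```python
import math
import random

def envelope(samples, block=100):
    """Peak absolute amplitude per block: a crude stand-in for rumble strength."""
    return [max(abs(s) for s in samples[i:i + block])
            for i in range(0, len(samples), block)]

def active_ratio(env, threshold=0.2):
    """Fraction of blocks where the rumble would be noticeably on."""
    return sum(1 for e in env if e > threshold) / len(env)

rate = 10_000  # samples per second; one second of audio for each signal
rng = random.Random(0)
# Gunshot-like: a loud noise burst with a fast exponential decay.
gunshot = [rng.uniform(-1, 1) * math.exp(-n / 300) for n in range(rate)]
# Music-like: a sustained 440 Hz tone with a slow swell that never falls silent.
music = [0.5 * (1 + 0.5 * math.sin(2 * math.pi * 0.5 * n / rate))
         * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]

print(active_ratio(envelope(gunshot)))  # small: one short, clear burst
print(active_ratio(envelope(music)))    # 1.0: the rumble never lets up
```

With the impulsive source, the motion carries a distinct event; with sustained material, the actuators just buzz continuously and no single moment stands out.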

Motion from motion objects

In our earlier simple motion test, we saw how easy it is to create a motion-specific SFX. Now we’ll take a look at more detailed motion-specific designs. To continue on the path of ease of use, we can take advantage of our ready-to-use Motion Assets, both to explain principles and to provide a base for your own future motion development. Need motion in no time? As in, just before shipping your game, your project manager says, “Oh, by the way, we need some motion on those 10k objects.” (But that would never happen, would it?) You might be pleasantly surprised at how well the Motion Assets work, without the work.

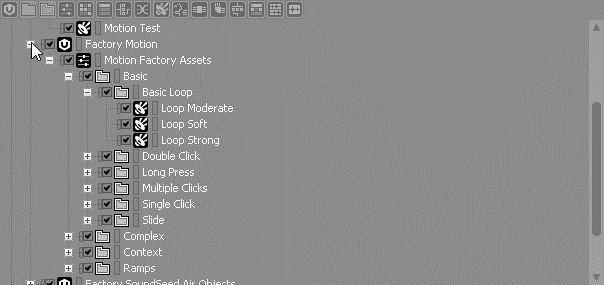

Go to the Project Explorer to open the Factory Motion Work Unit.(18) You’ll find many SFX objects organized in different Virtual Folders. Each of these gives controller and platform-specific settings for different scenarios that often occur in-game and could benefit from some motion.

Let’s take a look at a few of them, focusing on their associated RTPC curves and specific platform differences.

Loop Moderate

- From the Basic > Basic Loop Virtual Folder, load the Loop Moderate SFX in the Property Editor.

- From the Contents Editor, double-click the Wwise Motion Source to load it in the Source Editor.

- Specify Switch in the Platform Selector of the Wwise toolbar.

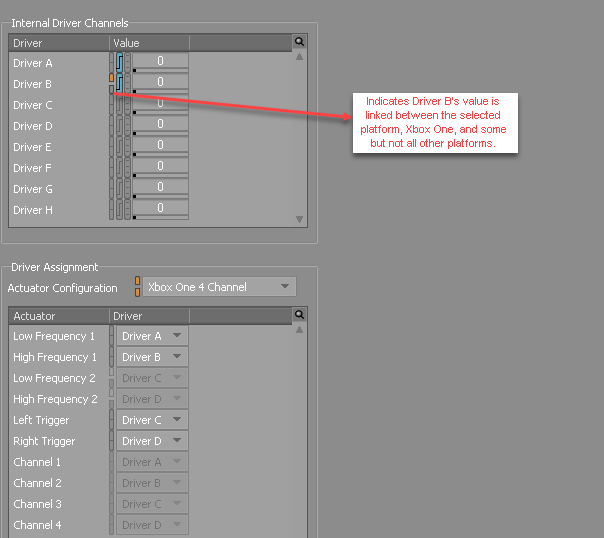

In the Effects tab of the Source Editor, you’ll see the chosen Actuator Configuration, Switch 4 Channel, is specific to the currently selected platform, Switch, and each of the four actuators is assigned to either Driver A or Driver B.(19)

Within the Internal Driver Channels group box, we can see that Driver A is set to 0 and has no RTPC. This means the actuators with this driver will not produce motion, which is desirable in this case to avoid the Switch’s high frequency actuators, which may be too high and therefore too “gentle” for the desired feeling. If you check other platforms, you’ll see in contrast that high frequency actuators are used while the low frequency ones are silenced because their frequencies are closer in line with the desired sensation.

We can also see that Driver B is offset by 0.05 specifically for the Switch. We did this because the Switch’s Low Frequency actuators are also slightly weaker than other platforms’ High Frequency actuators. Offsets like this help keep the feel consistent across controllers while letting you maintain a single curve for all platforms. So, when using the Motion Assets or designing your own settings, pay attention to the Link indicator to understand whether changes will apply to all of your project’s platforms (linked) or just the currently selected one (unlinked).(20)
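The offset-plus-shared-curve approach can be summarized in a few lines. This Python sketch assumes one curve value shared by all platforms, an additive per-platform offset, and a clamp to the 0 to 1 driver range. The 0.05 Switch offset comes from the Loop Moderate asset; the shared curve value and the exact way Wwise combines and clamps these internally are assumptions.

```python
# Shared (linked) curve value for Driver B at some moment in time.
SHARED_CURVE_VALUE = 0.30  # hypothetical value, for illustration only

# Unlinked, platform-specific offsets; only the Switch deviates in this example.
DRIVER_B_OFFSETS = {"Switch": 0.05}

def driver_value(platform, curve_value=SHARED_CURVE_VALUE, offsets=DRIVER_B_OFFSETS):
    """Curve value plus the platform's offset, clamped to the 0..1 driver range."""
    value = curve_value + offsets.get(platform, 0.0)
    return max(0.0, min(1.0, value))

for platform in ("Windows", "PS4", "Switch", "Xbox One"):
    print(platform, round(driver_value(platform), 2))
```

If you later reshape the shared curve, every platform follows it, and the Switch keeps its small compensation on top.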

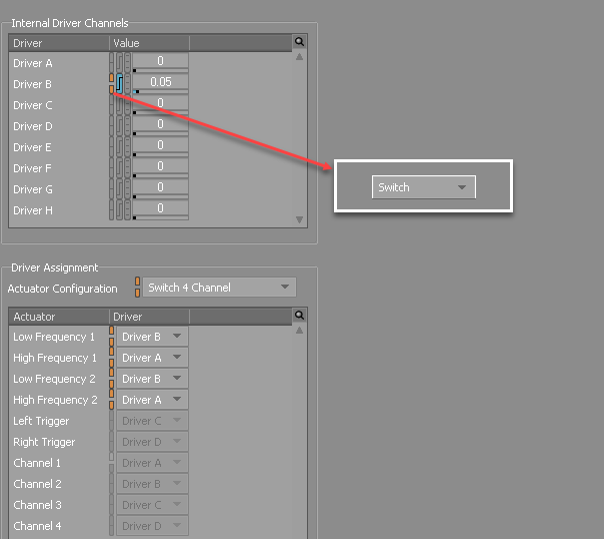

Next to the platform-specific link indicator is the RTPC indicator, whose color currently indicates there is an RTPC curve for Driver B. Go to the RTPC tab to view the curve.

We can see it’s a simple flat curve: the motion output on the Y axis stays constant across the time range on the X axis, which is defined by the selected Time Modulator. Double-click the Time Modulator to view its settings in the Modulator Editor. You’ll see that it actually has a Loop Count of Infinite. You’ll also see that it is triggered by an Event and, therefore, stops only when that Event stops.

This could be used for a game level where the player is close to a generator or surrounded by machines giving off a low constant hum of activity.
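A Time Modulator with an Infinite loop count simply wraps time back to the start of the curve. This Python sketch evaluates a curve under that assumption. The curve is modeled as a plain function over one loop, and returning 0 once a finite loop count runs out is my own simplification; the names and the 0.25 level are hypothetical.

```python
import math

def time_modulated(curve, duration_s, loop_count=math.inf):
    """Return f(t) that plays `curve` over `duration_s`, repeated `loop_count` times.

    In this sketch the output is 0 before the start and after the last loop.
    With loop_count=math.inf it never finishes, so only stopping the Event ends it.
    """
    def value_at(t):
        if t < 0 or t >= duration_s * loop_count:
            return 0.0
        return curve((t % duration_s) / duration_s)  # normalized position 0..1
    return value_at

def flat(position):
    """Loop Moderate style: constant output regardless of position in the loop."""
    return 0.25

hum = time_modulated(flat, duration_s=2.0)
print(hum(0.5), hum(3600.0))  # 0.25 0.25: still going an hour later
one_shot = time_modulated(flat, duration_s=2.0, loop_count=1)
print(one_shot(0.5), one_shot(3.0))  # 0.25 0.0
```

This is why an infinitely looping motion object needs an explicit way to stop: nothing in the curve itself will ever end it.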

Oscillation

- From the Complex Virtual Folder, load the Oscillation SFX in the Property Editor.

- From the Contents Editor, double-click the Wwise Motion Source to load it in the Source Editor.

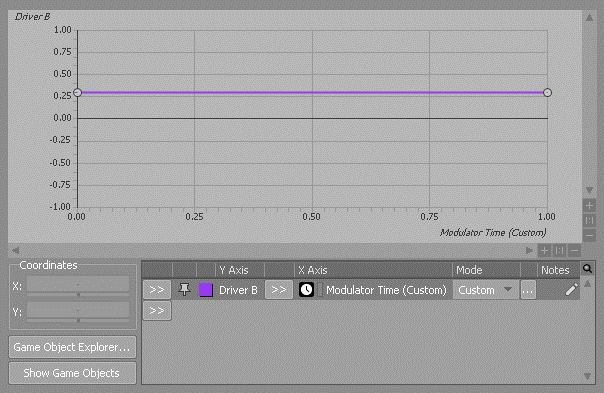

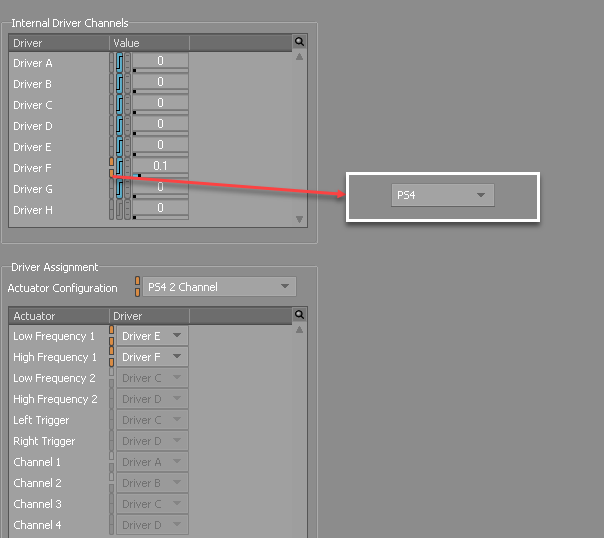

- Specify PS4 in the Platform Selector of the Wwise toolbar.

In the Effects tab of the Source Editor, you’ll see the Actuator Configuration, PS4 2 Channel, is specific to the currently selected platform, PS4, with its Low Frequency actuator set to Driver E and its High Frequency actuator set to Driver F. We’ve also added a small offset to Driver F to give the PS4’s High Frequency actuator a relative boost in strength.

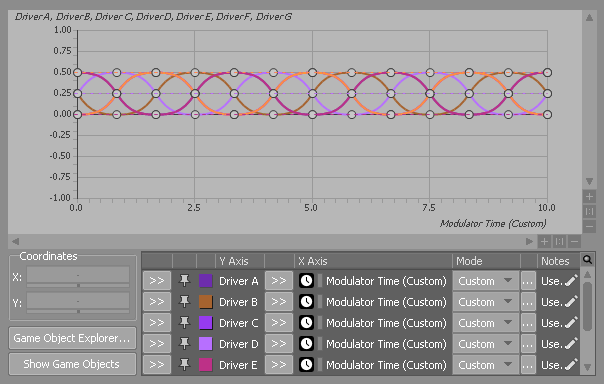

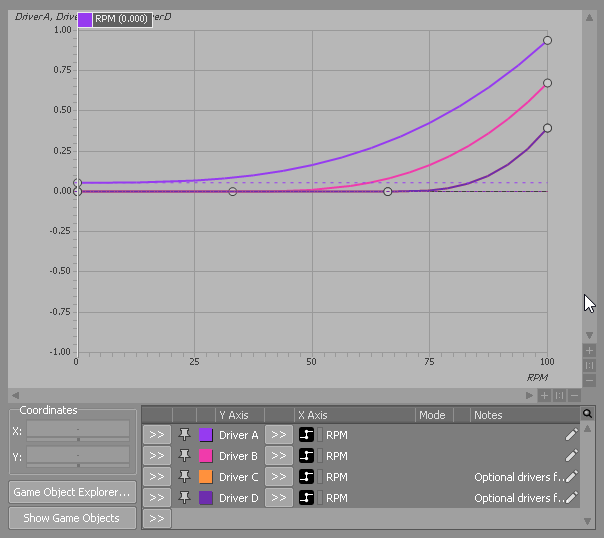

Within the Internal Driver Channels group box, we immediately see there are seven drivers with RTPCs. So, let’s move to the RTPC tab.

Clearly, this motion asset is more complex than the Loop Moderate one; it uses seven different drivers and curves, but only two are relevant to our currently selected platform. You can highlight individual drivers, E and F for the PS4, to better see their individual curves.(21) However, the concept behind the curves is the same for all platforms: give a sensation of circular movement.

As the vibration moves from left to right to left to right with gradual overlapping transitions (or, in the case of a four-channel controller, from bottom left to top left to top right to bottom right), the physical sensation transmitted to the hands fairly directly reflects the turning of a water pipe gate valve. It might also more abstractly evoke another oscillating sensation, such as being spun around in a twister or completing a quadruple axel as a figure skater. In the latter case, though, we might consider limiting the dizzying looping, lest the figure skater fall hard to the ice with yet another type of motion feedback.
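The circular sensation comes from staggering the same envelope across actuators, so that each one swells as its neighbor fades. This Python sketch assumes a raised-cosine envelope and four channels ordered bottom left, top left, top right, bottom right, each a quarter turn apart. It approximates the idea behind the Oscillation asset rather than reproducing its actual curves.

```python
import math

CHANNELS = ("bottom left", "top left", "top right", "bottom right")

def oscillation(t, period_s=1.0, channels=CHANNELS):
    """Intensity (0..1) per channel at time t for a rotating sensation.

    Each channel follows a raised cosine, phase-shifted by 1/len(channels) of a turn,
    so neighboring channels overlap and the peak travels around the controller.
    """
    phase = (t / period_s) % 1.0
    result = {}
    for i, name in enumerate(channels):
        offset = i / len(channels)
        result[name] = 0.5 * (1 + math.cos(2 * math.pi * (phase - offset)))
    return result

for t in (0.0, 0.25, 0.5, 0.75):
    levels = oscillation(t)
    print(t, max(levels, key=levels.get))  # the strongest channel moves around the circle
```

Passing `channels=("left", "right")` gives the two-channel version: the peak alternates left, right, left, with the same gradual overlap.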

Off-Road Dirt

- From the Context > Vehicle Virtual Folder, load the Off-Road Dirt SFX in the Property Editor.

- From the Contents Editor, double-click the Wwise Motion Source to load it in the Source Editor.

- Specify Xbox One in the Platform Selector of the Wwise toolbar.

In the Effects tab of the Source Editor, you’ll see the Actuator Configuration, Xbox One 4 Channel, is specific to the currently selected platform, Xbox One. Its Low Frequency and High Frequency actuators are set to Drivers A and B, with associated RTPCs, and its Trigger actuators are set to Drivers C and D, but without any RTPC, State, or offset value.

For the sensation of being off-road, we may not need anything on the triggers, especially if we’re not behind the wheel. Nevertheless, keep in mind that you have the power to build your own motion objects from these assets, or even to edit them directly. It could be that you want the player to have more direct feedback. For example, if your game engine is set up to have the triggers steer your vehicle, maybe you’d want the drivers (pun kind of intended) to vibrate a little; or, maybe you’d want a vibration on the triggers only when you change direction. The same could be done for the triggers if they were defined as the brake and gas or, perhaps, the clutch.

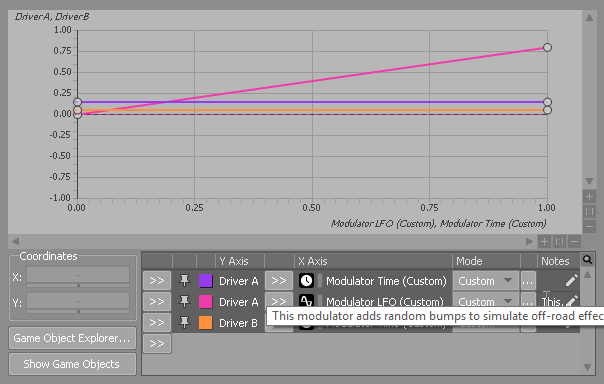

In the RTPC tab, we discover that the Off-Road Dirt SFX is slightly different from our earlier examples. While it has only two relevant drivers, there are actually three separate curves. This is because we have applied both a Time and an LFO Modulator to Driver A. While the Driver A and Driver B Time Modulators are both low-intensity flat lines (which loop infinitely), the LFO Modulator on Driver A can be opened to reveal settings like Random changes with a Depth Variance of up to 85%.

The result is a constant high frequency vibration from Driver B, reflecting perhaps a steady motor, and a low frequency vibration from Driver A imitating the rumble of wheels rolling along the dirt. Key, of course, is that without a smooth surface the rolling should not be consistent. As such, the LFO Modulator’s unpredictable small variations bring us closer to the desired immersion.
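Here is a rough Python sketch of that combination: a flat, low base level (the looping Time Modulator) multiplied by a random, stepped LFO whose gain can dip by up to 85%. Only the 85% figure comes from the asset; the base levels, the stepping, and the way depth is applied are assumptions, not Wwise’s LFO implementation.

```python
import random

def random_lfo(steps, max_depth=0.85, seed=None):
    """Stepped random LFO: each step picks a new gain between 1 - max_depth and 1."""
    rng = random.Random(seed)
    return [1.0 - rng.uniform(0.0, max_depth) for _ in range(steps)]

def off_road(steps, base_a=0.3, base_b=0.2, seed=None):
    """Driver A: flat base x random LFO (uneven wheels); Driver B: flat (steady motor)."""
    lfo = random_lfo(steps, seed=seed)
    driver_a = [base_a * gain for gain in lfo]
    driver_b = [base_b] * steps
    return driver_a, driver_b

a, b = off_road(8, seed=42)
print([round(x, 2) for x in a])  # irregular rumble from the wheels
print(b)                         # constant hum from the motor
```

Multiplying rather than adding keeps Driver A from ever exceeding its base level, so the randomness only ever softens the rumble, never spikes it.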

Create an Event

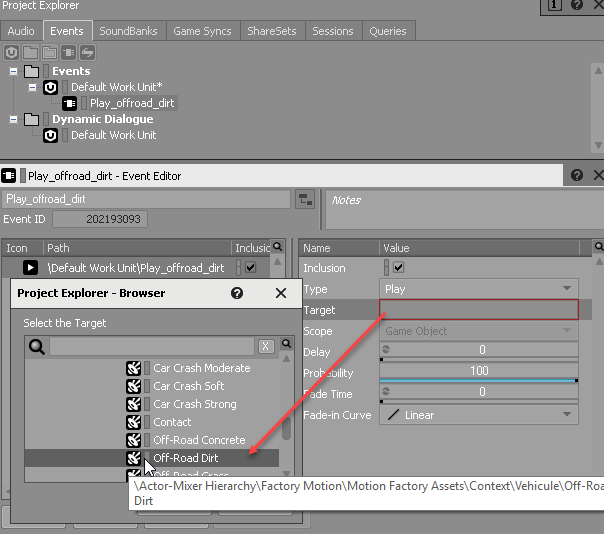

How do any of these motion objects get played? The same as any audio object: call an Event. I won’t delve into detail on how this is done because it's not motion-specific. Still, for the sake of a complete example, let’s go to the Project Explorer’s Events tab.

- Select the Default Work Unit.

- Use the shortcut menu to create a new Play Event. I named it Play_offroad_dirt. You should see where I’m going with this.

- In the Event Editor, from the Play_offroad_dirt Event’s Target field, browse to the Motion Asset we were looking at earlier: Off-Road Dirt.

We now have an Event that can be called like any other to activate motion. Of course, with this motion object happening to have an infinite loop, we must also be sure to create an associated Stop Event in the same way. Just as there is a call to the Play Event to activate the motion object, there’d be a call to the Stop Event to stop the motion.
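On the game side, it helps to keep track of which looping Events are playing so that every Play is eventually matched by its Stop. This Python sketch is a hypothetical helper, not part of the Wwise SDK: `post_event` is a stand-in callback (in a real integration it would wrap the SDK’s `AK::SoundEngine::PostEvent` call), and the Stop_offroad_dirt Event and the game object ID are hypothetical names.

```python
class LoopingEventTracker:
    """Hypothetical game-side helper: remembers which looping Events are playing
    on which game object so that each Play is eventually matched by its Stop."""

    def __init__(self, post_event):
        self.post_event = post_event  # callable(event_name, game_object_id)
        self.playing = set()

    def start(self, play_event, stop_event, game_object):
        key = (stop_event, game_object)
        if key not in self.playing:  # avoid stacking the same infinite loop twice
            self.post_event(play_event, game_object)
            self.playing.add(key)

    def stop(self, stop_event, game_object):
        key = (stop_event, game_object)
        if key in self.playing:
            self.post_event(stop_event, game_object)
            self.playing.discard(key)

    def stop_all(self):
        """For level unloads or pauses: stop every loop still running."""
        for stop_event, game_object in list(self.playing):
            self.stop(stop_event, game_object)

posted = []
tracker = LoopingEventTracker(lambda name, obj: posted.append((name, obj)))
PLAYER_VEHICLE = 1  # hypothetical game object ID
tracker.start("Play_offroad_dirt", "Stop_offroad_dirt", PLAYER_VEHICLE)  # wheels hit the dirt
tracker.start("Play_offroad_dirt", "Stop_offroad_dirt", PLAYER_VEHICLE)  # still on dirt: ignored
tracker.stop("Stop_offroad_dirt", PLAYER_VEHICLE)                        # back on asphalt
print(posted)  # [('Play_offroad_dirt', 1), ('Stop_offroad_dirt', 1)]
```

Ignoring a repeated start matters more for motion than for audio: two copies of the same loop on one controller simply add up to a stronger, muddier buzz.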

An Event could, and often will, play audio and motion in tandem. The great advantage of pairing audio with an individually defined motion object, as opposed to generating motion from audio, is that we circumvent the limitations mentioned earlier. Play a stirring speech alongside a combination of Motion Source RTPCs that highlights important points. The motion can convey the movement and feeling of the words or just provide soft complements at key points.

Game Parameters

Of course, like audio, having to perpetually call Play Events might not be ideal. Instead, you can have your motion objects tied directly to your in-game parameters. This is done by programming a link between a Wwise-defined Game Parameter and your actual game’s parameters.

Another Motion Asset, the Parametrized RPM in the Context > Vehicle Virtual Folder, shows that the Motion Source drivers can be mapped to Game Parameters. This should come as no surprise, as it is common to use a Wwise Game Parameter object with RTPCs.(22)

This works because the RPM Game Parameter’s values are mapped to a corresponding property within the game, such that whenever the game returns a value of 75, for example, the Driver A curve returns a value of a little more than 0.4, Driver B roughly 0.15, and so on.
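Conceptually, each driver curve is a function from the Game Parameter’s value to a driver value. This Python sketch assumes straight-line segments between control points (Wwise curves can also use non-linear segment shapes). The control points and the 0 to 100 range are hypothetical, chosen only so that an RPM of 75 lands near the values quoted above; they are not copied from the Parametrized RPM asset.

```python
from bisect import bisect_right

def make_curve(points):
    """Piecewise-linear curve through sorted (game_parameter, driver_value) points."""
    xs = [x for x, _ in points]

    def value_at(x):
        if x <= xs[0]:
            return points[0][1]
        if x >= xs[-1]:
            return points[-1][1]
        i = bisect_right(xs, x) - 1  # index of the segment containing x
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    return value_at

# Hypothetical control points for an RPM Game Parameter ranging from 0 to 100.
driver_a = make_curve([(0, 0.0), (100, 0.56)])
driver_b = make_curve([(0, 0.0), (50, 0.05), (100, 0.25)])

rpm = 75  # value reported by the game
print(round(driver_a(rpm), 3), round(driver_b(rpm), 3))  # 0.42 0.15
```

Giving each driver its own curve is what lets the low and high frequency actuators respond differently to the same engine speed.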

Mistakes to avoid

With so many Motion Assets, and with how easy they are to create and apply to your game, the biggest mistake I think a motion designer can make in Wwise is overuse. Just because you have four or more actuators doesn’t mean you have to use them. Remember, even more than audio, motion is subtle. Be careful to properly evaluate the added benefit of motion effects. Is it really needed in every case?

Importantly, don’t limit your evaluation to individual cases either. Motion may work great on one object, as well as on a dozen others, but what if they play at the same time? What if you have hundreds of individually excellent motion objects playing concurrently? If we were talking about audio, our brains might filter out much of what they’d deem “background noise” and focus on the louder, more relevant sounds at hand. In contrast, even just two or three motion effects playing at the same time may be too much for the hardware, producing something comparable to the classical music result from my earlier Motion from audio example.
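A quick way to see the problem: when overlapping motion objects drive the same actuator, their contributions end up as a single strength, and the hardware can’t go past full strength. This Python sketch assumes a simple sum-and-clamp mix (not necessarily how Wwise or any particular controller combines them), with hypothetical levels for three effects.

```python
def mix(levels):
    """Sum concurrent motion levels on one actuator and clamp to full strength."""
    return min(1.0, sum(levels))

footsteps, engine, explosion = 0.3, 0.4, 0.9  # hypothetical per-effect levels

print(mix([footsteps]))                     # 0.3
print(mix([footsteps, engine]))             # about 0.7
print(mix([footsteps, engine, explosion]))  # 1.0: the explosion no longer stands out
print(mix([engine, explosion]))             # 1.0: identical, so the footsteps vanished
```

Once the actuator saturates, removing or adding a cue changes nothing the player can feel, which is exactly when prioritizing or ducking motion objects becomes worthwhile.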

Be that as it may, the overarching mistake is just how little time, and therefore thought, many of us will put into our motion design. Admittedly, with tight deadlines, it can be tough. Getting things right, however, necessitates both planning and some experimentation.

Where Are We Moving?

The future for haptic feedback in video games and beyond is intriguing. There are a number of researchers looking into different uses. For example, one study evaluated a chair equipped with 16 voice coils aligned from approximately the upper back to the thighs as a crossmodal sensory output to allow Deaf and hard of hearing individuals to haptically feel music, including intended emotional responses, while seated.(23)

Away from the pure research realm, but still sitting in a haptic feedback chair, it would be great to see D-Box make a return to Wwise. It could also be interesting to see some variety of haptic feedback vest. Imagine a fight game where you’d actually feel something when getting kicked in the chest. We’re talking with many key developers in the industry.

Any such examples would provide more opportunity to further immersion. As such, they may become of particular interest to the VR and AR fields.

Wwise is already in motion

You may have noticed that Wwise has eight actuators available to assign, but none of our current Actuator Configurations support more than four. This is a reflection of the controllers available to us today. It will, however, be easy to accommodate another four when the future arrives. We can’t predict how or when that will be, but we’ll be ready to meet the demand.

Fundamentals don't change

Whatever the future may hold for haptic feedback in the industry and the Wwise Motion response that will come from it, the basic motion framework I’ve so quickly gone over remains. Still, motion can be conceived of in many different ways and often experimentation is necessary to reach the desired end. Use our Presets to make things easier, but don’t be afraid to change them to suit your specific needs.

A blog can only give you a taste of what can be done with motion, no matter how much we limit its scope. Nevertheless, I hope you have gleaned from this overview how motion within Wwise is fundamentally the same as sound design. I also hope that I’ve made clear there are differences that need to be taken into account. Above all, I hope that I’ve shown how interconnected motion and audio really are, such that we’ll all gain from a renewed appreciation of this still burgeoning art and not only be ready for its future innovations, but also at the forefront of pushing it to new levels.
