Introduction

This is the last of a 3-part tech-blog series by Jater (Ruohao) Xu, sharing the work done for Reverse Collapse: Code Name Bakery.

- You can read the first article here, where he dives into using Wwise to drive in-game cinematics.

- You can read the second article here, where he explores how the game's tilted 2D top-down view required a custom 3D audio system to solve unique attenuation challenges.

Animation Lip Sync with Wwise Meter Plug-in

Tech Blog Series | Part 3

There are plenty of elements and moments in the game where the gameplay mechanics are driven by audio. With the help of the Wwise Meter plug-in, we are able to acquire accurate real-time audio data that can be sent back to the game engine to power multiple audio systems.

Like many other anime-themed games, Reverse Collapse features rich story dialogues; while some of them are triggered in the combat gameplay, most of them are 2D narratives in which you have 2 characters doing call-and-response sequences on the left and right sides of the screen.

The picture above showcases an example of the 2D narrative system within the game, where the character Mendo was talking when the screenshot was taken. When a speech line triggers, a lip animation plays on the character's sprite. This functionality is driven by audio volume data obtained from Wwise.

The game can synchronize lip animations with speech by utilizing audio volume data, enhancing the immersion and realism of character interactions. This approach adds depth to the narrative experience, making it more engaging for players.

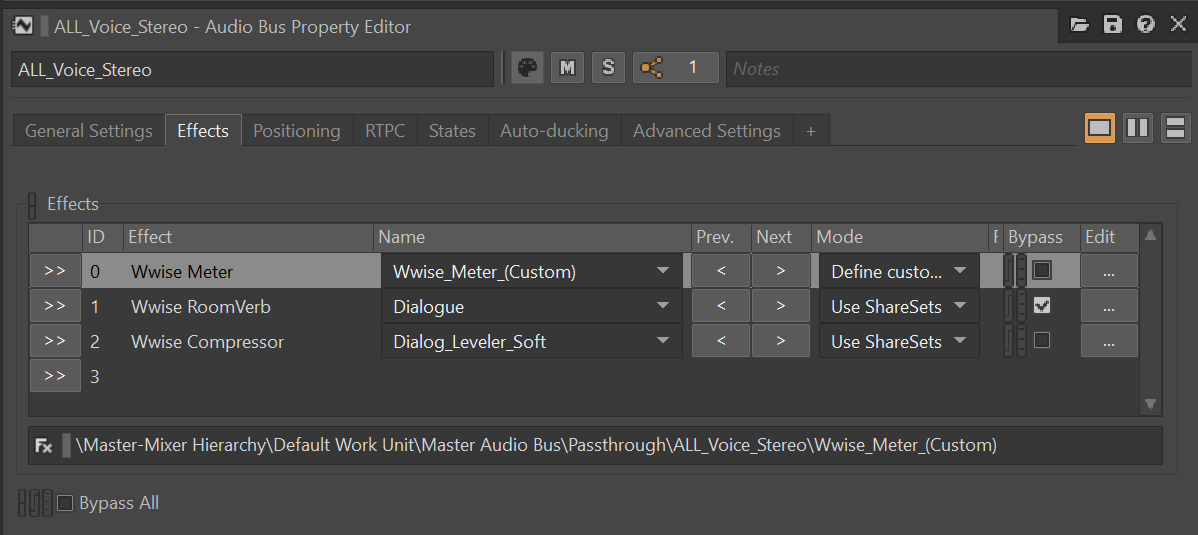

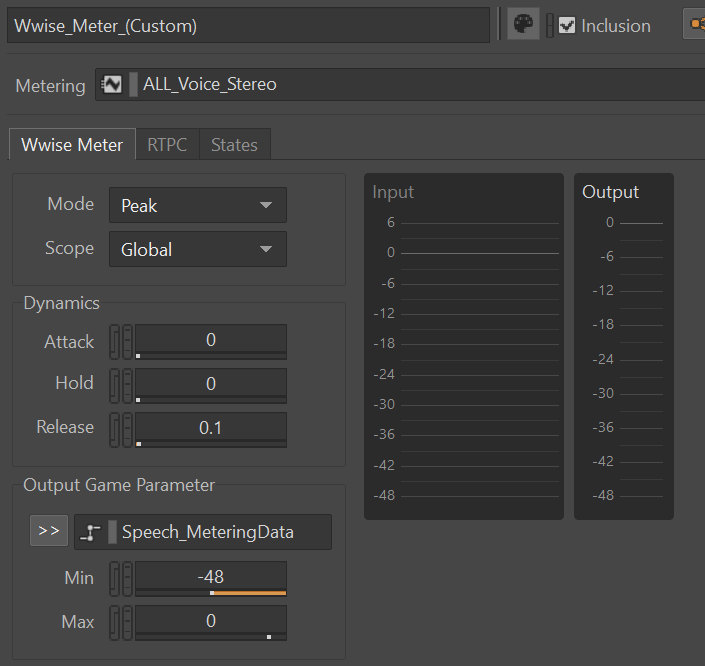

To acquire the volume data in real time, we use the Wwise Meter plug-in (Wwise Meter (audiokinetic.com)), an easy-to-use and very effective plug-in that can send audio data from Wwise to the game engine. The picture below shows the meter setup on our main speech bus.

Inside the Wwise Meter plug-in, we linked the RTPC named Speech_MeteringData, which is responsible for sending data back to the game engine. This RTPC captures the output volume information from speech triggered in the game. We clamp the value from -48 to 0, representing the range of audio volume levels. While it's possible for the value to exceed 0 if the speech volume is peaking, it's generally recommended to avoid this scenario in typical mix settings, ensuring that the value stays below 0.

By setting up this configuration, we can accurately capture and transmit audio volume data to the game engine in a controlled manner, facilitating the implementation of various gameplay mechanics.

The paragraphs above conclude the setup on the Wwise side. To use the data on the game engine side, we just need to add a few lines of code that check the range of the volume and convert that number into usable data for our animation system. The animation code here is only a rough sketch, as each game will have a different animation system or plug-ins.

For our game, we do not have a complicated animation system: the character's mouth only has Open and Closed states. We can therefore simply use a ternary conditional operation to decide whether to open the mouth of the speaking character and animate accordingly. (The implementation of GetGlobalRTPC() is shown further below.)

    float speechMeterValue = GetGlobalRTPC("Speech_MeteringData"); // query the RTPC once
    bool bIsCharacterMouthOpen = (speechMeterValue > -48.0f && speechMeterValue <= 0.0f) ? true : false;
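To make the Open/Closed decision easy to test without a running sound engine, the range check can be pulled into a pure function. The following is only a minimal sketch: `MouthState`, `IsMouthOpen`, and the two constants are hypothetical names that are not part of the project, and the -48 to 0 range is taken from the Meter clamp described above.

```csharp
public static class MouthState
{
    // Range of the Speech_MeteringData RTPC as clamped in the Meter setup.
    public const float MinMeterValue = -48.0f;
    public const float MaxMeterValue = 0.0f;

    // Returns true when the metered value indicates audible speech:
    // strictly above the floor and not above the ceiling.
    public static bool IsMouthOpen(float meterValue)
    {
        return meterValue > MinMeterValue && meterValue <= MaxMeterValue;
    }
}
```

With this sketch, `MouthState.IsMouthOpen(-48.0f)` is false (the meter is sitting at its floor), while `MouthState.IsMouthOpen(-12.0f)` is true.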

For many other games, especially 3D ones, characters have joints and bones in their skeleton rig, and the animator can adjust the angle of a joint to alter how far the mouth opens. This is usually represented by a float. Here, for example, assume the angle can be set with speakingCharacter.SetMouthOpenness(float mouthJointAngle), and that the mouth opening ranges from 0 degrees to speakingCharacter.MaxMouthOpenness() degrees.

In this example, we'll create a small wrapper function, GetGlobalRTPC(), that reads the current value of a global RTPC so we can use it on demand wherever this functionality is needed.

    public float GetGlobalRTPC(string rtpcName)
    {
        // Expected range of the metering RTPC, matching the clamp set in the Meter plug-in.
        const float minValue = -48.0f;
        const float maxValue = 0.0f;

        int rtpcType = 1; // 1 = query the global RTPC value
        float acquiredRtpcValue = float.MaxValue;
        AkSoundEngine.GetRTPCValue(rtpcName, null, 0, out acquiredRtpcValue, ref rtpcType);

        // Accept only values inside the expected range; otherwise report silence.
        if (acquiredRtpcValue >= minValue && acquiredRtpcValue <= maxValue)
        {
            return acquiredRtpcValue;
        }

        return minValue;
    }

In addition to reading the RTPC globally, the function above also guards against incorrect values: if the retrieved value falls outside the expected range of -48 to 0, it is ignored and the function returns -48.0f, the bottom of the range, so the mouth stays closed.

To support a joint-driven mouth like this, we can extend the Open/Closed check into the following function, which maps the metered volume to a mouth angle:

    public void SetMouthOpenessByWwiseAudio()
    {
        // Default to a closed mouth when the metered value is out of range.
        float mouthOpennessToSet = 0.0f;
        float retrievedMeteringRTPCvalue = GetGlobalRTPC("Speech_MeteringData");

        if (retrievedMeteringRTPCvalue > -48.0f && retrievedMeteringRTPCvalue <= 0.0f)
        {
            // Scale the maximum joint angle by the volume normalized over the -48 to 0 range.
            mouthOpennessToSet = speakingCharacter.MaxMouthOpenness() * Normalization(retrievedMeteringRTPCvalue, -48.0f, 0.0f);
        }

        speakingCharacter.SetMouthOpenness(mouthOpennessToSet);
    }

The function above sets the mouth openness in proportion to the audio volume data received from the Wwise Meter plug-in, so the mouth animation follows the speech level and stays synchronized with it.
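The Normalization() helper is not shown in the snippets above. A minimal sketch of what it might look like follows, assuming it linearly maps a value from [min, max] to [0, 1] and clamps anything outside that range; the class name `MeterMath` is hypothetical, and the actual project helper may behave differently.

```csharp
using System;

public static class MeterMath
{
    // Linearly maps value from [min, max] to [0, 1], clamping out-of-range input.
    // Assumption: this mirrors what the article's Normalization() helper does.
    public static float Normalization(float value, float min, float max)
    {
        if (max <= min)
        {
            throw new ArgumentException("max must be greater than min");
        }

        float t = (value - min) / (max - min);
        return Math.Max(0.0f, Math.Min(1.0f, t));
    }
}
```

With the -48 to 0 range, a metered value of -24 normalizes to 0.5, so a character whose MaxMouthOpenness() is 30 degrees would open its mouth to 15 degrees.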

Disclaimer: The code snippets used in this article are reconstructed generic versions intended solely for illustrative purposes. The underlying logic has been verified to function correctly, but project-specific API calls and functions have been omitted from the examples due to potential copyright restrictions.
