Hello. I’m Thomas Wang (also known as Xi Ye).

In part 1, I used mind maps to summarize WAAPI essentials. With the development environment configured, I wrote several short scripts with Python. And we had a first taste of the power of WAAPI.

Now in part 2, we will go through all the branches of WAAPI. For the sake of readability, I will do my best to describe them in simple terms along with practical API use cases.

I also created a repository on GitHub for easy access. You will find all the code referred to in this blog series.

Before we proceed, there are a few things that I want to clarify:

- The main purpose of listing the APIs here is to clarify their usage, and get you up and running quickly. It’s recommended that you make your own list according to the description in Part 1.

- This blog assumes that you’ve gone through Part 1, knowing how to use those tips and tricks such as formatting the JSON syntax for better readability, looking up the WAAPI arguments and return values, etc. Still, I suggest you check the online documents while reading this blog.

- For the sake of beginners, I tried to add notes to each line to address more details.

- Try to do some investigation before you raise a question. Wwise documents and Google search are more powerful than you can imagine.

- WAAPI features are updated over time, so make sure you install the latest version of Wwise. When the “The procedure URI is unknown.” error message occurs, it means the API you called is not supported by your current version of WAAPI.


Wwise.core Overview

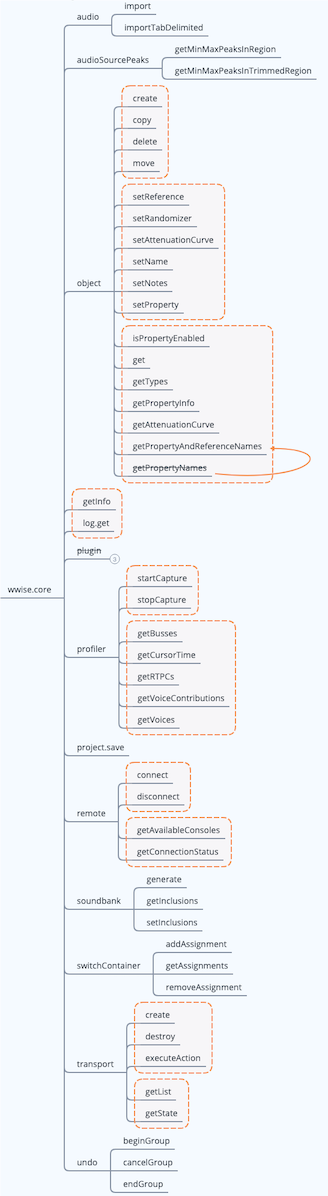

As an essential part of WAAPI, the wwise.core APIs are very powerful. Aside from wwise.core and soundengine, the remaining execution APIs are relatively simple. In this blog, I will focus on wwise.core.

From the mind map shown above, we can see that these APIs are primarily for Wwise audio design instead of sound engine configuration. If you are interested in optimizing Wwise operations, this blog can be very helpful.

Note: As shown above, those with a strikethrough have been replaced by new APIs.

audio (Importing Audio Files and Creating Wwise Objects)

Features

There are two APIs under the audio branch: import and importTabDelimited. As their names suggest, they are used to import audio assets. The former simply imports audio files and creates Wwise objects. The latter allows you to import audio files based on the rules specified in a tab-delimited file, and execute certain operations.

importTabDelimited imports the predefined information from a tab-delimited text file. This information includes the local directories of audio files, different Wwise hierarchies under which the objects are located (containers, Events, Output Busses), and how to make a reference to the property values or use Switches to assign child elements etc.

The text file can be generated by Microsoft Excel or other spreadsheet applications. Its first row defines the properties specified by each column heading. Each column determines what will be created in the Wwise project.

Usage

Obviously, audio is the most important branch in terms of asset importing. These APIs eliminate the need for repetitive operations when you manually import a large number of files. With proper information specified, they can automatically set up the hierarchies, properties, streams etc.

When you use import, the directories and properties have to be encoded in the filenames. To this end, you must create a dictionary that maps the designations used in these filenames, which reduces the risk of errors caused by long filenames.

Operating systems impose a limit on the length of filenames and paths. Technically, the filename in Wwise must be no longer than 256 characters. Otherwise, the importing operation could fail. Taking the system path into account, users usually don’t have many characters to use.

In this case, we can use importTabDelimited to import audio files from a tab-delimited text file in which certain rules are specified. In fact, this process can be further optimized. For example, sound designers can build a simple app with VBA to quickly generate tab-delimited files through Excel, or build a more complex GUI app with Python to create these files directly.
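To make the "generate the file with Python" idea a little more concrete, here is a minimal sketch that writes a tab-delimited file with the standard csv module. The column headings (Audio File, Object Path) are the ones I understand the Wwise tab-delimited import to expect, so check them against the documentation for your version. The function name build_tab_delimited and the sample paths are made up for illustration.

```python
import csv
import io


def build_tab_delimited(rows, columns=("Audio File", "Object Path")):
    """Return tab-delimited text: one heading row, then one row per import."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter="\t", lineterminator="\n")
    writer.writerow(columns)
    for row in rows:
        # Missing values are written as empty cells.
        writer.writerow([row.get(column, "") for column in columns])
    return buffer.getvalue()


# Hypothetical rows; replace them with your own files and hierarchy.
rows = [
    {
        "Audio File": "C:\\Audio\\footstep_01.wav",
        "Object Path": "\\Actor-Mixer Hierarchy\\Default Work Unit"
                       "\\<Sequence Container>Footsteps\\<Sound SFX>footstep_01",
    },
]
text = build_tab_delimited(rows)
```

The resulting text can be saved to disk and the file handed to importTabDelimited. Additional columns can be passed through the columns parameter once you know which headings you need.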

audioSourcePeaks (Getting Peak Values)

Features

There are two APIs under this branch: getMinMaxPeaksInRegion and getMinMaxPeaksInTrimmedRegion. They are used to get the peak values and return certain information. The former gets the peak values in the given region of an audio source. The latter gets the peak values in the entire trimmed region of an audio source. Note that we are not talking about just the Max Peak, but a Min/Max Peak pair specified by the numPeaks argument.

getMinMaxPeaksInRegion has two arguments: timeFrom and timeTo. They determine the start and end time of the section of the audio source for which peaks are required. For getMinMaxPeaksInTrimmedRegion, you don’t have to specify the start and end time; it simply evaluates the region that remains after the SFX object is trimmed.

Both of these APIs return base-64 encoded, 16-bit signed integer arrays. They need to be decoded, parsed in a given format (fmt), then converted into dB values.
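The decode, parse and convert steps can be sketched as follows. This is only an illustration under a few assumptions: the data is little-endian, the min and max values are interleaved, and dB is measured relative to 16-bit full scale (32768). The function names and the synthetic input are mine, not part of WAAPI.

```python
import base64
import math
import struct


def decode_peaks(peaks_b64):
    """Decode one base-64 string into a list of (min, max) 16-bit pairs."""
    raw = base64.b64decode(peaks_b64)
    count = len(raw) // 2
    # Assumption: little-endian signed 16-bit, min and max interleaved.
    fmt = "<%dh" % count
    values = struct.unpack(fmt, raw[: count * 2])
    return list(zip(values[0::2], values[1::2]))


def to_dbfs(sample, full_scale=32768):
    """Convert a 16-bit sample value to dB relative to full scale."""
    if sample == 0:
        return float("-inf")
    return 20 * math.log10(abs(sample) / full_scale)


# Synthetic data standing in for one channel of the API's return value.
fake = base64.b64encode(struct.pack("<4h", -16384, 16384, -32768, 32767)).decode()
pairs = decode_peaks(fake)
```

With real data, you would run decode_peaks on each channel's string from the return value and then apply to_dbfs to whichever of the min or max values you are interested in.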

Usage

I’m new to Wwise plug-in development. As far as I know, you don’t have to take so many Min/Max Peak values from a single audio source. However, if you do, you can use these two APIs to calculate the peaks globally across channels. They can provide you with up to 4294967295 such values.

Object (Object Operations)

Features

Create, copy, delete, move (Basic Object Operations)

These four APIs are used to execute the most basic object operations. They have arguments to define the object name, type, source path, target path etc.

Create allows you to create any of the many object types listed in the Wwise Objects Reference document. import and importTabDelimited, by contrast, can only create audio-related objects (containers, Events etc.).

With create, you can create Sound SFX objects, Work Units, containers, even effects and source plug-ins.

setReference, setRandomizer, setAttenuationCurve, setName, setNotes, setProperty (Setting Object Properties)

Create can only set a few object properties (such as notes). To set more object properties, you have to use the APIs listed above.

setReference sets an object's reference value. For instance, you may set a reference to an Output Bus for a Sound SFX object.

As for setRandomizer, setAttenuationCurve, setName and setNotes, they just do what their names suggest.

setProperty sets a property value of an object for a specific platform. To learn more about the properties available on each object type, refer to the Wwise Objects Reference doc.

For example, if you want to set a property within the Mastering Suite, all you need is provide its location information such as GUID or path, then change the "property" key value to the name of the property that you’d like to modify, and finally specify an appropriate value to replace with.

get, getTypes, getPropertyInfo, getAttenuationCurve, getPropertyAndReferenceNames (Getting Object Properties)

If you want to get information on the current project, the APIs above will be your solution. Get is a query-based API. It returns different types of information such as ID, name, size, path, duration etc. You can get what you want by simply setting an appropriate query parameter. As mentioned in Part 1, it can pass in arguments from both tables (Arguments and Options), and get the return result based on what you choose from the Options table.

So, what’s the difference between them?

In the Arguments table, there are two arguments: from and transform.

1. From indicates the data source of your query. You can search an object by its name, GUID, path, type etc. Also, you can filter a range of objects by specifying a sub-argument such as ofType or search.

2. Transform is used to transform a selected object. Let’s say we set a Random Container with from, then added select parent in transform. As a result, its parent object will be selected instead. So, transform is an important complement to from. It allows you to search the parent, children or reference based on what you set in from.

In the Options table, there are also two arguments: return and platform. (Note: these arguments can be left empty.)

1. Return specifies what return result you will get after the query process. The GUID and name of the object will be returned if left empty.

2. Platform specifies which platform will be queried. The default platform will be queried if left empty.
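Here is a small sketch of how the two tables translate into Python dictionaries. The helper name build_get_query and the object path are hypothetical. The keys (from, transform, select, return, platform) are the ones described above.

```python
def build_get_query(path, select=None, returns=("id", "name"), platform=None):
    """Build the (arguments, options) pair for ak.wwise.core.object.get."""
    # Arguments table: where the query starts...
    args = {"from": {"path": [path]}}
    if select:
        # ...and how the starting selection is transformed.
        args["transform"] = [{"select": [select]}]
    # Options table: what to return, and for which platform.
    opts = {"return": list(returns)}
    if platform:
        opts["platform"] = platform
    return args, opts


# Start from a (hypothetical) Random Container and select its parent instead.
args, opts = build_get_query(
    "\\Actor-Mixer Hierarchy\\Default Work Unit\\My Random Container",
    select="parent",
)
```

The pair would then be passed along as client.call("ak.wwise.core.object.get", args, options=opts), the same way as in the use cases later in this blog.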

Now, why do we need getTypes, getPropertyInfo, getAttenuationCurve, getPropertyAndReferenceNames?

Actually, those APIs are designed differently for various purposes. get queries an object to get its general properties. The other four get the meta properties: getTypes returns the types of all currently registered objects; getPropertyInfo returns specific info about the object properties (such as value range); getAttenuationCurve returns the Attenuation Curve settings of a specific object; getPropertyAndReferenceNames returns the object properties and current reference names.

Usage

There are a variety of situations in which you can use these APIs. Some examples are getting the converted size of an audio file, getting the properties and reference names of an object, querying all the platforms in your project or all the Output Busses in use, etc. It should be easier if you are familiar with the Queries tab in Wwise.

GetInfo and log.get (Getting Project Info and Logs)

Features

These two APIs are used to simply get certain information. getInfo returns global info of the current project, such as Wwise version, platform info, WAAPI version etc. log.get returns the latest log. In fact, the tabs in the Logs window are linked to the channel argument here. If you want to update the log dynamically within your own app, certain subscription APIs can be helpful. (We will talk about this later.) Take ak.wwise.core.log.itemAdded for example. This API returns info when an item is added to the log.

Usage

You can use these two APIs as needed. For example, you can use log.get to save each log to a database after you execute certain operations, or carry out further analysis to produce more customized feedback reports.

Profiler (Profiler Operations)

Features

startCapture, stopCapture (Switching On/Off Capture)

These two APIs are used to simply start and stop the Profiler Capture process, without having to set any arguments.

getBusses, getCursorTime, getRTPCs, getVoiceContributions, getVoices (Getting Info at A Specific Profiler Capture Time)

getBusses, getRTPCs and getVoices are similar. They just pass in a time value and get certain information such as bus gain, RTPC ID, RTPC value, voices etc.

getCursorTime returns the current time of the specified profiler cursor, in milliseconds.

getVoiceContributions returns all parameters affecting the dry path, such as voice volume, high-pass, low-pass etc.

Note that, for getBusses and getVoices, it’s possible to pass in two arguments, with the second defining the query range.

Usage

You can integrate the startCapture and stopCapture features into certain tools for manual or automatic control, facilitating more complex capture processes. For example, you can use the Unity WAAPI client to add some profiling capabilities and control the start and stop of the capture, then collect the profiling data for further analysis as needed.

Project.save (Saving Projects)

Features

This API is used to save the current project.

Usage

Instead of simply replacing the Ctrl + S operation, this API automatically saves the project based on certain trigger conditions, without sacrificing the performance. For example, when importing tens of thousands of files at one time or performing other batch processes, you could make a call to this API for every 100 or even 10 files, avoiding potential risks.
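The "save every N files" idea could look roughly like this. It is a sketch, not a tuned implementation: the function name import_in_batches and the batch size are my own choices, and client is assumed to be a connected WaapiClient (or any object with a compatible call method).

```python
def import_in_batches(client, import_entries, batch_size=100):
    """Import entries in batches and save the project after each batch.

    Returns the number of times the project was saved.
    """
    saves = 0
    for start in range(0, len(import_entries), batch_size):
        batch = import_entries[start:start + batch_size]
        client.call("ak.wwise.core.audio.import", {
            "importOperation": "useExisting",
            "default": {"importLanguage": "SFX"},
            "imports": batch,
        })
        # Save right away so a failure later on costs at most one batch.
        client.call("ak.wwise.core.project.save")
        saves += 1
    return saves
```

Each entry in import_entries is a dictionary with audioFile and objectPath keys, as in the first use case below.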

Remote (Remote Connecting to Game)

Features

Connect, disconnect (Switch On/Off Connection)

These two APIs are used to remotely connect to and disconnect from a game. To connect, you have to provide the host IP and, optionally, the ports. That’s why we need getAvailableConsoles listed below. If you want to connect to different game engines, you can use it to get the available clients first.

getAvailableConsoles, getConnectionStatus (Getting Available Consoles and Checking Connection Status)

getAvailableConsoles retrieves all consoles available for connecting Wwise Authoring to a Sound Engine instance, then returns the editor name, IP, port, platform etc.

getConnectionStatus checks the current connection status. Only connected game engines will return their console info and connection status.
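Combining the two steps, a connection helper might look like the sketch below. I am assuming that getAvailableConsoles returns a consoles list whose entries carry host and appName fields, as I read the WAAPI reference, so verify the field names for your version. The helper name is hypothetical, and client is assumed to be a connected WaapiClient.

```python
def connect_to_first_console(client, app_name=None):
    """Connect to the first available console, optionally filtered by appName.

    Returns the console that was chosen, or None if nothing matched.
    """
    result = client.call("ak.wwise.core.remote.getAvailableConsoles") or {}
    for console in result.get("consoles", []):
        if app_name is None or console.get("appName") == app_name:
            # Only the host is passed here; the ports are left at their defaults.
            client.call("ak.wwise.core.remote.connect", {"host": console["host"]})
            return console
    return None
```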

Usage

The connection and disconnection features can be integrated into your game menus as described in One Minute Wwise so that you can click and connect to or disconnect from a game quickly. Also, you can use tools like StreamDeck or Metagrid to automatically trigger Python scripts to activate the remote connection, reducing the time wasted on manual operations.

You can combine getAvailableConsoles and getConnectionStatus to integrate the Wwise Profiler data into your own app (refer to the “profiler” section). For example, you can automatically retrieve the desired info, or just inform users of the current connection status in your own app, allowing for a better workflow outside of Wwise.

Soundbank (SoundBank Generation and Settings)

Features

Generate, as its name suggests, generates a list of SoundBanks. In addition to simply generating all SoundBanks, you may check specific SoundBanks, Platforms or Languages and click Generate Selected. Instead of doing this manually, you can let generate do it for you.

GetInclusions retrieves a SoundBank's inclusion list. You identify the SoundBank by its GUID, name, or path.

SetInclusions modifies a SoundBank's inclusion list. You may add, remove and replace the SoundBank's inclusion list.

Usage

A SoundBank has to be created before you set its inclusion list. And you can use create to create a SoundBank’s objects and hierarchies.

So to conclude, the audio branch APIs import audio files and create objects; the create API creates and builds hierarchies, Events, SoundBanks etc.; the soundbank branch APIs set the inclusions and generate SoundBanks. This workflow can be used to automate the asset creation through externally defined structural files, or inspect the internal structure of a project via soundbank in an app outside of Wwise.

SwitchContainer (Switch Container Operations)

Features

As mentioned previously, you can use the create branch APIs to create containers, including Switch Containers. Now why do we need switchContainer?

Switch Containers won’t work unless they are assigned to Switch/State Groups, and each Switch/State has objects assigned.

You can use the switchContainer branch APIs to make these assignments.

AddAssignment assigns a Switch Container's child to a Switch. removeAssignment removes an assignment between a Switch Container's child and a State. All you have to do is provide the GUIDs of objects within a Switch Container and the GUIDs of Switch/States within a Switch/State Group.

GetAssignments returns the list of assignments between a Switch Container's children and states.
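As a rough sketch, a batch of assignments can be made with a simple loop. I am assuming the addAssignment arguments are named child and stateOrSwitch, as I read the WAAPI reference. The helper name and the mapping format are my own, and client is assumed to be a connected WaapiClient.

```python
def assign_children(client, assignments):
    """Assign Switch Container children to Switches or States.

    assignments maps each child object's GUID to a Switch/State GUID.
    Returns the number of assignments that were requested.
    """
    for child_guid, switch_guid in assignments.items():
        client.call(
            "ak.wwise.core.switchContainer.addAssignment",
            {"child": child_guid, "stateOrSwitch": switch_guid},
        )
    return len(assignments)
```

The GUIDs on both sides can be collected beforehand with ak.wwise.core.object.get.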

Usage

The switchContainer branch APIs can be used as a complement to the automated import workflow by assigning Switch elements to the containers that you created.

transport (Transport Control)

Features

create, destroy, executeAction

create creates a transport object for the given Wwise object. destroy destroys the given transport object. For create, you need to provide the Wwise object’s GUID. For destroy, you need to provide the transport object’s ID, which is returned by create.

So, what is a transport object? In fact, you are always repeating these two actions in your daily work within Wwise.

For example, when you select Event A, the Transport Control stays focused on it. In other words, you created an Event A transport object. If you select Event B, then Event A will be destroyed, and an Event B transport object will be created. If you click the Pin icon in the Transport Control view, the current transport object will persist, and no other transport objects will be created.

A Soundcaster Session is actually a collection of transport objects.

executeAction executes an action on the given transport object. There are five possible action values: play, stop, pause, playStop and playDirectly.

getList, getState

getList returns the list of transport objects. You can view all of the transport objects unless they are destroyed with destroy.

getState gets the state of the given transport object. You can query the state with this API after executing an action with executeAction.

Usage

The transport branch APIs can be used to define the playback behavior in an app outside of Wwise. This external app can be your own integration tool. You may use it to preview the sounds in your Wwise project or even replicate a Soundcaster Session outside of Wwise.

undo (Undo)

Features

People who are familiar with ReaScript can think of beginGroup and endGroup as Undo_BeginBlock() and Undo_EndBlock(). beginGroup begins an undo group. endGroup ends the last undo group. beginGroup can be used repeatedly.

cancelGroup cancels the last undo group.

Usage

An undo group is used to cancel a sequence of actions at once. Technically, it can also be used to redo these actions. However, WAAPI only supports undo for now. So, we will have to wait for future Wwise API updates. The undo branch APIs added the possibility to cancel actions outside of Wwise.
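One convenient way to pair beginGroup with endGroup in Python is a context manager. The sketch below is my own wrapper, not something WAAPI ships. It assumes endGroup takes a displayName argument (the label shown in the Wwise undo history) and that client is a connected WaapiClient.

```python
from contextlib import contextmanager


@contextmanager
def undo_group(client, display_name):
    """Wrap every WAAPI call made inside the with-block in one undo group."""
    client.call("ak.wwise.core.undo.beginGroup")
    try:
        yield
    except Exception:
        # Something failed: cancel the group instead of closing it.
        client.call("ak.wwise.core.undo.cancelGroup")
        raise
    else:
        client.call("ak.wwise.core.undo.endGroup", {"displayName": display_name})
```

It would be used as `with undo_group(client, "Batch rename"):` followed by the calls that should be undone together.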

Summary

You may forget what you’ve learned if you don’t practice. And this is why I suggest you make your own list according to the description in Part 1.

To utilize WAAPI, you should:

1) Summarize WAAPI essentials by yourself, with or without mind maps.

2) Open your mind and identify the pain-points in your audio design and integration workflow.

3) Figure out the basic logic, and determine which APIs to use.

4) Write the code to actually implement your ideas.

Use Cases

Let’s have a look at some real WAAPI use cases. These use cases focus on implementing essential features. Interactive design and GUI are not our main concerns here. You can refer to these code lines and write your own code as needed. I tried to cover as many wwise.core APIs as I could. I encourage you to keep researching those which I haven't covered.

If errors are reported, double check your code. You should be able to pinpoint what the problem is.

For simplicity, I encapsulated each API into a function. You may modify the sample code described in the “Execution APIs” section (Part 1) as needed.

The directory structure of Wwise on macOS differs from that of Windows. MacOS users should pay attention to the file path. (In the Wwise Launcher, go to PROJECTS > RECENT PROJECTS > show more >>.)

1. You import a Sound SFX object, build the hierarchies, set the properties, create an Event, and set the action type. Then you create a SoundBank, add the newly created Event, and generate that SoundBank.

By quickly importing audio files into Wwise and creating proper hierarchies or even Events, you can greatly increase the efficiency of your audio integration. In the following example, usually we need to populate the arguments with file path, properties and hierarchies by hand. Or, we can just execute these repetitive tasks with WAAPI.

In order to quickly import audio assets into the Wwise project, you can use naming conventions, database interaction and/or regular expressions to prepare the metadata first, then use the programming language of your choice to convert the formatted files into the JSON arguments required for WAAPI importing.
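For instance, a naming convention can be turned into import arguments with a regular expression. The convention used here (ContainerName_Index.wav) is purely hypothetical, as are the names NAME_PATTERN and build_import_entry. The objectPath notation is the same as in the import example below.

```python
import re
from pathlib import PureWindowsPath

# Hypothetical naming convention: <Container>_<Index>.wav, e.g. Footstep_01.wav
NAME_PATTERN = re.compile(r"^(?P<container>[A-Za-z]+)_(?P<index>\d+)$")


def build_import_entry(file_path):
    """Turn one file path into an entry for the "imports" list, or None."""
    stem = PureWindowsPath(file_path).stem
    match = NAME_PATTERN.match(stem)
    if match is None:
        return None  # The file does not follow the convention; skip it.
    object_path = (
        "\\Actor-Mixer Hierarchy\\Default Work Unit"
        "\\<Sequence Container>{container}"
        "\\<Sound SFX>{stem}"
    ).format(container=match.group("container"), stem=stem)
    return {"audioFile": file_path, "objectPath": object_path}


entry = build_import_entry("C:\\Audio\\Footstep_01.wav")
```

Files that don't match the pattern return None, so they can be collected and reported instead of being imported to the wrong place.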

Choosing APIs

1) Importing files: import

2) Setting properties: setProperty

3) Creating Events, adding sounds, creating SoundBanks: create

4) Setting inclusions and generating SoundBanks: setInclusions, generate

Writing Code

```python
# 1. Import files and build hierarchies
def file_import(file_path):
    # Define the arguments for file importing. imports contains the file path
    # and the destination path with the object type defined.
    # For non-vocal Sound SFX objects, importLanguage is set to SFX, and
    # importOperation is set to useExisting. This way, if the required container
    # already exists, it will be reused; if not, a new one will be created.
    args_import = {
        "importOperation": "useExisting",
        "default": {
            "importLanguage": "SFX"
        },
        "imports": [
            {
                "audioFile": file_path,
                "objectPath": "\\Actor-Mixer Hierarchy\\Default Work Unit\\<Sequence Container>Test 0\\<Sound SFX>My SFX 0"
            }
        ]
    }
    # Define the arguments for the return result. In this case, it will return
    # Windows-only info including the GUID and name of the object.
    opts = {
        "platform": "Windows",
        "return": [
            "id", "name"
        ]
    }
    return client.call("ak.wwise.core.audio.import", args_import, options=opts)
```

```python
# 2. Set the properties
def set_property(object_guid):
    # Set the target object to the GUID of the object that you want to modify.
    # The Volume property will be modified. The target platform is set to Windows.
    # The value will be changed to 10.
    args_property = {
        "object": object_guid,
        "property": "Volume",
        "platform": "Windows",
        "value": 10
    }
    client.call("ak.wwise.core.object.setProperty", args_property)
```

```python
# 3. Create an Event for the Sound SFX object and define its playback behavior
def set_event():
    # Create an Event and define its playback behavior
    args_new_event = {
        # Specify the storage path, type, name of the Event, and define what to
        # do if there is a name conflict
        "parent": "\\Events\\Default Work Unit",
        "type": "Event",
        "name": "Play_SFX",
        "onNameConflict": "merge",
        "children": [
            {
                # Define the playback behavior. The name is left empty. @ActionType is
                # set to 1 (i.e. Play). @Target is set to the sound object that you want to play
                "name": "",
                "type": "Action",
                "@ActionType": 1,
                "@Target": "\\Actor-Mixer Hierarchy\\Default Work Unit\\Test 0\\My SFX 0"
            }
        ]
    }
    return client.call("ak.wwise.core.object.create", args_new_event)
```

```python
# 4. Create a SoundBank and add the Event to it
def set_soundbank():
    # Create a SoundBank in the Default Work Unit under the SoundBanks folder
    args_create_soundbank = {
        "parent": "\\SoundBanks\\Default Work Unit",
        "type": "SoundBank",
        "name": "Just_a_Bank",
        "onNameConflict": "replace"
    }
    # Get the return values
    soundbank_info = client.call("ak.wwise.core.object.create", args_create_soundbank)
    # Add the Event to the SoundBank that you just created
    args_set_inclusion = {
        "soundbank": "\\SoundBanks\\Default Work Unit\\Just_a_Bank",
        "operation": "add",
        "inclusions": [
            {
                # Add the Play_SFX Event to the Just_a_Bank SoundBank, using filter
                # to specify what gets included (events, structures, media)
                "object": "\\Events\\Default Work Unit\\Play_SFX",
                "filter": [
                    "events",
                    "structures",
                    "media"
                ]
            }
        ]
    }
    return client.call("ak.wwise.core.soundbank.setInclusions", args_set_inclusion)
```

```python
# 5. Generate the SoundBank
def generate_soundbank():
    # Specify the SoundBank that you want to generate (here, the one created
    # in step 4). Set writeToDisk to True
    args_generate_soundbank = {
        "soundbanks": [
            {
                "name": "Just_a_Bank"
            }
        ],
        "writeToDisk": True
    }
    return client.call("ak.wwise.core.soundbank.generate", args_generate_soundbank)
```

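2. You get the global project info, the converted size of a Sound SFX object, and the size of a SoundBank.

Choosing APIs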

- Getting the global info: getInfo

- Getting the converted size of the Sound SFX object: get

- Getting the SoundBank path: get

Writing Code

```python
# 1. Get the global info
def get_global_info():
    # Get the project info with getInfo
    return client.call("ak.wwise.core.getInfo")

# 2. Get the Sound SFX size
def get_sfx_and_event_size(sound_sfx_guid):
    # Set the argument to the object’s GUID
    args = {
        "from": {
            "id": [
                sound_sfx_guid
            ]
        }
    }
    # Set the return values: object name and converted size
    opts = {
        "return": [
            "name", "totalSize"
        ]
    }
    return client.call("ak.wwise.core.object.get", args, options=opts)
```

```python
# 3. Get the SoundBank size
import os

def get_soundbank_size(soundbank_guid):
    # Same as the previous use case
    args = {
        "from": {
            "id": [
                soundbank_guid
            ]
        }
    }
    # Set the platform to Windows. Set the return value (i.e. SoundBank path)
    opts = {
        "platform": "Windows",
        "return": [
            "soundbank:bnkFilePath"
        ]
    }
    # The first returned item is a dictionary keyed by the requested return value,
    # so the path has to be read from it.
    # Note: For Mac users, the path value needs to be further processed.
    result = client.call("ak.wwise.core.object.get", args, options=opts)['return'][0]
    path = result['soundbank:bnkFilePath']
    # Use os.path.getsize() to get the SoundBank size and convert the unit to MB.
    size = os.path.getsize(path) / (1024 ** 2)
    return size
```

3. You re-create a Soundcaster Session outside of Wwise.

Soundcaster has two main purposes: 1. Saving a group of transport objects for reuse (Soundcaster Session); 2. Controlling the Game Syncs, object properties, M/S etc. during playback.

Here we look at how to re-create a Soundcaster Session.

Note: The transport objects in WAAPI cannot be saved with the Wwise project. To implement the Soundcaster Session features, you need to save the GUID of each session separately for re-creating those transport objects next time.
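One simple way to keep that information between runs is a small JSON file. This is just a sketch: the file layout and the function names save_session and load_session are my own, and I am assuming that what you store is the GUID of each Wwise object in the session.

```python
import json


def save_session(path, object_guids):
    """Write the Wwise object GUIDs of a session to a JSON file."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump({"objects": list(object_guids)}, handle, indent=2)


def load_session(path):
    """Read the GUIDs back so the transport objects can be re-created."""
    with open(path, "r", encoding="utf-8") as handle:
        return json.load(handle)["objects"]
```

On the next launch, each GUID returned by load_session can be passed to ak.wwise.core.transport.create to rebuild the session.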

Choosing APIs

- Transport control: transport

Writing Code

```python
# 1. Create a transport object (X is a customized number). The transport object’s ID
# will be returned after being created. For simplicity, the Wwise object’s name and
# the transport object’s ID were put into the same dictionary.
from waapi import WaapiClient, CannotConnectToWaapiException

def transportX(object_guid):
    try:
        with WaapiClient() as client:
            # Set the argument to the Wwise object’s GUID
            transport_args = {
                "object": object_guid
            }
            # Return the transport object’s ID in dictionary format.
            # For example, {'transport': 12}
            result_transport_id = client.call("ak.wwise.core.transport.create", transport_args)
            # Set id to object_guid. Use ak.wwise.core.object.get to get the Wwise
            # object’s name. Surely you can just use the Wwise object’s name to create
            # the transport object.
            args = {
                "from": {
                    "id": [
                        object_guid
                    ]
                }
            }
            opts = {
                "return": [
                    "name"
                ]
            }
            # Make a remote call. Return the Wwise object’s name in dictionary format.
            # For example, {'name': 'MyObjectName'}
            result_dict_name = client.call("ak.wwise.core.object.get", args, options=opts)['return'][0]
            # Merge the Wwise object’s name and the transport object’s ID. You will
            # get a Transport Session in dictionary format.
            # For example, {'name': 'MyObjectName', 'transport': 1234}
            # dict.update() returns None, so update first, then return the dictionary.
            result_dict_name.update(result_transport_id)
            return result_dict_name
    except CannotConnectToWaapiException:
        print("Could not connect to Waapi: Is Wwise running and Wwise Authoring API enabled?")
```

```python
# When you get several transport objects, put their info into a list. You will get a
# simple Soundcaster Session.
# env_session = [{'name': 'MyObjectName1', 'transport': 1234},
#                {'name': 'MyObjectName2', 'transport': 12345}]

# 2. Get the transport object’s ID from the Transport Session.
def get_transport_args(env_session, object_name, play_state):
    # Go through the session list (env_session). Get the transport_dict dictionary
    # for the given object name (object_name).
    for i in env_session:
        if i['name'] == object_name:
            transport_dict = i
    # Retrieve the transport object’s ID from transport_dict. Pass in the required
    # arguments.
    transport_id = {'transport': transport_dict['transport']}
    args = {"action": play_state}
    # dict.update() returns None, so update first, then return the dictionary.
    args.update(transport_id)
    return args
```

```python
# 3. Pass in the session list, object name, playback behavior. Make a remote call to
# executeAction to control the playback behavior.
from waapi import WaapiClient, CannotConnectToWaapiException

try:
    with WaapiClient() as client:
        args = get_transport_args(env_session, object_name, play_state)
        client.call("ak.wwise.core.transport.executeAction", args)
except CannotConnectToWaapiException:
    print("Could not connect to Waapi: Is Wwise running and Wwise Authoring API enabled?")
```

4. You capture the Profiler data and generate a report.

Choosing APIs

- Switching on/off the capture: startCapture, stopCapture

- Capturing the data: getBusses, getCursorTime, getRTPCs, getVoiceContributions, getVoices

Writing Code

```python
# 1. Start capturing the Profiler data. Return the start time.
def start_capture():
    return client.call("ak.wwise.core.profiler.startCapture")

# 2. Stop the capture. Return the stop time.
def stop_capture():
    return client.call("ak.wwise.core.profiler.stopCapture")
```

```python
# 3. Retrieve info from the captured data. Note that the cursor_time
# value must be between start_capture() and stop_capture().
def capture_log_query(cursor_time):
    # Set the time point.
    args_times = {
        "time": cursor_time
    }
    # Get the names of current voices, their states (physical or virtual),
    # and relative game objects’ names and IDs
    opts_get_voices = {
        "return": [
            "objectName", "isVirtual", "gameObjectName", "gameObjectID"
        ]
    }
    # Get the active busses
    opts_get_busses = {
        "return": [
            "objectName"
        ]
    }
    # Get the active RTPCs’ IDs and values
    log_rtpcs = client.call("ak.wwise.core.profiler.getRTPCs", args_times)["return"]
    # Get the voices and busses, using the options defined above
    log_voices = client.call("ak.wwise.core.profiler.getVoices", args_times, options=opts_get_voices)["return"]
    log_busses = client.call("ak.wwise.core.profiler.getBusses", args_times, options=opts_get_busses)["return"]
    # Hand the results back to the caller for reporting
    return log_rtpcs, log_voices, log_busses
```
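To turn the captured data into a report, the voice list can be summarized with a few lines of plain Python. This is only a sketch of one possible report: the function names are mine, and it relies solely on the isVirtual and gameObjectName fields requested from getVoices above.

```python
from collections import Counter


def summarize_voices(log_voices):
    """Count physical and virtual voices per game object."""
    summary = Counter()
    for voice in log_voices:
        kind = "virtual" if voice.get("isVirtual") else "physical"
        name = voice.get("gameObjectName") or "<unknown>"
        summary[(name, kind)] += 1
    return summary


def format_report(summary):
    """Render the summary as plain text, one line per game object and kind."""
    lines = ["%s: %d %s voice(s)" % (name, count, kind)
             for (name, kind), count in sorted(summary.items())]
    return "\n".join(lines)
```

The text returned by format_report can be printed, written to a file, or stored in a database alongside the capture time.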

What’s Next?

In Part 3, we will talk about the remaining execution APIs, including wwise.ui, wwise.waapi, wwise.debug and soundengine.
