Jack in…

Black Ice VR is an exciting and dark linear cinematic virtual reality experience that explores the power of memories and how dangerous they can be.

Players inhabit the world of David, a memory slicer, who is tasked by a young woman named Rin to dive into her mind and suppress a dark memory. A memory she isn’t comfortable sharing with just anyone, a memory of murder. The more David tries to help, the worse things get for them both. Ultimately, he discovers that our memories make us who we are, and what we are.

This project was conceived and developed during a six-month residency program at the University of North Carolina School of the Arts (UNCSA) called the Immersive Storytelling Residency, hosted by the university’s Media and Emerging Technology Lab (METL). During this time our small team of Arif Khan (Writer/Director), Lawrence Yip (Programmer/Interaction Designer), and me (Technical Artist/Audio Implementer) created this roughly 25-minute interactive narrative VR experience.

I’m Just an Edit Rat

As a team we had many discussions about what story should be told, and how it should look, feel, and sound. We eventually landed on a cyberpunk future where memories and identities can be altered and manipulated. Once we knew the narrative, we wanted to bring our cybernetic vision of the future to life, and audio was a crucial part of this. The music, ambience, and interaction cues needed to land well and feel like part of the world. We connected all of these audio pieces using Wwise and its integration with Unreal Engine.

As this was a short development time (about four months), much of the work around the characters, environments, animation / motion-capture, and general look and feel required a rapid and fluid design process.

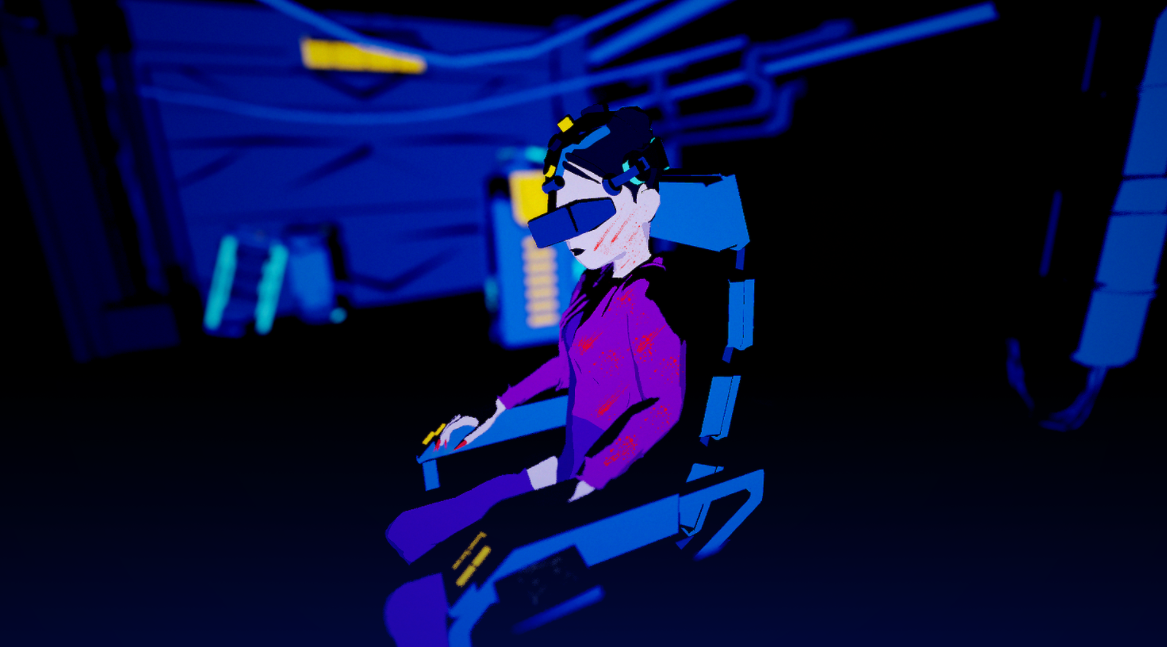

Much of the visual direction for Black Ice VR was inspired by 2D Japanese animation that has sci-fi and cyberpunk influences. The cel shading, deep blacks, and color schemes used in the work were inspired by comic books, especially those panels with monochromatic and duotone color schemes.

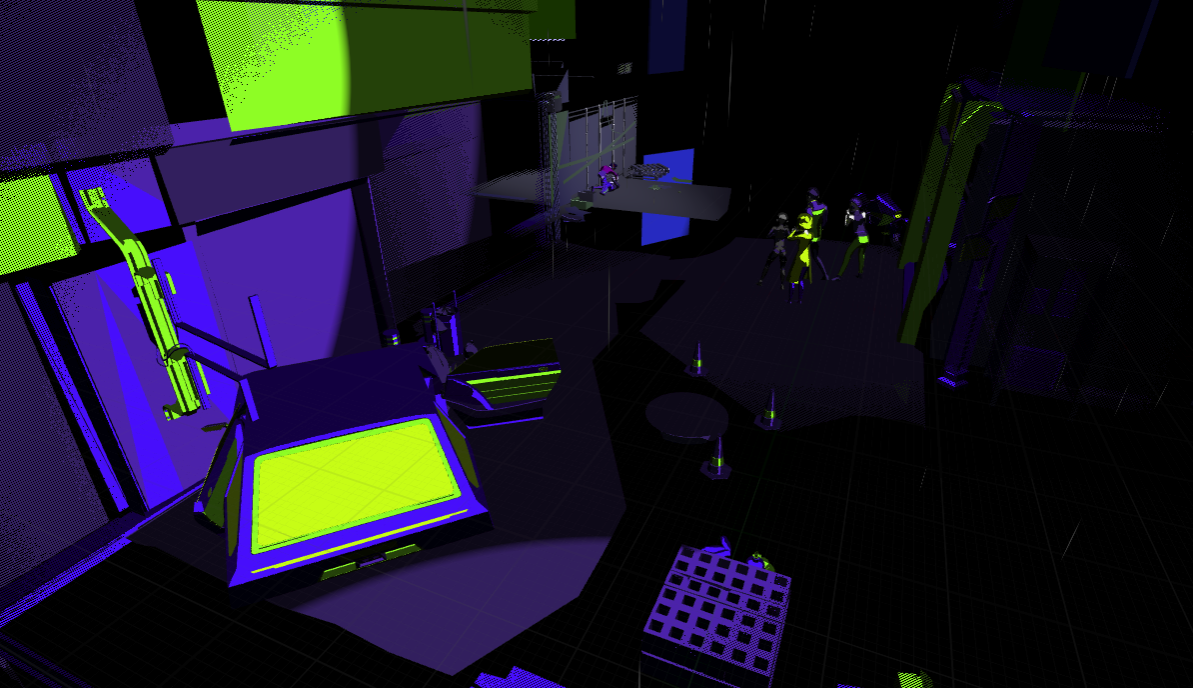

There are three main environments for Black Ice VR (originally five, but for the sake of simplicity and narrative flow the number was cut down). The first of those environments that the player inhabits (aside from the loading screen) is the Memory Slicer’s Office, David’s world.

The Memory Slicer’s Office has a blue/yellow duotone color scheme and is intended to evoke the dark, seedy part of the city. His space is also full of the technology he uses for his work, with lights and wires all over, which the lighter blue tones and yellow help to accentuate. David is not one of the high-class, expensive memory editors, and we want the user to understand that and feel situated in that space right away, as things move quickly in this experience.

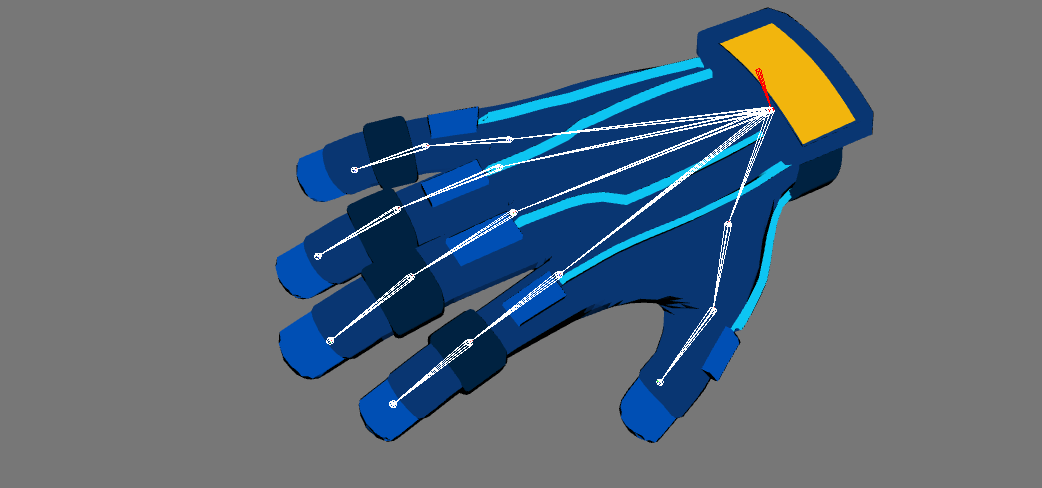

We worked with talented voice actors to bring all the characters and NPCs to life. In terms of characters for our VR experience, we have full-bodied and embodiable (more on this later) characters, as well as disembodied characters and voices. The first character the player hears is one of the latter: the AI companion that onboards the user to the experience and introduces them to the world. David, the character the player plays as for most of the experience, is also mostly disembodied, apart from the custom hand models.

The other character players are quickly introduced to is Rin, Black Ice VR’s protagonist. She wears a one-piece with a leather jacket, clothing that indicates her personality and the world she comes from. She has yellow cybernetic eyes and is voiced by a talented voice actress, and the action really begins with her line, “First time coming to an edit shop.”

As a team we were very lucky to work with talented freelance sound designers Andrew Vernon and Ajeng Canyarasmi, as well as composer Umberto, to help achieve our sound design goals and provide effective emotional music throughout the experience.

Most of the sound design and music choices were made between the sound designers, composer, and producer, aka the Audio Team. We did have discussions about what type of cyberpunk world this was, and those discussions were key to informing many of the choices they made. We were deciding whether the audio direction should take cues from the cyberpunk of the 80s, which is much more synth heavy and “retro” sounding, or whether it should be more futuristic, sci-fi focused, and grounded. Ultimately, we felt that grounded and futuristic was more relatable to a current player base and fit the narrative better, and this decision is felt in how the team built much of the audio for the experience.

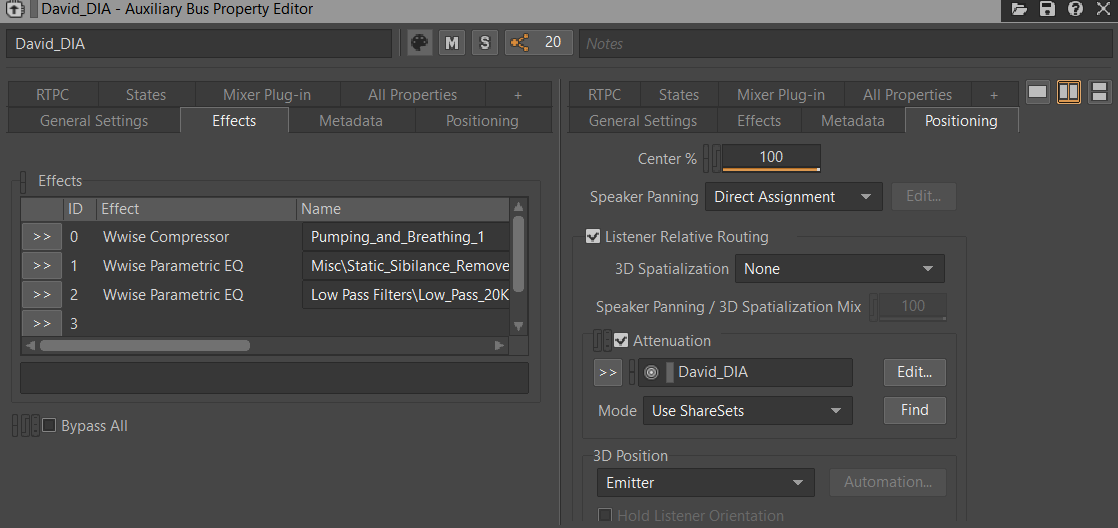

For our embodiable and playable characters we used a combination of effects, positioning, and mixing to give the player a good sense of who they are in the scene. For David this meant creating a “voice coming from my own head” feeling. We applied filters to give the voice a more nasal, deeper tonality, denoting that the voice the player hears speaking is their own and that it is coming from their position, from their head. The AI character was a similar situation, except that its voice comes from David’s headset, directly into his ear.

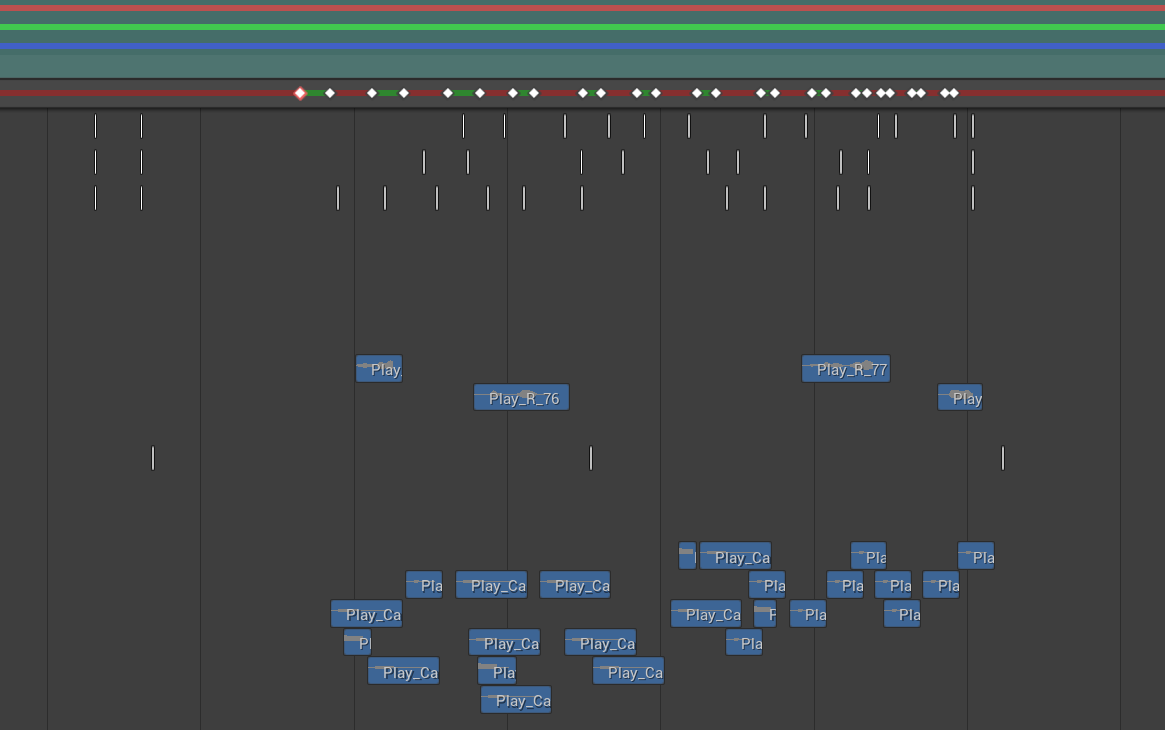

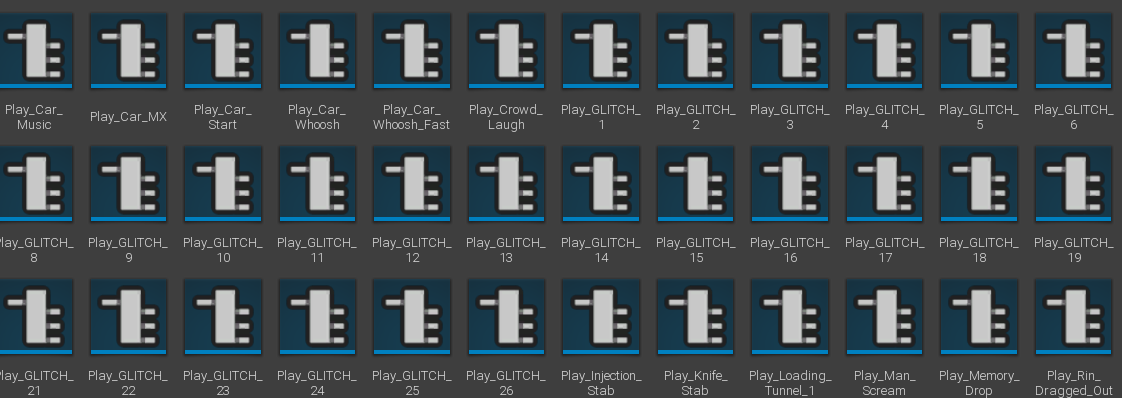

To keep track of all the voice acting assets, we used Wwise to set up our organization. As I mentioned before, this project required a ton of fluidity and Wwise was the perfect tool to easily swap out older, temporary, sound effects and voice assets for new ones.

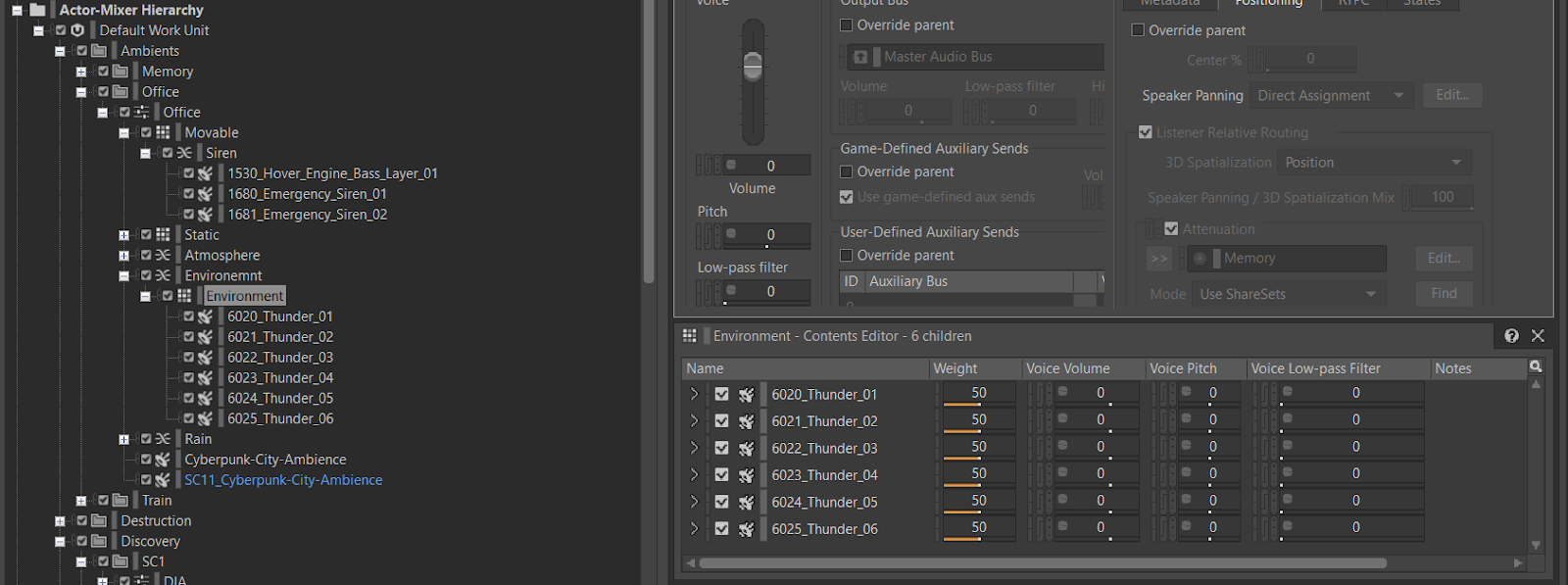

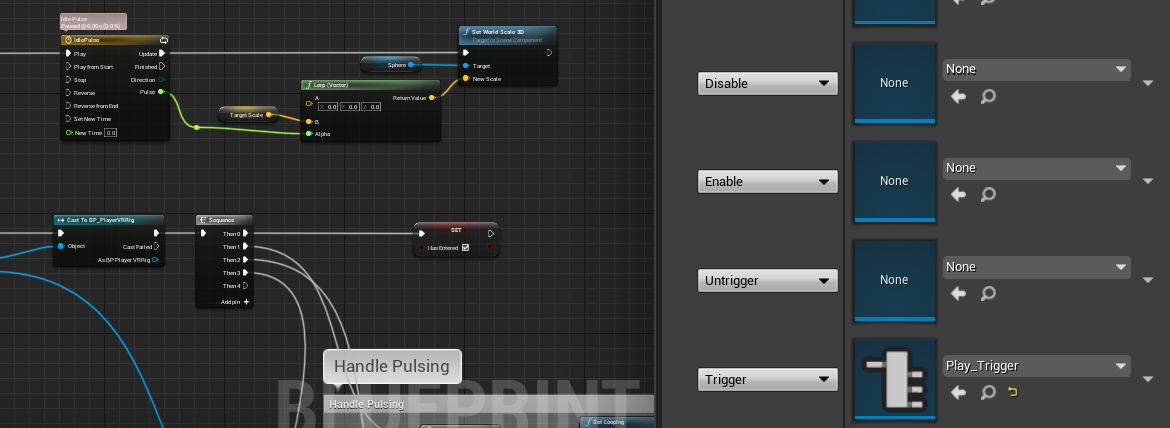

One of the more experimental audio features was not implemented in its entirety in the final version of the experience: the procedural ambience. This feature was cut because it was decided that, from a sound design perspective, a linear narrative would not fully benefit from audio that was more random and procedural. However, one instance of this system remains in the experience: the weather (thunder and rain) ambience for the memory editor’s office environment. This system used a mix of Blend and Random Containers in Wwise to create the weather variants. The resulting AK Events also used positioning on their final outputs.
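To make the idea concrete, here is a minimal Python sketch of the two behaviors that Random and Blend Containers are typically used for: choosing a variant at random without repeating the last one, and crossfading layers from a single intensity value. This is not Wwise code or the project's actual setup; the class, the function, the asset names (`thunder_01`, `rain_light`, and so on), and the linear crossfade are all assumptions made for illustration.

```python
import random


class RandomContainer:
    """Hypothetical stand-in for a random container: returns one child per
    play and never repeats any of the last `avoid_last` picks."""

    def __init__(self, children, avoid_last=1, rng=None):
        if avoid_last >= len(children):
            raise ValueError("avoid_last must be smaller than the number of children")
        self.children = list(children)  # assumed to be unique names
        self.avoid_last = avoid_last
        self.rng = rng or random.Random()
        self.history = []

    def play(self):
        # Exclude the most recent picks so the same thunder clap
        # is not heard twice in a row.
        recent = self.history[-self.avoid_last:] if self.avoid_last else []
        candidates = [c for c in self.children if c not in recent]
        choice = self.rng.choice(candidates)
        self.history.append(choice)
        return choice


def blend_gains(intensity):
    """Hypothetical blend: linear crossfade between a light and a heavy rain
    layer, driven by an intensity value clamped to [0, 1]."""
    x = min(max(intensity, 0.0), 1.0)
    return {"rain_light": 1.0 - x, "rain_heavy": x}


if __name__ == "__main__":
    thunder = RandomContainer(["thunder_01", "thunder_02", "thunder_03"])
    print([thunder.play() for _ in range(5)])
    print(blend_gains(0.25))
```

In an authoring tool these choices live in the container settings rather than in code; the sketch only shows why combining the two produces weather that varies without sounding looped.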

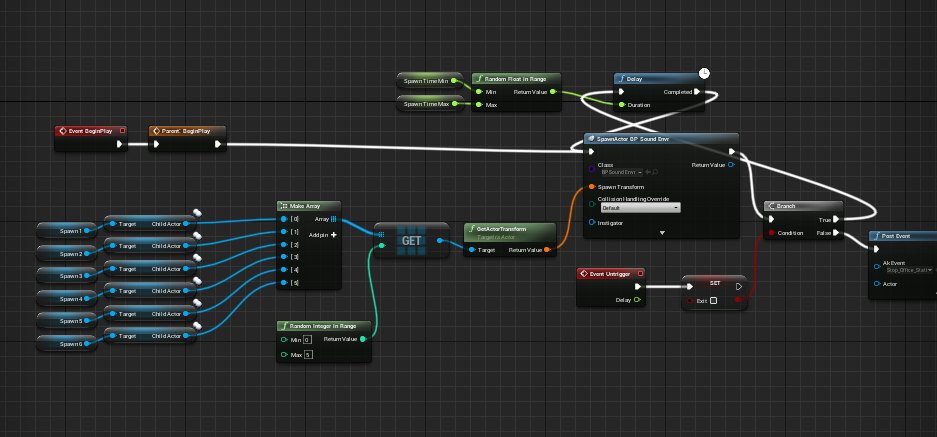

In Unreal Engine, the AK Events associated with the weather were spawned into the scene through a series of spawn points, with randomness supplied by a procedural spawning system built in Blueprints. Initially this system was duplicated and used to spawn animated sounds like cars and sirens rushing by, people walking outside, and hover cars flying overhead. However, much of this was scaled back for the final build.
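The spawning logic itself was built in Blueprints and is not shown here, but the general pattern can be sketched in a few lines of Python: wait a random interval, pick a random event, pick a random spawn point, repeat. Every name below (`SpawnPoint`, `schedule_spawns`, the event names, the gap values) is hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SpawnPoint:
    """Hypothetical spawn location placed in the level."""
    name: str
    position: tuple  # (x, y, z) in world units


def schedule_spawns(points, events, duration, min_gap, max_gap, seed=None):
    """Build a list of (time, event, spawn_point) entries covering `duration`
    seconds, with a random gap in [min_gap, max_gap] between entries."""
    rng = random.Random(seed)
    schedule = []
    t = rng.uniform(min_gap, max_gap)
    while t < duration:
        schedule.append((t, rng.choice(events), rng.choice(points)))
        t += rng.uniform(min_gap, max_gap)
    return schedule


if __name__ == "__main__":
    points = [
        SpawnPoint("window_left", (-400.0, 250.0, 180.0)),
        SpawnPoint("window_right", (400.0, 250.0, 180.0)),
        SpawnPoint("rooftop", (0.0, 0.0, 900.0)),
    ]
    events = ["Play_Thunder", "Play_Rain_Gust"]  # assumed event names
    for time, event, point in schedule_spawns(points, events, 60.0, 4.0, 12.0, seed=7):
        print(f"{time:6.2f}s  {event:<15} at {point.name}")
```

Passing a seed makes a run repeatable, which is handy when a linear narrative needs the "random" ambience to land the same way on every playthrough.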

Take the Black Ice

The second main environment is the editable memory space, where a large chunk of the experience’s interactive elements takes place. This is Rin’s world: her subconscious and her past lived experience of the events leading up to the murder are revealed here. Seeing as it is her space, and pivotal to her narrative journey, it reflects the stages of her emotional journey. Visually, this version of her memory space is meant to have a distinctly different tone than David’s office. While still meant to be a dark space, it is highlighted by neon green to give a sense of an outdoor neon street scene, drawing heavily from city background stills from the animated film Akira, which is also within the cyberpunk genre.

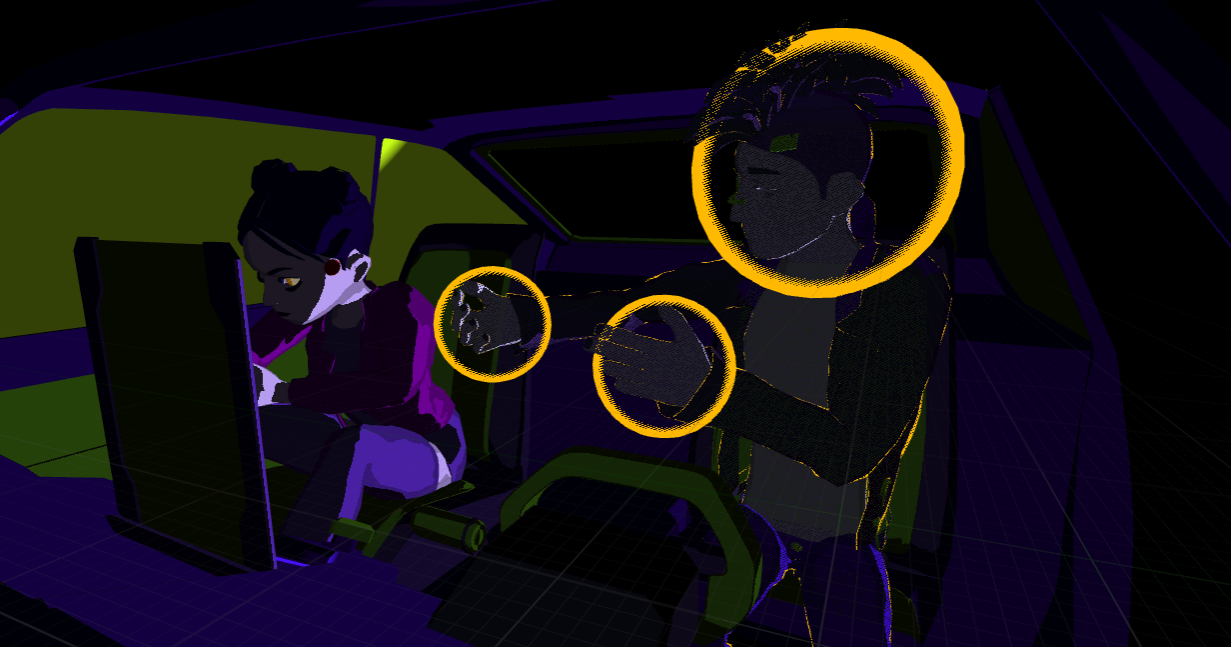

There are a couple of fun and novel interactions that came out of the discussions around player interventions in a linear narrative. How could we make user interactions feel meaningful and authentic to the story? Each of these interactions also had significant sonic components, and the emotion and feel of each interaction is intimately linked to its sound design. One of these interactions, all of which were created in engine by our brilliant programmer, is what we called the “embodiment mechanic.” Narratively speaking, in order for David to edit and interact with a memory, he must first embody a person who was present in that moment/memory. In the experience this is denoted by floating orbs around a dynamic pose that the player must take to progress the story.

But what does it sound like to “step into” someone else? Considering our conversations around sound design direction, and the themes of cybernetic tech in cyberpunk worlds, we decided it needed to feel electrical. Yet, since we are inhabiting another organic being, it needed to feel somewhat organic as well. To attach these sounds to the moment of embodiment, the interaction was designed with “interactive zones” around the hands and head of the person being embodied. These zones were programmed to trigger spatial sound events whenever the player's head or hands matched the corresponding positions of the embodiment pose.
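The zone check described above can be sketched roughly as follows. This is a simplified Python illustration rather than the in-engine implementation: the class name, the spherical zones, the radius, and the choice to report a body part only on the frame it enters its zone are all assumptions.

```python
import math


class EmbodimentPose:
    """Hypothetical pose made of spherical zones around the head and hands."""

    def __init__(self, targets, radius):
        self.targets = dict(targets)  # part name -> (x, y, z) target position
        self.radius = radius          # zone radius, same units as positions
        self.inside = {part: False for part in self.targets}

    def update(self, tracked):
        """Take the tracked positions for this frame and return the parts that
        just entered their zone, so a sound can be posted once per entry."""
        entered = []
        for part, target in self.targets.items():
            now_inside = math.dist(tracked[part], target) <= self.radius
            if now_inside and not self.inside[part]:
                entered.append(part)
            self.inside[part] = now_inside
        return entered

    @property
    def complete(self):
        """True once head and both hands are all inside their zones."""
        return all(self.inside.values())


if __name__ == "__main__":
    pose = EmbodimentPose(
        {"head": (0, 0, 170), "left_hand": (-30, 20, 120), "right_hand": (30, 20, 120)},
        radius=10.0,
    )
    frame = {"head": (0, 0, 172), "left_hand": (-31, 22, 118), "right_hand": (28, 19, 121)}
    for part in pose.update(frame):
        print(f"post spatial sound event at {part} zone")
    print("pose complete:", pose.complete)
```

Triggering on entry rather than on every frame spent inside a zone keeps the electrical "snap" from retriggering continuously while the player holds the pose.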

To denote Rin’s emotional and physical journey, this space is broken down into zones of activity, each with an emotional theme. As the player is railroaded through this section, the sound design became pivotal to presenting to the player what Rin was seeing, hearing, and feeling at that moment. These areas were separated into the Car, Netrunner, and Murder zones. The Car zone is meant to evoke an “adrenaline rush,” and is the preamble to Rin’s dark moment. The audio team created and developed a mix of sounds and music to accompany and interact with the animations and effects taking place, building in intensity over time and crescendoing to the zone’s conclusion. As in all of our scenes, much of the effect was achieved through the use of Wwise AK Events (with various characteristics) and Unreal Engine's Sequencer.

The Netrunner zone is all about creating a feeling of being overwhelmed and helpless. From an audio perspective it is texturally polyphonic, with a lot of voices talking and shouting over one another in quick succession. The player is placed right in the middle of it all and becomes an active participant in Rin’s discomfort. The final zone, the Murder zone, is all about creating an intimate and suspenseful moment for Rin, and the music direction informs this moment well.

Rip It All Out

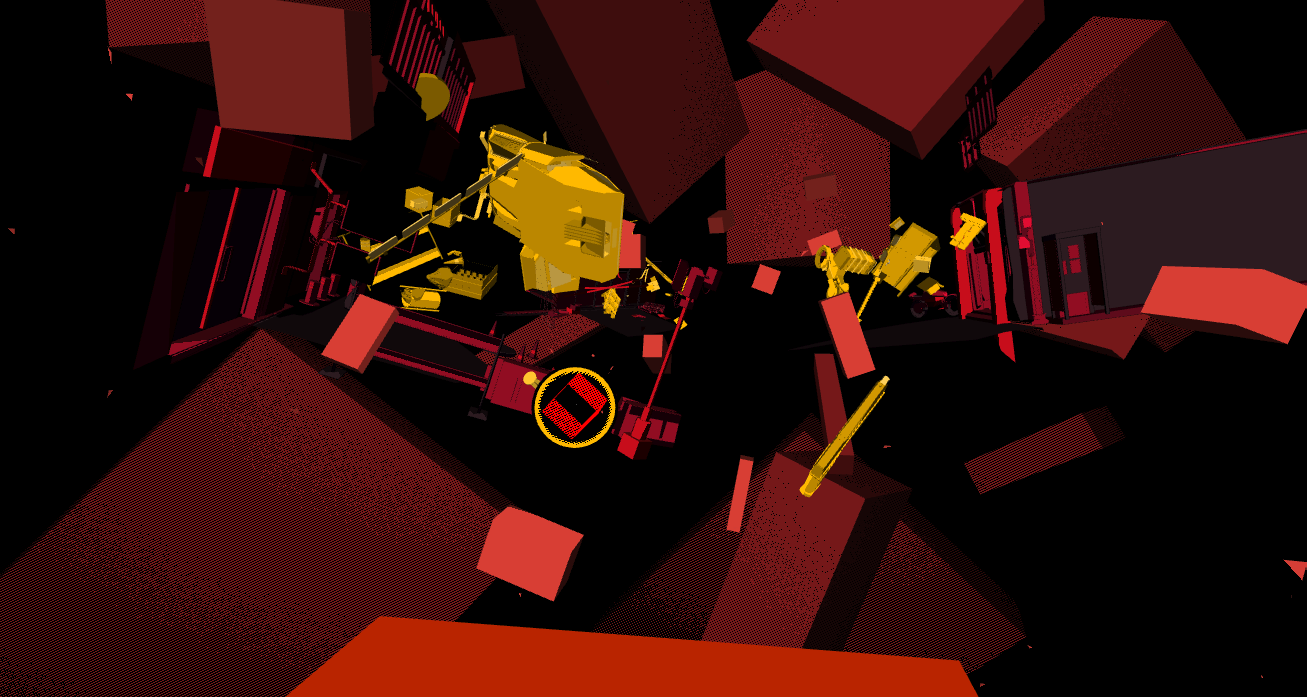

The third main environment the player will encounter is the “Destruction Memory.” This space is defined by chaos and instability. At this point Rin has become emotionally unstable and hostile towards David and demands that he do whatever is necessary to delete this dark memory from her system. Visually, the Destruction Memory draws on monochromatic/duotone illustrations of destructive war scenes from comic books and, again, pulls heavily from Akira. The tints and shades of red are meant to evoke a sense of danger, and the interactable objects are colored yellow to thematically complement the visual direction while still standing out to the player as interactable.

As David is uncertain of the ramifications of such a drastic course of action, the space is, too, uncertain of its stability and grounding in any form of reality. This point in the story is all about fun and engaging destructive power and creating a sense of urgency and impending doom for the player. The sound and music for this space truly demonstrate these ideas: they are crunchy, mechanical, and glitchy in timbre and sporadic in temporality. Sounds and voices from Rin’s past, present, and future collide in a truly chaotic manner that is complemented by the animations and dynamic lighting.

Conclusion

Black Ice VR had its world premiere at the South by Southwest (SXSW) 2022 Film Festival.

If you want to learn more about the project and the team behind it, please check out our site at www.blackicevr.com.

I would like to give a special thanks to Ryan Schmaltz, Karine Fleurima, Stacy Payne, and the entire METL Immersive Storytelling Residency Cohort. I would also like to thank the mentors who helped us along the way in developing this project.
