Prologue

“How can I deepen immersion and realism in games beyond mere reliance on background music?” As music supervisor and lead composer for this project, I started my exploration with this question to myself.

Introduction

Condor Heroes (射雕) is a Chinese-style open-world MMO set in the Song Dynasty (960-1279), with its story based on Jin Yong (Louis Cha)’s martial arts novel ‘The Legend of the Condor Heroes’. Historically, the Song Dynasty was a prosperous era with a thriving commercial economy and culture. It also saw music flourish in many forms, the most popular being traditional folk band performances held in folk entertainment venues known as Goulan Washe (勾栏瓦舍).

Furthermore, according to research, Chinese poetic meters (词牌: verse forms with a fixed structure and prosody that dictate rhythm and phonology) lend themselves to musical setting more readily than other forms of ancient Chinese poetry. At the time, they were commonly used as a means of cultural promotion, with lyrics often portraying the political situation, cultural ethos, and public sentiment of the day.

Chinese Poetic Folk Band in the game

So, what if I “revived” these traditional folk bands with 3D music, gave them dedicated stages in the game, and wrote new compositions based on poetic meters (词牌) related to the game's era or its stories? This would recreate the daily life of ordinary people of that time, so that both players and in-game characters could experience the immersive feeling of reliving the past.

Final arrangement of Chinese instruments (from left to right in the image above): 筚篥 Bili, 箫 Xiao, 小镲 small cymbals, 古琴 Guqin, 琵琶 Pipa, 缶 Fou, 大鼓 large drum, 碗琴 Wanqin, 拨浪鼓/小笛 rattle drum/little flute (played by twins), 碰铃/古筝 hand-held bells/Guzheng (played by twins), 尺八 Shakuhachi, 方响 Fangxiang, 笙 Sheng, and 檀板 Tanban, occasionally accompanied by 箜篌 Konghou and 三弦 Sanxian.

They’re all played in 3D with natural, simulated attenuation curves in Wwise, so for players who have the misfortune of getting lost, the music can serve as a kind of 'sound-based navigation', or simply as an easter egg for those who stumble upon it.

Once the folk band was conceptualized, the main concern became how these poetic meter songs would be presented and how to design the overall playback logic in the game. You might already have some questions, for example:

- Won’t these 3D poetic songs conflict with the 2D BGM playback logic?

- What happens to the BGM when players approach these bands?

- etc.

In fact, I started designing the global music system early in development, and decided to depart from the conventional composition methods, playback rules, and forms of expression of traditional Chinese-style game music.

This article starts with the 3D poetic songs mentioned above. It then describes in detail how the following features of our interactive music system were designed, and how our audio programmer supported them technically:

- Synchronization of 3D poetic songs and 3D random humming of NPCs.

- Synchronization of 3D poetic songs with 2D BGM.

- Synchronization of BGM and 3D sutra chanting of NPCs.

- Dynamic evolution of scene music in sync with real time.

- Transition design between different regional scene music without annoying frequent switching.

Music System Overview

After initial exploration, it became clear that the typical Wwise approach, a single music event controlled by global states or switches, would be too rigid for our needs, which included the following:

- There should be no clear boundary between diegetic and non-diegetic music. The former can become the latter and vice versa. Additionally, some elements of a theme can be diegetic while others are not; the elements themselves can dynamically appear and disappear as controlled by level markup, gameplay code, or even a cinematic sequence.

- The game contains a large and detailed world filled with characters, locations, and encounters. The music system should dynamically prioritize one over another depending on the situation, schedule silent moments, and manage what happens during pauses.

- The game is based on a book, so storytelling is very important. There are a lot of crafted linear sequences and cinematics that need to be synchronized with music, sometimes to a beat.

Clearly, this requires support for at least multiple 3D music emitters, callbacks, and a prioritization system, plus a ton of editor tools on top of that. All of this was implemented in what we called the ‘Conductor System’, which consists of three core components:

- World Conductor. It is a global entity that queues, prioritizes, and schedules music theme playback in accordance with rulesets defined in data tables. For example, scene music themes typically have lower priority than others and will be suspended during combat. The world conductor can also pass music callbacks outside the music system for gameplay synchronization (e.g. during cutscenes).

- Music Conductors manage music theme control flow and coordinate one or more music sources playing at the same time. They can also maintain internal state if needed. For example, a conductor can save the last playback position of a music theme to resume playback later (e.g. when a theme with higher priority has been requested to play).

- Music Sources are entities that execute commands from their owning Music Conductor and map them to Wwise API calls. Each source can be placed at an arbitrary location in the game world. The first music source of a conductor is always treated as the ‘guide track’: it collects callbacks, and all other sources are synchronized to it when needed.
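To make the three layers concrete, here is a minimal Python sketch of how they might fit together. It is a toy model, not the project's Unreal/Blueprint code: the class and method names, the integer priorities (higher wins), and the idea that a source merely tracks a position in seconds are all assumptions. A real Music Source would issue Wwise calls instead.

```python
from typing import List, Optional


class MusicSource:
    """Lowest layer; knows nothing about conductors (hypothetical toy model).
    A real source would map these commands to Wwise calls; here it only tracks a position."""

    def __init__(self, name: str):
        self.name = name
        self.position = 0.0  # seconds
        self.playing = False

    def play(self, position: float = 0.0) -> None:
        self.position, self.playing = position, True

    def stop(self) -> None:
        self.playing = False

    def advance(self, dt: float) -> None:
        if self.playing:
            self.position += dt


class MusicConductor:
    """Owns one theme's sources; sources[0] acts as the guide track."""

    def __init__(self, theme: str, priority: int, sources: List[MusicSource]):
        self.theme, self.priority, self.sources = theme, priority, sources
        self.saved_position = 0.0

    def start(self) -> None:
        # All sources start from the same saved position, so they stay aligned with the guide track.
        for source in self.sources:
            source.play(self.saved_position)

    def suspend(self) -> None:
        # Remember where the guide track was so the theme can resume there later.
        self.saved_position = self.sources[0].position
        for source in self.sources:
            source.stop()

    def advance(self, dt: float) -> None:
        for source in self.sources:
            source.advance(dt)


class WorldConductor:
    """Plays only the highest-priority requested theme and suspends the others."""

    def __init__(self):
        self.requests: List[MusicConductor] = []
        self.current: Optional[MusicConductor] = None

    def request(self, conductor: MusicConductor) -> None:
        self.requests.append(conductor)
        self._reschedule()

    def stop(self, theme: str) -> None:
        for conductor in self.requests:
            if conductor.theme == theme:
                for source in conductor.sources:
                    source.stop()
        self.requests = [c for c in self.requests if c.theme != theme]
        if self.current is not None and self.current.theme == theme:
            self.current = None
        self._reschedule()

    def _reschedule(self) -> None:
        # Ties go to the earliest request, because max() keeps the first maximal element.
        top = max(self.requests, key=lambda c: c.priority, default=None)
        if top is self.current:
            return
        if self.current is not None:
            self.current.suspend()
        self.current = top
        if top is not None:
            top.start()


# Usage: scene music is suspended by combat and resumes where it left off.
town = MusicConductor("scene_town", 10, [MusicSource("guide"), MusicSource("flute")])
fight = MusicConductor("combat", 50, [MusicSource("guide")])
world = WorldConductor()
world.request(town)
town.advance(12.5)
world.request(fight)  # combat outranks scene music, so the town theme is suspended
world.stop("combat")  # the town theme resumes from 12.5 s on every source
```

Keeping the priority decision in one place mirrors the layering described above: sources never look upward, and conductors never decide on their own whether they are allowed to play.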

It’s a simple layered architecture where lower-level entities don’t know anything about higher-level ones. Additionally, Music Conductors and Music Sources had to implement their respective Blueprint Interfaces. This added some initial setup friction, but let us completely override music playback logic while still being integrated into World Conductor's prioritization and scheduling mechanism. For example, at one point we wanted to add a virtual instrument that players could interact with to play a melody harmonized with the currently playing music theme. All we had to do was create a specialized Music Source that doesn’t play music per se, but is aware of the current chord of the music theme it belongs to, and uses simple heuristics to trigger random notes in accordance with the current harmony.
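The virtual instrument can be illustrated with a short sketch. The chord table, the MIDI-style note numbers, and the single heuristic used here (pick a random chord tone, avoiding an immediate repeat) are assumptions for illustration; the article does not describe the actual heuristics.

```python
import random
from typing import List, Optional

# Hypothetical chord table: chord name -> pitch classes (0 = C, 1 = C#, ... 11 = B).
CHORD_TONES = {
    "C": [0, 4, 7],
    "Am": [9, 0, 4],
    "F": [5, 9, 0],
    "G": [7, 11, 2],
}


def pick_note(chord: str, rng: random.Random, previous: Optional[int] = None, octave: int = 5) -> int:
    """Return a MIDI note number that belongs to the current chord.

    The guide track would report `chord` (e.g. through a music callback); the
    instrument only needs to know the harmony, not the music itself."""
    base = 12 * (octave + 1)  # MIDI convention: C4 = 60, so octave 5 starts at 72
    candidates = [base + pc for pc in CHORD_TONES[chord]]
    if previous in candidates and len(candidates) > 1:
        candidates.remove(previous)  # avoid hitting the same note twice in a row
    return rng.choice(candidates)


# Usage: one note per player interaction while the chord progression plays.
progression: List[str] = ["C", "C", "Am", "F", "G"]
rng = random.Random(42)
melody: List[int] = []
for chord in progression:
    melody.append(pick_note(chord, rng, melody[-1] if melody else None))
```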

Music system behavior is configured via Unreal’s data assets and data tables. Because World Conductor fences off implementation details from gameplay code, integrating with the cinematics and dialogue systems, implementing debug UI, and adding level markup actors were a breeze. World Conductor’s state machine handles any transitions dynamically, no matter where a music control request is issued. One interesting system we built on top of it is what we called the ‘Conductor Sequencer’. It essentially lets you sequence commands sent to World Conductor in a tracker-like fashion, and we used it extensively in small scripted situations where music had to follow a strict control flow.
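As a rough illustration of the tracker-like idea, a pattern can be a list of rows addressed by bar and beat, converted to seconds at a given tempo, and dispatched when playback time crosses them. The row format and command names below are hypothetical; the article does not describe how Conductor Sequencer stores its data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Row:
    bar: int      # 1-based bar number
    beat: int     # 1-based beat within the bar
    command: str  # hypothetical command names, e.g. "play", "stop", "stinger"
    target: str   # theme or stinger the command applies to


def row_time(row: Row, bpm: float, beats_per_bar: int = 4) -> float:
    """Seconds from the start of the pattern to this row."""
    beats = (row.bar - 1) * beats_per_bar + (row.beat - 1)
    return beats * 60.0 / bpm


def rows_between(pattern: List[Row], t0: float, t1: float, bpm: float,
                 beats_per_bar: int = 4) -> List[Row]:
    """Rows scheduled in the half-open window [t0, t1), in playback order.
    Calling this every frame with (previous_time, current_time) fires each row exactly once."""
    due = [r for r in pattern if t0 <= row_time(r, bpm, beats_per_bar) < t1]
    return sorted(due, key=lambda r: (r.bar, r.beat))


# Hypothetical pattern: start a drum loop, switch to cinematic music, end on a gong stinger.
pattern = [
    Row(1, 1, "play", "drum_loop"),
    Row(3, 1, "play", "cinematic_theme"),
    Row(4, 4, "stinger", "gong"),
]
```

At 120 BPM in 4/4, the second row falls at 4.0 s and the gong at 7.5 s, so consecutive windows such as [0, 4) and [4, 8) each dispatch their rows once.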

Contents

Synchronizing NPCs with music

《一剪梅》Random singing of poetic songs

This poetic lyric uses scenic descriptions to evoke emotion, portraying scenes infused with deep longing, effusive and rustic. Here are some core design points:

- Simple to sing and catchy (for the civilians).

- Suitable for child NPCs to sing along.

- Avoid the refined textures of Chinese instruments; simplicity is essential.

The humming of the player character and NPCs is triggered randomly and synchronized with the poetic song. We asked our voice actors to perform this singing.
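One way to get "random but on the beat" behaviour is to roll the dice at regular intervals and, on success, schedule the snippet for the next bar line of the guide track. The bar-line quantization, the roll chance, and the snippet names are assumptions for illustration, not the game's actual settings.

```python
import math
import random
from typing import List, Optional, Tuple


def next_bar_time(now: float, bpm: float, beats_per_bar: int = 4, music_start: float = 0.0) -> float:
    """Time of the first bar line at or after `now`, for music that started at `music_start`."""
    bar_length = 60.0 / bpm * beats_per_bar
    bars_elapsed = math.ceil((now - music_start) / bar_length)
    return music_start + bars_elapsed * bar_length


def maybe_schedule_hum(now: float, bpm: float, snippets: List[str], rng: random.Random,
                       chance: float = 0.25, beats_per_bar: int = 4,
                       music_start: float = 0.0) -> Optional[Tuple[float, str]]:
    """Randomly decide whether an NPC hums; if so, return (start_time, snippet).
    Assumption: snippets are quantized to the next bar line so they land in sync."""
    if rng.random() >= chance:
        return None
    return next_bar_time(now, bpm, beats_per_bar, music_start), rng.choice(snippets)
```

For example, at 120 BPM a 4/4 bar lasts 2 s, so a successful roll at 5.3 s schedules the hum for 6.0 s.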

In the game capture below, you can hear that we placed instruments and vocals in various locations (see screenshot below) to simulate a scene where "every household in this garden area often gathers to play instruments and sing together"; even the children (NPCs) hum along while flying kites. This also conveys musical culture being passed down from generation to generation, and gives the area a touch of rustic charm.

Because the performers are placed close to one another, players hear synchronized musical stems coming from different directions wherever they move.

《望海潮》Companion humming scene music melody during pauses or in sync with music

In most cases, an NPC following the player only interacts during combat. In my design, however, the NPC accompanying the player remains a child throughout: he occasionally hums tunes and gets up to mischief, so I decided to imbue him with some “spirituality” (just a hint of real life).

In the game capture below, you can hear the brother occasionally hum random snippets of poetic songs, always perfectly in sync with the beat.

Synchronization of 3D poetic songs with 2D BGM

In a town, a band can play a song (for example 《无题》) in sync with the area's background music. However, this raises a potential issue: won't it conflict with the background music?

I followed these design principles:

- Set a time limit for poetic band performances.

- Play the BGM in sync with the poetic songs during the same time period.

- Compose the BGM with tempo and instrumentation based on the poetic music, so that the two blend and intertwine seamlessly.

- Control BGM volume based on proximity.
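The proximity rule can be expressed as a mapping from listener-to-band distance to a BGM gain. The sketch below uses a linear ramp in decibels between two radii; the radii and the -18 dB floor are made-up values, and in a Wwise project this mapping would more typically live in an RTPC curve driven by a distance game parameter.

```python
def bgm_gain_db(distance: float, inner: float = 10.0, outer: float = 40.0,
                floor_db: float = -18.0) -> float:
    """BGM gain in dB as a function of the listener's distance (metres) to the band.

    Assumed values: beyond `outer` the BGM is untouched (0 dB); inside `inner` it sits
    at `floor_db`; in between it ramps linearly, handing focus to the band gradually."""
    if distance >= outer:
        return 0.0
    if distance <= inner:
        return floor_db
    t = (outer - distance) / (outer - inner)  # 0 at the outer radius, 1 at the inner radius
    return floor_db * t
```

The BGM is ducked rather than silenced, so even right next to the band the two pieces still blend.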

In short, whenever the player approaches the band, the BGM gradually decreases in volume and the focus shifts to the band. Because the tempos, keys, and harmonic progressions of the two pieces are exactly the same, the player does not experience a "sudden music switch" but hears the two songs blend seamlessly, while each can still stand on its own after the player leaves. The transition is smooth and hard to notice without active listening, which also blurs the boundary between diegetic and non-diegetic music.

Similar synchronization rules are designed in many other locations of the game.

Synchronization of BGM and 3D sutras chanting of NPCs

Lingquan Temple is a historic Buddhist temple that also holds a significant position in the game. Given its sacredness, I didn't want to rely solely on thematic background music to create a serene solemnity as usual; I hoped to incorporate some fresh elements. Apart from the wooden fish and bells commonly struck by monks in the temple, were there any other distinctive sounds?

So, I came up with the idea of incorporating the monks' daily chanting of Buddhist scriptures.

I asked a recording engineer to visit a Buddhist temple in China and record samples of monks chanting scriptures. After receiving the recordings, I proceeded with the following steps:

- Sync the tonality of chanting with the music.

- Sync the tempo of chanting with the music.

- Trigger the chanting pieces randomly on the downbeats of the music.
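The first two steps boil down to two ratios: a pitch shift that moves the chant's tonal centre onto the music's key, and a time-stretch factor that brings the chant's pulse to the music's tempo. The sketch below computes both, assuming the chant's tonal centre and pulse have already been estimated by ear or by analysis; the key encoding (pitch classes 0 to 11) and the choice of the smallest shift are assumptions, and the actual processing would presumably be done offline in an audio editor.

```python
def semitone_shift(source_key: int, target_key: int) -> int:
    """Smallest shift in semitones (-5..+6) moving the source tonic onto the target tonic.
    Keys are pitch classes 0-11 with 0 = C; small shifts keep processing artefacts low."""
    d = (target_key - source_key) % 12
    return d - 12 if d > 6 else d


def pitch_ratio(semitones: int) -> float:
    """Playback-rate ratio for an equal-tempered shift."""
    return 2.0 ** (semitones / 12.0)


def stretch_factor(source_bpm: float, target_bpm: float) -> float:
    """Duration multiplier for time-stretching without changing pitch (>1 means longer)."""
    return source_bpm / target_bpm


# Hypothetical example: a chant centred on A (9) at about 90 BPM, music in C (0) at 72 BPM.
shift = semitone_shift(9, 0)
stretch = stretch_factor(90, 72)
```

Here the chant would be raised by 3 semitones (A to C) and lengthened by a factor of 1.25.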

Evolution of scene music with real-time

Some players will inevitably play from morning until noon, or from afternoon until evening (i.e. a single session can span several time periods). Since in-game time is synchronized with the player's local time, the usual approach in such games is to play a separate piece of music for each time period. However, we wanted to avoid "obvious music switching". So I decided to make the scene music themes evolve dynamically in sync with the time of day, swapping and adding material within the same tracks.

- Define three time periods for music changes: morning, afternoon, and evening.

- Keep all music changes as stems within the same tracks.

- Design the dynamic stems to balance “continuity” and “variation”.

- Make the music of one time period the complete version.
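A minimal sketch of the idea, assuming the period boundaries and stem names below (none of them come from the project): each period switches a subset of stems on, one stem stays on throughout for continuity, and the afternoon plays the complete arrangement. In practice the volume changes would be faded over time rather than applied as on/off steps.

```python
from datetime import time
from typing import Dict

# Hypothetical period start times in local time, in ascending order.
PERIODS = [(time(5, 0), "morning"), (time(12, 0), "afternoon"), (time(18, 0), "evening")]

# Hypothetical stems of one scene track. "guqin" runs in every period (continuity);
# the others come and go (variation). The afternoon set is the complete version.
STEMS = {
    "morning": {"guqin", "xiao"},
    "afternoon": {"guqin", "xiao", "pipa", "drums", "guzheng"},
    "evening": {"guqin", "guzheng"},
}
ALL_STEMS = sorted(set().union(*STEMS.values()))


def period_for(t: time) -> str:
    current = PERIODS[-1][1]  # before the first boundary (e.g. 03:00) it is still "evening"
    for start, name in PERIODS:
        if t >= start:
            current = name
    return current


def stem_volumes(t: time) -> Dict[str, float]:
    """Target volume (0.0 or 1.0) for every stem of the track at local time `t`."""
    active = STEMS[period_for(t)]
    return {stem: (1.0 if stem in active else 0.0) for stem in ALL_STEMS}
```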

As a result, players should not hear any trace of “music switching”; instead, they might feel something like “the music in this scene seems richer in the afternoon” or “the music in that scene seems fainter in the evening.”

Transition design between different regional scene music without annoying frequent switching

The sections above describe interactive changes in music while the player stays within the same scene or under fixed conditions. We also designed the music logic for when the player walks or teleports from one location to another, whether through story progression or leisurely exploration.

Simply put, I don't want players to hear an immediate music change every time they enter a new area. Such repetition eventually makes players bored and tired, and since some players may enter and immediately leave an area by mistake, it's best to include a buffer (recognition) time and a "blank interval". For example:

For regular scenes:

- Start the new area's music only after the system recognizes that the player has stayed in the new area for a certain amount of time.

- Leave a quiet interval of a certain number of seconds before the new music starts.
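The two rules for regular scenes can be sketched as a small state machine that is ticked every frame with the area the player is currently in. The dwell and gap durations and the ("stop"/"start", theme) command tuples are assumptions for illustration; in the game such commands would go to the world conductor.

```python
from typing import List, Optional, Tuple


class AreaMusicGate:
    """Switch area music only after the player has stayed `dwell` seconds in a new area,
    then leave `gap` seconds of silence before the new theme starts (hypothetical defaults)."""

    def __init__(self, dwell: float = 8.0, gap: float = 4.0):
        self.dwell, self.gap = dwell, gap
        self.current: Optional[str] = None     # theme currently playing (None = silence)
        self.candidate: Optional[str] = None   # theme of the area the player is in
        self.entered_at = 0.0
        self.start_at: Optional[float] = None  # when the pending theme may start

    def update(self, now: float, area_theme: Optional[str]) -> List[Tuple[str, str]]:
        commands: List[Tuple[str, str]] = []
        if area_theme != self.candidate:
            # Crossed into another area (or back again): restart the dwell timer.
            self.candidate, self.entered_at, self.start_at = area_theme, now, None
        if self.candidate == self.current:
            return commands  # e.g. the player stepped out and straight back in
        if self.start_at is None and now - self.entered_at >= self.dwell:
            if self.current is not None:
                commands.append(("stop", self.current))  # fade the old theme out
                self.current = None
            self.start_at = now + self.gap
        if self.start_at is not None and now >= self.start_at and self.candidate is not None:
            commands.append(("start", self.candidate))
            self.current, self.start_at = self.candidate, None
        return commands
```

With the defaults, a player who steps from the town into the forest and back within a few seconds never triggers a switch; staying in the forest for 8 s stops the town theme, and the forest theme starts 4 s later.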

For a few special scenes:

- Gradually fade out some of the instruments of the current music and keep it playing without switching.

- Trigger an exclusive short musical stinger when the player enters the new area.

In open-world games like Condor Heroes, players may not want to hear music all the time. Leaving 30% to 40% of the time silent can help reduce listening fatigue.
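As a rough worked example, assuming silence is inserted as a pause of length g after each non-looping playthrough of length L, the silent share s of the time and the required pause are related by:

```latex
s = \frac{g}{L + g}
\qquad\Longrightarrow\qquad
g = \frac{s\,L}{1 - s}
```

For a hypothetical 3-minute theme (L = 180 s), a 30% silent share needs pauses of about 77 s, and a 40% share needs pauses of 120 s.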

Scene music and managing silence

Level markup can define music volumes that tell the ‘world conductor’ which music is expected to play in an area. By convention, we decided not to loop area music and instead let players enjoy some quiet time until it restarts later. During these pauses, the music system informs the game that characters can play special voice events, such as humming or whistling melodies and motifs from the music that has just played.

The world conductor also knows the current in-game time and can schedule morning, day, or night variants of a theme. Additionally, there’s a configurable ‘quiet time’ during which no scene music is scheduled.

Exclusion zones can be defined to smoothly fade out area music when the player approaches certain locations, either to emphasize their importance or to give space to events that are happening or about to happen.

Area music typically has the lowest priority, so it can be interrupted by combat or other encounters. In that case, the world conductor pauses the currently playing theme and resumes it after the higher-priority theme has been explicitly stopped. Note that Wwise still treats paused events as active voices, so they continue to affect auto-ducking.
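The scheduling rules in the two paragraphs above can be sketched as three small helpers: one non-looping playthrough followed by a random pause, a check for whether NPC voice events (humming, whistling) may play, and a quiet-time test that wraps past midnight. The pause range and quiet hours are hypothetical values; the article only says they are configurable.

```python
import random
from typing import Tuple


def schedule_playthrough(start: float, theme_length: float, rng: random.Random,
                         pause_min: float = 60.0, pause_max: float = 180.0) -> Tuple[float, float]:
    """Play the theme once (no loop), then pause for a random time (hypothetical range).
    Returns (music_end, next_start)."""
    music_end = start + theme_length
    return music_end, music_end + rng.uniform(pause_min, pause_max)


def humming_allowed(now: float, music_end: float, next_start: float) -> bool:
    """NPCs may hum or whistle motifs only while the scene music is paused."""
    return music_end <= now < next_start


def in_quiet_time(hour: int, quiet_start: int, quiet_end: int) -> bool:
    """True if in-game `hour` (0-23) is inside [quiet_start, quiet_end), wrapping past midnight."""
    if quiet_start <= quiet_end:
        return quiet_start <= hour < quiet_end
    return hour >= quiet_start or hour < quiet_end
```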

The ‘world conductor’ can also perform more complex transitions reminiscent of those available in Wwise, such as basic synchronization to the music measure and playing stingers to mask the transition. These transitions are configured in data tables.

Technical Details

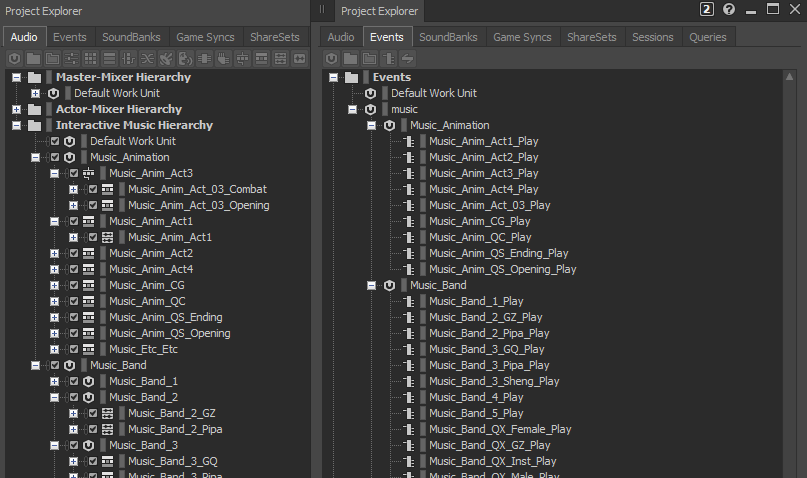

Wwise project organization

Each music theme composed for the game has its own hierarchy, independent of the others. Work units are organized by purpose, such as combat, scene, or linear sequence music. Each kind is routed to its own bus to ease mixing and to let auto-ducking work selectively when needed.

Isolating each music theme from the others increased implementation complexity on the game engine side, which now had to manage transitions, stingers, fade-ins and fade-outs, as well as silence and synchronization. The latter two were particularly important to us, as they had special requirements that would have been hard to meet with all music managed by a single PlayingID; more on this later.

Essentially, we moved a lot of complexity out of the Wwise project and into code. Music implementation varies by type, sometimes considerably: some themes follow the typical switch container structure, while others rely on code to loop them. In music system terms, they implement different ‘conductor’ classes, some of which I’ll describe below.

Band music

This diegetic music is represented in the game by a virtual band that performs songs at specified times. Players can enter a listening mode in which the camera pans around the characters. The requirements were:

- Each instrument or voice can be positioned individually in the scene.

- Music themes are not looping.

- Since the game is an MMO, bands must perform at specified times so that every player hears approximately the same thing, even when joining in the middle of a performance.

I can think of two ways to implement diegetic music that is perfectly synchronized across several world locations: managing a pool of 3D aux bus sends, or playing each instrument as a separate event. We chose the latter. I don’t remember why. The key observation was that a group of events of the same length posted before the call to AK::SoundEngine::RenderAudio will play in sync indefinitely, with the caveat that these events must have identical streaming, virtualisation, and seek table settings, which we enforced across the project with some help from WAAPI.

Additionally, Wwise has quite a few restrictions on the SeekOnEvent action, which we needed in order to synchronize band playback position. The most important one is that looping events are not seekable, so all band instruments are one-shots of equal length that the ‘conductor’ loops if needed.
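For players who arrive mid-performance, the conductor needs one common seek position for every stem. Assuming a performance is scheduled against a shared clock at a known start time, and that the conductor re-posts the equal-length one-shots to repeat them, the position follows from the elapsed time. The function and parameter names are hypothetical; the resulting value is what a conductor would pass (converted to milliseconds) to SeekOnEvent for each stem, with all stems posted in the same frame before RenderAudio.

```python
from typing import Optional, Tuple


def band_seek(now: float, performance_start: float, song_length: float,
              repeats: int) -> Optional[Tuple[int, float]]:
    """Where a player joining at `now` should start the band (hypothetical helper).

    Returns (repeat_index, seek_seconds), or None if the performance is not running.
    The stems are one-shots of identical length, so one seek value applies to all of them;
    the conductor handles repeats by re-posting the one-shots instead of using Wwise looping."""
    elapsed = now - performance_start
    if elapsed < 0 or elapsed >= song_length * repeats:
        return None
    return int(elapsed // song_length), elapsed % song_length
```

For example, for a 4-minute song scheduled to play twice from t = 0, a player joining at t = 300 s starts the second repeat 60 s in.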

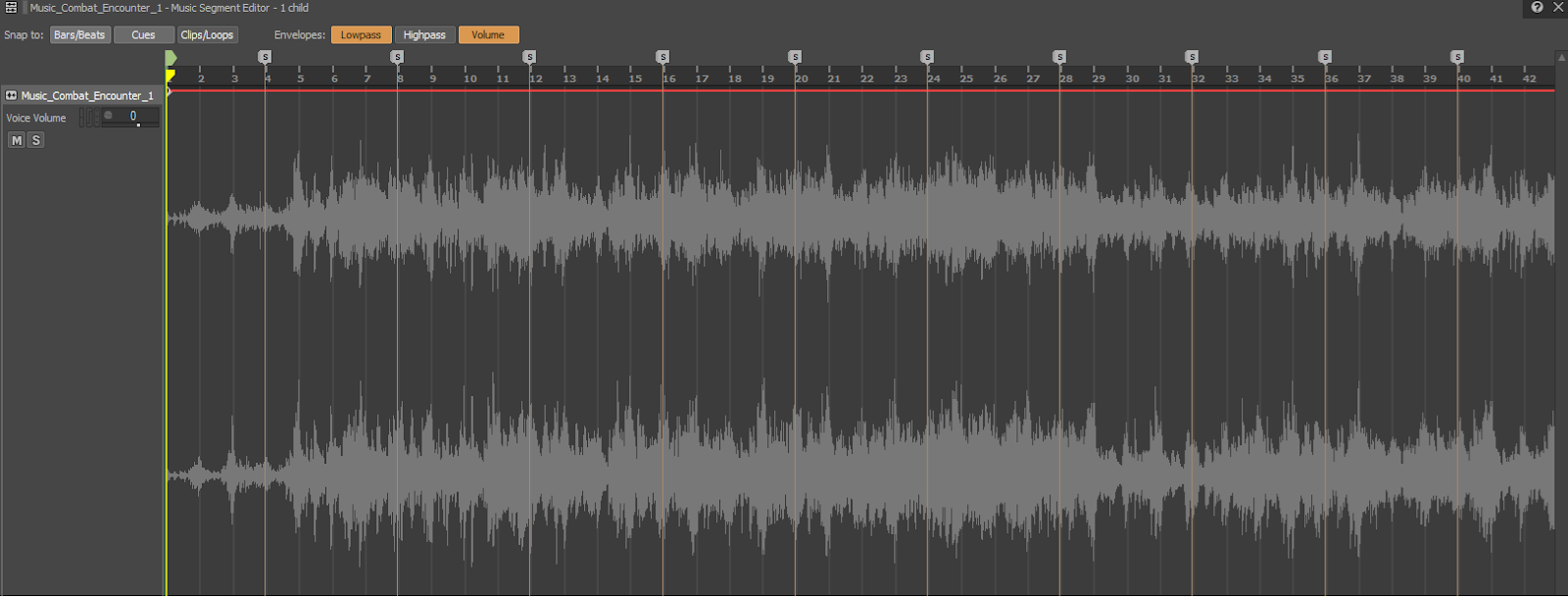

Combat music

The game has two kinds of combat music: boss fights and mini encounters.

I’ll begin with the former, as its conductor follows a typical Wwise implementation model with switch containers and transitions. A boss fight always progresses through specified parts in one direction, for example intro → stage 1 → stage 2 → victory or fail. We implemented a generic Music_Part switch for this, with a convention for what each numeric part means.
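The one-directional progression can be enforced on the code side before the Music_Part switch is set. The numeric values below are a made-up convention, since the article does not list the real one.

```python
from enum import IntEnum


class MusicPart(IntEnum):
    # Hypothetical numbering for the generic Music_Part switch.
    INTRO = 0
    STAGE_1 = 1
    STAGE_2 = 2
    VICTORY = 10
    FAIL = 11


ENDINGS = (MusicPart.VICTORY, MusicPart.FAIL)


def advance_part(current: MusicPart, requested: MusicPart) -> MusicPart:
    """Boss music only moves forward: backward requests are ignored,
    and once an ending is reached the part never changes again."""
    if current in ENDINGS:
        return current
    return requested if requested > current else current
```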

Unlike boss fights, mini encounters don’t have stages; their music is prepared as an infinite loop. Compositionally, the music is split into chunks with distinct characters and short transitions between them. The music starts with a fade-in and stops with a fade-out, and the conductor tracks the playback position to restart the music later from the point where it stopped… is what I would say if life were that easy. In our case, however, the music restarts at the marker that follows the position where it previously stopped. These markers are placed where chunk transitions begin. Starting music at such transitions with a fade-in reinforces the feeling of insignificance and recurrence of each encounter.

As this music requires seeking, the looping is managed by the conductor.
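The restart rule for mini encounters can be sketched with a sorted list of marker positions (in seconds) and a binary search. The marker times in any usage are invented; in practice they would presumably be read from the music cues placed at the start of each chunk transition.

```python
import bisect
from typing import List


def resume_position(stop_position: float, markers: List[float]) -> float:
    """Restart at the first marker strictly after where the music stopped.

    `markers` must be sorted (assumed to come from transition cues). Stopping after
    the last marker wraps to the first one, since the conductor loops the music."""
    i = bisect.bisect_right(markers, stop_position)
    return markers[i] if i < len(markers) else markers[0]
```

For markers at 0, 32, 64, and 96 s, music stopped at 40 s restarts at 64 s, and music stopped at 100 s wraps back to 0 s.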

Speaking of fade-ins, Wwise doesn’t provide an API to control them from code. This can be worked around with the following sequence: post the event → pause it immediately → wait one frame until after RenderAudio is called → resume with the specified transition duration. If you don’t wait one frame, Wwise essentially folds the command sequence and considers the event to be playing but not resumed. I don’t remember exactly why, but we eventually refactored this workaround to use RTPCs with interpolation times for fades.
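The ordering problem in the workaround is easier to see with a toy frame loop. In the sketch below, the log entries stand in for the real sound engine calls (which the article does not spell out), and FrameScheduler is a hypothetical helper; the point is only that the resume is issued one frame later, after RenderAudio has processed the post and the pause.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class FrameScheduler:
    """Toy game loop (hypothetical) that can defer callbacks to a later frame."""

    def __init__(self):
        self.frame = 0
        self.pending: DefaultDict[int, List[Callable[[], None]]] = defaultdict(list)
        self.log: List[tuple] = []

    def at_frame(self, frame: int, callback: Callable[[], None]) -> None:
        self.pending[frame].append(callback)

    def tick(self) -> None:
        for callback in self.pending.pop(self.frame, []):
            callback()
        # Each frame ends with the sound engine processing all queued commands.
        self.log.append(("render_audio", self.frame))
        self.frame += 1


def fade_in(scheduler: FrameScheduler, event: str, duration_ms: int) -> None:
    # Post and pause in the same frame...
    scheduler.log.append(("post", event))
    scheduler.log.append(("pause", event))
    # ...but resume (with the fade duration) only on the next frame, after RenderAudio has run.
    scheduler.at_frame(scheduler.frame + 1,
                       lambda: scheduler.log.append(("resume", event, duration_ms)))


scheduler = FrameScheduler()
fade_in(scheduler, "combat_mini", 2000)
scheduler.tick()
scheduler.tick()
```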

Connecting conductors to the world

Up to this point we have covered how different kinds of music themes work internally. What remains is to give some examples of how our system enables intricate interaction between music and other game systems:

- A temple in one of the levels has an altar that triggers faint sounds in response to callbacks from Wwise.

- When exploring outdoors, players can find a small village where a character sings a song in perfect sync with the currently playing background music.

- A musician playing a drum draws the player's attention to a location. Upon reaching it, a cutscene begins and shows a fighting competition. The diegetic drum loop turns into non-diegetic cinematic music that ends with a gong hit. As the gong signifies the end of the fight, it was important for the hit to be perfectly synchronized with the visuals. We implemented this by sending a callback from the ‘world conductor’ to a level sequence player, which jumps to the next cinematic cut upon receiving it. The callback originates from a Wwise music cue marked up with a special name.
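The gong example relies on forwarding selected music cues out of the music system. Here is a minimal sketch of such routing on the world conductor's side, assuming a hypothetical "Sync_" prefix marks the cues meant for gameplay (the article only says the cue has a special name):

```python
from typing import Callable, Dict, List


class CueRouter:
    """Forwards specially named music cues from the guide track to gameplay listeners,
    so listeners (e.g. a level sequence player) never talk to Wwise directly."""

    PREFIX = "Sync_"  # hypothetical naming convention

    def __init__(self):
        self.listeners: Dict[str, List[Callable[[], None]]] = {}

    def subscribe(self, cue_name: str, callback: Callable[[], None]) -> None:
        self.listeners.setdefault(cue_name, []).append(callback)

    def on_music_cue(self, cue_name: str) -> int:
        """Called from the music callback; returns how many listeners were notified."""
        if not cue_name.startswith(self.PREFIX):
            return 0  # ordinary cues stay inside the music system
        handlers = self.listeners.get(cue_name, [])
        for handler in handlers:
            handler()
        return len(handlers)


# Usage: the cinematic jumps to its next cut when the gong cue arrives.
cuts: List[str] = []
router = CueRouter()
router.subscribe("Sync_GongHit", lambda: cuts.append("jump_to_next_cut"))
```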

In all these examples, the functionality was implemented with the Conductor API; the game code didn’t need to know anything about Wwise.

Epilogue

This article provides a relatively systematic overview of our "Conductor" interactive music system, highlighting its core role in enhancing player immersion, emotional interaction, and the overall gaming experience. We worked hard to make every type of background music not only feel "emotional" but also be "rational" in its context, and we hope this was as interesting for you to read as it was for us to develop.
