It Takes Two by Hazelight Studios is a split screen action-adventure platformer co-op game. It distinguishes itself from other action-adventure games through the significant amount of variety in its content, as well as its original and playful animated style. When it came to developing the soundscape of such a game, the most significant challenge was the split screen and its implications on the audio.

Join our friends at Hazelight Studios as they answer questions about the challenges and opportunities they encountered while developing the audio for It Takes Two. The Audio Team, made up of Philip Eriksson (Lead Sound Designer), Joakim Enigk Sjöberg (Technical Sound Designer), Göran Kristiansson (Audio Programmer), and Anne-Sophie Mongeau (Senior Sound Designer), explores its approach to split screen, the mix, the sound design, the performance, and the music. This interview brings to light the technical solutions that enabled them to deliver a high-quality audio experience while setting new standards for audio in games that fall within the same category.

Split Screen

Can you summarize your technical solution to handle the vertical split screen in It Takes Two?

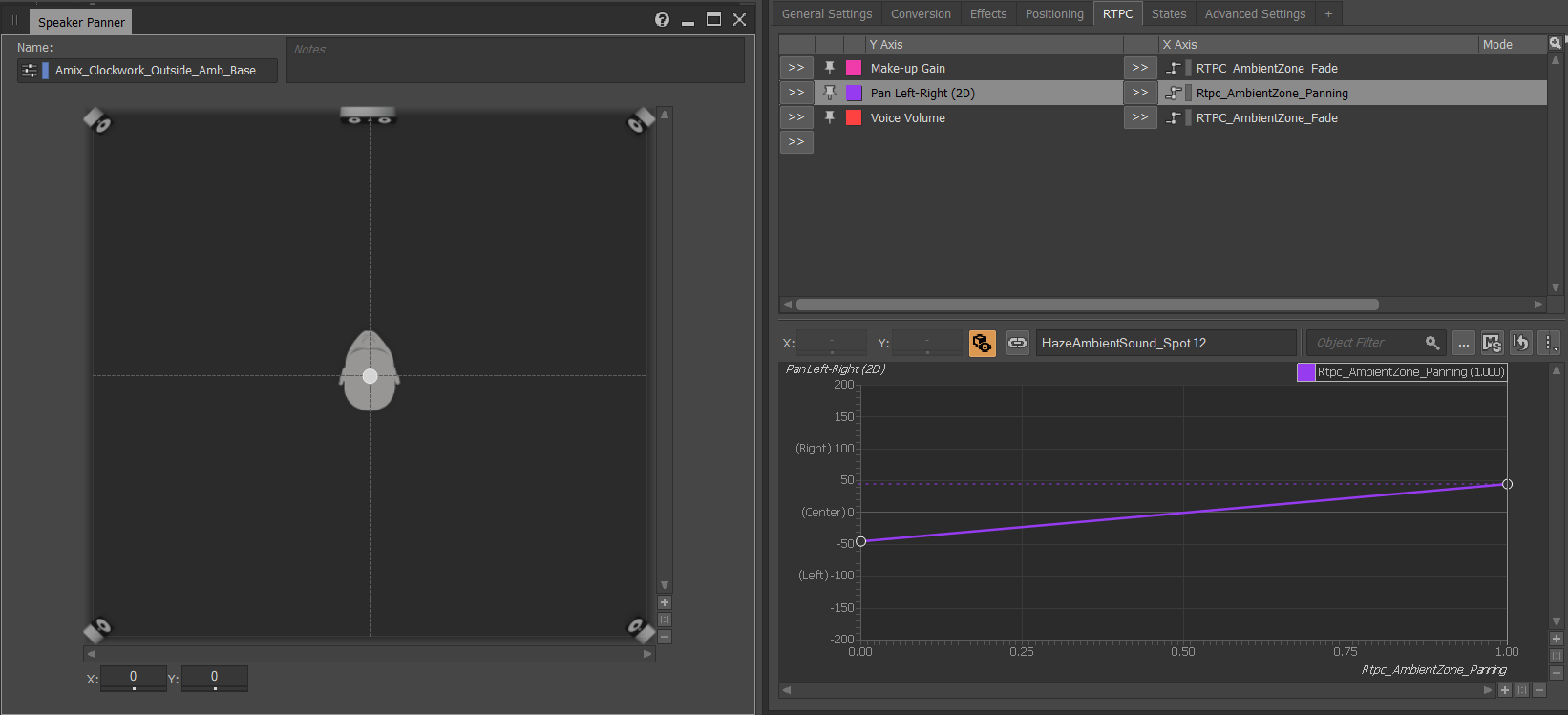

PHILIP: We handled the vertical split screen by splitting the sound mix into two halves as well. We used the left, center, and right speakers as our base for all sounds and always favoured the front speakers over the rear. We panned the sounds that belonged to the left half of the screen between the left and center speakers, with a small bleed toward the right speaker. We used this trick for player movement, ability sounds, and even voices. Our ambiences were four channels and therefore played in the rear speakers as well. When the characters were in separate locations and heard different ambient sounds, we lowered the right character's ambience in the left front and left rear speakers, and vice versa. This always created a clean experience that matched the screen space.

Image 1: Panning ambiences
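To make the half-screen panning idea concrete, here is a minimal sketch, not Hazelight's implementation. It assumes a constant-power pan between the outer speaker and the center speaker, plus a fixed bleed to the opposite speaker. The function name `half_screen_gains`, the `bleed` default of 0.1, and the mirrored behaviour for the right half are all assumptions made for illustration.

```python
import math

def half_screen_gains(pan, side, bleed=0.1):
    """Return (left, center, right) gains for a sound owned by one screen half.

    pan:   0.0 = outer edge of that half, 1.0 = the split line (center speaker).
    side:  "left" or "right", the screen half the sound belongs to.
    bleed: hypothetical small gain leaked to the opposite speaker.
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    pan = min(max(pan, 0.0), 1.0)
    outer = math.cos(pan * math.pi / 2.0)   # constant-power pair: outer speaker
    center = math.sin(pan * math.pi / 2.0)  # ... and center speaker
    opposite = bleed
    # Normalize so the total power stays constant regardless of pan and bleed.
    norm = math.sqrt(outer ** 2 + center ** 2 + opposite ** 2)
    outer, center, opposite = outer / norm, center / norm, opposite / norm
    if side == "left":
        return (outer, center, opposite)
    return (opposite, center, outer)
```

With this shape, a footstep at the far left of the left player's view sits almost entirely in the left speaker, and moves toward the center speaker as it approaches the split line.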

Did you handle the split screen any differently when it was not split down the middle? For example, when one player’s side becomes larger, or when the split becomes horizontal, or even when the game becomes full screen?

JOAKIM: Where both players share equal screen space in the vertical split was the most prevalent scenario by far and was where we invested most time in our technical efforts. Another common scenario seen throughout the game is where both players share the same view on a full screen. It was important to us that the established spatial placement framework was not overturned completely in these scenes. Even though the visual perspective is different, the rules of how audio behaves are only slightly changed, to the point where the players hopefully do not even notice. The main method employed in a full-screen section is that we track sound sources relative to the middle point of the screen, from which we then pan them accordingly on the horizontal plane. This ties into our overarching philosophy of managing screen space which greatly improves clarity in these gameplay sections, which by nature are often chaotic and intense.
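The full-screen approach of panning relative to the middle of the screen could look roughly like the sketch below. It assumes the source position has already been projected to a horizontal pixel coordinate. `screen_pan` and `stereo_gains` are hypothetical helper names, and the constant-power pan law is an assumption rather than something stated in the interview.

```python
import math

def screen_pan(source_x, screen_width):
    """Map a horizontal screen position in pixels to a pan value in [-1, 1].

    0.0 is the middle of the screen; off-screen sources are clamped to the edges.
    """
    half = screen_width / 2.0
    pan = (source_x - half) / half
    return min(max(pan, -1.0), 1.0)

def stereo_gains(pan):
    """Constant-power (left, right) gains for a pan value in [-1, 1]."""
    angle = (pan + 1.0) * math.pi / 4.0
    return (math.cos(angle), math.sin(angle))
```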

GÖRAN: One of the technical solutions that brought order to this chaos was our filtering plug-in, a per-channel biquad filter with volume faders and a Q value for the filter. The plug-in gave us clear control over each channel, which we used when the players die and respawn. By sweeping the lowpass filters on one half of the mix, we created a cool effect as well as a clear distinction for each player. Due to gameplay limitations (for instance, having low health for long durations), we could not use a similar low-pass effect on half of the mix for other states, because it started sounding bad if applied for too long. So we only used it for the death/respawn moments, which were more drastic and shorter. Thanks to the existing codebase in Wwise, the features of the plug-in were easy to implement.
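For readers unfamiliar with biquad filters, the following is a small sketch of a per-channel lowpass with a gain per channel. It uses the widely published Audio EQ Cookbook lowpass coefficients and is not the Hazelight plug-in. The class `BiquadLowpass`, the helper `filter_channels`, and the frame layout (one tuple of samples per frame) are assumptions for illustration.

```python
import math

class BiquadLowpass:
    """Second-order lowpass (Audio EQ Cookbook form), one instance per channel."""

    def __init__(self, cutoff_hz, q, sample_rate):
        w0 = 2.0 * math.pi * cutoff_hz / sample_rate
        alpha = math.sin(w0) / (2.0 * q)
        cos_w0 = math.cos(w0)
        a0 = 1.0 + alpha
        # Coefficients normalized by a0.
        self.b0 = (1.0 - cos_w0) / 2.0 / a0
        self.b1 = (1.0 - cos_w0) / a0
        self.b2 = self.b0
        self.a1 = -2.0 * cos_w0 / a0
        self.a2 = (1.0 - alpha) / a0
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def process(self, x):
        """Filter one sample (direct form I)."""
        y = (self.b0 * x + self.b1 * self.x1 + self.b2 * self.x2
             - self.a1 * self.y1 - self.a2 * self.y2)
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y

def filter_channels(frames, filters, gains):
    """Apply an optional filter and a gain to each channel of each frame.

    filters: one BiquadLowpass (or None to bypass) per channel.
    gains:   one linear gain per channel (the "volume fader").
    """
    out = []
    for frame in frames:
        out.append(tuple(
            (f.process(s) if f is not None else s) * g
            for s, f, g in zip(frame, filters, gains)
        ))
    return out
```

Filtering only the channels that belong to one player's half, and bypassing the rest, gives the kind of half-mix effect described for death and respawn.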

What about the sounds that are fully spatialized (3D)? How did you manage to avoid confusion in terms of locating those spatialized sounds in the world, while both players can be standing at a different position in relation to the sound source?

PHILIP: This was a bit trickier to solve than 2D sounds. We realized this issue early on in the process of making sounds for the game, but it was not until quite late that we fixed it. One of the simplest examples of an issue that arose was that one player could stand very close to a sound source but with their camera turned away from the sound, while the other player was further away from the sound source but looking right at it. The summed mix in this case made the sound appear in both the front and the rear speakers, creating a perceived sound that was very wide and big (more than it should be). For me, this breaks the illusion. It was something that I felt we could not ship the game with. One of our most solid rules, when it came to panning and mixing, was to favour the player who has the source that made the sound in their field of view, and this issue broke that rule, so we had to do something about it.

JOAKIM: The issue we saw in this was based on our particular listener setup, and there were two major pain points. The first was in how spatial sounds were positioned. Since the player closest to the spatial source would usually be the one mixing the loudest output, their perspective would have the largest influence over speaker placement. Their field of view would often not be truthful to what we as designers wanted to focus on in that exact moment, but we did not have the tools yet to control that outcome.

The second issue was how Wwise inherently combined channels for our listeners. When the output from spatial sounds was actively mixed by both listeners, the result became double the amount of signal, resulting in an increased output volume compared to when the same sound was heard by just one player. This would have very unpredictable implications on the final result since the amount of signal per channel would depend on the combination of both players’ relation to the sound source.

The issue of amplitude for spatialized emitters became clear to us as soon as we started implementing sounds into the game. Our first method to combat it was to implement a very simple RTPC, bound to make-up gain at a high-level Actor-Mixer. For every emitter playing spatialized sound, we would track listener proximities, and when they both were in range, this RTPC would be continuously updated to lower volume based on their distances - all to counteract the added intensity of our doubled channels. This was, of course, a very ugly “fix”. And since one could never really predict the exact amount of signal that would end up being combined by the listeners, the implementation as a whole gave sub-par results in flattening the amplitude.
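The idea behind that first RTPC workaround can be sketched as follows. This is a deliberately naive model that assumes a linear attenuation curve and a fully coherent sum of both listeners' signals. The function names and the curve are hypothetical, and, as noted above, the real summed signal depends on each listener's panning, so a scalar like this can only approximate it.

```python
import math

def linear_attenuation(distance, max_distance):
    """Hypothetical attenuation curve: gain 1.0 at the source, 0.0 at max_distance."""
    return max(0.0, 1.0 - distance / max_distance)

def doubling_compensation_db(dist_a, dist_b, max_distance):
    """Estimate a make-up gain offset (dB) to counter two listeners summing one source.

    Returns 0 dB when only one listener is in range, and about -6 dB when both
    listeners hear the source equally loud.
    """
    gain_a = linear_attenuation(dist_a, max_distance)
    gain_b = linear_attenuation(dist_b, max_distance)
    loudest = max(gain_a, gain_b)
    if loudest == 0.0:
        return 0.0  # nobody in range, nothing to compensate
    return -20.0 * math.log10((gain_a + gain_b) / loudest)
```

A value like this would be sent to the engine every frame as the RTPC driving make-up gain.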

Together, both of these problems meant that we could in no way control the spatial mix of the game. So we decided to crack open the lower levels of Wwise to write our own rules of how to handle spatialized sound sources in It Takes Two.

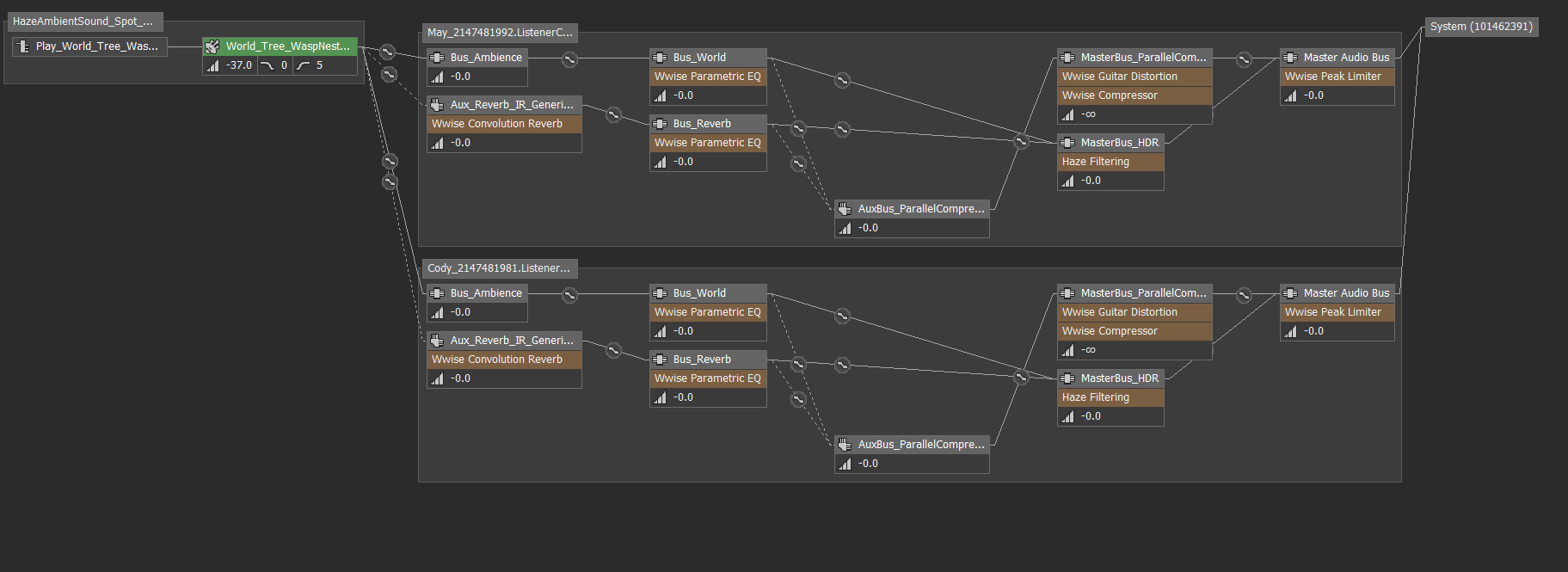

Images 2 & 3: One sound source, two listeners routing

GÖRAN: Before writing our own rules in Wwise’s system, we needed to know the rules of Wwise first. We went through it step-by-step to see what already existed, what needed to be changed, and where to do it.

As mentioned before, we started this late and thus the approach was: how can we reach the result we need while keeping the project on time? To summarize the needs of the design, our goal was to eliminate the doubling of signal and to clamp the signal based on directional focus, which would result in our very own Spatial Panning system.

With that in mind, we needed all the listeners within the attenuation range and their respective orientations for each emitter. We chose to smooth out the signal changes mostly based on distance. But we also indirectly let the gameplay control the speed of the directional signal smoothing, since in most situations the rotation changes were rather controlled and smooth. To limit the performance hit and, of course, to have the data in the format we needed, we had to leave a rather long crumb trail in the Wwise system to reach the desired result and performance.
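The internals of Spatial Panning are not published, so the following is only a conceptual sketch of the two stated goals: avoid doubling the signal, and favour the listener who has the source in view. It assumes 2D positions, a focus weight derived from the angle between a listener's forward vector and the source, a weighted blend (rather than a sum) of each listener's per-channel gains, and a simple time-based lerp. Every name and formula here is an assumption.

```python
import math

def focus_weight(listener_pos, listener_forward, source_pos):
    """Return 1.0 when the source is dead ahead, 0.0 when it is directly behind."""
    dx = source_pos[0] - listener_pos[0]
    dy = source_pos[1] - listener_pos[1]
    dist = math.hypot(dx, dy)
    fwd_len = math.hypot(listener_forward[0], listener_forward[1])
    if dist == 0.0 or fwd_len == 0.0:
        return 1.0
    cos_angle = (dx * listener_forward[0] + dy * listener_forward[1]) / (dist * fwd_len)
    return (cos_angle + 1.0) / 2.0

def blend_listener_gains(gains_a, gains_b, weight_a, weight_b):
    """Blend two listeners' channel gains with normalized weights instead of summing.

    Because the weights sum to 1, a channel never exceeds the louder listener's gain.
    """
    total = weight_a + weight_b
    if total == 0.0:
        weight_a = weight_b = 0.5
    else:
        weight_a, weight_b = weight_a / total, weight_b / total
    return [weight_a * a + weight_b * b for a, b in zip(gains_a, gains_b)]

def smooth_towards(current, target, dt, time_constant):
    """Lerp the current channel gains toward the target to avoid audible jumps."""
    t = min(1.0, dt / time_constant)
    return [c + (g - c) * t for c, g in zip(current, target)]
```

In the scenario described earlier, where one player stands close with the camera turned away and the other looks straight at the source, the blend follows the second player's panning and the sound stays in the front speakers.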

Did you find that this Spatial Panning solution, while being very helpful, created other problems? If yes, what were those, and what was your approach to avoid them?

PHILIP: I am 100% sure that our fix for this issue can be heard if a player is really trying, but it solved more problems than it created. Since our solution involves moving sounds that, for example, “should” come from the rear speakers to the front speakers, we needed to create a lerp in order for that transition to occur smoothly. This means that if a player makes very fast and extreme movements with their character, there is a risk that our lerp may feel slow even though we have carefully tweaked it to account for all possible scenarios. That said, players will never be able to listen to one single sound source on its own, so in the full mix of the game it would be very hard to single out any issue.

JOAKIM: The Spatial Panning feature was made from the ground up to combat the issues as we specifically saw them in It Takes Two. All methods employed to achieve our end result during the course of this project, including the Spatial Panning, were not designed to be a perfect fit for any type of action-oriented split screen game; instead, they were specifically tailored to function within the boundaries of our game, It Takes Two. An example of this is how the gameplay is very linear; the players are always moving forward and the spatial sounds that they encounter are all designed to exist in the world that enables that journey. Even though our solution worked well in the context of a game such as this, one should not expect it to translate very well to a game that has more of an open world or to one where the perspectives between two players are more asymmetrical. While the idea of Spatial Panning will be a place from which we might continue onwards in future endeavours, a new game will surely require rethinking into what one is looking for when merging two halves and compromising perspectives into one truthful whole.

GÖRAN: Since our release window coincided with the launch of new consoles, we had to update Wwise versions rather frequently because of issues and features for those consoles, which in turn meant spending a lot of time just keeping our features such as Spatial Panning alive and working well. This is common when using third-party software and shouldn’t come as a surprise. Still, when modifying existing software, it is good to keep in mind that you should also build tools (or use existing ones) to help that process as much as possible, and clearly mark your changes in the codebase, thus reducing the overhead when migrating between versions.

Mix

The mix of It Takes Two sounds great, consistently clean and clear, despite the split screen. What was your approach when it came to mixing the game? What vision did you have and how did you put it into application practically?

PHILIP: We wanted to create an interesting mix with drastic changes, always focusing on something new and compelling. Our game is quite linear, so it was easier to achieve this than if it were a more open-world type of game. When the player moves from room to room or follows the main path, we make sure to always focus on the next interesting sound and event. Most of this is accomplished through mix decisions, carefully selecting what sounds the players should be able to hear and not hear. This is something that we did consciously at every stage, from audio team spotting sessions and making sound effects to creating the final mix. An interesting fact is that we also had to be two players while mixing to make the game behave the way it was designed to. This made the mixing process a lot more fun, and it was also very helpful to always have another pair of ears in the room and someone to discuss mixing decisions with.

ANNE-SOPHIE: The split screen had a significant impact on some of our mix decisions. For instance, instead of trying to separate the two screens at all times and fight against the split, we chose to work with the split and to use sound narratively, reinforcing which were the most important elements currently occurring in gameplay (in any of the screens), emphasising those through the mix. By focusing on one important element at a time, we tell the players what to pay attention to. That is especially important during epic, combat, or busy sequences. Sometimes that meant being quite drastic in terms of what comes through and what doesn’t, but it ultimately paid off when it comes to mix clarity and consistency.

Did you consider delivering different mixes based on playback set up? For instance, having different headphone mixes for each player, or a different mix depending on the players playing in ‘couch co-op’ mode or separately over network?

PHILIP: At the beginning of the audio production, this was something that I thought about and I had a few ideas. One idea was to lower the character movement sounds and the voice for the character that you are not playing. Another idea was to mute the world sounds that the other player would hear. When imagining the player experience, I realized that none of these ideas would do any good. Since the visual representation of the game is the same no matter what way you are playing the game, it felt logical to treat the sound the same way. It would also have created more work for us having to maintain all these different types of mixes. We strived for the experience to sound good when the scene was shared between both players when within the same soundscape. Especially for It Takes Two, which is a game that I believe should be shared no matter how you are playing.

HDR

Did you use the Wwise HDR for It Takes Two? What did you find was the best strategy to suit your needs and serve the split screen best?

PHILIP: We did use the Wwise HDR. This was an easy choice to make: we wanted to use the HDR to clean up the mix and as a tool to optimize our game through the culling of sounds that are not heard. Coming from EA DICE, where I had worked with HDR on previous titles, I needed a bit of testing to become friends with the Wwise HDR. A few tips and tricks are:

- Normalize your sounds;

- Create a guide for which voice volume values to use on different categories of sounds, or choose what sounds are the most important in your mix (it does not need to be the loudest, can be the most interesting sound);

- Try to let the voice volume value stay static with the exception of distance attenuation. For example, do not apply a random LFO RTPC on the voice volume value: your sound would then duck other sounds (or be ducked by other sounds) differently over time, and that might not be what you want;

- Use the make-up gain for all variable volumes and for general mixing;

- If you work with multiple layers for the same sound effect, be aware that each voice can have a different voice volume value and therefore also duck layers that are meant to play together. A good way is to set the same voice volume value on layers that should play together and mix the panning between these layers with make-up gain;

- Be aware that if the voice volume is set to a value that is below the bottom of your HDR window range, your sound will most likely be culled if you have chosen that setting in the virtual voice behavior. This can be used deliberately but you need to be aware of it.

Another interesting realization that we had is that, when having two listeners, it also means that you have two HDR instances at the same time. This means that if one listener is, for example, standing next to a high voice volume sound and the other listener is outside the attenuation range, the summed HDR ducking will be less than if both listeners were close to the source. This might be a good behavior but it might also not be what you want.

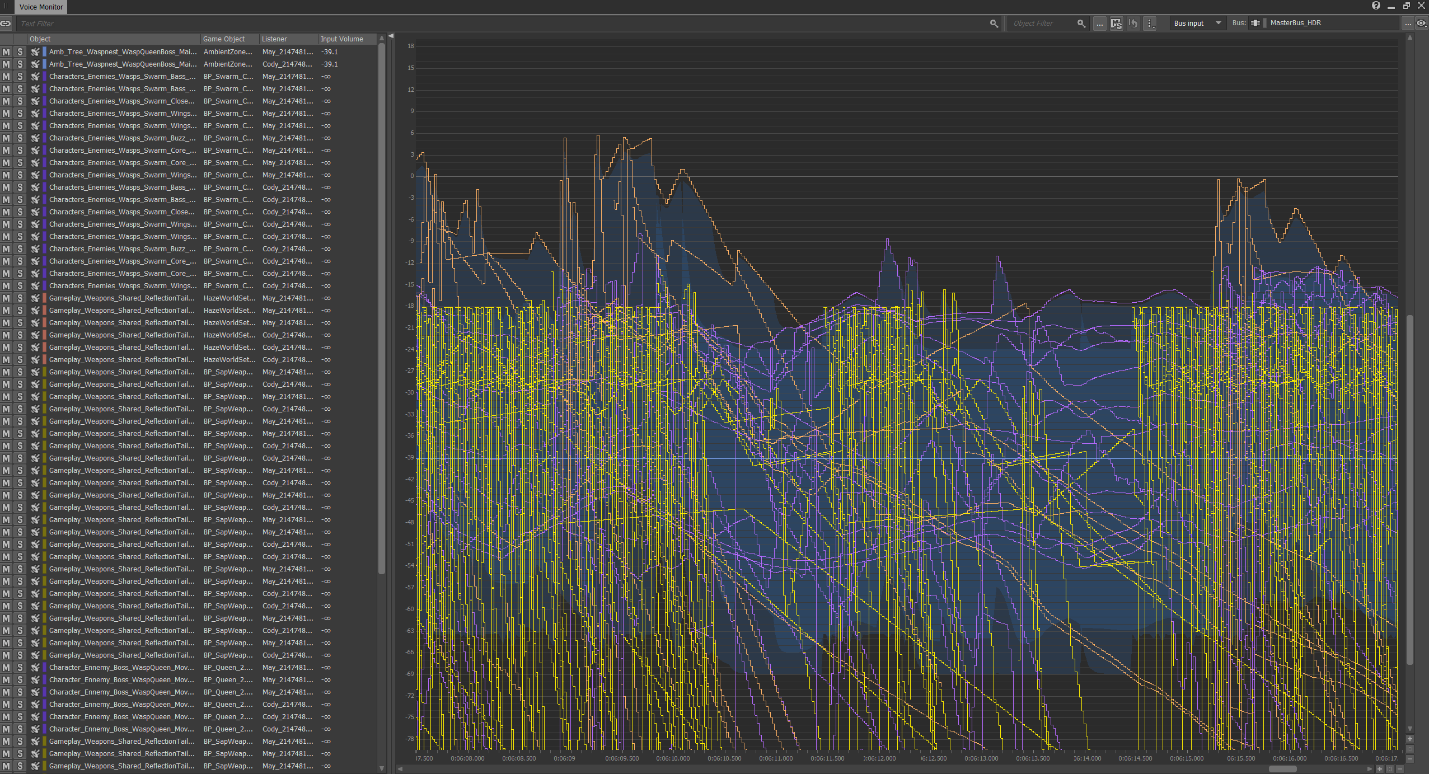

Image 4: HDR visualization using the Voice Monitor

RVB/Worldizing

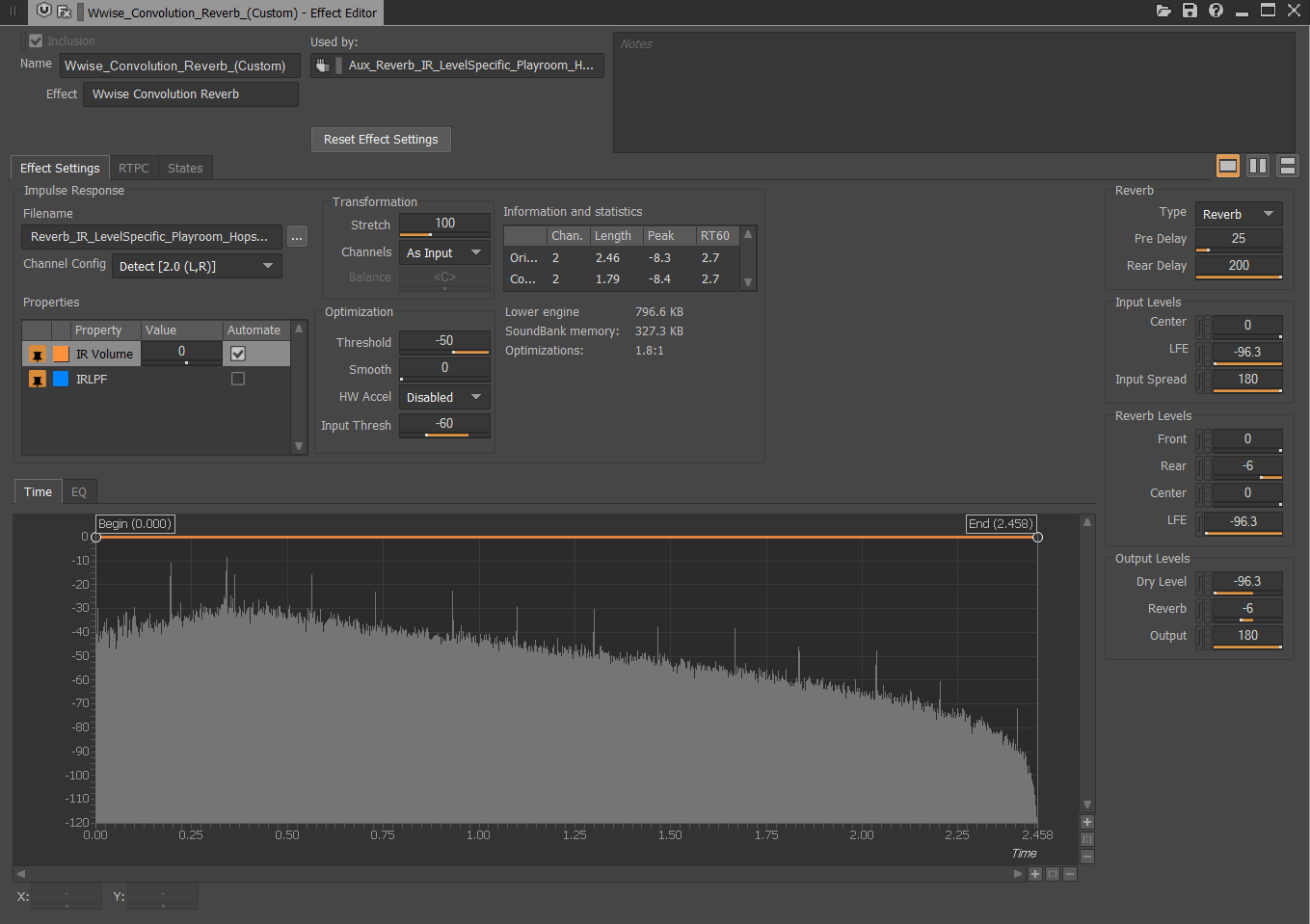

The reverb and the general world of It Takes Two sounds very realistic and immersive. What kind of reverb did you use, and what other solutions did you develop in order to make those environments so convincing?

PHILIP: A key pillar of the audio direction for It Takes Two was to make an immersive experience for the players and to make the world react to sounds, to make the world relatable. We wanted to be able to make creative environments, for example, the vacuum hoses, the glass jars, as well as designed weird reverb effects that were used in the kaleidoscope world level. We did try to work with the Wwise RoomVerb plug-in but it was not enough for us, so we chose to go with only convolution reverbs to be able to be more creative. We did extensive impulse response recordings of different environments with a whip as well as fireworks, and we created very heavily designed impulses for underwater and other imaginary places.

In addition to these convolution reverbs, we also created an ‘Environment Type’ system to be able to play various designed reflection tails. We mostly used these tails for explosions and weapons but also some other sounds that benefitted from having a longer tail to convey the bigness and the loudness of the sound. We actually had two different versions of baked footsteps tails, one for small rooms and one for large rooms.

To create even more realistic worlds we built a runtime delay system that used raycasts to measure the distance between the characters to the reflective surfaces as well as surface types. This added a very pleasant, variable reflection to the other more static reverbs and baked reflections, and made the world react to very subtle changes rapidly.

Image 5: Convolution Reverb’s level-specific impulse response settings

JOAKIM: When designing systems for simulating environmental effects on playing sounds, a good question to ask is where on the spectrum a system should sit: predetermined and deterministic, or happening at runtime with more dynamic and momentary results. Try to find a sweet spot between the two that fits the resources and ideals of both the technical and the creative side. To exemplify the fundamental base of environmental systems in It Takes Two, we look towards the implementation of reverberation through convolution. Even though we are dynamically routing signals into these buses at runtime, the characteristics of the effect unit itself are carefully pre-designed to render a specific type of surrounding. Other than monitoring player locations as they are moving about the world and managing routing into reverb buses based on this, the sound of the reverb itself is very deterministic.

On the other hand, once we had a thick and reliable bed of reverberation, we could venture off into representing the physical phenomenon of early-reflections by using variable-delay lines that were programmed by real-time calculations driven by all manner of data collected from the game, such as distance to walls, the materials around the player and the relation to objects around them.

Any kind of system, whether predetermined or using a result that is constructed as the game runs, will inherit its natural advantages and disadvantages, but combined they can create results truly capable of passing a digital world as real.
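As a rough illustration of how raycast data might drive such early-reflection delay lines, the sketch below converts a distance to a wall into a delay time and a gain. It assumes the reflection path is approximately a round trip from the character to the wall and back, a speed of sound of 343 m/s, and a made-up table of per-material reflectivity values. None of these numbers come from the game.

```python
SPEED_OF_SOUND_M_PER_S = 343.0

# Hypothetical reflection gains per surface type (not values from the game).
REFLECTIVITY = {"concrete": 0.9, "wood": 0.6, "carpet": 0.2}

def reflection_delay_ms(distance_to_wall_m):
    """Delay of a first reflection, assuming a round trip to the wall and back."""
    return 2.0 * distance_to_wall_m / SPEED_OF_SOUND_M_PER_S * 1000.0

def reflection_tap(distance_to_wall_m, material):
    """Return (delay_ms, gain) for one reflection found by a raycast."""
    delay_ms = reflection_delay_ms(distance_to_wall_m)
    # Simple distance roll-off over the round trip, scaled by the surface type.
    rolloff = 1.0 / (1.0 + 2.0 * distance_to_wall_m)
    gain = REFLECTIVITY.get(material, 0.5) * rolloff
    return delay_ms, gain
```

A wall 3.43 m away gives a delay of about 20 ms, and the value changes continuously as the character moves, which is why the delay line needs to support a variable delay time.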

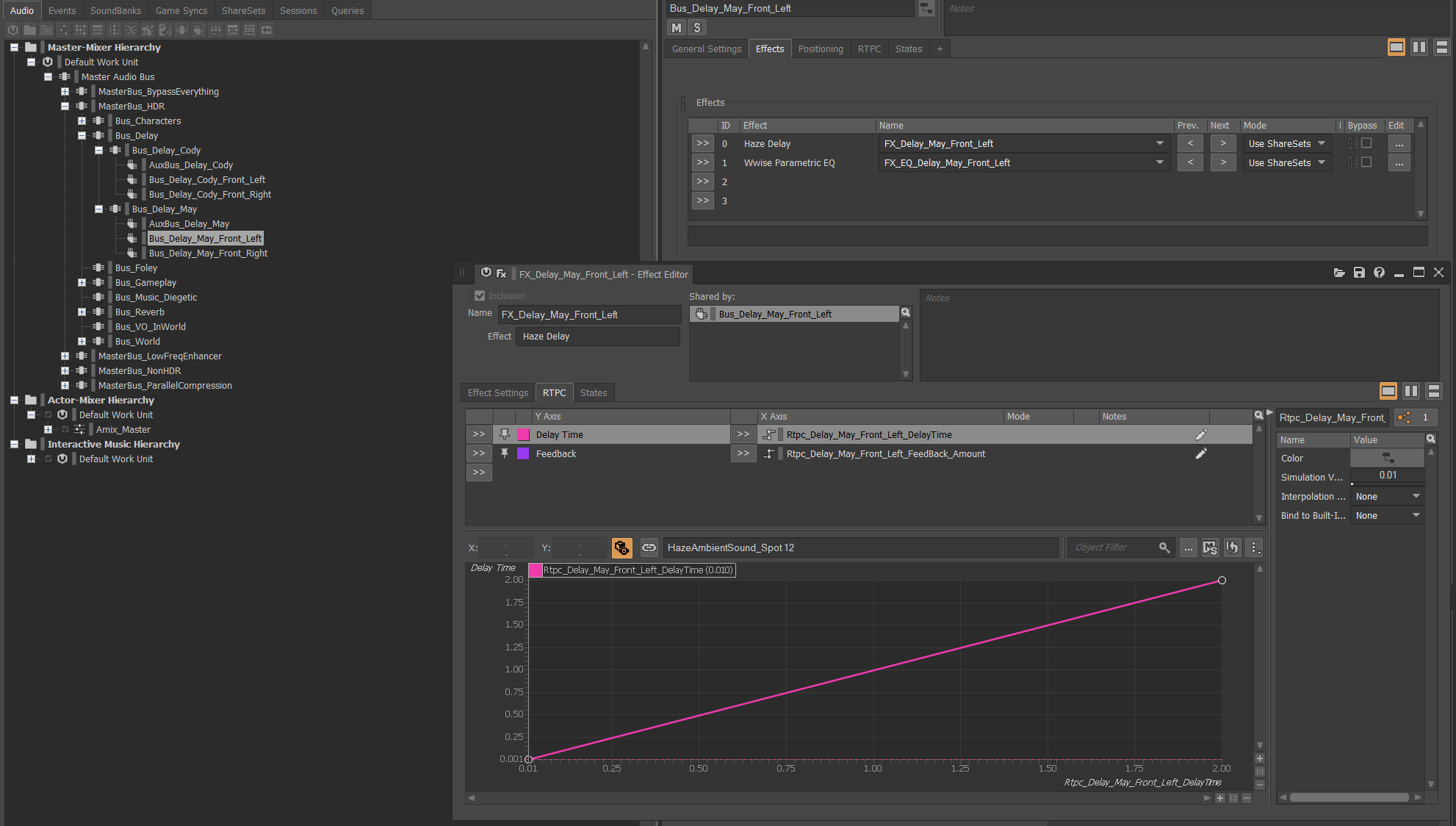

GÖRAN: One feature unfortunately missing from the delay plug-ins in Wwise is variable delay time, in other words support for changing the delay time at runtime with RTPCs, which was a must for our early-reflections system.

Initially, when prototyping, we used Pure Data. We also made a Wwise plug-in from the Pure Data patches which used the now discontinued Heavy compiler, so we could try everything in the engine. A thing to note here is that not all platforms are supported, and perhaps today wouldn’t work at all out of the box.

First, we tried using a tape delay but it produced a pitch change when updating the delay time. We also tried using a delay line with variable delay time, but that generated artifacts such as clicks when updating the delay time. But the result was good enough to showcase the potential result and to continue with when making our own Wwise plug-in.

What we ended up with was a fractional delay line with bicubic interpolation to smooth out any noise that could occur when changing the delay time.

Image 6: Hazelight’s own Wwise plug-in, ‘Haze Delay’, a fractional delay line to smooth out changes in delay time
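A fractional delay line reads its buffer at a non-integer position and interpolates between neighbouring samples. The sketch below is not the Haze Delay source; it assumes a circular buffer and a 4-point cubic (Catmull-Rom) interpolator as one plausible reading of the interpolation mentioned above. The class name and clamping limits are assumptions.

```python
import math

class FractionalDelayLine:
    """Circular buffer read at a fractional position with 4-point cubic interpolation."""

    def __init__(self, max_delay_samples):
        self.max_delay = float(max_delay_samples)
        self.size = int(max_delay_samples) + 4  # headroom for the 4-tap window
        self.buffer = [0.0] * self.size
        self.write_index = 0

    def process(self, x, delay_samples):
        """Write one input sample and return the signal delayed by delay_samples."""
        # Clamp so that all interpolation taps refer to samples already written.
        delay = min(max(delay_samples, 1.0), self.max_delay)
        self.buffer[self.write_index] = x
        read_pos = self.write_index - delay
        base = math.floor(read_pos)
        t = read_pos - base  # fractional part in [0, 1)
        y0, y1, y2, y3 = (self.buffer[(base + k) % self.size] for k in (-1, 0, 1, 2))
        # Catmull-Rom spline through y1 (t = 0) and y2 (t = 1).
        out = 0.5 * (2.0 * y1
                     + (y2 - y0) * t
                     + (2.0 * y0 - 5.0 * y1 + 4.0 * y2 - y3) * t * t
                     + (3.0 * (y1 - y2) + y3 - y0) * t * t * t)
        self.write_index = (self.write_index + 1) % self.size
        return out
```

In practice the requested delay time would also be smoothed from sample to sample, so that a jump in the target value turns into a short glide rather than a discontinuity.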

The split screen means that you may end up processing quite a few instances of convolution reverb at the same time, same for the delay. Did those systems cause any performance issues? If yes, how did you solve it?

PHILIP: There is a significant possibility that we have two characters located in different ambient zones and even, in the worst case, in the fade zones between different ambient zones, which would mean that the character sounds alone would be mixed to four convolution reverbs at the same time. We also let the sounds that are located in other ambient zones mix to the reverb of that zone, so we can have a lot of reverb instances at the same time. With the runtime delay system it is a little simpler. The delay is only centered around the character, so there can only be four delay instances at the same time, two per character.

JOAKIM: In It Takes Two, reverb buses occupy compartmentalized spaces of the world (the ‘Ambient Zones’) and only wake up for work once a player enters the area that they represent. Likewise, when there are no longer any players present in that particular area, the associated reverb sends are culled and the bus fades back into an inactive state. During gameplay, players will continuously travel alongside reverb instances that are crossfading between one another, smoothly delivering the player into the next environment and then going to sleep when it no longer receives any input signal from present players. This system was as tight-knit as we could make it while supporting two players in potentially vastly different locations, and even so it’s not rare to see seven or eight unique convolution reverb buses active simultaneously based on the locations of the players and other sounds from within the world. During development Audiokinetic also released their own performance optimizations to the convolution reverb effect - much appreciated!
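The wake-up and sleep behaviour of zone-based reverb buses can be illustrated with a simplified model. The sketch assumes one-dimensional zones, a linear fade region outside each zone edge, and a bus that counts as active whenever any player contributes a non-zero send. The zone names, the `fade` width, and both function names are hypothetical.

```python
def zone_send_level(position, zone_start, zone_end, fade):
    """Send level for one player: 1.0 inside the zone, fading linearly to 0.0 outside."""
    if zone_start <= position <= zone_end:
        return 1.0
    if position < zone_start:
        dist = zone_start - position
    else:
        dist = position - zone_end
    return max(0.0, 1.0 - dist / fade)

def active_reverb_buses(player_positions, zones, fade=2.0):
    """Return {zone_name: send_level} for buses that receive signal from any player.

    Zones missing from the result have no input and can stay asleep.
    """
    active = {}
    for name, (start, end) in zones.items():
        level = max(zone_send_level(p, start, end, fade) for p in player_positions)
        if level > 0.0:
            active[name] = level
    return active
```

With two players far apart, one of them standing in a fade region, three buses are awake at once in this model, which hints at how the count can climb in a real level.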

GÖRAN: One thing we did throughout to reduce the amount of processing, and which affected the reverb greatly, was to limit the number of active objects being processed by culling those deemed inactive, for instance those not playing any sound or those out of range.

We also made sure to only update data when we thought it was necessary. For example, with the delay system we used asynchronous raycasts and only when enough data had changed did we update our delay plug-in. So, when possible, we reduced the changes to our variable delay lines, but at the cost of all changes not being on the current frame.
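The "only update when enough has changed" idea can be sketched as a small gate in front of the plug-in parameter. The class name, the millisecond threshold, and the update counter are assumptions for illustration; the asynchronous raycasts themselves are outside the scope of this sketch.

```python
class ThresholdedDelayUpdater:
    """Forward a new delay time only when it differs enough from the applied one."""

    def __init__(self, threshold_ms):
        self.threshold_ms = threshold_ms
        self.applied_ms = None  # last value actually sent to the delay plug-in
        self.update_count = 0

    def submit(self, measured_ms):
        """Offer a freshly measured delay time; return True if it was applied."""
        if self.applied_ms is None or abs(measured_ms - self.applied_ms) >= self.threshold_ms:
            self.applied_ms = measured_ms
            self.update_count += 1
            return True
        return False
```

Small jitters from the raycasts are then ignored, at the cost of the applied value lagging slightly behind the latest measurement.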

Performance

Aside from the reverb, a split screen game means you have pretty much two instances of the same game running in parallel at all times. Did that cause any major problems with performance? What were the main sources of concern and how did you solve them?

PHILIP: This was of course always in the back of our minds during the entire audio production, which I think it should be. It is not very fun making a fantastic sounding game, and then towards the end of the production having to optimize away all the beautiful creations that you have spent a lot of time on. We monitored our data pretty much from the beginning to always make sure that we were within sane ranges. We tried to be smart and only use layers in our sound design when layers were needed, and to use well-thought-through mixing decisions as well as HDR to remove (or cull) unnecessary sounds. This was never an obstruction for us creatively but sometimes we had to adjust our design techniques to tick both the boxes of good sounding and optimized at the same time. We also utilized all the Wwise built-in optimization systems such as HDR, playback limiting, virtual voice behavior and playback priority, but in a combination with our own optimization systems.

JOAKIM: With any given game, the basic act of playing numerous sounds simultaneously will almost always be the biggest consumer of the CPU - a split screen game like It Takes Two is certainly no exception. Voice and bus limiting made up the baseline of restrictions, but one should also mention HDR and its fantastic ability to not only make sure that the mix is clean and dynamic, but also to help alleviate the pressure of handling non-important sounds in the moment of gameplay, by allowing them to be culled due to passing under the established volume threshold.

It Takes Two was released for five different platforms that all have different needs, requirements, weaknesses and strengths. Our approach was to continuously identify and go after the worst offenders and make them work within the context of all consoles, as opposed to individual settings for hierarchies in Wwise for different platforms. An example of something that we went back and forth on quite a bit in order to find a sweet spot that worked for all of our devices was categorizing what archetypes of sounds to stream from disk, as we found that it was something that, in our game, gave immediate results to performance. This was the act of fitting all the pieces of the puzzle together: the time the processor spends on your audio frame, the amount of uncompressed PCM data sitting in your RAM, and the number of streams juggled by the hard drive.

If Wwise had just the Profiler and no other features, I would still use it.

GÖRAN: Just to clarify, we don’t have two instances of the game but two perspectives, and for the auditory experience, at least always two listeners. Meaning we only have a single audio source but the signal is mixed to two listeners.

As Joakim mentioned previously, our main concern was the amount of concurrent sounds playing, but also their individual complexity. Some required multiple RTPCs, reverb and positions to be updated each frame, so to clearly separate the different requirements for each object, we had a ton of settings and easy-to-use shortcuts to enable a smooth development process. For instance, Fire-Forget sounds, which just live the duration of the sound and then deactivate until another sound needs to be played. To know when most sound objects could be disabled, we fully utilized the attenuation radius, with some added padding to account for the velocity at which our listeners moved, so the auditory experience could be kept.
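The padded attenuation-radius check might look something like the sketch below. The padding formula (listener speed multiplied by a look-ahead time) and the parameter names are assumptions; the interview only states that padding accounted for listener velocity.

```python
def should_be_active(distance, attenuation_radius, listener_speed, lookahead_s=0.5):
    """Decide whether a sound object should stay enabled for a listener.

    The padding grows with listener speed so that a fast-moving listener does not
    reach the audible range before the object has been re-enabled.
    """
    padding = listener_speed * lookahead_s
    return distance <= attenuation_radius + padding

def any_listener_in_range(distances, attenuation_radius, listener_speeds, lookahead_s=0.5):
    """With two listeners, the object stays enabled if either one is in padded range."""
    return any(
        should_be_active(d, attenuation_radius, s, lookahead_s)
        for d, s in zip(distances, listener_speeds)
    )
```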

Sound Design

It Takes Two is characterized by a huge variety of gameplay, which means enormous amounts of content to create for audio. How did you manage to pull this off? Did you work with any outsourcing companies?

PHILIP: We did work with Red Pipe Studios, who delivered sound and mixing for all our cinematics. On the music side, we worked with Kristofer Eng, who acted as a composer duo together with our in-house composer Gustaf Grefberg. SIDE did almost all of our in-game voice-over recordings and initial editing. On top of that, Red Pipe worked together with Foley Walkers to provide foley for the cinematics, and I did an extensive foley recording session with Ton & Vision (Patrik Strömdahl).

To be honest, we never had enough time during the audio production for It Takes Two, so a running joke has been “first pass only pass”, and it is not far from the truth. We had, in most cases, only one attempt to make a good sound because we were never certain that we would be able to go back and do any polish. I think this is healthy, though. To be a bit extreme, I’d rather have sound on the whole game than really polished sound on the first level only.

Even though we had this approach, we set a very high quality target from the start, and to quote our studio manager, “You set the quality bar very high and bolted it up there and never moved it whatever happened.” Our goal was simply to make the best sounding split screen game ever made, and even though we had a short amount of time to try to do that, we still kept that as our goal all the way.

We did have a little bit of time in the end to go back and add or expand on a few sounds as well as do a little bit of polish, and we worked hard until the game was basically ripped out of our hands and shipped to the consumers to make it sound as awesome as we possibly could.

ANNE-SOPHIE: I must credit Philip for his clever planning for the entire duration of this project, without which it would have been impossible to produce quality content for the whole game. This goes for our sound design production and implementation, technical systems development and iteration, and cutscene audio outsourcing. We basically allocated set periods of time for each level and sublevel, and accounted for some extra time for polishing and mixing. Once those set blocks of time were spent, we simply had to move on to the next. Given that drastic time pressure, our approach was to bring everything to release quality from the very first iteration. This includes the quality of the sound design, but also of the mix: we were all continuously responsible for the mix and for making sure that anything new making it into the game was of consistent quality with the rest. And lastly, we simply had to bring out our most creative selves and use our imagination as much as possible in order to bring all those objects and characters to life! Overall, the variety of the content was a challenge, but also one of the absolute most fun things about this project.

Music

The Music level is the last level of the game and it sounds amazing - How did you solve the singing ability, and is the music driving any of the gameplay through beatmatching?

JOAKIM: In the Music level there are quite a lot of gameplay elements that, in some shape or form, are controlled by the background music. Front-and-center we find May’s Singing Ability, which is used to solve puzzles and progress through the level. Whenever the player activates the ability, May will start singing alongside the currently playing music, staying in sync in both tempo and key. We realized this by having a vocalist perform the melody to the music using a MIDI track as a template. The MIDI data was then parsed as markers and ingrained into the source files, to later be read by the game engine and used to inform our systems.
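Once melody notes exist as time-stamped markers, finding the note that matches the current music position is a sorted lookup. The sketch below assumes markers are available as a sorted list of `(start_seconds, midi_note)` pairs; that format and the function name `note_at` are hypothetical, and how the engine actually reads markers from the source files is not shown.

```python
import bisect

def note_at(markers, playback_time):
    """Return the MIDI note active at playback_time, or None before the first marker.

    markers: list of (start_seconds, midi_note) tuples sorted by start time.
    """
    times = [start for start, _ in markers]
    index = bisect.bisect_right(times, playback_time) - 1
    if index < 0:
        return None
    return markers[index][1]
```

When the singing ability is activated mid-phrase, a lookup like this lets the vocal start on the note that the melody has reached at that moment.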

When it comes to having gameplay synced to events occurring on the audio thread, one must consider the fact that It Takes Two can be played not only locally, where both players play on the same device and share one Wwise instance, but also by connecting online over network. In such a case, two separate instances of the game exist, and with that two separate instances of Wwise. The gameplay state will always be communicated between the two game instances - the two Wwise instances, however, have no knowledge of one another and will simply play and act upon the sounds as they are happening locally. The nature of the audio thread means that it runs at a fixed speed, but since any one frame of the main thread takes an untold amount of milliseconds to compute, sounds might start and stop at different times on both ends. For most gameplay implications this is trivial - both players will see and hear their “truth”, but if we are waiting for events to pass on the audio thread of one side and in turn have that spark an event in the game on both sides then timing becomes a crucial factor.

For the Music level we were faced with this fact, and decided that we had other dragons to slay. Even so, music callbacks were used heavily to freely trigger events that were not timing-intensive, such as background effects and other visual “fluff”. These were then free to happen whenever they happened, while still being visually synced with what was happening musically.

Both sides get to experience their own music affecting the world - and nobody gets upset because a downbeat that happened a fraction of a second earlier inside the other player's game instance triggered an event that killed them.
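The split between timing-critical gameplay and cosmetic reactions can be sketched as a small dispatcher that only ever runs local, visual handlers. This is an illustrative sketch, not the game's code: the class name, the cue names, and the timestamps are hypothetical, and a real integration would be driven by the audio engine's music callbacks.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class CosmeticMusicEvents:
    """Routes local music cues to purely visual handlers.

    Nothing here is sent over the network: each game instance reacts to its
    own music clock, so handlers must never change shared gameplay state.
    """

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Callable[[float], None]]] = defaultdict(list)

    def on(self, cue: str, handler: Callable[[float], None]) -> None:
        """Register a visual-only reaction to a named music cue."""
        self._handlers[cue].append(handler)

    def music_callback(self, cue: str, local_time_s: float) -> int:
        """Run the handlers for a cue reported locally; return how many ran."""
        handlers = self._handlers.get(cue, [])
        for handler in handlers:
            handler(local_time_s)
        return len(handlers)


# Two networked instances hear the same beat at slightly different times.
flashes = {"host": [], "client": []}
host, client = CosmeticMusicEvents(), CosmeticMusicEvents()
host.on("beat", lambda t: flashes["host"].append(t))
client.on("beat", lambda t: flashes["client"].append(t))

host.music_callback("beat", 12.000)
client.music_callback("beat", 12.035)
print(flashes)
```

Each side flashes its lights on its own beat, and because no handler touches replicated state, the small offset between the two instances has no gameplay consequence.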

Wwise

Can you share one way in which Wwise as an audio engine has served you especially well, and one way where it hasn’t, and how you worked around it or fixed it?

PHILIP: It Takes Two was the first game that I made in Wwise. I would say that one of the best things about Wwise is that it is like the Lada, the Soviet-era car, and I mean that as something very positive. It just goes and goes! We of course had our technical issues with Wwise, but we could still always proceed with our work and were never completely blocked. Another very good thing is that, if you exclude me, we had a lot of Wwise expertise in the team with Anne-Sophie and Joakim, so they could teach and guide the rest of the audio team. I also found it pretty easy to get started, so learning Wwise was not a very large mountain to climb.

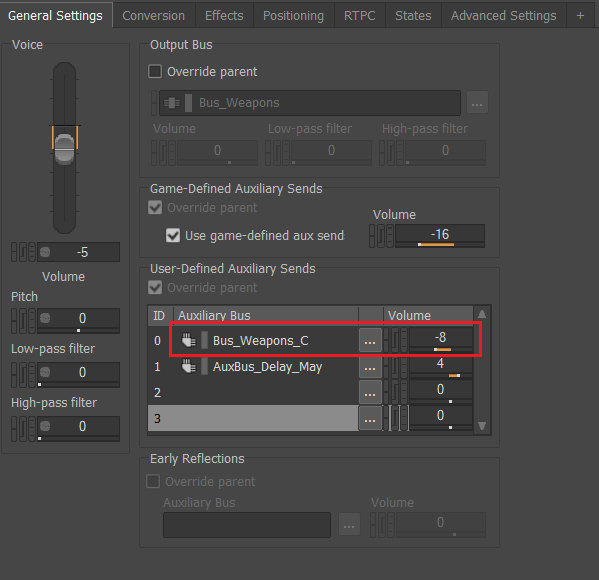

Wwise is used by so many different audio teams around the world, and that is mostly a good thing, but it also means that Wwise does not have the kind of specific behavior that fits maybe only one team or even just one game. For us, that mostly meant dealing with split screens. One very clear example was the problem of routing Balance-Fade (2D) sounds to the center channel. We wanted to use the center channel a lot in our game to be able to split the sound into two halves while sharing the center between the halves to maintain stereo capabilities. Wwise does not support using Balance-Fade together with the center percentage send, so we had to develop a workaround by creating a lot of aux busses to send signals to the center channel instead.

Image 7: Creating aux busses to send to the center channel as a workaround
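As a rough illustration of splitting the mix into two halves that share the center speaker, the sketch below computes left, center, and right gains for a 2D sound owned by one screen half. The function name, the constant-power crossfade, and the -12 dB bleed level are assumptions made for the example, not Hazelight's actual values or their Wwise bus setup.

```python
import math


def split_screen_lcr_gains(side: str, pan: float, bleed_db: float = -12.0):
    """Return (left, center, right) linear gains for a 2D sound.

    side:     "left" or "right", the screen half that owns the sound.
    pan:      0.0 = outer speaker of that half, 1.0 = shared center speaker.
    bleed_db: level of the small bleed into the opposite speaker
              (an assumed value, chosen only for illustration).
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    pan = min(max(pan, 0.0), 1.0)
    angle = pan * math.pi / 2.0        # constant-power crossfade, outer to center
    outer = math.cos(angle)
    center = math.sin(angle)
    bleed = 10.0 ** (bleed_db / 20.0)  # decibels to linear gain
    if side == "left":
        return (outer, center, bleed)
    return (bleed, center, outer)


# A footstep for the left-hand player, halfway between left and center.
print(split_screen_lcr_gains("left", 0.5))
# The same sound for the right-hand player is the mirror image.
print(split_screen_lcr_gains("right", 0.5))
```

Both halves can reach the center speaker, so each player's sounds keep some stereo width while still sitting on their own side of the screen.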

JOAKIM: Wwise is a reliable machine, consistently so. This means that even when it does something you do not expect, or works differently from what you need, it does so consistently! As a framework it obviously comes packed with a lot of the baseline features one would expect from a modern sound engine, but for any studio serious about venturing into experiences rooted in fantastic sound using Wwise, I want to make the case for what I believe Wwise is, and what it isn’t. It is not delivered to you as an end-all solution for all your sound needs.

Such a third-party tool does not exist. It does not come packed with out-of-the-box solutions to all gameplay circumstances or features.

What it is is a fantastic starting point. It comes delivered to you as a box that starts the first time, every time, and from which you might construct your own fantastic machine. If you are only interested in the parts that come pre-assembled in the box then sooner or later you will be disappointed. If you are interested in what you can build from those parts, then there is no telling what you can make.

For us, the biggest step taken to extend Wwise functionality to better suit our needs was the change in how we combined spatialized signals when handled by multiple listeners. The initial output of our two-listener setup in Wwise was not satisfying; it was, in fact, compromising the immersion of the world. A lot of our early tests and efforts came up short, and no one solution available to us in Wwise seemed to tick all of our boxes.

Luckily, the architecture of Wwise is designed to be customizable, provided that one is ready to dive into the deep end of the engine. This is how we started making fundamental changes to low-level systems and tailoring them to our specific needs. The result was the emergence of Spatial Panning, where Wwise met with a touch of Hazelight to create the flavour we needed.

GÖRAN: As this was my first project as an audio programmer and I came in late in production, one of my first priorities was to improve and build automation tools for Wwise. A major help there was WAAPI (the Wwise Authoring API), which makes it possible to leverage Wwise’s authoring features from any programming language. Unfortunately WAAPI isn’t exactly feature-complete, but most if not all common use cases are covered as is, and Audiokinetic is constantly updating and improving it.
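For readers who have not used WAAPI, here is a small sketch of the kind of automation it enables: listing every Event in the open Wwise project. It is not one of Hazelight's tools. It assumes the `waapi-client` Python package and a running Wwise authoring application with WAAPI enabled; the helper names `event_query`, `list_event_paths`, and `main` are made up for the example.

```python
def event_query():
    """Build the arguments and options for `ak.wwise.core.object.get`."""
    args = {"from": {"ofType": ["Event"]}}  # select all objects of type Event
    options = {"return": ["name", "path"]}  # fields to return for each object
    return args, options


def list_event_paths(client):
    """Return the sorted project paths of all Events visible to the client."""
    args, options = event_query()
    result = client.call("ak.wwise.core.object.get", args, options=options)
    # A failed call may yield None, so fall back to an empty result.
    return sorted(obj["path"] for obj in (result or {}).get("return", []))


def main():
    # Imported here so the helpers above work without the package installed.
    from waapi import WaapiClient  # pip install waapi-client

    # Connects to the default local WAAPI endpoint of a running Wwise instance.
    with WaapiClient() as client:
        for path in list_event_paths(client):
            print(path)
```

Calling `main()` with Wwise open prints one project path per Event. The same pattern, a query followed by a loop, is a common starting point for batch renaming, validation, or import scripts.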

When the production started, there was no concept known as Event-Based Packaging in Unreal, so we started building our own version of it early on. Even though we have a custom integration of event-based bank generation, we still use the generation supplied by Wwise to produce the .bnk and .wem files. This has served us well, being both deterministic and performant, which was one of our highest priorities given the time otherwise wasted when working iteratively.

If you could recommend one or a few things to another studio using Wwise for a split screen game, what would it be?

PHILIP: Make up rules for how you will treat panning and mixing and stick to them. You will have to compromise when making audio for a split screen game. It's just about prioritizing the right sounds and making rules that will make most sounds sound good in the end. It can be hard to make these choices but you will have to make them if you want the result to be a clear and pleasurable experience for the players.

JOAKIM: Make an effort, as early as possible, to look at the visual frame of your game and make a decision on what elements within are important to you as a sound designer. Try to identify anchor points and use these as sources for spatial consistency when positioning sounds within that frame. The earlier one is able to establish rulesets and hierarchies, both within Wwise and at the source of asset creation, the more manageable the ever-increasing list of variables that is split screen audio will be later on. Finally - take risks where you can! Audio for split screen is a mostly uncharted territory of our craft, and the possibilities to tell new stories through sound in ways that no other type of game can are vast.

GÖRAN: As an audio programmer, I don’t know what we would have done without the Wwise source code, so I would say get to know the codebase, and don’t be afraid to, as we say here at Hazelight, "f**k s**t up".

ANNE-SOPHIE: I second Philip about the panning and mixing rules and sticking to them. Ultimately the way the sounds are spatialized is what will define the sound of a split screen game, and setting this vision and framing it into rules as early and as consistently as possible will save you a lot of headaches and messiness. In the case of It Takes Two, one mixing rule that worked out well for us was to not aim for fully spatialized sound sources, but rather use the screen space as a frame for most of the soundscape. It improved mix clarity and minimized the confusion caused by the split screen about where sounds play from in relation to the players and their camera angles. By ‘using the screen space as a frame’, I mean that we deliberately left many sounds non-spatialized (2D), to be played in the Left-Center-Right speakers as you would do in linear media like a movie, to match the visuals with as much precision and detail as possible. We did this only with the sounds for which it made sense, of course, such as sounds coming from the characters themselves (foley, vehicles driven by the characters, player abilities), or sounds coming from sources that remained within the visual frame at all times (like short, one-shot sounds). So overall, for a linear split screen game, I’d certainly recommend at least exploring more deterministic sound design and mixing approaches rather than fully spatialized ones.

In the end, what has shaped the success of the Hazelight Audio Team in surmounting the challenges it faced is the continuous collaboration between the creative and the technical designers within the team. Sound designers, composers, a technical sound designer, and an audio programmer all contributed their specific strengths and skillsets, and relied on each other’s knowledge to create an uninterrupted collaborative dynamic in which they were able to push the quality bar to the highest possible level. Constant productivity driven by a clear and uncompromised vision, the use of reliable and customizable tools, and a desire to create the best-sounding split screen game ever made are the main factors behind the success of It Takes Two’s audio experience.
