This series of blog articles is related to a presentation delivered at GameSoundCon in October 2020. The goal of the presentation was to provide perspective and tools for creators to refine their next project by using object-based audio rendering techniques. These techniques reproduce sound spatialization as close to how we experience sound in nature as possible.

This content has been separated into three parts:

- The first part exposes audio professionals to the advantages of authoring their upcoming projects using object-based audio, as opposed to channel-based audio, by showing how this approach can yield better outcomes for a plurality of systems and playback endpoints.

- The second part, covered by this article, will explain how Wwise version 2021.1 has evolved to provide a comprehensive authoring environment that fully leverages audio objects while enhancing the workflow for all mixing considerations.

- Finally, the third part will share a series of methods, techniques, and tricks that have been employed by sound designers and composers using audio objects for recent projects.

The future: always in the distance, never arriving, and yet we always seem to be catching up with moments that have already passed. Whether it’s staying abreast of current events, getting current with a favorite series, or jumping into the latest version of Wwise, to quote Ferris Bueller: “Life moves pretty fast. If you don't stop and look around once in a while, you could miss it.” This article is a chance to take a look at the high-level considerations of authoring with Audio Objects in the latest version of Wwise. It provides an overview of the retooled audio pipeline and imagines the future of interactive audio through the lens of a virtual “soundwalk”. While nothing replaces hands-on experience with the process described here, this article should give you a framework for directing your future creative audio aspirations toward authoring for the greatest spatial precision across all outputs. At the intersection of education, technology, and creativity sits the author who can align these aspects towards the realization of immersion and full embrace of the moments we experience through our medium.

Wwise 2021.1 Audio Pipeline

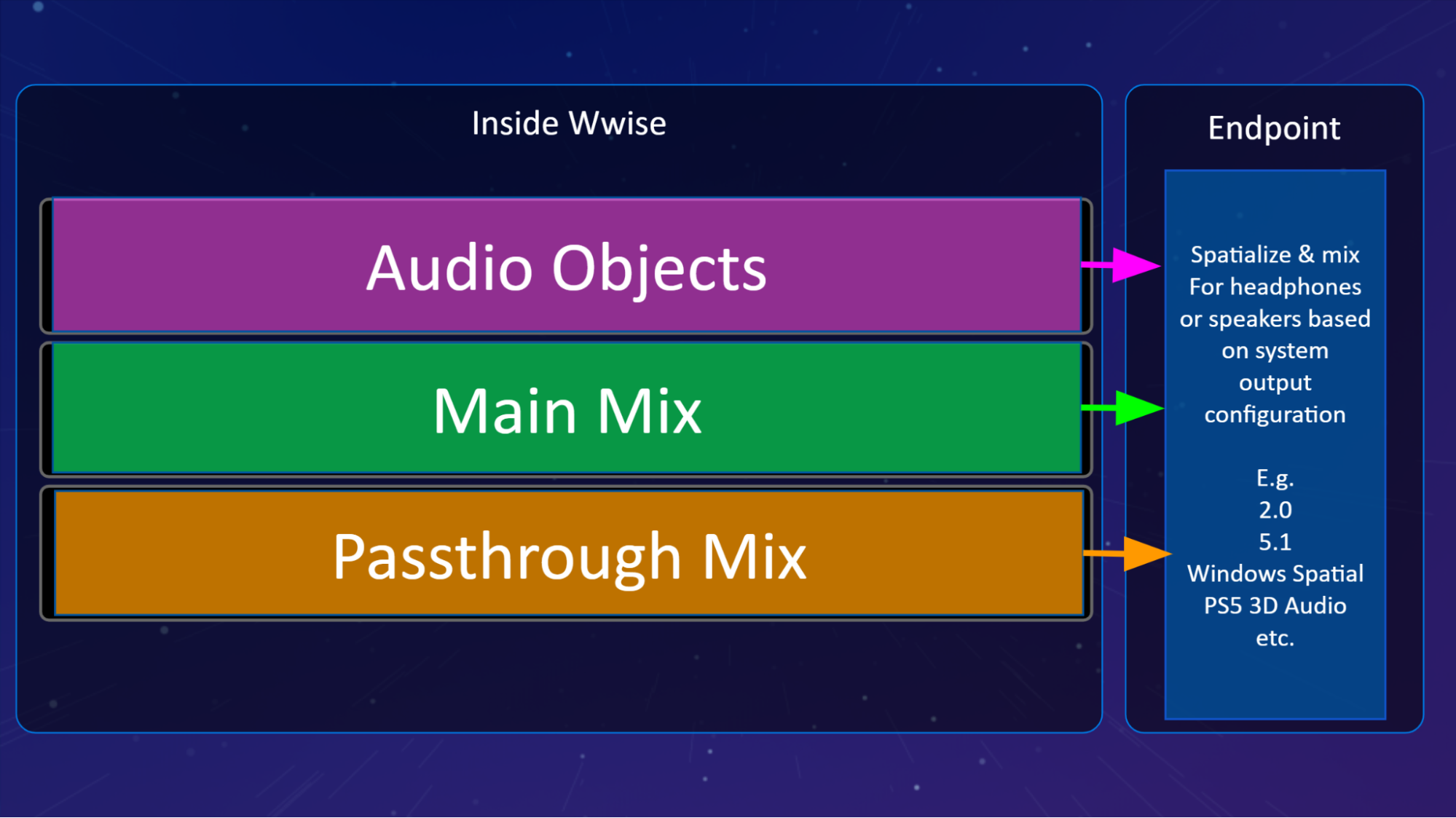

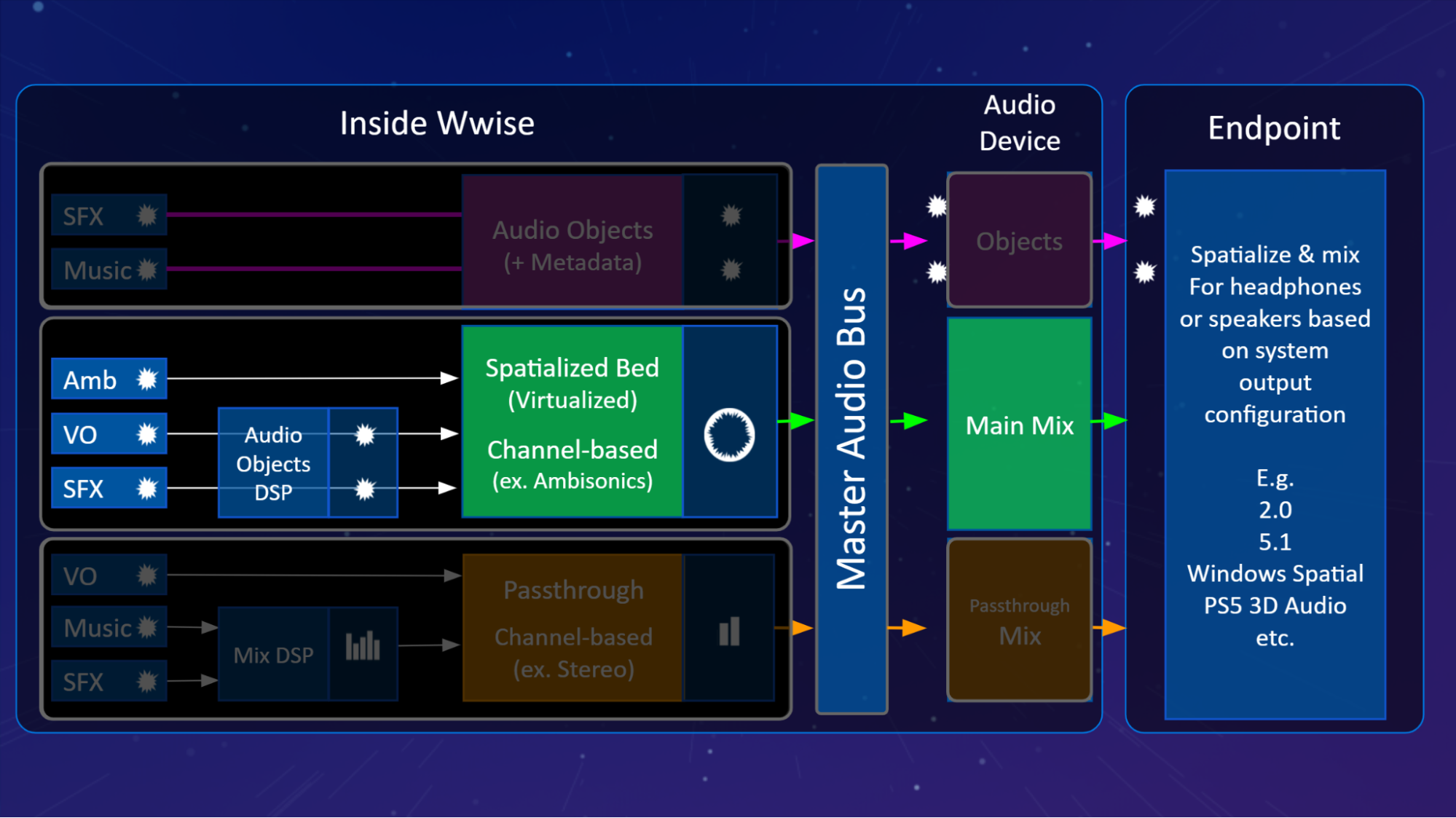

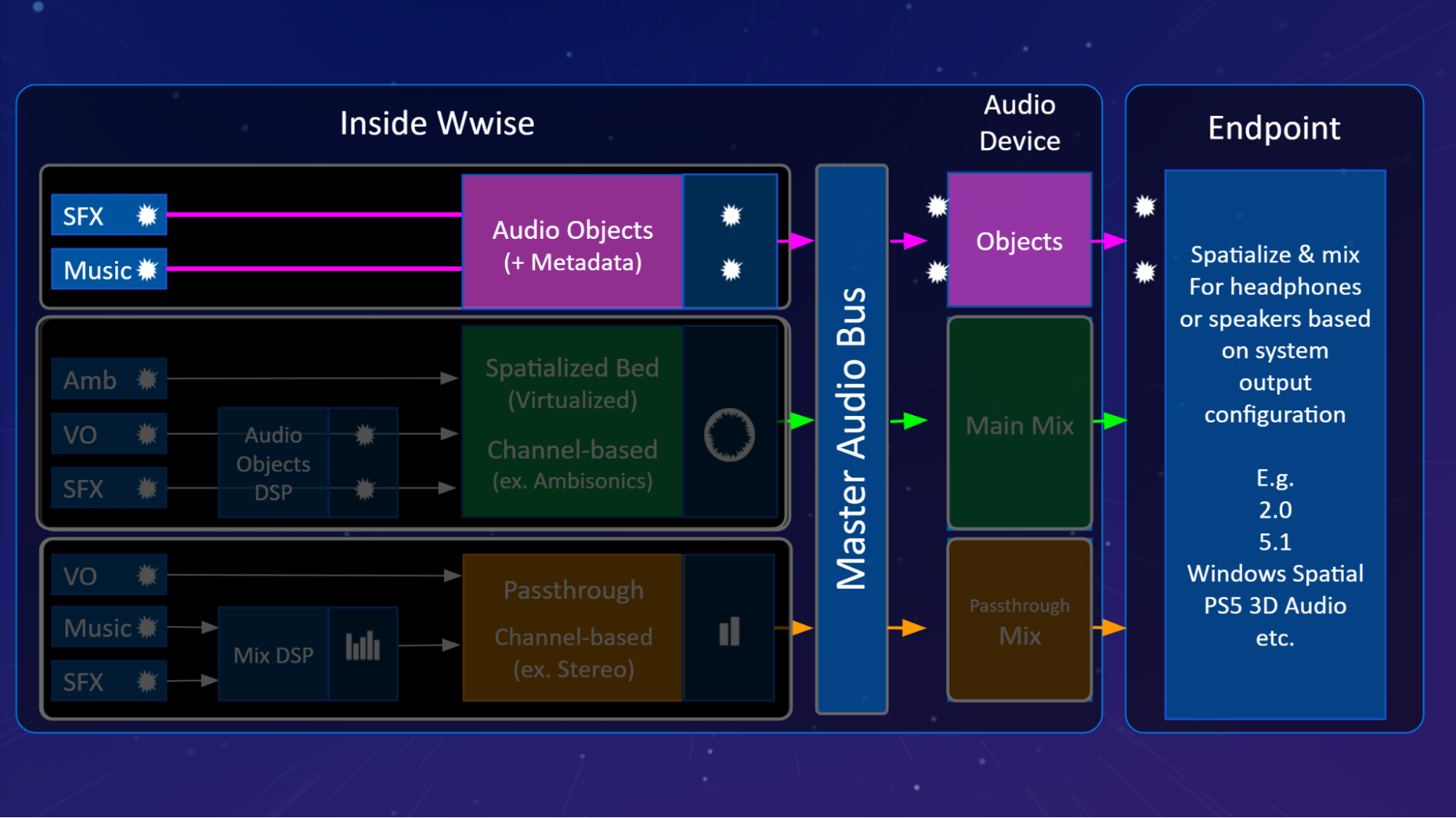

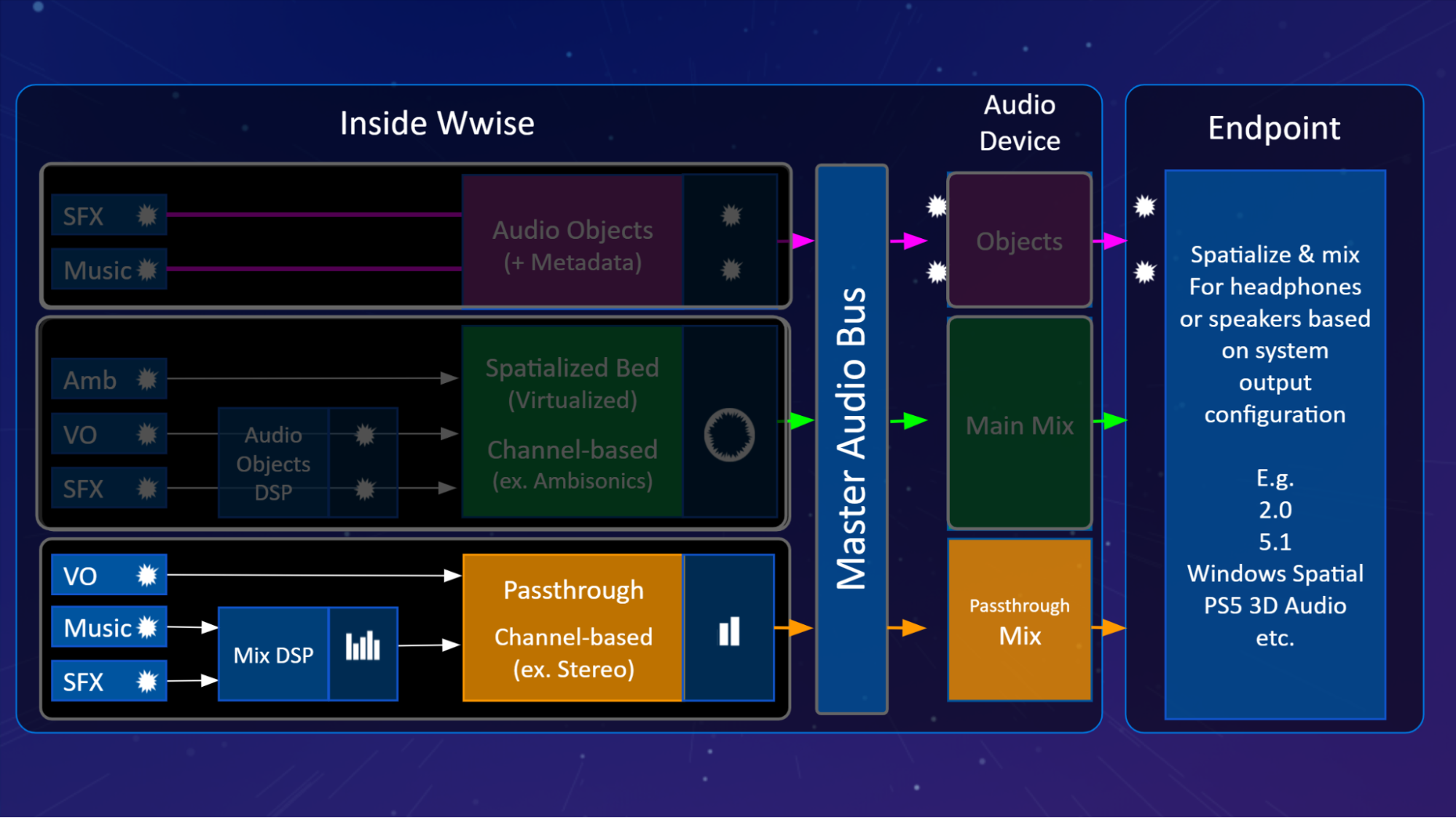

The Wwise 2021.1 Audio Pipeline allows for the authoring and flow of three paths of sound routing (Audio Objects, Main Mix, and Passthrough Mix) and provides visibility to help understand routing decisions that can affect the delivery of sound throughout Wwise authoring and when connected to the Wwise runtime. These routing decisions flow through Wwise to the Audio Device where a combination of channel configurations (Main Mix and Passthrough Mix) and Audio Objects (when available) are prepared for delivery according to the endpoint-defined output configuration settings. The Wwise Help Documentation includes a thorough and succinct section called “Understanding Object-based Audio” that provides additional context for what follows.

Cross-Platform System Audio Device

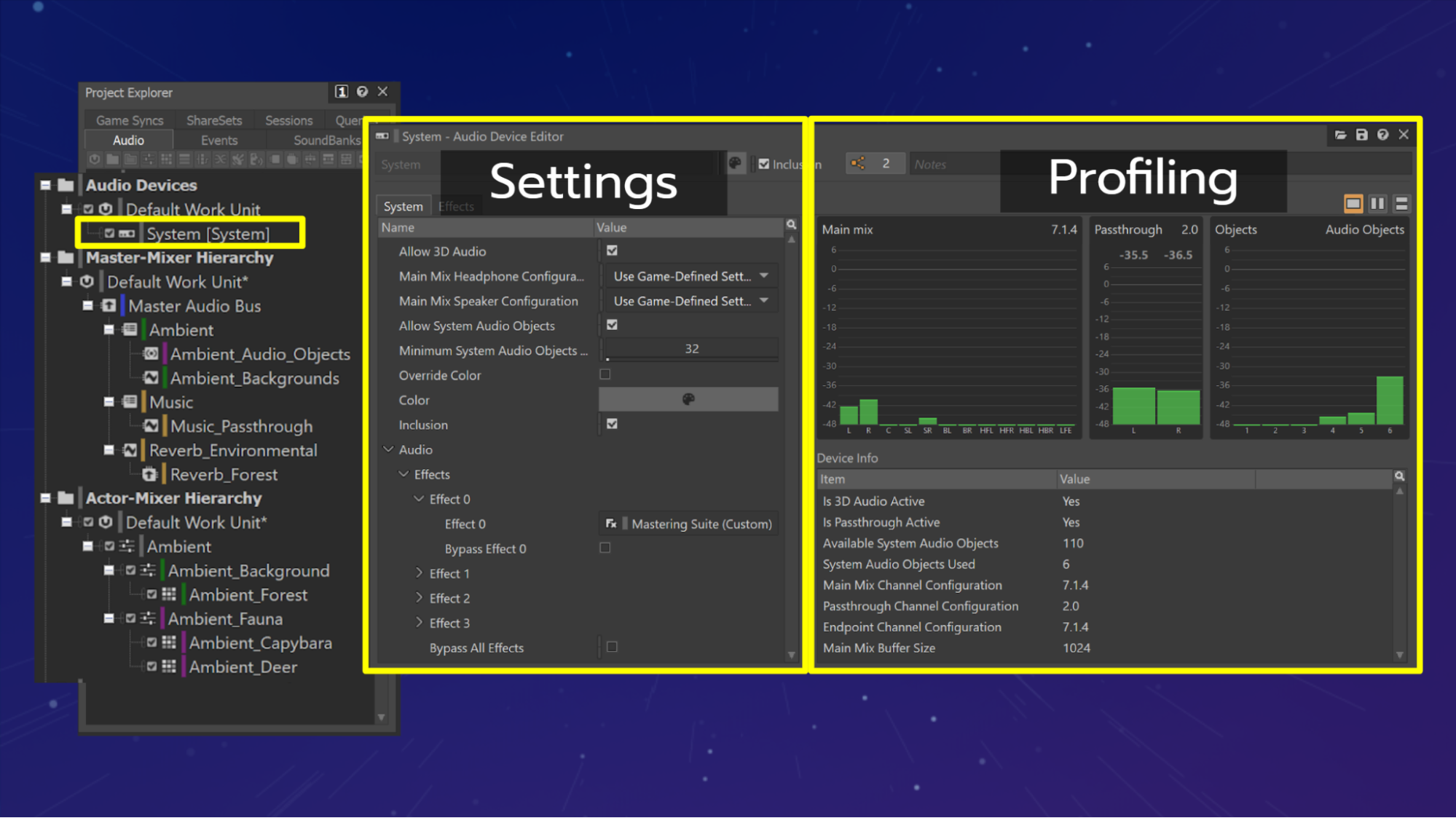

The Audio Devices work unit has been moved to the Audio tab of the Project Explorer and the System Audio Device now includes settings and profiling information.

The first thing you may notice when opening Wwise 2021.1 is the new location of the Audio Device ShareSets, which have been moved to the top of the hierarchies in the Audio tab of the Project Explorer. This move helps to illustrate the flow of sound from the Actor-Mixer and Interactive Music hierarchies, through the Master-Mixer, and finally to the Audio Device where the final output of Wwise is prepared for delivery to an endpoint.

The new cross-platform System Audio Device also exposes properties in the Audio Device Editor that can be authored in anticipation of the different output configurations available at a given endpoint. Additionally, when profiling, the Device Info pane displays information received from the endpoint, allowing you to see its initialized state.

Sidebar: Endpoint

We use the term endpoint to refer to the system device (e.g., the section in charge of the audio at the operating system level of the platform) that is responsible for the final processing and mixing of the audio to be sent out to headphones or speakers. At initialization of the game, Wwise is informed of the endpoint’s audio configuration (e.g., 2.0, 5.1, Windows Sonic for Headphones, or PS5 3D Audio) so it renders its audio in the format expected by the endpoint.

Audio Device Routing

Explaining the three categories of routing: Main Mix, Audio Objects, and Passthrough Mix.

Changes to the System Audio Device also include the routing of up to three destination types to an endpoint that is able to receive a combination of these different formats:

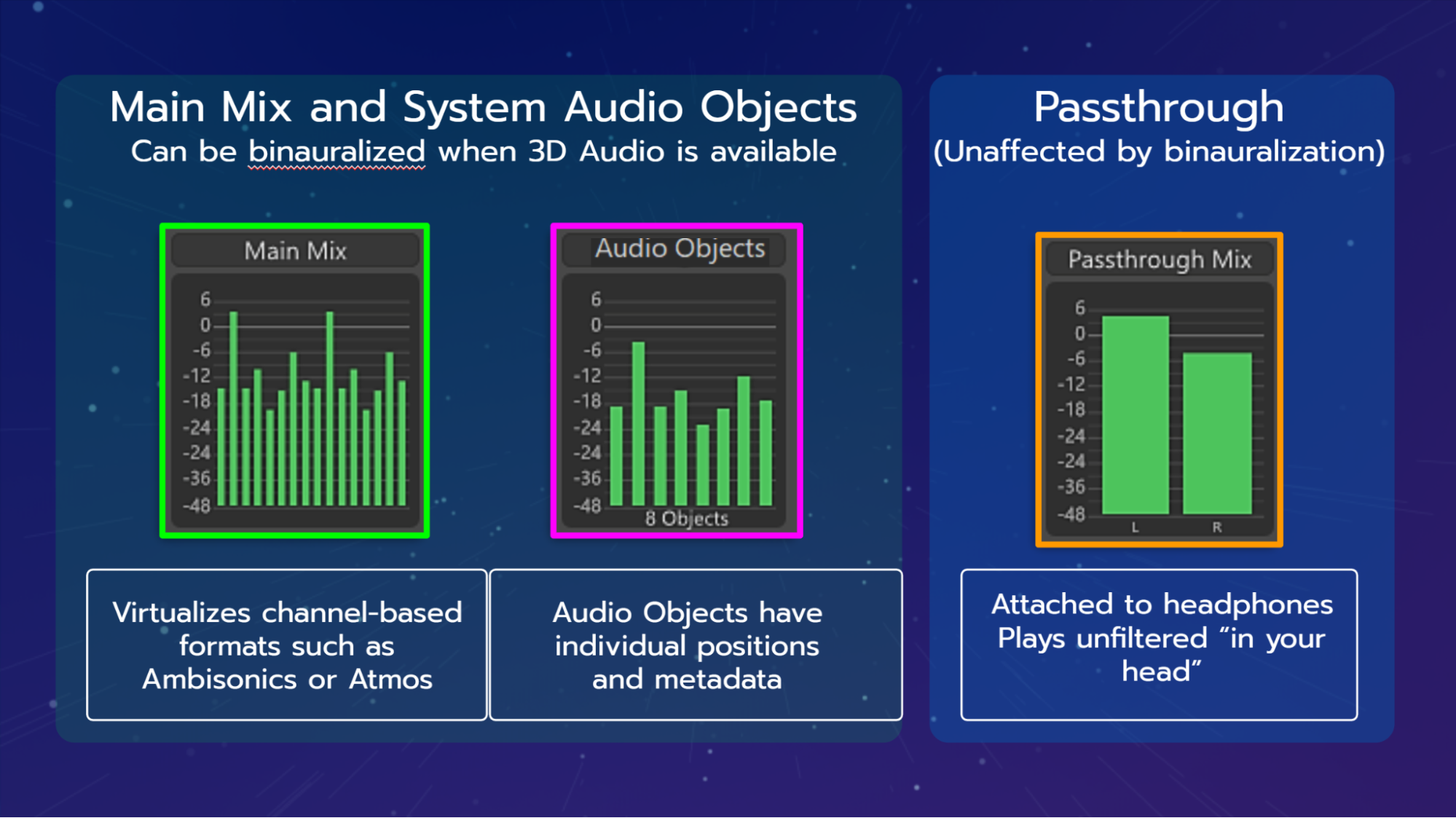

- Main Mix (channel-based) - The Main Mix (or Spatialized Bed) is an intermediate representation of sounds and their positions rendered in a channel-based format (e.g., 5.1, 7.1, 7.1.4, Ambisonics). This will produce a mix suitable to be virtualized (positioned virtually according to ideal speaker positions) and processed using Binaural Technology when 3D Audio is enabled in the System Audio Device and initialized by the endpoint.

- Audio Objects (mono) - Audio Objects have individual metadata (e.g. position, spread, etc.) that can be used by an endpoint in order to create the best spatial resolution in accordance with the selected output configuration. These Audio Objects can be rendered as System Audio Objects when 3D Audio is enabled in the System Audio Device and initialized by the endpoint. [See Part 1 for a great overview of How Audio Objects Improve Spatial Accuracy]

- Passthrough Mix (stereo) - The Passthrough Mix is a stereo configuration of sounds which will produce a mix suitable for bypassing binaural technology at the endpoint whether 3D Audio is enabled in the System Audio Device and initialized by the endpoint or not.

Example Scenario: A Virtual Soundwalk

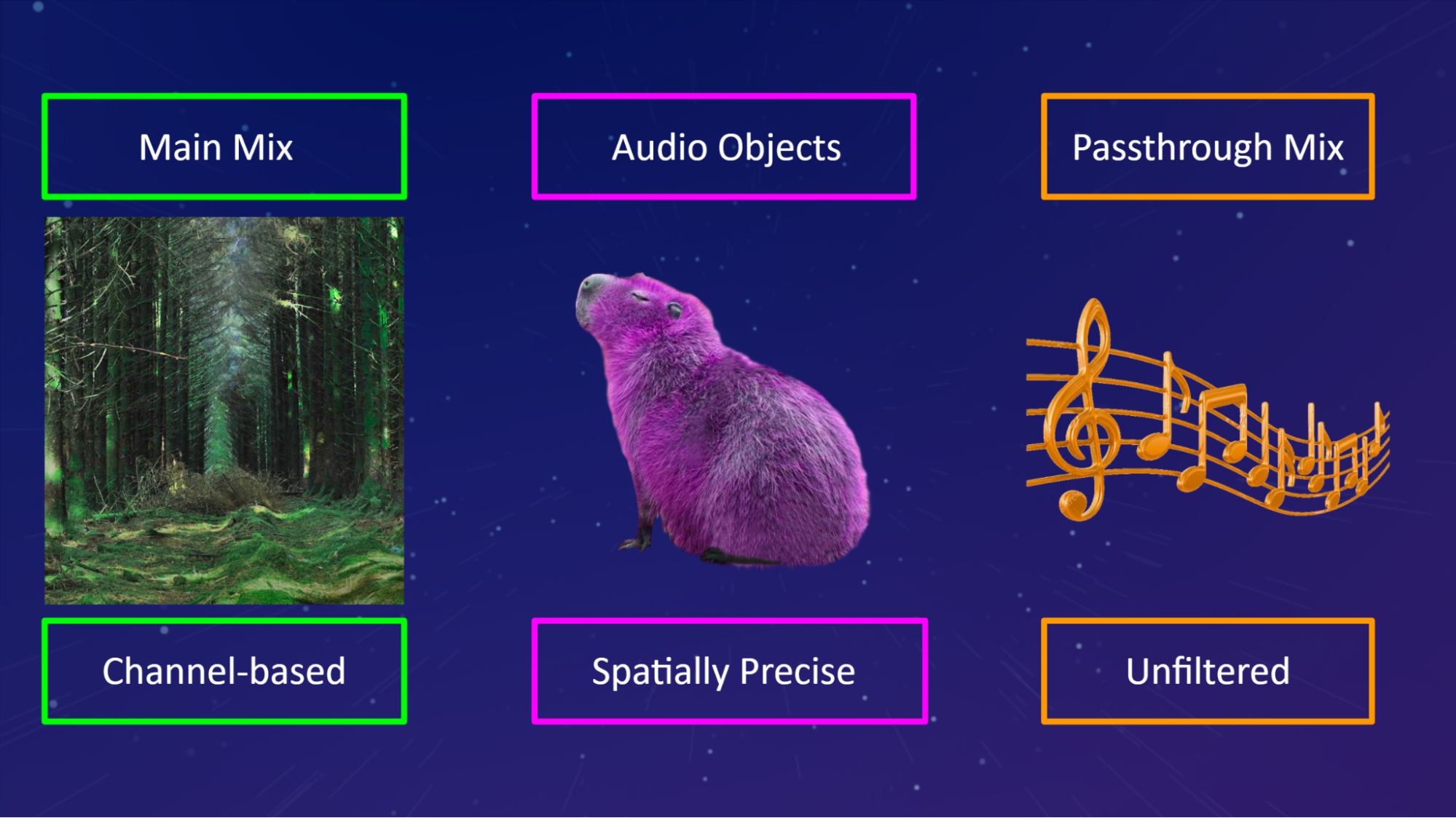

Color coding for the Main Mix (Green), Audio Objects (Pink), and Passthrough Mix (Orange).

This example scenario investigates the implementation of sound for a virtual reality "soundwalk" through a vibrant forest rich with environmental sound and output over headphones using spatial rendering technology including System Audio Objects.

The color coding established for the different System Audio Device outputs will be indicated using the following scheme:

- Audio Objects = Pink

- Main Mix = Green

- Passthrough = Orange

While this imagines the explicit routing of sounds to each of these three configurations, the ability to create routing in the Master Mixer hierarchy that leverages the “Same as parent” Audio Bus configuration allows for the Audio Device to define the configuration based on the Output Settings.

Sidebar: As Parent

Parent means that this Bus inherits the bus configuration of its parent. The bus configuration of the Master Audio Bus is implicitly set to Parent, because it inherits the bus configuration of the associated audio output device. If an Audio or Aux Bus is set to anything other than Parent or Audio Objects, it forces a submix at this level of the hierarchy. Also see: Understanding the Voice Pipeline and Understanding Bus Configurations

As with any development, the initial experience begins with a silent world that must be authored for sound before any can be heard.

Beginning with a channel-based implementation, we can imagine the sounds of the forest coming to life with an ambient background mixed to a 7.1 channel configuration and then virtualized and (optionally) processed binaurally over headphones by an endpoint. We can further expand the virtualized channel-based sound scene by introducing height-based sound available through a 7.1.4 channel configuration on an Audio Bus. Finally, we arrive at a full-sphere Ambisonic channel configuration, which allows for up to 5th order spatialized representation of the virtual forest ambient background and provides sounds both above and below the horizontal plane in 360 degrees.

Sidebar: Virtualization

Channel configurations can be "virtualized" using headphones when combined with HRTF processing. This means that, for instance, a 7.1 Channel configuration can be positioned “virtually” around the head of the listener using standard distances and angles and then processed binaurally to simulate the positioning of the speakers.
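
To make the idea of "standard distances and angles" concrete, here is a minimal sketch that places the speakers of a 7.1 bed on a circle around the listener. The azimuth values, the coordinate convention, and the function name are assumptions chosen for illustration and are not taken from Wwise or from any particular endpoint. The LFE channel is left out because it is not positional.

```python
import math

# Assumed azimuths in degrees (0 = straight ahead, positive = to the right).
# Illustrative values for a 7.1 bed; a real endpoint may use different angles.
SPEAKER_AZIMUTHS_7_1 = {
    "L": -30.0, "R": 30.0, "C": 0.0,
    "SL": -90.0, "SR": 90.0,
    "BL": -135.0, "BR": 135.0,
}


def virtual_speaker_positions(azimuths, distance=1.0):
    """Place each speaker on a circle around the listener (x = right, y = front)."""
    positions = {}
    for name, azimuth in azimuths.items():
        radians = math.radians(azimuth)
        positions[name] = (distance * math.sin(radians), distance * math.cos(radians))
    return positions


if __name__ == "__main__":
    for name, (x, y) in virtual_speaker_positions(SPEAKER_AZIMUTHS_7_1).items():
        print(f"{name}: x={x:+.2f}, y={y:+.2f}")
```

Each of these virtual positions would then be processed binaurally (with an HRTF) by the endpoint, which is the step this sketch does not attempt to model.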

Authoring for the Main Mix

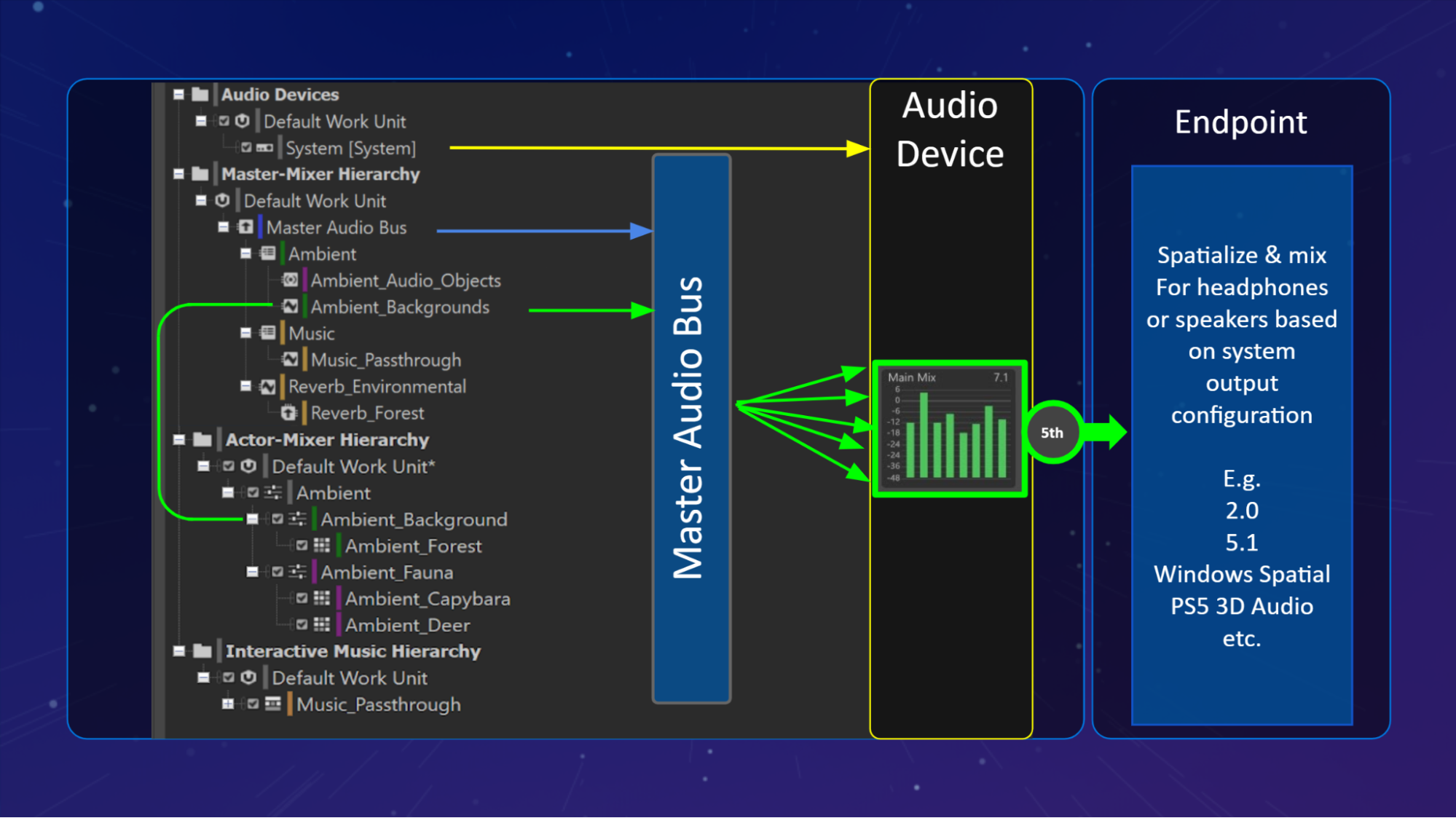

Wwise Main Mix Pipeline.

Sounds routed to the Main Mix by the Audio Device will be mixed to the channel format initialized by the endpoint, specified by the Audio Device, or as defined by an Audio Bus Configuration. Channel-based audio is delivered by the Audio Device to the endpoint and then rendered according to the user-defined output configuration: speakers, headphones, or headphones with binaural processing. This channel-based Main Mix will be output directly to a specified speaker configuration, or virtualized and processed using 3D Audio technology available to different endpoints over headphones (e.g., Windows Sonic for Headphones, Sony PlayStation 5 3D Audio, Dolby Atmos for Headphones, or DTS Headphone:X).

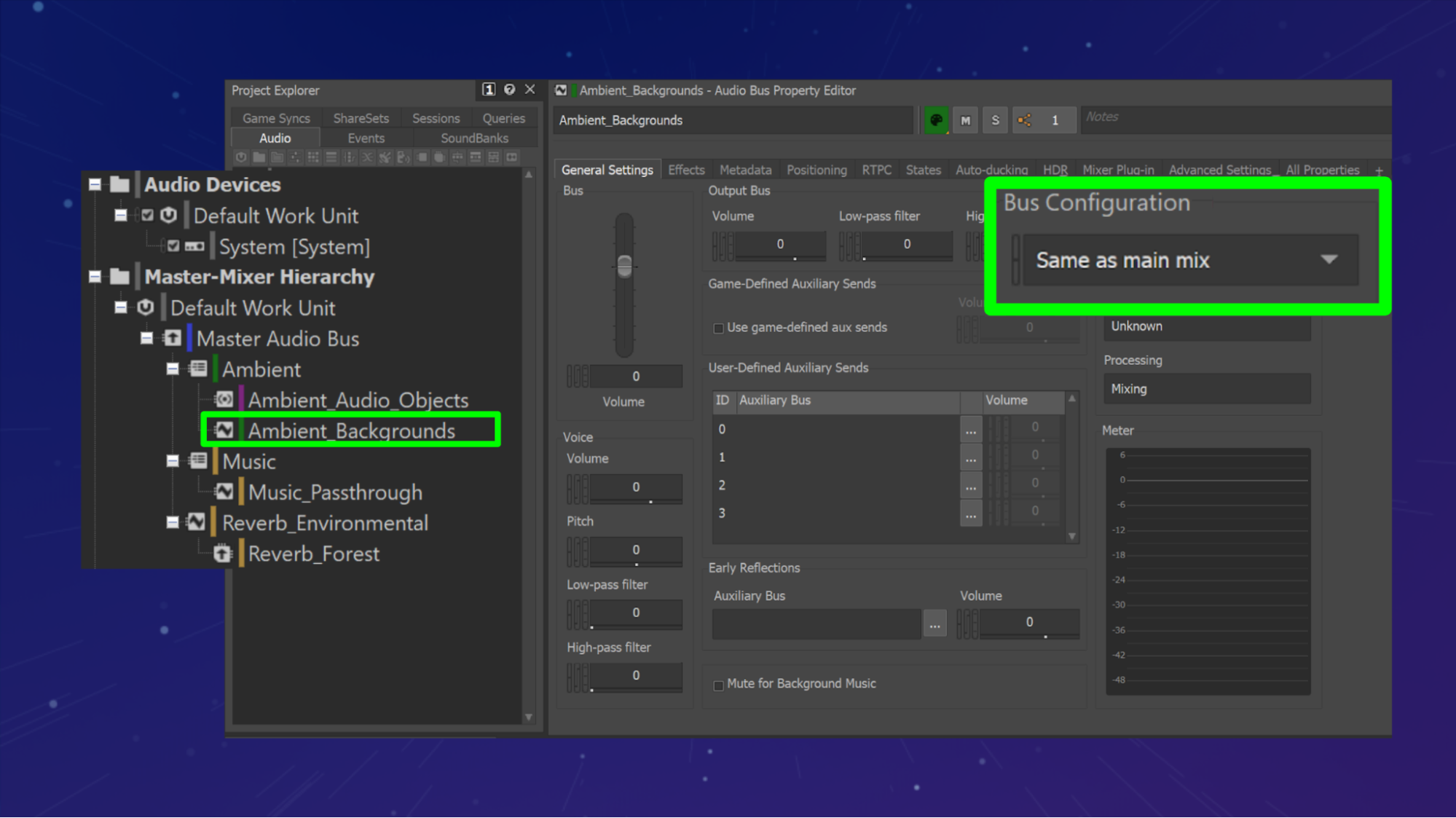

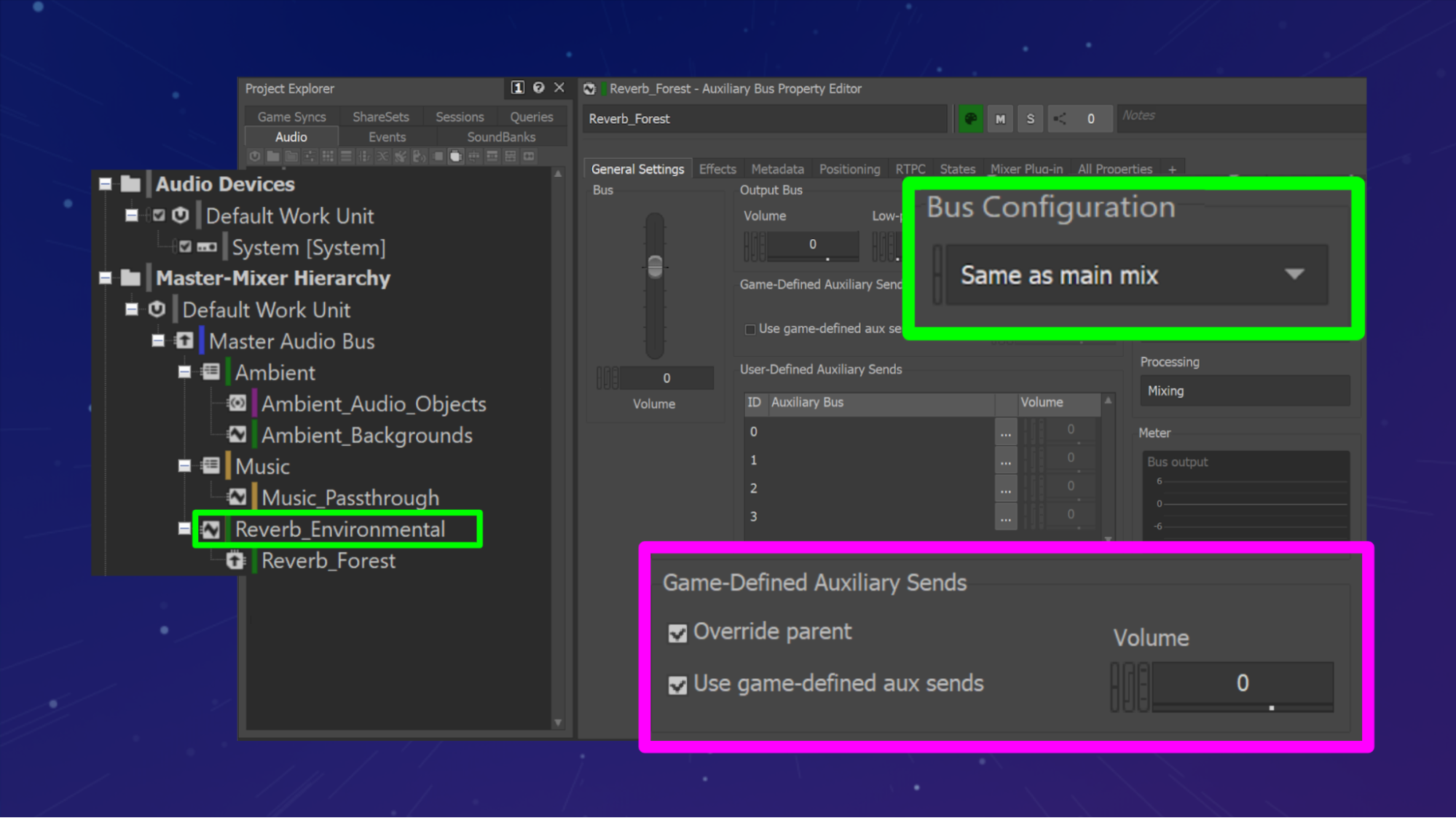

"Same as main mix” Bus Configuration in Wwise.

Inside of the virtual forest soundwalk, we'll configure our ambient backgrounds to flow through the audio pipeline to be delivered as part of the Main Mix.

In Wwise, these are the steps to author for the Main Mix:

- Step 1: Create an Audio Bus as a child of the Master Audio Bus called "Ambient_Backgrounds".

- Step 2: Set the Bus Configuration to "Same as main mix".

- Step 3: Route sounds from the Actor-Mixer hierarchy intended for the Main Mix to the “Ambient_Backgrounds” Bus.

Sounds intended to be represented as part of the forest ambient background will be routed to this bus and mixed according to the Main Mix channel configuration of the System Audio Device either defined using the Main Mix Headphone and Speaker Configurations settings or as initialized by the endpoint.

The Main Mix has the flexibility of being rendered to an output configuration defined by an endpoint and can adapt to the myriad of different listening scenario environments. With up to 5th Order Ambisonic rendering possible and mix configurations up to 7.1.4, the Main Mix allows for an optimized presentation of positional sound as part of a spatialized bed that is able to leverage 3D Audio technology when available.

Authoring for Audio Objects

Wwise Audio Object Pipeline.

Sounds routed as Audio Objects by the Audio Device will be preserved as individual Audio Objects, carrying metadata (e.g., position and spread) to the endpoint. Object-based audio is delivered by the Audio Device to the endpoint and then rendered according to the user-defined output configuration: speakers, headphones, or headphones with binaural processing. Audio Objects have the potential to be positioned and processed using 3D Audio technology available to different endpoints over headphones (e.g., Windows Sonic for Headphones, Sony PlayStation 5 3D Audio, Dolby Atmos for Headphones, or DTS Headphone:X).

Sidebar: Excerpt from Understanding Object-based Audio

The System Audio Device is the only type of built-in Wwise Audio Device that supports Audio Objects. The System Audio Device determines what combination of outputs will be delivered to the endpoint based on the endpoint's capabilities and the System Audio Device settings.

The System Audio Device will route Audio Objects to either the Main Mix, Passthrough Mix, or System Audio Objects, according to the following rules:

> An Audio Object will be routed to the endpoint as System Audio Objects if and only if it meets all of the following requirements:

- It has a 3D position.

- Its Speaker Panning / 3D Spatialization Mix is set to 100%.

- All of its channels are located on the listener plane.

- There are enough available System Audio Objects to accommodate all of its channels. In other words, the number of System Audio Objects Used would not exceed the number of Available System Audio Objects as shown in the Audio Device Editor when profiling (see following figure).

- Note: It is good practice to avoid exceeding the maximum number of Available System Audio Objects. Surplus Audio Objects routed to the Main Mix might be processed differently, resulting in perceptible variation. System Audio Objects are allocated using first-in, first-out prioritization.

> An Audio Object that does not meet the above requirements will be routed to the Passthrough Mix if and only if it meets all of the following requirements:

- It has a mono or stereo channel configuration.

- It does not have a 3D position at all.

> Audio Objects that do not meet the above requirements will be routed to the Main Mix.

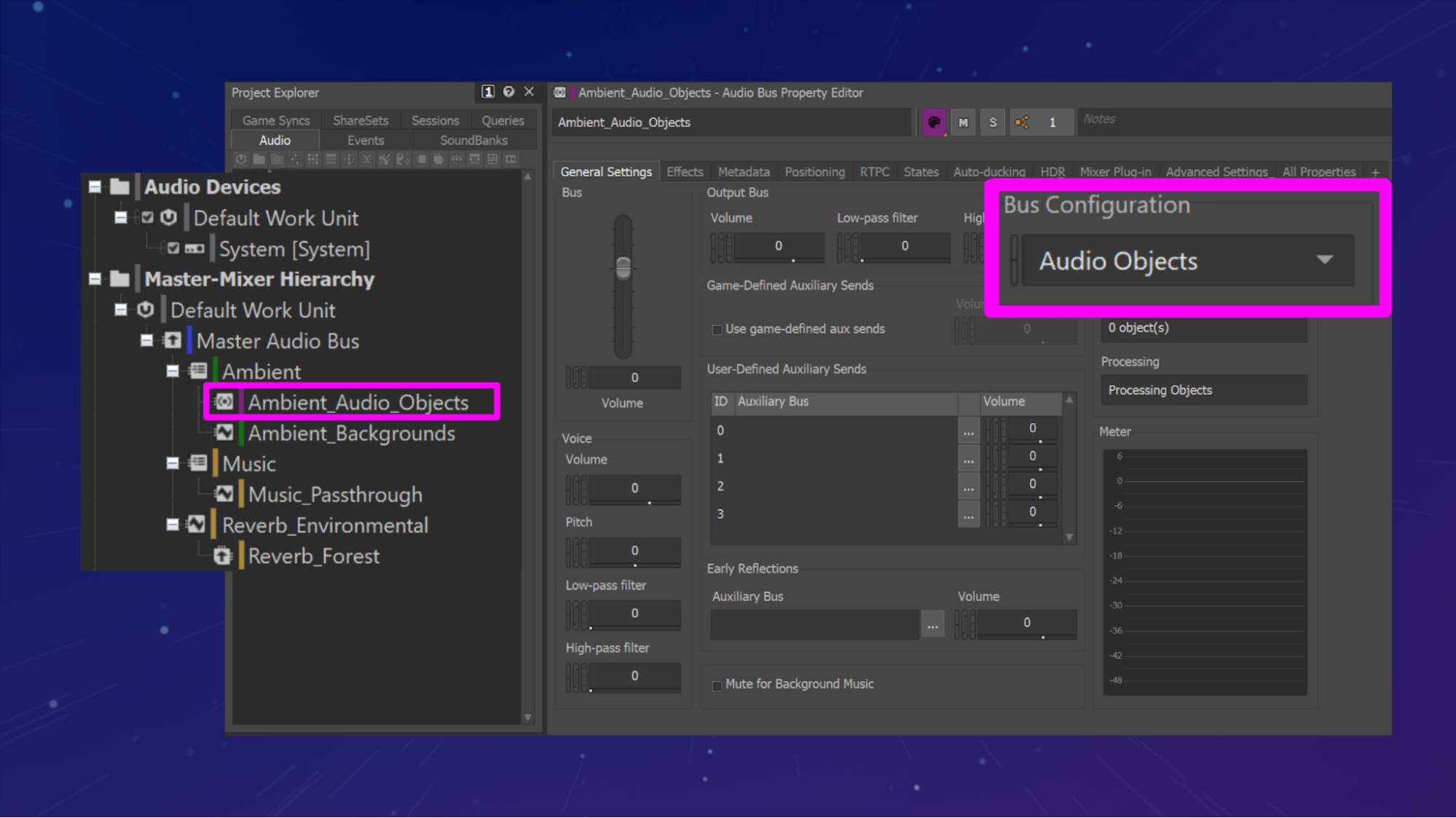

“Audio Objects” Bus Configuration in Wwise.
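
As a rough illustration, the routing rules quoted above from Understanding Object-based Audio can be written as a small decision function. This is only a sketch of the rules as stated in that excerpt: the `AudioObject` fields and the `route` function are hypothetical names invented for this example and are not part of the Wwise SDK.

```python
from dataclasses import dataclass
from enum import Enum


class Destination(Enum):
    SYSTEM_AUDIO_OBJECTS = "System Audio Objects"
    PASSTHROUGH_MIX = "Passthrough Mix"
    MAIN_MIX = "Main Mix"


@dataclass
class AudioObject:
    # Hypothetical fields chosen for this sketch; not Wwise SDK names.
    has_3d_position: bool
    spatialization_mix_percent: float  # Speaker Panning / 3D Spatialization Mix
    channel_count: int
    channels_on_listener_plane: bool


def route(obj: AudioObject, available_system_objects: int) -> Destination:
    """Apply the three quoted rules in order and return the destination."""
    # Rule 1: all four requirements must hold for System Audio Objects.
    if (
        obj.has_3d_position
        and obj.spatialization_mix_percent == 100.0
        and obj.channels_on_listener_plane
        and obj.channel_count <= available_system_objects
    ):
        return Destination.SYSTEM_AUDIO_OBJECTS
    # Rule 2: mono or stereo with no 3D position goes to the Passthrough Mix.
    if obj.channel_count in (1, 2) and not obj.has_3d_position:
        return Destination.PASSTHROUGH_MIX
    # Rule 3: everything else is mixed into the Main Mix.
    return Destination.MAIN_MIX
```

For example, a mono bird call with a 3D position and a 100% spatialization mix is routed to System Audio Objects while slots remain, and falls back to the Main Mix once `available_system_objects` reaches zero. A stereo music stem with no 3D position is routed to the Passthrough Mix.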

Meanwhile, back inside the virtual forest soundwalk, we'll configure the sounds of the various forest animals to be represented as individual Audio Objects, when available.

In Wwise, these are steps to author for Audio Objects:

- Step 1: Create an Audio Bus as a child of the Master Audio Bus called "Ambient_Audio_Objects".

- Step 2: Set the Bus Configuration to "Audio Objects".

- Step 3: Route sounds from the Actor-Mixer hierarchy that should be preserved as Audio Objects to the endpoint to the “Ambient_Audio_Objects” Bus.

Audio Objects are delivered by the System Audio Device to the endpoint when the endpoint has 3D Audio available and enabled and System Audio Objects available. The sounds of our forest animals should be routed to this bus, delivered to the Master Audio Bus, and then to the Audio Device where they will be rendered by the endpoint to the user-defined output configuration: speakers, headphones, or headphones with binaural processing. If the maximum number of System Audio Objects has been reached on an Audio Object-enabled endpoint, additional Audio Objects will be rendered to the channel-based configuration set by the endpoint or by the Audio Device Headphone/Speaker Configuration settings.
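
The overflow behavior can be pictured with a small budget sketch. It assumes that Audio Objects claim System Audio Object slots in the order they arrive and that an object whose channels do not fit is mixed to the channel-based configuration instead. The `allocate` function and the object names are made up for this example, and the real allocation inside Wwise and the endpoint may differ.

```python
def allocate(objects, available):
    """Split objects into those that get System Audio Object slots and the rest.

    objects: list of (name, channel_count) tuples in arrival order.
    available: number of System Audio Objects reported by the endpoint.
    """
    system_objects, channel_based = [], []
    for name, channels in objects:
        if channels <= available:
            # Enough slots remain for every channel of this object.
            available -= channels
            system_objects.append(name)
        else:
            # Not enough slots: this object is rendered to the channel-based mix.
            channel_based.append(name)
    return system_objects, channel_based


if __name__ == "__main__":
    forest = [("owl", 1), ("frog", 1), ("stream", 2), ("wren", 1)]
    print(allocate(forest, available=3))
```

Keeping the number of simultaneous Audio Objects under the available budget avoids the situation where some animals are rendered as objects and others through the bed, which could be audible as a difference in spatial quality.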

Sidebar: Why use Audio Objects instead of ambisonics? In a nutshell, Audio Objects give the best spatial rendering, and do so regardless of processing costs, while ambisonics has a fixed cost (for a fixed number of channels) and balances the cost against spatial resolution. The following articles provide a good overview of the Ambisonics format, and how it can be used as an intermediate spatial representation.

Audio Objects maintain the highest level of spatial precision and provide the greatest degree of flexibility across all of the different output configurations available. Preserving Metadata, including 3D position, to the Audio Device without destroying it through the process of mixing is essential. An Audio Object without a 3D position will be mixed to the Main Mix by the Audio Device.

Authoring for the Passthrough Mix

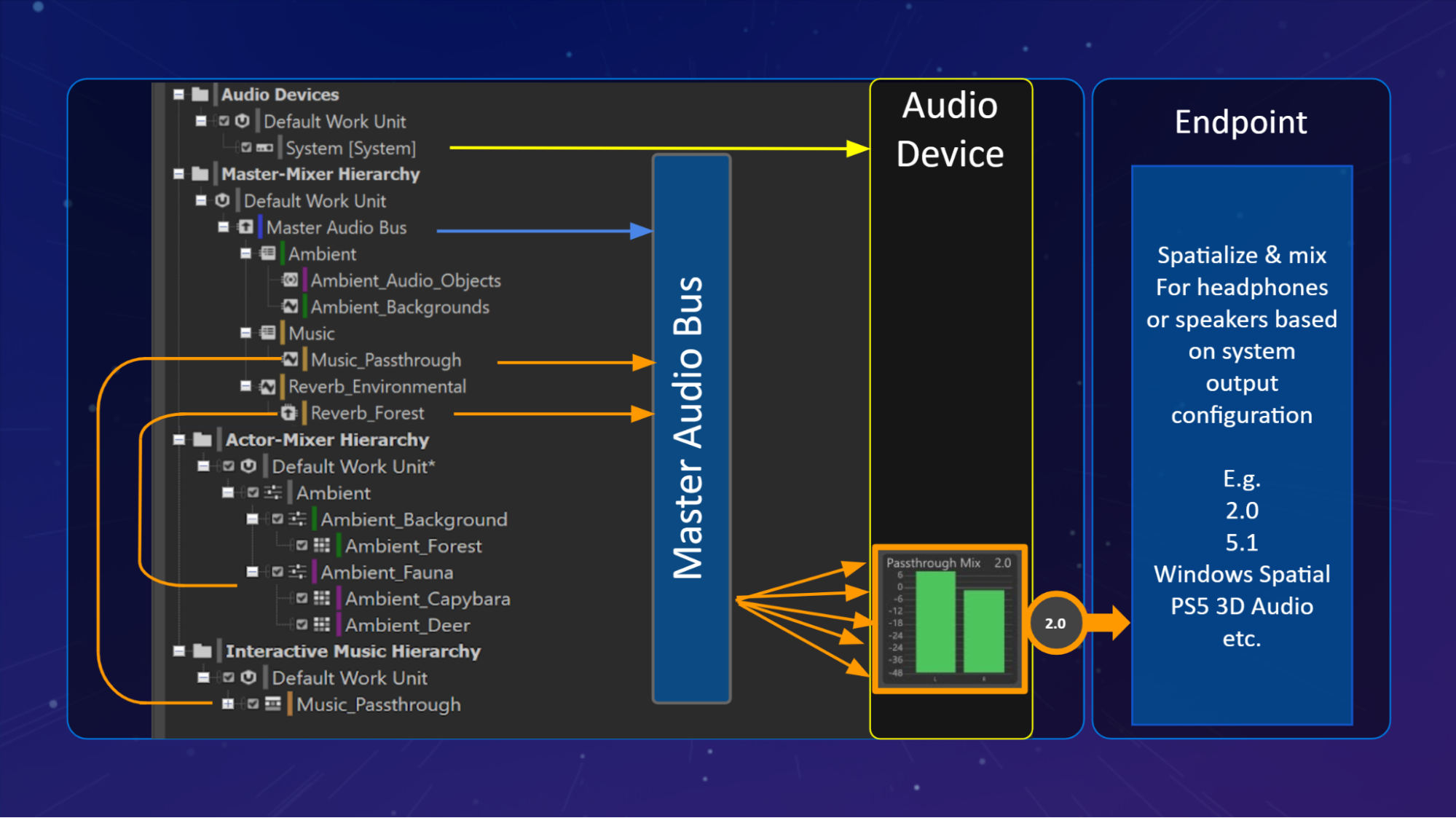

Wwise Passthrough Mix Pipeline.

Sounds routed to the Passthrough Mix by the Audio Device will be mixed to a channel format initialized by the endpoint for the Passthrough Mix and will remain unprocessed by any 3D Audio technology. When 3D Audio is not available or enabled, sounds routed to the Passthrough Mix will be mixed according to the Main Mix channel configuration of the System Audio Device either defined using the Main Mix Headphone and Speaker Configurations settings or as initialized by the endpoint.

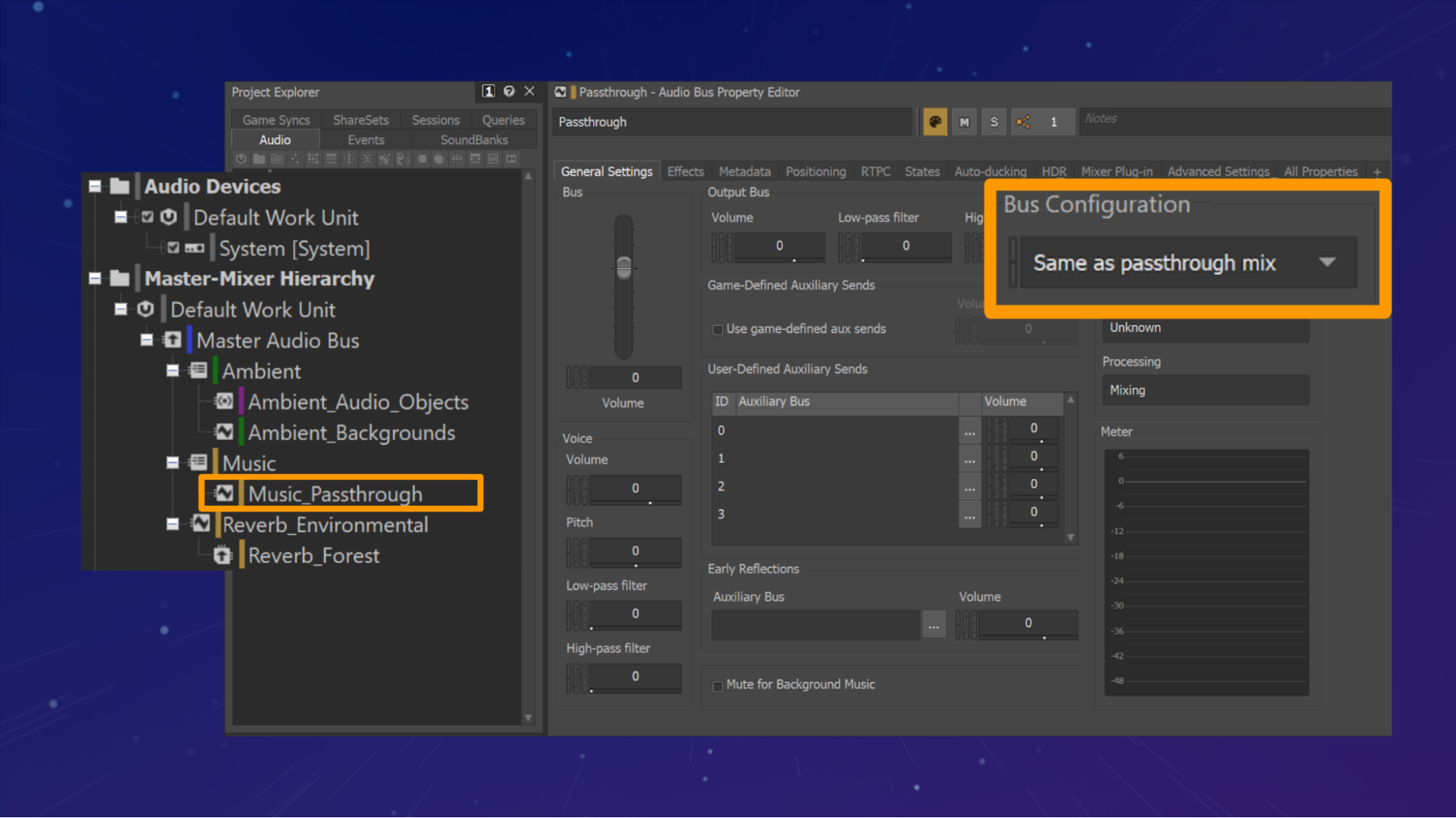

“Same as Passthrough Mix” Bus Configuration in Wwise.

We'll continue the implementation of audio within the virtual "soundwalk" by adding some extradiegetic music (music that exists outside [extra] the diegesis [in this case, the forest]) to accompany the established sounds of the ambient forest.

In Wwise, these are the steps to author for the Passthrough Mix:

- Step 1: Create an Audio Bus as a child of the Master Audio Bus called "Music_Passthrough".

- Step 2: Set the Bus Configuration to "Same as Passthrough Mix".

- Step 3: Route music whose frequency content should remain unprocessed by the endpoint to the “Music_Passthrough” Bus.

Music will then be mixed and routed to the Master Audio Bus, and then to the Audio Device where sounds will be delivered to the endpoint for mixing without processing by any 3D Audio technology.

Authoring for the Passthrough Mix ensures that the full frequency content and original channel configuration of sounds or music will remain untouched by any possible 3D Audio processing at the endpoint. Making decisions about what sounds or parts of a sound should remain unfiltered is often dependent on the content and intention of the interactive scenario.

Off the Beaten Paths

Outside of the explicit routing decisions that can be made, the hierarchical inheritance of the Master Mixer brings valuable flexibility that plays to the strengths of a given output configuration. The next section will cover the use of effects in conjunction with Audio Objects and different Reverb routing possibilities.

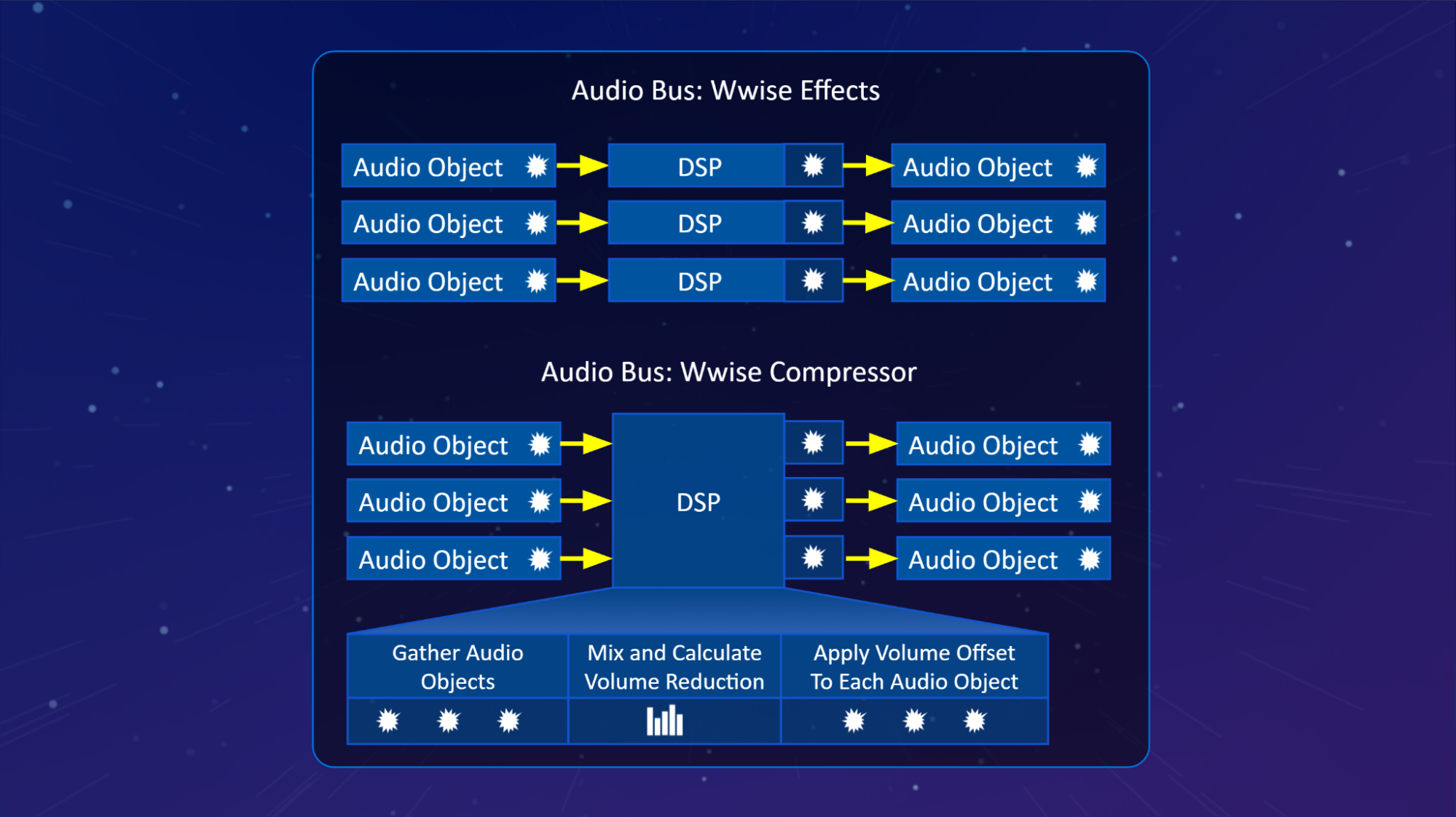

Effects and Object Processors

Effects on Audio Objects

Effects in the Master Mixer Hierarchy have been reconfigured to allow for the processing of individual Audio Objects without requiring the mixing of sources to a channel configuration. This means that every Audio Object that passes through an Audio Bus will receive an instance of the effect while maintaining its Metadata. This is similar to the way that effects are handled in the Actor-Mixer Hierarchy, where effects are applied individually to each voice before arriving at the Master Mixer Hierarchy.

The CPU cost of processing each Audio Object, with effects, in the Master Mixer hierarchy should be measured against the desired result and balanced against available resources. Making explicit decisions about the channel configurations of Audio Busses can help optimize the number of effect instances where processing can be tailored for a specific platform.

Wwise Compressor

The Wwise Compressor has been rewritten to optimize the processing of Audio Objects and provides reduced CPU cost over processing each Audio Object individually by an effect. When used on an Audio Bus set to an Audio Objects configuration, the Wwise Compressor will gather and evaluate the Audio Objects as a group, without mixing them into a channel configuration at the output of the Audio Bus. The volume of the group is measured and the resulting volume offset is then applied to each individual Audio Object, allowing for the preservation of Metadata at the output of the Audio Bus.
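
The gather, evaluate, and apply idea can be sketched in a few lines. This is not the Wwise Compressor's algorithm: the level measurement (summed mean-square power), the threshold and ratio defaults, and the function names are assumptions made for illustration. What the sketch does show is the key point of the paragraph above: one gain is derived from the group, then applied to each object separately, so nothing is mixed together and each object can keep its Metadata.

```python
import math


def group_gain_db(objects, threshold_db=-12.0, ratio=4.0):
    """Return one gain offset in dB computed from the combined level of all objects."""
    # Combined power: sum of the mean-square level of each object's buffer.
    power = sum(sum(s * s for s in buf) / len(buf) for buf in objects if buf)
    if power <= 0.0:
        return 0.0
    level_db = 10.0 * math.log10(power)
    if level_db <= threshold_db:
        return 0.0
    # Downward compression: reduce the overshoot above the threshold by the ratio.
    return (threshold_db - level_db) * (1.0 - 1.0 / ratio)


def compress_group(objects, threshold_db=-12.0, ratio=4.0):
    """Apply the shared gain to each object buffer; objects stay separate."""
    gain = 10.0 ** (group_gain_db(objects, threshold_db, ratio) / 20.0)
    return [[s * gain for s in buf] for buf in objects]


if __name__ == "__main__":
    owl = [0.5, -0.5, 0.5, -0.5]
    frog = [0.25, -0.25, 0.25, -0.25]
    print(group_gain_db([owl, frog]))
    print(compress_group([owl, frog]))
```

Because every object receives the same offset, the relative balance between the forest animals is preserved while the overall dynamic range of the group is reduced.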

Audio Bus Processing individual Audio Objects compared to the Wwise Compressor gathering, evaluating, and processing individual Audio Objects.

To follow through with the use of the Wwise Compressor in our forest ambient example, we can control the dynamic range of the forest animals and help create a better balance between the ambient background and ambient elements. This can be accomplished using the Wwise compressor on the "Ambient_Audio_Objects" Audio Bus where the forest animal sounds for our virtual “soundwalk” have been routed.

Reverb

Adding Reverb to the sounds of the forest animals can help anchor them in the environment during our virtual soundwalk. Sounds from the Actor-Mixer Hierarchy can be routed via the Game Auxiliary Sends property settings and will route a copy of the sound(s) to an associated Aux Bus where it can be processed using effects like Reverb.

Aux Busses can be configured for a specific channel configuration or can inherit the configuration initialized by the endpoint by defining one of the three Bus Configurations:

- Same as Parent: An Audio or Aux Bus will inherit its Parent Bus configuration. If there are no explicit Bus configurations set between this bus and the Master Audio Bus, the configuration set by the Master Audio Bus will be used.

- Same as Main Mix: An Audio or Aux Bus will inherit the Main Mix configuration set by the endpoint and System Audio Device settings. If there are no explicit Bus configurations set between this bus and the Master Audio Bus, the configuration set by the endpoint and Audio Device for the Main Mix will be used.

- Same as Passthrough Mix: An Audio or Aux Bus will inherit the Passthrough Mix configuration set by the endpoint at the System Audio Device. If there are no explicit Bus configurations set between this bus and the Master Audio Bus, the configuration set by the endpoint and Audio Device for the Passthrough Mix will be used.
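
One way to picture how these three inherited configurations resolve is a walk up the bus hierarchy. The sketch below is a simplified model, not Wwise code: the `Bus` class, the `resolve` function, and the keys of the `device` dictionary are hypothetical, and the configuration values in the usage example are placeholders.

```python
from dataclasses import dataclass
from typing import Optional

# Bus Configuration choices named in the article.
SAME_AS_PARENT = "Same as parent"
SAME_AS_MAIN_MIX = "Same as main mix"
SAME_AS_PASSTHROUGH_MIX = "Same as passthrough mix"


@dataclass
class Bus:
    name: str
    config: str = SAME_AS_PARENT
    parent: Optional["Bus"] = None


def resolve(bus: Bus, device: dict) -> str:
    """Walk up the hierarchy until a bus stops deferring to its parent."""
    while bus.config == SAME_AS_PARENT and bus.parent is not None:
        bus = bus.parent
    if bus.config == SAME_AS_PARENT:
        # Reached the Master Audio Bus, which inherits from the Audio Device.
        return device["output"]
    if bus.config == SAME_AS_MAIN_MIX:
        return device["main_mix"]
    if bus.config == SAME_AS_PASSTHROUGH_MIX:
        return device["passthrough_mix"]
    # An explicit configuration (for example "5.1") forces a submix here.
    return bus.config


if __name__ == "__main__":
    # Placeholder device values for illustration only.
    device = {"output": "7.1.4", "main_mix": "7.1.4", "passthrough_mix": "2.0"}
    master = Bus("Master Audio Bus")
    reverb_environmental = Bus("Reverb_Environmental", parent=master)
    reverb_forest = Bus("Reverb_Forest", SAME_AS_PASSTHROUGH_MIX, reverb_environmental)
    print(resolve(reverb_forest, device))
```

Switching `reverb_forest.config` to `SAME_AS_MAIN_MIX` in this model makes the same bus resolve to the device's Main Mix configuration instead.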

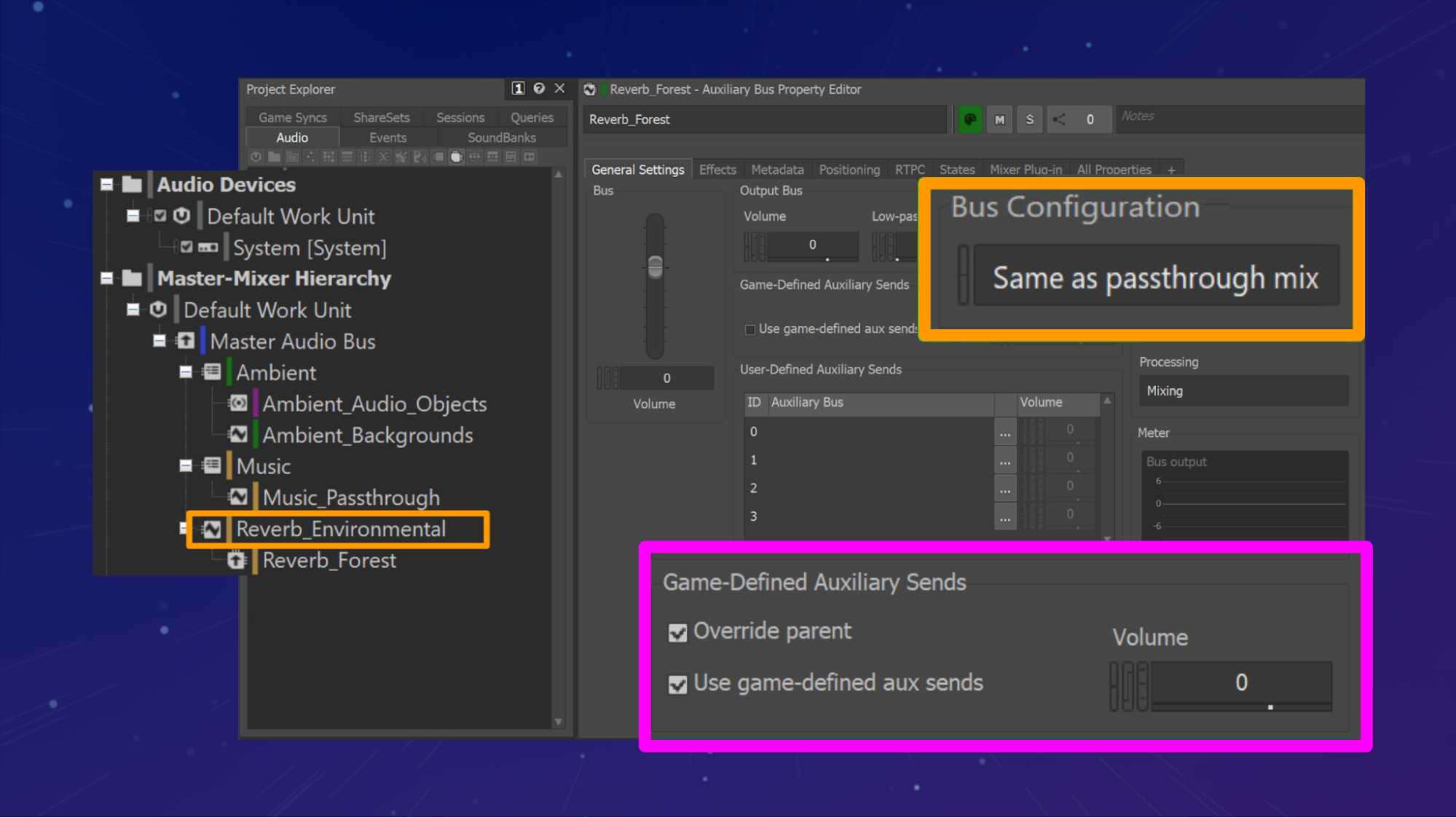

One of the key considerations when setting up Reverb with binaural processing in mind is whether you intend the Reverb to be processed by an endpoint that has 3D Audio activated and allowed in the settings of the Audio Device. Because there may be creative considerations making either choice valid, we’ll present both scenarios to help illustrate the different ways that Reverb can be routed.

In Wwise, authoring begins with the creation of an Aux Bus called “Reverb_Forest” as a child of a parent Audio Bus called "Reverb_Environmental".

Reverb: Passthrough Mix

Configuring Environmental Reverb to use the “Same as Passthrough Mix” Bus Configuration in Wwise.

This example uses the “Same as passthrough mix” Bus Configuration for the “Reverb_Forest” Aux Bus and will inherit the channel configuration set by the endpoint at the Audio Device. Sounds routed to the “Reverb_Forest” Aux Bus will result in a mix suitable for bypassing binaural technology at the endpoint. Leveraging the passthrough mix allows for preservation of the Reverb effect without further processing from 3D Audio, maintaining the full-frequency representation without additional filtering.

Reverb: Main Mix

Configuring Environmental Reverb to use the “Same as Main Mix” Bus Configuration in Wwise.

Alternatively, the “Same as main mix” Bus Configuration can be used for the “Reverb_Forest” Aux Bus and the Main Mix channel configuration will be inherited from the Audio Device. Sounds routed to the “Reverb_Forest” Aux Bus will result in a mix suitable to be processed using Binaural Technology at the endpoint. Leveraging the Main Mix for Reverb allows for additional processing by an endpoint that has 3D Audio activated and allowed in the settings of the Audio Device. This might be interesting for Reverb that is intended to be positional and might benefit from any binaural processing that may occur.

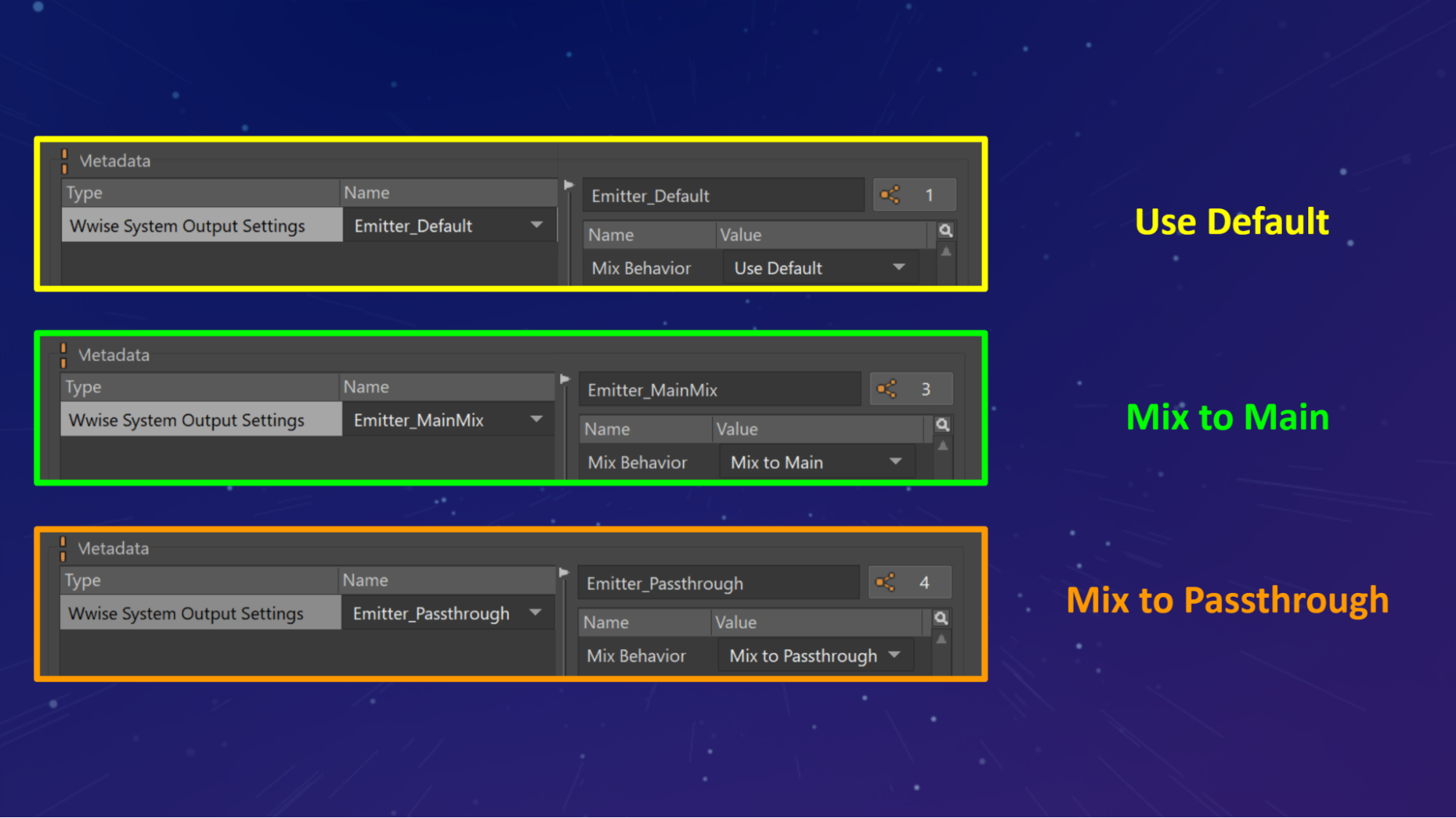

Custom Metadata: Wwise System Output Settings

Custom Metadata for the Wwise System Output Settings.

There is a new workflow available in the Property Editor for the authoring of metadata that can be attached to sounds as they flow through the Wwise pipeline. The first available metadata type that can be specified is related to the Wwise System Output Settings. For any Wwise Object in the Actor-Mixer or Master-Mixer Hierarchies, an explicit Mix Behavior can be defined as part of a sound's metadata, as either Custom metadata or a ShareSet. This means that sounds routed to an Audio Bus of one Bus Configuration in the Master-Mixer Hierarchy can instead inherit the configuration of the Main Mix or Passthrough Mix of the Audio Device. This makes it possible to organize sounds by type while still routing specific sounds or groups of sounds to a different mix configuration than the one configured on the Audio Bus.

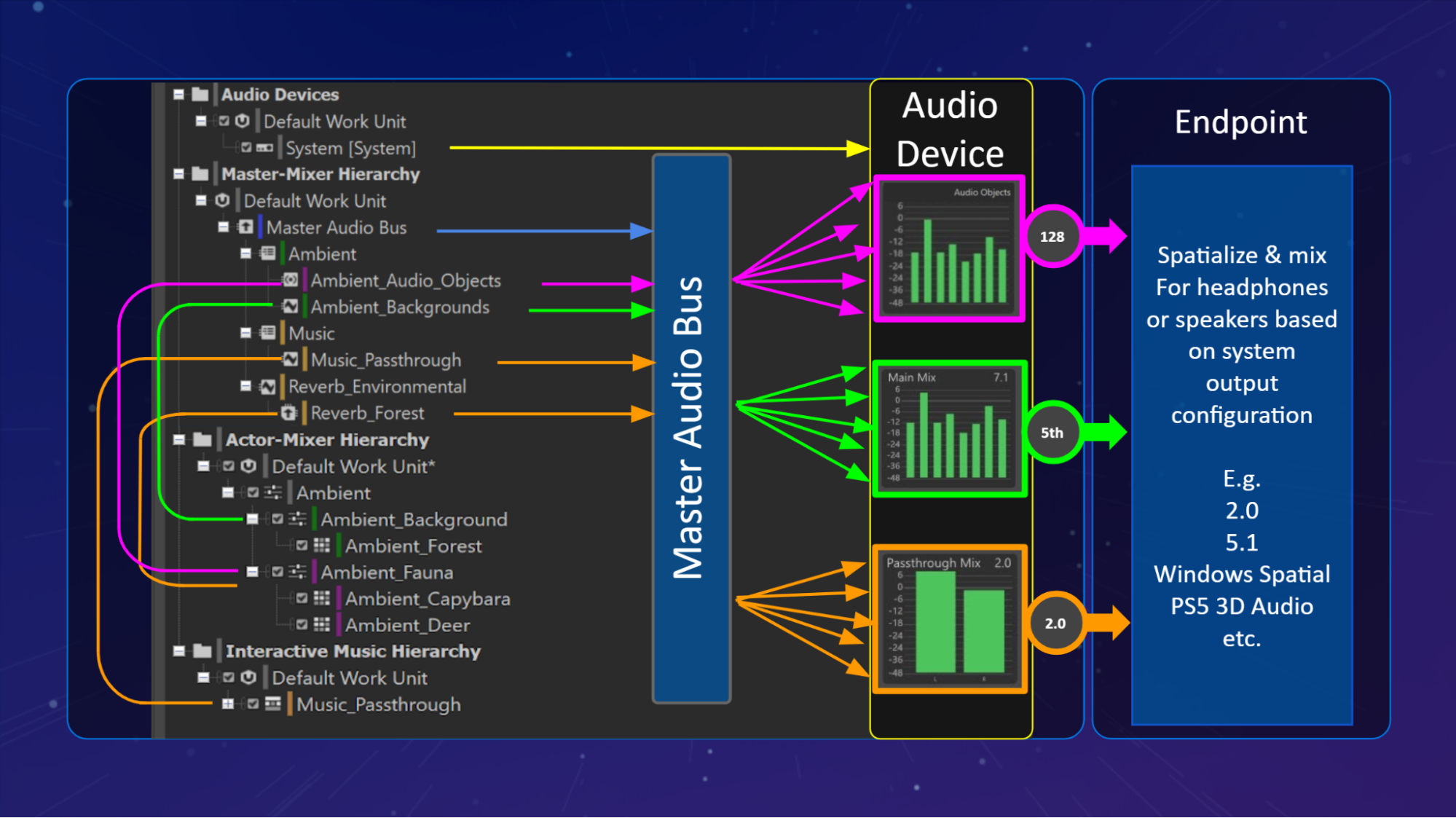

Wwise Hierarchy Routing

Routing in the Wwise hierarchy for headphones overview.

To better understand the routing of sounds through the Actor-Mixer, Master-Mixer, and Audio Device, the hierarchies have been arranged to represent signal flow from bottom to top as sound flows through Wwise to an Audio Object enabled endpoint.

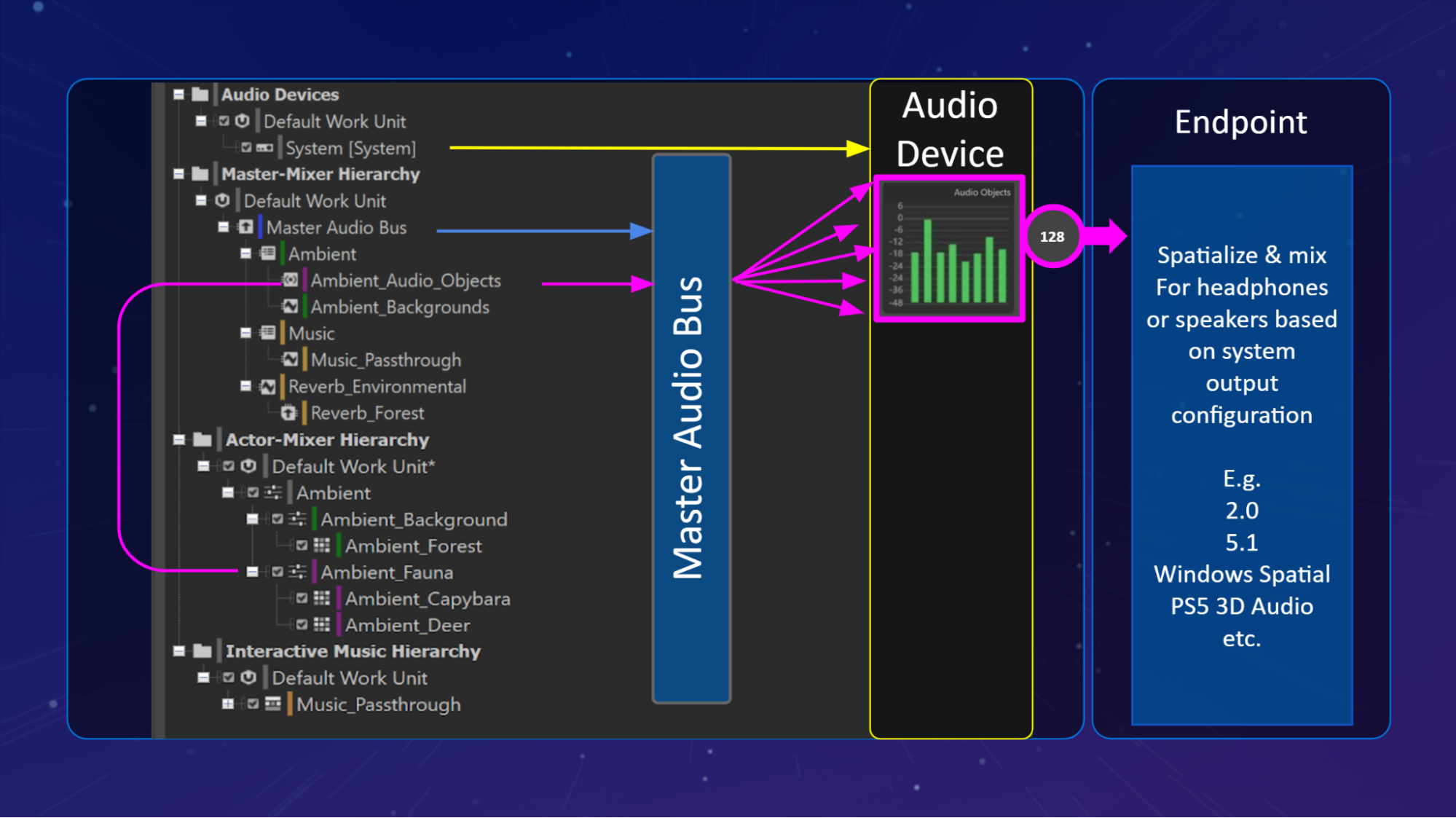

Wwise Hierarchy Routing - Audio Objects

Routing in the Wwise hierarchy with Audio Objects overview.

The "Ambient_Fauna" Actor-Mixer is being routed to the "Ambient_Audio_Objects" Audio Bus where the Bus Configuration has been set to "Audio Objects" to be delivered from the Audio Device as Audio Objects, including their Metadata, to a 3D Audio-activated endpoint. Once the endpoint has received the Audio Objects, it renders them at their positions in the output configuration and decides whether to process them binaurally for headphones.

Wwise Hierarchy Routing - Main Mix

Routing in the Wwise hierarchy with the Main Mix overview.

The "Ambient_Background" Actor-Mixer is being routed to the "Ambient_Backgrounds" Audio Bus where the Bus Configuration has been set to "Same as main mix" to be delivered from the Audio Device where the properties defined for the Main Mix Headphone and Main Mix Speaker Configurations are applied and delivered to the endpoint. The Main Mix will produce a mix suitable to be processed using Binaural Technology and at the endpoint a decision will be made as to whether to virtualize the channel configuration and process it binaurally for headphones, depending on the availability and activation of 3D Audio.

Wwise Hierarchy Routing - Passthrough Mix

Routing in the Wwise hierarchy with the Passthrough Mix overview.

The Music_Passthrough Music Playlist Container in the Interactive Music Hierarchy is being routed to the "Music_Passthrough" Audio Bus where the Bus Configuration has been set to "Same as passthrough mix" to be delivered from the Audio Device to produce a mix suitable for bypassing binaural technology at the endpoint.

The "Ambient_Fauna" Actor-Mixer is using the Game Defined Auxiliary Send routed to an Aux Bus defined by the game called "Reverb_Forest" where sounds will be processed using a Reverb Effect and delivered from the Audio Device to produce a mix suitable for bypassing binaural technology at the endpoint.

Outro

With the arrival of Wwise 2021.1, the ability to author for spatialized endpoints has reached a level of accessibility that encourages experimentation with this newly emerging format for interactive audio. In part one of the series, we discussed the underlying technology and reasons for its adoption. Part two deals with authoring in Wwise towards the greatest spatial representation, and in part three we’ll look at some of the ways that Wwise authors have already been leveraging this technology creatively across some incredible experiences. In the meantime, download the latest version of Wwise through the Wwise Launcher and begin to explore the possibilities that authoring with Audio Objects unlocks.

Here are 9 Simple Steps to Profiling Audio Objects in Wwise to get you started.
