In this article, I’d like to show you how approaching UI audio as if you yourself were the UI designer* can help get you closer to crafting a UI that is both aesthetically and functionally cohesive. Whether you’re working with a system that will form the core experience for your players, or just trying to maintain feel and flow between more central parts of the game, this information should give you a solid reference point to plan, work, and iterate from. If you missed part 1 of this article, be sure to check that out first.

Feedback

UI Design

Arguably, the most important job of UI is to make sure the player knows what they’re doing and what effect it has. This is feedback. Perhaps a button changes hue when someone presses it. Maybe a slider has a numerical readout to give the user an exact value. There might be a small vibration in the controller when certain buttons are pressed. When the player crafts a weapon, they might see a short visual effect of anvil sparks emitting from the button they just pressed.

In addition to physical and informational feedback, emotional feedback also becomes a huge factor to consider. You would probably want the player to feel pretty good when they confirm an in-game purchase, or accept a friend request. What about when they permanently delete an item? What if they remove someone from their party? Depending on the context, you may or may not want certain emotions to go along with these actions. Additionally, the intensity of each of these emotions has to fall somewhere on a spectrum that you establish. What’s the most intensely rewarding thing a player might do through the UI? Conversely, what should they feel most punished for?

Audio

This is where audio can really shine, and bring out the best of a good UI design. Audio plays a huge part in the physical, informational, and emotional feelings of interacting with a game’s UI. The degree of physicality we want the player to feel will usually depend on the game and what sort of experience the team is trying to achieve overall. Designing very tactile, clicky, organic sounds will produce a very different experience from designing bubbly tonal sounds.

Maybe we really want the UI to feel dynamic and responsive, in which case a very modular approach might be appropriate. In this case, a single button press might have separate assets for the press and release of the button. We could even include a third sound in case the player presses the button, but moves their finger/cursor off of the button before releasing it, thus canceling the action. It’s also possible that a button is time-dependent, and has different effects depending on how long it is held down or when it is released. In this case, we would probably want to design a sound that can build up to the completion of the action(s), most likely with some kind of auditory confirmation. At the same time, it needs to also react to an incomplete action (the player releases the button before it is confirmed).
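To make the modular idea a bit more concrete, here is a minimal sketch of how a hold-to-confirm button might decide which sound to trigger. Everything in it is an assumption for illustration: the class name, the event names such as `ui_hold_confirm`, and the `events` list that stands in for whatever audio engine or middleware the game actually uses.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class HoldButtonAudio:
    """Picks a (hypothetical) sound event for each stage of a held button."""
    hold_time_required: float = 1.0  # seconds of holding before the action confirms
    events: List[str] = field(default_factory=list)  # stand-in for posting to an audio engine
    pressed_at: Optional[float] = None
    confirmed: bool = False

    def press(self, now: float) -> None:
        # Physical feedback for the press, plus the start of the build-up sound.
        self.pressed_at = now
        self.confirmed = False
        self.events += ["ui_button_press", "ui_hold_buildup_start"]

    def update(self, now: float) -> None:
        # Called every frame; fires the confirmation once the hold is long enough.
        if self.pressed_at is None or self.confirmed:
            return
        if now - self.pressed_at >= self.hold_time_required:
            self.confirmed = True
            self.events.append("ui_hold_confirm")

    def release(self, cursor_over_button: bool) -> None:
        if self.pressed_at is None:
            return
        if self.confirmed:
            self.events.append("ui_button_release")
        else:
            # Incomplete action: stop the build-up and react to how it ended.
            self.events.append("ui_hold_buildup_stop")
            self.events.append(
                "ui_hold_incomplete" if cursor_over_button else "ui_button_cancel"
            )
        self.pressed_at = None
```

Calling `press(0.0)`, `update(1.2)`, and `release(True)` on a fresh instance would log the press, the build-up, the confirmation, and the release in that order, while releasing early or off the button swaps in the incomplete or cancel sound instead.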

A casual word-puzzle game doesn’t necessarily require strong physical feedback, so it might use softer, more relaxing sounds in its UI. However, a World War II game probably intends to keep some level of tension and build anticipation. In this case, using gun mechanics could be a good way to make joining a game or unlocking a weapon really satisfying to do.

Informational feedback tends to be a little more difficult. One very easy way to go about it is to use skeuomorphic sounds. That is, sounds that represent the actual, real-world counterpart of the action that the player is doing in the game. As a simple example, naming a character could emulate actual keyboard sounds when selecting letters.

For more explicit emotional feedback, there are tons of tricks that sound designers have, many of which are probably already familiar. For example, harmonically consonant tonal sounds are an easy way to give positive emotional feedback, while inharmonic tonal sounds can easily sound negative. Ascending sounds will evoke success, anticipation, opening, or entering. Descending sounds, in contrast, will evoke failure, finality, closing, or leaving. The sound of things coming together or locking into place is satisfying and rewarding, while the sound of things breaking apart or shattering is disappointing and punishing. All of these are general approaches that can be combined to create a particular emotional balance, depending on what the UI is trying to achieve.

We’ve discussed three types of feedback here: physical, informational, and emotional. However, it’s important to remember that these are not exclusive to each other, and that good UI design utilizes a balance of all three to fit the goal of the game. Audio, of course, is no different.

Let’s take the earlier example of crafting a weapon; the player hits the crafting button, their weapon gets made, and the button flashes some anvil sparks. We can work with that by designing a sound with a nice harmonically resonant anvil hit, maybe with some hot metal sparking sounds layered in. To give the sound some more body, we can even add some interlocking metal and leather into the mix. Timed correctly with the visuals, this would be a great way to tie in the informational feedback of crafting with the physical satisfaction of performing the action, as well as the positive emotional connotation of successfully creating the weapon. Taking it one step further, let’s say there’s a chance that the crafting fails. Removing or replacing some of those layers would leave just enough missing from the interaction to inform the player that the crafting attempt failed. In this case, the physical feedback of pressing the button could remain the same, but the emotional and informational feedback could be dulled down.
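As a rough sketch of that layering idea, the crafting sound could be assembled from named layers, with the outcome deciding which ones play. The layer names below are hypothetical placeholders rather than real assets, and a real project would more likely set this up inside its audio middleware than in game code.

```python
from typing import List

# Hypothetical layer names, grouped by the kind of feedback they carry.
PHYSICAL_LAYERS = ["button_click"]  # plays whether or not crafting succeeds
SUCCESS_LAYERS = [
    "anvil_hit_resonant",       # harmonically resonant hit: positive emotional feedback
    "hot_metal_sparks",         # matches the anvil sparks in the visuals
    "metal_leather_interlock",  # adds body to the sound
]
FAILURE_LAYERS = ["anvil_hit_dull"]  # a duller replacement, not an explicit punishment


def crafting_layers(succeeded: bool) -> List[str]:
    """Return the layers to trigger together for one crafting attempt."""
    if succeeded:
        return PHYSICAL_LAYERS + SUCCESS_LAYERS
    return PHYSICAL_LAYERS + FAILURE_LAYERS
```

The physical click stays identical in both cases, so the button still feels the same to press; only the informational and emotional layers change.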

Tolerance

UI Design

To err is human, and in most cases, the target audience of your game is, in fact, humans. When UI is designed with tolerance in mind, it’s compensating for potential human error, while also making sure that it never annoys the player. It means that even though the player might accidentally open the wrong menu, getting back to the menu they meant to open is relatively easy. It means that even though they didn’t mean to hit “Buy” in a shop, they still have a chance to confirm or cancel the purchase. It means that even if they’re given an error, or aren’t allowed to perform a certain action, they never feel explicitly punished just for using the UI.

Nearly every decent game menu allows for easy traversal and compensation for potential mistakes. In “Rocket League”, the Options menu allows the player to easily switch between option categories. It also provides convenient Back and Default buttons.

Audio

This is where things can sometimes be tough for UI sounds. Tolerant UI audio doesn’t necessarily need to compensate for human error (though it can). However, it does need to be designed and tested with long-term human use in mind. Regardless of how fantastic a sound is the first time you hear it, there’s always the possibility that it becomes repetitive, annoying, and/or distracting during long-term play. In a resource-based strategy game, earning resources is good (obviously). However, if we focus too hard on rewarding the player for gaining resources, it could easily become distracting and annoying to a player who does well and sets up efficient systems. This creates a paradox where better players are emotionally rewarded less than players who aren’t as efficient. A scenario like this one wouldn’t necessarily reveal itself without a certain amount of testing the audio in the actual game.

Negative feedback should be handled just as carefully. Sounds that are intended to be punishing or disappointing still need to avoid sounding harsh or causing ear pain. Nobody likes it when their game gives them tinnitus. Even if our negative feedback sound avoids this, we need to keep in mind how necessary it really is. The crafting example in the last section is a good reference here. Assuming the failed crafting attempt is a random event, there’s no reason that we should really punish the player. Simply removing or downplaying the positive feedback would be effective enough.

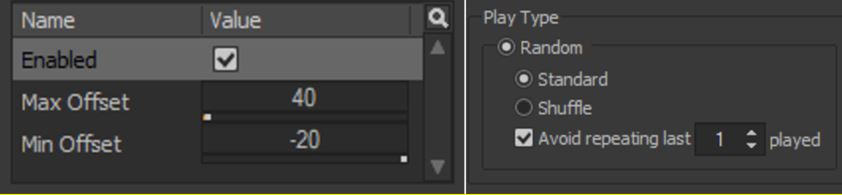

One last suggestion here is to use implementation to your advantage. Sometimes, all you really need in order to fix a repetitive sound is a few variations to randomly choose from, or even just some slight pitch randomization. In a much more complex scenario, you may want to design your mix to gradually evolve depending on how active various sections of the game are in terms of audio.

Randomizing assets and parameters is a quick and easy way to prevent audio from becoming exhaustingly repetitive.
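Audio middleware usually offers this kind of randomization out of the box, but the underlying logic is simple enough to sketch. The example below picks a random variation while avoiding an immediate repeat, and converts a random pitch offset in semitones into a playback rate. The class name, asset names, and default range are assumptions made for illustration.

```python
import random
from typing import List, Optional, Tuple


class RandomizedSound:
    """Chooses a variation and a slight pitch offset for each playback."""

    def __init__(self, variations: List[str], pitch_range_semitones: float = 1.0,
                 rng: Optional[random.Random] = None) -> None:
        if not variations:
            raise ValueError("at least one variation is required")
        self.variations = list(variations)
        self.pitch_range = pitch_range_semitones
        self.rng = rng or random.Random()
        self._last: Optional[str] = None

    def next_playback(self) -> Tuple[str, float]:
        # Exclude the previous asset so the same file never plays twice in a row
        # (unless there is only one variation to choose from).
        choices = [v for v in self.variations if v != self._last] or self.variations
        asset = self.rng.choice(choices)
        self._last = asset
        # A shift of n semitones corresponds to a playback-rate factor of 2^(n/12).
        semitones = self.rng.uniform(-self.pitch_range, self.pitch_range)
        return asset, 2.0 ** (semitones / 12.0)


coin = RandomizedSound(["coin_01", "coin_02", "coin_03"], pitch_range_semitones=0.5)
asset, rate = coin.next_playback()
```

Even with only three assets, the combination of no immediate repeats and a small pitch offset goes a long way for sounds the player hears constantly.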

Reuse

UI Design

Designing UI with reuse in mind is a great way to keep everything cohesive. It’s the idea that patterns, shapes, behaviors, and really all aspects of UI can and should be used in a modular fashion. Your protagonist’s magic skills are circular buttons and their physical skills are squares? It might be a good idea to keep that association when displaying the skill tree. The health bar is red and the stamina bar is green? You might then choose to make health-restoration items have red backgrounds and stamina-restoration items have green backgrounds. Reusing patterns across the whole of your UI’s design will help new players learn more quickly, as well as help veteran players navigate more efficiently and confidently, without the need to memorize small trivialities.

Audio

Reusing sound layers and even sharing a single asset for multiple actions can easily help reinforce clarity in a game’s UI audio. In some cases, this is simple and obvious. Toggling through the options screen can (and probably should) just use the same sounds as toggling through the settings screen. This can still apply to more complex actions too, though.

If our game allows the player to refill their health with an item, we’ll probably give that a sound. What if it allows them to overfill their health, so they have a temporary HP boost? Sure, we could create a new sound entirely. We could also just reuse the health sound we already have, but that would ignore the significance of this special buff. Another option is to use the same health sound (or main layers from it), with some additional layers designed into it. A different approach would be to take that same health sound and just add some FX processing to make it a bit more juicy. The end result is that the player will intuitively identify this sound with filling their health, but also know instantly that it is a special effect.
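Here is one way the "same sound plus an extra layer" option might look in code. This is only a sketch: the layer names and the function signature are hypothetical, and the overfill rule (healing past maximum HP) is an assumption about how the game defines the buff.

```python
from typing import List

# Hypothetical layers shared by every healing sound in the game.
BASE_HEAL_LAYERS = ("heal_chime", "heal_swell")
OVERFILL_LAYER = "overfill_shimmer"  # extra layer reserved for the temporary HP boost


def heal_sound_layers(current_hp: int, heal_amount: int, max_hp: int) -> List[str]:
    """Reuse the base heal sound, adding one layer when the heal overfills."""
    layers = list(BASE_HEAL_LAYERS)
    if current_hp + heal_amount > max_hp:
        layers.append(OVERFILL_LAYER)
    return layers
```

Because the base layers never change, the player keeps the association with healing, and the added layer is what marks the overfill as something special.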

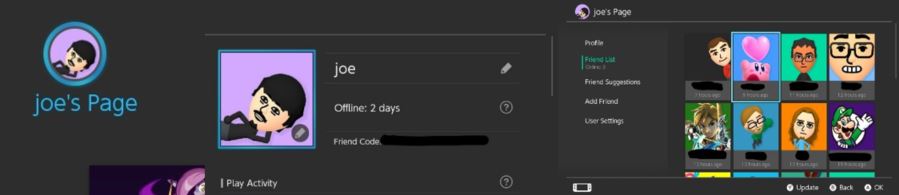

On the Nintendo Switch, highlighting your profile, profile picture, and friend’s profile picture all use the same sound.

Bringing It All Together

I hope that by now you’ve seen that none of these principles exist in isolation. Each principle overlaps every other principle in some way or another. Reuse is an excellent tool to improve Feedback. Tolerance is something that should be built into the Structure. Simplicity can be a guide to improve Visibility. Keep this in mind when approaching the sound design that will accompany your game’s UI, and you’ll have a much larger and more powerful toolbox to work with creatively. You can also probably guess that, although our focus here was UI, each of these principles can be extrapolated to other elements of a game. How could the points on UI visibility translate to how you would design the sound for the core gameplay loop of a dungeon crawler? How could the points on UI feedback translate to how audio could improve the feel of a fighting game? I invite you to explore these ideas for yourself! As mentioned before, these concepts can turn into quite the rabbit hole.

Another thing to keep in mind when working with UI is accessibility. Creating audio with accessibility in mind is a whole separate topic that we can’t go into here. However, know that most of these principles can (and should!) be approached in a way that doesn’t neglect players who may have difficulty hearing certain frequency ranges, or those who are visually impaired.**

One other extremely important point I’d like to stress is that the best possible way for your audio to support and enhance the UI is communication. As early as possible, talk with your UI designers! Get involved in their meetings. Ask to look at their documentation. Invite them to join in on audio meetings where UI will be discussed. Nothing holds back a project like a team that doesn’t talk. You might also be surprised with what you could learn and what suggestions you might receive from people outside of audio.

Thanks to Emilio González and George Lobo for lending their professional feedback on this article as it was written.

* I should clarify that I am not in any way a professional UI designer. I am purely a sound designer. If any actual UI designers would like to correct me or modify any of the information in this article, I would love to receive your critique and contribution!

**If you’d like to read more on designing audio for visually impaired players, this previous Audiokinetic Blog article by Adrian Kuzminski is a great place to start.
