Background

I got my first job as an Audio Game Engineer in 2015, which is when I was introduced to Wwise by my Art Director at the time. Before then, my career goal was to write music for games. I had been interested in interactive music, music generation, and algorithmic composition for a while. However, I never expected that they already existed in video games, which I had loved since I was a child.

Out of curiosity, while seeking more potential methods of designing interactive music for games, I started my journey of self-education through Google and YouTube. Kejero’s YouTube channel, "Music By Kejero", was a big inspiration. It encouraged me a lot in the beginning. The video series systematically introduces various design methods such as Vertical Techniques, Horizontal Techniques, Stingers, Transition Segments, Hybrid Techniques, etc. His explanation of how to implement these techniques inspired me to think about the relationship between design methods and music implementation. In the following years, I watched every video I could find on YouTube about interactive music for games. I also read most articles on that topic from various sources such as the Audiokinetic Blog, DesigningMusicNow, Melodrive Blog, etc. This allowed me to review the development history of game music - from buzzer/chiptune, adaptive orchestra to hardware FM synthesizer, algorithmic percussion (Intelligent Music Systems). That was when I learned about the genius engine, iMUSE by LucasArts, which has been implementing Hybrid Techniques and Procedural Audio since 1991. I also found bold and ingenious ways to combine audio-based and MIDI technologies in music design (e.g. Get Even).

Learning Materials

Learning always makes me happy. However, while applying the knowledge in practice, I realized that:

- Many learning materials concerning interactive music for games focus primarily on music design driven by game engines. Little mention is made of the situation where audio engines are not driven by game engines;

- The term “hybrid” is used frequently since there are various techniques involved in in-game audio design. However, that term could have a very different meaning for Kejero and Olivier Derivière;

- The same processing methods are named differently. For example, “Vertical Techniques” is also described as “Vertical Remixing” or “Layering”. Some people even use “Multi-track” as jargon to imply such techniques;

- Not enough attention is given to creating interactive music with mixed technologies. This is the key point of what Olivier Derivière means by “hybrid” (Audio + MIDI). More complex music design could be achieved on that basis.

(To read more about Hybrid Interactive Music, here are two articles written by Olivier Derivière himself: Part 1 and Part 2.)

My Solutions

Michael Sweet once said, "...This doesn’t preclude the fact that many games utilize more than one adaptive music technique at the same time. But if we, as composers, are going to get better at writing music for games, then it’s worth organizing some general guidelines for the use of each technique.”

The potential approaches to artistic creation are endless. However, just like using shortcuts or project templates in our daily work, summarizing the design methods could help us avoid confusion and optimize the workflow. This is important to reduce our trial-and-error costs and quickly find reliable music design solutions that best suit the game. It allows us to put more time into complex and refined implementation. For this purpose, I tried to create a set of standards to classify the processing methods. They were intended to guide my music design practice but could be helpful to you too.

User-Driven & Game Driven

First, according to whether the music implementation will be affected by the game engine, I classified potential processing methods into two categories: User-Driven and Game Driven. This way, a dynamic music system that is independent of the game engine won’t lose the attention it deserves. In other words, it can be better noticed and well developed.

Static & Dynamic

Then, according to whether the implementation results will be executed as expected, I further divided the design techniques into Static and Dynamic. This way, the linearity and non-linearity in music design can be better distinguished. This will help us select an appropriate processing method.

Note: In most cases, it’s impossible to predict the player’s actions in the game. So, all game music designed using the Game Driven methods can be described as “dynamic”. However, you could be using real-time cut-scenes concurrently to drive different Music Segments to play linearly. As such, even if the music is not rendered into an audio file, the Game Driven methods themselves can still be considered “static” (so there are Game Driven Static methods). These methods are all Game Driven; the only difference is whether the process is linear. So I didn’t state “Static/Dynamic” (as you can see in the following image).

Mixing & Transition

In an article published on DesigningMusicNow, Michael Sweet grouped the six most commonly used design methods into two main categories: Vertical Remixing (Layering) and Horizontal Re-Sequencing. These are the terms that usually come to mind when talking about interactive music for games.

Just like the name and nature of the Vertical Remixing (Layering) method, no matter how complicated the technique you are using, it’s always mixing-related.

The Horizontal Re-Sequencing method is essentially about how to switch the Music Segments and how to transition during the process. Therefore, according to what’s going to be processed, I further divided the processing methods into Mixing and Transition.

Summary

According to the classification standards suggested above, we will get 8 potential processing methods:

- User-Driven Static Transition — Used to process the Music Segments, with predictable results, not affected by the game state.

- User-Driven Static Remixing — Used to process the music mixing, with predictable results, not affected by the game state.

- User-Driven Dynamic Transition — Used to process the Music Segments, with unpredictable results, not affected by the game state.

- User-Driven Dynamic Remixing — Used to process the music mixing, with unpredictable results, not affected by the game state.

- Game-Driven (Static) Transition — Used to process the Music Segments, with predictable results, affected by the game state.

- Game-Driven (Static) Remixing — Used to process the music mixing, with predictable results, affected by the game state.

- Game-Driven (Dynamic) Transition — Used to process the Music Segments, with unpredictable results, affected by the game state.

- Game-Driven (Dynamic) Remixing — Used to process the music mixing, with unpredictable results, affected by the game state.

Note: The last four are all Game Driven; the only difference is whether the process is linear. So I didn’t state “Static/Dynamic” (as you can see in the following image).
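The eight methods above are simply every combination of the three two-way choices described earlier. As a minimal sketch (the constant and function names are my own, chosen for illustration only), the list can be generated like this:

```python
from itertools import product

# The three classification axes described in the text.
DRIVERS = ("User-Driven", "Game-Driven")
PREDICTABILITY = ("Static", "Dynamic")
TARGETS = ("Transition", "Remixing")


def describe(driver, predictability, target):
    """Build a one-line description matching the wording of the summary list."""
    what = "the Music Segments" if target == "Transition" else "the music mixing"
    results = "predictable" if predictability == "Static" else "unpredictable"
    game = "not affected" if driver == "User-Driven" else "affected"
    return (f"{driver} {predictability} {target}: used to process {what}, "
            f"with {results} results, {game} by the game state")


# 2 x 2 x 2 = 8 combinations, in the same order as the list above.
METHODS = [describe(*combo) for combo in product(DRIVERS, PREDICTABILITY, TARGETS)]

for method in METHODS:
    print(method)
```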

Structural Units in Interactive Music Hierarchy

With the new classification standards established, we need to use them to guide our music design practice, and the tool is Wwise. Before starting, we also need to look at this tool from a new perspective.

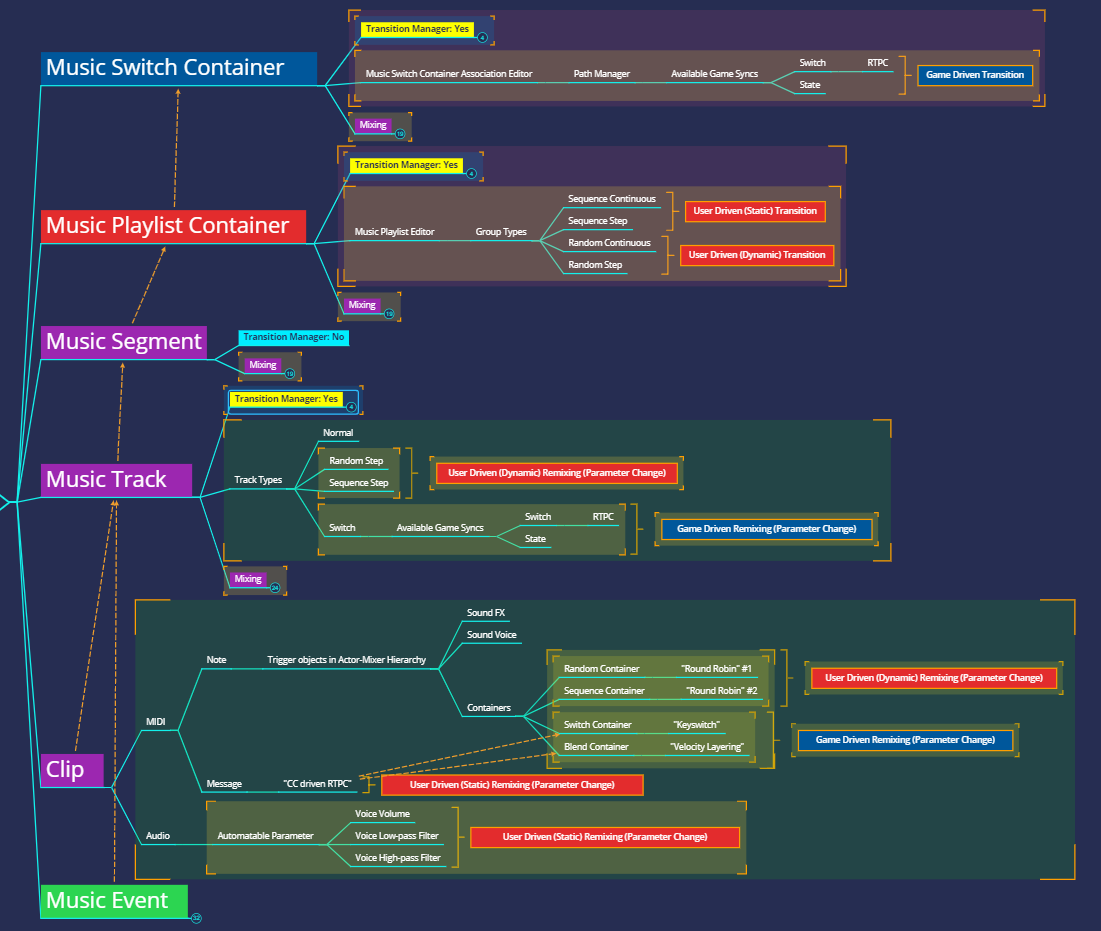

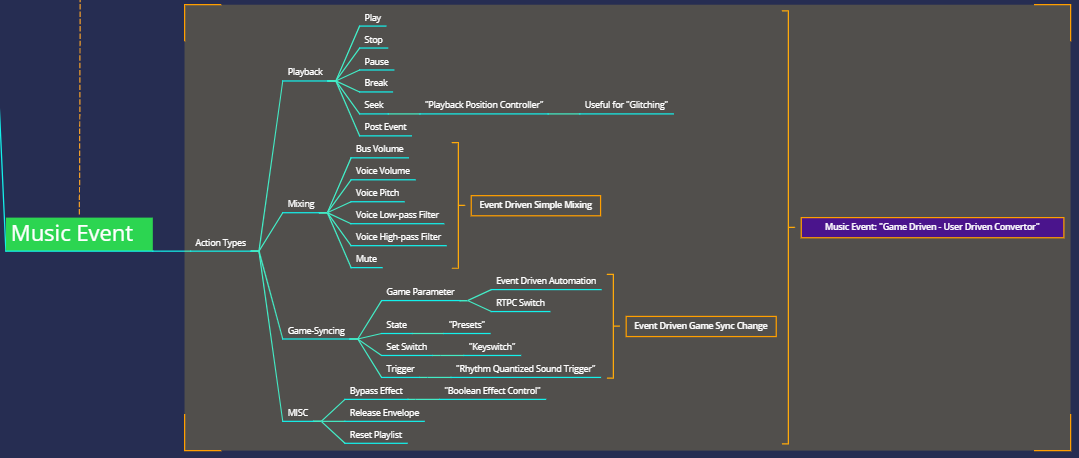

I will show you a mind map to share my understanding of the structure of the Wwise interactive music system.

To access the PNG file, go to https://share.weiyun.com/5XNv7gf

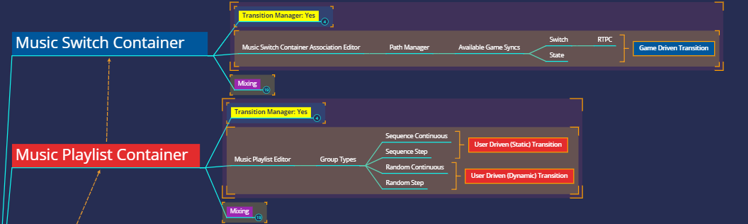

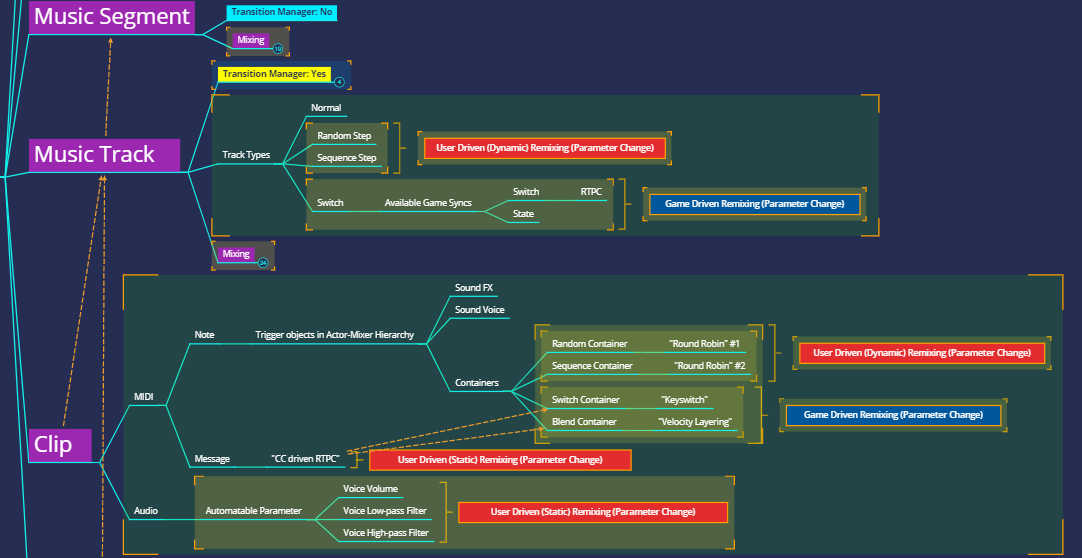

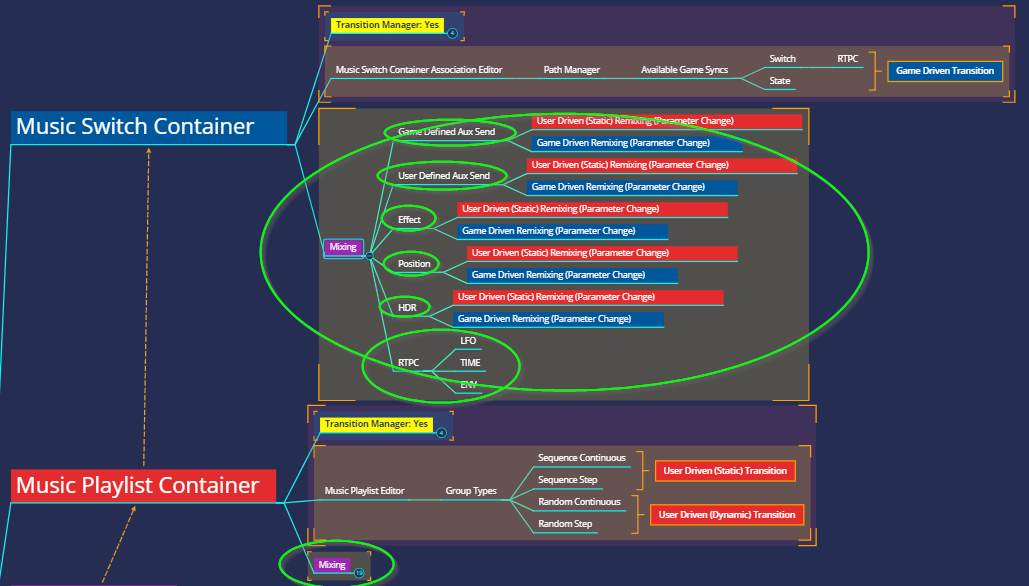

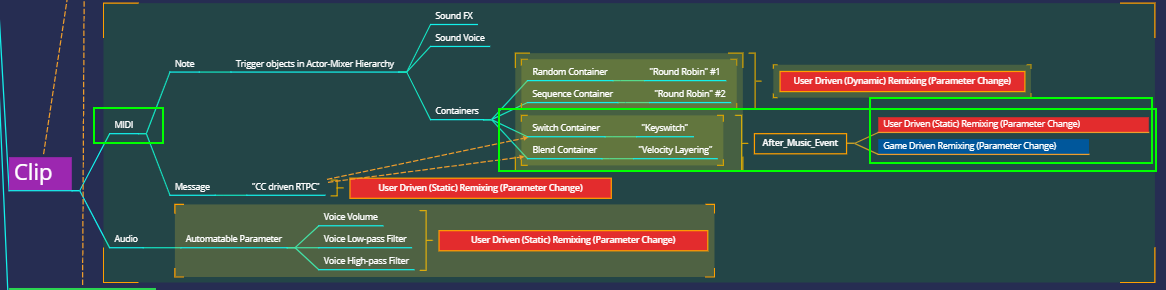

In the image above, I used different colors to mark the role of each structural unit in the music design.

Red boxes indicate that you may use the User-Driven methods; blue ones indicate that you may use the Game Driven methods; purple ones indicate that you may use both the User-Driven and the Game Driven methods. Yellow ones indicate Transition Managers (i.e. the Transitions tab of the Property Editor). They are listed separately because they can be used in all kinds of music design, whether you're using the User-Driven or the Game Driven methods. The green one indicates the Music Event feature introduced in 2019.1.0. It is also listed separately because it greatly increases the potential approaches to music design. We will talk about that later.

Note: Before marking the colors, I limited the techniques to be used according to the core function of each structural unit. This way, we can avoid introducing too much complexity (usually related to the mixing). You can see that there are small purple boxes that say “Mixing”. They can be expanded to show potential processing methods that could be implemented with consideration of other non-core Interactive Music Hierarchy functions (such as State, RTPC, Positioning, HDR, etc.).

Macro Level

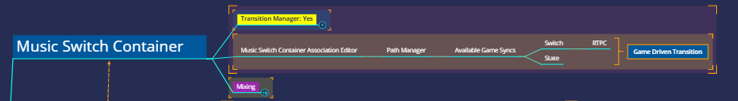

Music Switch Container

First, let’s have a look at the Music Switch Container. The Music Switch Container Association Editor is an editing window specific to the Music Switch Container. It’s used to associate individual or combined Game Syncs (Switch and State) with the corresponding playback target. The Transition Manager can be used to set how to implement them for potential transitions. As such, Music Switch Containers are the preferred structural units for handling the Game Driven Transition.

Note: You can see the Game Syncs available for the Music Switch Container: State and Switch. Both of them can be used to make transitions (without discriminating the functions, core or non-core). But unlike States, Switch changes can be driven by Game Parameters. So, different interaction modes could be used.
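To make the idea of associating combined Game Syncs with playback targets more concrete, here is a toy model in Python. It is not the Wwise API: the class, the sync values ("Exploration", "Combat", "Day", "Night") and the target names are all hypothetical. It only shows a lookup keyed by a combination of values, and a transition being requested when the resolved target changes.

```python
# Hypothetical Game Sync combinations and playback targets, for illustration only.
ASSOCIATIONS = {
    ("Exploration", "Day"): "Playlist_Explore_Day",
    ("Exploration", "Night"): "Playlist_Explore_Night",
    ("Combat", "Day"): "Segment_Combat",
    ("Combat", "Night"): "Segment_Combat",
}


class SwitchContainerModel:
    """Toy model: maps combined Game Sync values to a playback target."""

    def __init__(self, associations):
        self.associations = associations
        self.current = None  # nothing is playing yet

    def update(self, *sync_values):
        """Return (from_target, to_target) if a transition is needed, else None."""
        target = self.associations.get(tuple(sync_values))
        if target is None or target == self.current:
            return None  # unknown combination, or the same target keeps playing
        previous, self.current = self.current, target
        return (previous, target)


model = SwitchContainerModel(ASSOCIATIONS)
print(model.update("Exploration", "Day"))
print(model.update("Combat", "Day"))
print(model.update("Combat", "Night"))
```

In this sketch, going from Combat/Day to Combat/Night requests no transition because both combinations resolve to the same target. How the transition itself is performed is the job of the Transition Manager and is not modeled here.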

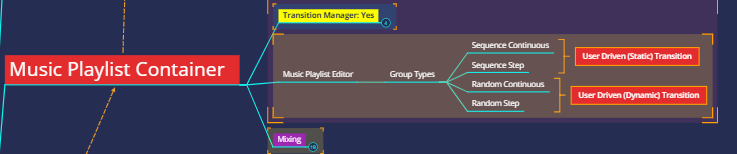

Music Playlist Container

Next, let’s have a look at the Music Playlist Container. Music Playlist Containers control the playback order of the Music Segments under the Playlist. They support random playback as well as sequence playback. As such, Music Playlist Containers are the preferred structural units for handling the User-Driven Static/Dynamic Transition.

Note: Sequence Continuous/Step is used for linear User-Driven Static Transition, while Random Continuous/Step is used for non-linear User-Driven Dynamic Transition.
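The difference between the static and dynamic cases can be illustrated with a small simulation. This is only a sketch outside of Wwise: the segment names are made up, and the random mode is assumed to be a plain uniform pick with no shuffle or repeat-avoidance options.

```python
import random
from itertools import islice


def sequence_step(segments):
    """Yield segments in a fixed, repeating order (predictable, i.e. static)."""
    index = 0
    while True:
        yield segments[index % len(segments)]
        index += 1


def random_step(segments, rng):
    """Yield a randomly chosen segment each time (unpredictable, i.e. dynamic)."""
    while True:
        yield rng.choice(segments)


SEGMENTS = ["Intro", "Verse", "Chorus"]  # hypothetical Music Segment names

# The sequence order is known in advance; the random order is not.
print(list(islice(sequence_step(SEGMENTS), 5)))
print(list(islice(random_step(SEGMENTS, random.Random()), 5)))
```

Both modes are User-Driven in the sense used here: the playback order is decided entirely by the playlist settings, with no input from the game.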

Summary

These two structural units in the Wwise interactive music system are both transition-related. With Music Switch Containers and Music Playlist Containers, the User-Driven (Static/Dynamic) Transition and Game Driven (Static/Dynamic) Transition methods can be well implemented. However, they are the largest units in the interactive music system, which means that they sit at the highest level. It’s inappropriate to use them to implement the Remixing methods directly. Sometimes we will have to use one Event to play multiple such units concurrently. In Part 2 of this blog series, I will show you how to use Wwise to generate Terry Riley's In C composition, in which the methods mentioned here will be used.

Micro Level

Let’s now have a look at the micro-level. You can see that the structural units (Music Segment, Music Track, and Clip) are marked in purple. It means that they can be used to implement both the User-Driven and the Game Driven methods; you can also see that, unlike the Music Switch Container and Music Playlist Container, the methods marked with red and blue boxes are Remixing-related rather than Transition-related. The potential approaches to music design exist in the details rather than at a higher level. And they are all mixing-related.

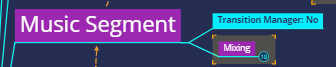

Music Segment

First, notice that the Transition Manager for the Music Segment is marked blue and says “Transition Manager: No”. This is because the Transitions tab does not appear in the Property Editor when a Music Segment is selected.

Music Segments are the most special units in the Wwise interactive music system: First, Music Segments have Sync Points (Entry Cue and Exit Cue). They are used to communicate between child and parent structural units. This ensures that the Transition methods can work properly;

Second, through the Music Segment and Music Track icons, we can see that Music Segments may contain multiple Music Tracks. Their direct sub-units are Music Tracks. There is no segment transition for Music Tracks. As such, Music Segments are the preferred structural units for handling the User/Game Driven Remixing.

Note: The "traditional" vertical techniques (Game Driven Remixing) are the most preferable method for the Music Segments.

Also, Entry Cue and Exit Cue are set in the Music Segments. To get a variable rhythm similar to that of progressive rock, we need multiple Music Segments with different Bars/Beats or Entry Cue/Exit Cue settings.

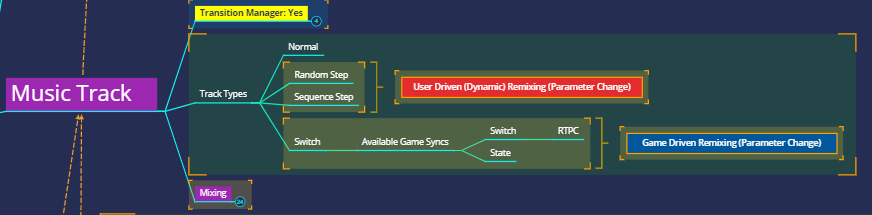

Music Track

Let’s now have a look at the Music Track. Music Tracks are similar to Music Segments. They can be used to implement both User-Driven Remixing and Game Driven Remixing methods. The difference is that Music Tracks create mixing variations by changing the playback content. The User-Driven (Dynamic) Remixing method is implemented using Random Step/Sequence Step Tracks. The Game Driven Remixing method is implemented using Switch Tracks. Music Tracks can be considered as Voices in the interactive music system. They can be combined with Transition Managers to implement "discrete" Remixing (unlike "continuous" Remixing with RTPCs controlling the volume).

Note: Sequence Step Tracks cannot be used to implement Static Remixing methods. Every time a Sequence Step Track is played, the playback content will switch to the next Sub-Track. So this method is not fully controllable. Only the switching order is configurable.

Note: You can see the Game Syncs available for the Switch Track: State and Switch. Both of them can be used to make transitions (without discriminating the functions, core or non-core). But unlike States, Switch changes can be driven by Game Parameters. So, different interaction modes could be used.
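The contrast between "continuous" and "discrete" Remixing can be sketched in a few lines. The code below is a conceptual illustration rather than Wwise code: the layer names, the 0-100 parameter range, the linear fade curves, and the Switch values are all assumptions made for the example.

```python
# Hypothetical layers: each fades in linearly between two parameter values.
LAYERS = {"pads": (0, 0), "percussion": (20, 40), "brass": (60, 90)}

# Hypothetical Switch values mapped to the sub-track that should play.
SUB_TRACKS = {"Calm": "melody_flute", "Tense": "melody_strings"}


def layer_gains(intensity, layers):
    """Continuous remixing: map a 0-100 parameter to per-layer gains (0.0-1.0)."""
    gains = {}
    for name, (fade_start, fade_end) in layers.items():
        if intensity >= fade_end:
            gains[name] = 1.0  # fully faded in
        elif intensity <= fade_start:
            gains[name] = 0.0  # not yet audible
        else:
            gains[name] = (intensity - fade_start) / (fade_end - fade_start)
    return gains


def select_sub_track(switch_value, sub_tracks):
    """Discrete remixing: a Switch value picks exactly one sub-track."""
    return sub_tracks[switch_value]


print(layer_gains(30, LAYERS))
print(select_sub_track("Tense", SUB_TRACKS))
```

In the continuous case every intermediate parameter value gives a different mix, while in the discrete case the content jumps from one sub-track to another.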

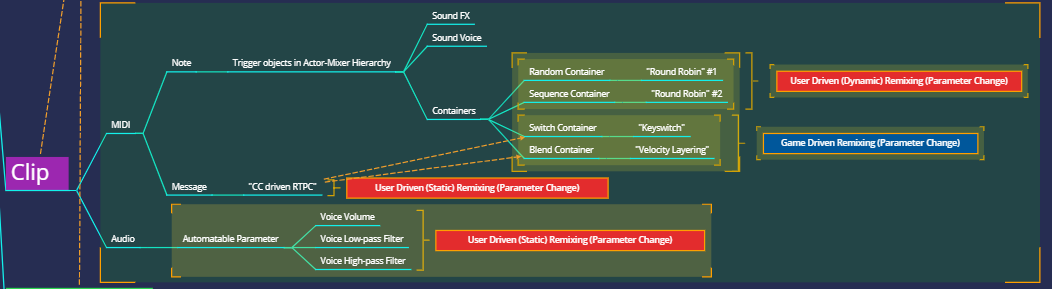

Clip

Let’s now have a look at the Clip. For Audio Clips, only the User-Driven Static Remixing method can be implemented. In other words, all you can do is simple volume, LPF, and HPF automation. For MIDI Clips, a large number of methods can be implemented, although most of them are User-Driven Remixing or Game Driven Remixing. MIDI itself doesn’t produce sound. You need to trigger the audio objects in the Actor-Mixer Hierarchy. These objects can be a source plug-in or a structural unit containing samples. The CC information in the MIDI file can be used as Game Parameters to control Switch/Blend Containers to implement User-Driven-like Game Driven Remixing. However, with the Music Event feature introduced in 2019.1.0, the conversion between the User-Driven and the Game Driven methods becomes easier. For composers, there are many more User-Driven methods that can be used.

Note: The mixing of sources, samples, or container objects triggered by MIDI cannot be implemented by the structural units in the Interactive Music Hierarchy. Instead, it needs to be implemented in the Actor-Mixer Hierarchy or Master-Mixer Hierarchy.

MIDI Advantages

To make better use of the new possibilities brought by the Music Event feature, we need to pay more attention to conventional MIDI technologies. The early development of video game music is inseparable from MIDI. After its release in the early 1980s, it was quickly adopted by various platforms as a general information exchange protocol for computer music development. The iMUSE engine developed by LucasArts was initially based on MIDI (although the MIDI module was removed later). However, after CD audio sources were introduced, MIDI became less popular. The main reason is that composers could not accurately predict how MIDI music would actually sound on the player’s devices, and MIDI technologies are less convenient than the audio-based methods in terms of mixing.

However, with the introduction of Wwise MIDI music features in 2014 (MIDI is incorporated into the audio-based framework), the MIDI advantages are being revealed once again:

1. MIDI has a small data footprint. In contrast to audio files, MIDI files are more like music scores used for electronic instruments. Therefore, the cost of increasing musical variations by adding MIDI assets rather than audio assets is much lower. The more music content is implemented through MIDI, the more obvious this advantage will be. The Remedy audio team shared Control's music design at Wwise Tour 2018. They showed a large number of MIDI assets with different rhythmic patterns generated in batch using Python. With a minimal impact on the overall data volume, nearly infinite variations in sound details were obtained. Due to automated generation and deployment, the musical beats can be switched very conveniently. This method for creating rhythm variations can also be used for pitched assets;

2. From the perspective of music and art, MIDI assets can be used to render different timbres to achieve different listening objectives. You can also use them in certain situations to maintain the internal connection between various listening objectives (like a motif);

3. From the perspective of modular synthesizers, MIDI notes can be used as Envelope Triggers in Wwise to drive real-time synthesis. This way, we can get more organic variations than with audio. You can even use a large number of MIDI tracks with different note velocities as clock signals for synthesizing real-time music at a variable tempo (refer to Olivier Derivière's blog series on the Audiokinetic Blog). MIDI CC is also a great information source for static automation. You can use the CC info in MIDI data to drive real-time synthesis parameters, or to render the artistic expression of your game.
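To give a feel for the first advantage, here is a minimal sketch of generating rhythm patterns in batch and encoding each one as a Standard MIDI File in memory. It is not the tooling used by the Remedy team; the eight-step grid, the three-hits-per-pattern rule, note number 36, and all function names are assumptions chosen for the example. Only the Python standard library is used.

```python
import struct
from itertools import product

TICKS_PER_QUARTER = 480  # assumed resolution


def vlq(value):
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def pattern_to_midi(pattern, note=36, step_ticks=TICKS_PER_QUARTER // 4):
    """Build a format-0 Standard MIDI File from a tuple of 0/1 steps."""
    events = bytearray()
    pending = 0  # ticks elapsed since the last written event
    for hit in pattern:
        if hit:
            events += vlq(pending) + bytes([0x90, note, 100])   # note on
            events += vlq(step_ticks) + bytes([0x80, note, 0])  # note off after one step
            pending = 0
        else:
            pending += step_ticks  # a rest just accumulates time
    events += vlq(pending) + bytes([0xFF, 0x2F, 0x00])  # end-of-track meta event
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, TICKS_PER_QUARTER)
    return header + b"MTrk" + struct.pack(">I", len(events)) + bytes(events)


# Every eight-step pattern containing exactly three hits.
patterns = [p for p in product((0, 1), repeat=8) if sum(p) == 3]
assets = {"".join(map(str, p)): pattern_to_midi(p) for p in patterns}

print(len(assets), "patterns,", sum(len(data) for data in assets.values()), "bytes in total")
```

Each generated file is only a few dozen bytes, which is why adding variations as MIDI assets costs so little compared with adding audio assets. To save a pattern to disk, its bytes could be written to a `.mid` file opened in binary mode.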

Music Event

Knowing the MIDI advantages, let's have a look at the Music Event feature introduced in 2019.1.0. The reason why I put it at the end is that Music Events are more powerful than MIDI notes (MIDI notes can only exist as Clips): Music Events are not included in Clips but exist independently. They can be used to play more than just audio objects. They can do almost everything that Events can. We can use Music Events to control the sound playback, mixing, or sound engine in various ways, even to drive the animation. In a recent blog post, Peter “PDX” Drescher described how Music Events can interact with animation sequences.

In the image above, I listed the Actions included under the Music Event and made a simple classification from the perspective of music design. In the past, you would need the game engine to send Game Calls to trigger Events. Music designers could use Music Callbacks/Custom Cues to do the same thing, but they still needed to perform other tasks outside of Wwise. In other words, they had to go to programmers for help. However, they can now complete all those tasks within Wwise by themselves. This greatly improves efficiency and provides a lot of creative possibilities.

Taking the Music Switch Container as an example, with RTPCs and States introduced to control the mixing, we can see from the image above that with Music Events, it’s possible to select Set Game Parameter and Set State in the Event Actions. As such, music designers can implement the User-Driven Static Remixing method, using Music Events to create mixing variations (you can also implement the User-Driven Static Remixing method, using Music Events to control Sample & Hold).

Let’s go back to the Clip level. With Music Events, we can accurately control Switch Containers and Blend Containers. Music designers can freely use Kontakt instruments in the game just like in a DAW. (Imagine incorporating KeySwitch and Sample Morphing into the game!)

In Part 2 of this blog series, I will compare the advantages and disadvantages of the 8 processing methods listed here, and rebuild the Mozart Dice Game and Terry Riley’s In C composition in Wwise; and in Part 3, I will show you how to use computer-assisted composition tools to write music for games. Stay tuned!
